HBase Practice at Xiaomi

1 .HBase Practice At Xiaomi huzheng@xiaomi.com

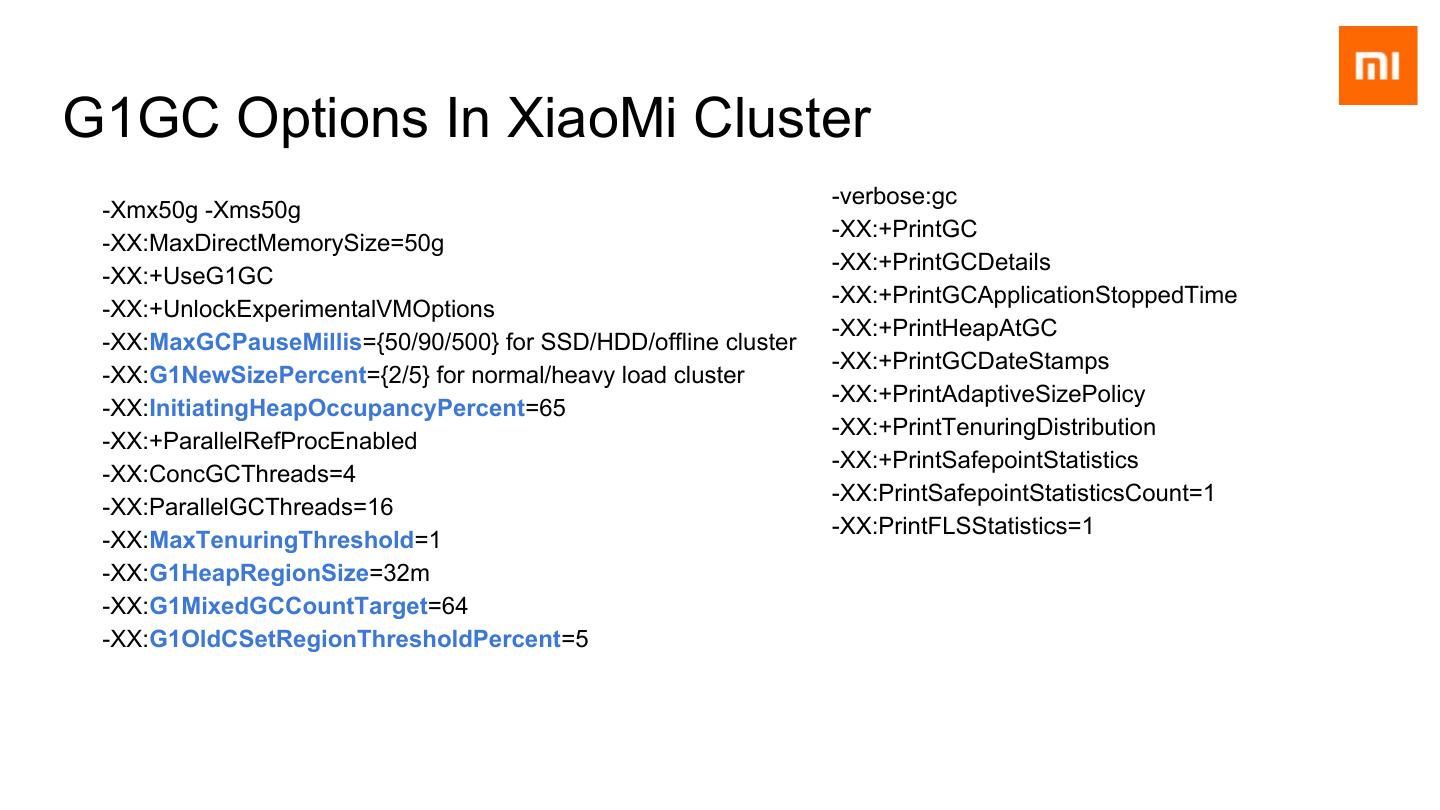

2 .About This Talk

- Async HBase Client
  - Why Async HBase Client
  - Implementation
  - Performance
- How we tune G1GC for HBase
  - CMS vs G1
  - Tuning G1GC
  - G1GC in the Xiaomi HBase cluster

3 .Part-1 Async HBase Client

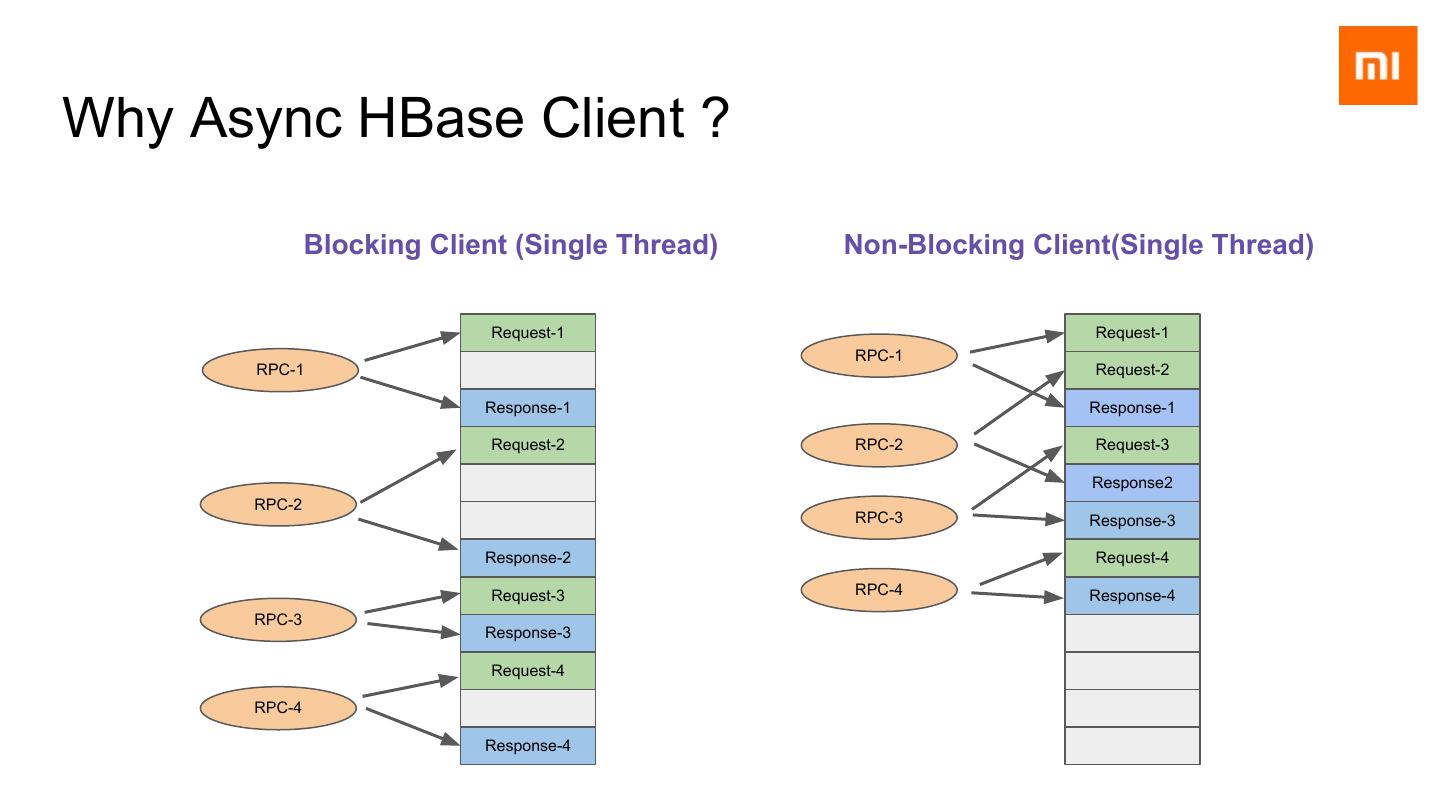

4 .Why Async HBase Client ?

[Figure: timelines of Request-1..4, RPC-1..4 and Response-1..4 for a Blocking Client (Single Thread) versus a Non-Blocking Client (Single Thread)]
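
The non-blocking pattern can be sketched with plain `CompletableFuture`, without any HBase dependency. This is a minimal illustration only: `fakeRpc` and `issueAll` are hypothetical names, and a timer stands in for the remote call. One caller thread issues every request first and collects the responses afterwards, instead of waiting for each response before sending the next request.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

final class NonBlockingSketch {
    // Hypothetical stand-in for a remote call: the future completes after
    // delayMs on a timer thread, so the caller is never blocked while waiting.
    static CompletableFuture<String> fakeRpc(ScheduledExecutorService timer, int id, long delayMs) {
        CompletableFuture<String> response = new CompletableFuture<>();
        timer.schedule(() -> { response.complete("Response-" + id); }, delayMs, TimeUnit.MILLISECONDS);
        return response;
    }

    // Issues n requests back to back from the calling thread, then gathers the results.
    static List<String> issueAll(int n, long delayMs) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        try {
            List<CompletableFuture<String>> pending = new ArrayList<>();
            for (int id = 1; id <= n; id++) {
                pending.add(fakeRpc(timer, id, delayMs)); // returns immediately
            }
            List<String> responses = new ArrayList<>();
            for (CompletableFuture<String> f : pending) {
                responses.add(f.join()); // waits only here, after all requests are in flight
            }
            return responses;
        } finally {
            timer.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(issueAll(4, 50));
    }
}
```

The final `join()` calls are there only to collect the results for printing; a real async caller would usually chain callbacks such as `thenAccept` instead.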

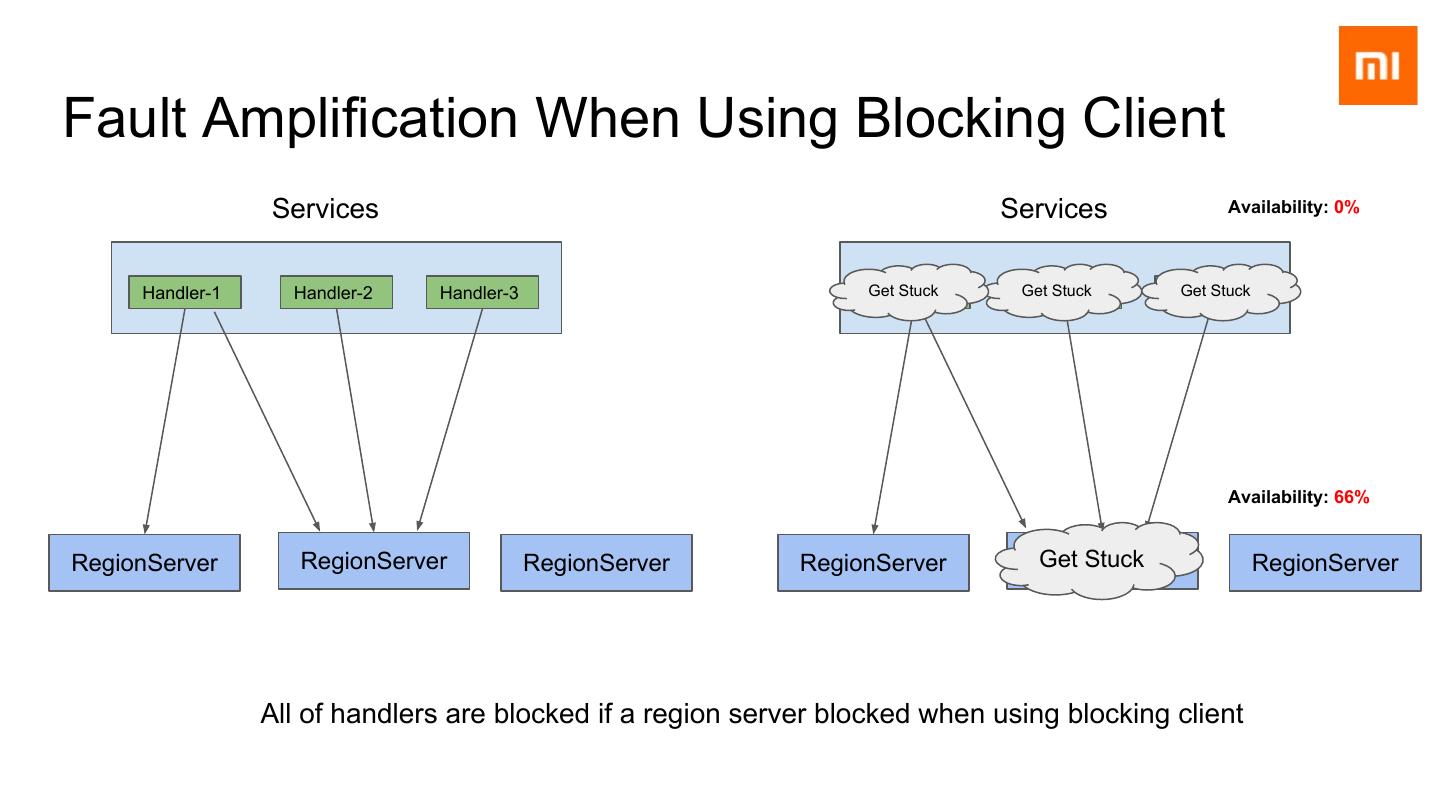

5 .Fault Amplification When Using Blocking Client

[Figure: a service with Handler-1, Handler-2 and Handler-3 calling three RegionServers. One RegionServer gets stuck, so RegionServer availability is 66%, but every handler gets stuck on it and service availability drops to 0%.]

All handlers end up blocked if one region server is blocked when using a blocking client.
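
A toy model of this effect, under simplifying assumptions that are not from the slides: requests arrive one at a time, are spread round-robin over the region servers, and a call to the stuck server never returns. The class and method names are hypothetical. With a blocking client, each such call permanently consumes a handler. With a non-blocking client, the call just stays pending and the handler moves on.

```java
final class FaultAmplification {
    // Blocking client: a request to the stuck server parks its handler forever.
    // Returns how many requests are served before no free handler remains.
    static int servedBlocking(int handlers, int servers, int stuckServer, int requests) {
        int freeHandlers = handlers;
        int served = 0;
        for (int i = 0; i < requests; i++) {
            if (freeHandlers == 0) {
                break; // every handler is stuck: the service is unavailable
            }
            if (i % servers == stuckServer) {
                freeHandlers--; // this handler never comes back
            } else {
                served++;
            }
        }
        return served;
    }

    // Non-blocking client: a request to the stuck server stays pending,
    // but no handler is held, so requests to healthy servers keep succeeding.
    static int servedNonBlocking(int servers, int stuckServer, int requests) {
        int served = 0;
        for (int i = 0; i < requests; i++) {
            if (i % servers != stuckServer) {
                served++;
            }
        }
        return served;
    }

    public static void main(String[] args) {
        System.out.println("blocking:     " + servedBlocking(3, 3, 0, 100) + " of 100 served");
        System.out.println("non-blocking: " + servedNonBlocking(3, 0, 100) + " of 100 served");
    }
}
```

In this model, 3 handlers and 3 servers with one stuck give 4 served requests out of 100 for the blocking client, against 66 for the non-blocking one, which tracks the share of healthy region servers.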

6 .Why Async HBase Client ?

- Region Server / Master STW GC
- Slow RPC to HDFS
- Region Server crash
- High load
- Network failure

By the way, HBase may also suffer from fault amplification when accessing HDFS, so should there be an AsyncDFSClient?

7 .Async HBase Client VS OpenTSDB/asynchbase

| | Async HBase Client | OpenTSDB/asynchbase (1.8) |
| --- | --- | --- |
| HBase Version | >=2.0.0 | Both 0.9x and 1.x.x |
| Table API | Every API in the blocking API | Parts of the blocking API |
| HBase Admin | Supported | Not Supported |
| Implementation | Included in the HBase project | Independent project based on the PB protocol |
| Coprocessor | Supported | Not Supported |

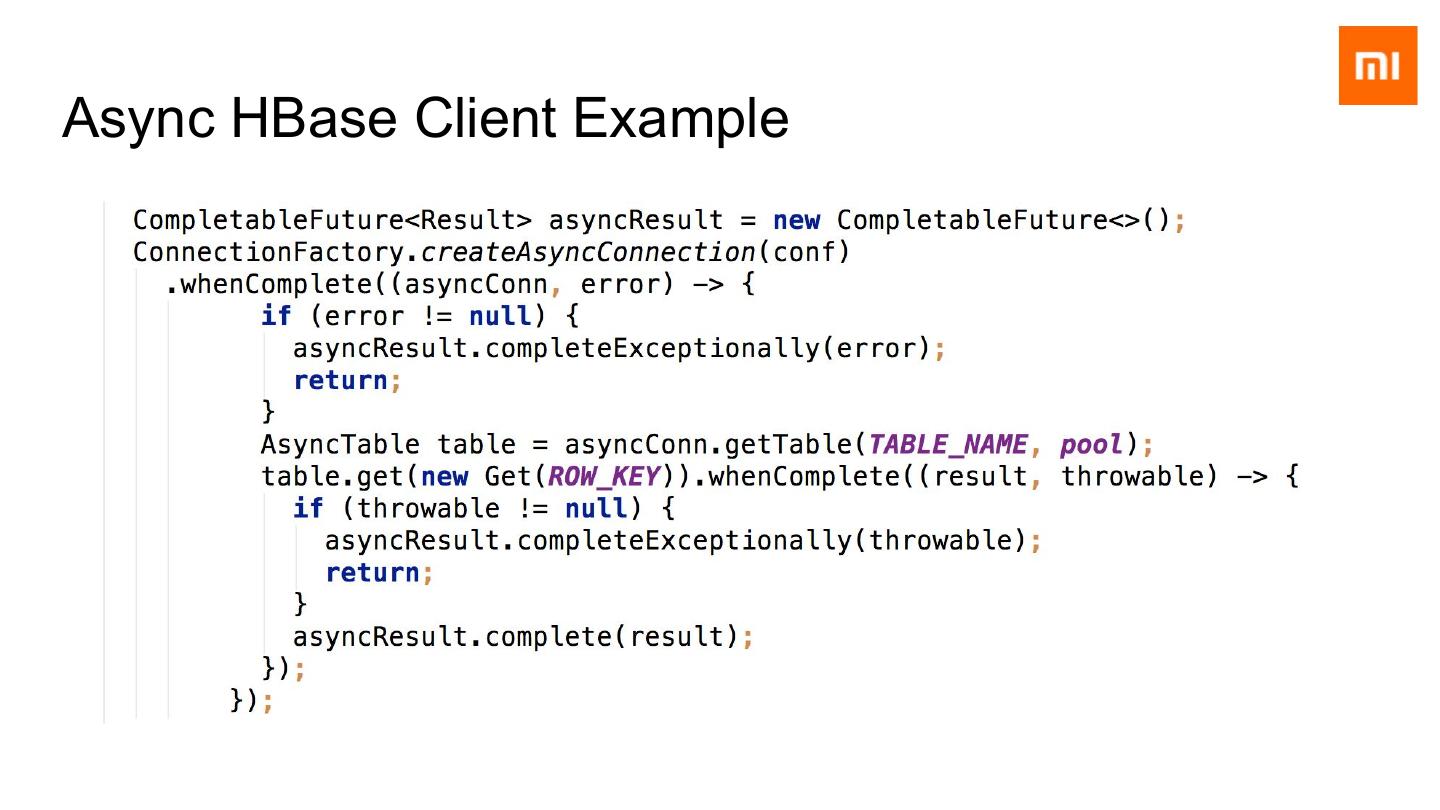

8 .Async HBase Client Example

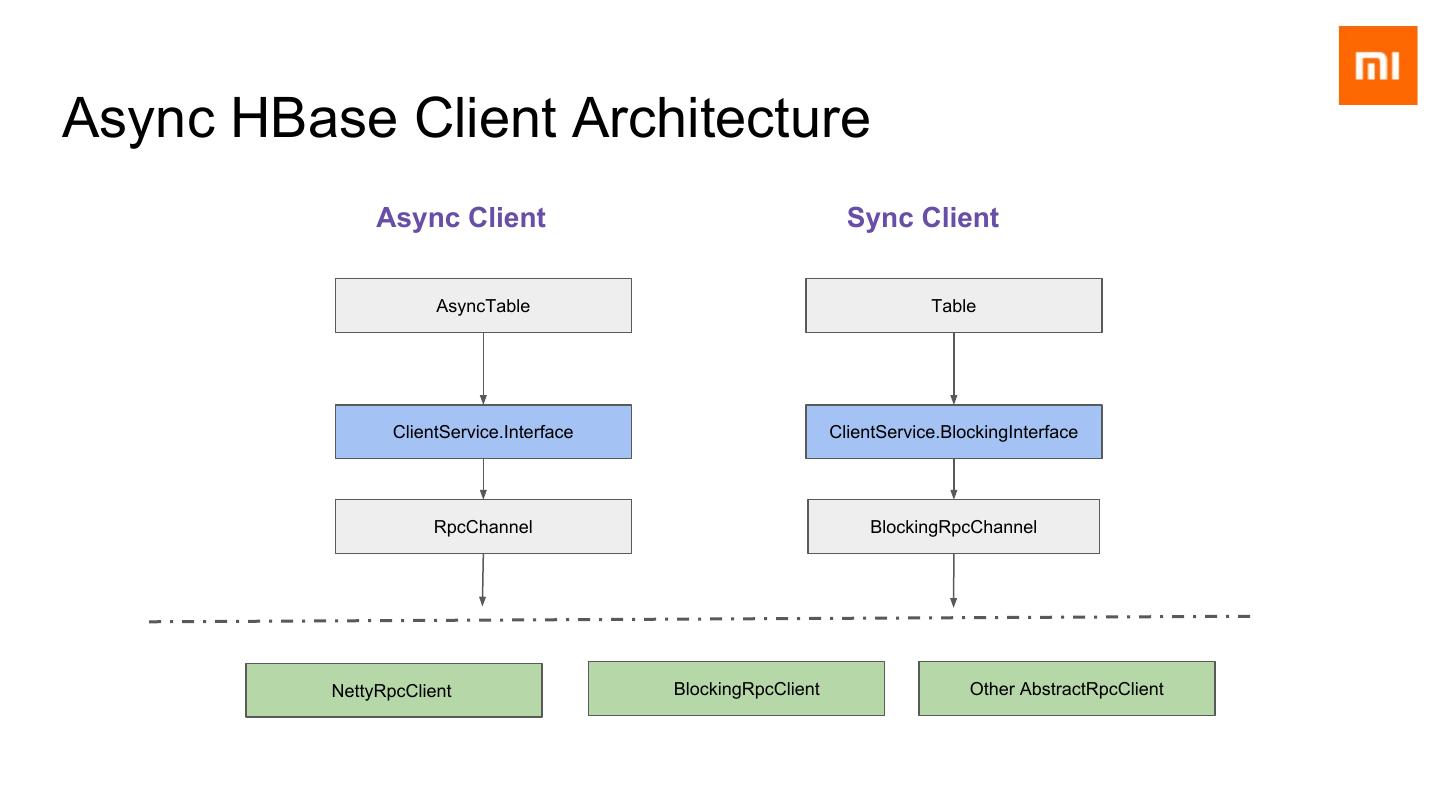

9 .Async HBase Client Architecture

[Figure: two layered stacks. Async Client: AsyncTable, ClientService.Interface, RpcChannel. Sync Client: Table, ClientService.BlockingInterface, BlockingRpcChannel. Below them: NettyRpcClient, BlockingRpcClient and Other, on top of AbstractRpcClient.]

10 .Performance Test

- Test async RPC by calling CompletableFuture.get() (based on Xiaomi HBase 0.98)
- Shows that the latency of the async client is at least as good as that of the blocking HBase client.

11 .Part-2 HBase + G1GC Tuning

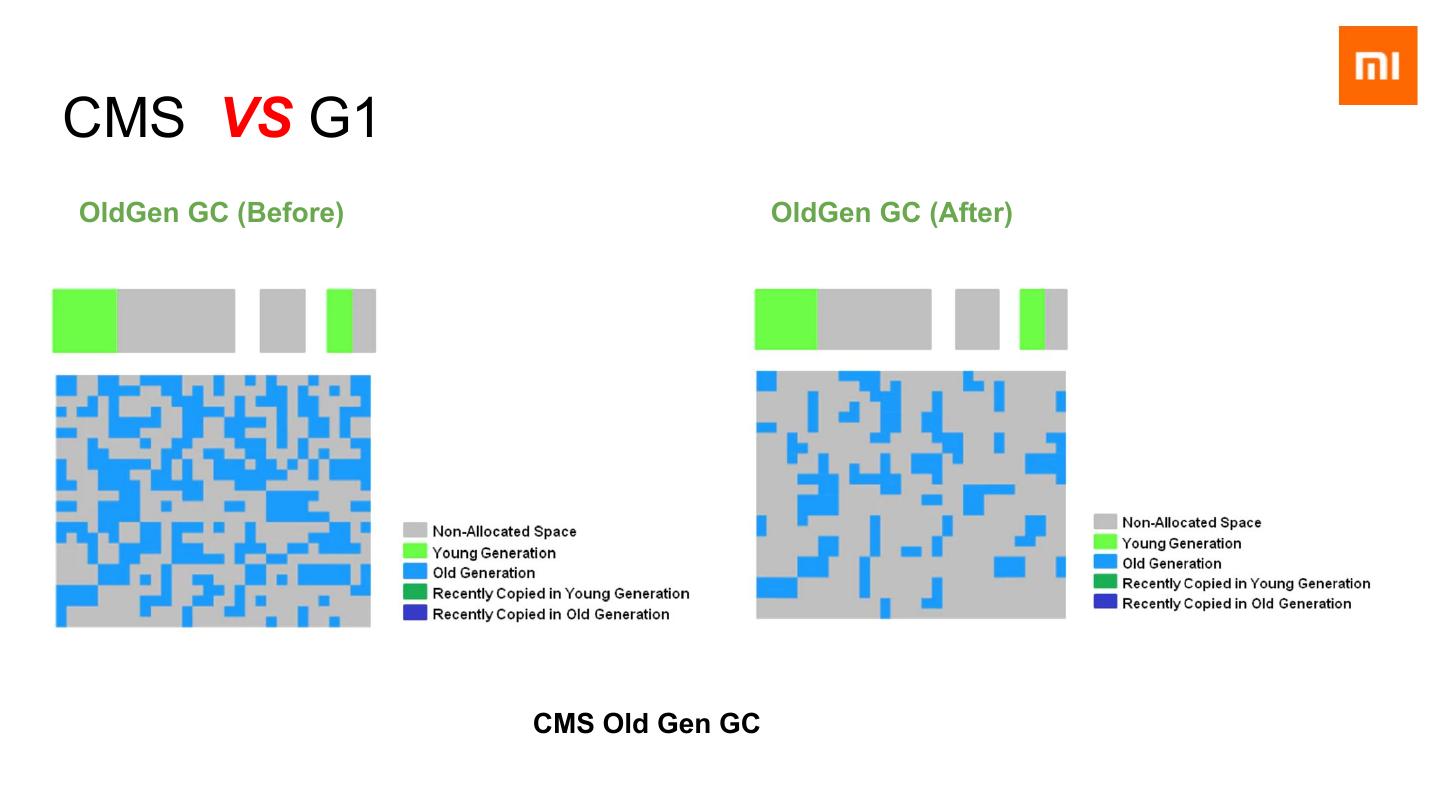

12 .CMS VS G1

[Figure: CMS Old Gen GC, showing the heap at OldGen GC (Before) and OldGen GC (After)]

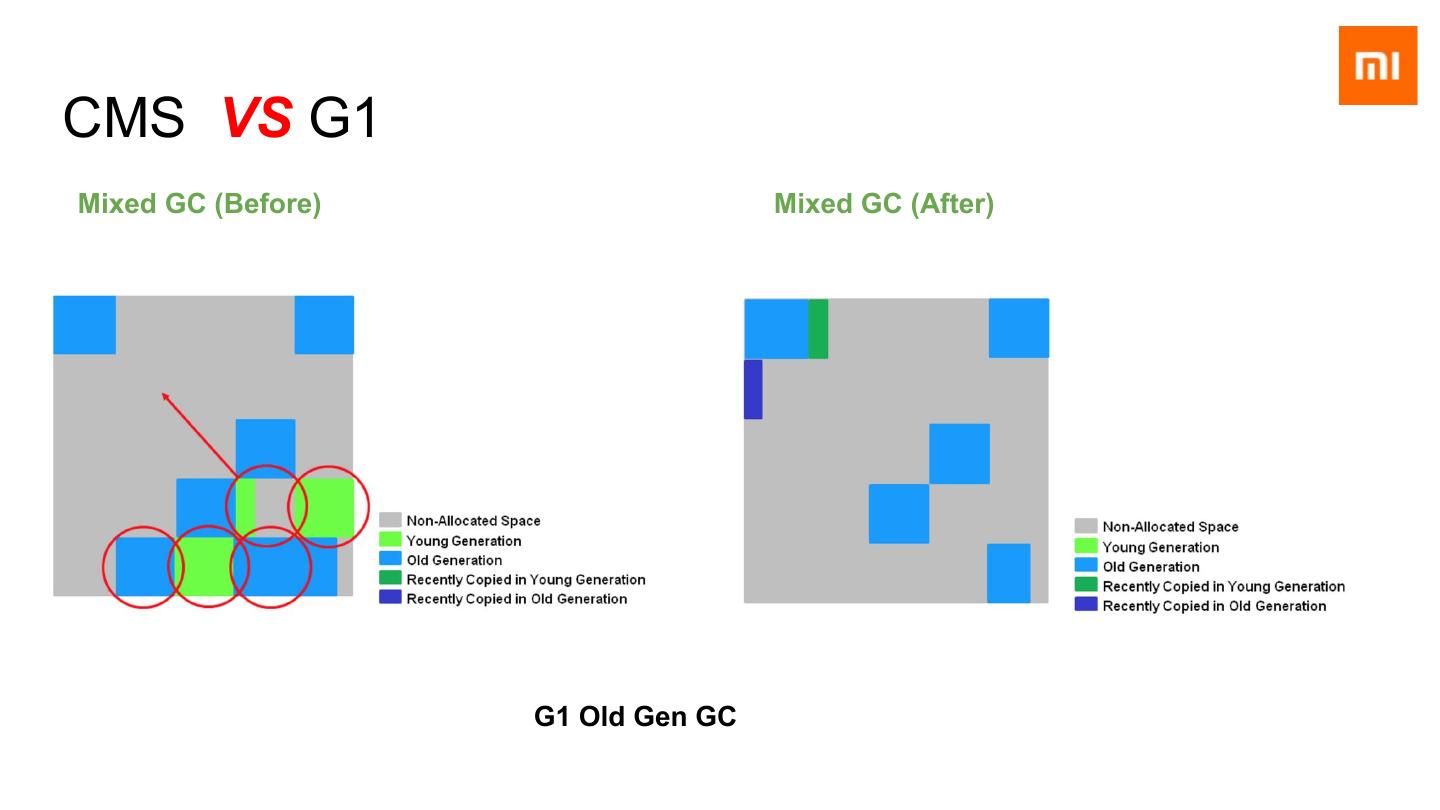

13 .CMS VS G1

[Figure: G1 Old Gen GC, showing the heap at Mixed GC (Before) and Mixed GC (After)]

14 .CMS VS G1

- STW Full GC
  - CMS can only compact fragments during a full GC, so in theory full GC cannot be avoided.
  - G1 compacts fragments incrementally over multiple mixed GCs, so it can avoid full GC.
- Heap Size
  - G1 is better suited to huge heaps than CMS.

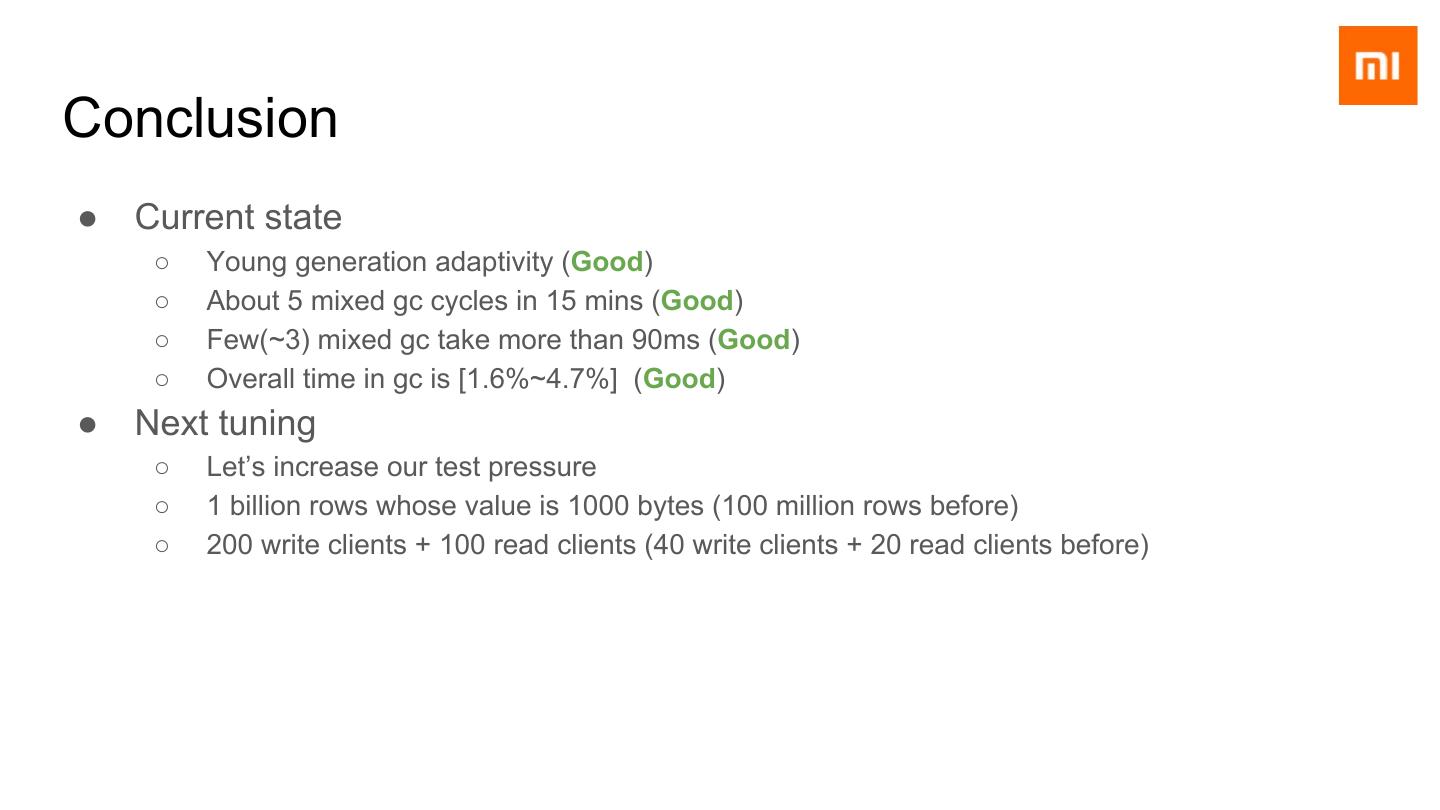

15 .Pressure Test

- Pre-load data
  - A new table with 400 regions
  - 100 million rows, each with a 1000-byte value
- Pressure test for G1GC tuning
  - 40 write clients + 20 read clients
  - 1 hour of testing for each JVM option change
- HBase configuration
  - 0.3 <= global memstore limit <= 0.45
  - hfile.block.cache.size = 0.1
  - hbase.hregion.memstore.flush.size = 256 MB
  - hbase.bucketcache.ioengine = offheap

16 .Test Environment

HBase cluster: 1 HMaster + 5 Region Servers

- Java: JDK 1.8.0_111
- Heap: 30G heap + 30G off-heap
- CPU: 24 cores
- Disk: 4T x 12 HDD
- Network interface: 10Gb/s

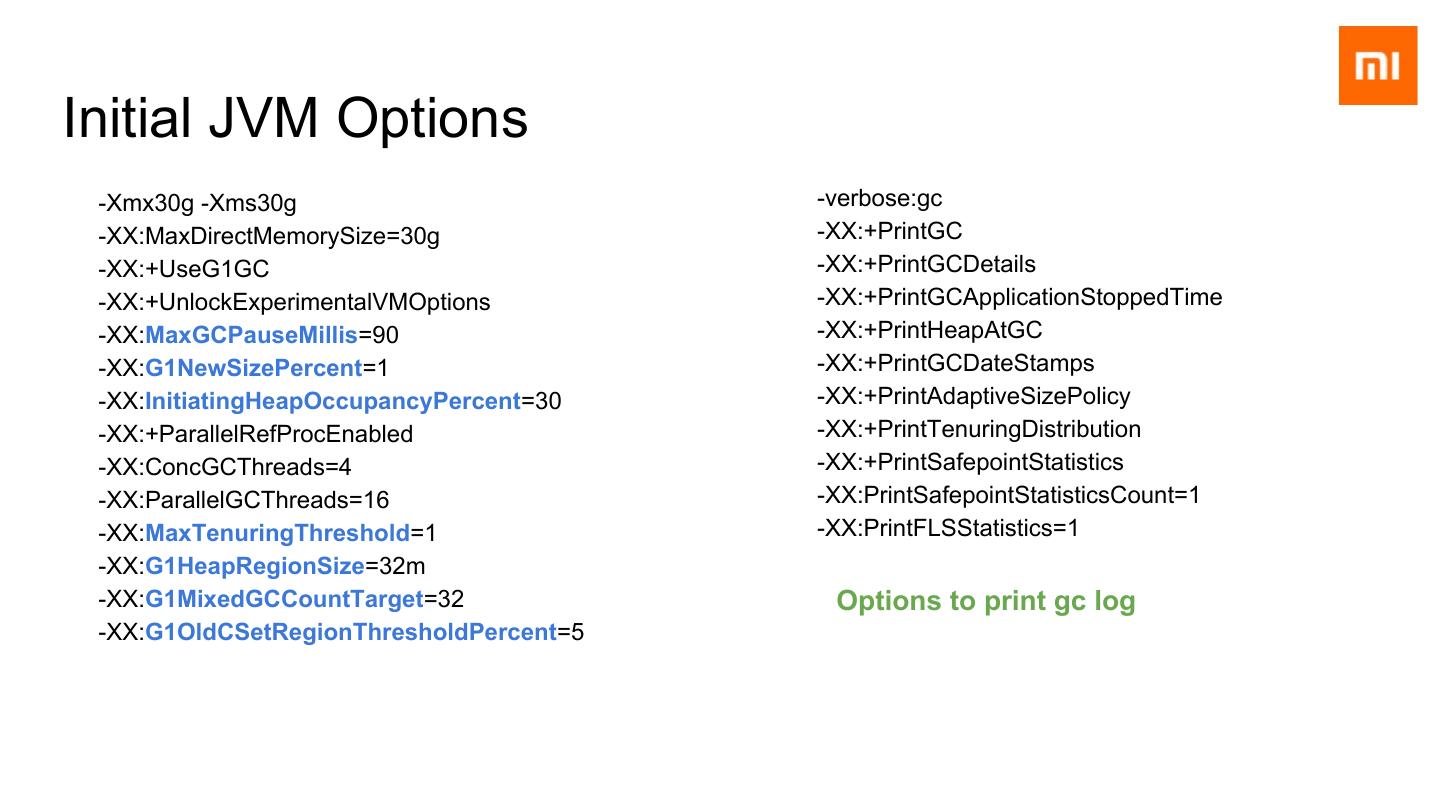

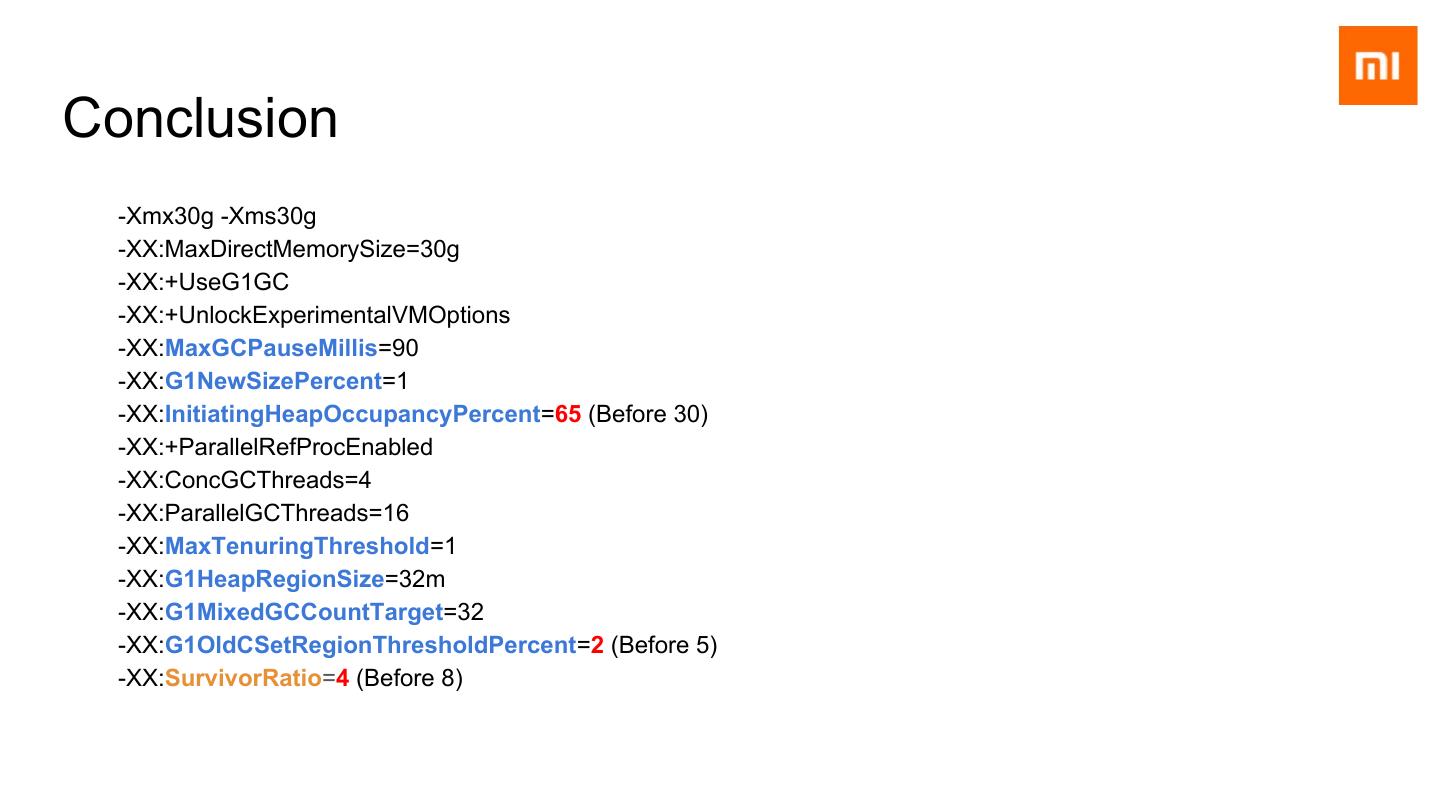

17 .Initial JVM Options

Heap and G1 options: `-Xmx30g` `-Xms30g` `-XX:MaxDirectMemorySize=30g` `-XX:+UseG1GC` `-XX:+UnlockExperimentalVMOptions` `-XX:MaxGCPauseMillis=90` `-XX:G1NewSizePercent=1` `-XX:InitiatingHeapOccupancyPercent=30` `-XX:+ParallelRefProcEnabled` `-XX:ConcGCThreads=4` `-XX:ParallelGCThreads=16` `-XX:MaxTenuringThreshold=1` `-XX:G1HeapRegionSize=32m` `-XX:G1MixedGCCountTarget=32` `-XX:G1OldCSetRegionThresholdPercent=5`

Options to print the GC log: `-verbose:gc` `-XX:+PrintGC` `-XX:+PrintGCDetails` `-XX:+PrintGCApplicationStoppedTime` `-XX:+PrintHeapAtGC` `-XX:+PrintGCDateStamps` `-XX:+PrintAdaptiveSizePolicy` `-XX:+PrintTenuringDistribution` `-XX:+PrintSafepointStatistics` `-XX:PrintSafepointStatisticsCount=1` `-XX:PrintFLSStatistics=1`

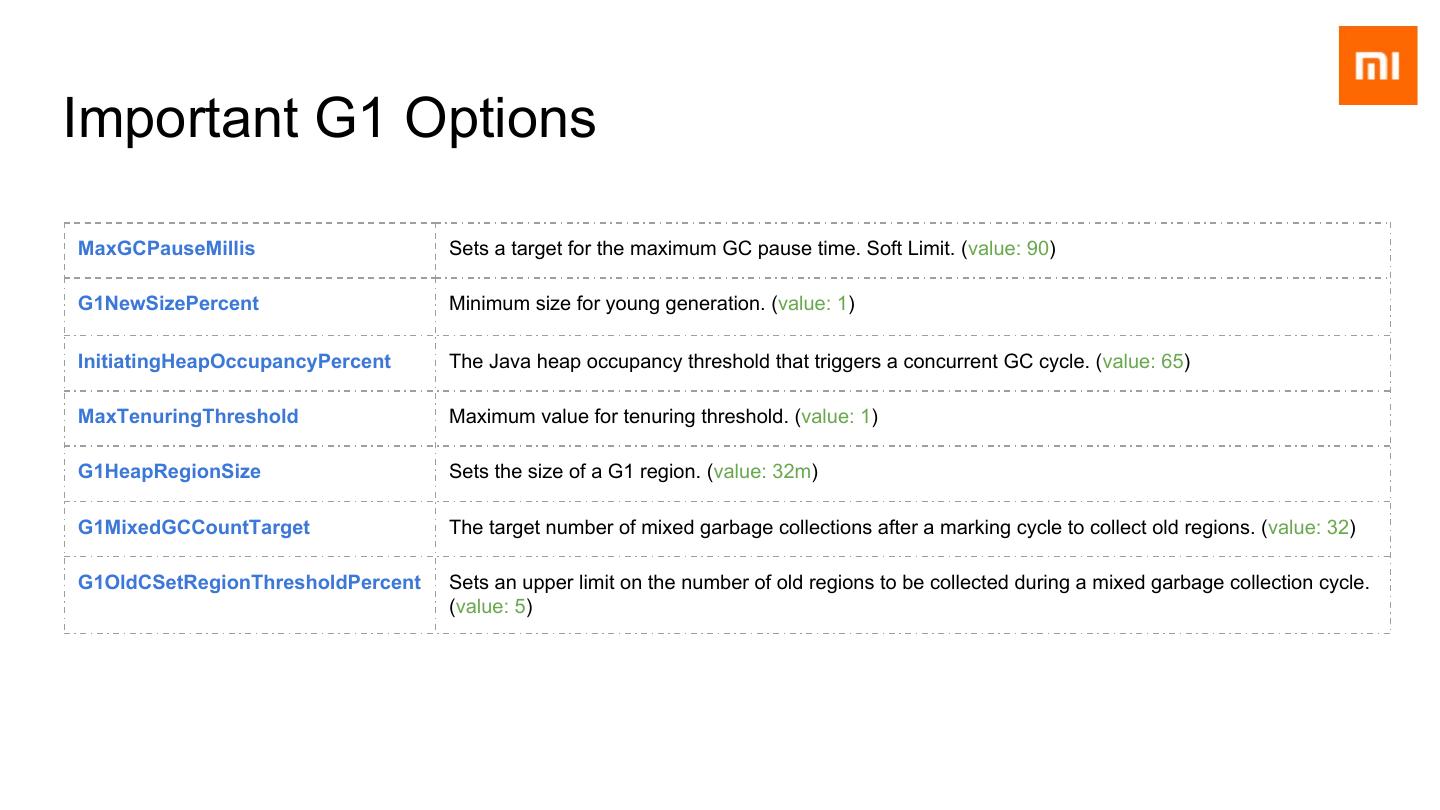

18 .Important G1 Options

| Option | Description | Value |
| --- | --- | --- |
| MaxGCPauseMillis | Sets a target for the maximum GC pause time. Soft limit. | 90 |
| G1NewSizePercent | Minimum size for young generation. | 1 |
| InitiatingHeapOccupancyPercent | The Java heap occupancy threshold that triggers a concurrent GC cycle. | 65 |
| MaxTenuringThreshold | Maximum value for tenuring threshold. | 1 |
| G1HeapRegionSize | Sets the size of a G1 region. | 32m |
| G1MixedGCCountTarget | The target number of mixed garbage collections after a marking cycle to collect old regions. | 32 |
| G1OldCSetRegionThresholdPercent | Sets an upper limit on the number of old regions to be collected during a mixed garbage collection cycle. | 5 |

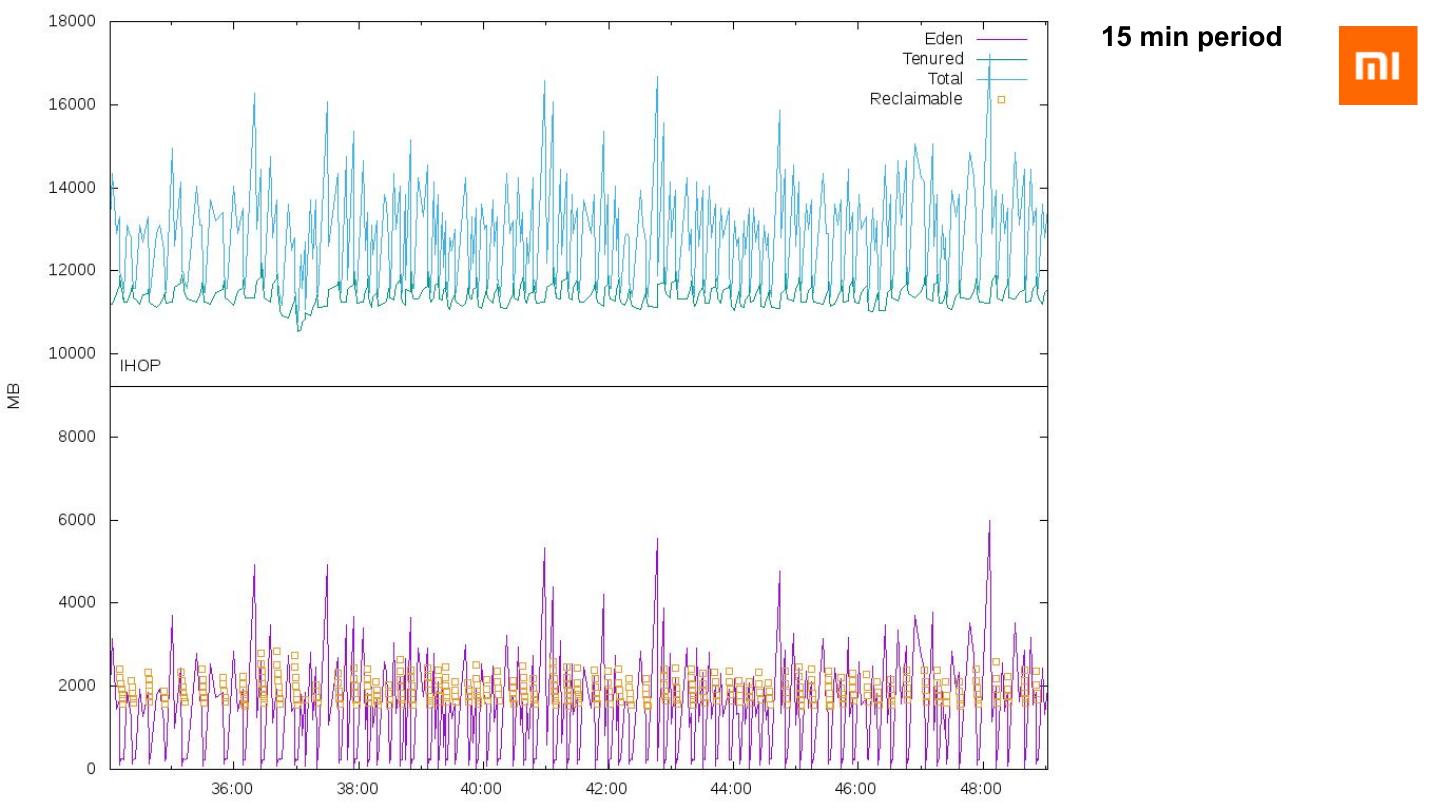

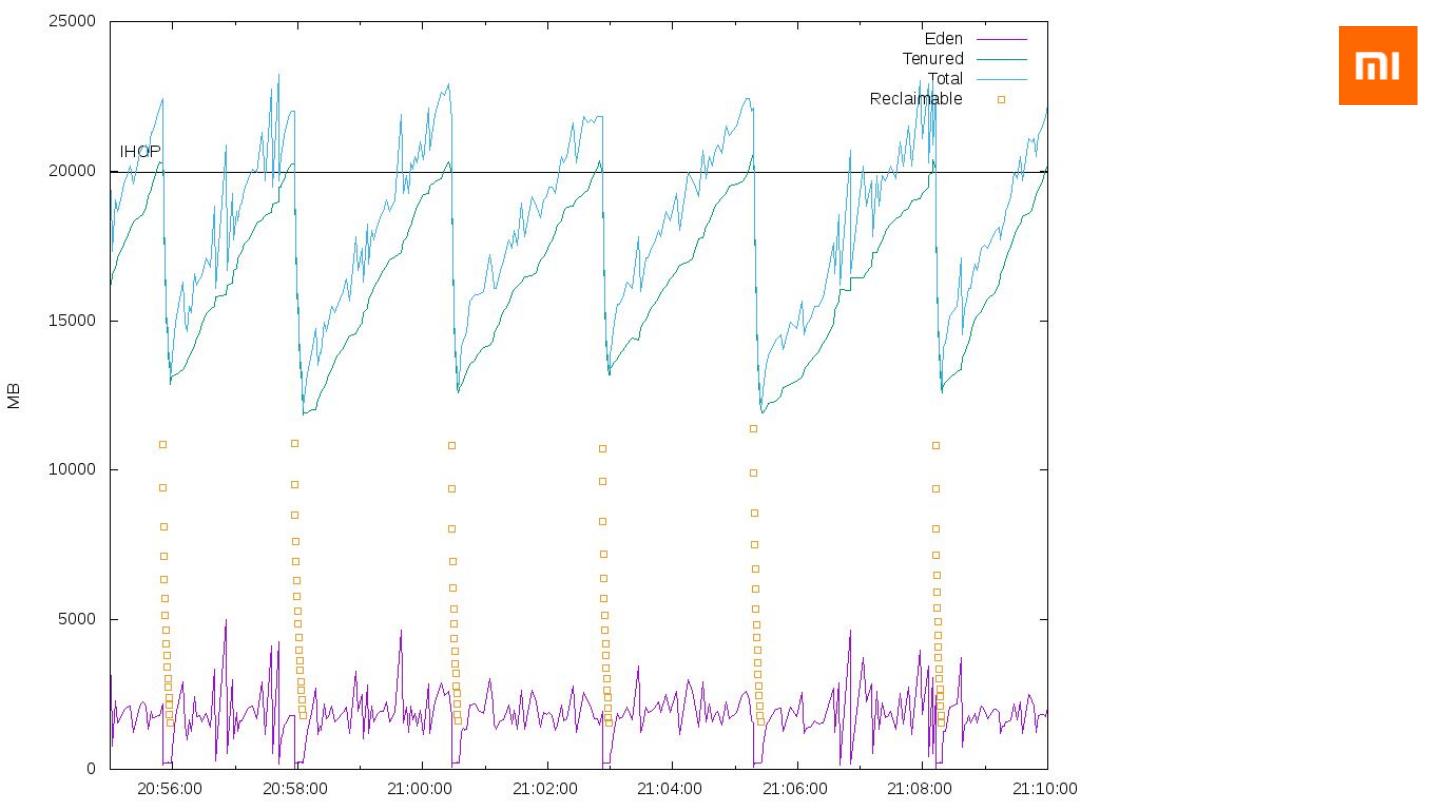

19 .15 min period

20 .15 min period

- Real heap usage is much higher than IHOP (Bad)
- 70 mixed GC cycles in 15 min, which is too frequent (Bad)
- Each mixed GC cycle reclaims little garbage (Bad)
- Young gen adaptivity (Good)

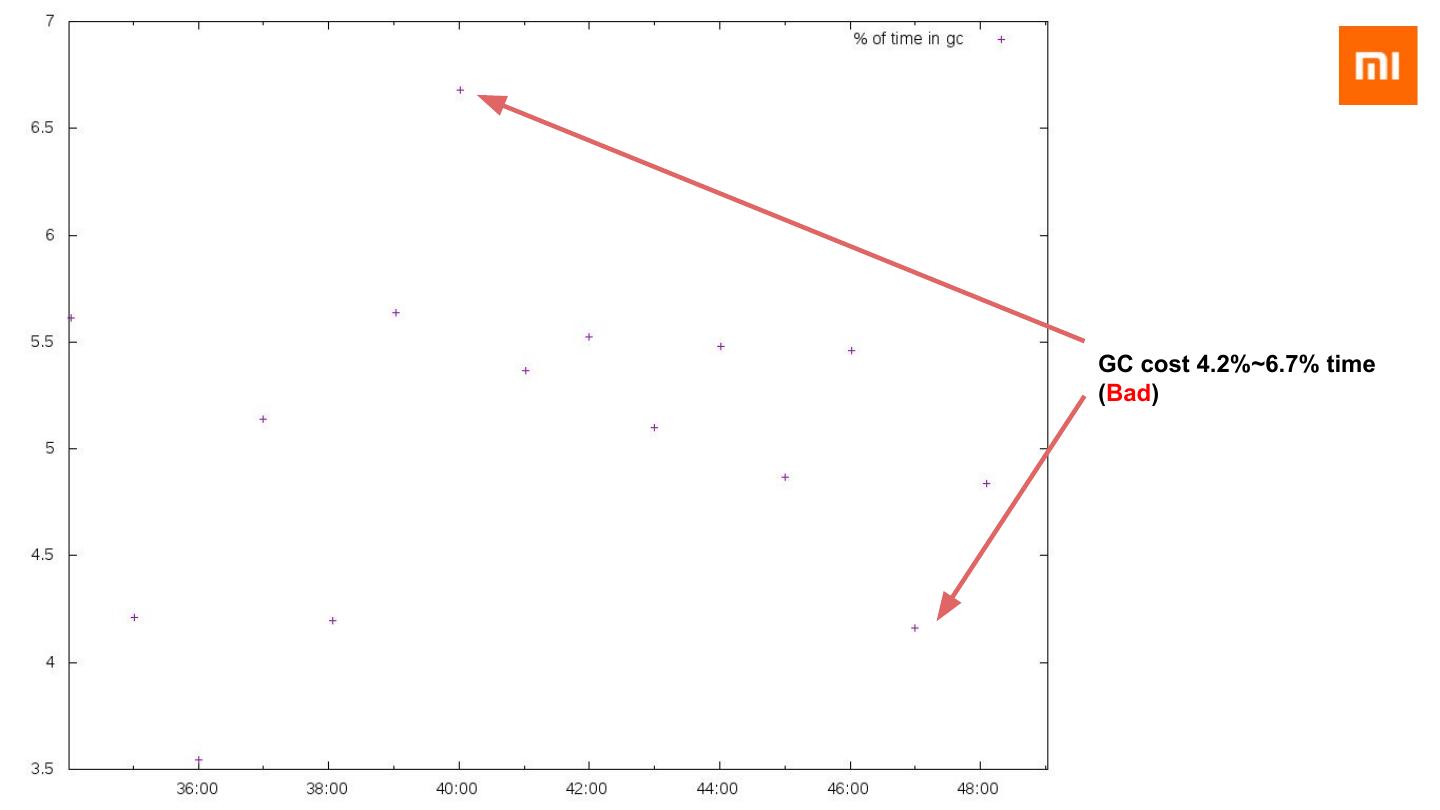

21 .GC takes 4.2%~6.7% of the time (Bad)

22 .Tuning #1

- How do we tune?
  - IHOP > MaxMemstoreSize%Heap + L1CacheSize%Heap + Delta (~10%)
  - The bucket cache is off-heap, so it does not need to be considered when tuning IHOP
- Next tuning
  - MemstoreSize = 45%, L1CacheSize ~ 10%, Delta ~ 10%
  - Increase InitiatingHeapOccupancyPercent to 65
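
The IHOP rule of thumb is simple arithmetic, spelled out here as a small sketch. The class and method names are hypothetical; the inputs are the percentages given on the slide (memstore 45%, L1 cache about 10%, delta about 10%).

```java
final class IhopEstimate {
    // Lower bound for InitiatingHeapOccupancyPercent: the long-lived on-heap data
    // (memstore + L1 block cache) plus some headroom, all as percentages of the heap.
    // The off-heap bucket cache is deliberately not an input.
    static int ihopLowerBoundPercent(int memstorePercent, int l1CachePercent, int deltaPercent) {
        return memstorePercent + l1CachePercent + deltaPercent;
    }

    public static void main(String[] args) {
        int ihop = ihopLowerBoundPercent(45, 10, 10);
        System.out.println("-XX:InitiatingHeapOccupancyPercent=" + ihop);
    }
}
```

With the slide's numbers the sum is 65, which matches the value chosen for the next round of tuning.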

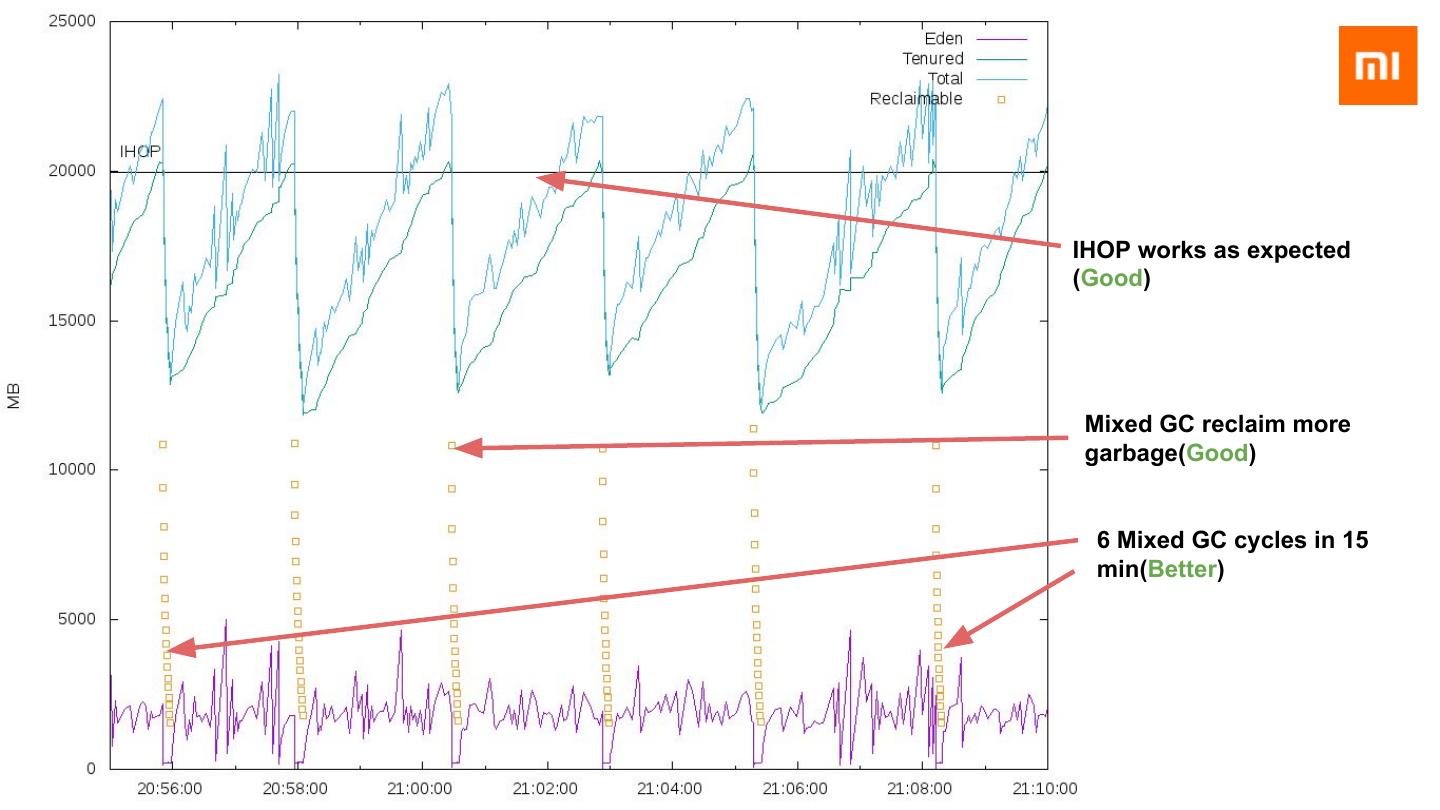

23 .

24 .After tuning #1

- IHOP works as expected (Good)
- Mixed GC reclaims more garbage (Good)
- 6 mixed GC cycles in 15 min (Better)

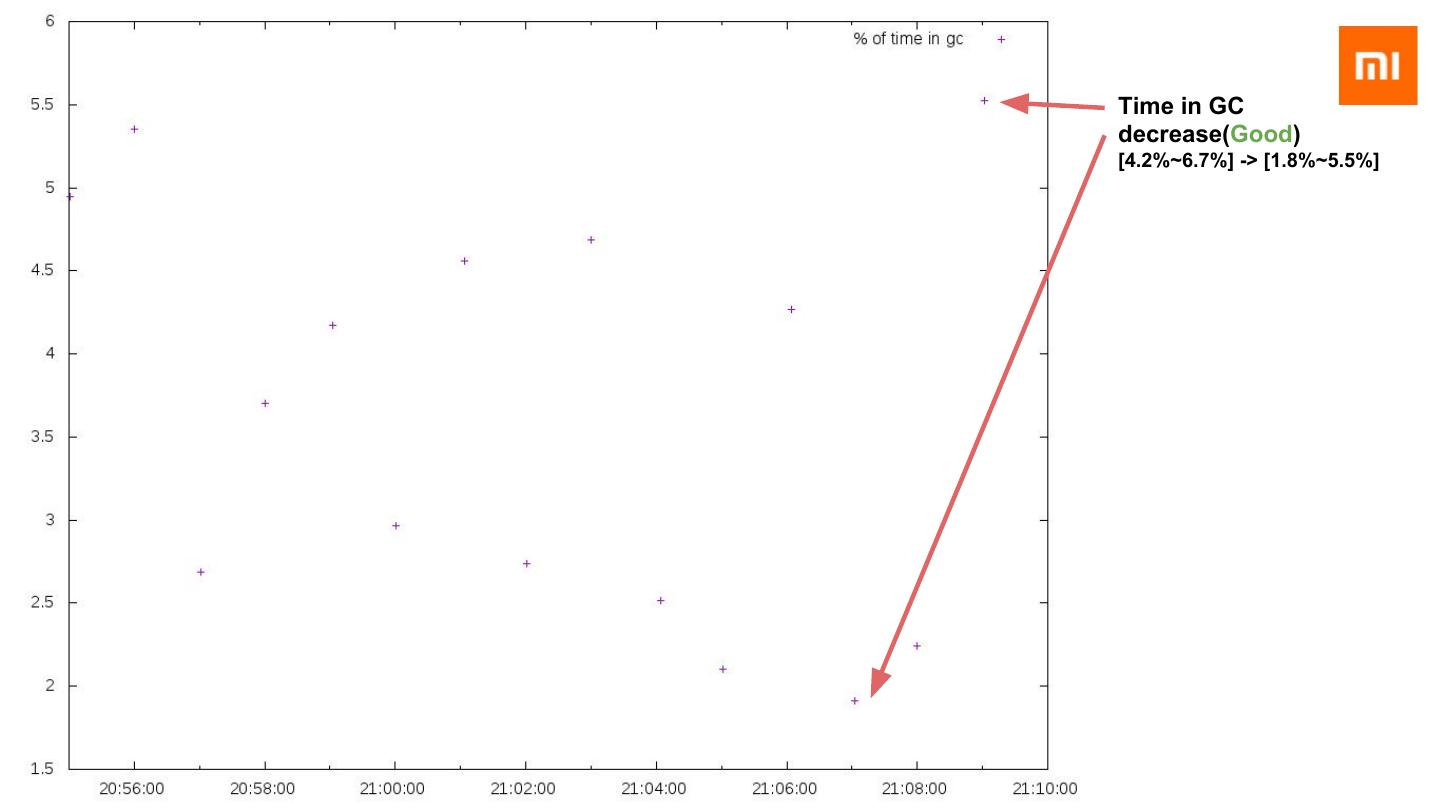

25 .Time in GC decreases (Good): [4.2%~6.7%] -> [1.8%~5.5%]

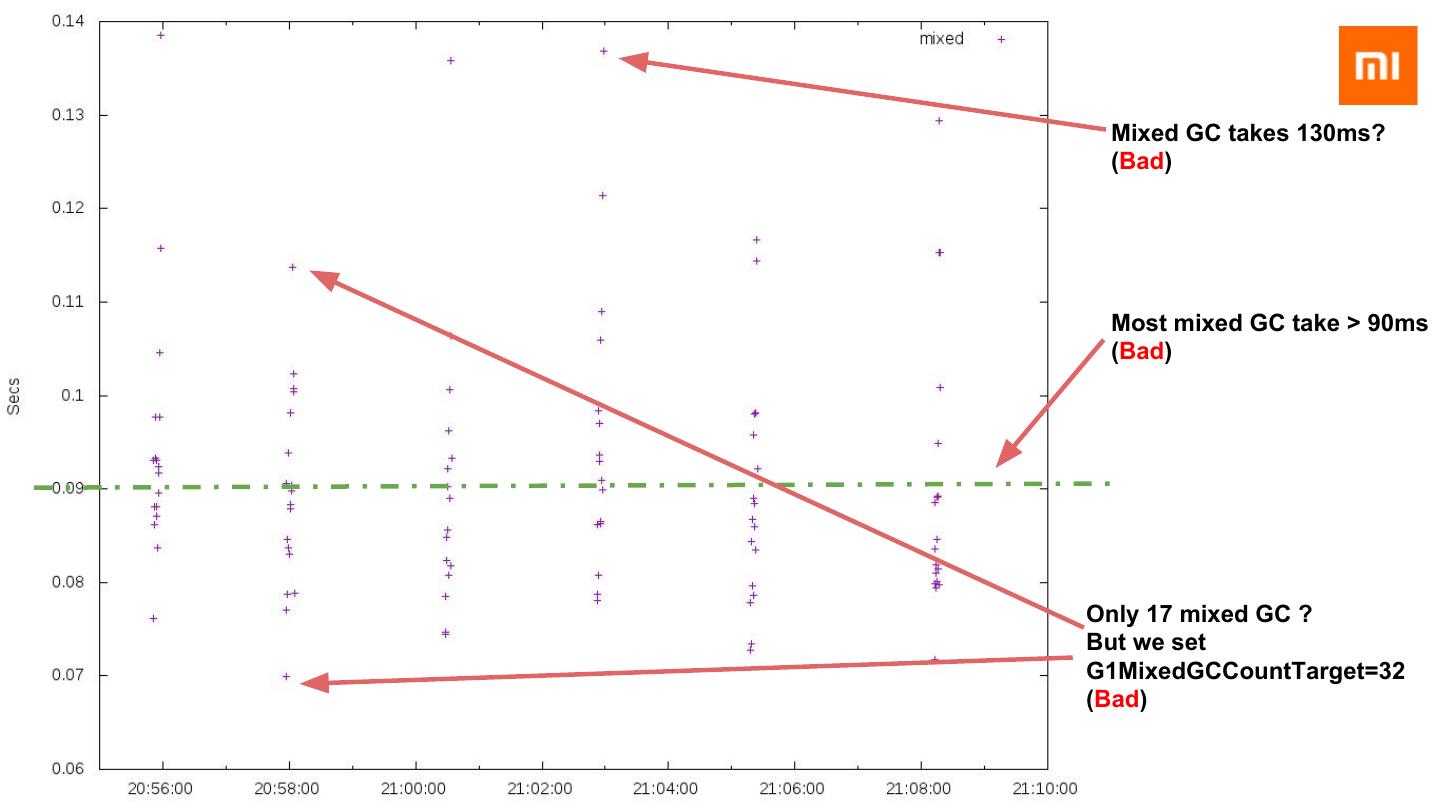

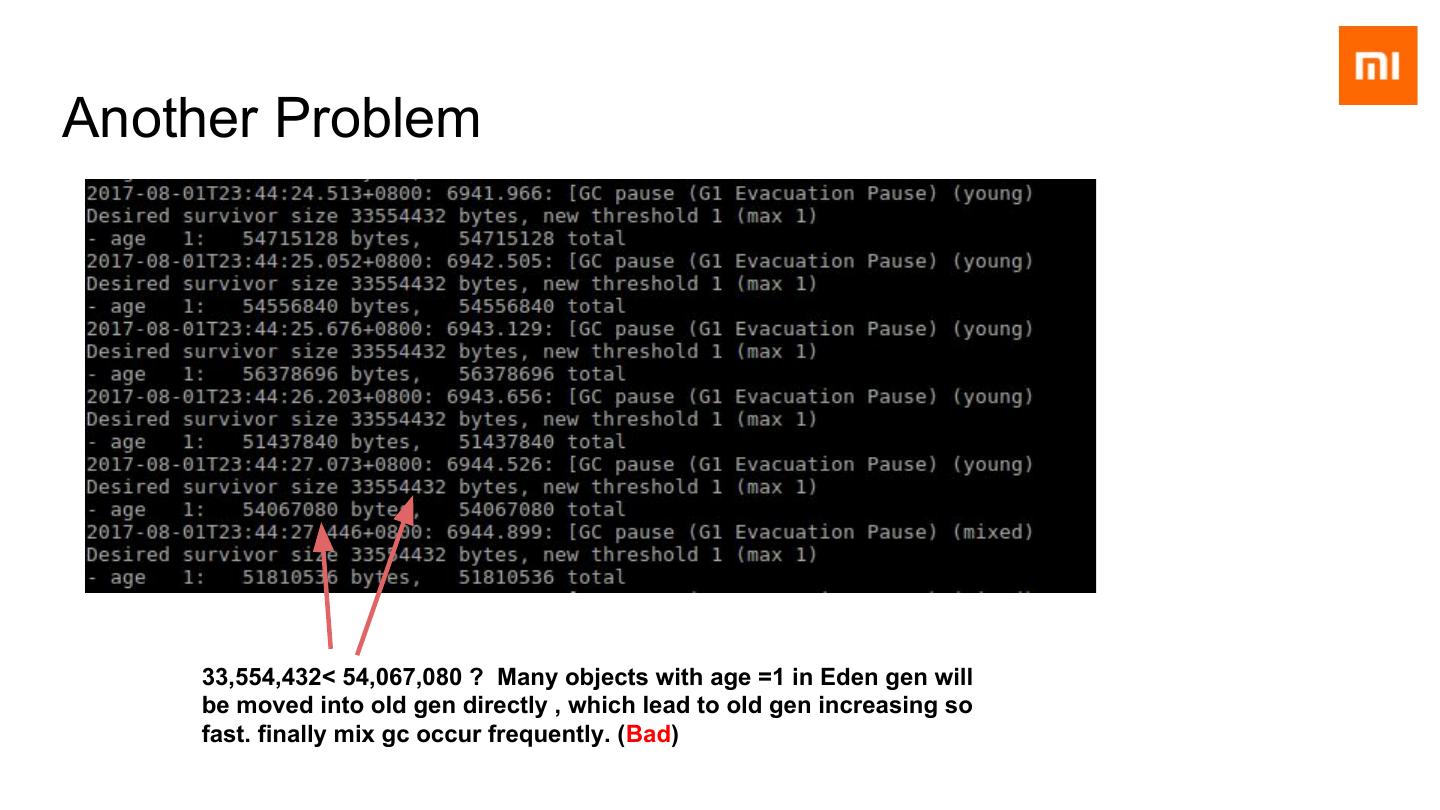

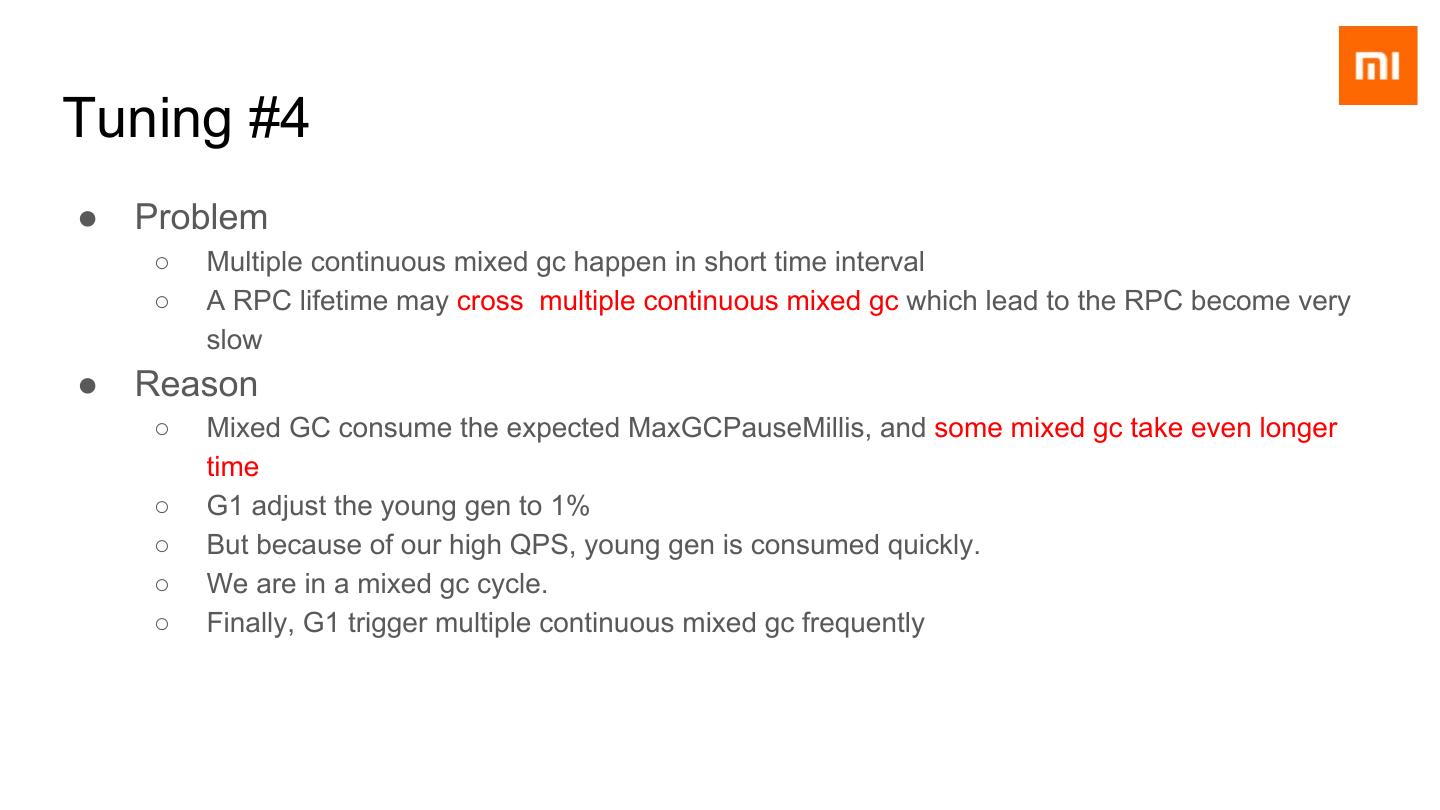

26 .Remaining problems

- A mixed GC takes 130ms? (Bad)
- Most mixed GCs take > 90ms (Bad)
- Only 17 mixed GCs, although we set G1MixedGCCountTarget=32 (Bad)

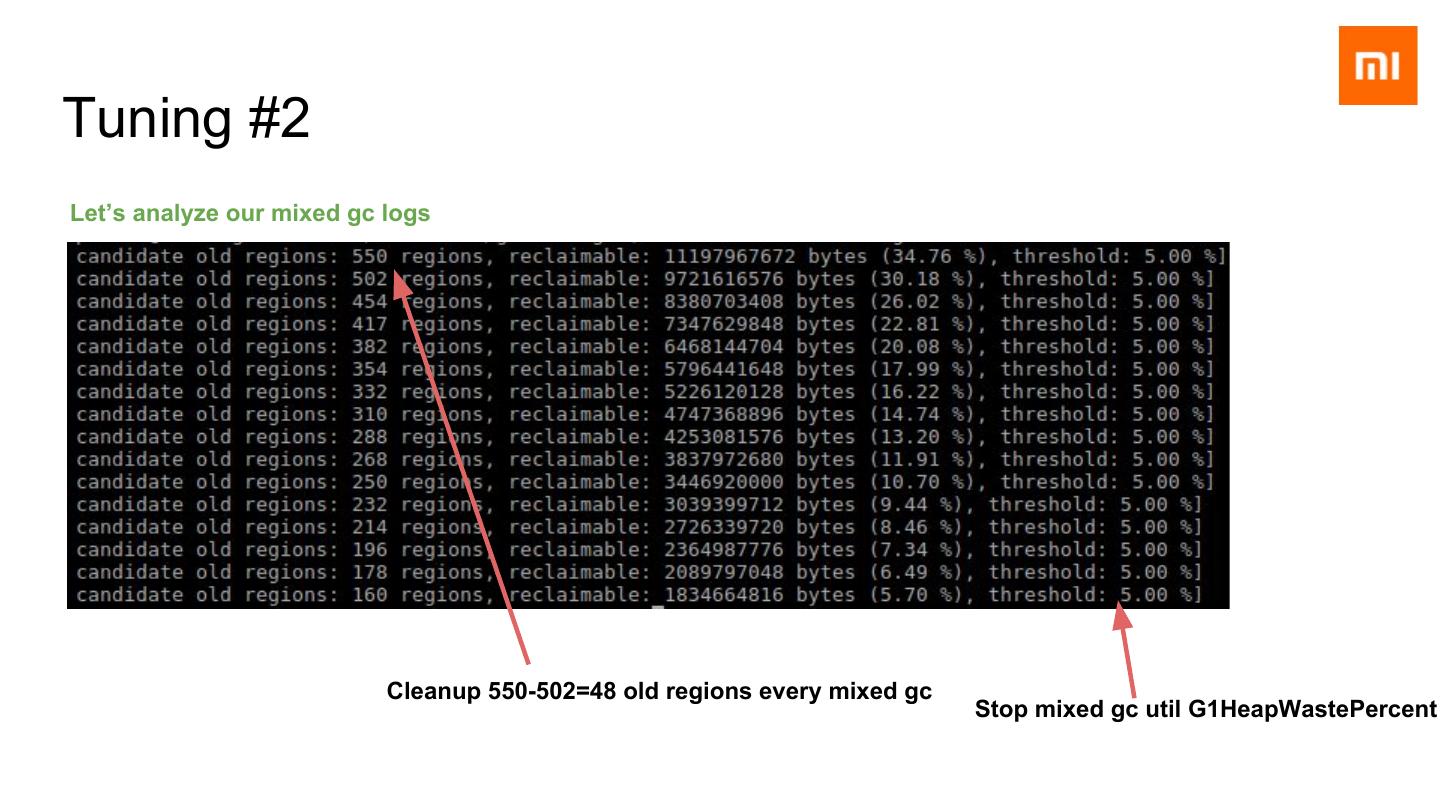

27 .Tuning #2 Let’s analyze our mixed gc logs

28 .Tuning #2 Let’s analyze our mixed gc logs

- Each mixed GC cleans up 550-502=48 old regions
- Mixed GC stops once G1HeapWastePercent is reached

29 .Tuning #2

- How do we tune?
  - G1OldCSetRegionThresholdPercent is 5.
  - 48 regions * 32 MB (= 1536 MB) <= 30 GB * 1024 * 0.05 (= 1536 MB)
  - Try decreasing G1OldCSetRegionThresholdPercent to reduce mixed GC time.
- Next tuning
  - Decrease G1OldCSetRegionThresholdPercent to 2
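
The region arithmetic can be checked with a short sketch. The names are hypothetical, and rounding the region count up is an assumption about how G1 applies the percentage, not something stated in the slides. With a 30 GB heap and 32 MB regions there are 960 regions, so a 5% threshold allows 48 old regions (1536 MB) per mixed GC.

```java
final class OldCSetLimit {
    // Number of G1 regions in the heap.
    static long heapRegions(long heapMb, long regionMb) {
        return heapMb / regionMb;
    }

    // Upper bound on old regions collected per mixed GC for a given
    // G1OldCSetRegionThresholdPercent. Rounding up is an assumption.
    static long maxOldRegionsPerMixedGc(long heapMb, long regionMb, int thresholdPercent) {
        long regions = heapRegions(heapMb, regionMb);
        return (regions * thresholdPercent + 99) / 100;
    }

    public static void main(String[] args) {
        long heapMb = 30L * 1024; // -Xmx30g
        long regionMb = 32;       // -XX:G1HeapRegionSize=32m
        for (int percent : new int[] {5, 2}) {
            long regions = maxOldRegionsPerMixedGc(heapMb, regionMb, percent);
            System.out.println(percent + "% -> " + regions + " regions, " + regions * regionMb + " MB");
        }
    }
}
```

Under the same assumption, lowering the threshold to 2 caps each mixed GC at about 20 old regions (640 MB), which is the lever the slide uses to shorten mixed GC pauses.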