- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Quanta:Quora的HBase分层计数系统

展开查看详情

1 . Quanta: Quora’s Hierarchical Counting System On HBase Nikhil Garg Chun-Ho Hung @nikhilgarg28 @chhung6 @Quora HBaseCon 6/12/17

2 .

3 .To Grow And Share World’s Knowledge

4 .

5 .

6 .

7 .Around 200 million monthly uniques Millions of questions & answers In hundreds of thousands of topics Supported by < 100 engineers

8 .Quora’s Big Data In Cloud ● All infrastructure is on AWS from t = 0 ● MySQL and HBase are online production databases ● Redshift: ds2.8xlarge boxes, hundreds of terabytes of data ● Spark: d2.2xlarge, runs HDFS + YARN_MR + Spark, hundreds of terabytes of data

9 . Quanta Over 1 Billion Realtime Updates/Reads Everyday

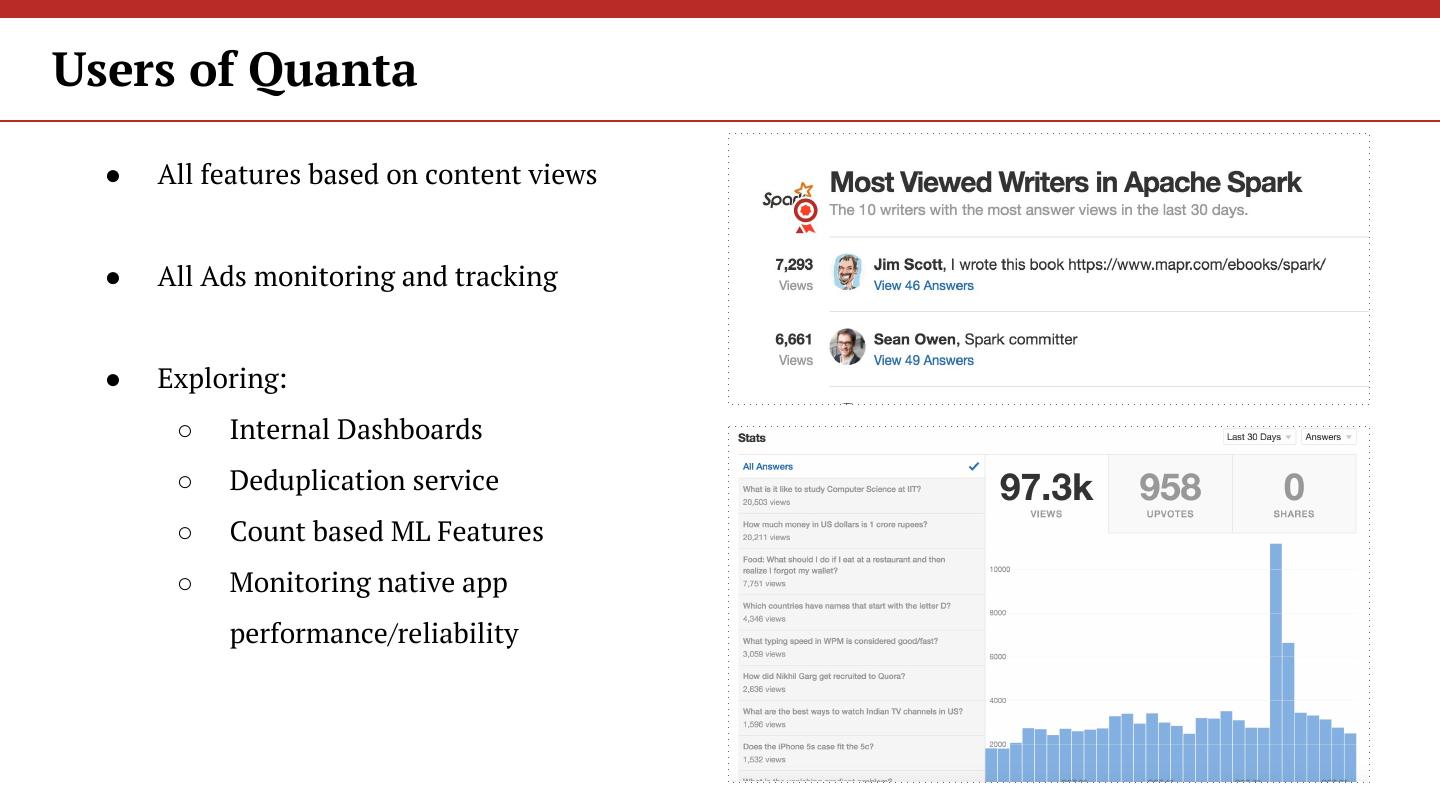

10 .Users of Quanta ● All features based on content views ● All Ads monitoring and tracking ● Exploring: ○ Internal Dashboards ○ Deduplication service ○ Count based ML Features ○ Monitoring native app performance/reliability

11 .1. Design Constraints 2. Update Algorithm 3. Macro Architecture 4. Towards Horizontal Scalability 5. Alternatives

12 .1. Design Constraints 2. Update Algorithm 3. Macro Architecture 4. Towards Horizontal Scalability 5. Alternatives

13 .

14 .Design Constraints ● An explicit commit point after which updates are never lost. ● Maintain billions of counters -- not bound by a single machine. ● Very high volume of writes (> 100K/sec) and reads (>1M/sec).

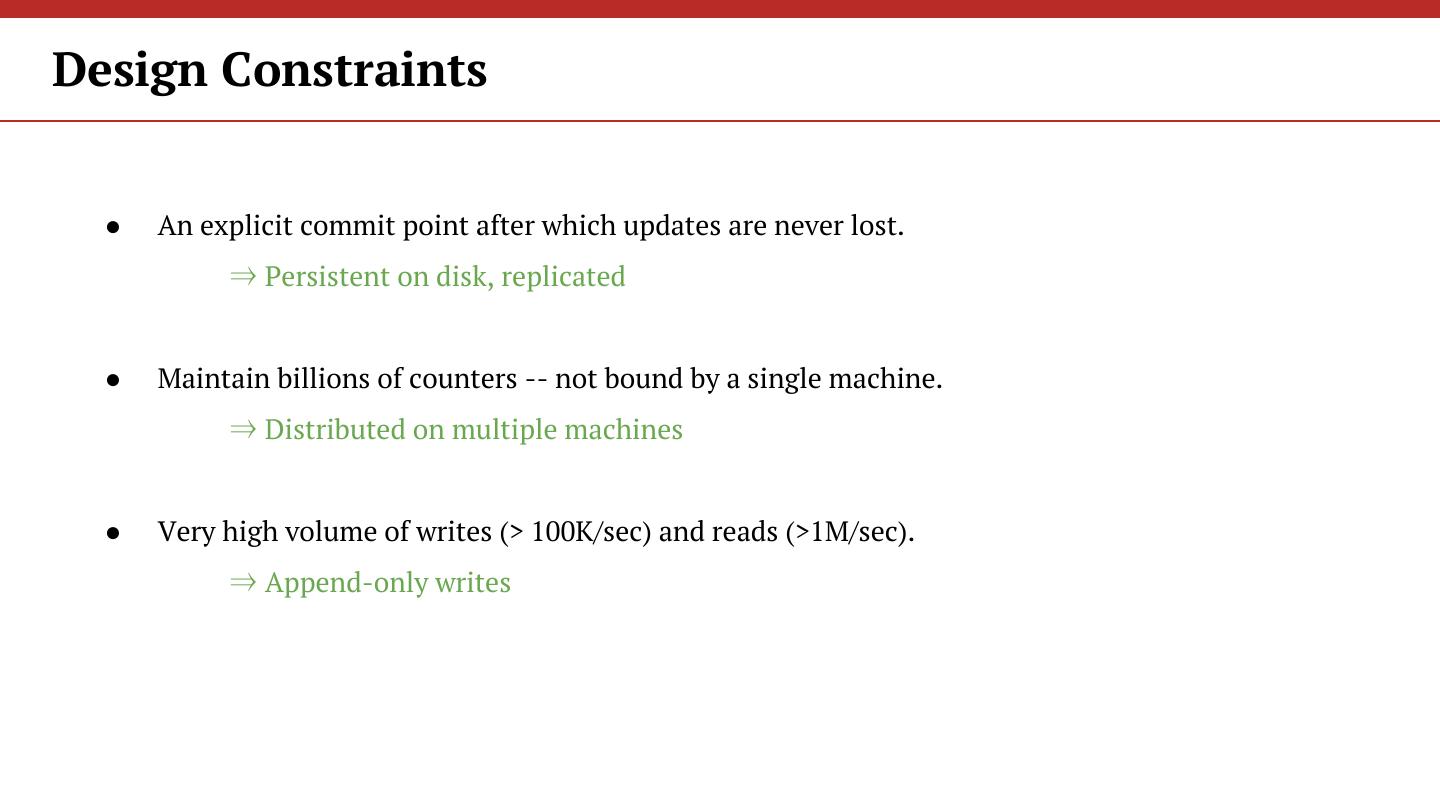

15 .Design Constraints ● An explicit commit point after which updates are never lost. ⇒ Persistent on disk, replicated ● Maintain billions of counters -- not bound by a single machine. ⇒ Distributed on multiple machines ● Very high volume of writes (> 100K/sec) and reads (>1M/sec). ⇒ Append-only writes

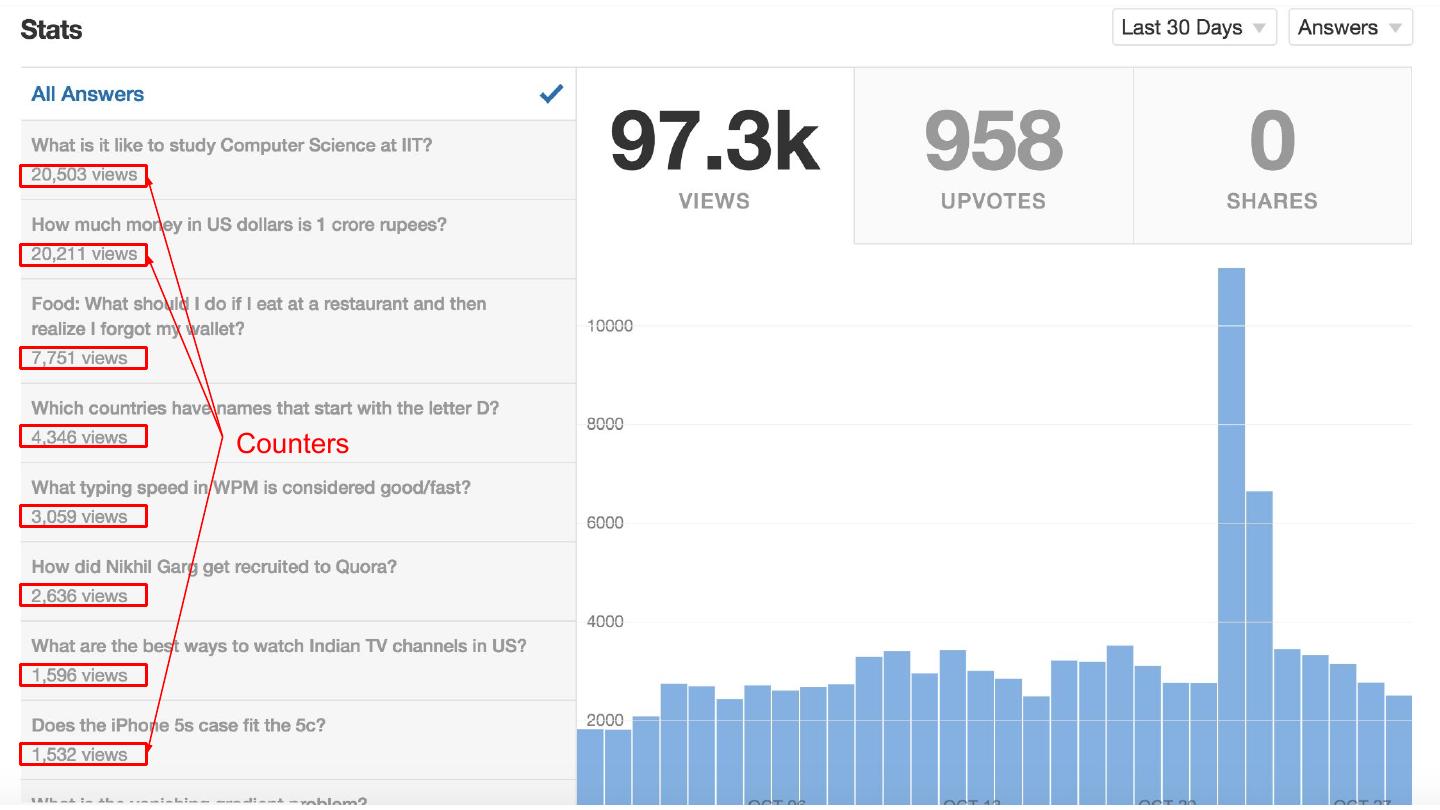

16 .Counters

17 .Design Constraints ● Low latency reads on a single counter and some “related” counters (~1ms)

18 .Design Constraints ● Low latency reads on a single counter and some “related” counters (~1ms) ⇒ avoid random reads in the same request ⇒ heavy in-memory caching ⇒ storing in range-sorted format

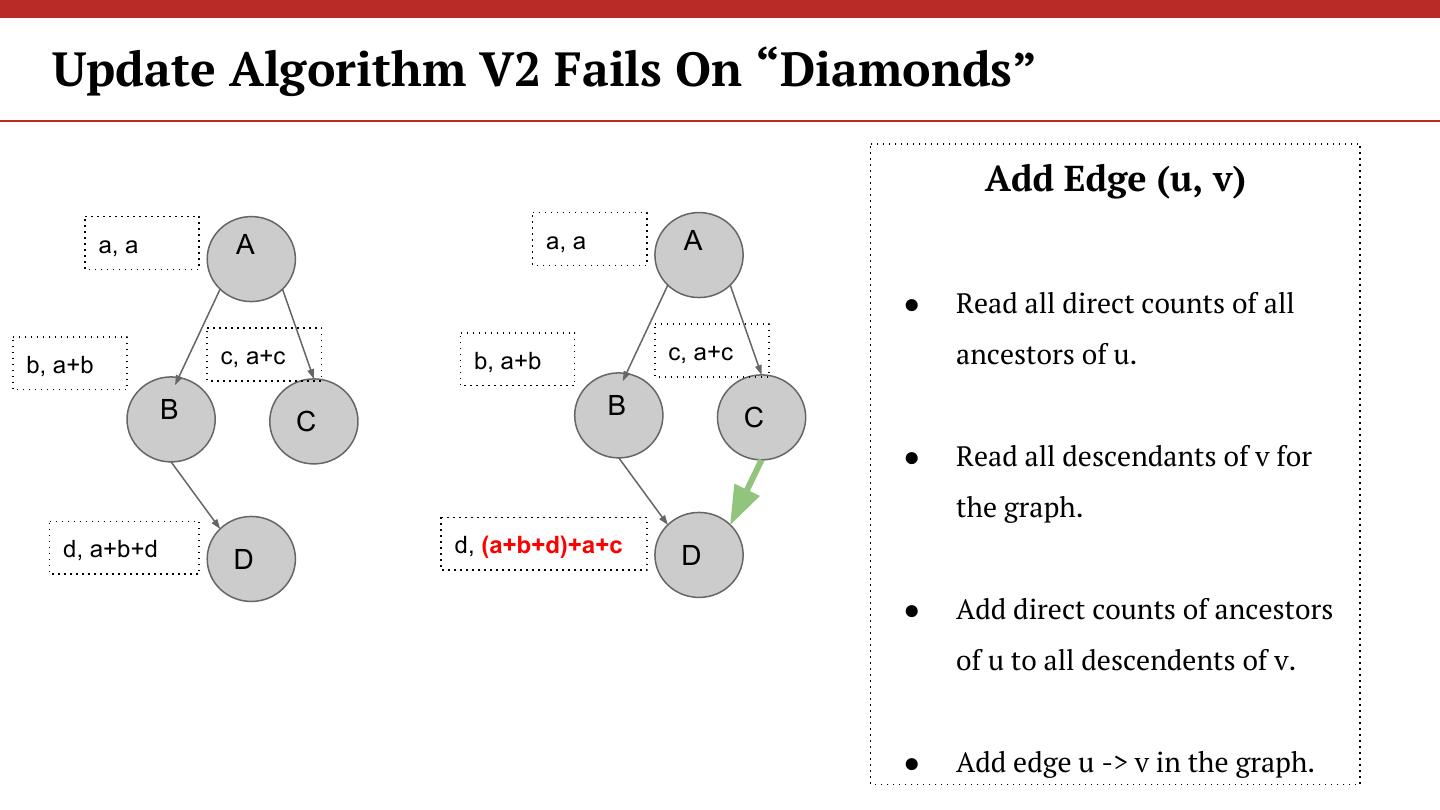

19 .Design Constraints ● Low latency reads on a single counter and some “related” counters (~1ms). ⇒ avoid random reads in the same request ⇒ heavy in-memory caching ⇒ storing in range-sorted format ● Writes lag behind by at most a couple of minutes.

20 .Design Constraints ● Low latency reads on a single counter and some “related” counters (~1ms). ⇒ avoid random reads in the same request ⇒ heavy in-memory caching ⇒ storing in range-sorted format ● Writes lag behind by at most a couple of minutes. ⇒ can do asynchronous writes

21 .Design Constraints ● Should be highly available for both reads and writes. ● Handle 2x the load with 2x the resources, should scale to 20-50x. ● Should be as cheap as possible.

22 . Time Windows Counter Time Series

23 .Arbitrary Time Bucketing ● Should support counting in rolling windows -- “How many times did this happen in last X”? ● Should support storing timeseries -- “How many times did this happen every X in the last Y?” ● X and Y can be arbitrary time units.

24 .Configurable Rolling Errors Sunday Monday Tuesday Wednesday Thursday Friday Saturday Last Week Sunday Monday Tuesday Wednesday Thursday Friday Saturday Sunday Last Week

25 .Aggregation Aggregation Groups

26 .Dynamic Hierarchical Aggregation Increments on aid1 and aid2 should be propagated along the edges to uid direct: 1 direct: 2 direct: 1 direct: 1 total: 1 aid1 total: 2 total: 1 aid1 total: 1 aid2 aid2 direct: 0 direct: 0 total: 3 uid total: 2 uid

27 .Dynamic Hierarchical Aggregation When edge aid2 → uid is removed, we should pull back all previously propagated counts direct: 1 direct: 1 direct: 1 direct: 1 total: 1 aid1 total: 1 total: 1 aid1 total: 1 aid2 aid2 direct: 0 direct: 0 total: 1 uid total: 2 uid

28 .Arbitrary DAGs

29 .1. Design Constraints 2. Update Algorithm 3. Macro Architecture 4. Towards Horizontal Scalability 5. Alternatives