Apache Kylin – Customization in Streaming

1. Apache Kylin – Customization in Streaming
Sean Zong

2. Agenda
- Streaming Cube of Apache Kylin
- Streaming Data Re-formatting in Real-time Business Scenarios
- Customized Kafka Parser
- Implementation & Furthermore

© Kyligence Inc. 2018, Confidential.

3. Streaming Cube of Apache Kylin

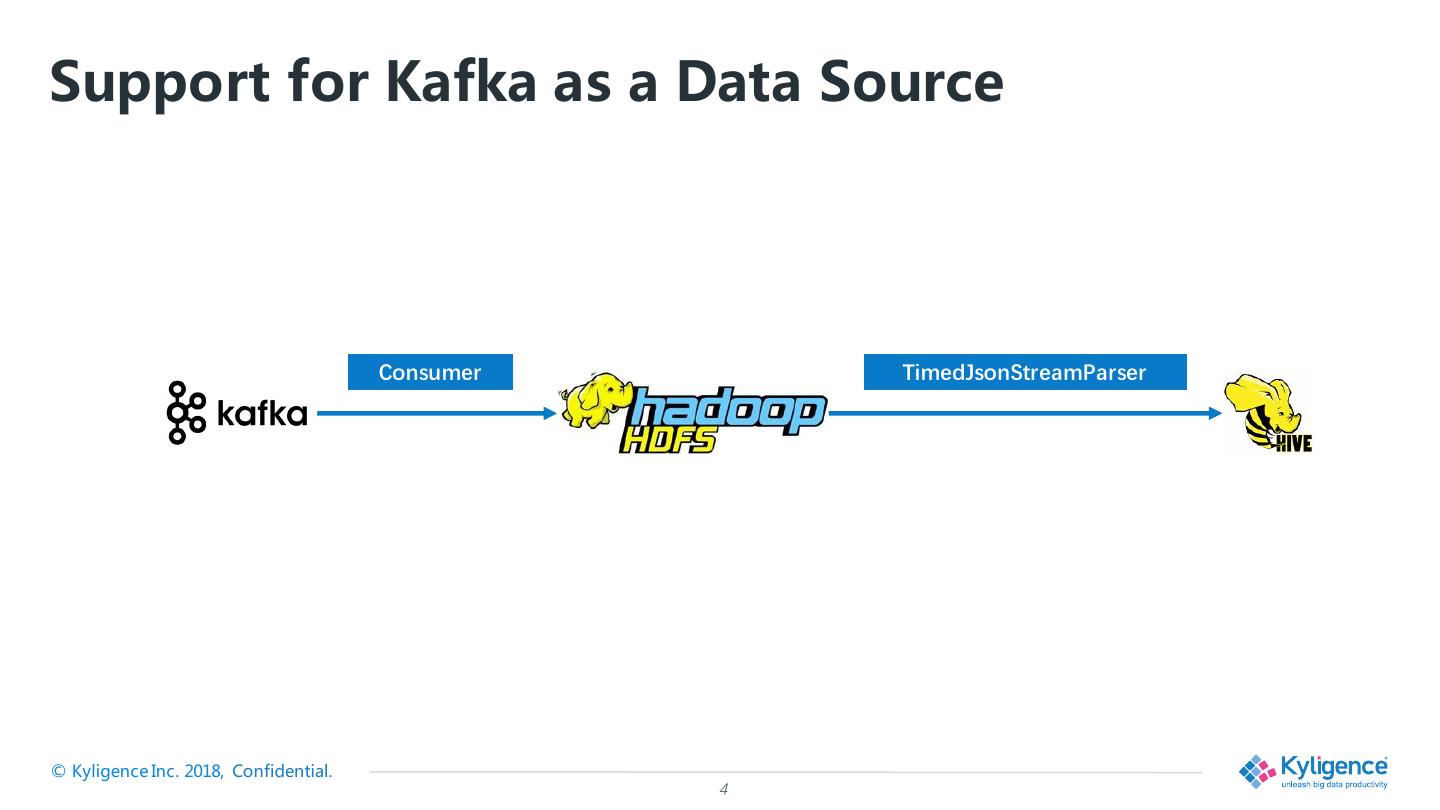

4. Support for Kafka as a Data Source

5. Streaming Data Re-formatting in Real-time Business Scenarios

6. Data Re-arrangement
- Customization happens everywhere:
  - Adding / removing columns
  - Generating rows based on the original data
  - Real-time calculation
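A minimal sketch of two of these re-arrangements (removing a column, and adding a column calculated in real time), written as plain Java with no Kylin dependency. It assumes the Kafka message has already been decoded into a flat map; the field names `amount`, `fx_rate`, `debug_info` and `amount_usd` and the class `ColumnRearranger` are hypothetical:

```java
import java.math.BigDecimal;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical column-level re-arrangement of one already-decoded Kafka message.
 * Field names are illustrative only; this is not Kylin's API.
 */
final class ColumnRearranger {

    private ColumnRearranger() {}

    static Map<String, String> rearrange(Map<String, String> message) {
        // Copy so the caller's message is left untouched; keep insertion order.
        Map<String, String> row = new LinkedHashMap<>(message);

        // Removing a column the cube does not need.
        row.remove("debug_info");

        // Real-time calculation: add a derived column from two existing ones.
        // Assumes both fields are present and numeric.
        BigDecimal amount = new BigDecimal(row.get("amount"));
        BigDecimal rate = new BigDecimal(row.get("fx_rate"));
        row.put("amount_usd", amount.multiply(rate).toPlainString());

        return row;
    }
}
```

`BigDecimal` is used instead of `double` so that monetary values are multiplied without binary rounding error.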

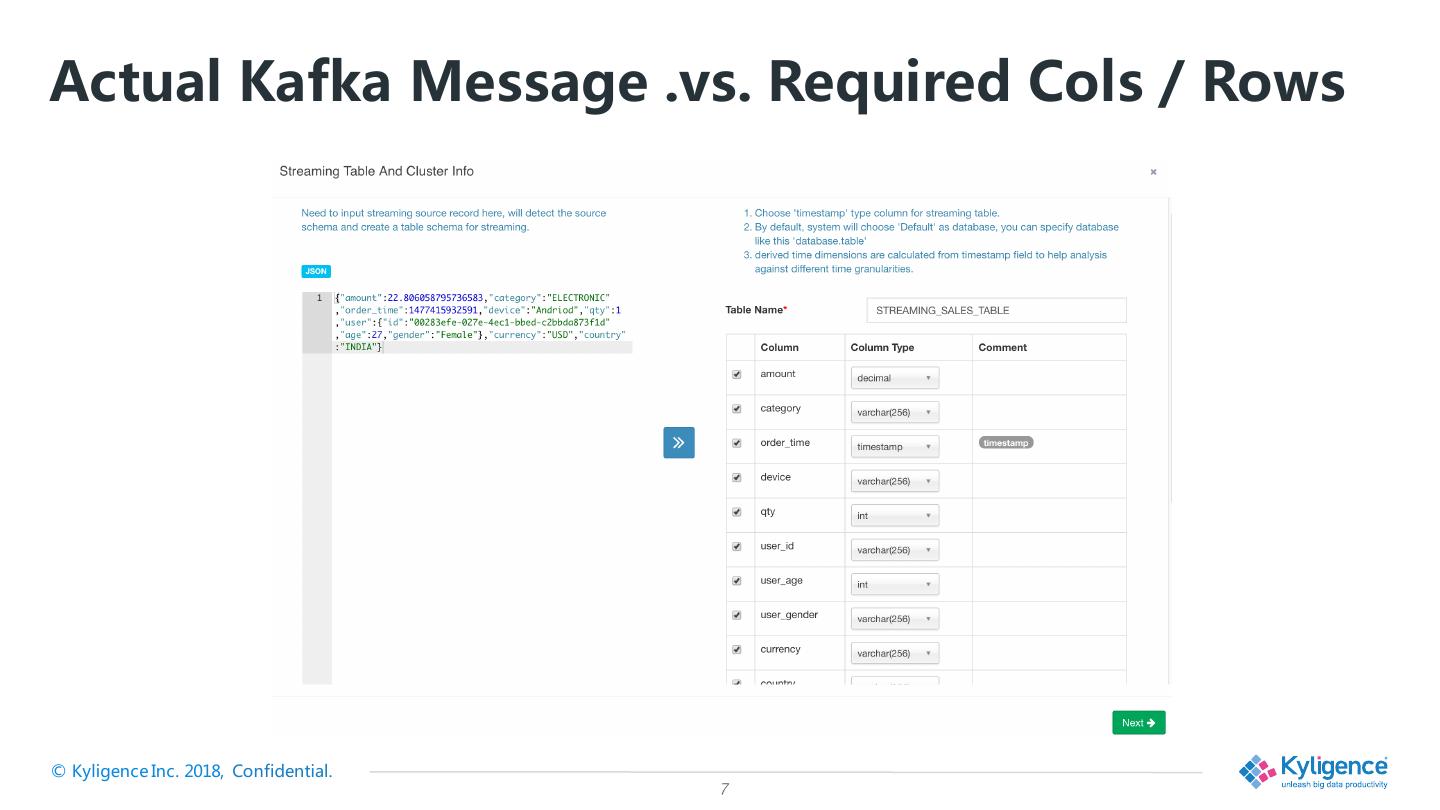

7. Actual Kafka Message vs. Required Columns / Rows

8. Customized Kafka Parser

9. Default Kafka Parser
- Sample Kafka message
  - Prepared to match the column definitions of the corresponding Hive table
- `parse()` of `TimedJsonStreamParser` is invoked
  - By default, each Kafka message maps to exactly one row in Hive
  - Because `parse()` returns a `List`, a parser can be customized to expand one message into several rows
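The default one-message-one-row behaviour can be modelled roughly as below. This is a dependency-free illustration, not Kylin's code: `RowParser` and `DefaultRowParser` are hypothetical names, the message is assumed to be already decoded into a map (the real `TimedJsonStreamParser` works on the raw Kafka payload), and Kylin's actual `parse()` signature is not reproduced. The point is that a `List` return type leaves room for 0..n rows per message:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

/** Hypothetical parser contract: one decoded message in, a list of rows out. */
interface RowParser {
    // Returning a List is what leaves room for 0..n rows per message.
    List<List<String>> parse(Map<String, String> message);
}

/** Hypothetical model of the default behaviour: one message -> one row. */
class DefaultRowParser implements RowParser {
    private final List<String> columns; // column order of the Hive table

    DefaultRowParser(List<String> columns) {
        this.columns = new ArrayList<>(columns);
    }

    @Override
    public List<List<String>> parse(Map<String, String> message) {
        List<String> row = new ArrayList<>(columns.size());
        for (String col : columns) {
            // In this sketch a missing field simply becomes null.
            row.add(message.get(col));
        }
        return Collections.singletonList(row);
    }
}
```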

10. Customized Kafka Parser
- Prepare a sample (simulated) Kafka message
  - It carries the columns the business requires in the Hive-side data source
- Override `parse()`
  - Parse the actual Kafka message, whose layout differs from the columns the business requires
  - Add column(s) so that the parser can map to the actual message
  - Generate one or more rows for each actual message read, and re-map them to the Hive-side columns
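Following the same idea, a customized parser can emit several Hive-side rows from one actual message. In this hypothetical sketch (`ExpandingRowParser` and the field names are illustrative, not Kylin's API), the actual message is assumed to be already decoded and to carry all tags in a single comma-separated `tags` field, while the Hive table expects one `(ID, TS, TAG)` row per tag:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical customized parser.
 * Actual message (decoded):  {id=t1, ts=1514764800000, tags=a,b,c}
 * Hive-side columns:         (ID, TS, TAG)
 * One output row is generated per tag.
 */
class ExpandingRowParser {

    List<List<String>> parse(Map<String, String> message) {
        String id = message.get("id");
        String ts = message.get("ts");
        String tags = message.getOrDefault("tags", "");

        List<List<String>> rows = new ArrayList<>();
        for (String raw : tags.split(",")) {
            String tag = raw.trim();
            if (tag.isEmpty()) {
                continue; // no row for empty fragments, e.g. tags=""
            }
            // Re-map to the Hive-side column order: ID, TS, TAG.
            rows.add(Arrays.asList(id, ts, tag));
        }
        return rows; // 0..n rows for a single Kafka message
    }
}
```

A message without any tags yields an empty list, so it contributes no rows to the cube input.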

11. Implementation & Furthermore

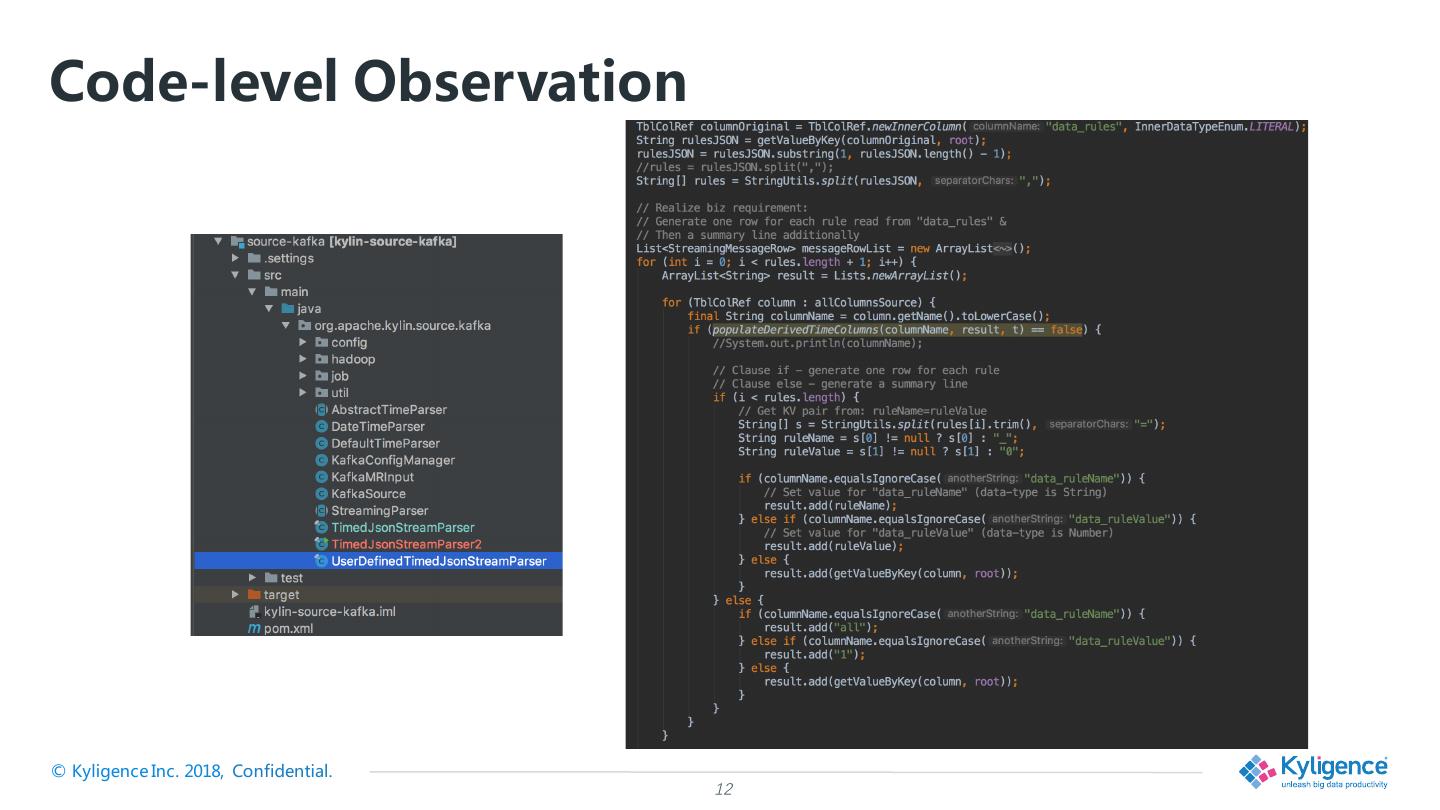

12. Code-level Observation

13. Package Suggestions
- `source-kafka/target/kylin-source-kafka-2.x.x-SNAPSHOT.jar`
  - Copy to `$KYLIN_HOME/tomcat/webapps/kylin/WEB-INF/lib/kylin-source-kafka-2.x.x.jar`
- `org/apache/kylin/source/kafka/UserDefinedTimedJsonStreamParser.class`
  - Add it to the job jar: `jar -uf $KYLIN_HOME/kylin-job-kap-2.5.4.1000-GA.jar org/*`

14. Live Demo & Case Study
- Anti-fraud system in a bank
  - Uses condition codes, much like KV anti-virus
  - Applies the condition codes to each transaction as RULE_NAME / RULE_VALUE
- Data magnitude
  - Kafka side: up to 2,000 messages per second
  - Customized Kafka parser: consumes every 2 minutes and applies over 350 condition codes to each message, producing up to 84M rows of streaming-cube input per interval
  - Offset and parallel building
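The 84M figure is consistent with the other numbers on the slide, assuming the 2,000 messages/s peak holds for the whole 2-minute consumption window, each condition code yields one row per message, and 350 codes are applied:

```latex
\begin{aligned}
N_{\text{msg}}  &= 2000\ \text{msg/s} \times 120\ \text{s} = 240{,}000 \\
N_{\text{rows}} &= N_{\text{msg}} \times 350 = 84{,}000{,}000 \approx 84\text{M}
\end{aligned}
```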

15. Furthermore
- Non-JSON formats
- Customized date parser (Julian date)
- Limitations
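For the customized date parser, a small helper could convert a Julian date into a `LocalDate` or epoch milliseconds. The slide does not define the format, so this sketch assumes the ordinal `YYYYDDD` layout (4-digit year followed by a 3-digit day of year) often seen in banking feeds; an astronomical Julian Day Number would need different arithmetic. `JulianDateParser` is a hypothetical name, not part of Kylin:

```java
import java.time.LocalDate;
import java.time.ZoneOffset;

/**
 * Hypothetical helper for ordinal "Julian" dates in YYYYDDD form,
 * e.g. "2018001" = 2018-01-01. Not Kylin's API.
 */
final class JulianDateParser {

    private JulianDateParser() {}

    static LocalDate parse(String yyyyddd) {
        if (yyyyddd == null || !yyyyddd.matches("\\d{7}")) {
            throw new IllegalArgumentException("expected YYYYDDD: " + yyyyddd);
        }
        int year = Integer.parseInt(yyyyddd.substring(0, 4));
        int dayOfYear = Integer.parseInt(yyyyddd.substring(4));
        // Throws DateTimeException for day 0, or day 366 in a non-leap year.
        return LocalDate.ofYearDay(year, dayOfYear);
    }

    /** Epoch milliseconds at UTC midnight, a common form for a timestamp column. */
    static long toEpochMillis(String yyyyddd) {
        return parse(yyyyddd).atStartOfDay(ZoneOffset.UTC).toInstant().toEpochMilli();
    }
}
```

For example, `JulianDateParser.parse("2020060")` yields 2020-02-29, since 2020 is a leap year.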

16. Thanks!
- Kyligence Inc: http://kyligence.io, info@kyligence.io, Twitter: @Kyligence
- Apache Kylin: http://kylin.apache.org, dev@kylin.apache.org, Twitter: @ApacheKylin