- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Paper reading #4|十亿规模数据集上高召回高 QPS 的 ANNS 单机方案

段落引用Paper reading直播是由 Milvus 社区发起的学术直播间,分享业内前沿学术论文,与大家交流人工智能与数据库交叉领域最新研究方向。

分享大纲:

- DiskANN 概述

- 磁盘方案和 DiskANN 的动机及贡献

- DiskANN 是如何建索引和查询

- 实验结果

- 总结

我们热烈欢迎社区志愿者参与到 Paper reading 直播中,添加小助手微信:Zilliz-tech 备注“直播”加入讨论群与讲师实时QA。

展开查看详情

1 .Paper Reading #4 DiskANN: Fast Accurate Billion- point Nearest Neighbor Search on a Single Node

2 .Outlines 1. Background 2. DiskANN 3. Continued Works 1. ANNS 1. Contributions 2. Graph-based indexes 2. How it works? 3. Why moving to disk? 3. Experiments 4. Approaches considered 2

3 .01 Background

4 .Overview of ANNS What is Approximate Nearest Neighbor Search? • K-NN • Range Search Popular indexes IVF-Flat, IVF-PQ, IVF-SQ, HNSW, NSG, … 4

5 .Graph-based Indexes HNSW, NSG, HCNNG, NGT, … HNSW NSG 5

6 .Why we need on-disk indices? Are in-memory indexes enough? • Massive data -> Memory is not sufficient • Cost Divide and Conquer • 100M, d = 128, float, NSG -> ~75GB • Inefficient memory usage • Alibaba 2B, d = 128, float -> 32 shards • ~100B -> thousands of machines 6

7 .Other Approaches Considered Inverted Index + Data Compression • Drop in recall • Recall– QPS trade-offs Challenges Rely heavily on main memory! • Reduce # random access on SSD • Reduce # I/O request 7

8 .02 DiskANN

9 .What Can DiskANN Do? • 1B, d > 100, 64G memory, recall@1 > 95%, avearge latency < 3ms • New graph-based index Vamana -> fewer access on SSD • Able to combine Vamana with quantization techniques, such as PQ 9

10 .How Does DiskANN Do That? Building Vamana index • Pruning strategy • Divide into clusters with replicas Querying on DiskANN • Beam Search • Build with raw vectors, query with compressed vectors 10

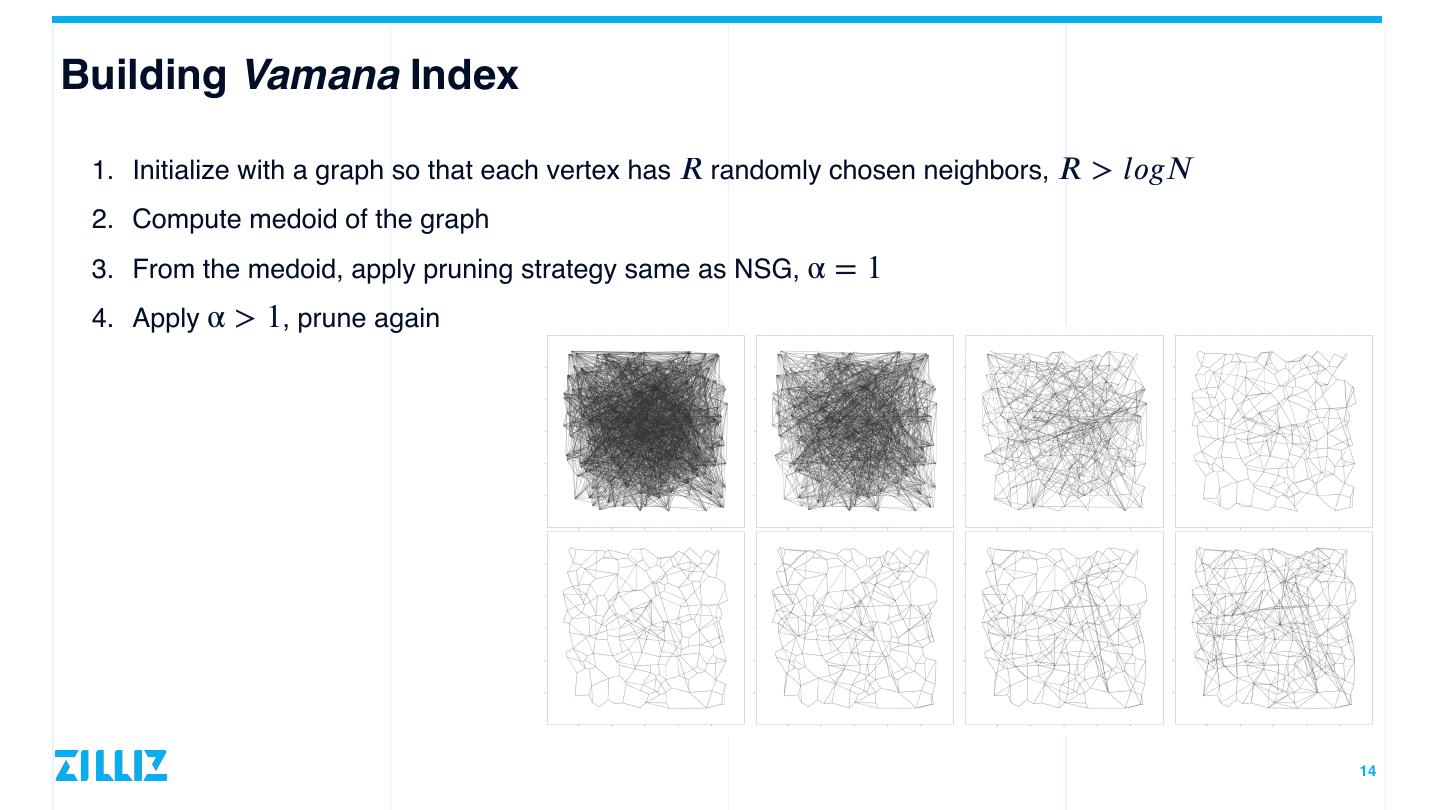

11 . Building Vamana Index 1. Initialize with a graph so that each vertex has randomly chosen neighbors, > 2. Compute medoid of the graph 11 𝑅𝑅 𝑙 𝑜 𝑔 𝑁

12 . Building Vamana Index 1. Initialize with a graph so that each vertex has randomly chosen neighbors, > 2. Compute medoid of the graph 3. From the medoid, apply pruning strategy same as NSG, α =1 12 𝑅𝑅 𝑙 𝑜 𝑔 𝑁

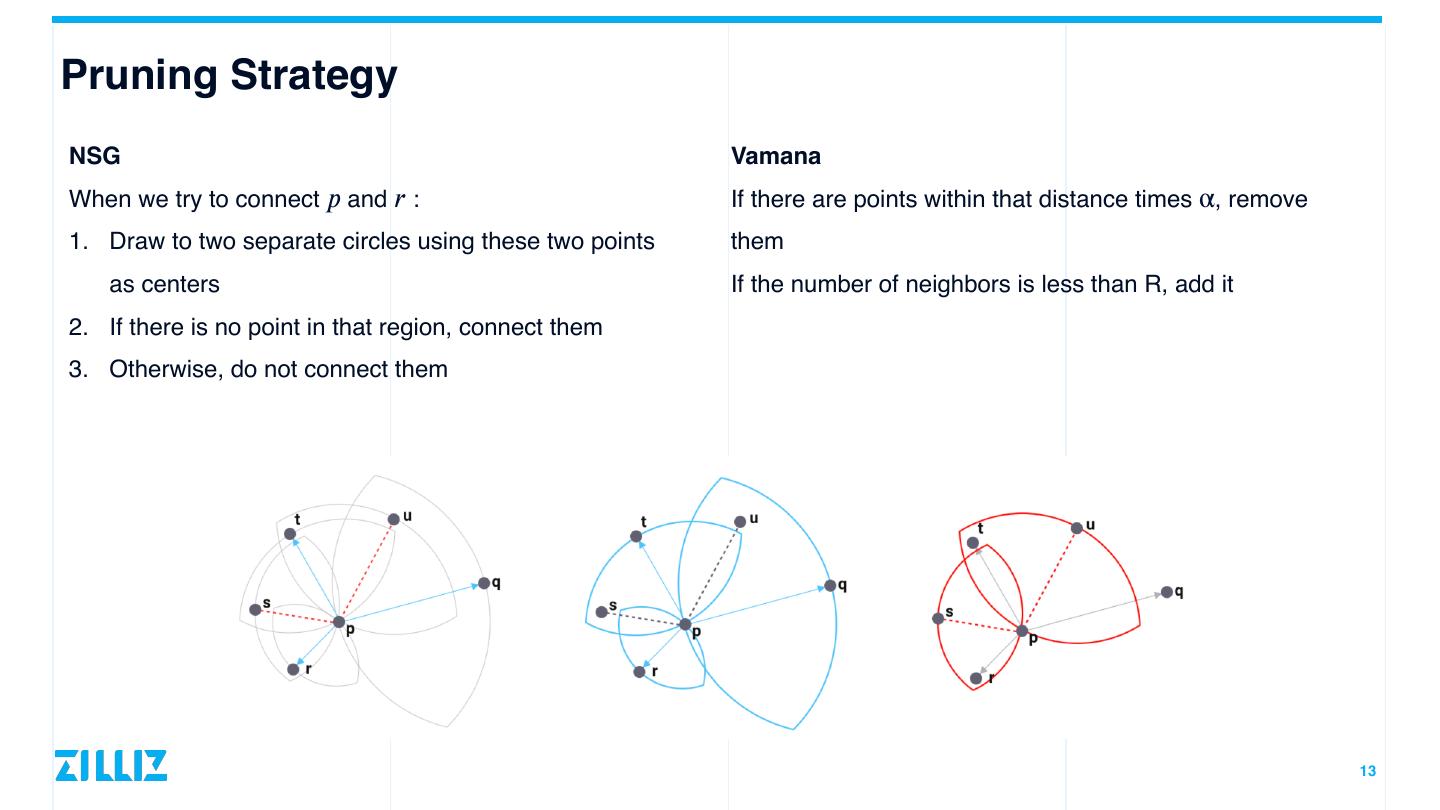

13 . Pruning Strategy NSG Vamana When we try to connect and : If there are points within that distance times α, remove 1. Draw to two separate circles using these two points them as centers If the number of neighbors is less than R, add it 2. If there is no point in that region, connect them 3. Otherwise, do not connect them 13 𝑝𝑟

14 . Building Vamana Index 1. Initialize with a graph so that each vertex has randomly chosen neighbors, > 2. Compute medoid of the graph 3. From the medoid, apply pruning strategy same as NSG, α =1 4. Apply α > 1, prune again 14 𝑅𝑅 𝑙 𝑜 𝑔 𝑁

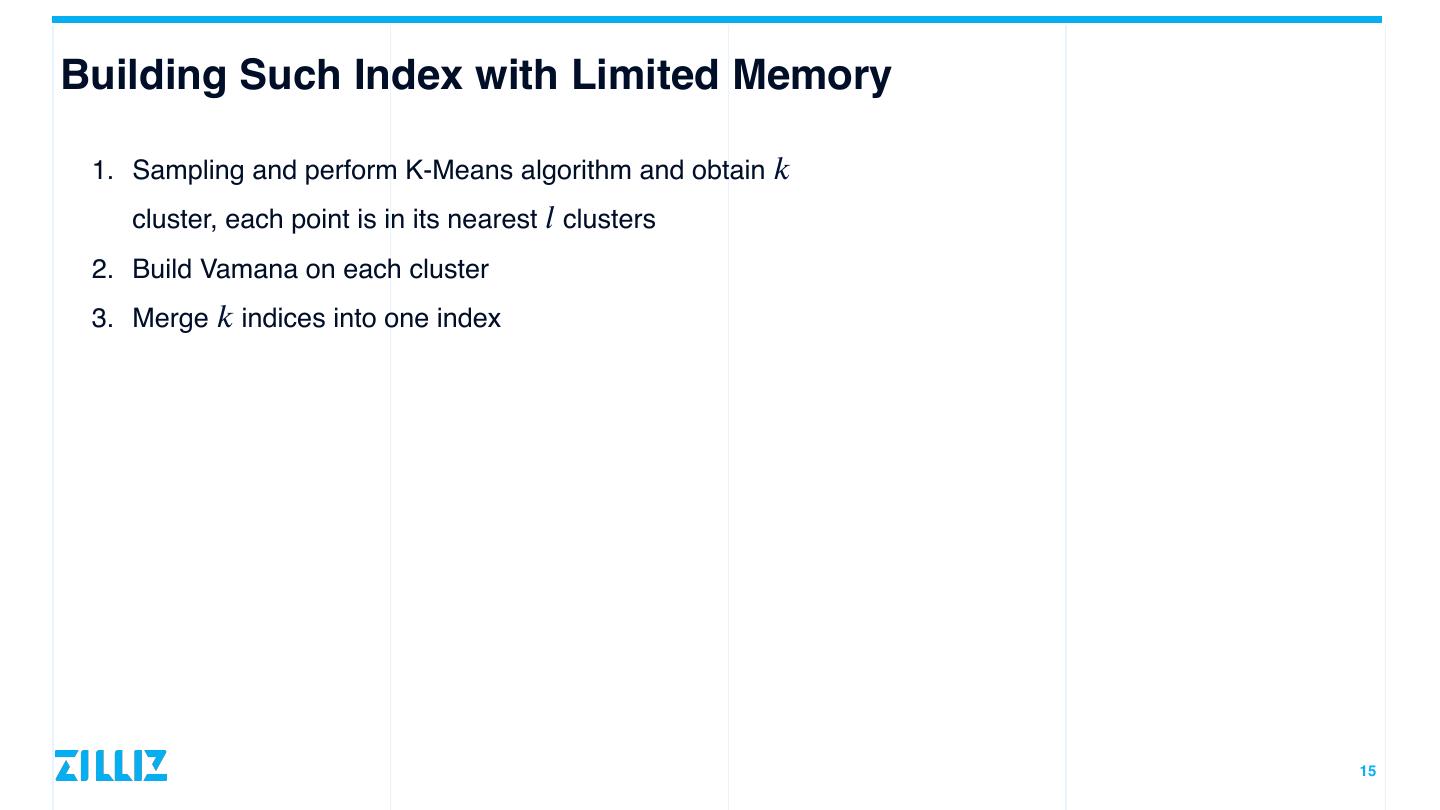

15 . Building Such Index with Limited Memory 1. Sampling and perform K-Means algorithm and obtain cluster, each point is in its nearest clusters 2. Build Vamana on each cluster 3. Merge indices into one index 15 𝑘𝑙𝑘

16 .Query General idea of querying on graph • From the entry point, find the nearest neighbors • Iteratively approaching the answers 16

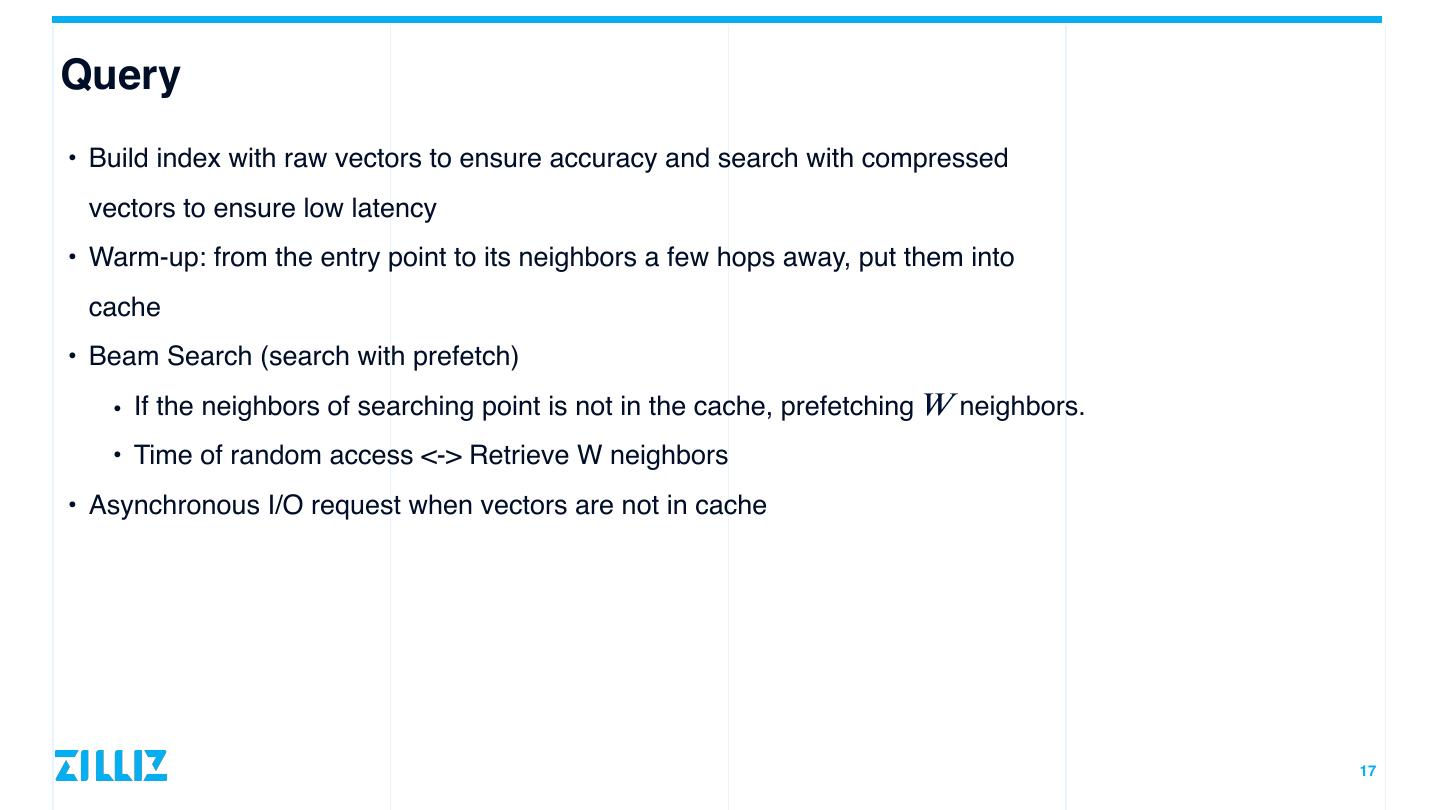

17 . Query • Build index with raw vectors to ensure accuracy and search with compressed vectors to ensure low latency • Warm-up: from the entry point to its neighbors a few hops away, put them into cache • Beam Search (search with prefetch) • If the neighbors of searching point is not in the cache, prefetching neighbors. • Time of random access <-> Retrieve W neighbors • Asynchronous I/O request when vectors are not in cache 17 𝑊

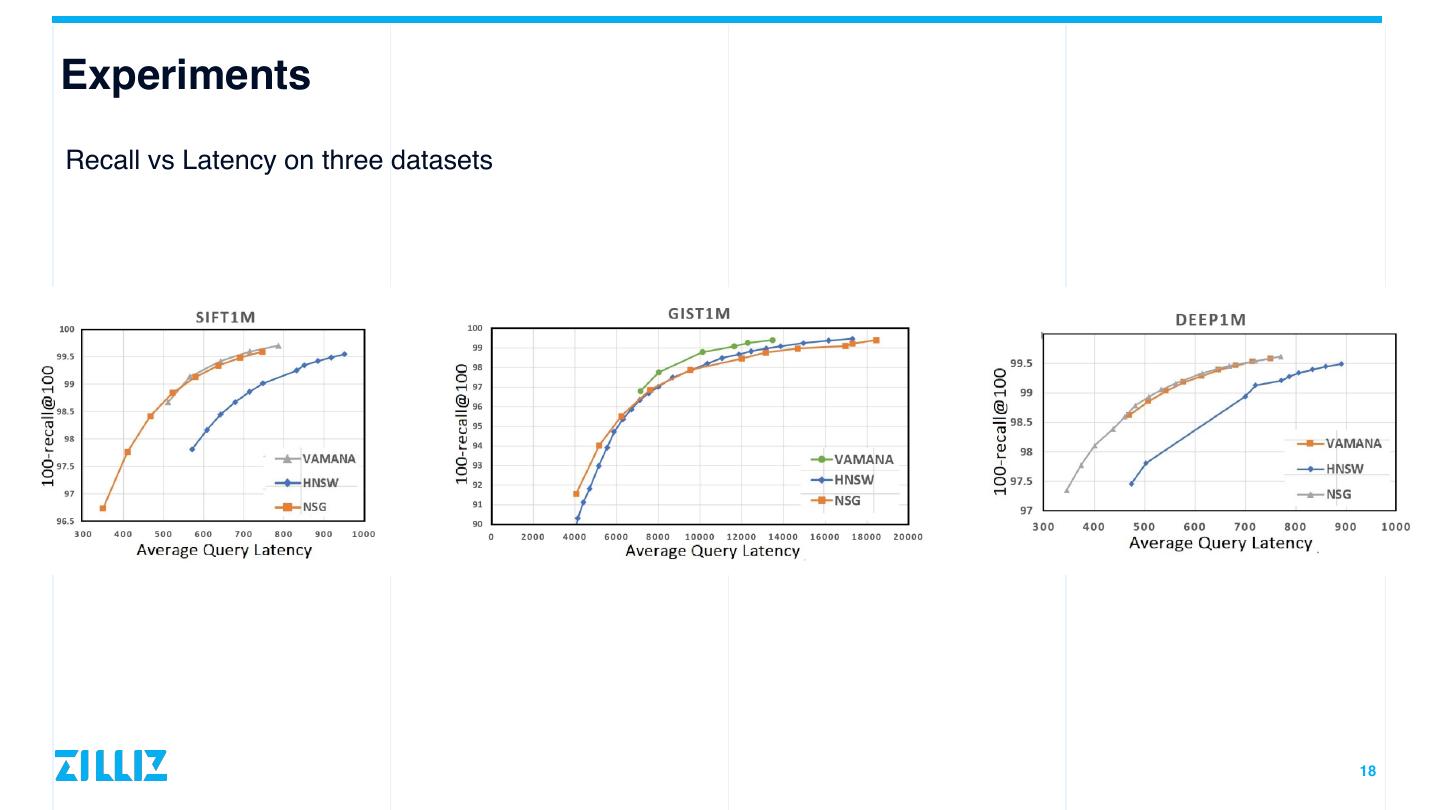

18 .Experiments Recall vs Latency on three datasets 18

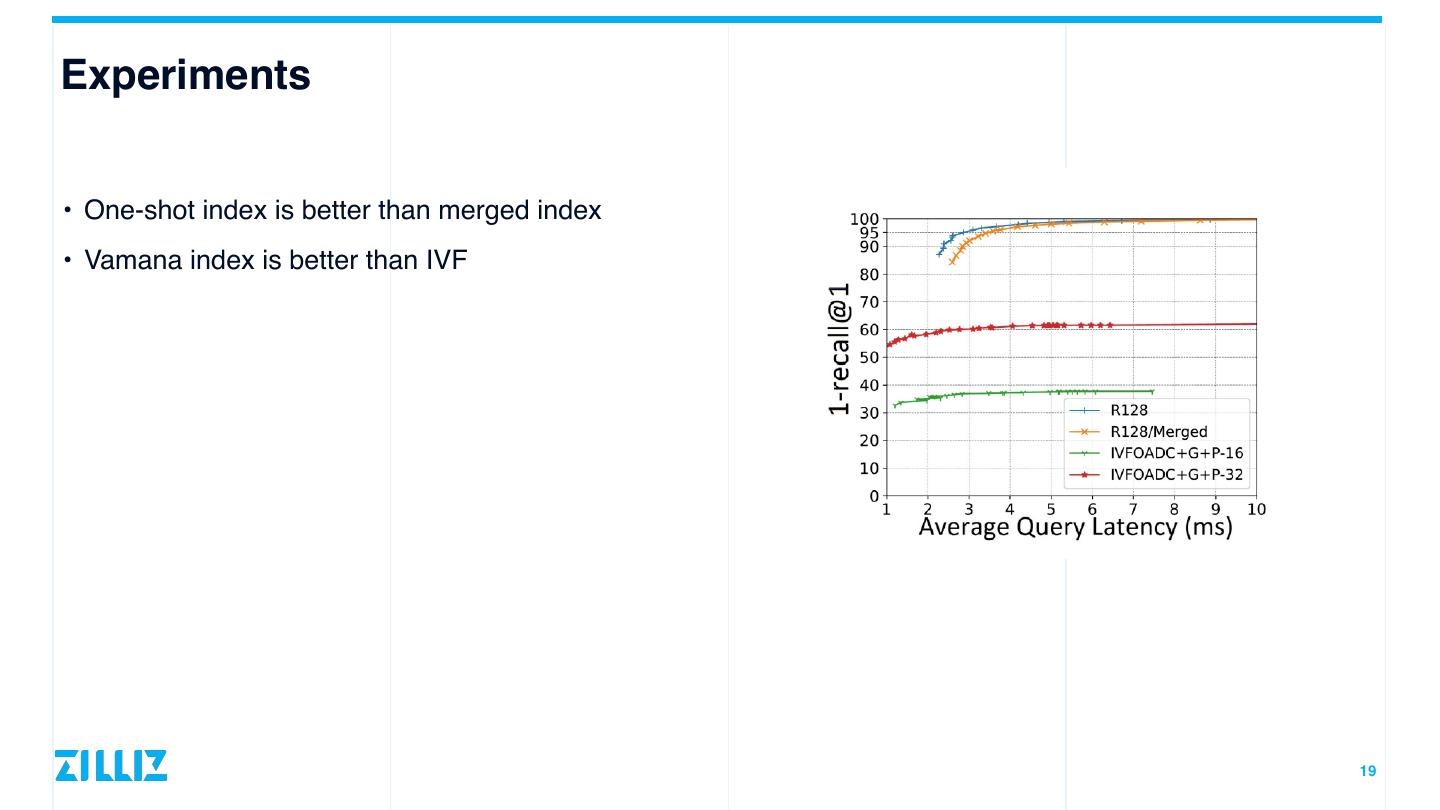

19 .Experiments • One-shot index is better than merged index • Vamana index is better than IVF 19

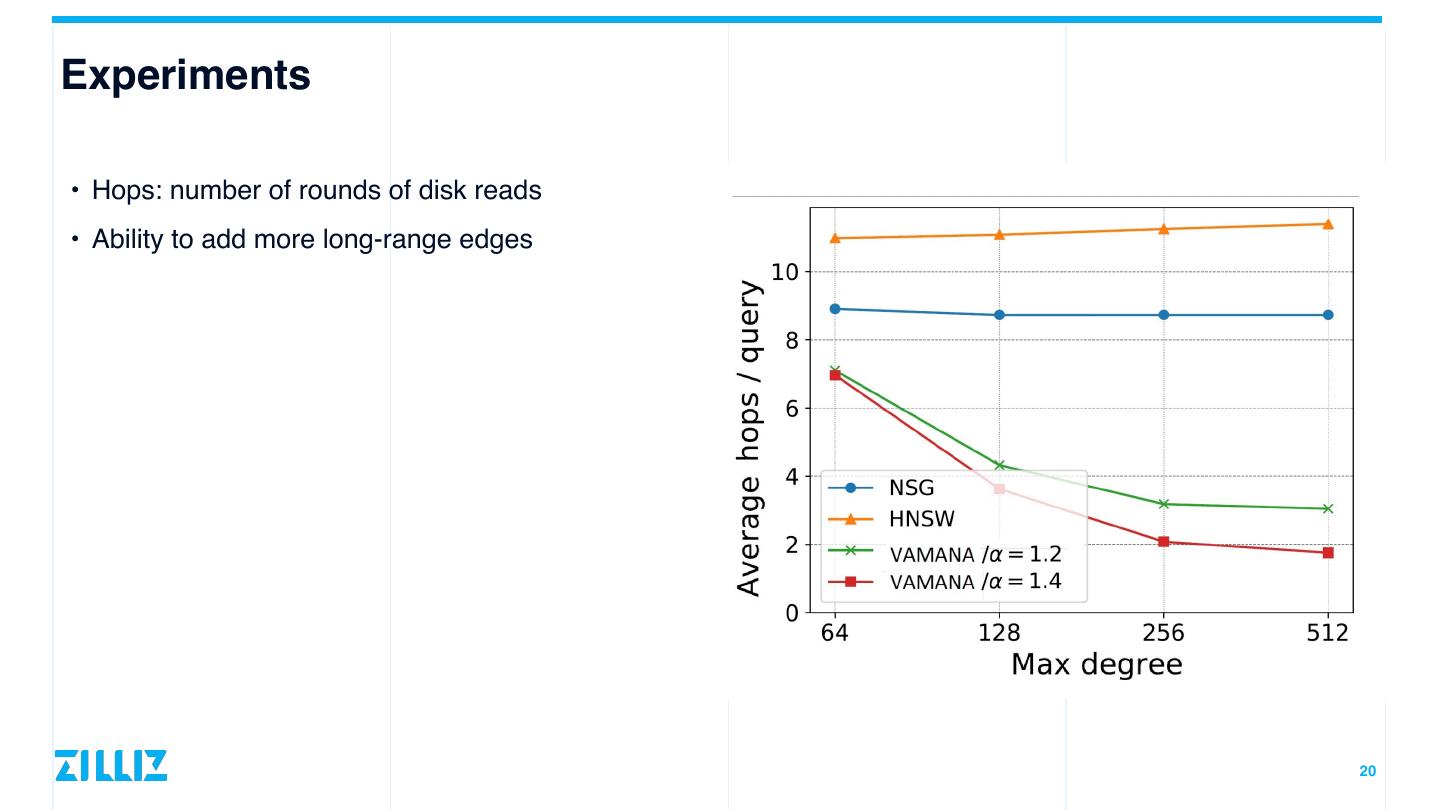

20 .Experiments • Hops: number of rounds of disk reads • Ability to add more long-range edges 20

21 . What is the Downside? • Build is slow! • For 1B, d = 128, uint8, it takes ~5 days with 64GB memory • Index is large, when = 50, = 64, = 1.2, = 2, • ~ 348 GB, average degree ~92.1 • Raw dataset is ~119 GB 21 𝐿 𝑅 𝛼 𝑙

22 .03 Continued Works

23 .Continued Works • FreshDiskANN: supports update • SPANN [NeurlPS 2021]: another disk-based solution, faster than DiskANN 23

24 .Thank You https://milvus.io https://github.com/milvus-io/milvus 添加企业微信 发送 “直播” 加⼊交流群 https://twitter.com/milvusio 领取直播PPT + 回放链接 🔗