- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/Spark/Apache_Spark_NLP_Extending_Spark_ML_to_Deliver_Fast_Scalable?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

Apache Spark NLP: Extending Spark ML to Deliver Fast, Scalable, and Unified NLP

展开查看详情

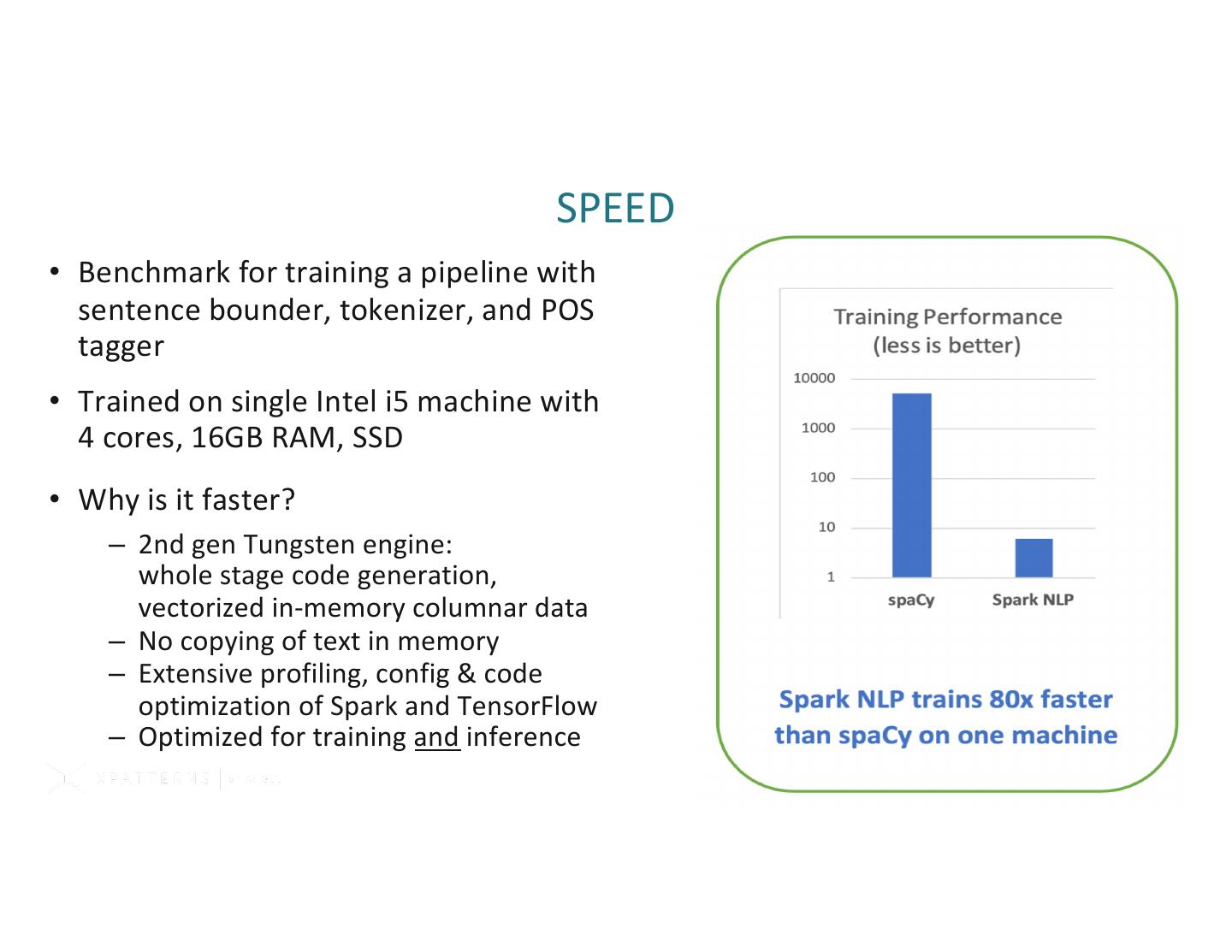

1 .

2 .Apache Spark NLP: Extending Spark ML to Deliver Fast, Scalable, and Unified Natural Language Processing David Talby, Pacific AI Alex Thomas, Indeed #UnifiedAnalytics #SparkAISummit

3 . CONTENTS 1. INTRODUCING SPARK NLP 2. GETTING THINGS DONE 3. GETTING STARTED

4 . INTRODUCING SPARK NLP STATE OF THE ART NLP FOR PYTHON, JAVA & SCALA 1. ACCURACY 2. SPEED 3. SCALABILITY

5 .5th MOST WIDELY USED AI LIBRARY IN THE ENTERPRISE O’REILLY AI ADOPTION IN THE ENTERPRISE SURVEY OF 1,300 PRACTITIONERS, FEB 2019

6 . ACCURACY • ”State of the art” means the best performing academic peer- reviewed results • NER Benchmark on right is on en_core_web_lg dataset, micro- averaged F1 score • Why is it more accurate? – Deep learning models, trainable at scale with GPU’s – TF graph based on 2017 paper (bi-LSTM+CNN+CRF) – BERT embeddings – Contrib LSTM cells

7 . SPEED • Benchmark for training a pipeline with sentence bounder, tokenizer, and POS tagger • Trained on single Intel i5 machine with 4 cores, 16GB RAM, SSD • Why is it faster? – 2nd gen Tungsten engine: whole stage code generation, vectorized in-memory columnar data – No copying of text in memory – Extensive profiling, config & code optimization of Spark and TensorFlow – Optimized for training and inference

8 . SCALABILITY • Zero code changes to scale a pipeline to any Spark cluster • Only natively distributed open-source NLP library • Spark provides execution planning, caching, serialization, shuffling • Caveats – Speedup depends heavily on what you actually do – Not all algorithms scale well – Spark configuration matters

9 . SPARK NLP Permissive Open Source License Apache 2.0 versus Stanford CoreNLP code & spaCy models

10 . SPARK NLP Production Grade & Actively Supported In production in multiple Fortune 500’s 25 new releases in 2018 Full-time development team Active Slack community

11 . THAT’S NICE, BUT WHAT DOES IT ACTUALLY DO? (Everything that other NLP libraries do and more.)

12 .WHERE CAN I USE IT?

13 . BUILT ON THE SHOULDERS OF SPARK ML • Reusing the Spark ML Pipeline – Unified NLP & ML pipelines – End-to-end execution planning – Serializable – Distributable • Reusing NLP Functionality – TF-IDF calculation – String distance calculation – Stop word removal – Topic modeling – Distributed ML algorithms

14 . CONTENTS 1. INTRODUCING SPARK NLP 2. GETTING THINGS DONE 3. GETTING STARTED

15 . SENTIMENT ANALYSIS import sparknlp sparknlp.start() from sparknlp.pretrained import PretrainedPipeline pipeline = PretrainedPipeline('analyze-sentiment', 'en') result = pipeline.annotate('Harry Potter is a great movie’) print(result['sentiment’]) ## will print ['positive']

16 . SPELL CHECKING & CORRECTION Now in Scala: val pipeline = PretrainedPipeline("spell_check_ml", "en") val result = pipeline.annotate("Harry Potter is a graet muvie") println(result("spell")) /* will print Seq[String](…, "is", "a", "great", "movie") */

17 . NAMED ENTITY RECOGNITION pipeline = PretrainedPipeline('recognize_entities_bert', 'en') result = pipeline.annotate('Harry Potter is a great movie') print(result['ner']) ## prints ['I-PER', 'I-PER', 'O', 'O', 'O', 'O']

18 . UNDER THE HOOD 1.sparknlp.start() starts a new Spark session if there isn’t one, and returns it. 2.PretrainedPipeline() loads the English version of the recognize_entities_bert pipeline, the pre-trained models, and the embeddings it depends on. 3. These are stored and cached locally – or distributed if running on a cluster. 4. TensorFlow is initialized, within the same JVM process that runs Spark. The pre-trained embeddings and deep-learning models (like NER) are loaded. 5. The annotate() call runs an NLP inference pipeline which activates each stage’s algorithm (tokenization, POS, etc.). 6. The NER stage is run on TensorFlow – applying a neural network with bi-LSTM layers for tokens and a CNN for characters. 7. BERT Embeddings are used to convert contextual tokens into vectors during the NER inference process. 8. The result object is a plain old local Python dictionary.

19 . OCR Image Pre-Processing · Segments Detection · Distributed OCR DL Sentence Boundary Detection · Context-Based Spell Correction from sparknlp.ocr import OcrHelper data = OcrHelper().createDataset(spark, './immortal_text.pdf') result = pipeline.transform(data) result.select("token.result", "pos.result").show() # will print [would, have, bee...|[MD, VB, VBN, DT,...|

20 .OUT OF THE BOX FUNCTIONALITY

21 . GETTING STARTED 1. READ THE DOCS & JOIN SLACK HTTPS://NLP.JOHNSNOWLABS.COM 2. STAR & FORK THE REPO GITHUB.COM/JOHNSNOWLABS/SPARK-NLP 3. RUN THE TURNKEY CONTAINER & EXAMPLES GITHUB.COM/JOHNSNOWLABS/SPARK-NLP-WORKSHOP

22 .THANK YOU! github.com/JohnSnowLabs/spark-nlp nlp.JohnSnowLabs.com