Balancing Automation and Explanation in Machine Learning

1 . Balancing Automation and Explanation in Machine Learning. Leah McGuire, Till Bergmann

3 . Roadmap for this talk

- Automation vs Explanation
- Our solution: TransmogrifAI
- Why explain your model
- Why automate your modeling
- How to explain your model
- How to automate with explanation in mind
- What does this look like on Spark

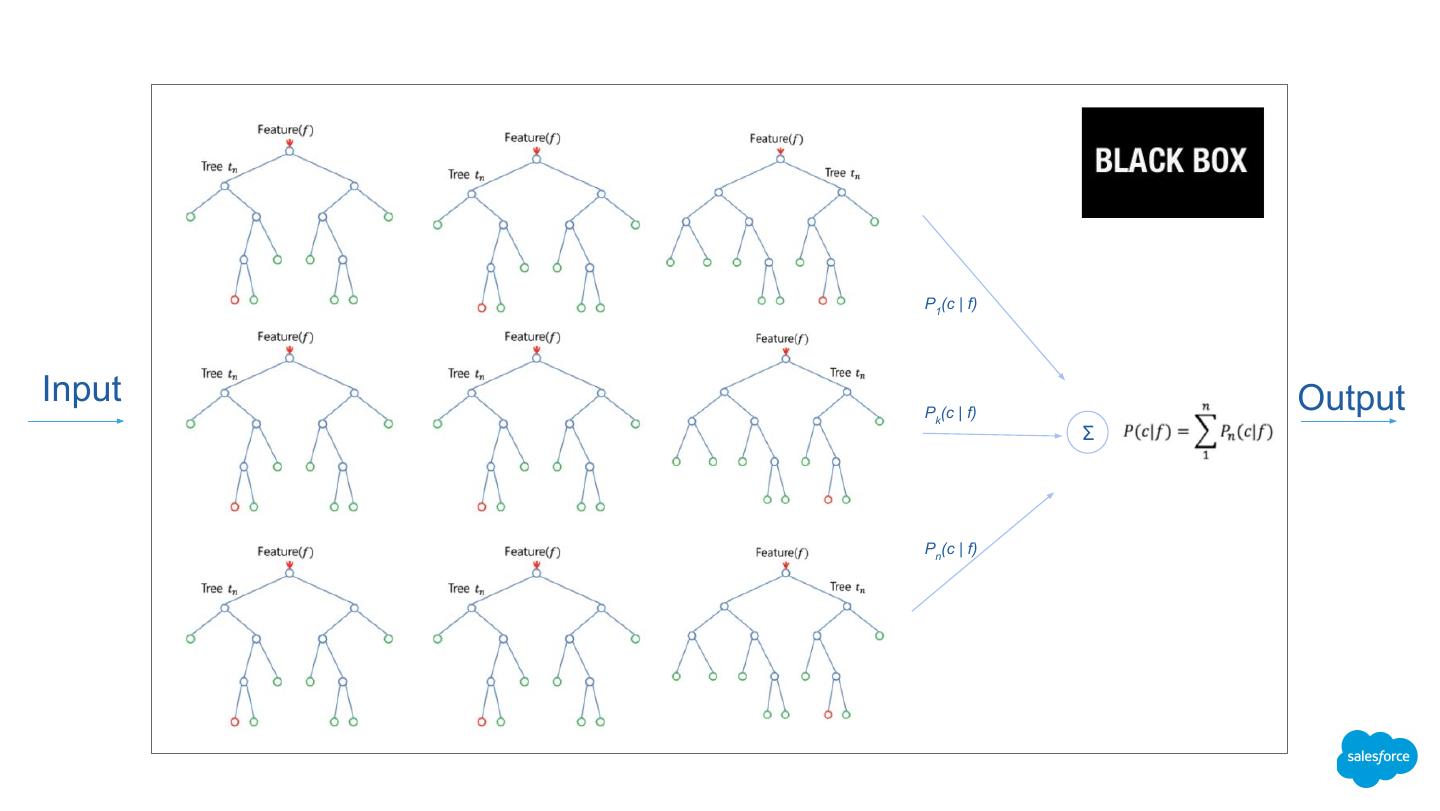

5 . [Diagram: Input → P1(c | f), ..., Pk(c | f), ..., Pn(c | f) → Σ → Output]
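The diagram on this slide reads as an ensemble: several classifiers each estimate Pk(c | f) for the same input, and a Σ node combines them into one output. Below is a minimal sketch of that combination in plain Scala, assuming an unweighted average (the slide does not say how the terms are weighted). `EnsembleSketch`, `Member`, `combine`, and `predict` are hypothetical names, not TransmogrifAI API.

```scala
object EnsembleSketch {
  // Each member maps a feature vector f to class probabilities P_k(c | f).
  type Member = Vector[Double] => Map[String, Double]

  // Sum the members' probabilities per class, then divide by the member count.
  // Assumption: an unweighted average; the slide only shows a Σ node.
  def combine(members: Seq[Member])(f: Vector[Double]): Map[String, Double] = {
    require(members.nonEmpty, "need at least one member")
    val summed = members
      .flatMap(m => m(f).toSeq)
      .groupBy(_._1)
      .map { case (c, ps) => c -> ps.map(_._2).sum }
    summed.map { case (c, p) => c -> p / members.size }
  }

  // Predicted class = arg max of the combined probabilities.
  def predict(members: Seq[Member])(f: Vector[Double]): String =
    combine(members)(f).maxBy(_._2)._1
}
```

The combined output has no single set of weights to inspect, which is arguably why the following slides ask whether to pick the best model or the one you can explain.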

6 . The Question: “Why did the machine learning model make the decision that it did?”

7 .The best model or the model you can explain?

9 .Keep it DRY (don’t repeat yourself) and DRO (don’t repeat others) https://transmogrif.ai/

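10 . Simple building blocks for automatic model generation

```scala
import com.salesforce.op._
import com.salesforce.op.features.types._
import com.salesforce.op.stages.impl.classification.BinaryClassificationModelSelector
import com.salesforce.op.stages.impl.insights.RecordInsightsLOCO

// Assumes the raw features (survived, pClass, name, ...) and the
// passengersData dataset are defined earlier; the slide does not show them.

// Automated feature engineering
val featureVector = Seq(pClass, name, sex, age, sibSp, parch, ticket, cabin, embarked).transmogrify()

// Automated feature selection
val checkedFeatures = survived.sanityCheck(featureVector = featureVector, removeBadFeatures = true)

// Automated model selection
val prediction = BinaryClassificationModelSelector.setInput(survived, checkedFeatures).getOutput()

// Model insights
val model = new OpWorkflow().setInputDataset(passengersData).setResultFeatures(prediction).train()
println("Model insights:\n" + model.modelInsights(prediction).prettyPrint())

// Add individual prediction insights.
// The slide passes an undefined `pred` here; checkedFeatures is assumed instead,
// since RecordInsightsLOCO takes the model's feature vector as its input.
val predictionInsights = new RecordInsightsLOCO(model.getOriginStageOf(prediction))
  .setInput(checkedFeatures).getOutput()
val insights = new OpWorkflow().setInputDataset(passengersData).setResultFeatures(predictionInsights)
  .withModelStages(model).train().score()
```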

12 . Debuggability

Top contributing features for surviving the Titanic:

1. Gender
2. pClass
3. Body

[Chart label: F1]

13 . Trust

[Figure labels: Human, Machine, Right, Wrong]

14 .Bias

15 .Legal

16 .Actionable

18 . 24 Hours in the Life of Salesforce

B2C Scale:

- 888M commerce page views
- 41M case interactions
- 260M social posts
- 4M orders
- 1.5B emails sent

B2B Scale:

- 44M reports and dashboards
- 3M opportunities created
- 2M leads created
- 8M cases logged

3B Einstein predictions

Source: Salesforce March 2018.

19 . The typical Machine Learning pipeline

1. ETL
2. Data Cleansing
3. Feature Engineering
4. Model Training
5. Model Evaluation
6. Deploy and Operationalize Models
7. Score and Update Models

20 .Salesforce

21 . We can’t build one global model

- Privacy concerns
  - Customers don’t want data cross-pollinated
- Business Use Cases
  - Industries are very different
  - Processes are different
- Platform customization
  - Ability to create custom fields and objects
- Scale, Automation
  - Ability to create

22 .Multiply it by M*N (M = customers; N = use cases)

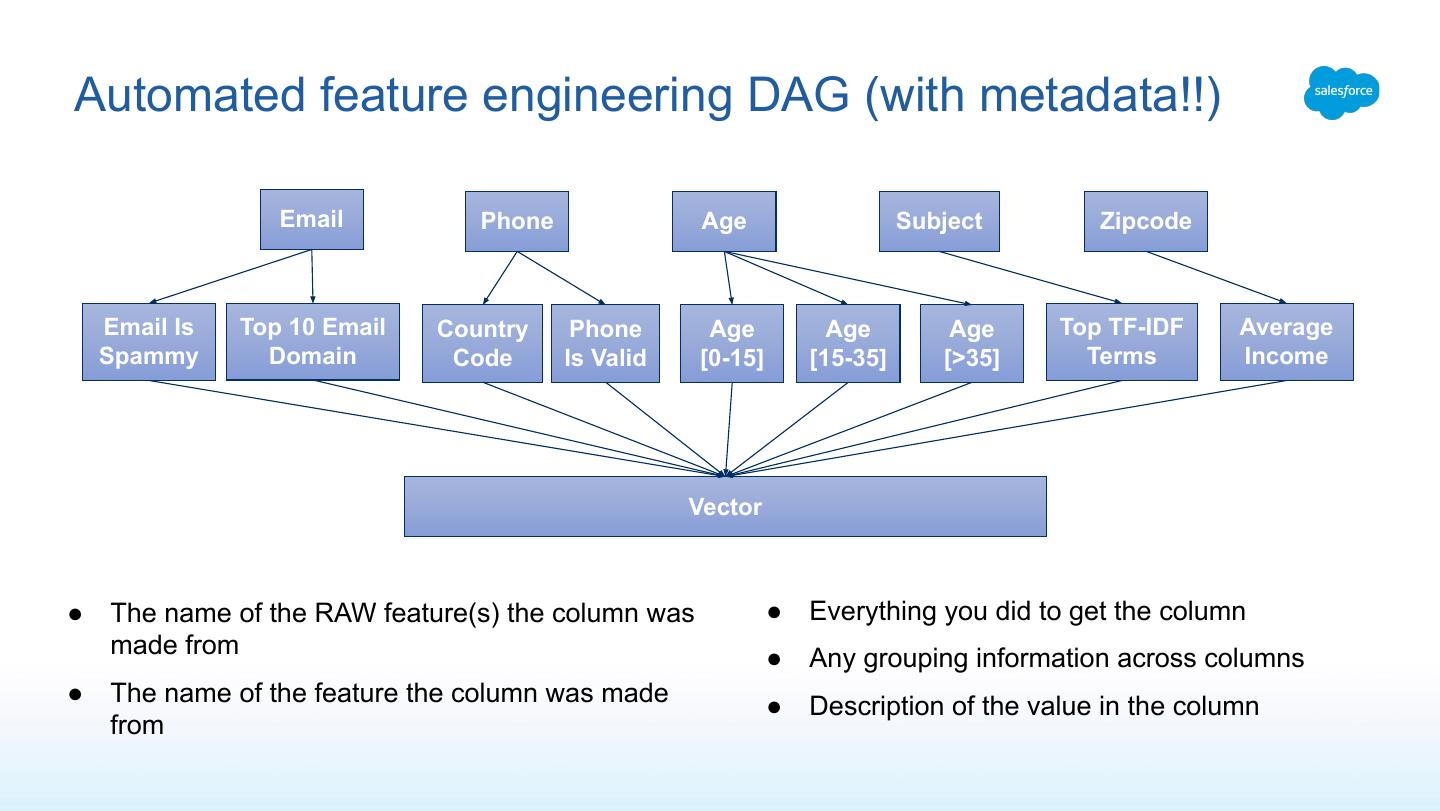

24 . How to explain your model

Concerns:

- How interpretable is the model?
- How interpretable are the raw features?
- Do you care about explaining the model or gaining insights into data?
- Do we need to explain individual predictions?

Options:

- Feature weights / importance
- Model agnostic feature impact
- Secondary models
- Feature / label interactions
- Global versus local granularity

25 . Secondary Model

[Diagram labels: Input, Prediction, Explanation. Image source: https://www.statmethods.net/advgraphs/images/corrgram1.png]

26 .Secondary Model
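One way to read the secondary-model idea: treat the original model as a black box, fit a much simpler model to its predictions, and explain the simple one instead. The sketch below, in plain Scala, fits a one-feature rule (a decision stump) to a black box over binary features and measures how often the two agree. All names (`SurrogateSketch`, `Stump`, `fidelity`, `fit`) are hypothetical, and the stump is an illustrative choice, not the secondary model used in the talk.

```scala
object SurrogateSketch {
  // A model maps binary features to a 0/1 prediction.
  type Model = Vector[Int] => Int

  // A one-feature rule: predict `whenOne` if feature j is 1, otherwise `whenZero`.
  final case class Stump(j: Int, whenOne: Int, whenZero: Int) {
    def apply(x: Vector[Int]): Int = if (x(j) == 1) whenOne else whenZero
  }

  // Fraction of inputs on which the stump reproduces the black-box prediction.
  def fidelity(stump: Stump, blackBox: Model, xs: Seq[Vector[Int]]): Double =
    xs.count(x => stump(x) == blackBox(x)).toDouble / xs.size

  // Exhaustively pick the stump with the highest fidelity to the black box.
  def fit(blackBox: Model, xs: Seq[Vector[Int]]): Stump = {
    require(xs.nonEmpty, "need at least one input")
    val candidates = for {
      j    <- xs.head.indices
      one  <- Seq(0, 1)
      zero <- Seq(0, 1)
    } yield Stump(j, one, zero)
    candidates.maxBy(s => fidelity(s, blackBox, xs))
  }
}
```

The fidelity value matters as much as the rule itself: a stump that agrees with the black box on only a modest share of inputs explains little about it.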

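27 . Feature Weight / Importance / Impact

| X1 | X2 | X3 | X4 | X5 | Y |
|----|----|----|----|----|---|
| 0  | 1  | 0  | 0  | 0  | A |
| 1  | 1  | 1  | 0  | 0  | B |
| 0  | 0  | 1  | 1  | 0  | B |
| 1  | 1  | 1  | 1  | 1  | A |
| 1  | 0  | 1  | 0  | 0  | A |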
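As a rough illustration of feature impact on the toy data from this slide, the sketch below scores each binary feature by how much it shifts the rate of label A: P(Y = A | Xj = 1) minus P(Y = A | Xj = 0). This measure is an assumption chosen for simplicity, not necessarily what the slide computed, and the names (`FeatureImpactSketch`, `Row`, `impact`) are hypothetical.

```scala
object FeatureImpactSketch {
  final case class Row(x: Vector[Int], y: String)

  // The toy data from the slide: five binary features and a label Y.
  val rows: Vector[Row] = Vector(
    Row(Vector(0, 1, 0, 0, 0), "A"),
    Row(Vector(1, 1, 1, 0, 0), "B"),
    Row(Vector(0, 0, 1, 1, 0), "B"),
    Row(Vector(1, 1, 1, 1, 1), "A"),
    Row(Vector(1, 0, 1, 0, 0), "A")
  )

  // Fraction of rows carrying `label`; None when the subset is empty.
  private def rate(subset: Vector[Row], label: String): Option[Double] =
    if (subset.isEmpty) None
    else Some(subset.count(_.y == label).toDouble / subset.size)

  // Hypothetical impact score: P(Y = label | X_j = 1) - P(Y = label | X_j = 0).
  def impact(data: Vector[Row], j: Int, label: String): Option[Double] =
    for {
      on  <- rate(data.filter(_.x(j) == 1), label)
      off <- rate(data.filter(_.x(j) == 0), label)
    } yield on - off

  // Print the impact of every feature on label A.
  def main(args: Array[String]): Unit =
    for (j <- 0 until 5)
      println(s"X${j + 1}: " + impact(rows, j, "A").map(d => f"$d%+.2f").getOrElse("n/a"))
}
```

With only five rows the scores are unstable; the point is the shape of the computation, not the values.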

28 . Global vs local explanations

Titanic model top features:

- Gender
- Cabin class (pClass)
- Age

Titanic passenger top features (ranking x value):

- Prediction = 1 (survived), Reasons = female, 1st Class
- Prediction = 0 (died), Reasons = male, 3rd Class

29 . Feature Impact (LOCO)

Score = 0.27

Reasons => sex = "male" (-0.13), pClass = 3 (-0.05), ...

https://www.oreilly.com/ideas/ideas-on-interpreting-machine-learning
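A minimal sketch of leave-one-covariate-out (LOCO) in plain Scala: score the record, re-score it with one feature replaced by a baseline value, and report the difference as that feature's impact. The baseline of 0.0, the sign convention (full score minus ablated score), and the logistic weights are assumptions for illustration. This is not the `RecordInsightsLOCO` implementation, and the numbers will not match the slide.

```scala
object LocoSketch {
  // A scorer maps named feature values to a single score.
  type Scorer = Map[String, Double] => Double

  // Impact of each feature on one record's score: the score with all features
  // minus the score with that feature set to a baseline value (0.0 by default).
  // Results are sorted by absolute impact, largest first.
  def loco(score: Scorer, record: Map[String, Double], baseline: Double = 0.0): Seq[(String, Double)] = {
    val full = score(record)
    record.keys.toSeq
      .map(name => name -> (full - score(record.updated(name, baseline))))
      .sortBy { case (_, impact) => -math.abs(impact) }
  }

  // Hypothetical logistic scorer; the weights are made up for illustration
  // and are not the Titanic model from the talk.
  val weights: Map[String, Double] =
    Map("sex=male" -> -1.2, "pClass=3" -> -0.6, "age" -> -0.01)

  val toyScore: Scorer = r => {
    val z = 0.5 + r.map { case (k, v) => weights.getOrElse(k, 0.0) * v }.sum
    1.0 / (1.0 + math.exp(-z))
  }

  // Print the score and the ranked reasons for one made-up passenger.
  def main(args: Array[String]): Unit = {
    val passenger = Map("sex=male" -> 1.0, "pClass=3" -> 1.0, "age" -> 30.0)
    println(f"Score = ${toyScore(passenger)}%.2f")
    loco(toyScore, passenger).foreach { case (k, d) => println(f"  $k%-10s $d%+.2f") }
  }
}
```

Under this sign convention a negative impact means the feature pulled the score down, which matches how the slide lists sex = "male" and pClass = 3 as negative reasons.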