- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/Spark/sigmod_structured_streaming?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

Spark Structured Streaming

展开查看详情

1 . Structured Streaming: A Declarative API for Real-Time Applications in Apache Spark Michael Armbrust† , Tathagata Das† , Joseph Torres† , Burak Yavuz† , Shixiong Zhu† , Reynold Xin† , Ali Ghodsi† , Ion Stoica† , Matei Zaharia†‡ † Databricks Inc., ‡ Stanford University Abstract with Spark Streaming [37], one of the earliest stream processing With the ubiquity of real-time data, organizations need streaming systems to provide a high-level, functional API. We found that two systems that are scalable, easy to use, and easy to integrate into challenges frequently came up with users. First, streaming systems business applications. Structured Streaming is a new high-level often ask users to think in terms of complex physical execution streaming API in Apache Spark based on our experience with Spark concepts, such as at-least-once delivery, state storage and triggering Streaming. Structured Streaming differs from other recent stream- modes, that are unique to streaming. Second, many systems focus ing APIs, such as Google Dataflow, in two main ways. First, it is a only on streaming computation, but in real use cases, streaming is purely declarative API based on automatically incrementalizing a often part of a larger business application that also includes batch static relational query (expressed using SQL or DataFrames), in con- analytics, joins with static data, and interactive queries. Integrating trast to APIs that ask the user to build a DAG of physical operators. streaming systems with these other workloads (e.g., maintaining Second, Structured Streaming aims to support end-to-end real-time transactionality) requires significant engineering effort. applications that integrate streaming with batch and interactive Motivated by these challenges, we describe Structured Stream- analysis. We found that this integration was often a key challenge ing, a new high-level API for stream processing that was developed in practice. Structured Streaming achieves high performance via in Apache Spark starting in 2016. Structured Streaming builds on Spark SQL’s code generation engine and can outperform Apache many ideas in recent stream processing systems, such as separating Flink by up to 2× and Apache Kafka Streams by 90×. It also offers processing time from event time and triggers in Google Dataflow [2], rich operational features such as rollbacks, code updates, and mixed using a relational execution engine for performance [12], and of- streaming/batch execution. We describe the system’s design and use fering a language-integrated API [17, 37], but aims to make them cases from several hundred production deployments on Databricks, simpler to use and integrated with the rest of Apache Spark. Specif- the largest of which process over 1 PB of data per month. ically, Structured Streaming differs from other widely used open source streaming APIs in two ways: ACM Reference Format: • Incremental query model: Structured Streaming automati- M. Armbrust et al.. 2018. Structured Streaming: A Declarative API for Real- Time Applications in Apache Spark. In SIGMOD’18: 2018 International Con- cally incrementalizes queries on static datasets expressed through ference on Management of Data, June 10–15, 2018, Houston, TX, USA. ACM, Spark’s SQL and DataFrame APIs [8], meaning that users typ- New York, NY, USA, 13 pages. https://doi.org/10.1145/3183713.3190664 ically only need to understand Spark’s batch APIs to write a streaming query. Event time concepts are especially easy to ex- 1 Introduction press and understand in this model. Although incremental query execution and view maintenance are well studied [11, 24, 29, 38], Many high-volume data sources operate in real time, including we believe Structured Streaming is the first effort to adopt them sensors, logs from mobile applications, and the Internet of Things. in a widely used open source system. We found that this incre- As organizations have gotten better at capturing this data, they also mental API generally worked well for both novice and advanced want to process it in real time, whether to give human analysts the users. For example, advanced users can use a set of stateful pro- freshest possible data or drive automated decisions. Enabling broad cessing operators that give fine-grained control to implement access to streaming computation requires systems that are scalable, custom logic while fitting into the incremental model. easy to use and easy to integrate into business applications. While there has been tremendous progress in distributed stream • Support for end-to-end applications: Structured Streaming’s processing systems in the past few years [2, 15, 17, 27, 32], these sys- API and built-in connectors make it easy to write code that is tems still remain fairly challenging to use in practice. In this paper, “correct by default" when interacting with external systems and we begin by describing these challenges, based on our experience can be integrated into larger applications using Spark and other software. Data sources and sinks follow a simple transactional Permission to make digital or hard copies of all or part of this work for personal or model that enables “exactly-once" computation by default. The classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation incrementalization based API naturally makes it easy to run a on the first page. Copyrights for components of this work owned by others than the streaming query as a batch job or develop hybrid applications author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission that join streams with static data computed through Spark’s and/or a fee. Request permissions from permissions@acm.org. batch APIs. In addition, users can manage multiple streaming SIGMOD’18, June 10–15, 2018, Houston, TX, USA queries dynamically and run interactive queries on consistent © 2018 Copyright held by the owner/author(s). Publication rights licensed to Associa- tion for Computing Machinery. ACM ISBN 978-1-4503-4703-7/18/06. . . $15.00 https://doi.org/10.1145/3183713.3190664

2 . snapshots of stream output, making it possible to write applica- time discussing these challenges with users and designers of other tions that go beyond computing a fixed result to let users refine streaming systems, including Spark Streaming, Truviso, Storm, and drill into streaming data. Dataflow and Flink. This section details the challenges we saw. Beyond these design decisions, we made several other design 2.1 Complex and Low-Level APIs choices in Structured Streaming that simplify operation and in- crease performance. First, Structured Streaming reuses the Spark Streaming systems were invariably considered more difficult to use SQL execution engine [8], including its optimizer and runtime code than batch ones due to complex API semantics. Some complexity is generator. This leads to high throughput compared to other stream- to be expected due to new concerns that arise only in streaming: for ing systems (e.g., 2× the throughput of Apache Flink and 90× that example, the user needs to think about what type of intermediate of Apache Kafka Streams in the Yahoo! Streaming Benchmark [14]), results the system should output before it has received all the data as in Trill [12], and also lets Structured Streaming automatically relevant to a particular entity, e.g., to a customer’s browsing session leverage new SQL functionality added to Spark. The engine runs on a website. However, other complexity arises due to the low- in a microbatch execution mode by default [37] but it can also use level nature of many streaming APIs: these APIs often ask users to a low-latency continuous operators for some queries because the specify applications at the level of physical operators with complex API is agnostic to execution strategy [6]. semantics instead of a more declarative level. Second, we found that operating a streaming application can be As a concrete example, the Google Dataflow model [2] has a challenging, so we designed the engine to support failures, code powerful API with a rich set of options for handling event time updates and recomputation of already outputted data. For example, aggregation, windowing and out-of-order data. However, in this one common issue is that new data in a stream causes an applica- model, users need to specify a windowing mode, triggering mode tion to crash, or worse, to output an incorrect result that users do and trigger refinement mode (essentially, whether the operator not notice until much later (e.g., due to mis-parsing an input field). outputs deltas or accumulated results) for each aggregation operator. In Structured Streaming, each application maintains a write-ahead Adding an operator that expects deltas after an aggregation that event log in human-readable JSON format that administrators can outputs accumulated results will lead to unexpected results. In use to restart it from an arbitrary point. If the application crashes essence, the raw API [10] asks the user to write a physical operator due to an error in a user-defined function, administrators can up- graph, not a logical query, so every user of the system needs to date the UDF and restart from where it left off, which happens understand the intricacies of incremental processing. automatically when the restarted application reads the log. If the Other APIs, such as Spark Streaming [37] and Flink’s DataStream application was outputting incorrect data instead, administrators API [18], are also based on writing DAGs of physical operators and can manually roll it back to a point before the problem started and offer a complex array of options for managing state [20]. In addition, recompute its results starting from there. reasoning about applications becomes even more complex in sys- Our team has been running Structured Streaming applications tems that relax exactly-once semantics [32], effectively requiring for customers of Databricks’ cloud service since 2016, as well as the user to design and implement a consistency model. using the system internally, so we end the paper with some ex- To address this issue, we designed Structured Streaming to make ample use cases. Production applications range from interactive simple applications simple to express using its incremental query network security analysis and automated alerts to incremental Ex- model. In addition, we found that adding customizable stateful tract, Transform and Load (ETL). Users often leverage the design of processing operators to this model still enabled advanced users to the engine in interesting ways, e.g., by running a streaming query build their own processing logic, such as custom session-based “discontinuously" as a series of single-microbatch jobs to leverage windows, while staying within the incremental model (e.g., these Structured Streaming’s transactional input and output without hav- same operators also work in batch jobs). Other open source systems ing to pay for cloud servers running 24/7. The largest customer have also recently added incremental SQL queries [15, 19], and of applications we discuss process over 1 PB of data per month on course databases have long supported them [11, 24, 29, 38]. hundreds of machines. We also show that Structured Streaming 2.2 Integration in End-to-End Applications outperforms Apache Flink and Kafka Streams by 2× and 90× re- The second challenge we found was that nearly every streaming spectively in the widely used Yahoo! Streaming Benchmark [14]. workload must run in the context of a larger application, and this in- The rest of this paper is organized as follows. We start by dis- tegration often requires significant engineering effort. Many stream- cussing the stream processing challenges reported by users in Sec- ing APIs focus primarily on reading streaming input from a source tion 2. Next, we give an overview of Structured Streaming (Sec- and writing streaming output to a sink, but end-to-end business tion 3), then describe its API (Section 4), query planning (Section 5), applications need to perform other tasks. Examples include: execution (Section 6) and operational features (Section 7). In Sec- tion 8, we describe several large use cases at Databricks and its (1) The business purpose of the application may be to enable inter- customers. We then measure the system’s performance in Section 9, active queries on fresh data. In this case, a streaming job is used discuss related work in Section 10 and conclude in Section 11. to update summary tables in a structured storage system such as an RDBMS or Apache Hive [33]. It is important that when the 2 Stream Processing Challenges streaming job updates its result, it does so atomically, so users Despite extensive progress in the past few years, distributed stream- do not see partial results. This can be difficult with file-based ing applications are still generally considered difficult to develop big data systems like Hive, where tables are partitioned across and operate. Before designing Structured Streaming, we spent files, or even with parallel loads into a data warehouse.

3 .(2) An Extract, Transform and Load (ETL) job might need to join DataFrame or SQL Query a stream with static data loaded from another storage system data.where($“state” === “CA”) or transformed using a batch computation. In this case, it is .groupBy(window($“time”, “30s”)) .avg(“latency”) important to be able to reason about consistency across the two Input Streams systems (e.g., what happens when the static data is updated?), Structured Streaming and it is useful to write the whole computation in a single API. Incrementalizer Microbatch Output Sink Optimizer (3) A team may occasionally need to run its streaming business Execution logic as a batch application, e.g., to backfill a result on old data Spark Tables or test alternate versions of the code. Rewriting the code in a Contiuous separate system would be time-consuming and error-prone. Processing We address this challenge by integrating Structured Streaming closely with Spark’s batch and interactive APIs. 2.3 Operational Challenges One of the largest challenges to deploying streaming applications Log State Store in practice is management and operation. Some key issues include: • Failures: This is the most heavily studied issue in the research Figure 1: The components of Structured Streaming. literature. In addition to single node failures, systems also need to support graceful shutdown and restart of the whole applica- such as high-frequency trading or physical system control loops tion, e.g., to let operators migrate it to a new cluster. often run on a single scale-up processor, or even custom hardware • Code Updates: Applications are rarely perfect, so developers like ASICs and FPGAs [3]. However, we also designed Structured may need to update their code. After an update, they may want Streaming to support executing over latency-optimized engines, the application to restart where it left off, or possibly to re- and implemented a continuous processing mode for this task, which compute past results that were erroneous due to a bug. Both we describe in Section 6.3. This is a change over Spark Streaming, cases need to be supported in the streaming system’s state man- where microbatching was “baked into" the API. agement and fault recovery mechanisms. Systems should also 3 Structured Streaming Overview support updating the runtime itself (e.g., patching Spark). Structured Streaming aims to tackle the stream processing chal- • Rescaling: Applications see varying load over time, and gen- lenges we identified through a combination of API and execution erally increasing load in the long term, so operators may want engine design. In this section, we give a brief overview of the overall to scale them up and down dynamically, especially in the cloud. system. Figure 1 shows Structured Streaming’s main components. Systems based on a static communication topology, while con- Input and Output. Structured Streaming connects to a variety of ceptually simple, are difficult to scale dynamically. input sources and output sinks for I/O. To provide “exactly-once" • Stragglers: Instead of outright failing, nodes in the stream- output and fault tolerance, it places two restrictions on sources and ing system can slow down due to hardware or software issues sinks, which are similar to other exactly-once systems [17, 37]: and degrade the throughput of the whole application. Systems (1) Input sources must be replayable, allowing the system to re-read should automatically handle this situation. recent input data if a node crashes. In practice, organizations • Monitoring: Streaming systems need to give operators clear use a reliable message bus such as Amazon Kinesis or Apache visibility into system load, backlogs, state size and other metrics. Kafka [5, 23] for this purpose, or simply a durable file system. 2.4 Cost and Performance Challenges (2) Output sinks must support idempotent writes, to ensure reliable recovery if a node fails while writing. Structured Streaming Beyond operational and engineering issues, the cost-performance of can also provide atomic output for certain sinks that support it, streaming applications can be an obstacle because these applications where the entire update to the job’s output appears atomically run 24/7. For example, without dynamic rescaling, an application even if it was written by multiple nodes working in parallel. will waste resources outside peak hours; and even with rescaling, it may be more expensive to compute a result continuously than to In addition to external systems, Structured Streaming also supports run a periodic batch job. We thus designed Structured Streaming input and output from tables in Spark SQL. For example, users can to leverage all the execution optimizations in Spark SQL [8]. compute a static table from any of Spark’s batch input sources and So far, we chose to optimize throughput as our main performance join it with a stream, or ask Structured Streaming to output to an metric because we found that it was often the most important metric in-memory Spark table that users can query interactively. in large-scale streaming applications. Applications that require a API. Users program Structured Streaming by writing a query distributed streaming system usually work with large data volumes against one or more streams and tables using Spark SQL’s batch coming from external sources (e.g., mobile devices, sensors or IoT), APIs: SQL and DataFrames [8]. This query defines an output table where data may already incur a delay just getting to the system. This that the user wants to compute, assuming that each input stream is is one reason why event time processing is an important feature replaced by a table holding all the data received from that stream in these systems [2]. In contrast, latency-sensitive applications so far. The engine then determines how to compute and write this

4 .output table into a sink incrementally, using similar techniques to The next sections go into detail about Structured Streaming’s incremental view maintenance [11, 29]. Different sinks also support API (§4), query planning (§5) and job execution and operation (§6). different output modes, which determine how the system may write 4 Programming Model out its results: for example, some sinks are append-only by nature, while others allow updating records in place by key. Structured Streaming combines elements of Google Dataflow [2], To support streaming specifically, Structured Streaming also incremental queries [11, 29, 38] and Spark Streaming [37] to enable adds several API features that fit in the existing Spark SQL API: stream processing beneath the Spark SQL API. In this section, we (1) Triggers control how often the engine will attempt to compute start by showing a short example, then describe the semantics of a new result and update the output sink, as in Dataflow [2]. the model and the streaming-specific operators we added in Spark SQL to support streaming use cases (e.g., stateful operators). (2) Users can mark a column as denoting event time (a timestamp set at the data source), and set a watermark policy to determine 4.1 A Short Example when enough data has been received to output a result for a Structured Streaming operates within Spark’s structured data APIs: specific event time, as in [2]. SQL, DataFrames and Datasets [8]. The main abstraction users work (3) Stateful operators allow users to track and update mutable state with is tables (represented by the DataFrames or Dataset classes), by key in order to implement complex processing, such as cus- which each represent a view to be computed from input sources to tom session-based windows. These are similar to Spark Stream- the system.1 When users create a table/DataFrame from a streaming ing’s updateStateByKey API [37]. input source, and attempt to compute it, Spark will automatically launch a streaming computation. Note that windowing, another key feature for streaming, is done As a simple example, let us start with a batch job that counts using Spark SQL’s existing aggregation APIs. In addition, all the clicks by country of origin for a web application. Suppose that the new APIs added by Structured Streaming also work in batch jobs. input data is JSON files and the output should be Parquet. This job Execution. Once it has received a query, Structured Streaming can be written with Spark DataFrames in Scala as follows: optimizes it, incrementalizes it, and begins executing it. By default, // Define a DataFrame to read from static data the system uses a microbatch model similar to Discretized Streams data = spark . read . format ( " json " ) . load ( " / in " ) in Spark Streaming, which supports dynamic load balancing, rescal- ing, fault recovery and straggler mitigation by dividing work into // Transform it to compute a result small tasks [37]. In addition, it can use a continuous processing counts = data . groupBy ( $ " country " ) . count () mode based on traditional long-running operators (Section 6.3). // Write to a static data sink In both cases, Structured Streaming uses two forms of durable counts . write . format ( " parquet " ) . save ( " / counts " ) storage to achieve fault tolerance. First, a write-ahead log keeps track of which data has been processed and reliably written to the Changing this job to use Structured Streaming only requires output sink from each input source. For some output sinks, this log modifying the input and output sources, not the transformation can be integrated with the sink to make updates to the sink atomic. in the middle. For example, if new JSON files are going to contin- Second, the system uses a larger-scale state store to hold snapshots ually be uploaded to the /in directory, we can modify our job to of operator states for long-running aggregation operators. These are continually update /counts by changing only the first and last lines: written asynchronously, and may be “behind" the latest data written // Define a DataFrame to read streaming data to the output sink. The system will automatically track which state data = spark . readStream . format ( " json " ) . load ( " / in " ) it has last updated in its log, and recompute state starting from that // Transform it to compute a result point in the data on failure. Both the log and state store can run counts = data . groupBy ( $ " country " ) . count () over pluggable storage systems (e.g., HDFS or S3). // Write to a streaming data sink Operational Features. Using the durability of the write-ahead counts . writeStream . format ( " parquet " ) log and state store, users can achieve several forms of rollback and . outputMode ( " complete " ) . start ( " / counts " ) recovery. An entire Structured Streaming application can be shut down and restarted on new hardware. Running applications also The output mode parameter on the sink here specifies how Struc- tolerate node crashes, additions and stragglers automatically, by tured Streaming should update the sink. In this case, the complete sending tasks to new nodes. For code updates to UDFs, it is sufficient mode means to write a complete result file for each update, because to stop and restart the application, and it will begin using the new the file output sink chosen does not support fine-grained updates. code. In addition, users can manually roll back the application to However, other sinks, such as key-value stores, support additional a previous point in the log and redo the part of the computation output modes (e.g., updating just the changed keys). starting then, beginning from an older snapshot of the state store. Under the hood, Structured Streaming will automatically incre- In addition, Structured Streaming’s ability to execute with mi- mentalize the query specified by the transformation(s) from input crobatches lets it “adaptively batch" data so that it can quickly sources to data sinks, and execute it in a streaming fashion. The catch up with input data if the load spikes or if a job is rolled back, 1 Spark SQL offers several slightly different APIs that map to the same query engine. then return to low latency later. This makes operation significantly The DataFrame API, modeled after data frames in R and Pandas [28, 30], offers a simpler (e.g., users can safely update job code more often). simple interface to build relational queries programmatically that is familiar to many users. The Dataset API adds static typing over DataFrames, similar to RDDs [36]. Alternatively, users can write SQL directly. All APIs produce a relational query plan.

5 .engine will also automatically maintain state and checkpoint it Trigger: every 1 sec Trigger: every 1 sec to external storage as needed—in this case, for example, we have t=1 t=2 t=3 t=1 t=2 t=3 a running count aggregation since the start of the stream, so the Processing Time engine will keep track of the running counts for each country. Finally, the API also naturally supports windowing and event Input time through Spark SQL’s existing aggregation operators. For ex- Table Query Query ample, instead of counting data by country, we could count it in 1-hour sliding windows advancing every 5 minutes by changing just the middle line of the computation as follows: Result Table // Count events by windows on the " time " field data . groupBy ( window ( $ " time " ," 1 h " ," 5 min " ) ) . count () Output The time field here (event time) is just a field in the data, similar Written to country earlier. Users can also set a watermark on this field to Complete Output Mode Append Output Mode let the system forget state for old windows after a timeout (§4.3.1). 4.2 Programming Model Semantics Figure 2: Structured Streaming’s semantics for two output Formally, we define the semantics of Structured Streaming’s pro- modes. Logically, all input data received up to a point in pro- gramming model as follows: cessing time is viewed as a large input table, and the user pro- vides a query that defines a result table based on this input. (1) Each input source provides a partially ordered set of records Physically, Structured Streaming computes changes to the over time. We assume partial orders here because some message result table incrementally (without having to store all input bus systems are parallel and do not define a total order across data) and outputs results based on its output mode. For com- records—for example, Kafka divides streams into “partitions" plete mode, it outputs the whole result table (left), while for that are each ordered. append mode, it only outputs newly added records (right). (2) The user provides a query to execute across the input data that can output a result table at any given point in processing time. A second attractive property is that the model has strong consis- Structured Streaming will always produce results consistent tency semantics, which we call prefix consistency. First, it guarantees with running this query on a prefix of the data in all input sources. that when input records are relatively ordered within a source (e.g., That is, it will never show results that incorporate one input log records from the same device), the system will only produce record but do not incorporate its ancestors in the partial order. results that incorporate them in the same records (e.g., never skip- Moreover, these prefixes will be increasing over time. ping a record). Second, because the result table is defined based on (3) Triggers tell the system when to run a new incremental compu- all data in the input prefix at once, we know that all rows in the tation and update the result table. For example, in microbatch result table reflect all input records. In contrast, in some systems mode, the user may wish to trigger an incremental update every based on message-passing between nodes, the node that receives a minute (in processing time). record might send an update to two downstream nodes, but there is no guarantee that the outputs from these two are synchronized. (4) The sink’s output mode specifies how the result table is written Prefix consistency also makes operation easier, as users can roll to the output system. The engine supports three distinct modes: back the system to a specific point in the write-ahead log (i.e., a • Complete: The engine writes the whole result table at once, specific prefix of the data) and recompute outputs from that point. e.g., replacing a whole file in HDFS with a new version. This In summary, with the Structured Streaming models, as long as is of course inefficient when the result is large. users understand a regular Spark or DataFrame query, they can • Append: The engine can only add records to the sink. For understand the content of the result table for their job and the example, a map-only job on a set of input files results in values that will be written to the sink. Users need not worry about monotonically increasing output. consistency, failures or incorrect processing orders. Finally, the reader might notice that some of the output modes we • Update: The engine updates the sink in place based on a key defined are incompatible with certain types of query. For example, for each record, updating only keys whose values changed. suppose we are aggregating counts by country, as in our code Figure 2 illustrates the model visually. One attractive property of example in the previous section, and we want to use the append the model is that the contents of the result table (which is logically output mode. There is no way for the system to guarantee it has just a view that need never be materialized) are defined indepen- stopped receiving records for a given country, so this combination dently of the output mode (whether we output the whole table on of query and output mode will not be allowed by the system. We every trigger, or only deltas). In contrast, APIs such as Dataflow describe which combinations are allowed in Section 5.1. require the equivalent of an output mode on every operator, so users must plan the whole operator DAG keeping in mind whether 4.3 Streaming Specific Operators each operator is outputting complete results or positive or negative Many Structured Streaming queries can be written using just the deltas, effectively incrementalizing the query by hand. standard operators in Spark SQL, such as selection, aggregation and

6 .joins. However, to support some requirements unique to streaming, // Define an update function that simply tracks the we added two new types of operators to Spark SQL: watermarking // number of events for each key as its state , returns // that as its result , and times out keys after 30 min . operators tell the system when to “close" an event time window and def updateFunc ( key : UserId , newValues : Iterator [ Event ], output results or forget state, and stateful operators let users write state : GroupState [ Int ]) : Int = { custom logic to implement complex processing. Crucially, both of val totalEvents = state . get () + newValues . size () these new operators still fit in Structured Streaming’s incremental state . update ( totalEvents ) state . setTimeoutDuration ( " 30 min " ) semantics (§4.2), and both can also be used in batch jobs. return totalEvents 4.3.1 Event Time Watermarks. From a logical point of view, } the key idea in event time is to treat application-specified times- // Use this update function on a stream , returning a tamps as an arbitrary field in the data, allowing records to arrive // new table lens that contains the session lengths . out-of-order [2, 24]. We can then use standard operators and in- lens = events . groupByKey ( event = > event . userId ) cremental processing to update results grouped by event time. In . mapGroupsWithState ( updateFunc ) practice, however, it is useful for the processing system to have some loose bounds on how late data can arrive, for two reasons: Figure 3: Using mapGroupsWithState to track the number of (1) Allowing arbitrarily late data might require storing arbitrarily events per session, timing out sessions after 30 minutes. large state. For example, if we count data by 1-minute event time window, the system needs to remember a count for every 1-minute window since the application began, because a late • newValues of type Iterator[V ] record might still arrive for any particular minute. This can • state of type GroupState[S], where S is a user-specified class. quickly lead to large amounts of state, especially if combined The operator will invoke this function whenever one or more with another grouping key. The same issue happens with joins. new values are received for a key. On each call, the function re- (2) Some sinks do not support data retraction, making it useful to ceives all of the values that were received for that key since the last be able to write the results for a given event time after a timeout. call (multiple values may be batched for efficiency). It also receives For example, custom downstream applications want to start a state object that wraps around a user-defined data type S, and working with a “final" result and might not support retractions. allows the user to update the state, drop this key from state tracking, Append-mode sinks also do not support retractions. or set a timeout for this specific key (either in event time or process- Structured Streaming lets developers set a watermark [2] for ing time). This allows the user to store arbitrary data for the key, event time columns using the withWatermark operator. This operator as well as implement custom logic for dropping state (e.g., custom gives the system a delay threshold tC for a given timestamp column exit conditions when implementing session-based windows). C. At any point in time, the watermark for C is max(C) − tC , that is, Finally, the update function returns a user-specified return type tC seconds before the maximum event time seen so far in C. Note R for its key. The return value of mapGroupsWithState is a new table that this choice of watermark is naturally robust to backlogged with the final R record outputted for each group in the data (when data: if the system cannot keep up with the input rate for a period the group is closed or times out). For example, the developer may of time, the watermark will not move forward arbitrarily during wish to track user sessions on a website using mapGroupsWithState, that time, and all events that arrived within at most T seconds of and output the total number of pages clicked for each session. being produced will still be processed. To illustrate, Figure 3 shows how to use mapGroupsWithState to When present, watermarks affect when stateful operators can track user sessions, where a session is defined as a series of events forget old state (e.g., if grouping by a window derived from a wa- with the same userId and gaps less than 30 minutes between them. termarked column), and when Structured Streaming will output We output the final number of events in each session as our return data with an event time key to append-mode sinks. Different input value R. A job could then compute metrics such as the average streams can have different watermarks. number of events per session by aggregating the result table lens. The second stateful operator, flatMapGroupsWithState, is very 4.3.2 Stateful Operators. For developers who want to write similar to mapGroupsWithState, except that the update function can custom stream processing logic, Structured Streaming’s stateful return zero or more values of type R per update instead of one. operators are “UDFs with state" that give users control over the For example, this operator could be used to manually implement a computation while fitting into Structured Streaming’s semantics stream-to-table join. The return values can either be returned all at and fault tolerance mechanisms. There are two stateful operators, once, when the group is closed, or incrementally across calls to the mapGroupsWithState and flatMapGroupsWithState. Both operators update function. Both operators also work in batch mode, in which act on data that has been assigned a key using groupByKey, and let case the update function will only be called once. the developers track and update a state for each key using custom logic, as well as output records for each key. They are closely based 5 Query Planning on Spark Streaming’s updateStateByKey operator [37]. We implemented Structured Streaming’s query planning using the The mapGroupsWithState operator, on a grouped dataset with Catalyst extensible optimizer in Spark SQL [8], which allows writ- keys of type K and values of type V , takes in a user-defined update ing composable rules using pattern matching in Scala. Query plan- function with the following arguments: ning proceeds in three stages: analysis to determine whether the • key of type K query is valid, incrementalization and optimization.

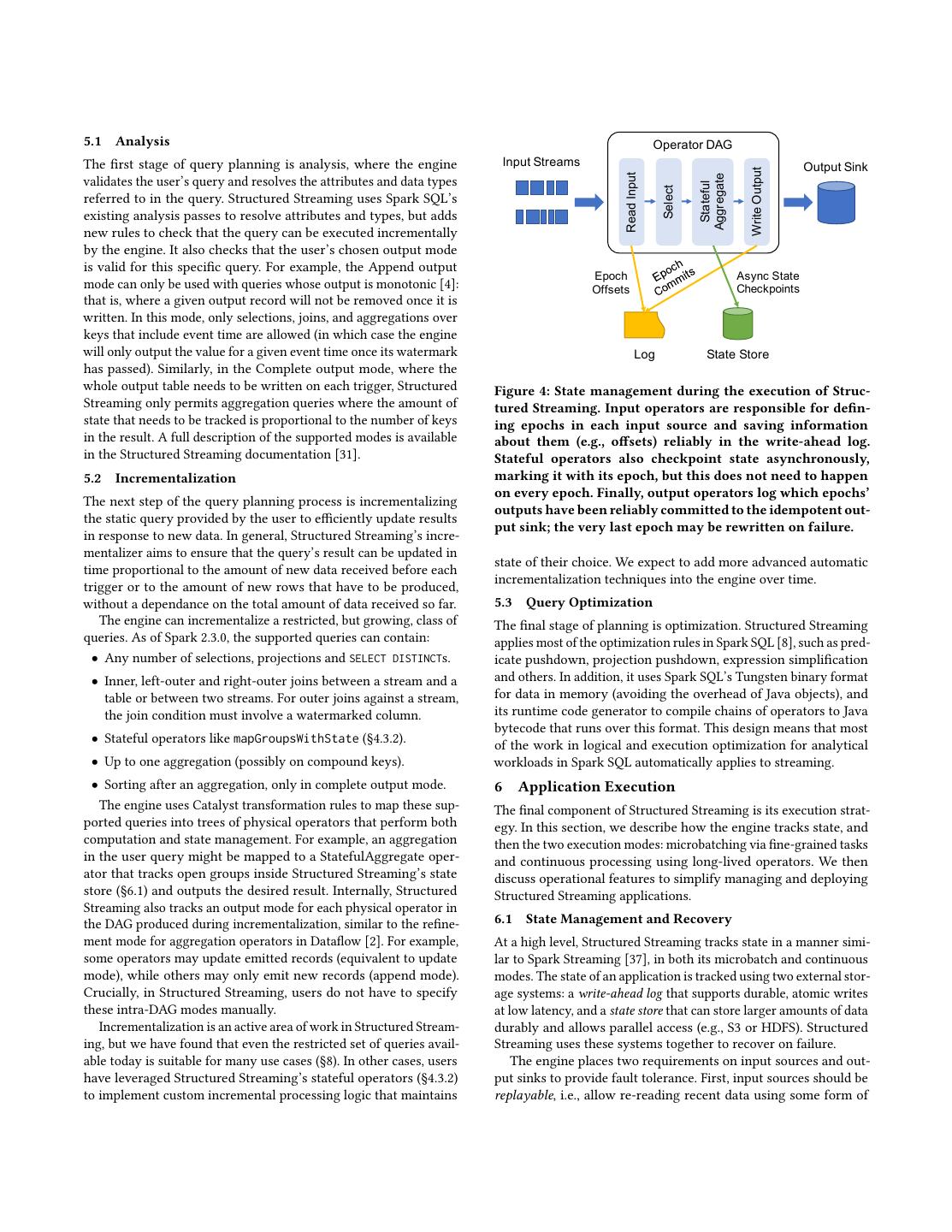

7 .5.1 Analysis Operator DAG The first stage of query planning is analysis, where the engine Input Streams Output Sink Write Output Read Input Aggregate validates the user’s query and resolves the attributes and data types Stateful Select referred to in the query. Structured Streaming uses Spark SQL’s existing analysis passes to resolve attributes and types, but adds new rules to check that the query can be executed incrementally by the engine. It also checks that the user’s chosen output mode is valid for this specific query. For example, the Append output Epoch Async State mode can only be used with queries whose output is monotonic [4]: Offsets Checkpoints that is, where a given output record will not be removed once it is written. In this mode, only selections, joins, and aggregations over keys that include event time are allowed (in which case the engine will only output the value for a given event time once its watermark Log State Store has passed). Similarly, in the Complete output mode, where the whole output table needs to be written on each trigger, Structured Figure 4: State management during the execution of Struc- Streaming only permits aggregation queries where the amount of tured Streaming. Input operators are responsible for defin- state that needs to be tracked is proportional to the number of keys ing epochs in each input source and saving information in the result. A full description of the supported modes is available about them (e.g., offsets) reliably in the write-ahead log. in the Structured Streaming documentation [31]. Stateful operators also checkpoint state asynchronously, 5.2 Incrementalization marking it with its epoch, but this does not need to happen on every epoch. Finally, output operators log which epochs’ The next step of the query planning process is incrementalizing outputs have been reliably committed to the idempotent out- the static query provided by the user to efficiently update results put sink; the very last epoch may be rewritten on failure. in response to new data. In general, Structured Streaming’s incre- mentalizer aims to ensure that the query’s result can be updated in state of their choice. We expect to add more advanced automatic time proportional to the amount of new data received before each incrementalization techniques into the engine over time. trigger or to the amount of new rows that have to be produced, without a dependance on the total amount of data received so far. 5.3 Query Optimization The engine can incrementalize a restricted, but growing, class of The final stage of planning is optimization. Structured Streaming queries. As of Spark 2.3.0, the supported queries can contain: applies most of the optimization rules in Spark SQL [8], such as pred- • Any number of selections, projections and SELECT DISTINCTs. icate pushdown, projection pushdown, expression simplification • Inner, left-outer and right-outer joins between a stream and a and others. In addition, it uses Spark SQL’s Tungsten binary format table or between two streams. For outer joins against a stream, for data in memory (avoiding the overhead of Java objects), and the join condition must involve a watermarked column. its runtime code generator to compile chains of operators to Java bytecode that runs over this format. This design means that most • Stateful operators like mapGroupsWithState (§4.3.2). of the work in logical and execution optimization for analytical • Up to one aggregation (possibly on compound keys). workloads in Spark SQL automatically applies to streaming. • Sorting after an aggregation, only in complete output mode. 6 Application Execution The engine uses Catalyst transformation rules to map these sup- The final component of Structured Streaming is its execution strat- ported queries into trees of physical operators that perform both egy. In this section, we describe how the engine tracks state, and computation and state management. For example, an aggregation then the two execution modes: microbatching via fine-grained tasks in the user query might be mapped to a StatefulAggregate oper- and continuous processing using long-lived operators. We then ator that tracks open groups inside Structured Streaming’s state discuss operational features to simplify managing and deploying store (§6.1) and outputs the desired result. Internally, Structured Structured Streaming applications. Streaming also tracks an output mode for each physical operator in the DAG produced during incrementalization, similar to the refine- 6.1 State Management and Recovery ment mode for aggregation operators in Dataflow [2]. For example, At a high level, Structured Streaming tracks state in a manner simi- some operators may update emitted records (equivalent to update lar to Spark Streaming [37], in both its microbatch and continuous mode), while others may only emit new records (append mode). modes. The state of an application is tracked using two external stor- Crucially, in Structured Streaming, users do not have to specify age systems: a write-ahead log that supports durable, atomic writes these intra-DAG modes manually. at low latency, and a state store that can store larger amounts of data Incrementalization is an active area of work in Structured Stream- durably and allows parallel access (e.g., S3 or HDFS). Structured ing, but we have found that even the restricted set of queries avail- Streaming uses these systems together to recover on failure. able today is suitable for many use cases (§8). In other cases, users The engine places two requirements on input sources and out- have leveraged Structured Streaming’s stateful operators (§4.3.2) put sinks to provide fault tolerance. First, input sources should be to implement custom incremental processing logic that maintains replayable, i.e., allow re-reading recent data using some form of

8 .identifier, such as a stream offset. Durable message bus systems like for the aggregation, where the reduce tasks track state in memory Kafka and Kinesis meet this need. Second, output sinks should be on worker nodes and periodically checkpoint it to the state store. idempotent, allowing Structured Streaming to rewrite some already As in Spark Streaming, this mode provides the following benefits: written data on failure. Sinks can implement this in different ways. Given these properties, Structured Streaming performs state • Dynamic load balancing: Each operator’s work is divided tracking using the following mechanism, as shown in Figure 4: into small, independent tasks that can be scheduled on any node, so the system can automatically balance these across (1) As input operators read data, the master node of the Spark nodes if some are executing slower than others. application defines epochs based on offsets in each input source. • Fine-grained fault recovery: If a node fails, only its tasks For example, Kafka and Kinesis present topics as a series of need to be rerun, instead of having to roll back the whole partitions, each of which are byte streams, and allow reading cluster to a checkpoint as in most systems based on topolo- data using offsets in these partitions. The master writes the start gies of long-lived operators. Moreover, the lost tasks can be and end offsets of each epoch durably to the log. rerun in parallel, further reducing recovery time [37]. (2) Any operators requiring state checkpoint their state periodi- • Straggler mitigation: Spark will launch backup copies of cally and asynchronosuly to the state store, using incremental slow tasks as it does in batch jobs, and downstream tasks will checkpoints when possible. They store the epoch ID along with simply use the output from whichever copy finishes first. each checkpoint written. These checkpoints do not need to • Rescaling: Adding or removing a node is simple as tasks happen on every epoch or to block processing.2 will automatically be scheduled on all the available nodes. (3) Output operators write the epochs they committed to the log. • Scale and throughput: Because this mode reuses Spark’s The master waits for all nodes running an operator to report batch execution engine, it inherits all the optimizations in a commit for a given epoch before allowing commits for the this engine, such as a high-performance shuffle implementa- next epoch. Depending on the sink, the master can also run an tion [34] and the ability to run on thousands of nodes. operation to finalize the writes from multiple nodes if the sink supports this. This means that if the streaming application fails, The main disadvantage of this mode is a higher minimum la- only one epoch may be partially written.3 tency, as there is overhead to launching a DAG of tasks in Spark. In practice, however, latencies of a few seconds are achievable even (4) Upon recovery, the new instance of the application starts by on large clusters running multi-step computations. Depending on reading the log to find the last epoch that has not been commit- the application, these are on a similar time scale to data collection ted to the sink, including its start and end offsets. It then uses and alerting systems. the offsets of earlier epochs to reconstruct the application’s in- memory state from the last epoch written to the state store. This 6.3 Continuous Processing Mode just requires loading the old state and running those epochs A new continuous processing added in Apache Spark 2.3 [6] exe- with the same offsets while disabling output. Finally, the system cutes Structured Streaming jobs using long-lived operators as in reruns the last epoch and relies on the sink’s idempotence to traditional streaming systems such as Telegraph and Borealis [1, 13]. write its results, then starts defining new epochs. This mode enables lower latency at a cost of less operational flexi- Finally, all of the state management in this design is transparent bility (e.g., limited support for rescaling the job at runtime). to user code. Both the aggregation operators and custom state- The key enabler for this execution mode was choosing a declar- ful processing operators (e.g., mapGroupsWithState) automatically ative API for Structured Streaming that is not tied to the execution checkpoint state to the state store, without requiring custom code strategy. For example, the original Spark Streaming API had some to do it. The user’s data types only need to be serializable. operators based on processing time that leaked the concept of mi- 6.2 Microbatch Execution Mode crobatches into the programming model, making it hard to move programs to another type of engine. In contrast, Structured Stream- Structured Streaming jobs can execute in two modes: microbatching ing’s API and semantics are independent of the execution engine: or continuous operators. The microbatch mode uses the discretized continuous execution is similar to having a much larger number of streams execution model from Spark Streaming [37], and inherits triggers. Note that unlike systems based purely on unsynchronized its benefits, such as dynamic load balancing, rescaling, straggler message passing, such as Storm [32], we do retain the concept of mitigation and fault recovery without whole-system rollback. triggers and epochs in this mode so the output from multiple nodes In this mode, epochs are typically set to be a few hundred mil- can be coordinated and committed together to the sink. liseconds to a few seconds, and each epoch executes as a traditional Because the API supports fine-grained execution, Structured Spark job composed of a DAG of independent tasks [36]. For exam- Streaming jobs could theoretically run on any existing distributed ple, a query doing selection followed by stateful aggregation might streaming engine design [1, 13, 17]. In continuous processing, we execute as a set of “map" tasks for the selection and “reduce" tasks built a simple continuous operator engine that lives inside Spark and 2 In Spark 2.3.0, we actually make one checkpoint per epoch, but we plan to make can reuse Spark’s scheduling infrastructure and per-node operators them less frequent in a future release, as is already done in Spark Streaming. 3 Some sinks, such as Amazon S3, provide no way to atomically commit multiple writes (e.g., code-generated operators). The first version released in Spark from different writer nodes. In such cases, we have also created Spark data sources 2.3.0 only supports “map-like” jobs (i.e., no shuffle operations), that add transactions over the underlying storage system. For example, Databricks which were one of the most common scenarios where users wanted Delta [7] offers a consistent view of S3 data for both streaming and batch queries, along with additional features such as index maintenance. lower latency, but the design can be extended to support shuffles.

9 . Compared to microbatch execution, there are two differences the job can start again from a previous point. Message buses like when using continuous processing: Kafka are typically configured for several weeks of retention so (1) The master launches long-running tasks on each partition using rollbacks are often possible. Spark’s scheduler that each read one partition of the input Manual rollbacks interact well with Structured Streaming’s prefix source (e.g., Kinesis stream) but execute multiple epochs. If one consistency guarantee for execution semantics 4.2. Specifically, of these tasks fails, Spark will simply relaunch it. when an administrator rolls back the job to a point in the write- ahead log, she knows which prefix of the input streams this point (2) Epochs are coordinated differently. The master periodically tells corresponds to, and the job can recompute output from that point nodes to start a new epoch, and receives a start offset for the on while retaining consistency within the new output. Beyond this epoch on each input partition, which it inserts into the write- guarantee, Structured Streaming’s support for running the same ahead log. When it asks them to start the next epoch, it also code as a batch job and for rescaling means that administrators can receives end offsets for the previous one, writes these to the run the recovery on a temporarily larger cluster to catch up quickly, log, and tells nodes to commit the epoch when it has written all further reducing the operational complexity of manual rollbacks. the end offsets. Thus, the master is not on the critical path for inspecting all the input sources and defining start/end offsets. 7.3 Hybrid Batch and Streaming Execution We found that the most common use case where organizations The most obvious benefit of Structured Streaming’s unified API is wanted low latency and the scale of a distributed processing engine that users can share code between batch and streaming jobs, or run was “stream to stream” map operations to transform data before it is the same program as a batch job for testing. However, we have also used in other streaming applications. For example, an organization found this useful for purely streaming scenarios in two ways: might upload events to Kafka, run some simple ETL transformations • “Run-once" triggers for cost savings: Many Databricks customers as a streaming job, and write the transformed data to Kafka again wanted the transactionality and state management properties for consumption by other streaming applications. In this type of of a streaming engine without running servers 24/7. Virtually design, each transformation job will add latency to all downstream all ETL workloads require tracking how far in the input one has steps, so organizations wish to minimize this latency. gotten and which results have been saved reliably, which can 7 Operational Features be difficult to implement by hand. These functions are exactly what Structured Streaming’s state management provides. Thus, We used several properties of our execution strategy and API to several customers implemented ETL jobs by running a single design a number of operational features in Structured Streaming epoch of a Structured Streaming job every few hours as a batch that tackle common problems in deployments. Perhaps most im- computation, using the provided “run once" trigger that was portantly across these features, we aimed to make both Structured originally designed for testing. This leads to significant cost sav- Streaming’s semantics and its fault tolerance model easy to under- ings (in one case, up to 10× [35]) for lower-volume applications. stand. With a simple design, operators can form an accurate model With all the major cloud providers now supporting per-second of how a system runs and what various actions will do to it. or per-minute billing [9], we believe this type of “discontinuous 7.1 Code Updates processing" will become more common. Developers can update User-Defined Functions (UDFs) in their • Adaptive batching: Even streaming applications occasionally program and simply restart the application to use the new ver- experience large backlogs. For example, a link between two sion of the code. For example, if a UDF is crashing on a particular datacenters might go down, temporarily delaying data transfer, input record, that epoch of processing will fail, so the developer or there might simply be a spike in user activity. In these cases, can update the code and restart the application again to continue Structured Streaming will automatically execute longer epochs processing. This also applies to stateful operator UDFs, which can in order to catch up with the input streams, often achieving be updated as long as they retain the same schema for their state similar throughput to Spark’s batch jobs. This will not greatly objects. We also designed Spark’s log and state store formats to be increase latency, given that data is already backlogged, but will binary compatible across Spark framework updates. let the system catch up faster. In cloud environments, operators 7.2 Manual Rollback can also add extra nodes to the cluster temporarily. Sometimes, an application outputs wrong results for some time 7.4 Monitoring before a user notices: for example, a field that fails to parse might Structured Streaming uses Spark’s existing metrics API and struc- simply be reported as NULL. Therefore, rollbacks are a fact of life for tured event log to report information such as number of records many operators. In Structured Streaming, it is easy to determine processed, bytes shuffled across the network, etc. These interfaces which records went into each epoch from the write-ahead log and are familiar to operators and easy to connect to a variety of UI tools. roll back the application to the epoch where a problem started 7.5 Fault and Straggler Recovery occurring. We chose to store the write-ahead log as JSON to let administrators perform these operations manually.4 As long as the As discussed in §6.2, Structured Streaming’s microbatch mode can input sources and state store still have data from the failed epoch, recover from node failures, stragglers and load imbalances using 4 Spark’s fine-grained task execution model. The continuous pro- One additional step they may have to do is remove faulty data from the output sink, depending on the sink chosen. For the file sink, for example, it’s straightforward to cessing mode recovers from node failures, but does not yet protect find which files were written in a particular epoch and remove those. against stragglers or load imbalance.

10 . IDS logs small amount of data for historical queries due to using a traditional raw logs (Parquet format) data warehouse for the interactive queries. In contrast, a team of five engineers was able to reimplement the platform using Structured IDS S3 Streaming S3 Streaming alerts Streaming in two weeks. The new platform was simultaneously ETL Alerts more scalable and able to support more complex analysis using … Spark’s ML APIs. Next, we provide a few examples to illustrate the notifications install advantages of Structured Streaming that made this possible. Streaming queries IDS ETL First, Structured Streaming’s ability to adaptively vary the batch as alerts size enabled the developers to build a streaming pipeline that deals not only with spikes in the workload, but also with failures and structured data code upgrades. Consider a streaming job that goes offline either due (Parquet format) S3 Interactive Analytics security to failure or upgrades. When the cluster is brought back online, it analyst will start automatically to process the data all the way back from the moment it went offline. Initially, the cluster will use large batches to Figure 5: Information security platform use case. Using maximize the throughput. Once it catches up, the cluster switches Structured Streaming and Spark SQL, a team of analysts can to small batches for low latency. This allows administrators to query both streaming and historical data and easily install regularly upgrade clusters without the fear of excessive downtime. queries for new attack patterns as streaming alerts. Second, the ability to join a stream with other streams, as well as with historical tables, has considerably simplified the analysis. 8 Production Use Cases Consider the simple task of figuring out which device a TCP con- We have supported Structured Streaming on Databricks’ managed nection originates at. It turns out that this task is challenging in cloud service [16] since 2016, and today, our cloud is running hun- the presence of mobile devices, as these devices are given dynamic dreds of production streaming applications at a given time (i.e., IP addresses every time they join the network. Hence, from TCP applications running 24/7). The largest of these applications ingest logs alone, is not possible to track down the end-points of a connec- over 1 PB of data per month and run on hundreds of servers. We tion. With Structured Streaming, an analyst can easily solve this also use Structured Streaming internally to monitor our services, problem. She can simply join the TCP logs with DHCP logs to map including the execution of Structured Streaming itself. In this sec- the IP address to the MAC address, and then use the organization’s tion, we describe three customer workloads that leverage various internal database of network devices to map the MAC address to aspects of Structured Streaming, as well as our internal use case. a particular machine and user. In addition, users were able to do this join in real time using stateful operators as both the TCP and 8.1 Information Security Platform DHCP logs were being streamed in. A large customer has used Structured Streaming to develop a large- Finally, using the same system for streaming, interactive queries scale security platform to enable over 100 analysts to scour through and ETL has provided developers with the ability to quickly iterate network traffic logs to quickly identify and respond to security and deploy new alerts. In particular, it enables analysts to build incidents, as well as to generate automated alerts. This platform and test queries for detecting new attacks on offline data, and then combines streaming with batch and interactive queries and is thus deploy these queries directly on the alerting cluster. In one example, a great example of the system’s support for end-to-end applications. an analyst developed a query to identify exfiltration attacks via Figure 5 shows the architecture of the platform. Intrusion Detec- DNS. In this attack, malware leaks confidential information from tion Systems (IDSes) monitor all the network traffic in the organiza- the compromised host by piggybacking this information into DNS tion, and output logs to S3. From here, a Structured Streaming jobs requests sent to an external DNS server owned by the attacker. One ETLs these logs into a compact Apache Parquet based table stored simplified query to detect such an attack essentially computes the on Databricks Delta [7] to enable fast and concurrent access from aggregate size of the DNS requests sent by every host over a time multiple downstream applications. Other Structured Streaming jobs interval. If the aggregate is greater than a given threshold, the query then process these logs to produce additional tables (e.g., by joining flags the corresponding host as potentially being compromised. The them with other data). Analysts query these tables interactively, us- analyst used historical data to set this threshold, so as to achieve ing SQL or Dataframes, to detect and diagnose new attack patterns. the desired balance between false positive and false negative rates. If they identify a compromise, they also look back through historical Once satisfied with the result, the analyst simply pushed the query data to trace previous actions from that attacker. Finally, in parallel, to the alerting cluster. The ability to use the same system and the the Parquet logs are processed by another Structured Streaming same API for data analysis and for implementing the alerts led cluster that generates real-time alerts based on pre-written rules. not only to significant engineering cost savings, but also to better The key challenges in realizing this platform are (1) building security, as it is significantly easier to deploy new rules. a robust and scalable streaming pipeline, while (2) providing the analysts with an effective environment to query both fresh and 8.2 Monitoring Live Video Delivery historical data. Using standard tools and services available on AWS, A large media company is using Structured Streaming to compute a team of 20 people took over six months to build and deploy quality metrics for their live video traffic and interactively identify a previous version of this platform in production. This previous delivery problems. Live video delivery is especially challenging version had several limitations, including only being able to store a

11 .because network problems can severely disrupt utility. For pre- 80 250 Millions of records/s 65 recorded video, clients can use large buffers to mask issues, and a 60 200 Millions of records/s degradation at most results in extra buffering time; but for live video, a problem may mean missing a critical moment in a sports match or 40 33 150 similar event. This organization collects video quality metrics from clients in real time, performs ETL operations and aggregation using 20 0.7 100 Structured Streaming, then stores the results in a data warehouse. 0 Kafka Streams This allows operations engineers to interactively query fresh data Apache Flink 50 Structured Streaming to detect and diagnose quality issues (e.g., determine whether an issue is tied to a specific ISP, video server or other cause). 0 0 10 20 8.3 Analyzing Game Performance Number of Nodes A large gaming company uses Structured Streaming to monitor the latency experienced by players in a popular online game with tens (a) vs. Other Systems (b) System Scaling of millions of monthly active users. As in the video use case, high Figure 6: Throughput results on the Yahoo! benchmark. network performance is essential for the user experience when gaming, and repeated problems can quickly lead to player churn. events from Kafka and writes them to a columnar Parquet table This organization collects latency logs from its game clients to in S3. Dozens of other batch and streaming jobs then query this cloud storage and then performs a variety of streaming analyses. table to produce dashboards and other reports. Because Parquet is For example, one job joins the measurements with a table of Internet a compact and column-oriented format, this architecture consumes Autonomous Systems (ASes) and then aggregates the performance drastically fewer resources than having every job read directly from by AS over time to identify poorly performing ASes. When such Kafka, and simultaneously places less load on the Kafka brokers. an AS is identified, the streaming job triggers an alert, and IT staff Overall, streaming jobs’ latencies range from seconds to minutes, can contact the AS in question to remediate the issue. and users can also query the Parquet table interactively in seconds. 8.4 Cloud Monitoring at Databricks 9 Performance Evaluation At Databricks, we have been using Apache Spark since the start of In this section, we measure the performance of Structured Stream- the company to monitor our own cloud service, understand work- ing using controlled benchmarks. We study performance vs. other load statistics, trigger alerts, and let our engineers interactively systems on the Yahoo! Streaming Benchmark [14], scalability, and debug issues. The monitoring pipeline produces dozens of interac- the throughput-latency tradeoff with continuous processing. tive dashboards as well as structured Parquet tables for ad-hoc SQL queries. These dashboards also play a key role for business users to 9.1 Performance vs. Other Streaming Systems understand which customers have increasing or decreasing usage, To evaluate performance compared to other streaming engines, we prioritize feature development, and proactively identify customers used the Yahoo! Streaming Benchmark [14], a widely used workload that are experiencing problems. that has also been evaluated in other open source systems. This We built at least three versions of a monitoring pipeline using benchmark requires systems to read ad click events, join them a combination of batch and streaming APIs starting four years against a static table of ad campaigns by campaign ID, and output ago, and in all the cases, we found that the major challenges were counts by campaign on 10-second event-time windows. operational. Despite our best efforts, pipelines could be brittle, expe- We compared Kafka Streams 0.10.2, Apache Flink 1.2.1 and Spark riencing frequent failures when aspects of our input data changed 2.3.0 on a cluster with five c3.2xlarge Amazon EC2 workers (each (e.g., new schemas or reading from more locations than before), and with 8 virtual cores and 15 GB RAM) and one master. For Flink, upgrading them was a daunting exercise. Worse yet, failures and up- we used the optimized version of the benchmark published by grades often resulted in missing data, so we had to manually go back dataArtisans for a similar cluster [22]. Like in that benchmark, the and re-run jobs to reconstruct the missing data. Testing pipelines systems read data from a Kafka cluster running on the workers with was also challenging due to their reliance on multiple distinct Spark 40 partitions (one per core), and write results to Kafka. The original jobs and storage systems. Our experience with Structured Stream- Yahoo! benchmark used Redis to hold the static table for joining ing shows that it successfully addresses many of these challenges. ad campaigns, but we found that Redis could be a bottleneck, so Not only we were able to reimplement our pipelines in weeks, we replaced it with a table in each system (a KTable in Kafka, a but the management overhead decreased drastically. Restartability DataFrame in Spark, and an in-memory hash map in Flink). coupled with adaptive batching, transactional sources/sinks and Figure 6a shows each system’s maximum stable throughput, i.e., well-defined consistency semantics have enabled simpler fault re- the throughput it can process before a backlog begins to form. We covery, upgrades, and rollbacks to repair old results. Moreover, we see that streaming system performance can vary significantly. Kafka can test the same code in batch mode on data samples or use many Streams implements a simple message-passing model through the of the same functions in interactive queries. Kafka message bus, but only attains 700,000 records/second on our Our pipelines with Structured Streaming also regularly combine 40-core cluster. Apache Flink reaches 33 million records/s. Finally, its batch and streaming capabilities. For example, the pipeline to Structured Streaming reaches 65 million records/s, nearly 2× the monitor streaming jobs starts with an ETL job that reads JSON throughput of Flink. This particular Structured Streaming query is

12 . 1000 From an API standpoint, the closest work is incremental query Latency (ms) systems [11, 24, 29, 38], including recent distributed systems such as 100 Stateful Bulk Processing [25] and Naiad [26]. Structured Streaming’s 10 API is an extension of Spark SQL [8], including its declarative DataFrame interface for programmatic construction of relational 1 queries. Apache Flink also recently added a table API (currently in 0 200000 400000 600000 800000 1000000 beta) for defining relational queries that can map to either streaming Input Rate (records/s) or batch execution [19], but this API lacks some of the features of Structured Streaming, such as custom stateful operators (§4.3.2). Figure 7: Latency of continuous processing vs. input rate. Other recent streaming systems have language-integrated APIs Dashed line shows max throughput in microbatch mode. that operate at a lower, more “imperative" level. In particular, Spark Streaming [37], Google Dataflow [2] and Flink’s DataStream API [18] implemented using just DataFrame operations with no UDF code. provide various functional operators but require users to choose the The performance thus comes solely from Spark SQL’s built in exe- right DAG of operators to implement a particular incrementaliza- cution optimizations, including storing data in a compact binary tion strategy (e.g., when to pass on deltas versus complete results); format and runtime code generation. As pointed out by the authors essentially, these are equivalent to writing a physical execution of Trill [12] and others, execution optimizations can make a large plan. Structured Streaming’s API is simpler for users who are not difference in streaming workloads, and many systems based on experts on incrementalization. Structured Streaming adopts the per-record operations do not maximize performance. definitions of event time, processing time, watermarks and triggers 9.2 Scalability from Dataflow but incorporates them in an incremental model. For execution, Structured Streaming uses concepts similar to dis- Figure 6b shows how Structured Streaming’s performance scales for cretized streams for microbatch mode [37] and traditional streaming the Yahoo! benchmark as we vary the size of our cluster. We used 1, engines for continuous processing mode [1, 13, 21]. It also builds 5, 10 and 20 c3.2xlarge Amazon EC2 workers (with 8 virtual cores on an analytical engine for performance like Trill [12]. The most and 15 GB RAM each) and the same experimental setup as in §9.1, unique contribution here is the integration of batch and stream- including one Kafka partition per core. We see that throughput ing queries to enable sophisticated end-to-end applications. As scales close to linearly, from 11.5 million records/s on 1 node to 225 described in §8, Structured Streaming users can easily write ap- million records/s on 20 nodes (i.e., 160 cores). plications that combine batch, interactive and stream processing 9.3 Continuous Processing using the same code (e.g., security log analysis). In addition, they We benchmarked Structurd Streaming’s continuous processing leverage powerful operational features such as run-once triggers mode on a 4-core server to show the latency-throughput trade- (running a streaming application “discontinuously" as batch jobs to offs it can achieve. (Because partitions run independently in this retain its transactional features but lower costs), code updates, and mode, we expect the latency to stay the same as more nodes are batch processing to handle backlogs or code rollbacks (§7). added.) Figure 7 shows the results for a map job reading from Kafka, with the dashed line showing the maximum throughput achiev- 11 Conclusion able by microbatch mode. We see that continuous mode is able to Stream processing is a powerful tool, but streaming systems are still achieve much lower latency without a large drop in throughput difficult to use, operate and integrate into larger applications. We (e.g., less than 10 ms latency at half the maximum throughput of mi- designed Structured Streaming to simplify all three of these tasks crobatching). Its maximum stable throughput is also slightly higher while integrating with the rest of Apache Spark. Unlike many other because microbatch mode incurs latency due to task scheduling. open source streaming engines, Structured Streaming purposefully 10 Related Work adopts a very high-level API: incrementalizing an existing Spark SQL or DataFrame query. This makes it accessible to a wide range Structured Streaming builds on many existing systems for stream of users. Although Structured Streaming’s API is more declarative processing and big data analytics, including Spark SQL’s DataFrame and constrained, we found that works well for a diverse range of API [8], Spark Streaming [37], Dataflow [2], incremental query applications, including those that require custom logic for state- systems [11, 24, 29, 38] and distributed stream processing [21]. At ful processing. Beyond this focus on a high-level API, Structured a high level, the main contributions of this work are: Streaming also includes several powerful operational features and • An account of real-world user challenges with streaming sys- achieves high performance using the Spark SQL engine. Experi- tems, including operational challenges that are not always dis- ence across hundreds of customer use cases shows that users can cussed in the research literature (§2). leverage the system to build sophisticated business applications. • A simple, declarative programming model that incrementalizes 12 Acknowledgements a widely used batch API (Spark DataFrames/SQL) to provide similar capabilities to Dataflow [2] and other streaming systems. We would like to thank the diverse Apache Spark developer commu- • An execution engine providing high throughput, fault tolerance, nity that has contributed to Structured Streaming, Spark Streaming and rich operational features that combines with the rest of and Spark SQL over the years. We also thank the SIGMOD reviewers Apache Spark to let users easily build end-to-end applications. for their detailed feedback on the paper.