PingCAP-Infra-Meetup-99-xupeng-WiredTiger: Exploring the Implementation

1. Exploring the WiredTiger Implementation. Presented by Xu Peng (许鹏)

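2. Engine characteristics

- Minimize contention between threads
  - Lock-free algorithms such as hazard pointers
  - Fewer mutually exclusive operations during concurrent access
- Make full use of the hardware
  - Multiple cores
  - Large memory
- Caching and file compression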

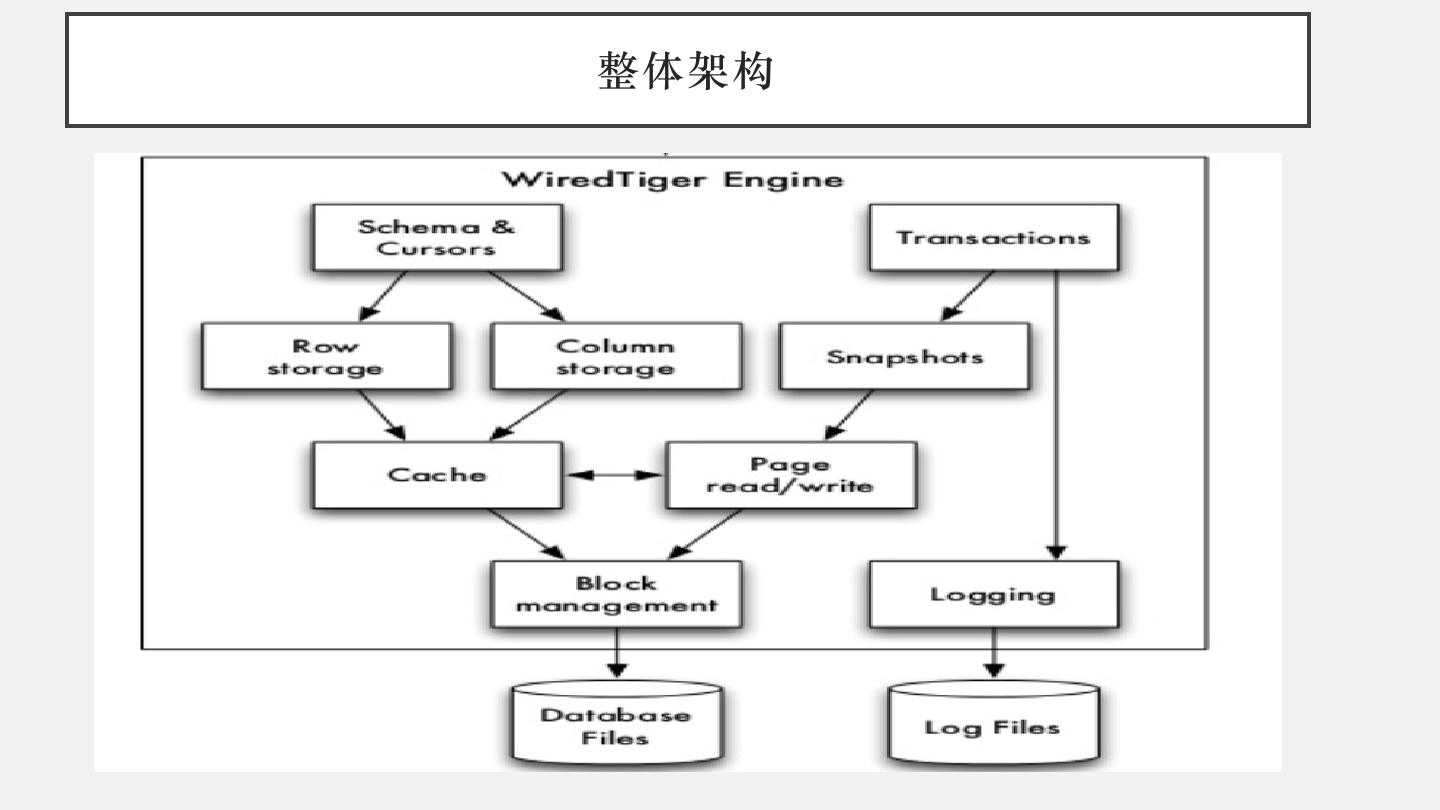

3. Overall architecture

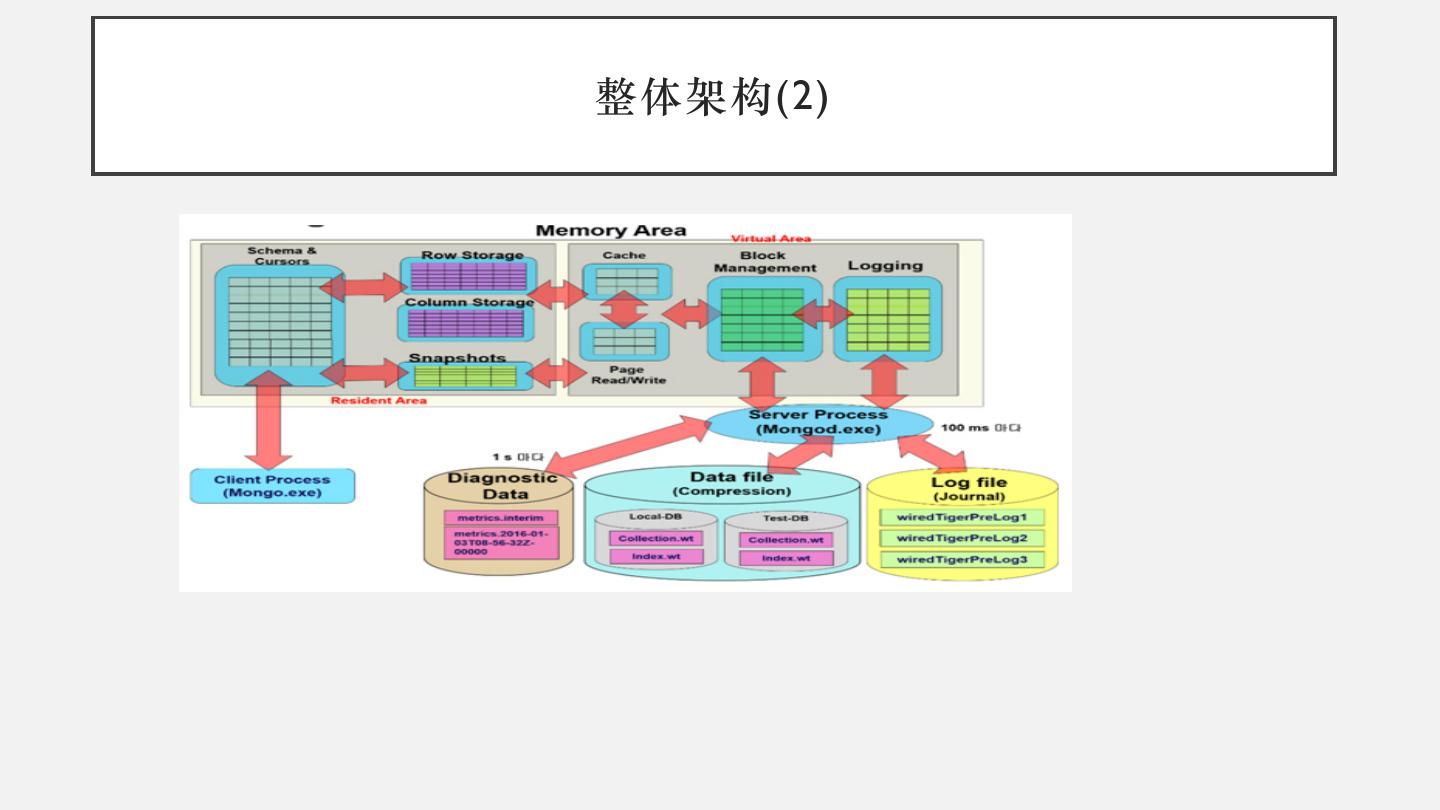

4. Overall architecture (2)

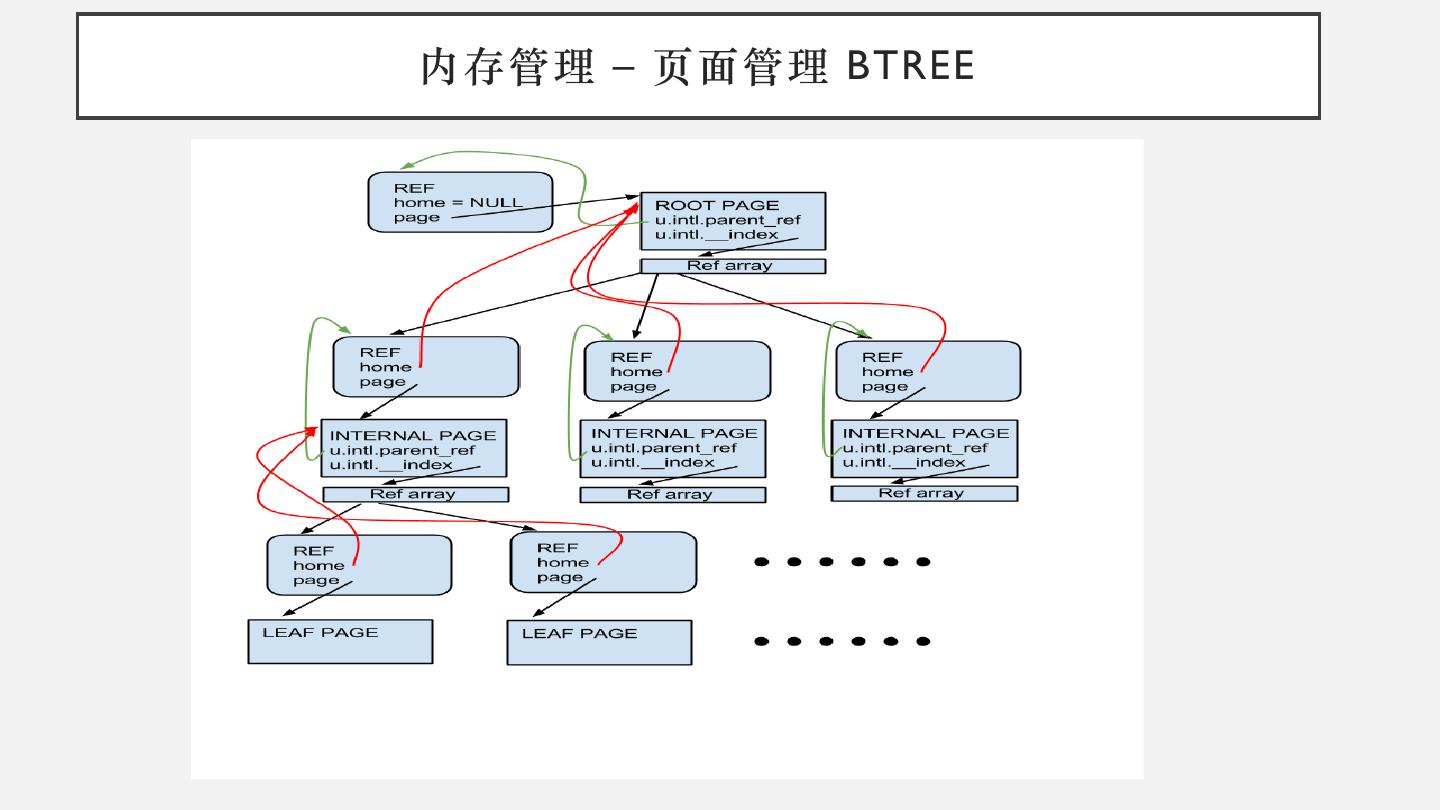

5. Memory management: page management with a B-tree

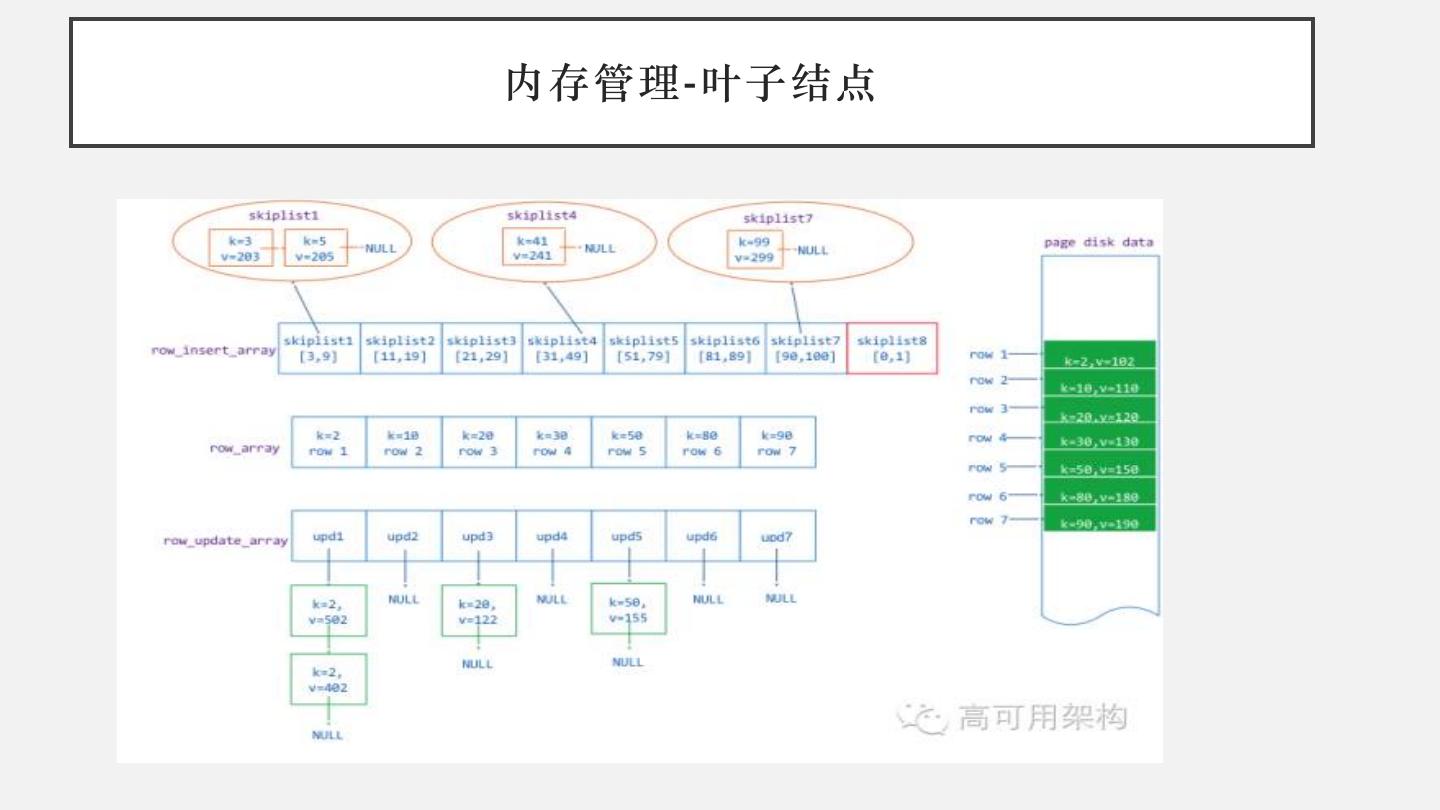

6. Memory management: leaf nodes

7. Initial write: data not yet flushed to disk

GDB commands:

    p *((WT_CURSOR_BTREE*)cursor).btree.root.page.u.intl.__index.index[0].page
    p ((WT_CURSOR_BTREE*)cursor).btree.root.page.u.intl.__index.index[0].page.modify.u2

Output of the first command (the page itself):

    $97 = {
      u = {
        intl = {
          parent_ref = 0x0,
          split_gen = 0,
          __index = 0x0
        },
        row = 0x0,
        fix_bitf = 0x0,
        col_var = {
          col_var = 0x0,
          repeats = 0x0
        }
      },
      entries = 0,
      type = 7 '\a',
      flags_atomic = 0 '\000',
      unused = "\000",
      read_gen = 201,
      memory_footprint = 746,
      dsk = 0x0,
      modify = 0x6933a0,
      cache_create_gen = 1,
      evict_pass_gen = 0
    }

Output of the second command (modify.u2):

    $99 = {
      intl = {
        root_split = 0x693080
      },
      column_leaf = {
        append = 0x693080,
        update = 0x0,
        split_recno = 0
      },
      row_leaf = {
        insert = 0x693080,
        update = 0x0
      }
    }

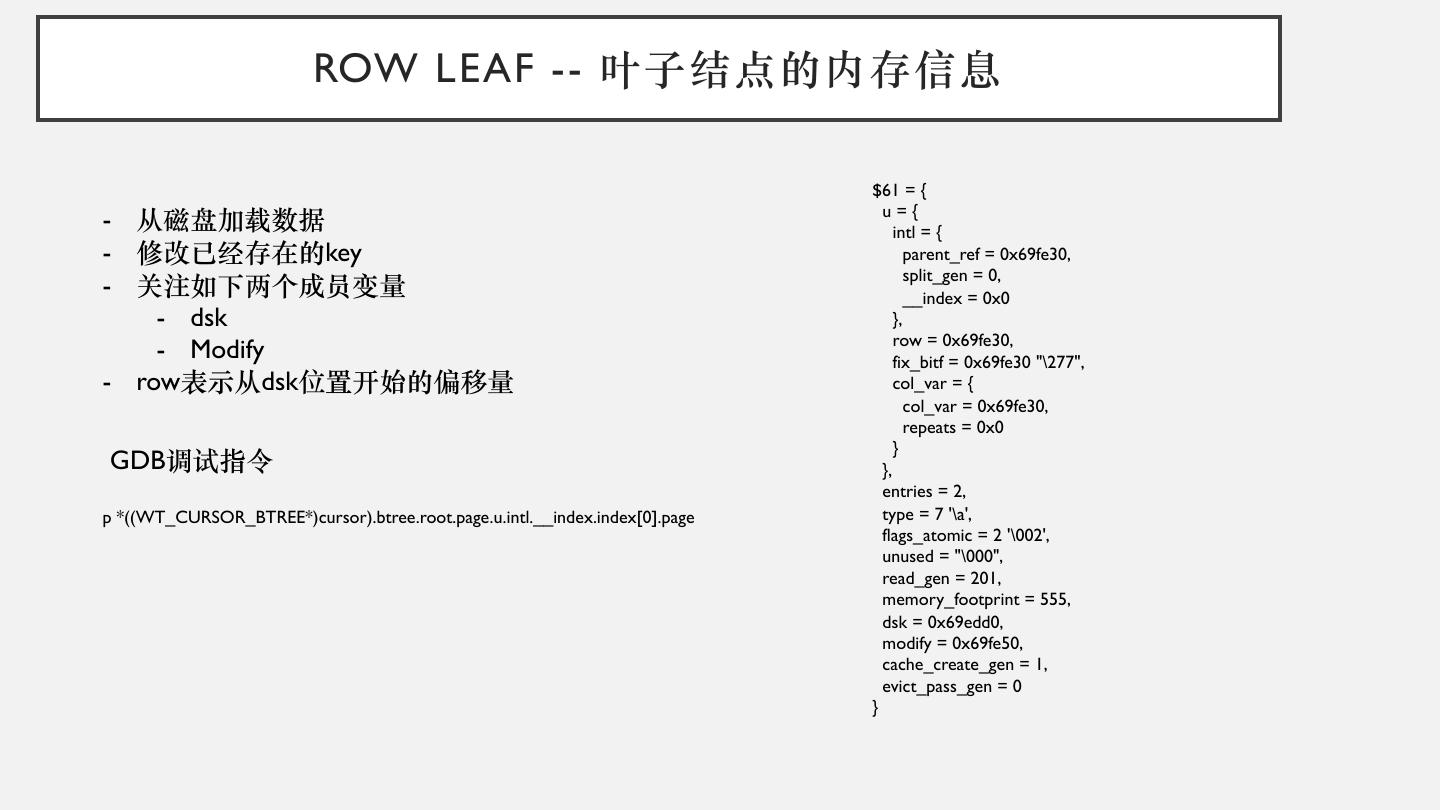

8. ROW LEAF: in-memory information of a leaf node

- Load data from disk
- Modify an existing key
- Pay attention to the following two member variables:
  - dsk
  - modify
- row gives the offset counted from the dsk position

GDB debugging command:

    p *((WT_CURSOR_BTREE*)cursor).btree.root.page.u.intl.__index.index[0].page

Output:

    $61 = {
      u = {
        intl = {
          parent_ref = 0x69fe30,
          split_gen = 0,
          __index = 0x0
        },
        row = 0x69fe30,
        fix_bitf = 0x69fe30 "\277",
        col_var = {
          col_var = 0x69fe30,
          repeats = 0x0
        }
      },
      entries = 2,
      type = 7 '\a',
      flags_atomic = 2 '\002',
      unused = "\000",
      read_gen = 201,
      memory_footprint = 555,
      dsk = 0x69edd0,
      modify = 0x69fe50,
      cache_create_gen = 1,
      evict_pass_gen = 0
    }

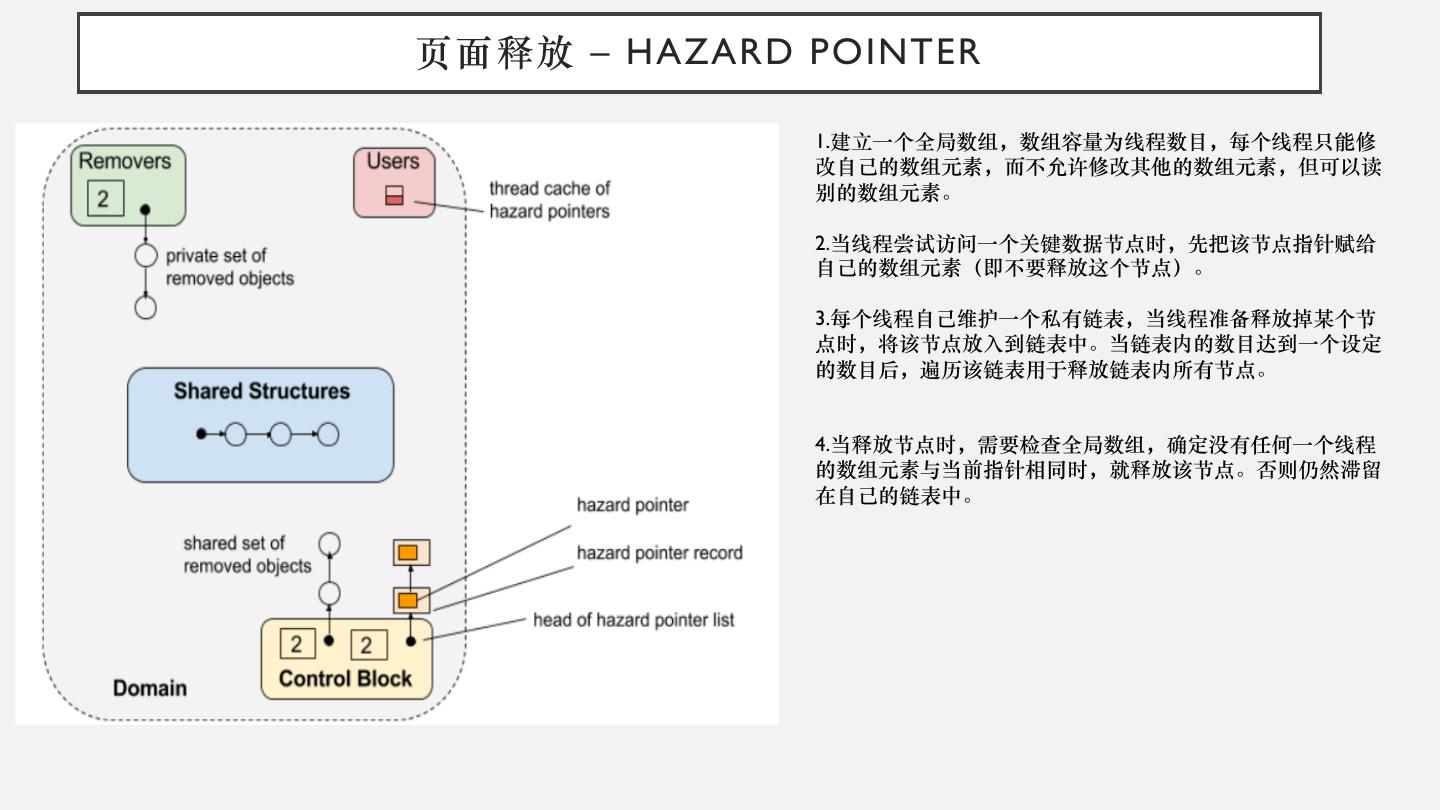

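9. Page release: HAZARD POINTER

1. Create a global array whose capacity equals the number of threads. Each thread may modify only its own array element, never another thread's, but it may read the other elements.
2. When a thread is about to access a critical data node, it first stores the node's pointer in its own array element (meaning "do not free this node").
3. Each thread maintains a private list. When a thread wants to free a node, it puts the node on this list. Once the list reaches a configured length, the thread traverses it to free the nodes on it.
4. Before freeing a node, the thread checks the global array. The node is freed only if no thread's array element equals the node's pointer; otherwise it stays on the thread's list.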
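The four steps above can be sketched in C11 roughly as follows. This is a minimal illustration under stated assumptions, not WiredTiger's implementation: `MAX_THREADS`, `RETIRE_LIMIT`, `retire_list`, and all `hp_*` names are hypothetical, and the re-check loop in `hp_protect` is a common refinement that the slide does not mention.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdlib.h>

#define MAX_THREADS 4                           /* assumed thread count */
#define RETIRE_LIMIT 2                          /* assumed scan threshold */
#define RETIRE_CAP (RETIRE_LIMIT + MAX_THREADS) /* room for protected leftovers */

/* Step 1: one global slot per thread; a thread writes only its own slot. */
static _Atomic(void *) hazard[MAX_THREADS];

/* Step 3: per-thread private list of nodes waiting to be freed. */
typedef struct {
    void *nodes[RETIRE_CAP];
    size_t count;
} retire_list;

/* Step 2: publish the pointer before using it. The loop re-reads the
 * source so a pointer swapped out in between is not used (assumption:
 * this re-check is not on the slide). */
void *hp_protect(int tid, _Atomic(void *) *src) {
    void *p;
    do {
        p = atomic_load(src);
        atomic_store(&hazard[tid], p);
    } while (p != atomic_load(src));
    return p;
}

/* Drop this thread's protection once it is done with the node. */
void hp_clear(int tid) { atomic_store(&hazard[tid], NULL); }

/* Step 4: free nodes that no thread has published; keep the others.
 * Returns the number of nodes freed. */
size_t hp_scan(retire_list *rl) {
    size_t kept = 0, freed = 0;
    for (size_t i = 0; i < rl->count; i++) {
        int in_use = 0;
        for (int t = 0; t < MAX_THREADS; t++)
            if (atomic_load(&hazard[t]) == rl->nodes[i])
                in_use = 1;
        if (in_use) {
            rl->nodes[kept++] = rl->nodes[i];
        } else {
            free(rl->nodes[i]);
            freed++;
        }
    }
    rl->count = kept;
    return freed;
}

/* Step 3: retire a node; scan once the list reaches the threshold.
 * Returns the number of nodes freed by this call. */
size_t hp_retire(retire_list *rl, void *node) {
    assert(rl->count < RETIRE_CAP);
    rl->nodes[rl->count++] = node;
    return rl->count >= RETIRE_LIMIT ? hp_scan(rl) : 0;
}
```

A reader thread would call `hp_protect` before dereferencing a shared node and `hp_clear` afterwards, while the thread that unlinks a node calls `hp_retire` instead of `free`.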

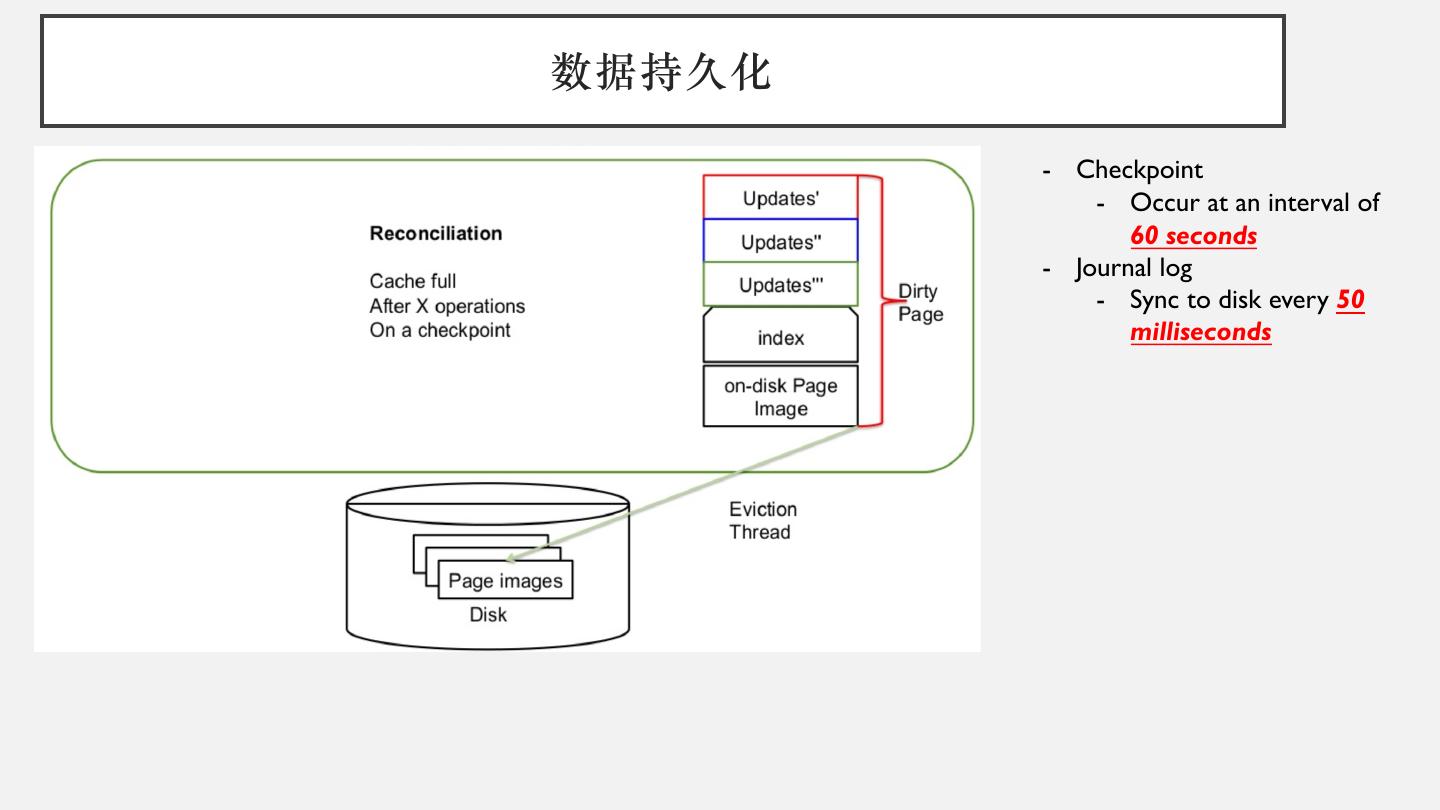

10. Data persistence

- Checkpoint
  - Occurs at an interval of 60 seconds
- Journal log
  - Synced to disk every 50 milliseconds

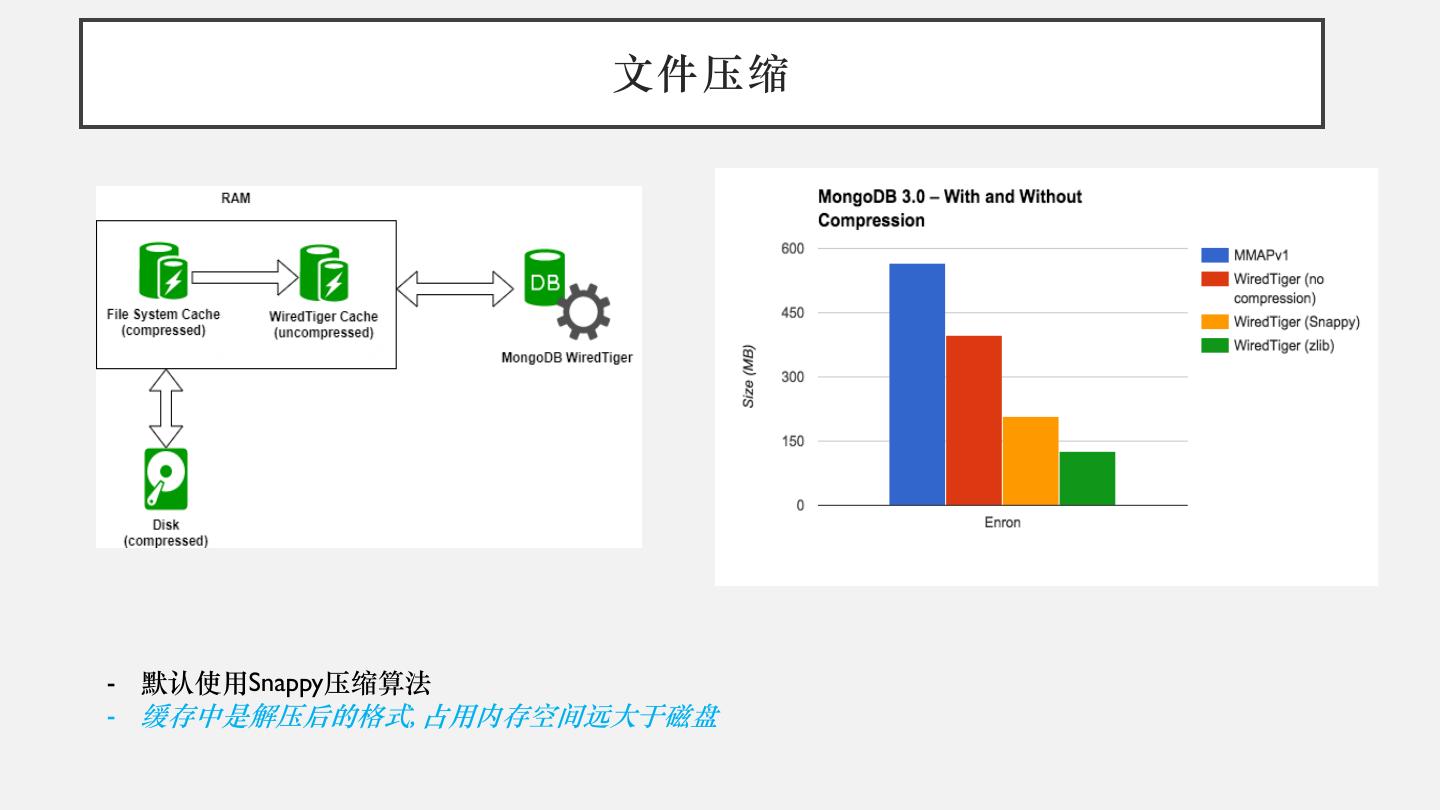

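11. File compression

- Snappy is the default compression algorithm
- Data in the cache is held in uncompressed form, so it takes far more space in memory than on disk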

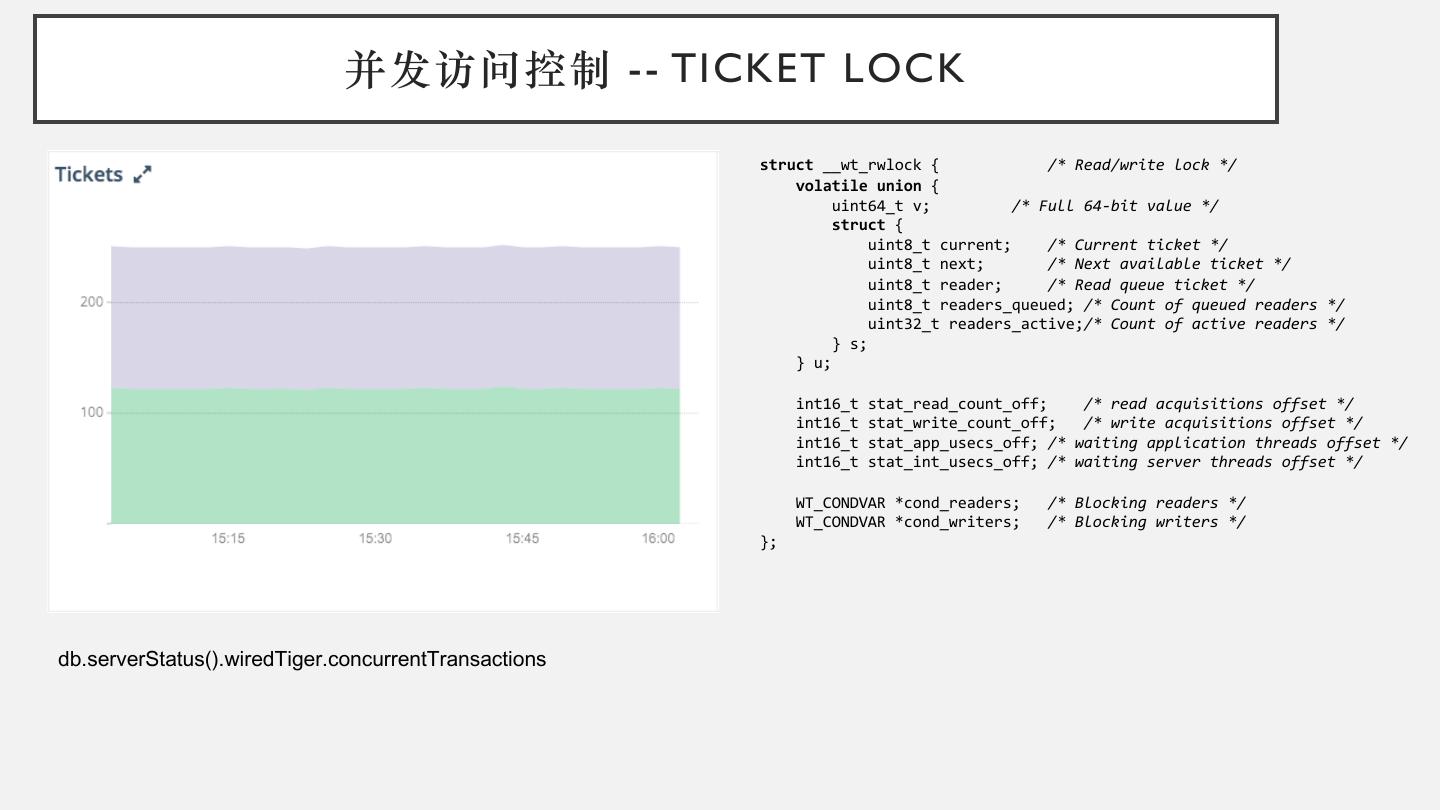

12. Concurrency control: TICKET LOCK

    struct __wt_rwlock {                    /* Read/write lock */
        volatile union {
            uint64_t v;                     /* Full 64-bit value */
            struct {
                uint8_t current;            /* Current ticket */
                uint8_t next;               /* Next available ticket */
                uint8_t reader;             /* Read queue ticket */
                uint8_t readers_queued;     /* Count of queued readers */
                uint32_t readers_active;    /* Count of active readers */
            } s;
        } u;

        int16_t stat_read_count_off;        /* read acquisitions offset */
        int16_t stat_write_count_off;       /* write acquisitions offset */
        int16_t stat_app_usecs_off;         /* waiting application threads offset */
        int16_t stat_int_usecs_off;         /* waiting server threads offset */

        WT_CONDVAR *cond_readers;           /* Blocking readers */
        WT_CONDVAR *cond_writers;           /* Blocking writers */
    };

Related mongo shell command:

    db.serverStatus().wiredTiger.concurrentTransactions
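The `current` and `next` fields in the struct above follow the usual ticket-lock idea: each arrival takes the next ticket and waits until that ticket is being served. The following C11 sketch shows only that core idea as a plain mutual-exclusion lock. It is not WiredTiger's reader/writer implementation: the `ticket_lock` type and its functions are hypothetical names, and it spins instead of blocking on condition variables.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical minimal ticket lock; not WiredTiger's __wt_rwlock. */
typedef struct {
    atomic_uint next;     /* next ticket to hand out */
    atomic_uint current;  /* ticket currently being served */
} ticket_lock;

void ticket_lock_init(ticket_lock *l) {
    atomic_init(&l->next, 0);
    atomic_init(&l->current, 0);
}

/* Take a ticket, then wait until it is being served.
 * Returns the ticket number that was taken. */
unsigned ticket_lock_acquire(ticket_lock *l) {
    unsigned mine = atomic_fetch_add(&l->next, 1);
    while (atomic_load(&l->current) != mine)
        ;  /* busy-wait; a production lock would back off or block here */
    return mine;
}

/* Serve the next ticket, which admits waiters in arrival (FIFO) order. */
void ticket_lock_release(ticket_lock *l) {
    /* Sanity check: the lock is held, so at least one ticket is outstanding. */
    assert(atomic_load(&l->current) != atomic_load(&l->next));
    atomic_fetch_add(&l->current, 1);
}
```

Because tickets are served strictly in the order they were taken, no waiter can be overtaken, which is the fairness property that distinguishes a ticket lock from a simple test-and-set spinlock.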

13. Performance monitoring metrics

- db.serverStatus().wiredTiger
- db.coll.stats()
- db.currentOp()

14. Parameter settings

    db.createCollection(
        "users",
        {
            storageEngine: {
                wiredTiger: {
                    configString: "leaf_page_max=64kb, leaf_value_max=64MB"
                }
            }
        }
    )

15. DEMO APPLICATION

    #include "wiredtiger.h"
    #include <stdio.h>

    int main(int argc, char** argv) {
        /* The home directory must exist before wiredtiger_open is called. */
        const char* home = "/tmp/wt";
        WT_CONNECTION *conn;
        WT_SESSION *session;
        WT_CURSOR *cursor;
        const char* key, *value;
        int ret;

        /* Stop early on failure; conn would otherwise be used uninitialized. */
        if ((ret = wiredtiger_open(home, NULL, "create", &conn)) != 0) {
            fprintf(stderr, "wiredtiger_open: %s\n", wiredtiger_strerror(ret));
            return 1;
        }
        conn->open_session(conn, NULL, NULL, &session);

        // create a table with string keys and string values
        session->create(session, "table:demo", "key_format=S, value_format=S");
        // open the table
        session->open_cursor(session, "table:demo", NULL, NULL, &cursor);

        cursor->set_key(cursor, "name");
        cursor->set_value(cursor, "peng");
        cursor->insert(cursor);

        cursor->set_key(cursor, "iris");
        cursor->set_value(cursor, "teacher");
        cursor->insert(cursor);

        // rewind the cursor, then scan every record
        cursor->reset(cursor);
        while ((ret = cursor->next(cursor)) == 0) {
            cursor->get_key(cursor, &key);
            cursor->get_value(cursor, &value);
            printf("Got Record: %s, %s\n", key, value);
        }

        // closing the connection also closes its sessions and cursors
        conn->close(conn, NULL);
        return 0;
    }

16. DEMO APPLICATION: compiling and running

Compile and run:

    gcc -g -I. -o /tmp/wt_demo.o -c ~/working/wt_demo.c
    gcc -L .libs/ $PWD/.libs/libwiredtiger-3.1.0.so /tmp/wt_demo.o -o /tmp/wt_demo
    LD_LIBRARY_PATH=$PWD/.libs /tmp/wt_demo

Debug (GDB commands):

    set print pretty
    br wt_demo.c:37
    r
    set scheduler-locking on

17. Q&A