- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

20 Distributed Storage #2

展开查看详情

1 . Today’s Papers EECS 262a • The Google File System. Sanjay Ghemawat, Howard Gobioff, and Shun- Tak Leung, Symposium on Operating Systems Design and Implementation Advanced Topics in Computer Systems (OSDI), 2003 Lecture 23 • Bigtable: a distributed storage system for structured data. Appears in Proceedings of the 7th Conference on USENIX Symposium on Operating GFS/BigTable Systems Design and Implementation (OSDI), 2006 November 1st, 2018 • Thoughts? John Kubiatowicz (with slides from Ion Stoica and Ali Ghodsi) Electrical Engineering and Computer Sciences University of California, Berkeley http://www.eecs.berkeley.edu/~kubitron/cs262 11/01/2018 cs262a-F18 Lecture-20 2 Distributed File Systems before GFS What is Different about GFS environment? • Component failures are norm rather than exception, why? – File system consists of 100s/1,000s commodity storage servers – Comparable number of clients • Much bigger files, how big? – GBs file sizes common – TBs data sizes: hard to manipulate billions of KB size blocks • Really high access rate for files – Streaming access patterns – Apps want to process data in bulk at a high rate • Pros/Cons of these approaches? – Few have stringent response time requirements on individual reads/writes 11/01/2018 cs262a-F18 Lecture-20 3 11/01/2018 cs262a-F18 Lecture-20 4

2 . What is Different about GFS environment? Assumptions and Design Requirements (1/2) • Common mode: Files are mutated by appending new • Should monitor, detect, tolerate, and recover from failures data, and not overwriting – Random writes practically non-existent • Store a modest number of large files (millions of 100s MB – Once written, files are only read sequentially files) – Small files supported, but no need to be efficient • Own both applications and file system can effectively co-designing applications and file system APIs • Workload – Relaxed consistency model – Large sequential reads – Small reads supported, but ok to batch them – Atomic append operation: allow multiple clients to append data to a file with no extra synchronization between them – Large, sequential writes that append data to files – Small random writes supported but no need to be efficient 11/01/2018 cs262a-F18 Lecture-20 5 11/01/2018 cs262a-F18 Lecture-20 6 Assumptions and Design Requirements (2/2) API • Implement well-defined semantics for concurrent appends • Not POSIX, but.. – Producer-consumer model – Support usual ops: create, delete, open, close, read, and write – Support hundreds of producers concurrently appending to a file – Support snapshot and record append operations – Provide atomicity with minimal synchronization overhead • Concurrent Append is correct without additional locking – Support consumers reading the file as it is written • High sustained bandwidth more important than low latency 11/01/2018 cs262a-F18 Lecture-20 7 11/01/2018 cs262a-F18 Lecture-20 8

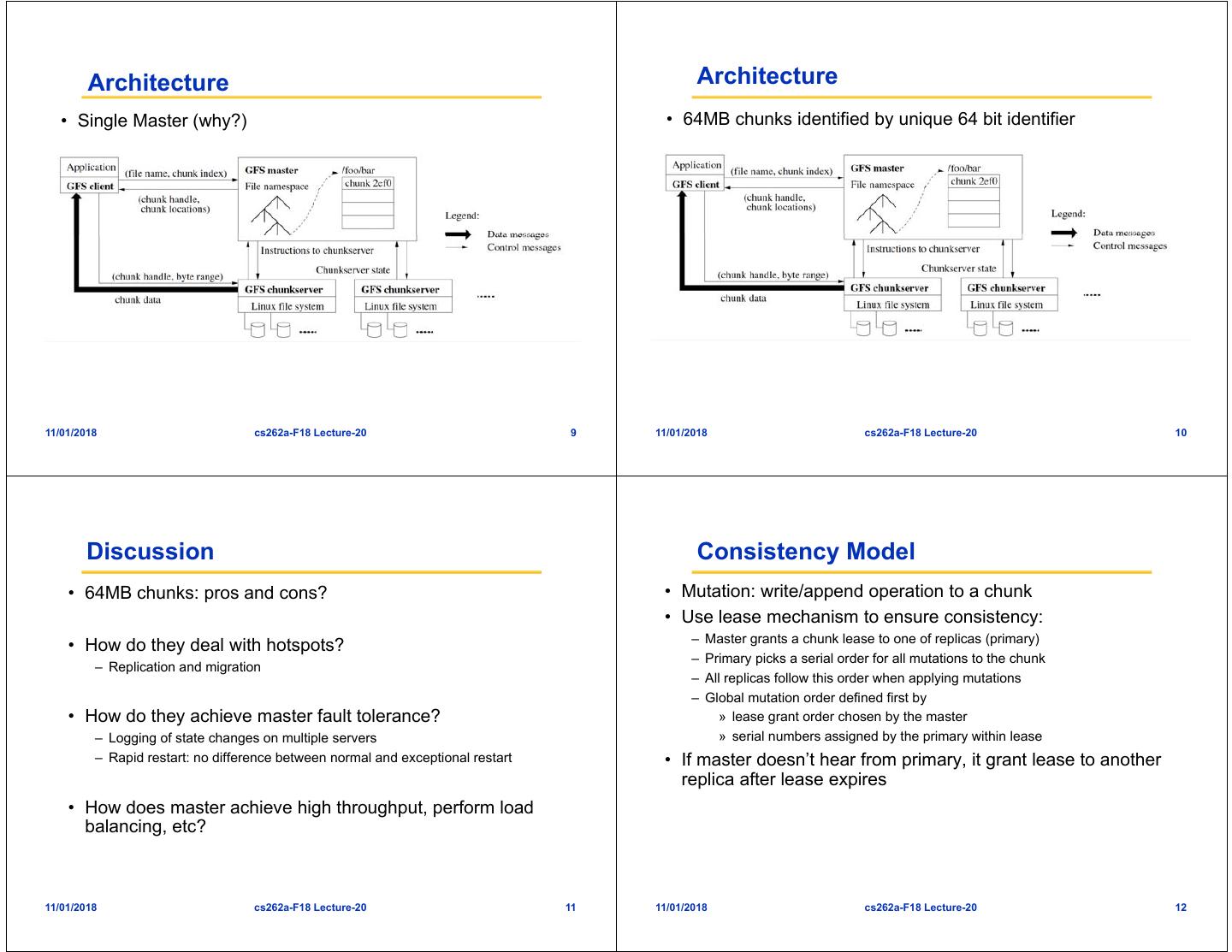

3 . Architecture Architecture • Single Master (why?) • 64MB chunks identified by unique 64 bit identifier 11/01/2018 cs262a-F18 Lecture-20 9 11/01/2018 cs262a-F18 Lecture-20 10 Discussion Consistency Model • 64MB chunks: pros and cons? • Mutation: write/append operation to a chunk • Use lease mechanism to ensure consistency: – Master grants a chunk lease to one of replicas (primary) • How do they deal with hotspots? – Primary picks a serial order for all mutations to the chunk – Replication and migration – All replicas follow this order when applying mutations – Global mutation order defined first by • How do they achieve master fault tolerance? » lease grant order chosen by the master – Logging of state changes on multiple servers » serial numbers assigned by the primary within lease – Rapid restart: no difference between normal and exceptional restart • If master doesn’t hear from primary, it grant lease to another replica after lease expires • How does master achieve high throughput, perform load balancing, etc? 11/01/2018 cs262a-F18 Lecture-20 11 11/01/2018 cs262a-F18 Lecture-20 12

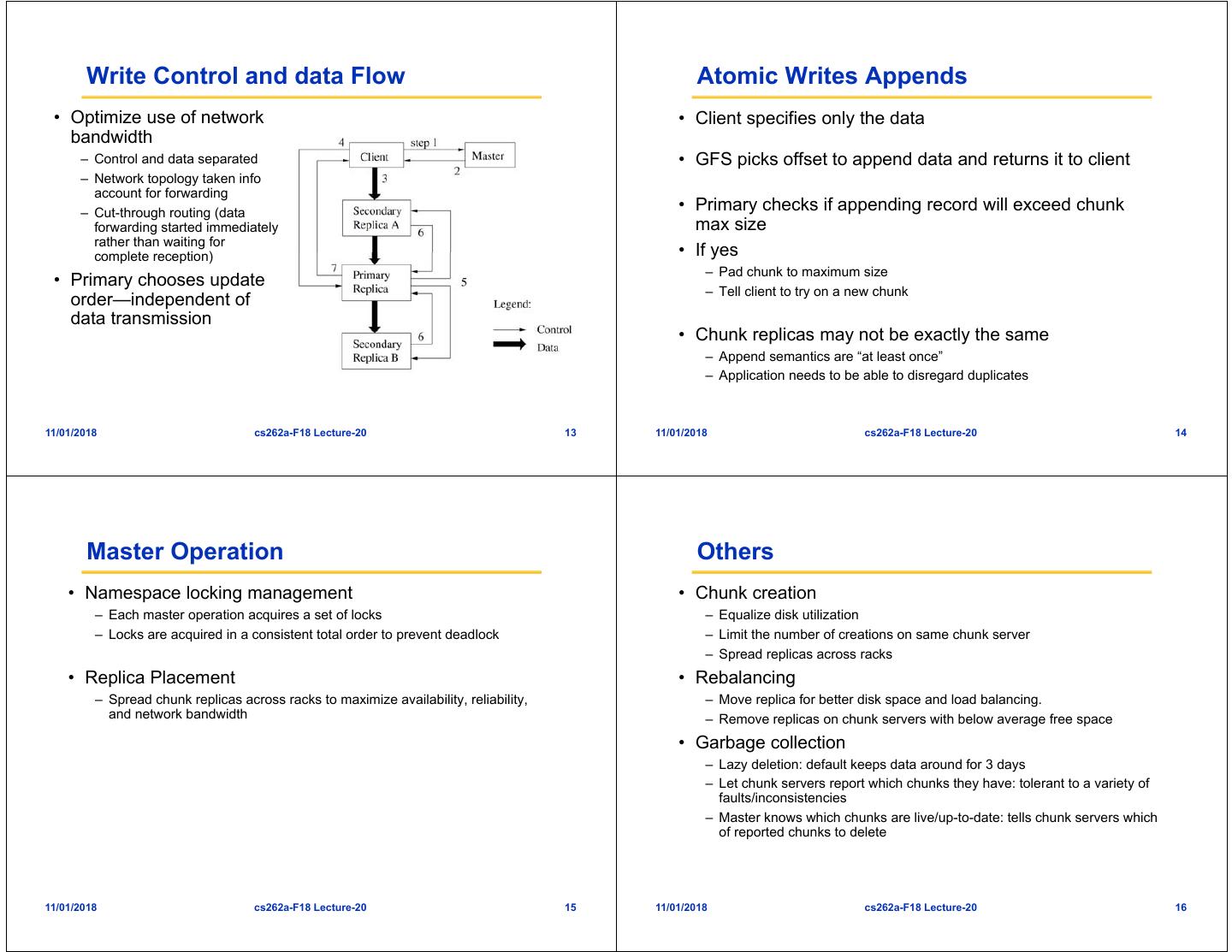

4 . Write Control and data Flow Atomic Writes Appends • Optimize use of network • Client specifies only the data bandwidth – Control and data separated • GFS picks offset to append data and returns it to client – Network topology taken info account for forwarding – Cut-through routing (data • Primary checks if appending record will exceed chunk forwarding started immediately max size rather than waiting for complete reception) • If yes – Pad chunk to maximum size • Primary chooses update – Tell client to try on a new chunk order—independent of data transmission • Chunk replicas may not be exactly the same – Append semantics are “at least once” – Application needs to be able to disregard duplicates 11/01/2018 cs262a-F18 Lecture-20 13 11/01/2018 cs262a-F18 Lecture-20 14 Master Operation Others • Namespace locking management • Chunk creation – Each master operation acquires a set of locks – Equalize disk utilization – Locks are acquired in a consistent total order to prevent deadlock – Limit the number of creations on same chunk server – Spread replicas across racks • Replica Placement • Rebalancing – Spread chunk replicas across racks to maximize availability, reliability, – Move replica for better disk space and load balancing. and network bandwidth – Remove replicas on chunk servers with below average free space • Garbage collection – Lazy deletion: default keeps data around for 3 days – Let chunk servers report which chunks they have: tolerant to a variety of faults/inconsistencies – Master knows which chunks are live/up-to-date: tells chunk servers which of reported chunks to delete 11/01/2018 cs262a-F18 Lecture-20 15 11/01/2018 cs262a-F18 Lecture-20 16

5 . Summary Is this a good paper? • GFS meets Google storage requirements • What were the authors’ goals? – Optimized for given workload • What about the evaluation/metrics? – Simple architecture: highly scalable, fault tolerant • Did they convince you that this was a good system/approach? • Why is this paper so highly cited? • Were there any red-flags? – Changed all DFS assumptions on its head – Thanks for new application assumptions at Google • What mistakes did they make? • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? 11/01/2018 cs262a-F18 Lecture-20 17 11/01/2018 cs262a-F18 Lecture-20 18 BigTable • Distributed storage system for managing structured data • Designed to scale to a very large size – Petabytes of data across thousands of servers • Hugely successful within Google – used for many Google projects – Web indexing, Personalized Search, Google Earth, Google Analytics, Google Finance, … • Highly-available, reliable, flexible, high-performance solution for all of Google’s products BREAK • Offshoots/followons: – Spanner: Time-based consistency – LevelDB: Open source incorporating aspects of Big Table 11/01/2018 cs262a-F18 Lecture-20 19 11/01/2018 cs262a-F18 Lecture-20 20

6 . Motivation What about a Parallel DBMS? • Lots of (semi-)structured data at Google • Data is too large scale! – URLs: » Contents, crawl metadata, links, anchors, pagerank, … – Per-user data: • Using a commercial approach would be too expensive » User preference settings, recent queries/search results, … – Building internally means system can be applied across many projects for low incremental cost – Geographic locations: » Physical entities (shops, restaurants, etc.), roads, satellite image data, user annotations, … • Low-level storage optimizations significantly improve • Big Data scale performance – Billions of URLs, many versions/page (~20K/version) – Difficult to do when running on a DBMS – Hundreds of millions of users, thousands or q/sec – 100TB+ of satellite image data 11/01/2018 cs262a-F18 Lecture-20 21 11/01/2018 cs262a-F18 Lecture-20 22 Goals BigTable • A general-purpose data-center storage system • Distributed multi-level map • Asynchronous processes continuously updating different • Fault-tolerant, persistent pieces of data • Scalable – Access most current data at any time – Thousands of servers – Examine changing data (e.g., multiple web page crawls) – Terabytes of in-memory data • Need to support: – Petabyte of disk-based data – Durability, high availability, and very large scale – Millions of reads/writes per second, efficient scans – Big or little objects • Self-managing – Very high read/write rates (millions of ops per second) – Servers can be added/removed dynamically – Ordered keys and notion of locality – Servers adjust to load imbalance » Efficient scans over all or interesting subsets of data » Efficient joins of large one-to-one and one-to-many datasets 11/01/2018 cs262a-F18 Lecture-20 23 11/01/2018 cs262a-F18 Lecture-20 24

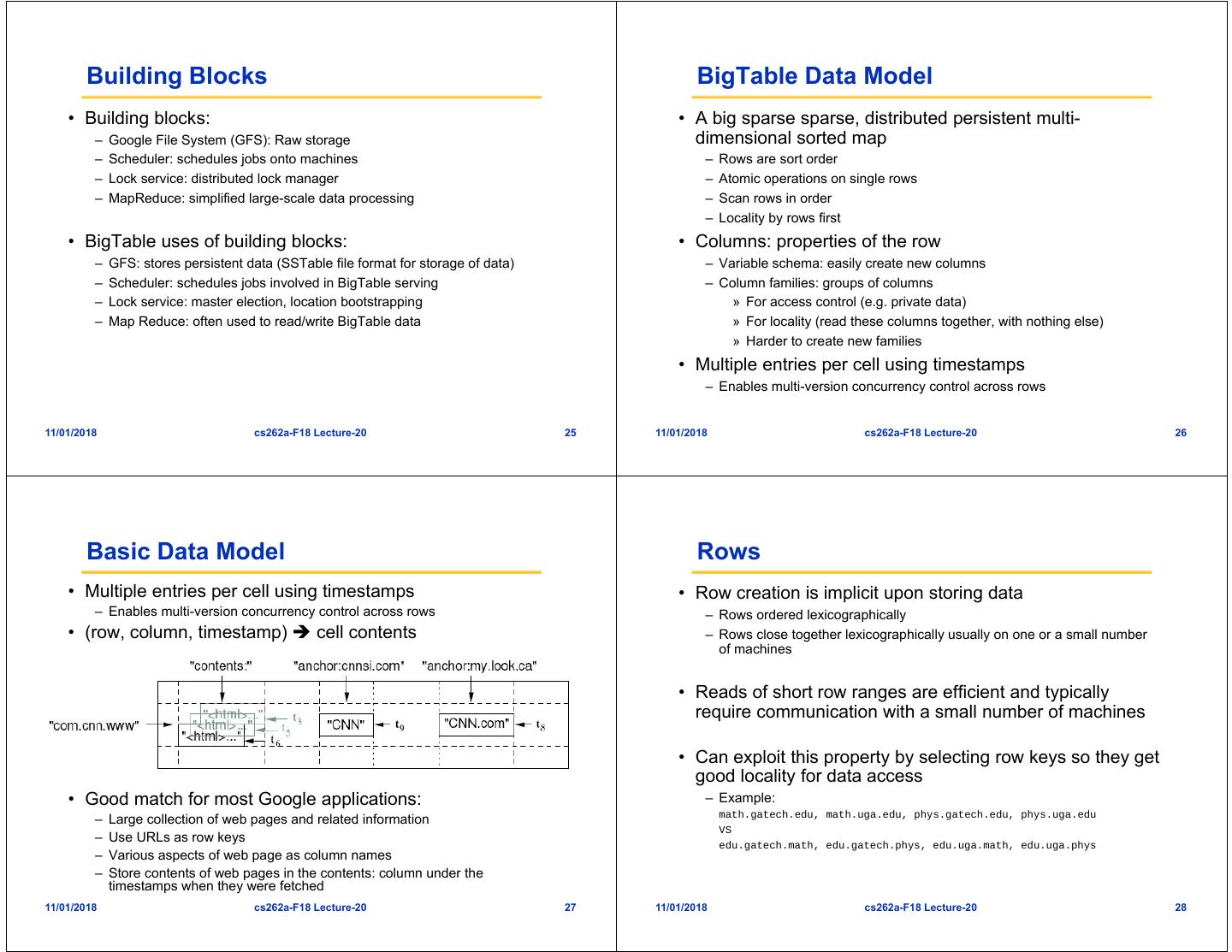

7 . Building Blocks BigTable Data Model • Building blocks: • A big sparse sparse, distributed persistent multi- – Google File System (GFS): Raw storage dimensional sorted map – Scheduler: schedules jobs onto machines – Rows are sort order – Lock service: distributed lock manager – Atomic operations on single rows – MapReduce: simplified large-scale data processing – Scan rows in order – Locality by rows first • BigTable uses of building blocks: • Columns: properties of the row – GFS: stores persistent data (SSTable file format for storage of data) – Variable schema: easily create new columns – Scheduler: schedules jobs involved in BigTable serving – Column families: groups of columns – Lock service: master election, location bootstrapping » For access control (e.g. private data) – Map Reduce: often used to read/write BigTable data » For locality (read these columns together, with nothing else) » Harder to create new families • Multiple entries per cell using timestamps – Enables multi-version concurrency control across rows 11/01/2018 cs262a-F18 Lecture-20 25 11/01/2018 cs262a-F18 Lecture-20 26 Basic Data Model Rows • Multiple entries per cell using timestamps • Row creation is implicit upon storing data – Enables multi-version concurrency control across rows – Rows ordered lexicographically • (row, column, timestamp) cell contents – Rows close together lexicographically usually on one or a small number of machines • Reads of short row ranges are efficient and typically require communication with a small number of machines • Can exploit this property by selecting row keys so they get good locality for data access • Good match for most Google applications: – Example: math.gatech.edu, math.uga.edu, phys.gatech.edu, phys.uga.edu – Large collection of web pages and related information VS – Use URLs as row keys edu.gatech.math, edu.gatech.phys, edu.uga.math, edu.uga.phys – Various aspects of web page as column names – Store contents of web pages in the contents: column under the timestamps when they were fetched 11/01/2018 cs262a-F18 Lecture-20 27 11/01/2018 cs262a-F18 Lecture-20 28

8 . Columns Timestamps • Columns have two-level name structure: • Used to store different versions of data in a cell – family:optional_qualifier – New writes default to current time, but timestamps for writes can also be set explicitly by clients • Column family – Unit of access control • Lookup options: – Has associated type information – “Return most recent K values” – “Return all values in timestamp range (or all values)” • Qualifier gives unbounded columns – Additional levels of indexing, if desired • Column families can be marked w/ attributes: – “Only retain most recent K values in a cell” – “Keep values until they are older than K seconds” 11/01/2018 cs262a-F18 Lecture-20 29 11/01/2018 cs262a-F18 Lecture-20 30 Basic Implementation Basic Implementation • Writes go to log then to in-memory table “memtable” • Reads: maintain in-memory map of keys to {SSTables, (key, value) memtable} – Current version is in exactly one SSTable or memtable • Periodically: move in memory table to disk => SSTable(s) – Reading based on timestamp requires multiple reads – “Minor compaction” – May also have to read many SSTables to get all of the columns » Frees up memory » Reduces recovery time (less log to scan) • Scan = merge-sort like merge of SSTables in order – SSTable = immutable ordered subset of table: range of keys and subset – “Merging compaction” – reduce number of SSTables of their columns – Easy since they are in sorted order » One locality group per SSTable (for columns) – Tablet = all of the SSTables for one key range + the memtable • Compaction » Tablets get split when they get too big – SSTables similar to segments in LFS » SSTables can be shared after the split (immutable) – Need to “clean” old SSTables to reclaim space – Some values may be stale (due to new writes to those keys) » Also to actually delete private data – Clean by merging multiple SSTables into one new one » “Major compaction” => merge all tables 11/01/2018 cs262a-F18 Lecture-20 31 11/01/2018 cs262a-F18 Lecture-20 32

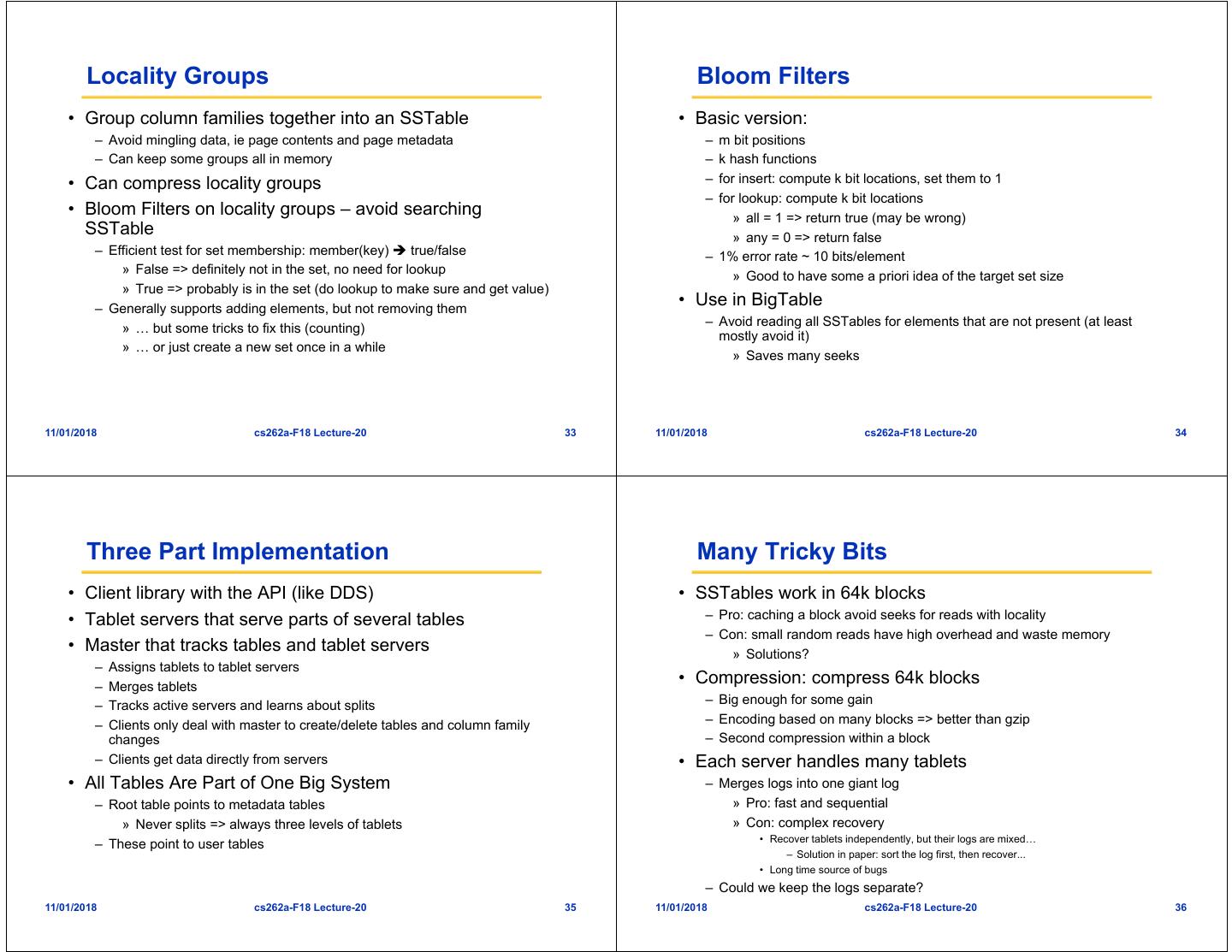

9 . Locality Groups Bloom Filters • Group column families together into an SSTable • Basic version: – Avoid mingling data, ie page contents and page metadata – m bit positions – Can keep some groups all in memory – k hash functions • Can compress locality groups – for insert: compute k bit locations, set them to 1 – for lookup: compute k bit locations • Bloom Filters on locality groups – avoid searching » all = 1 => return true (may be wrong) SSTable » any = 0 => return false – Efficient test for set membership: member(key) true/false – 1% error rate ~ 10 bits/element » False => definitely not in the set, no need for lookup » Good to have some a priori idea of the target set size » True => probably is in the set (do lookup to make sure and get value) – Generally supports adding elements, but not removing them • Use in BigTable » … but some tricks to fix this (counting) – Avoid reading all SSTables for elements that are not present (at least mostly avoid it) » … or just create a new set once in a while » Saves many seeks 11/01/2018 cs262a-F18 Lecture-20 33 11/01/2018 cs262a-F18 Lecture-20 34 Three Part Implementation Many Tricky Bits • Client library with the API (like DDS) • SSTables work in 64k blocks • Tablet servers that serve parts of several tables – Pro: caching a block avoid seeks for reads with locality – Con: small random reads have high overhead and waste memory • Master that tracks tables and tablet servers » Solutions? – Assigns tablets to tablet servers – Merges tablets • Compression: compress 64k blocks – Tracks active servers and learns about splits – Big enough for some gain – Clients only deal with master to create/delete tables and column family – Encoding based on many blocks => better than gzip changes – Second compression within a block – Clients get data directly from servers • Each server handles many tablets • All Tables Are Part of One Big System – Merges logs into one giant log – Root table points to metadata tables » Pro: fast and sequential » Never splits => always three levels of tablets » Con: complex recovery • Recover tablets independently, but their logs are mixed… – These point to user tables – Solution in paper: sort the log first, then recover... • Long time source of bugs – Could we keep the logs separate? 11/01/2018 cs262a-F18 Lecture-20 35 11/01/2018 cs262a-F18 Lecture-20 36

10 . Lessons learned Is this a good paper? • Interesting point – only implement some of the • What were the authors’ goals? requirements, since the last is probably not needed • What about the evaluation/metrics? • Many types of failure possible • Did they convince you that this was a good • Big systems need proper systems-level monitoring system/approach? – Detailed RPC trace of specific requests • Were there any red-flags? – Active monitoring of all servers • What mistakes did they make? • Value simple designs • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? 11/01/2018 cs262a-F18 Lecture-20 37 11/01/2018 cs262a-F18 Lecture-20 38