- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

MySQL High Availability Solutions

每个人都希望拥有一个高可用性(HA)数据库。但是你选择哪种解决方案,你应该考虑什么?

在本次培训课程中,我们将研究一系列现有的和新的HA解决方案,包括

1。基于MySQL复制的HA解决方案(mha、Orchestrator)和路由选项(proxysql、ha proxy)

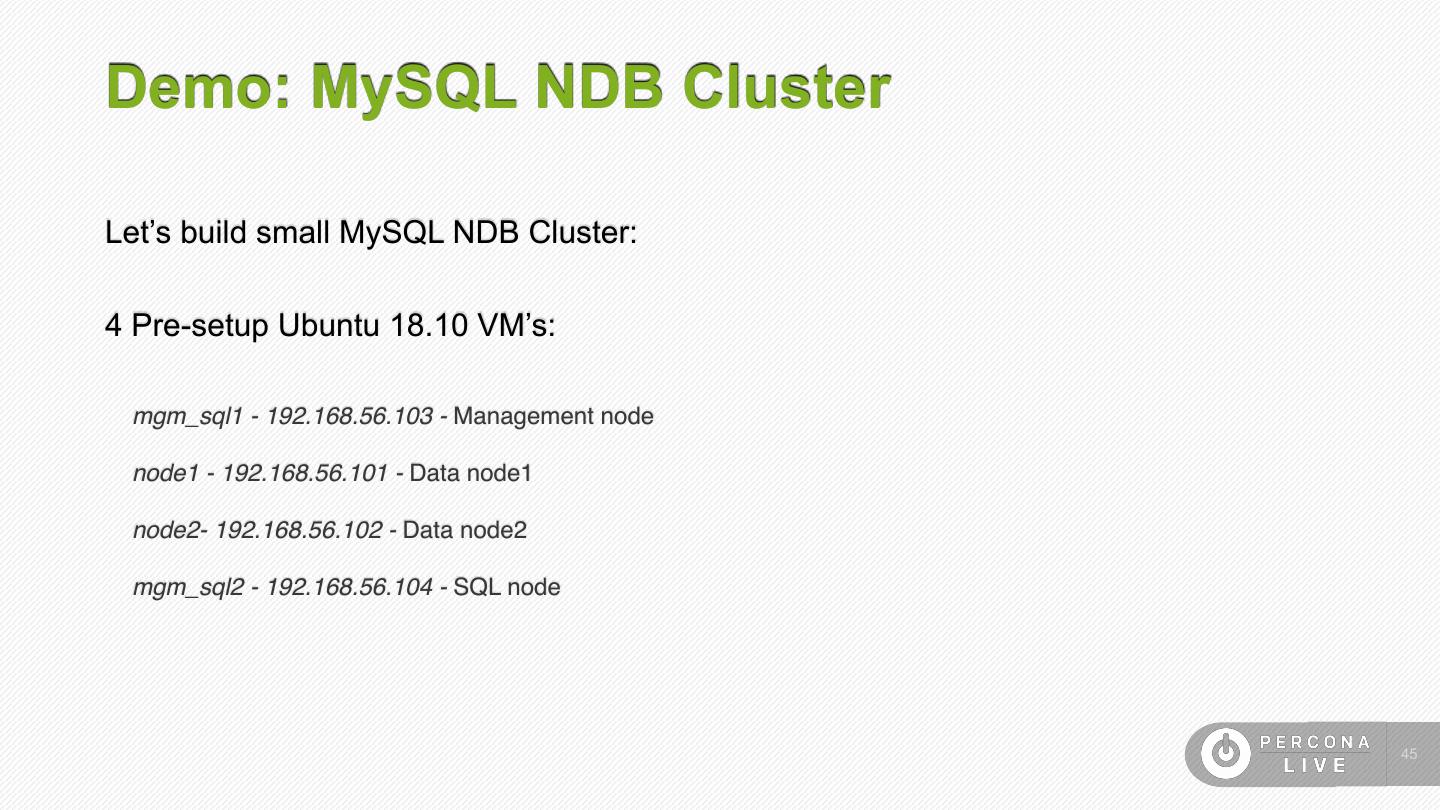

2。集群解决方案(percona xtradb集群、mysql ndb集群)

3。基于云的解决方案(AWS RDS/Aurora、Google Cloud SQL)

4。基于容器的解决方案(kubernetes/openshift)

我们将讨论每个解决方案的优缺点,并展示一些基本演示。

展开查看详情

1 .MySQL High Availability Solutions Alex Poritskiy Percona

2 .The Five 9s of Availability Clustering & Geographical disasters Redundancy power failures Clustering network failures Technologies hardware failures Replication software failures Technologies maintenance operations Well-Managed hardware upgrades software upgrades Unmanaged 35 days 4 days 8 hours 50 mins 5 mins Small ISPs & Telco Data Banking Business Mainstream Military Centers Medical Business Defense 9 9 9 9 9 % !2

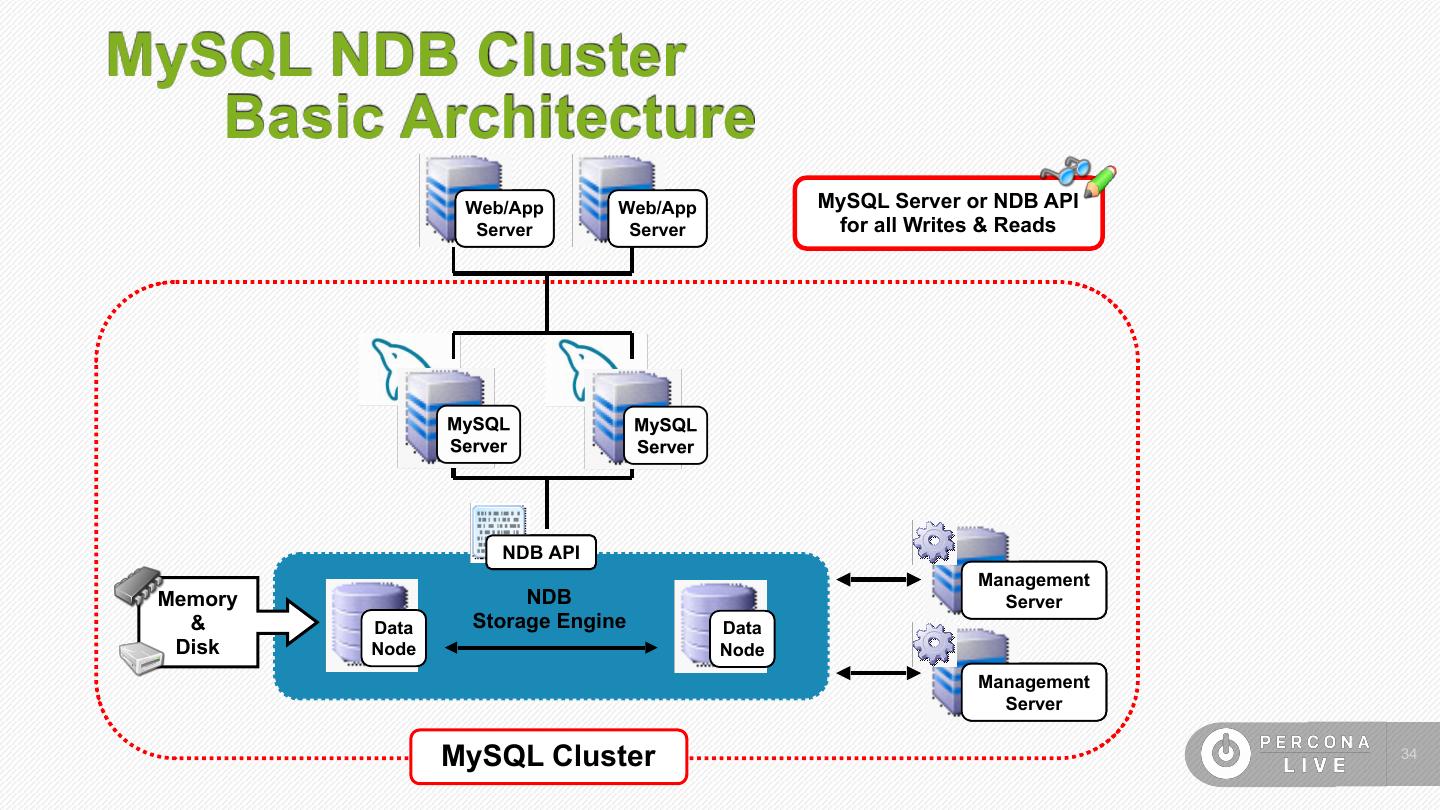

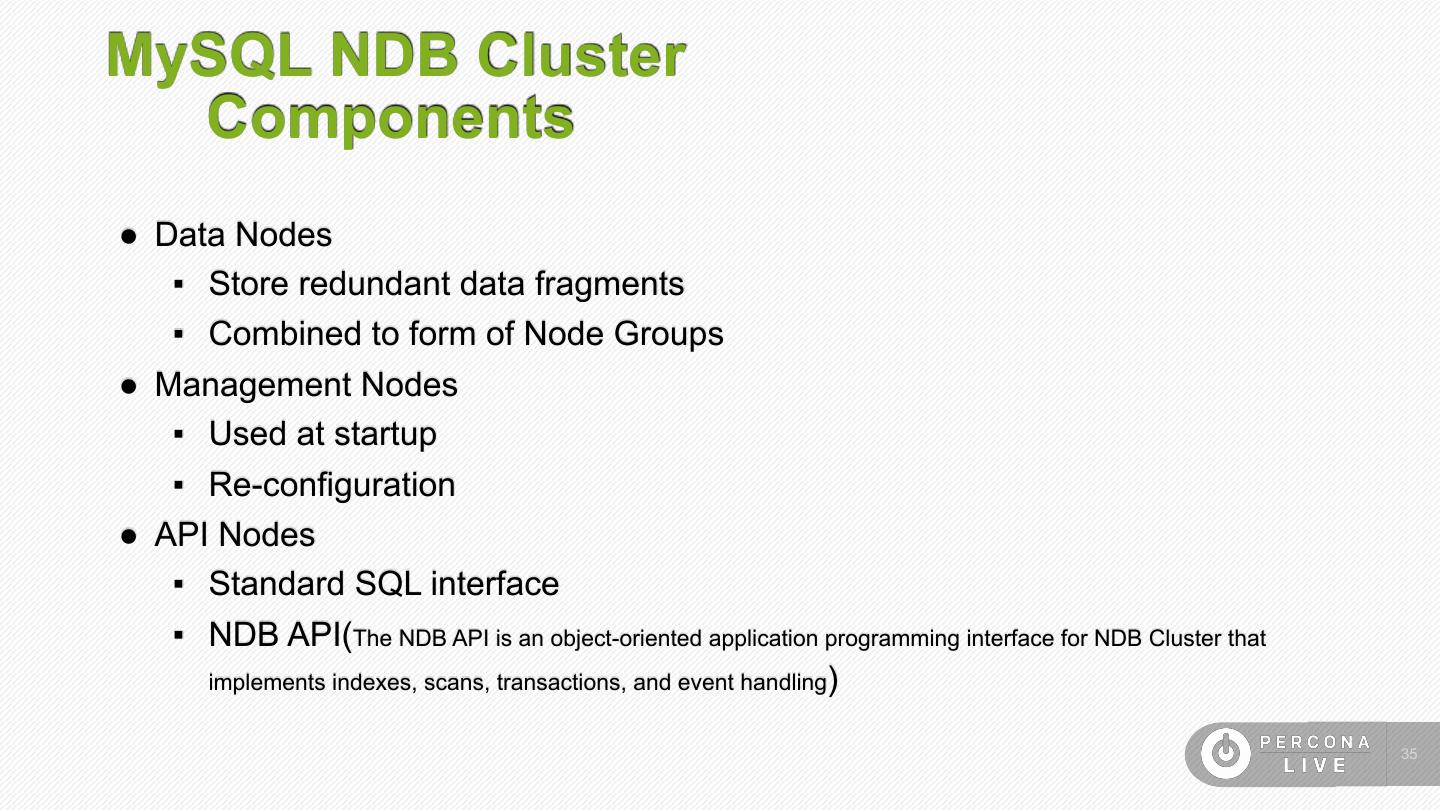

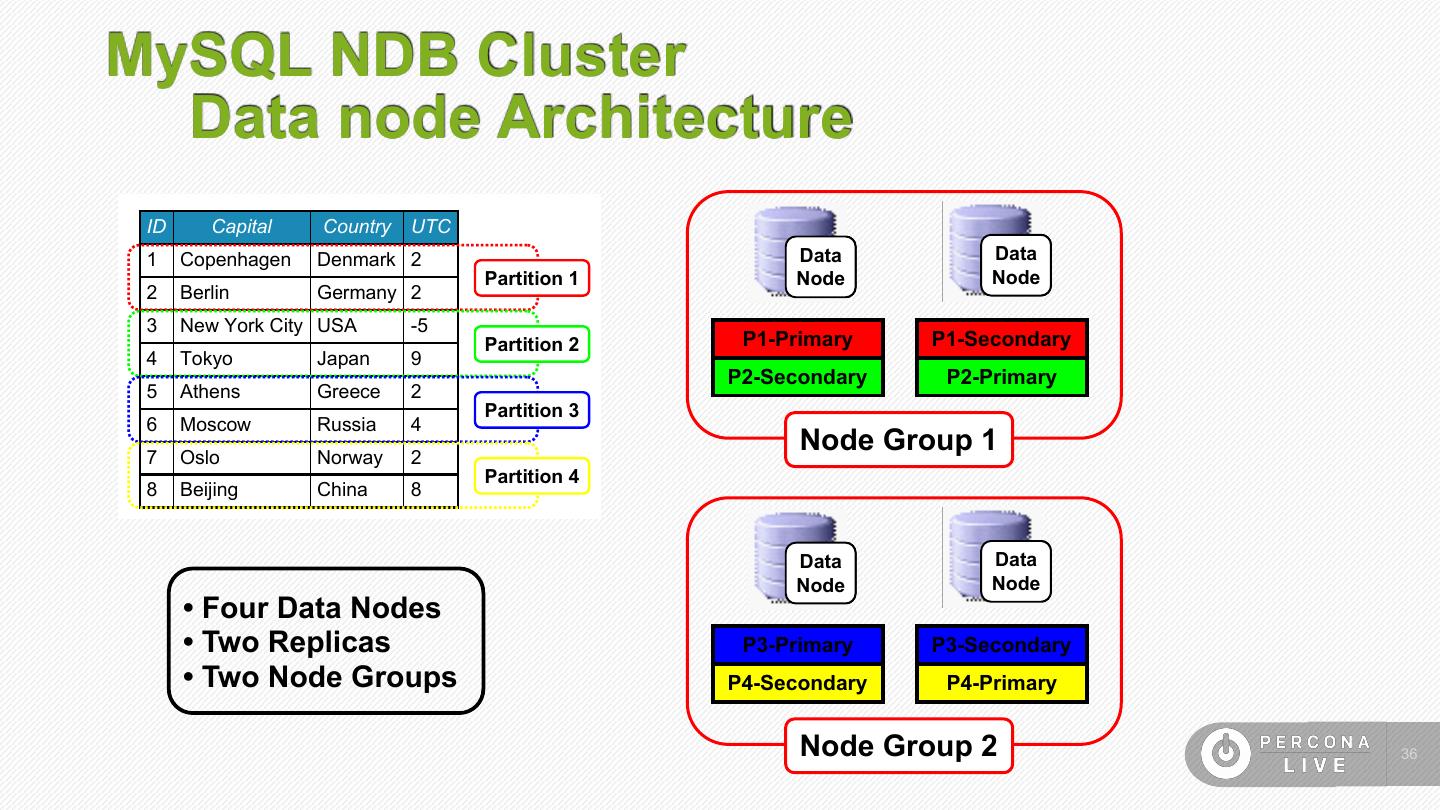

3 .MySQL High Availability Solutions ● MySQL Replication ● MHA/Orchestrator by Github and MySQL Replication ● Percona XtraDB Cluster/Galera ● MySQL InnoDB Cluster (Group Replication) ● MySQL NDB Cluster !3

4 .MySQL Replication 9 9 9 % !4

5 .MySQL Replication Topologies Master > Slave Master > Slaves Masters > Slave (Multi-Source) Master > Slave > Slaves Circular (Multi-Master) Master < > Master (Multi-Master) !5

6 .Inside MySQL Replication Writes & Reads Web/App Server Writes relay binlog mysqld I/O SQL Thread Thread index & binlogs data Replication binlog data mysqld MySQL Master MySQL Slave !6

7 .MySQL Replication (Scale-Out) Web/App Server Writes & Reads Reads Master Server Slave Server Backups Replication Writes Writes Index & Possible Roles Bin Log • Fail over server Rotation • Used for performing backups • Read load balancing • Additional slaves allow Scale-Out !7

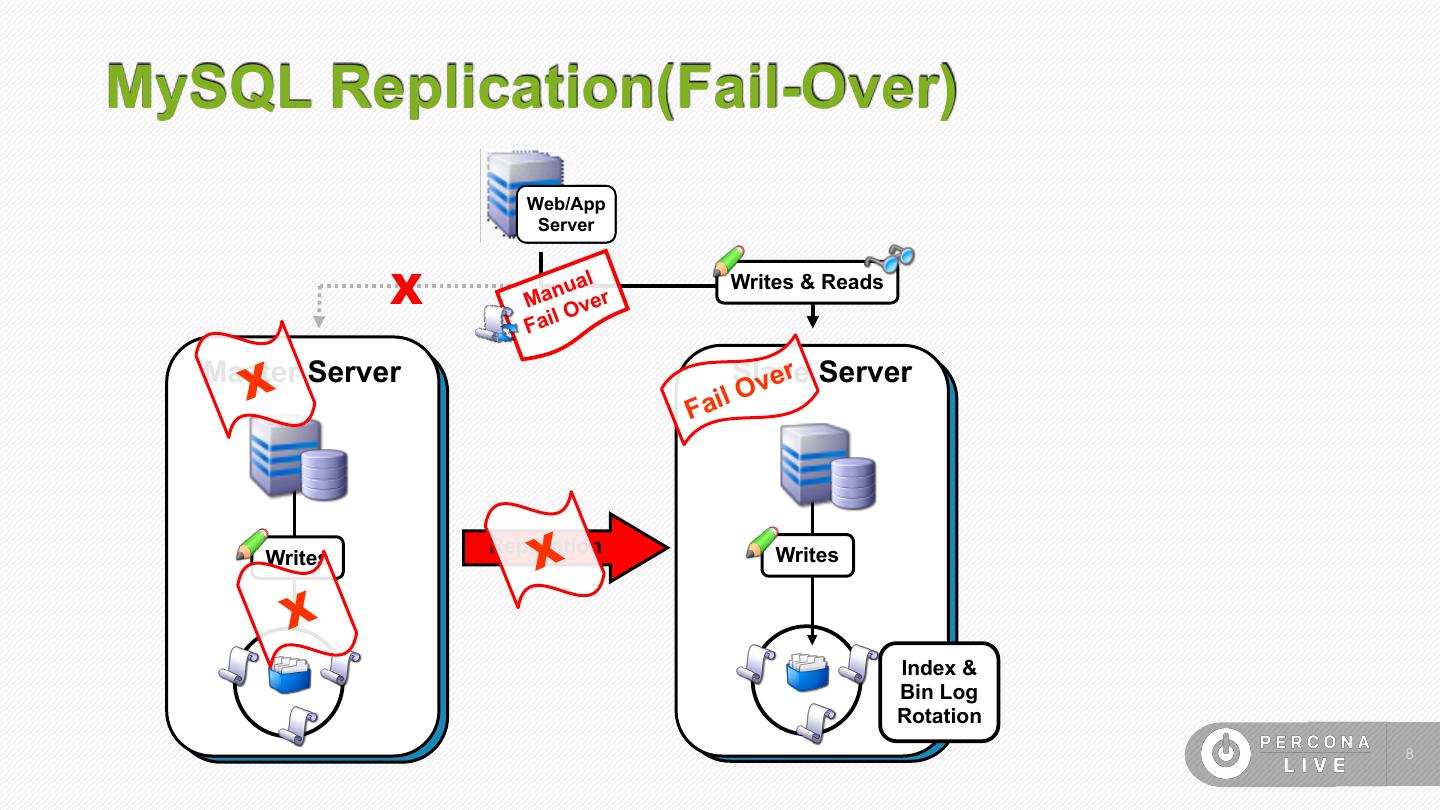

8 .MySQL Replication(Fail-Over) Web/App Server X M a n l ual Over Writes & Reads Fai X Master Server Fai Slave lOver Server Writes X Replication Writes X Index & Bin Log Rotation !8

9 .MySQL Replication Pros ● Mature, standard feature of MySQL. ● Compatible with many topologies. ● Supports multi-datacenter “clusters”. ● Easy to configure. ● Continues to gain new features: ▪ Semi-synchronous ▪ Row-based binary logs ▪ Parallel SQL threads ▪ Global Transaction ID (GTID) !9

10 .MySQL Replication Cons ● Slave lag. ▪ Use row-based binary logs and other strategies. ● Slave drift. ▪ Use read-only, pt-table-checksum, pt-table-sync. ● No automatic failover. ▪ Use haproxy and/or keepalived or other solutions. !10

11 .Topology managers + MySQL replication 9 9. 9 9 % !11

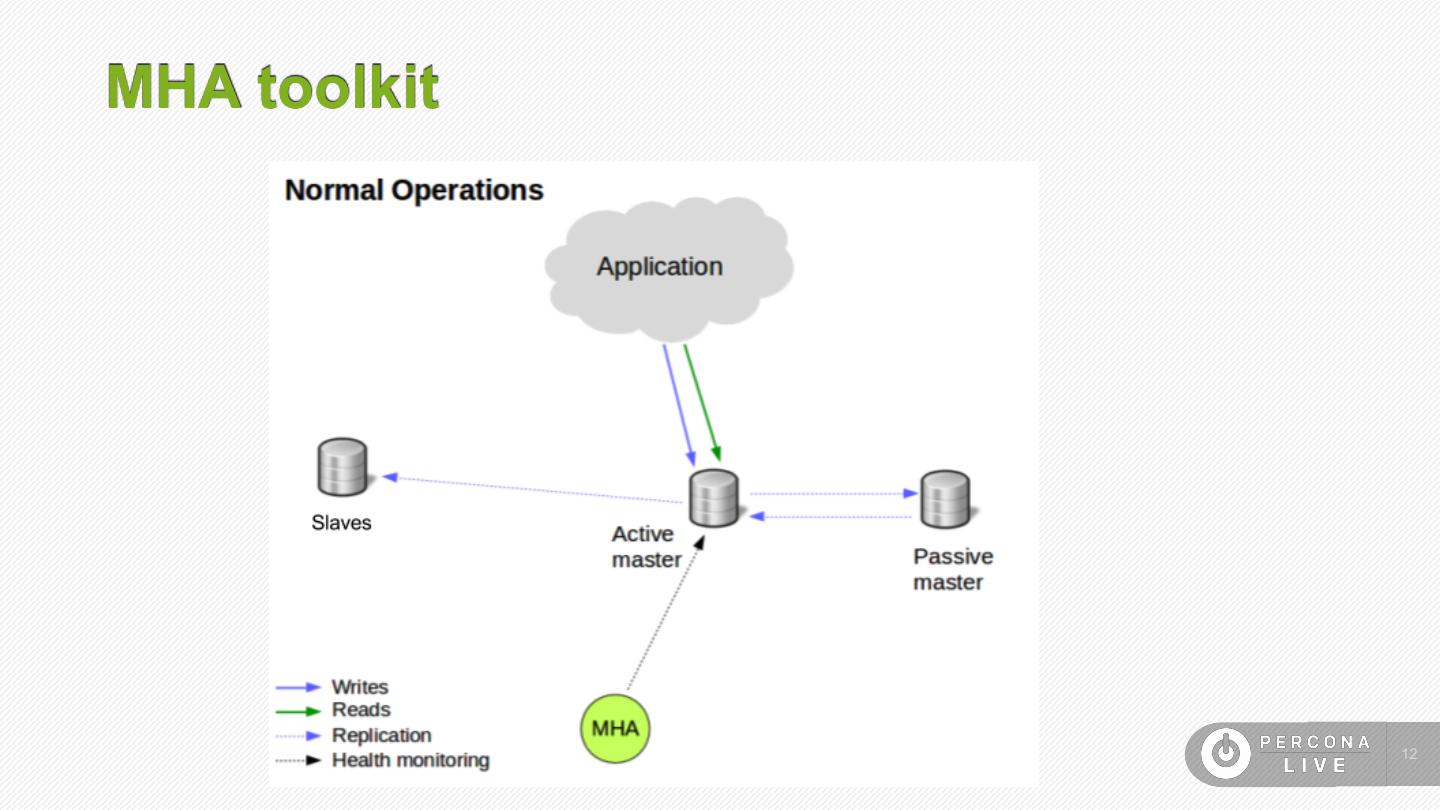

12 .MHA toolkit !12

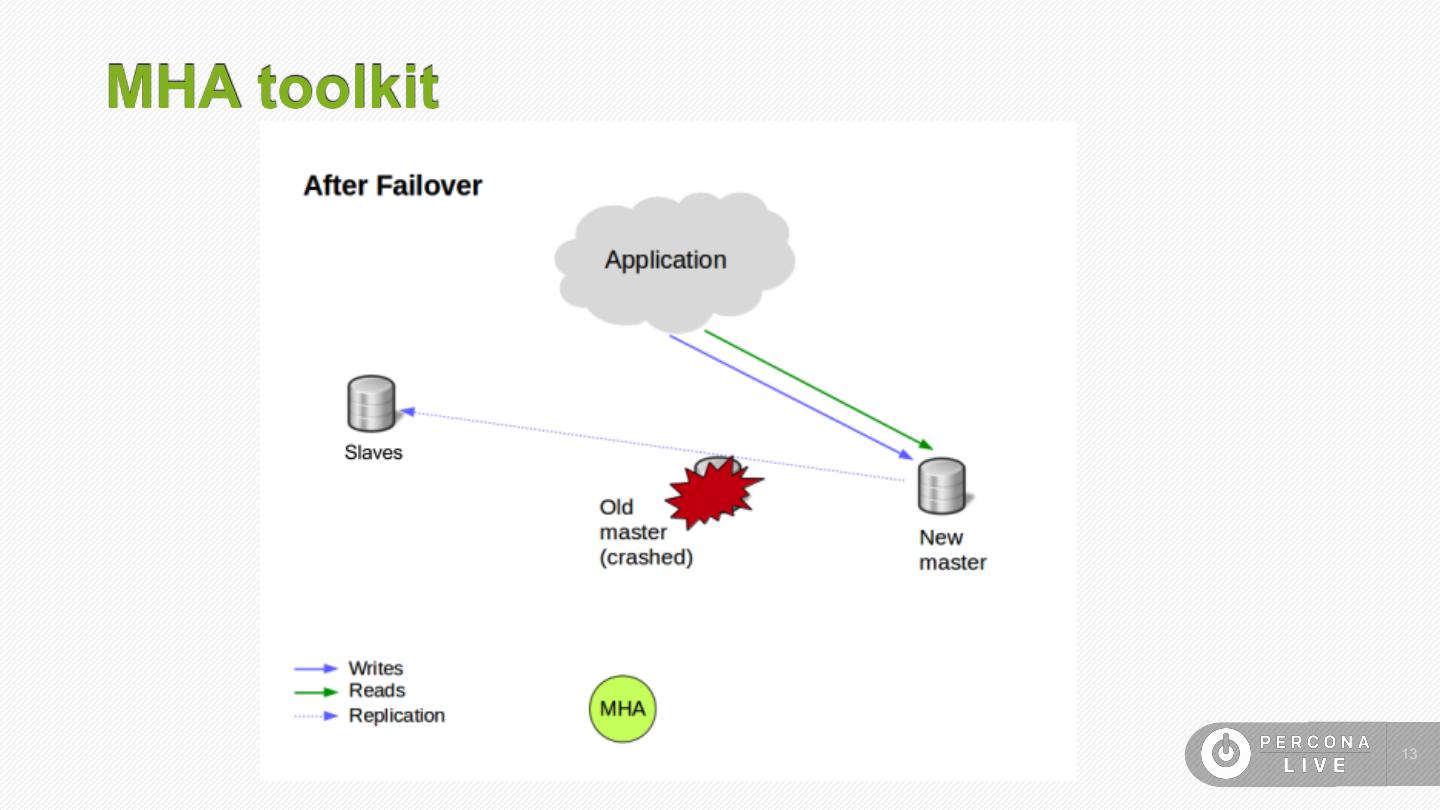

13 .MHA toolkit !13

14 .MHA toolkit ● Note that MHA never rebuilds an existing multi-master replication link, it always reconfigures topology using master- slave. ● MHA only takes care of the MySQL failover but has the ability to add custom code to make the application and any load balancers aware of the change. !14

15 .MHA toolkit: Pros ● Uses traditional MySQL replication. ● Easy to deploy for an existing replication group. ● Subscribes slaves to the correct binlog position. ● Strong command line support ● Very good point in time recovery via binary logs !15

16 .MHA toolkit: Cons ● Disconnects after failover ● No GUI ● Not updated, long term stable release ● Doesn’t crawl and auto discover topology ● Cannot manage PXC, co-masters or active/active ● Failover isn’t guaranteed instantly - can cause delays as logs are scanned. ● Still async replication (Consistency may be compromised) ● Monitor can be a single point of failure (monitor is only needed for failover) !16

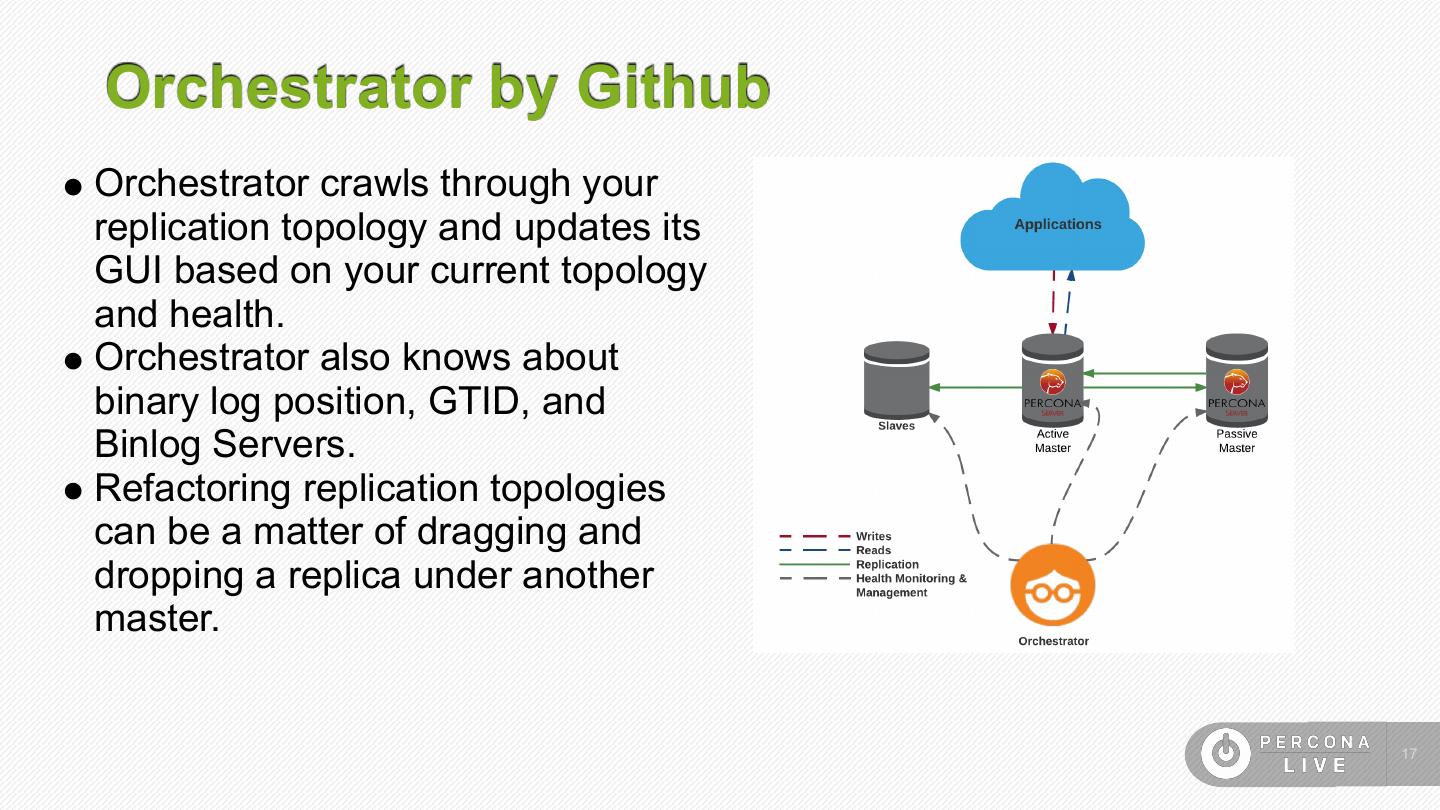

17 . Orchestrator by Github ● Orchestrator crawls through your replication topology and updates its GUI based on your current topology and health. ● Orchestrator also knows about binary log position, GTID, and Binlog Servers. ● Refactoring replication topologies can be a matter of dragging and dropping a replica under another master. !17

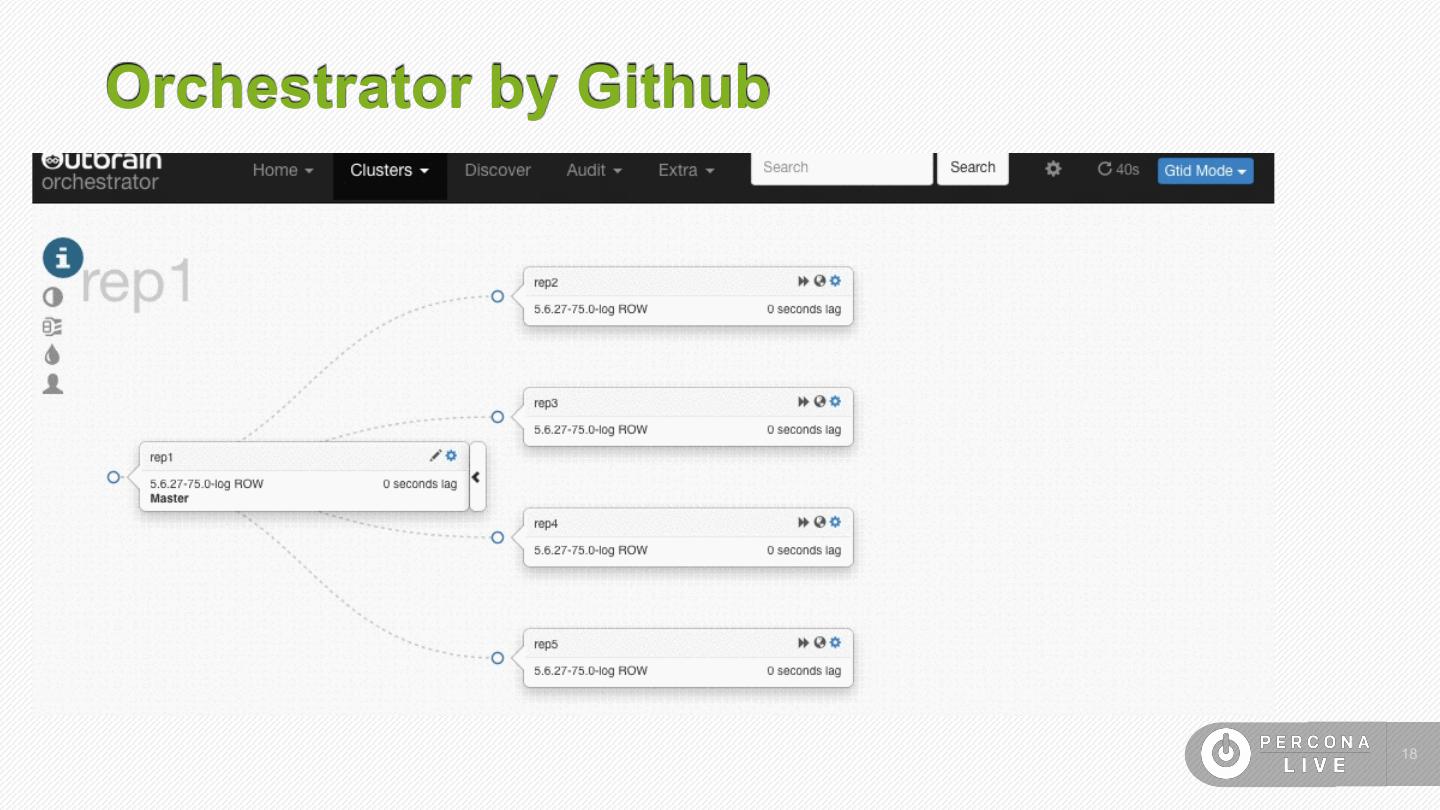

18 .Orchestrator by Github !18

19 .Orchestrator: Pros ● Integrated into PMM ● GUI in addition to command line ● Various options for managing replication (GTID, psuedo-GTID etc) ● Built for GTID ● Can manage backups and other tasks ● Currently in development with a dedicated team ● Auto Discovers topology ● Works with co-masters !19

20 .Orchestrator: Cons ● Does not recognise PXC nodes as part of a single cluster (sees them as separate masters) ● Point in time recovery relies on good slaves, doesn’t do binary log discovery !20

21 .PXC, MySQL InnoDB Cluster 9 9. 9 9 9 % !21

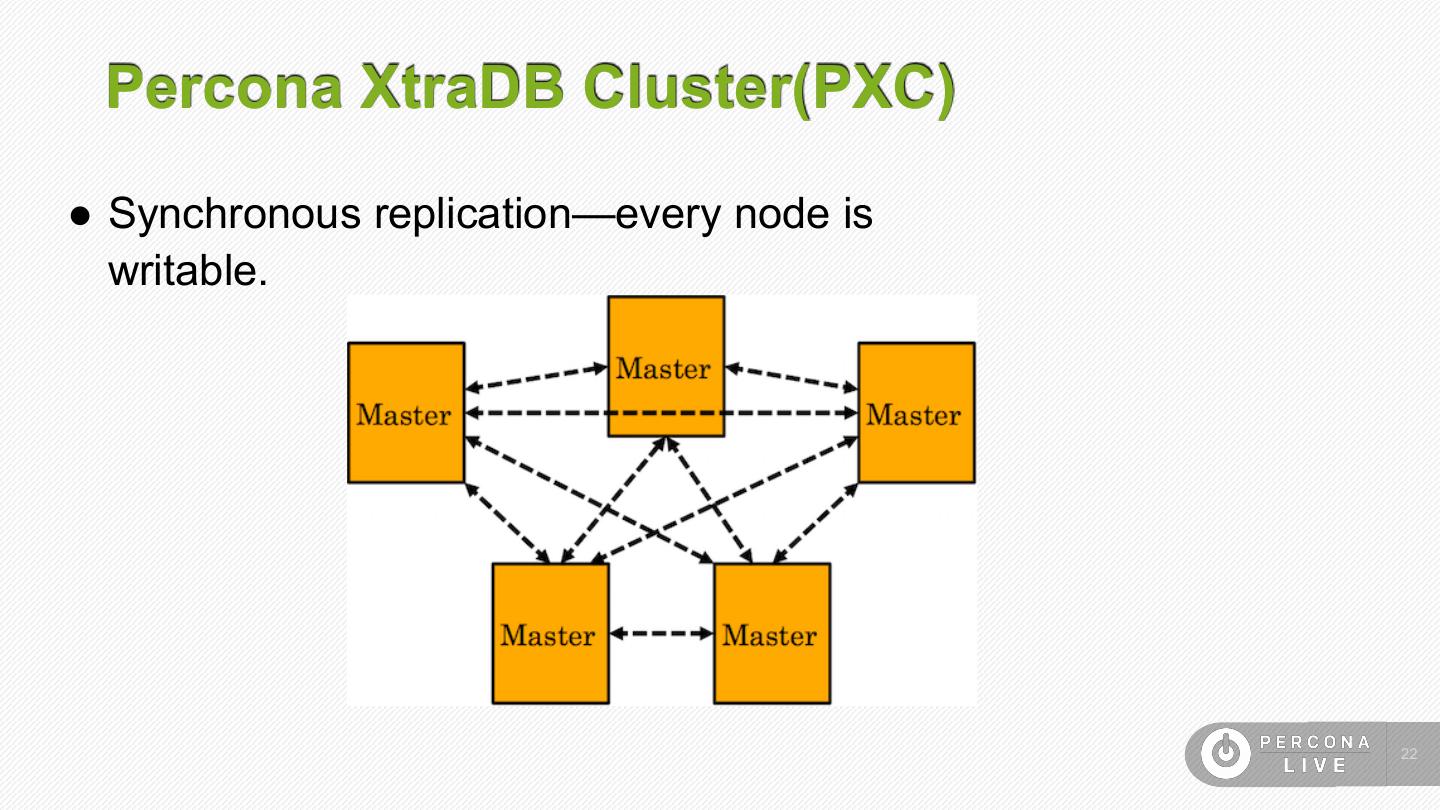

22 . Percona XtraDB Cluster(PXC) ● Synchronous replication—every node is writable. !22

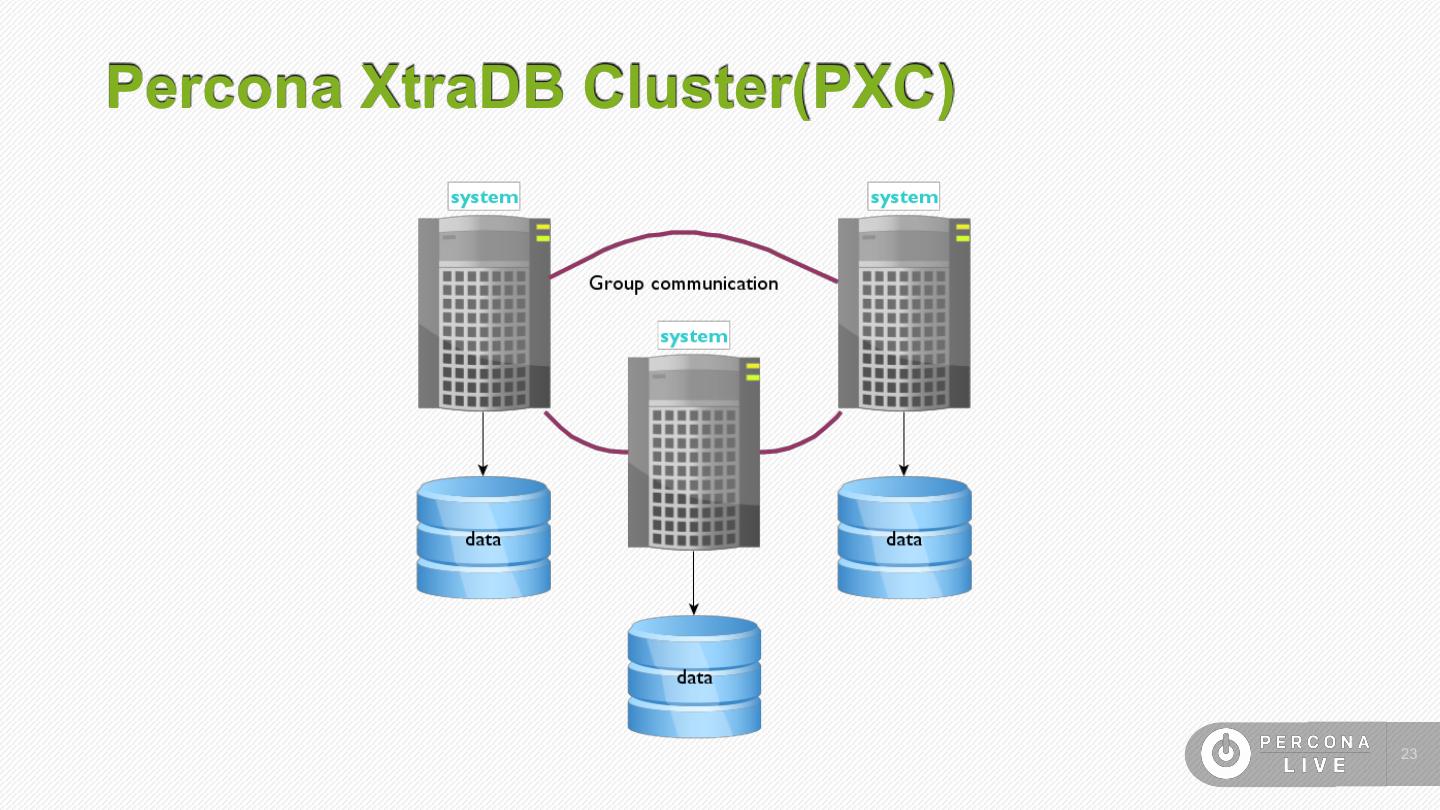

23 .Percona XtraDB Cluster(PXC) !23

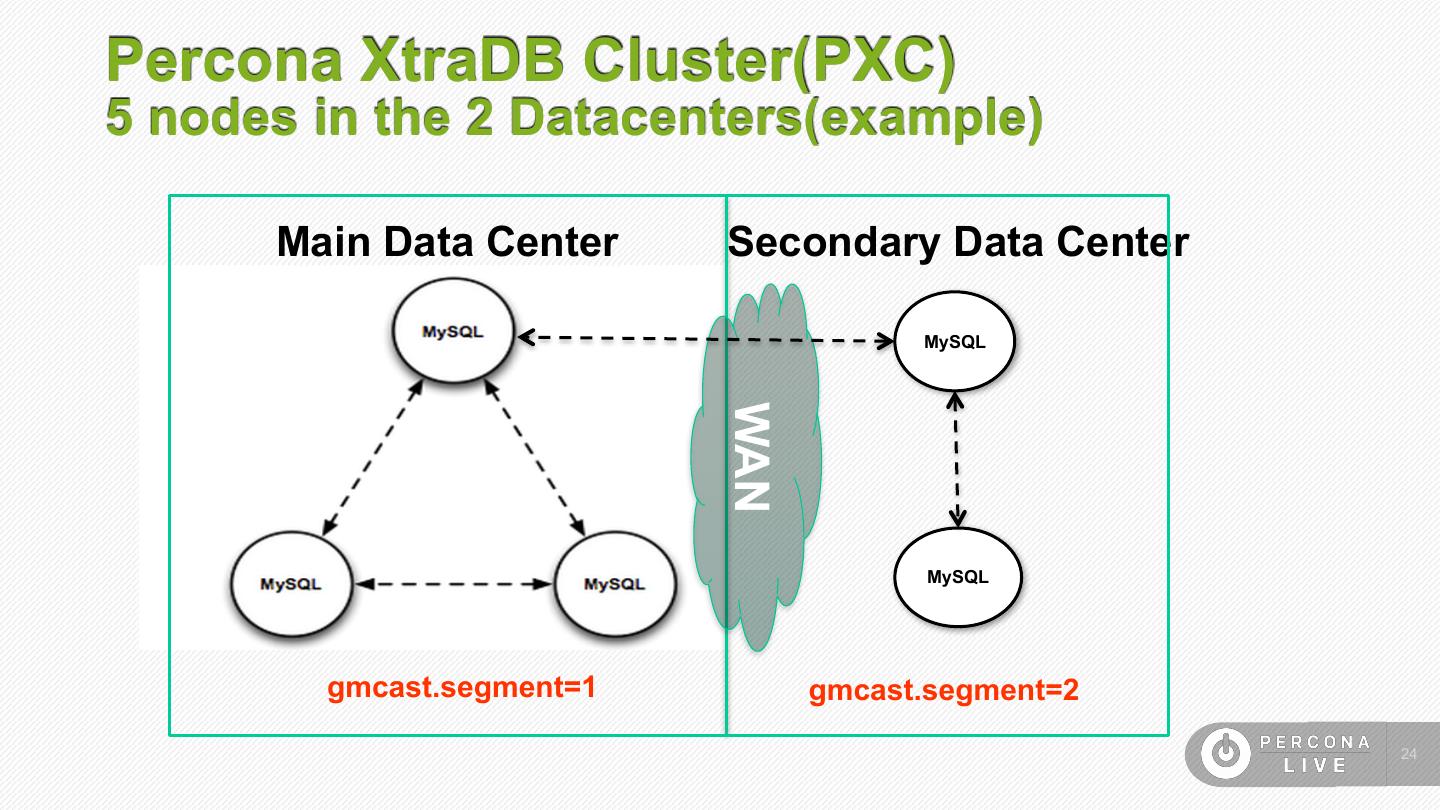

24 .Percona XtraDB Cluster(PXC) 5 nodes in the 2 Datacenters(example) Main Data Center Secondary Data Center MySQL WAN MySQL gmcast.segment=1 gmcast.segment=2 !24

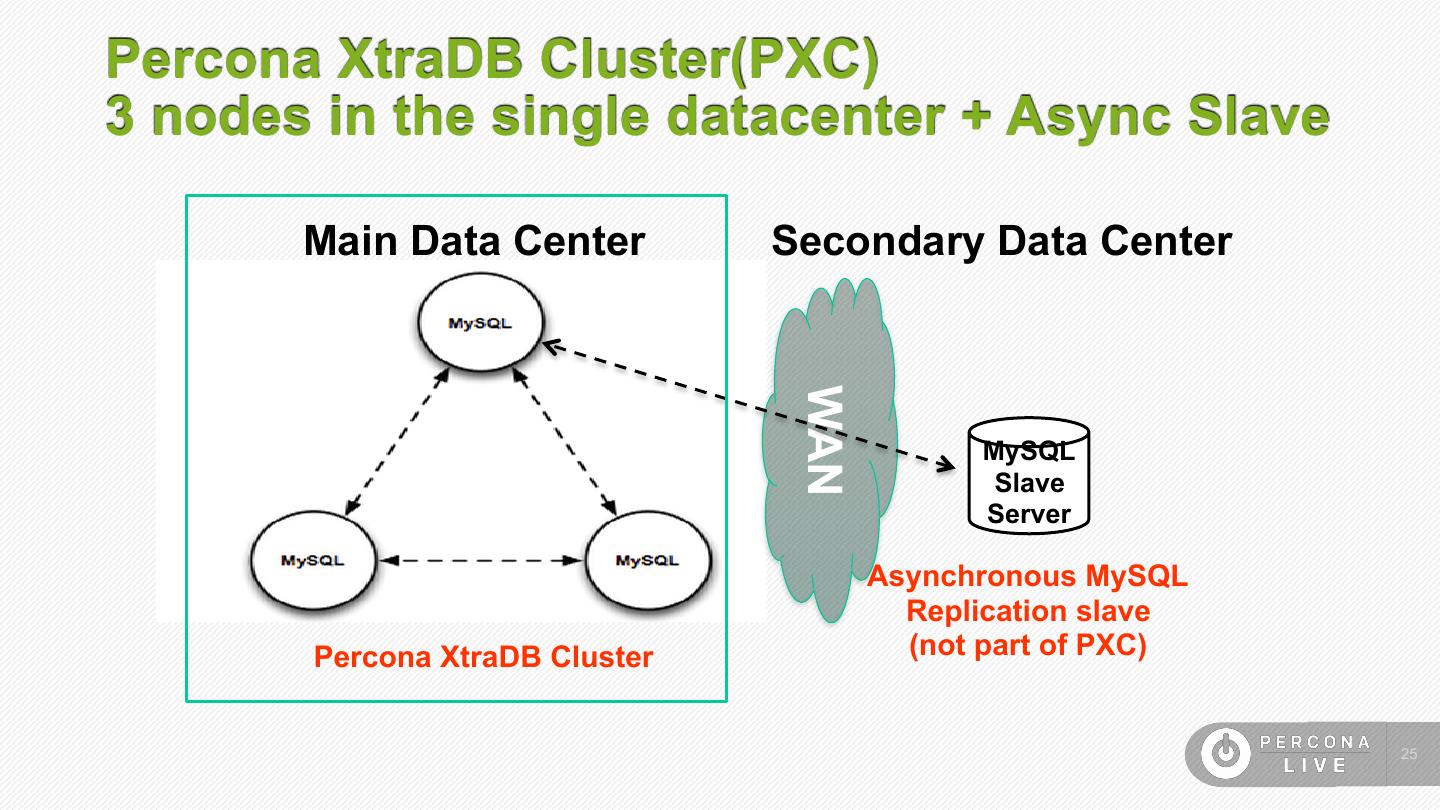

25 .Percona XtraDB Cluster(PXC) 3 nodes in the single datacenter + Async Slave Main Data Center Secondary Data Center WAN MySQL Slave Server Asynchronous MySQL Replication slave Percona XtraDB Cluster (not part of PXC) !25

26 .Percona XtraDB Cluster(PXC) : Pros ● Writing to any node updates all other nodes synchronously (like semi-sync replication). ● All nodes can be writers. ● Adding a new node, or recovering an offline node, initialises data automatically. ● Multi-datacenter (assuming low latency) ● Quorum-based selection of nodes that can take queries. Nodes not in quorum will be shut down and must copy data before they can rejoin the cluster. !26

27 .Percona XtraDB Cluster(PXC): Cons ● Not suitable for large transactions. ● Not suitable for heavy write traffic, especially rapid writes to a hotspot – don't rely on multiple writers. ● Reinitialising a node can be expensive. !27

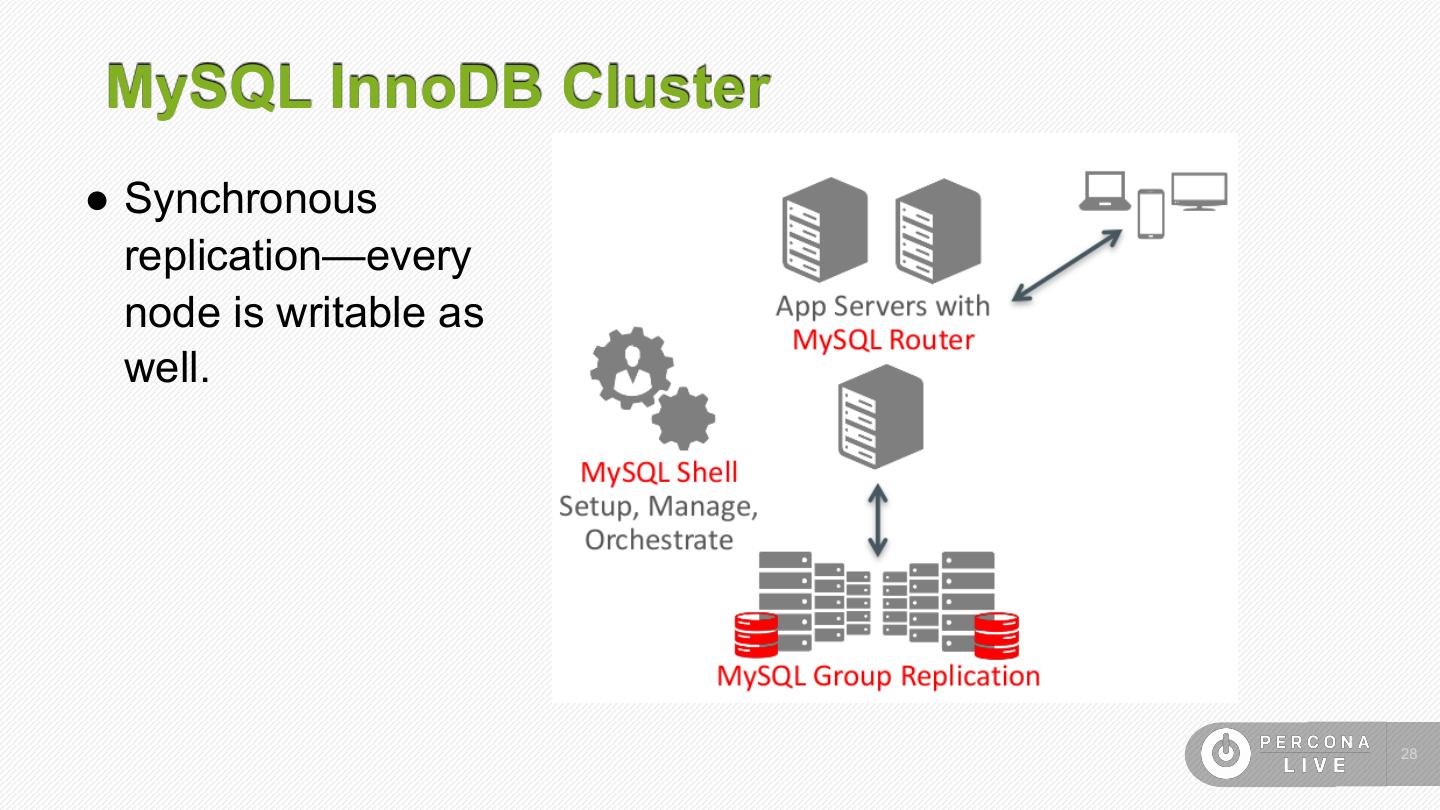

28 . MySQL InnoDB Cluster ● Synchronous replication—every node is writable as well. !28

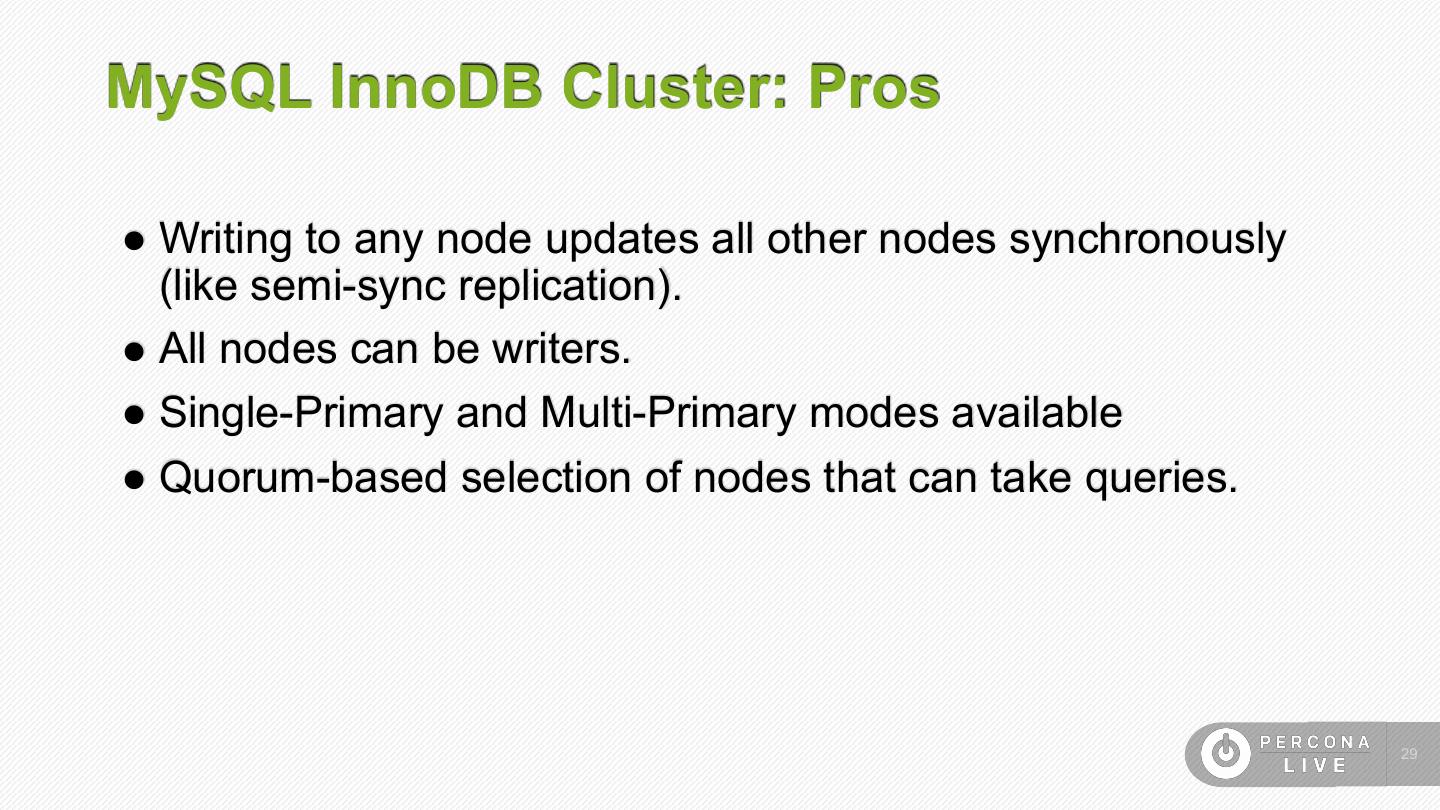

29 .MySQL InnoDB Cluster: Pros ● Writing to any node updates all other nodes synchronously (like semi-sync replication). ● All nodes can be writers. ● Single-Primary and Multi-Primary modes available ● Quorum-based selection of nodes that can take queries. !29