NUMA Technology and Its Application in Nova

1. NUMA Technology and Its Application in Nova (OpenStack)

2. Who?

- Name: 陈锐 (Rui Chen)
- Employer: Huawei
- Weibo: @kiwik
- Blog: http://kiwik.github.io

3. Contents

- NUMA
- Nova & NUMA
- Nova & NUMA & ?

4. [1] NUMA: Non-Uniform Memory Access

5. Architectures: SMP, NUMA, and MPP

- SMP: Symmetric Multi-Processor
- NUMA: Non-Uniform Memory Access
- MPP: Massively Parallel Processing

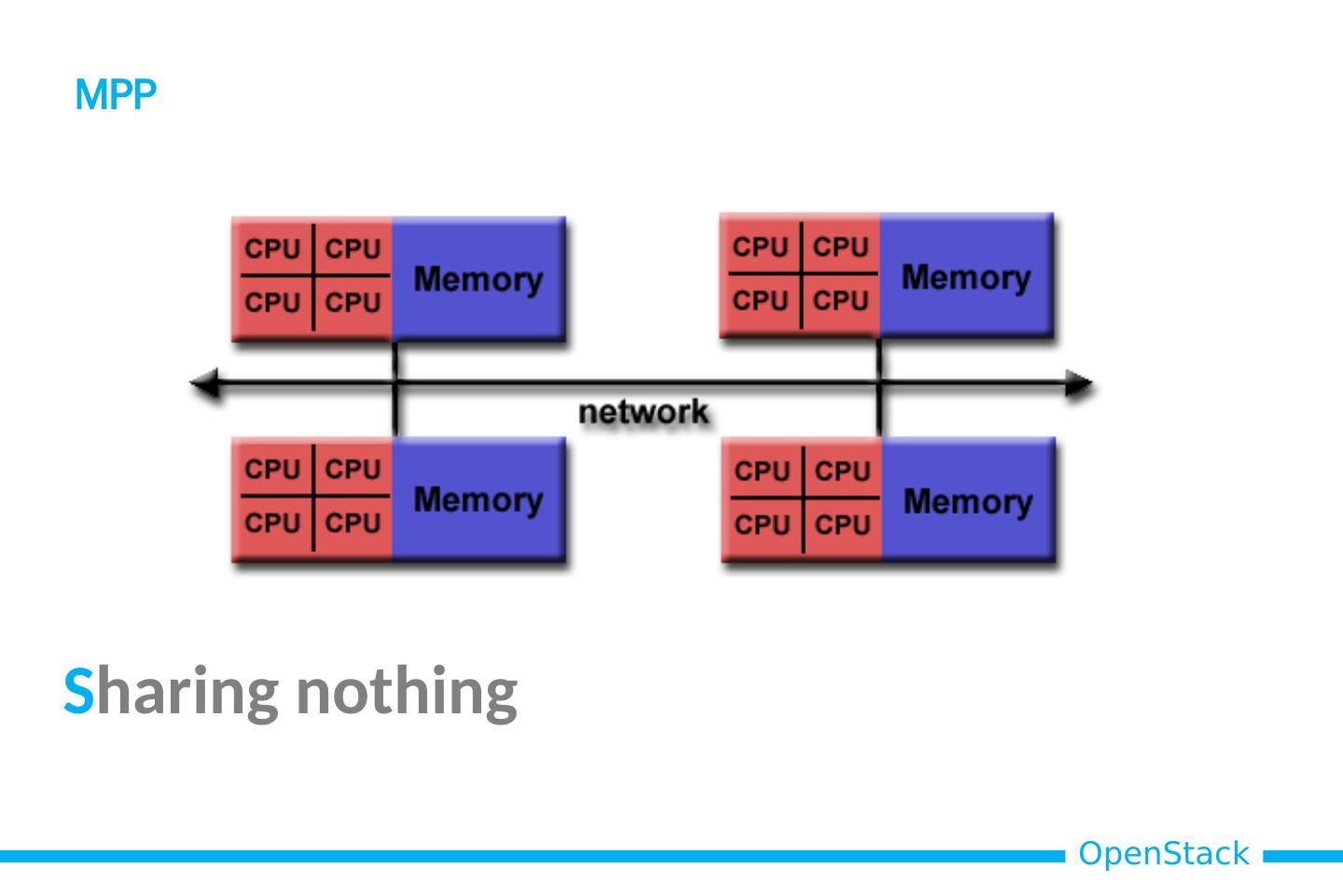

6. SMP: sharing RAM

7. NUMA: sharing RAM, local access is faster

8. MPP: sharing nothing

9. NUMA Topology

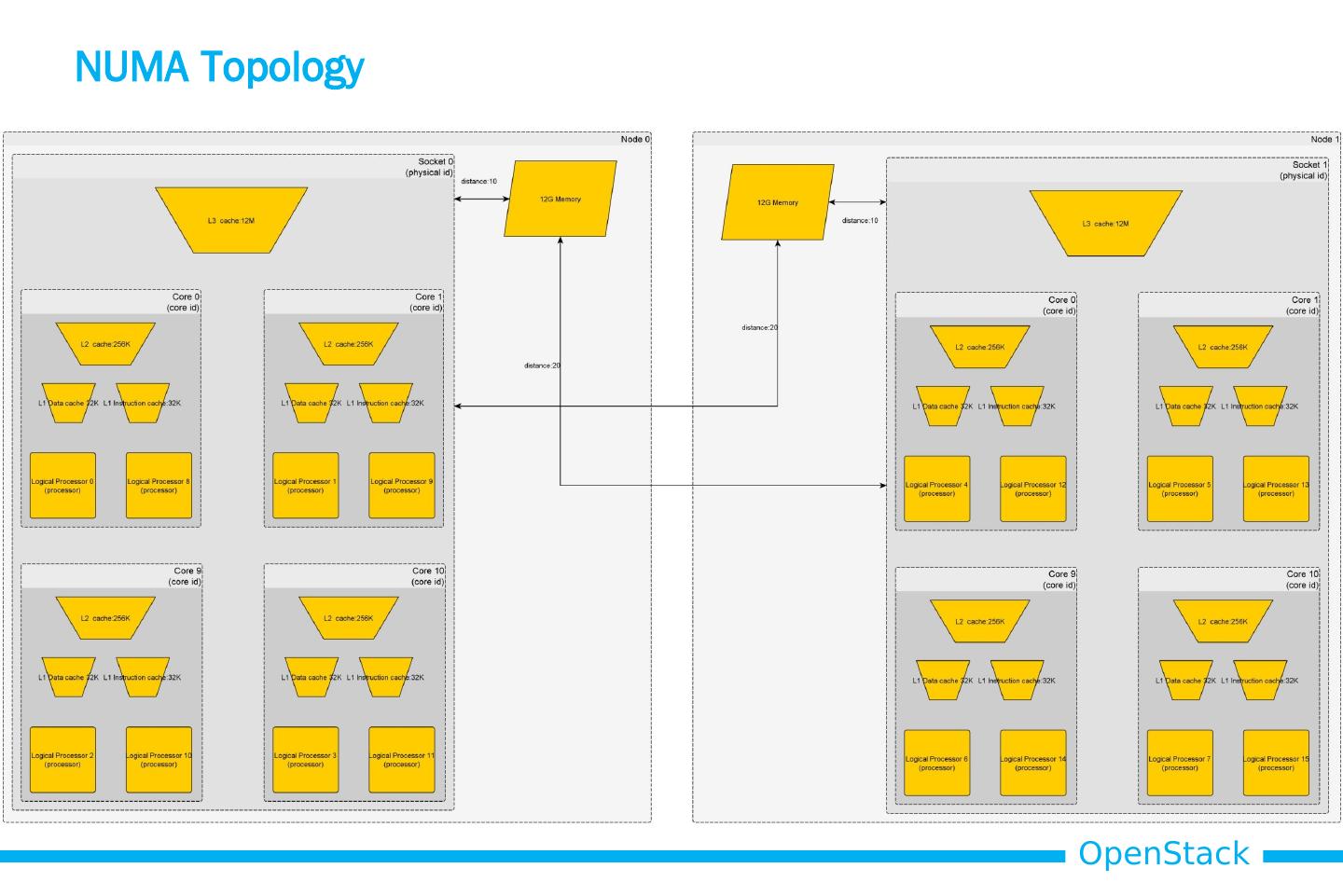

10. [2] Nova & NUMA: guest NUMA node placement & topology

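11. Problems before NUMA support was introduced

Key point: Nova is not NUMA-aware.

In Icehouse and earlier releases, Nova does not take the host NUMA layout into account when it generates libvirt.xml. By default, libvirt may therefore take a guest's CPU and RAM resources from different host NUMA nodes, which degrades guest performance.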

12. Use Case #1

Take a guest's vCPUs and RAM from a single host NUMA node. This avoids cross-node RAM access, improves guest performance, and reduces unpredictable latency.

Example 1: hw:numa_nodes=1

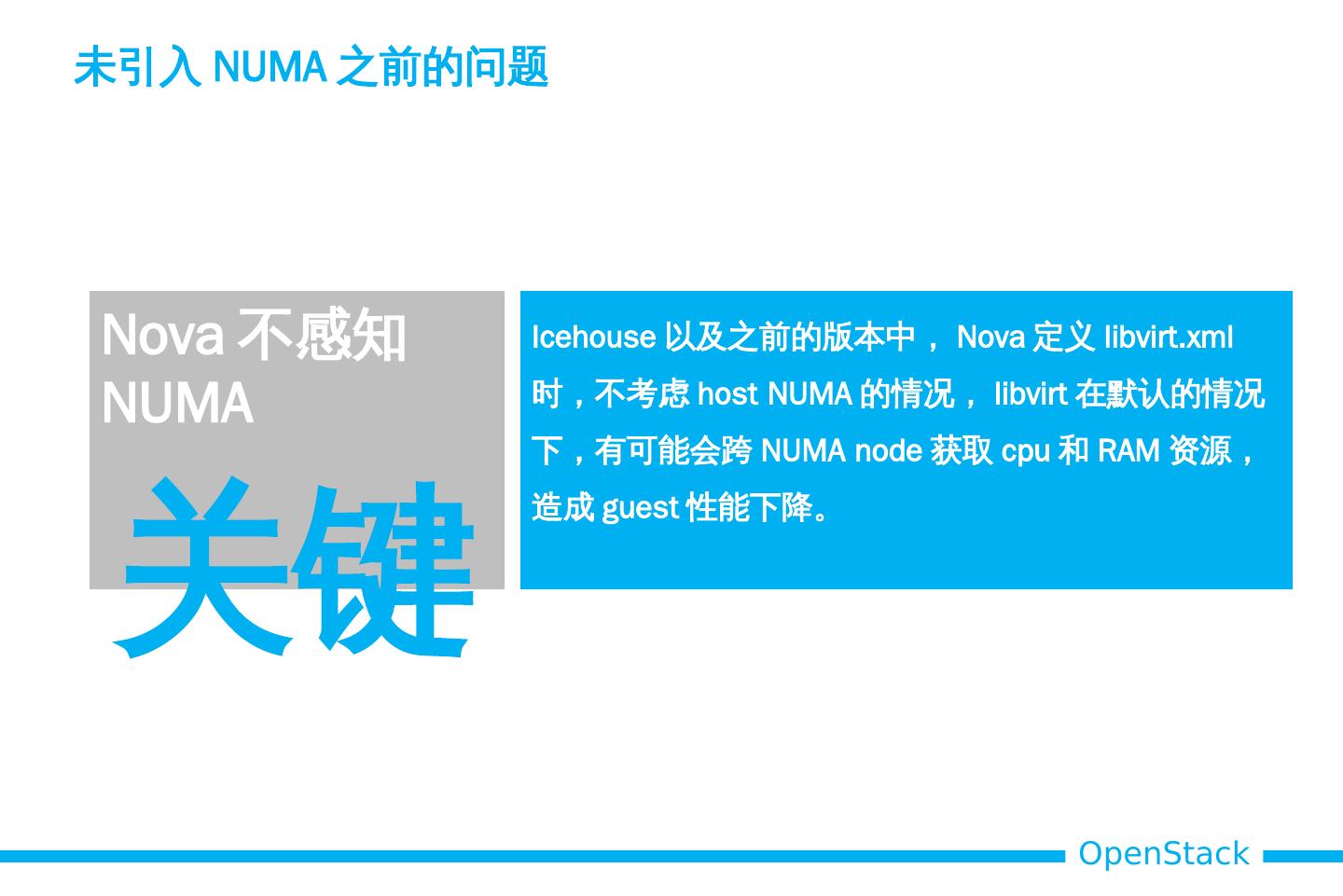

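13. Use Case #2

When a guest's vCPUs and RAM exceed what a single host NUMA node can provide, split the guest into multiple guest NUMA nodes and map them onto the host NUMA topology. This lets the guest OS see the guest NUMA layout and optimize resource scheduling for applications such as databases.

Example 2: hw:numa_nodes=N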

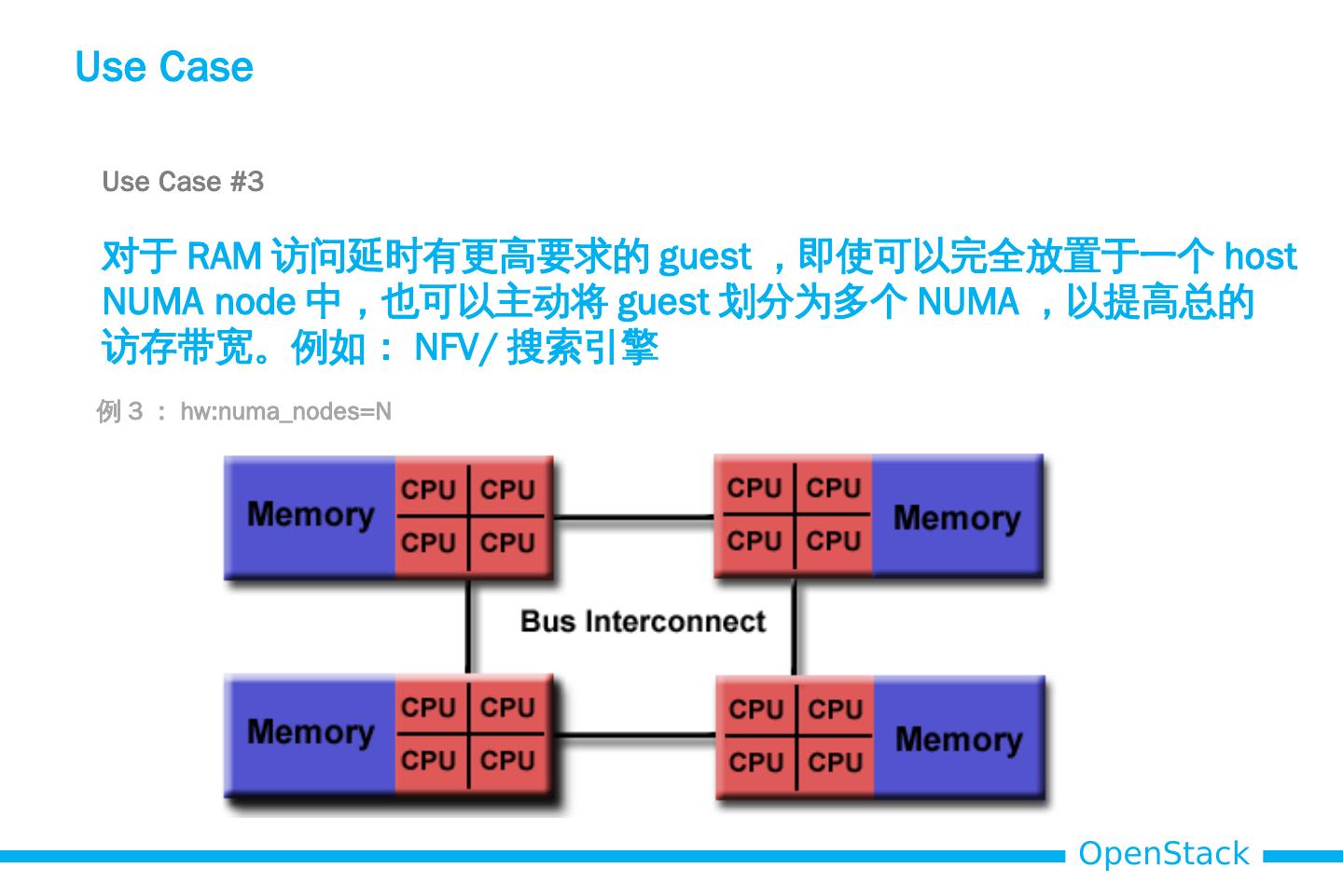

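14. Use Case #3

For guests with stricter memory-access requirements, the guest can be deliberately split across multiple NUMA nodes, even if it would fit entirely inside one host NUMA node, in order to increase total memory bandwidth. Examples: NFV, search engines.

Example 3: hw:numa_nodes=N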

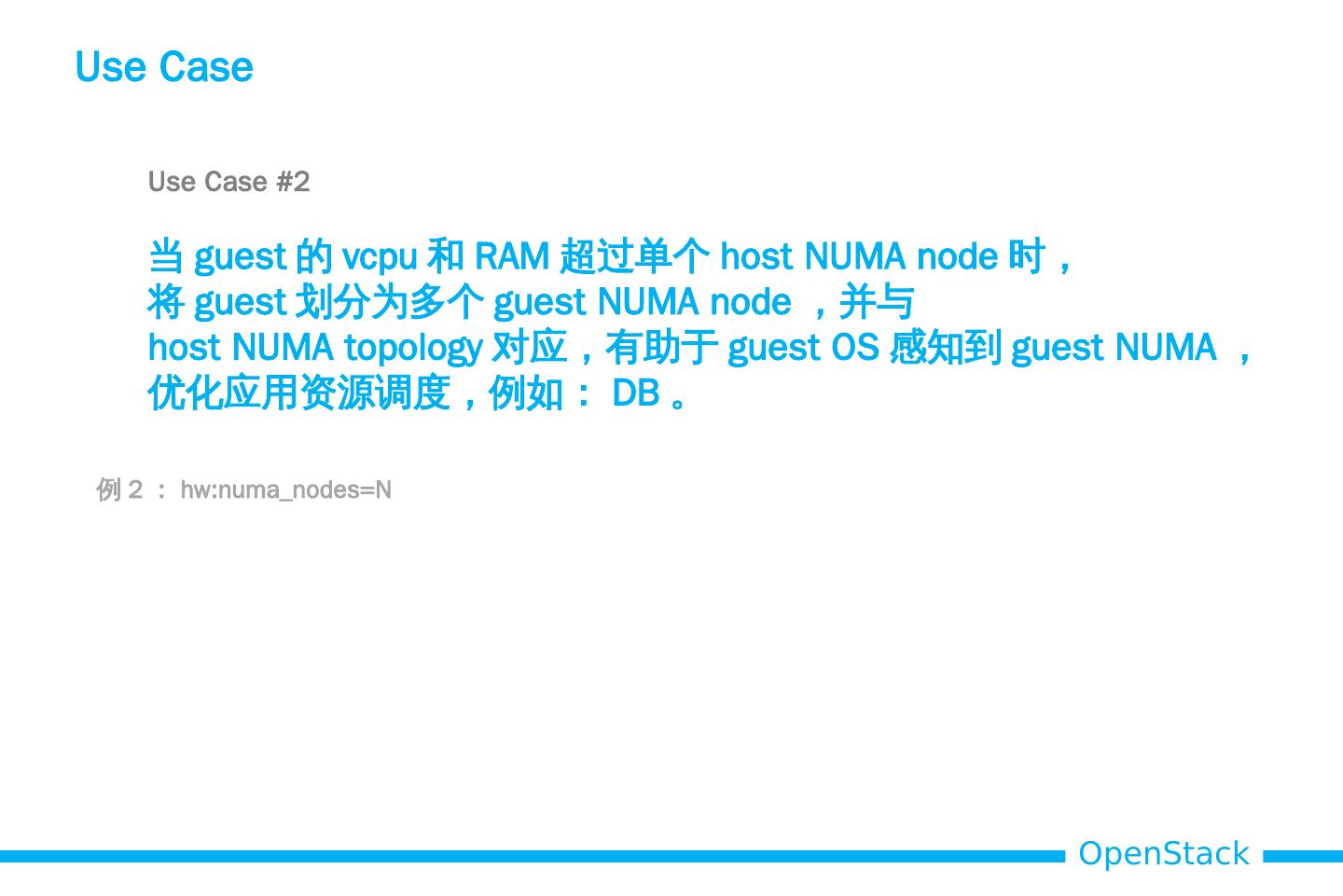

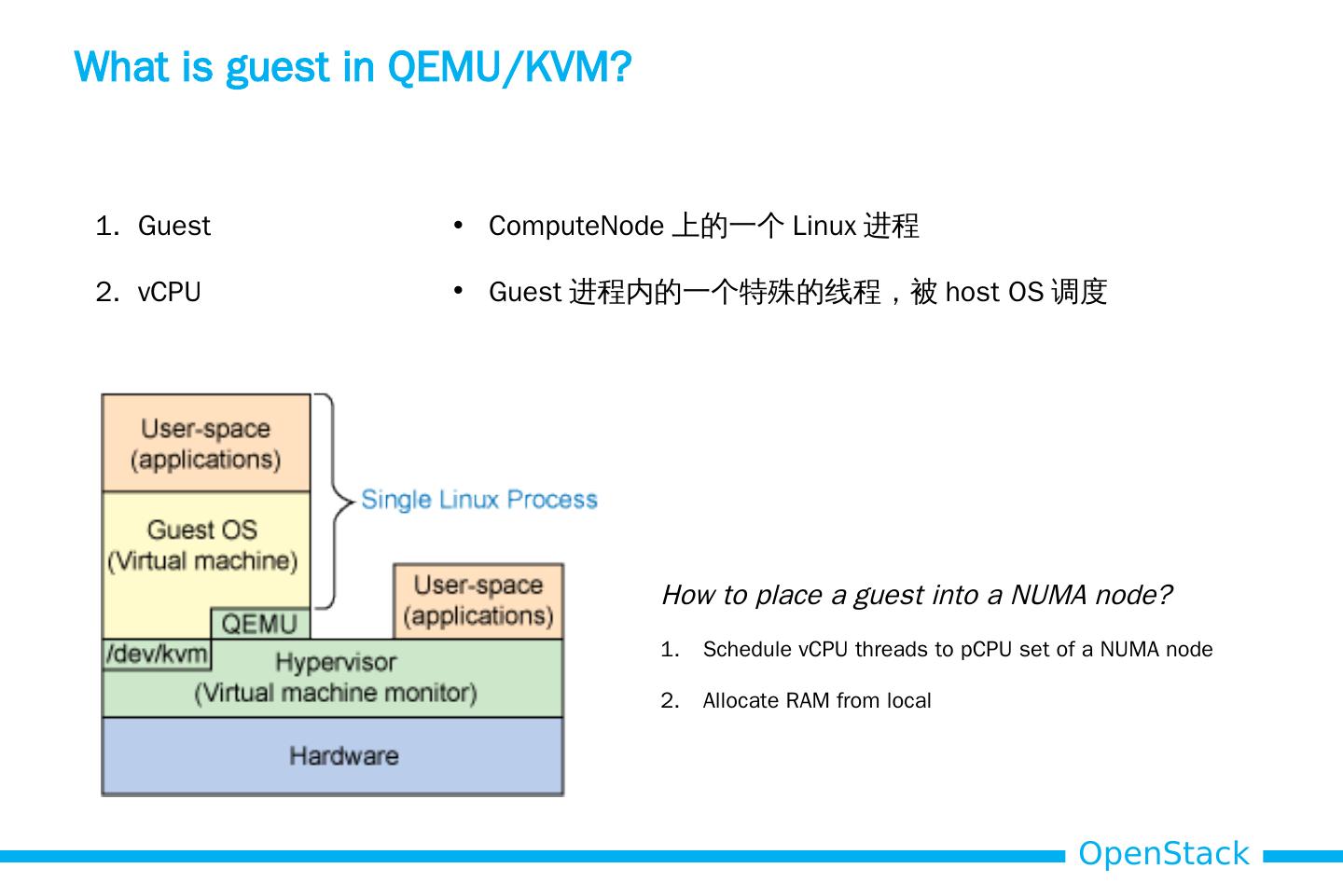

15. What is a guest in QEMU/KVM?

- Guest: a Linux process on the compute node
- vCPU: a special thread inside the guest process, scheduled by the host OS

How to place a guest into a NUMA node?

- Schedule the vCPU threads onto the pCPU set of a NUMA node
- Allocate RAM from the local node

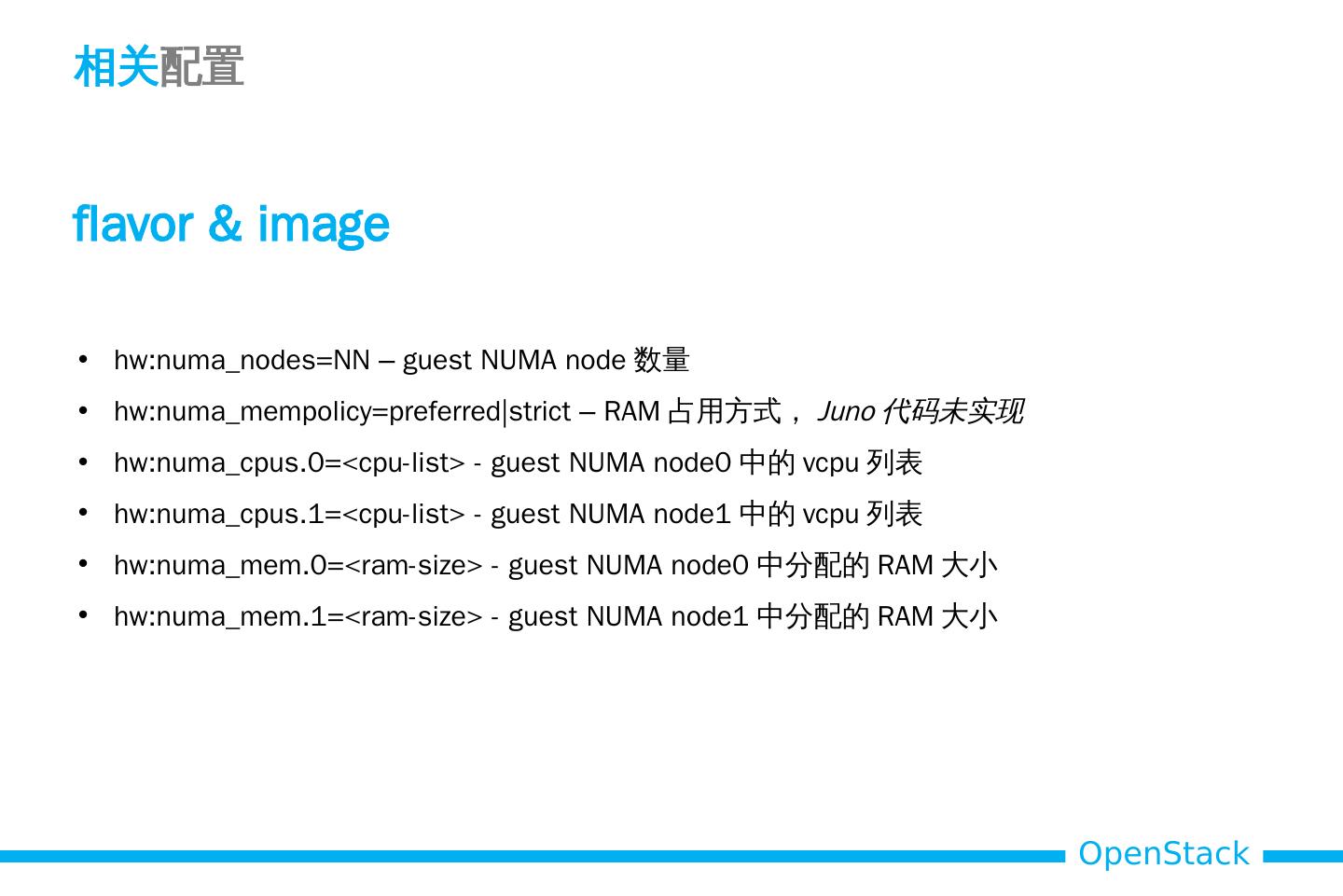

16. Related configuration (flavor & image)

- hw:numa_nodes=NN: number of guest NUMA nodes
- hw:numa_mempolicy=preferred|strict: RAM allocation policy (not implemented in the Juno code)
- hw:numa_cpus.0=<cpu-list>: list of vCPUs in guest NUMA node 0
- hw:numa_cpus.1=<cpu-list>: list of vCPUs in guest NUMA node 1
- hw:numa_mem.0=<ram-size>: amount of RAM allocated to guest NUMA node 0
- hw:numa_mem.1=<ram-size>: amount of RAM allocated to guest NUMA node 1
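To make the meaning of these keys concrete, here is a minimal Python sketch that turns a flavor's extra specs into a list of guest NUMA cells. It is not Nova's code: the function names `parse_cpu_list` and `guest_numa_cells` are hypothetical, support for ranges such as `0-3` in `<cpu-list>` is an assumption, and the checks that every vCPU and all RAM are assigned exactly once are sanity checks chosen for this illustration.

```python
def parse_cpu_list(spec):
    """Parse a cpu-list such as "0,1,2,3" (or, by assumption, "0-3,6") into sorted ints."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def guest_numa_cells(extra_specs, vcpus, ram_mb):
    """Build guest NUMA cells from hw:numa_* extra specs (illustrative only)."""
    nodes = int(extra_specs.get("hw:numa_nodes", 1))
    cells = []
    for i in range(nodes):
        cells.append({
            "id": i,
            "cpus": parse_cpu_list(extra_specs[f"hw:numa_cpus.{i}"]),
            "mem_mb": int(extra_specs[f"hw:numa_mem.{i}"]),
        })
    # Sanity checks for this sketch: each vCPU appears exactly once, RAM adds up.
    assigned = sorted(c for cell in cells for c in cell["cpus"])
    if assigned != list(range(vcpus)):
        raise ValueError("vCPUs are not assigned to exactly one guest NUMA node each")
    if sum(cell["mem_mb"] for cell in cells) != ram_mb:
        raise ValueError("per-node RAM does not add up to the flavor RAM")
    return cells


# Extra specs of an 8-vCPU, 512 MB flavor split into two guest NUMA nodes.
specs = {
    "hw:numa_nodes": "2",
    "hw:numa_cpus.0": "0,1,2,3,4,5",
    "hw:numa_cpus.1": "6,7",
    "hw:numa_mem.0": "384",
    "hw:numa_mem.1": "128",
}
cells = guest_numa_cells(specs, vcpus=8, ram_mb=512)
```

The sketch requires explicit `hw:numa_cpus.N` and `hw:numa_mem.N` keys for every node; how missing keys are handled in a real deployment is outside its scope.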

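17. Nova implementation workflow

1. nova-api: generates the guest NUMA topology and saves it in instance_extra
2. nova-scheduler: checks whether a host can satisfy the guest NUMA topology
3. nova-compute: instance_claim checks whether the host's NUMA resources are sufficient and builds the mapping from guest NUMA nodes to host NUMA nodes
4. libvirt driver: generates the corresponding libvirt.xml from that mapping
5. resource tracker: refreshes the host's NUMA resource usage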
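The scheduler and claim steps both boil down to asking whether each guest NUMA node fits into some host NUMA node. The Python sketch below illustrates that idea only; it is not Nova's algorithm. The function `fit_guest_to_host` and the dictionary layout are hypothetical, and it assumes one guest node per host node with no CPU or RAM overcommit.

```python
from itertools import permutations


def fit_guest_to_host(guest_cells, host_cells):
    """Return {guest_cell_id: host_cell_id} for the first fitting placement, else None.

    Simplifying assumptions of this sketch: each guest cell lands on a distinct
    host cell, and a cell fits when the host cell has at least as many CPUs and
    as much free RAM as the guest cell asks for (no overcommit).
    """
    for candidate in permutations(host_cells, len(guest_cells)):
        fits = all(
            len(g["cpus"]) <= len(h["cpus"]) and g["mem_mb"] <= h["free_mb"]
            for g, h in zip(guest_cells, candidate)
        )
        if fits:
            return {g["id"]: h["id"] for g, h in zip(guest_cells, candidate)}
    return None


# Host with two NUMA nodes of 8 pCPUs each; guest with two NUMA nodes.
host_cells = [
    {"id": 0, "cpus": [4, 5, 6, 7, 12, 13, 14, 15], "free_mb": 36833},
    {"id": 1, "cpus": [0, 1, 2, 3, 8, 9, 10, 11], "free_mb": 37822},
]
guest_cells = [
    {"id": 0, "cpus": [0, 1, 2, 3, 4, 5], "mem_mb": 384},
    {"id": 1, "cpus": [6, 7], "mem_mb": 128},
]
mapping = fit_guest_to_host(guest_cells, host_cells)
```

A `None` result corresponds to the scheduler rejecting the host; a mapping is what the libvirt driver would need in order to generate the pinning in libvirt.xml.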

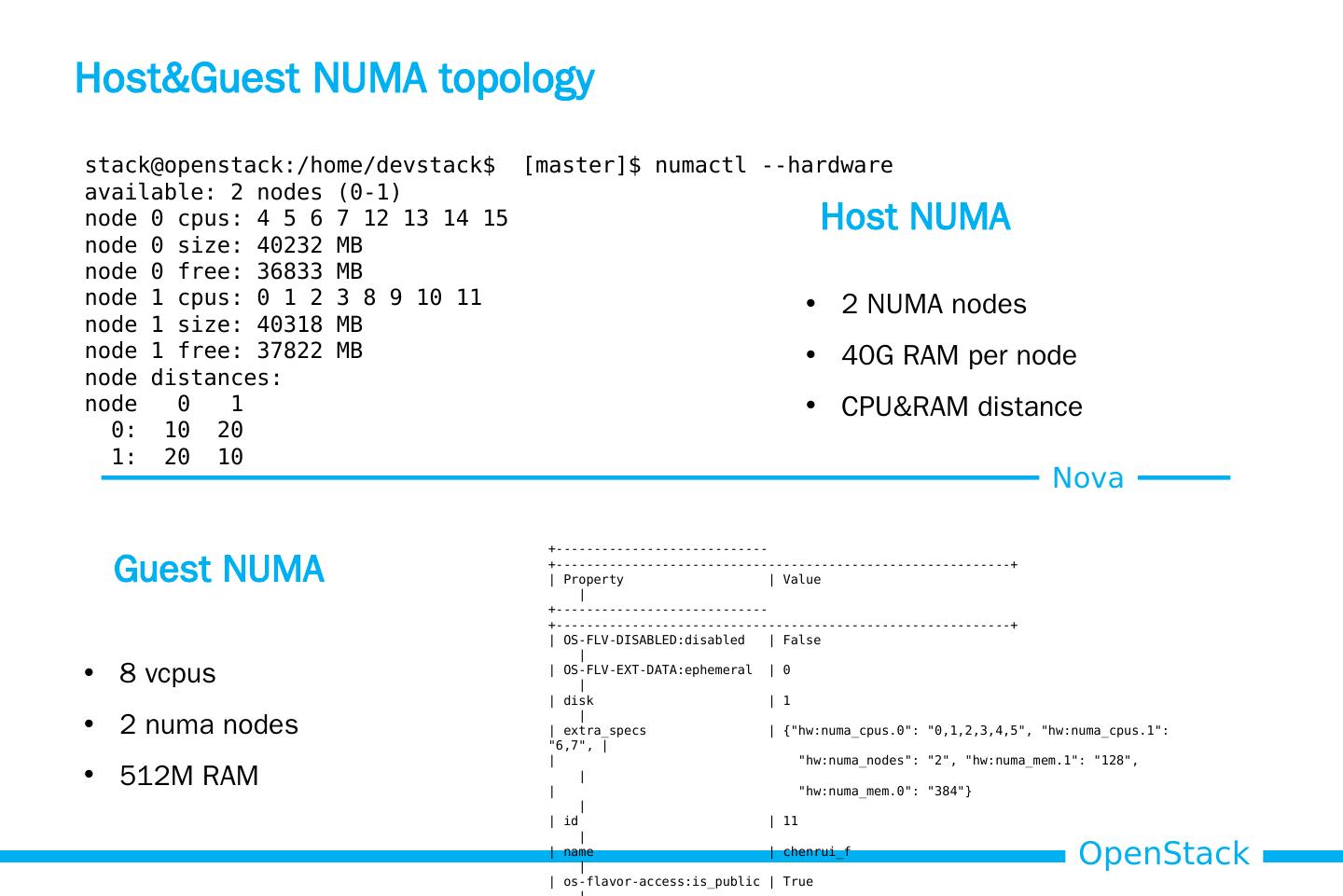

18. Host & Guest NUMA topology (Nova)

Host NUMA: 2 NUMA nodes, 40G RAM per node, CPU & RAM distance

    stack@openstack:/home/devstack$ [master]$ numactl --hardware
    available: 2 nodes (0-1)
    node 0 cpus: 4 5 6 7 12 13 14 15
    node 0 size: 40232 MB
    node 0 free: 36833 MB
    node 1 cpus: 0 1 2 3 8 9 10 11
    node 1 size: 40318 MB
    node 1 free: 37822 MB
    node distances:
    node   0   1
      0:  10  20
      1:  20  10

Guest NUMA: 8 vcpus, 2 NUMA nodes, 512M RAM

    +----------------------------+------------------------------------------------------------+
    | Property                   | Value                                                      |
    +----------------------------+------------------------------------------------------------+
    | OS-FLV-DISABLED:disabled   | False                                                      |
    | OS-FLV-EXT-DATA:ephemeral  | 0                                                          |
    | disk                       | 1                                                          |
    | extra_specs                | {"hw:numa_cpus.0": "0,1,2,3,4,5", "hw:numa_cpus.1": "6,7", |
    |                            |  "hw:numa_nodes": "2", "hw:numa_mem.1": "128",             |
    |                            |  "hw:numa_mem.0": "384"}                                   |
    | id                         | 11                                                         |
    | name                       | chenrui_f                                                  |
    | os-flavor-access:is_public | True                                                       |
    | ram                        | 512                                                        |
    | rxtx_factor                | 1.0                                                        |
    | swap                       |                                                            |
    | vcpus                      | 8                                                          |
    +----------------------------+------------------------------------------------------------+
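To work with the host topology programmatically, the `numactl --hardware` output can be parsed into per-node CPU lists and memory sizes. The Python sketch below does this with regular expressions; the function name `parse_numactl_hardware` and the resulting dictionary layout are assumptions made for illustration, and the distance matrix is deliberately ignored.

```python
import re

SAMPLE = """\
available: 2 nodes (0-1)
node 0 cpus: 4 5 6 7 12 13 14 15
node 0 size: 40232 MB
node 0 free: 36833 MB
node 1 cpus: 0 1 2 3 8 9 10 11
node 1 size: 40318 MB
node 1 free: 37822 MB
node distances:
node   0   1
  0:  10  20
  1:  20  10
"""


def parse_numactl_hardware(text):
    """Return {node_id: {"cpus": [...], "size_mb": int, "free_mb": int}}."""
    nodes = {}
    for raw in text.splitlines():
        line = raw.strip()
        # "node 0 cpus: 4 5 6 7 ..." -> list of pCPU ids on that node
        m = re.match(r"node (\d+) cpus:\s*(.*)$", line)
        if m:
            cpus = [int(c) for c in m.group(2).split()]
            nodes.setdefault(int(m.group(1)), {})["cpus"] = cpus
            continue
        # "node 0 size: 40232 MB" / "node 0 free: 36833 MB"
        m = re.match(r"node (\d+) (size|free):\s*(\d+) MB$", line)
        if m:
            key = m.group(2) + "_mb"
            nodes.setdefault(int(m.group(1)), {})[key] = int(m.group(3))
    return nodes


host = parse_numactl_hardware(SAMPLE)
```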

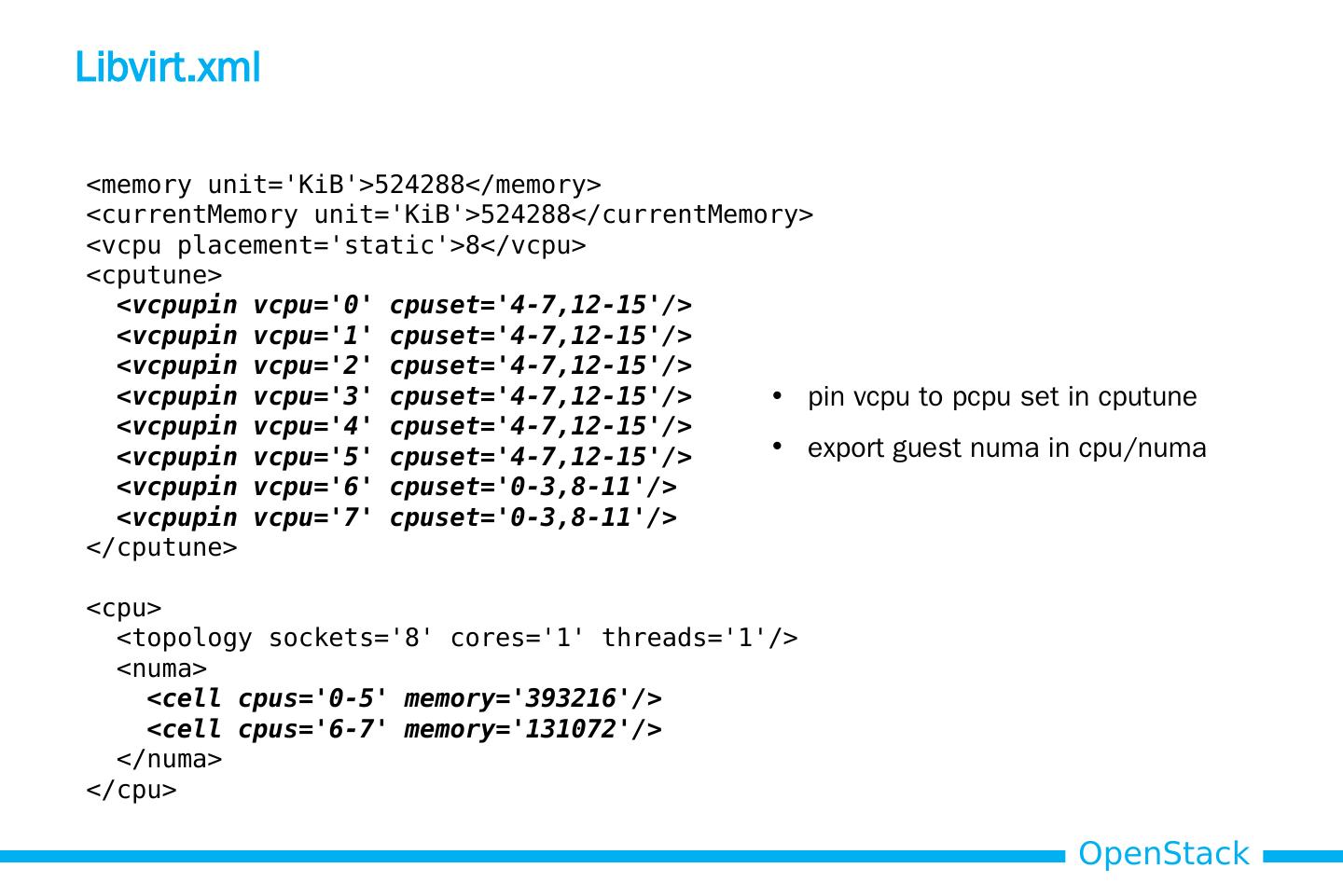

19. Libvirt.xml

- Pin each vCPU to a pCPU set in cputune
- Expose the guest NUMA topology in cpu/numa

Relevant excerpt:

    <memory unit='KiB'>524288</memory>
    <currentMemory unit='KiB'>524288</currentMemory>
    <vcpu placement='static'>8</vcpu>
    <cputune>
      <vcpupin vcpu='0' cpuset='4-7,12-15'/>
      <vcpupin vcpu='1' cpuset='4-7,12-15'/>
      <vcpupin vcpu='2' cpuset='4-7,12-15'/>
      <vcpupin vcpu='3' cpuset='4-7,12-15'/>
      <vcpupin vcpu='4' cpuset='4-7,12-15'/>
      <vcpupin vcpu='5' cpuset='4-7,12-15'/>
      <vcpupin vcpu='6' cpuset='0-3,8-11'/>
      <vcpupin vcpu='7' cpuset='0-3,8-11'/>
    </cputune>
    <cpu>
      <topology sockets='8' cores='1' threads='1'/>
      <numa>
        <cell cpus='0-5' memory='393216'/>
        <cell cpus='6-7' memory='131072'/>
      </numa>
    </cpu>
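The relationship between the guest-to-host mapping and these XML elements can be sketched in a few lines of Python using the standard `xml.etree.ElementTree` module. This is an illustration, not the libvirt driver's code: `format_cpuset`, `build_numa_xml`, and the input dictionaries are hypothetical names, and memory is converted from MB to KiB to match the `<cell>` values in the excerpt.

```python
import xml.etree.ElementTree as ET


def format_cpuset(cpus):
    """Collapse a list of CPU ids into range notation, e.g. [4, 5, 6, 7, 12] -> "4-7,12"."""
    cpus = sorted(cpus)
    if not cpus:
        return ""
    ranges = []
    start = prev = cpus[0]
    for c in cpus[1:]:
        if c == prev + 1:
            prev = c
            continue
        ranges.append((start, prev))
        start = prev = c
    ranges.append((start, prev))
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def build_numa_xml(guest_cells, host_cpus_by_guest_cell):
    """Return (<cputune> XML, <numa> XML) strings for the given mapping."""
    cputune = ET.Element("cputune")
    numa = ET.Element("numa")
    for cell in guest_cells:
        # Every vCPU of a guest cell floats over the pCPU set of its host node.
        cpuset = format_cpuset(host_cpus_by_guest_cell[cell["id"]])
        for vcpu in cell["cpus"]:
            ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=cpuset)
        ET.SubElement(
            numa, "cell",
            cpus=format_cpuset(cell["cpus"]),
            memory=str(cell["mem_mb"] * 1024),  # MB -> KiB
        )
    return (ET.tostring(cputune, encoding="unicode"),
            ET.tostring(numa, encoding="unicode"))


guest_cells = [
    {"id": 0, "cpus": [0, 1, 2, 3, 4, 5], "mem_mb": 384},
    {"id": 1, "cpus": [6, 7], "mem_mb": 128},
]
host_cpus_by_guest_cell = {
    0: [4, 5, 6, 7, 12, 13, 14, 15],  # host NUMA node 0
    1: [0, 1, 2, 3, 8, 9, 10, 11],    # host NUMA node 1
}
cputune_xml, numa_xml = build_numa_xml(guest_cells, host_cpus_by_guest_cell)
```

Note that the pinning targets a whole pCPU set rather than a single pCPU, so the host scheduler may still move a vCPU thread between the CPUs of that node.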

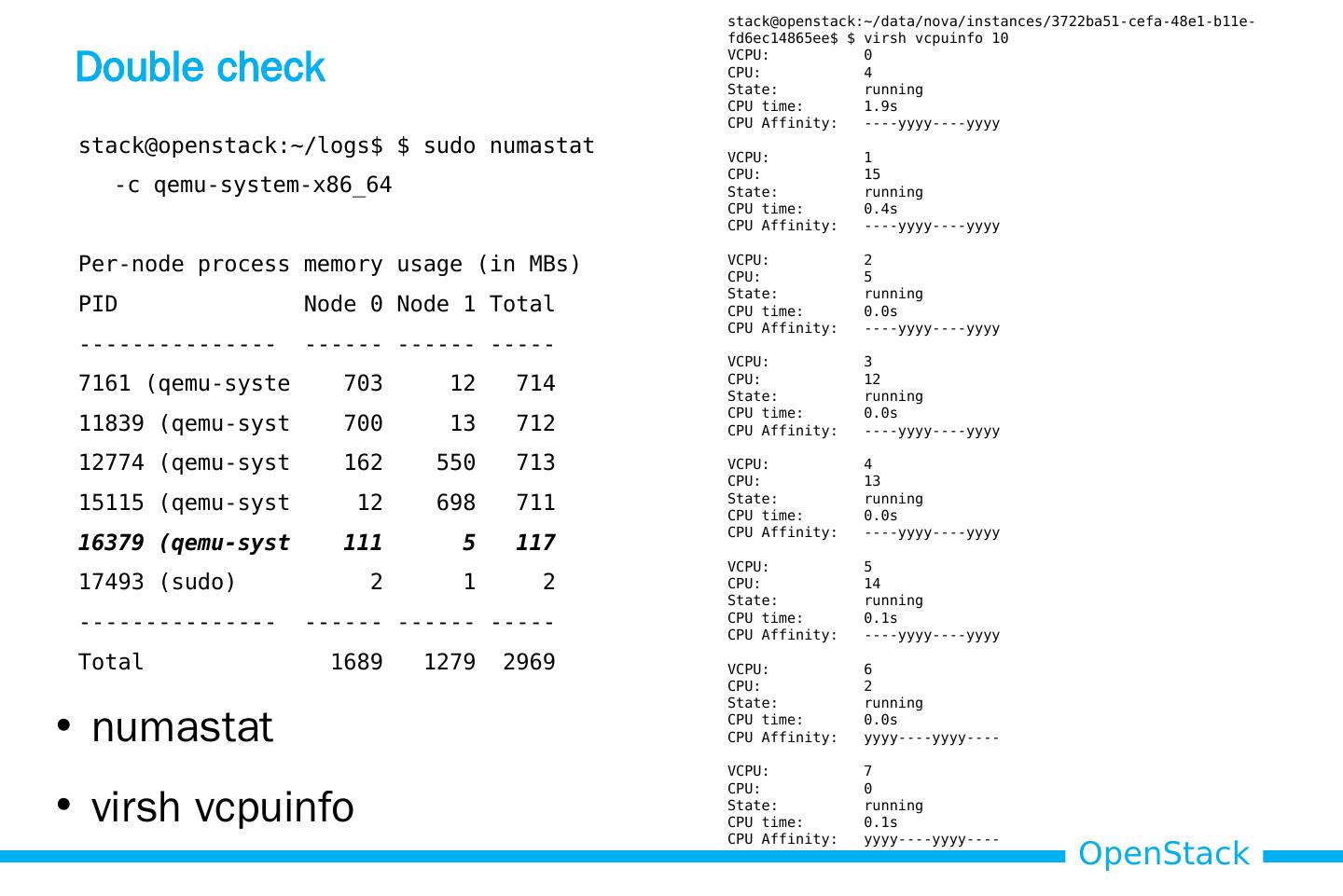

20. Double check

Check memory placement with numastat:

    stack@openstack:~/logs$ sudo numastat -c qemu-system-x86_64
    Per-node process memory usage (in MBs)
    PID              Node 0 Node 1 Total
    ---------------  ------ ------ -----
    7161 (qemu-syste    703     12   714
    11839 (qemu-syst    700     13   712
    12774 (qemu-syst    162    550   713
    15115 (qemu-syst     12    698   711
    16379 (qemu-syst    111      5   117
    17493 (sudo)          2      1     2
    ---------------  ------ ------ -----
    Total              1689   1279  2969

Check vCPU placement with virsh vcpuinfo:

    stack@openstack:~/data/nova/instances/3722ba51-cefa-48e1-b11e-fd6ec14865ee$ virsh vcpuinfo 10
    VCPU:           0
    CPU:            4
    State:          running
    CPU time:       1.9s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           1
    CPU:            15
    State:          running
    CPU time:       0.4s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           2
    CPU:            5
    State:          running
    CPU time:       0.0s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           3
    CPU:            12
    State:          running
    CPU time:       0.0s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           4
    CPU:            13
    State:          running
    CPU time:       0.0s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           5
    CPU:            14
    State:          running
    CPU time:       0.1s
    CPU Affinity:   ----yyyy----yyyy

    VCPU:           6
    CPU:            2
    State:          running
    CPU time:       0.0s
    CPU Affinity:   yyyy----yyyy----

    VCPU:           7
    CPU:            0
    State:          running
    CPU time:       0.1s
    CPU Affinity:   yyyy----yyyy----
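Reading the affinity masks by eye is error-prone, so the check can be sketched in Python: decode each `CPU Affinity` mask into a list of pCPU ids and look up which host NUMA node contains them all. The helper names `affinity_to_cpus` and `node_for_affinity` are hypothetical, and the host node layout is taken from the `numactl --hardware` output on slide 18.

```python
def affinity_to_cpus(mask):
    """Decode a virsh affinity mask: position i is 'y' when pCPU i is allowed."""
    compact = mask.replace(" ", "")
    return [i for i, flag in enumerate(compact) if flag == "y"]


def node_for_affinity(mask, host_nodes):
    """Return the id of the host NUMA node whose CPUs cover the whole mask, else None."""
    allowed = set(affinity_to_cpus(mask))
    for node_id, cpus in host_nodes.items():
        if allowed and allowed <= set(cpus):
            return node_id
    return None


# pCPU layout of the two host NUMA nodes.
host_nodes = {
    0: [4, 5, 6, 7, 12, 13, 14, 15],
    1: [0, 1, 2, 3, 8, 9, 10, 11],
}

# vCPUs 0-5 report the first mask, vCPUs 6-7 the second.
node_of_vcpu0 = node_for_affinity("----yyyy----yyyy", host_nodes)
node_of_vcpu6 = node_for_affinity("yyyy----yyyy----", host_nodes)
```

With the masks above, vCPUs 0-5 resolve to host node 0 and vCPUs 6-7 to host node 1, which matches the pinning in libvirt.xml.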

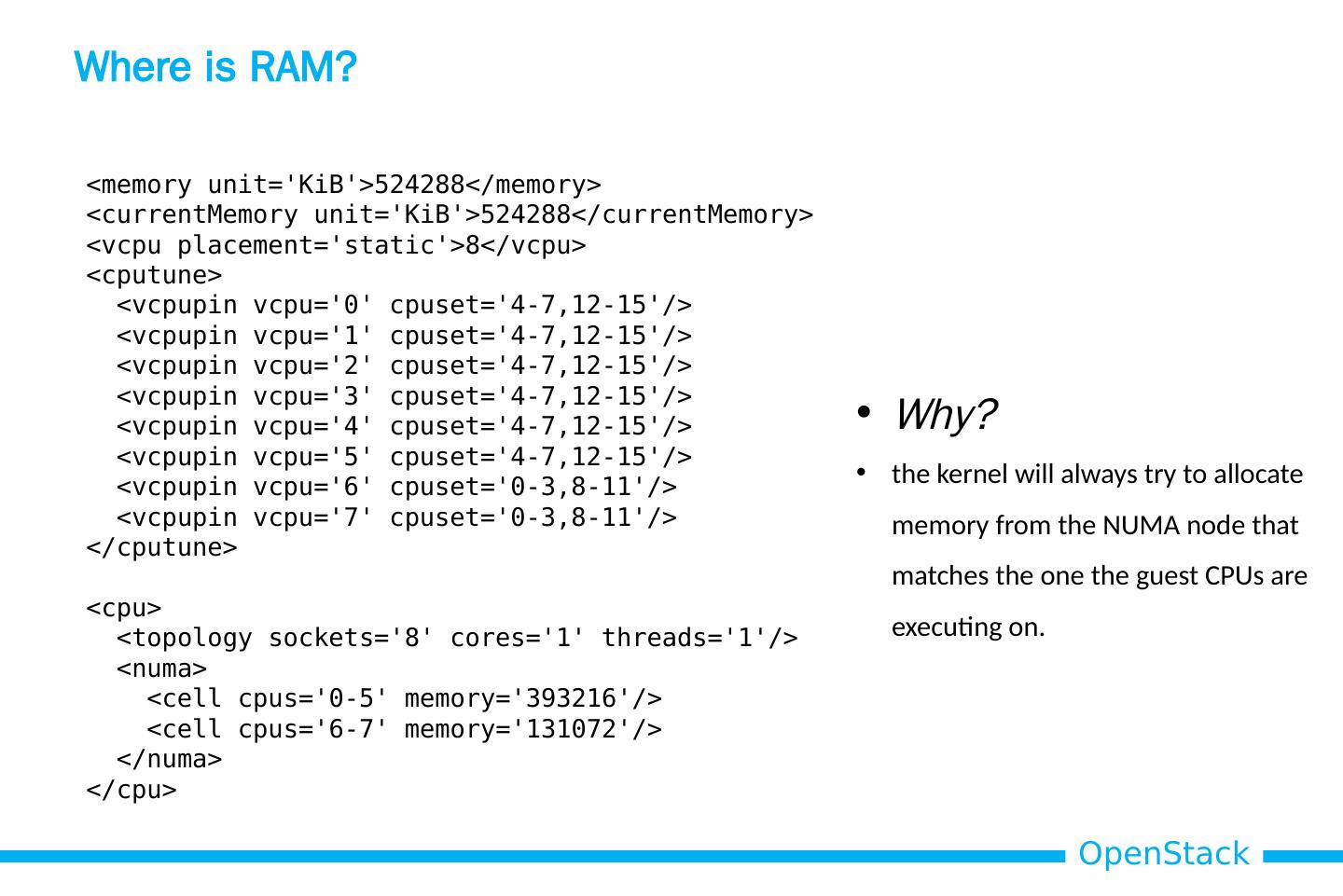

21. Where is RAM? Why?

The kernel will always try to allocate memory from the NUMA node that matches the one the guest CPUs are executing on.

The libvirt.xml contains vCPU pinning and the guest NUMA cells:

    <memory unit='KiB'>524288</memory>
    <currentMemory unit='KiB'>524288</currentMemory>
    <vcpu placement='static'>8</vcpu>
    <cputune>
      <vcpupin vcpu='0' cpuset='4-7,12-15'/>
      <vcpupin vcpu='1' cpuset='4-7,12-15'/>
      <vcpupin vcpu='2' cpuset='4-7,12-15'/>
      <vcpupin vcpu='3' cpuset='4-7,12-15'/>
      <vcpupin vcpu='4' cpuset='4-7,12-15'/>
      <vcpupin vcpu='5' cpuset='4-7,12-15'/>
      <vcpupin vcpu='6' cpuset='0-3,8-11'/>
      <vcpupin vcpu='7' cpuset='0-3,8-11'/>
    </cputune>
    <cpu>
      <topology sockets='8' cores='1' threads='1'/>
      <numa>
        <cell cpus='0-5' memory='393216'/>
        <cell cpus='6-7' memory='131072'/>
      </numa>
    </cpu>

22. [3] Nova & NUMA & ?: I/O NUMA scheduling, vCPU pinning, large pages, etc.

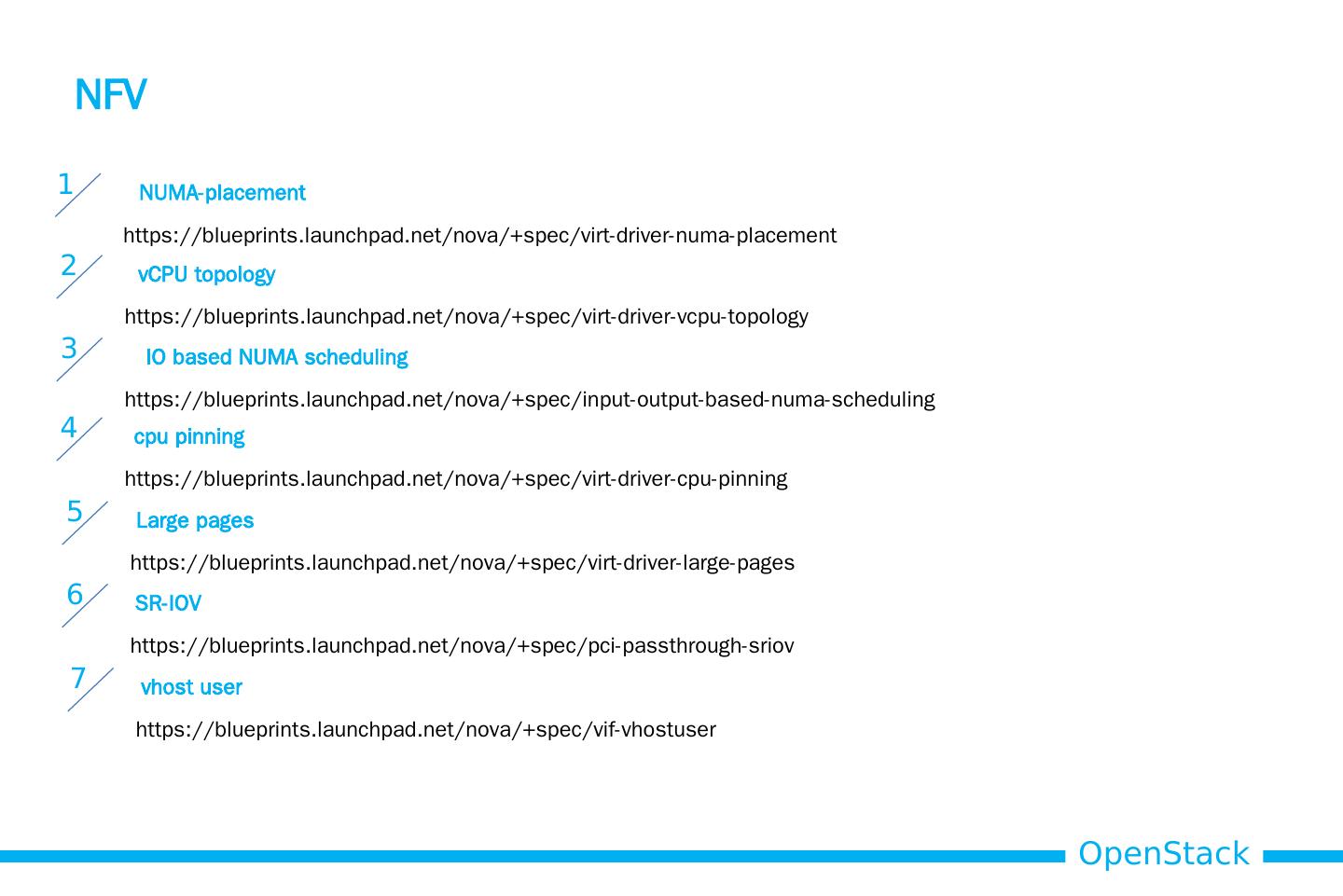

23. NFV

1. NUMA placement: https://blueprints.launchpad.net/nova/+spec/virt-driver-numa-placement
2. vCPU topology: https://blueprints.launchpad.net/nova/+spec/virt-driver-vcpu-topology
3. I/O based NUMA scheduling: https://blueprints.launchpad.net/nova/+spec/input-output-based-numa-scheduling
4. CPU pinning: https://blueprints.launchpad.net/nova/+spec/virt-driver-cpu-pinning
5. Large pages: https://blueprints.launchpad.net/nova/+spec/virt-driver-large-pages
6. SR-IOV: https://blueprints.launchpad.net/nova/+spec/pci-passthrough-sriov
7. vhost-user: https://blueprints.launchpad.net/nova/+spec/vif-vhostuser

24. Thanks!