- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/u227/Efficiently_Compiling_Efficient_Query_Plans?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

Efficiently Compiling Efficient Query Plans

展开查看详情

1 . Efficiently Compiling Efficient Query Plans for Modern Hardware Thomas Neumann Technische Universitat ¨ Munchen ¨ Munich, Germany neumann@in.tum.de ABSTRACT As main memory grows, query performance is more and more determined by the raw CPU costs of query processing itself. The classical iterator style query processing technique is very simple and flexible, but shows poor performance on modern CPUs due to lack of locality and frequent instruction mis- predictions. Several techniques like batch oriented processing or vectorized tuple processing have been proposed in the past to improve this situation, but even these techniques are frequently out-performed by hand-written execution plans. In this work we present a novel compilation strategy that translates a query into compact and efficient machine code Figure 1: Hand-written code vs. execution engines using the LLVM compiler framework. By aiming at good for TPC-H Query 1 (Figure 3 of [16]) code and data locality and predictable branch layout the resulting code frequently rivals the performance of hand- CPUs. Third, this model often results in poor code locality written C++ code. We integrated these techniques into the and complex book-keeping. This can be seen by considering HyPer main memory database system and show that this a simple table scan over a compressed relation. As the tuples results in excellent query performance while requiring only must be produced one at a time, the table scan operator has modest compilation time. to remember where in the compressed stream the current 1. INTRODUCTION tuple is and jump to the corresponding decompression code when asked for the next tuple. Most database systems translate a given query into an These observations have led some modern systems to a expression in a (physical) algebra, and then start evaluating departure from this pure iterator model, either internally this algebraic expression to produce the query result. The (e.g., by internally decompressing a number of tuples at traditional way to execute these algebraic plans is the iterator once and then only iterating over the decompressed data), or model [8], sometimes also called Volcano-style processing [4]: externally by producing more than one tuple during each next Every physical algebraic operator conceptually produces a call [11] or even producing all tuples at once [1]. This block- tuple stream from its input, and allows for iterating over this oriented processing amortizes the costs of calling another tuple stream by repeatedly calling the next function of the operator over the large number of produced tuples, such operator. that the invocation costs become negligible. However, it also This is a very nice and simple interface, and allows for eliminates a major strength of the iterator model, namely the easy combination of arbitrary operators, but it clearly comes ability to pipeline data. Pipelining means that an operator from a time when query processing was dominated by I/O can pass data to its parent operator without copying or and CPU consumption was less important: First, the next otherwise materializing the data. Selections, for example, function will be called for every single tuple produced as are pipelining operators, as they only pass tuples around intermediate or final result, i.e., millions of times. Second, without modifying them. But also more complex operators the call to next is usually a virtual call or a call via a function like joins can be pipelined, at least on one of their input pointer. Consequently, the call is even more expensive than sides. When producing more than one tuple during a call a regular call and degrades the branch prediction of modern this pure pipelining usually cannot be used any more, as the tuples have to be materialized somewhere to be accessible. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are This materialization has other advantages like allowing for not made or distributed for profit or commercial advantage and that copies vectorized operations [2], but in general the lack of pipelining bear this notice and the full citation on the first page. To copy otherwise, to is very unfortunate as it consumes more memory bandwidth. republish, to post on servers or to redistribute to lists, requires prior specific An interesting observation in this context is that a hand- permission and/or a fee. Articles from this volume were invited to present written program clearly outperforms even very fast vectorized their results at The 37th International Conference on Very Large Data Bases, systems, as shown in Figure 1 (originally from [16]). In a August 29th - September 3rd 2011, Seattle, Washington. Proceedings of the VLDB Endowment, Vol. 4, No. 9 way that is to be expected, of course, as a human might use Copyright 2011 VLDB Endowment 2150-8097/11/06... $ 10.00. tricks that database management systems would never come 539

2 .up with. On the other hand the query in this figure is a excellent performance, but, as shown in Figure 1, still does simple aggregation query, and one would expect that there not reach the speed of hand-written code. is only one reasonable way to evaluate this query. Therefore Another way to improve query processing is to compile the existing query evaluation schemes seem to be clearly the query into some kind of executable format, instead of suboptimal. using interpreter structures. In [13] the authors proposed The algebraic operator model is very useful for reasoning compiling the query logic into Java Bytecode, which allows over the query, but it is not necessarily a good idea to exhibit for using the Java JVM. However this is relatively heavy the operator structure during query processing itself. In this weight, and they still use the iterator model, which limits paper we therefore propose a query compilation strategy that the benefits. Recent work on the HIQUE system proposed differs from existing approaches in several important ways: compiling the query into C code using code templates for each operator [6]. HIQUE eliminates the iterator model by 1. Processing is data centric and not operator centric. inlining result materialization inside the operator execution. Data is processed such that we can keep it in CPU However, contrary to our proposal, the operator boundaries registers as long as possible. Operator boundaries are are still clearly visible. Furthermore, the costs of compiling blurred to achieve this goal. 2. Data is not pulled by operators but pushed towards the generated C code are quite high [6]. the operators. This results in much better code and Besides these more general approaches, many individual data locality. techniques have been proposed to speed up query processing. 3. Queries are compiled into native machine code using One important line of work is reducing the impact of branch- the optimizing LLVM compiler framework [7]. ing, where [14] showed how to combine conjunctive predicates such that the trade-off between number of branches and num- The overall framework produces code that is very friendly to ber of evaluated predicates is optimal. Other work has looked modern CPU architectures and, as a result, rivals the speed at processing individual expressions more efficiently by using of hand-coded query execution plans. In some cases we can SIMD instructions [12, 15]. even outperform hand-written code, as using the LLVM as- sembly language allows for some tricks that are hard to do in 3. THE QUERY COMPILER a high-level programming language like C++. Furthermore, by using an established compiler framework, we benefit from 3.1 Query Processing Architecture future compiler, code optimization, and hardware improve- We propose a very different architecture for query process- ments, whereas other approaches that integrate processing ing (and, accordingly, for query compilation). In order to optimizations into the query engine itself will have to update maximize the query processing performance we have to make their systems manually. We demonstrate the impact of these sure that we maximize data and code locality. To illustrate techniques by integrating them into the HyPer main-memory this point, we first give a definition of pipeline-breaker that database management system [5] and performing various is more restrictive than in standard database systems: An comparisons with other systems. algebraic operator is a pipeline breaker for a given input side The rest of this paper is structured as follows: We first if it takes an incoming tuple out of the CPU registers. It is discuss related work in Section 2. We then explain the overall a full pipeline breaker if it materializes all incoming tuples architecture of our compilation framework in Section 3. The from this side before continuing processing. actual code generation for algebraic operators is discussed in This definition is slightly hand-waving, as a single tuple more details in Section 4. We explain how different advanced might already be too large to fit into the available CPU processing techniques can be integrated into the framework registers, but for now we pretend that we have a sufficient in Section 5. We then show an extensive evaluation of our number of registers for all input attributes. We will look techniques in Section 6 and draw conclusions in Section 7. at this in more detail in Section 4. The main point is that we consider spilling data to memory as a pipeline-breaking 2. RELATED WORK operation. During query processing, all data should be kept The classical iterator model for query evaluation was pro- in CPU registers as long as possible. posed quite early [8], and was made popular by the Volcano Now the question is, how can we organize query processing system [4]. Today, it is the most commonly used execution such that the data can be kept in CPU registers as long as strategy, as it is flexible and quite simple. As long as query possible? The classical iterator model is clearly ill-suited processing was dominated by disk I/O the iterator model for this, as tuples are passed via function calls to arbitrary worked fine. However, as the CPU consumption became an functions – which always results in evicting the register issue, some systems tried to reduce the high calling costs contents. The block-oriented execution models have fewer of the iterator model by passing blocks of tuples between passes across function boundaries, but they clearly also break operators [11]. This greatly reduces the number of function the pipeline as they produce batches of tuples beyond register invocations, but causes additional materialization costs. capacity. In fact any iterator-style processing paradigm that Modern main-memory database systems look at the prob- pulls data up from the input operators risks breaking the lem again, as for them CPU costs is a critical issue. The pipeline, as, by offering an iterator-base view, it has to offer MonetDB system [1, 9] goes to the other extreme, and mate- a linearized access interface to the output of an arbitrarily rializes all intermediate results, which eliminates the need complex relational operator. Sometimes operators could to call an input operator repeatedly. Besides simplifying produce a certain small number of output tuples together operator interaction, materialization has other advantages, cheaply, without need for copying. too, but it also causes significant costs. The MonetDB/X100 We therefore reverse the direction of data flow control. system [1] (which evolved into VectorWise) selected a mid- Instead of pulling tuples up, we push them towards the con- dle ground by passing large vectors of data and evaluating sumer operators. While pushing tuples, we continue pushing queries in a vectorized manner on each chunk. This offers until we reach the next pipeline-breaker. As a consequence, 540

3 .select * initialize memory of Ba=b , Bc=z , and Γz from R1,R3, for each tuple t in R1 (select R2.z,count(*) if t.x = 7 from R2 materialize t in hash table of Ba=b where R2.y=3 for each tuple t in R2 group by R2.z) R2 if t.y = 3 where R1.x=7 and R1.a=R3.b and R2.z=R3.c aggregate t in hash table of Γz Figure 2: Example Query for each tuple t in Γz materialize t in hash table of Bz=c a=b a=b for each tuple t3 in R3 for each match t2 in Bz=c [t3 .c] x=7 for each match t1 in Ba=b [t3 .b] x=7 z=c z=c output t1 ◦ t2 ◦ t3 R1 R1 z;count(*) Figure 4: Compiled query for Figure 3 z;count(*) y=3 tuples in CPU registers and only access memory to retrieve y=3 new tuples or to materialize their results. Furthermore, we R2 R3 R2 R3 have very good code locality as small code fragments are original with pipeline boundaries working on large amounts of data in tight loops. As such, Figure 3: Example Execution Plan for Figure 2 we can expect to get very good performance from such an evaluation scheme. And indeed, as we will see in Section 6, data is always pushed from one pipeline-breaker into another such a query evaluation method greatly outperforms iterator- pipeline-breaker. Operators in-between leave the tuples in based evaluation. The main challenge now is to translate a CPU registers and are therefore very cheap to compute. Fur- given algebraic execution plan into such code fragments. We thermore, in a push-based architecture the complex control will first discuss the high-level translation in the next section, flow logic tends to be outside tight loops, which reduces and then explain the actual code generation in Section 4. register pressure. As the typical pipeline-breakers would 3.2 Compiling Algebraic Expressions have to materialize the tuples anyway, we produce execution plans that minimize the number of memory accesses. When looking at the query code in Figure 4, we notice As an illustrational example consider the execution plan in that the boundaries between operators are blurred. The Figure 3 (Γ denotes a group by operator). The corresponding first fragment for example combines the scan of R1 , the SQL query is shown in Figure 2. It selects some tuples selection σx=7 , and the build part of Bc=z into one code from R2 , groups them by z, joins the result with R3 , and fragment. The query execution code is no longer operator joins that result with some tuples from R1 . In the classical centric but data centric: Each code fragment performs all operator model, the top-most join would produce tuples by actions that can be done within one part of the execution first asking its left input for tuples repeatedly, placing each of pipeline, before materializing the result into the next pipeline them in a hash table, and then asking its right input for tuples breaker. The individual operator logic can, and most likely and probing the hash table for each table. The input sides will, be spread out over multiple code fragments, which makes themselves would operate in a similar manner recursively. query compilation more difficult than usual. In addition, When looking at the data flow in this example more carefully, these code fragments have a very irregular structure. For we see that in principle the tuples are always passed from one example, for binary pipeline breakers materializing an input materialization point to another. The join a = b materializes tuple from the left will be very different from materializing an the tuples from its left input in a hash table, and receives input tuple from the right. In the iterator model everything them from a materialized state (namely from the scan of R1 ). is a simple next call, but here the complex operator logic The selection in between pipelines the tuples and performs no directly affects the code generation. It is important to note materialization. These materialization points (i.e., pipeline that this is an advantage, not a limitation of the approach! boundaries) are shown on the right hand side of Figure 3. The iterator model has a nice, simple interface, but it pays As we have to materialize the tuples anyway at some point, for this by using virtual function calls and frequent memory we therefore propose to compile the queries in a way that accesses. By exposing the operator structure, we can generate all pipelining operations are performed purely in CPU (i.e., near optimal assembly code, as we generate exactly the without materialization), and the execution itself goes from instructions that are relevant for the given situation, and we one materialization point to another. The corresponding can keep all relevant values in CPU registers. As we will compilation for our running example is shown in Figure 4. see below, the abstractions that are needed to keep the code (Note that we assume fully in-memory computation for now to maintainable and understandable exist, i.e., all operators keep the example readable.) Besides initialization, the code offer a uniform interface, but they exist only in the query consists of four fragments that correspond to the pipeline compiler itself. The generated code exposes all the details (for fragments in the algebraic plan: The first fragment filters efficiency reasons), but that is fine, as the code is generated tuples from R1 and places them into the hashtable of Ba,b , anyway. the second does the same for R2 and Γz , and the third From the point of view of the query compiler the operators transfers the results from Γz into the hashtable of Bz=c . The offer an interface that is nearly as simple as in the iterator fourth and final fragment passes the tuples of R3 along the model. Conceptually each operator offers two functions: join hash tables and produces the result. All four fragments • produce() in themselves are strongly pipelining, as they can keep their • consume(attributes,source) 541

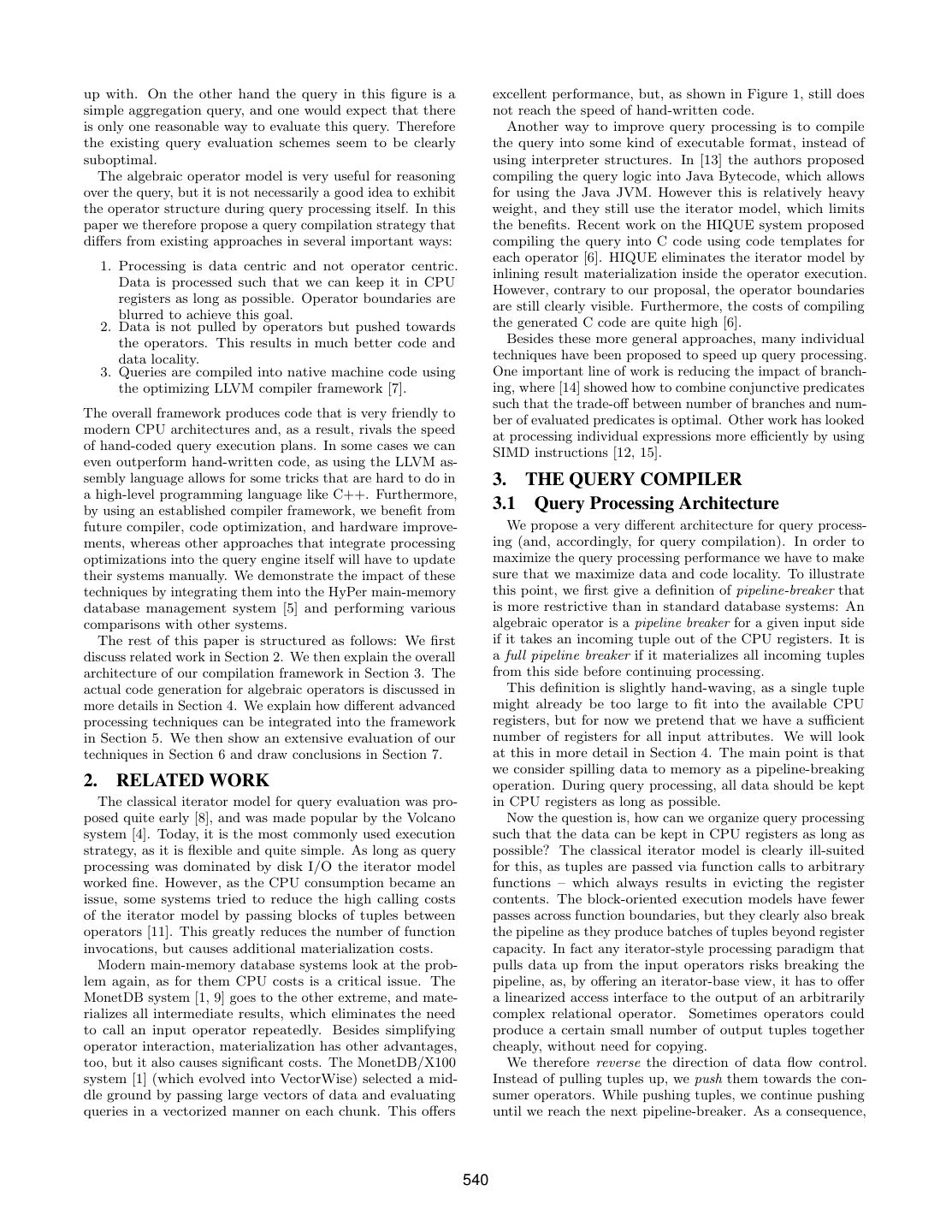

4 . B.produce B.left.produce; B.right.produce; B.consume(a,s) if (s==B.left) + print “materialize tuple in hash table”; C+ else print “for each match in hashtable[” +a.joinattr+“]”; C++ B.parent.consume(a+new attributes) σ.produce σ.input.produce σ.consume(a,s) print “if ”+σ.condition; σ.parent.consume(attr,σ) scan.produce print “for each tuple in relation” C++ scan scan.parent.consume(attributes,scan) Figure 5: A simple translation scheme to illustrate the produce/consume interaction Figure 6: Interaction of LLVM and C++ Conceptually, the produce function asks the operator to imented with generating C++ code from the query and produce its result tuples, which are then pushed towards the passing it through a compiler at runtime, loading the re- consuming operator by calling their consume functions. For sult as shared library. Compiling to C++ was attractive our running example, the query would be executed by calling as the C++ code could directly access the data structures Ba=b .produce. This produce function would then in itself call and the code of our database system, which is also written σx=7 .produce to fill its hash table, and the σ operator would in C++. However, it has several disadvantages. First, an call R1 .produce to access the relation. R1 is a leaf in the optimizing C++ compiler is really slow, compiling a complex operator tree, i.e., it can produce tuples on its own. Therefore query could take multiple seconds. Second, C++ does not it scans the relation R1 , and for each tuple loads the required offer total control over the generated code, which can lead attributes and calls σx=7 .consume(attributes, R1 ) to hand to suboptimal performance. In particular, overflow flags the tuple to the selection. The selection filters the tuples, and etc. are unavailable. Instead, we used the Low Level Vir- if it qualifies it passes it by calling Ba=b (attributes, σx=7 ). tual Machine (LLVM) compiler framework [7] to generate The join sees that it gets tuples from the left side, and thus portable assembler code, which can then be executed directly stores them in the hash table. After all tuples from R1 are using an optimizing JIT compiler provided by LLVM. While produced, the control flow goes back to the join, which will generating assembler code might sound daunting at first, pro- call Bc=z .produce to get the tuples from the probe side etc. ducing assembler code using LLVM is much more robust than However, this produce/consume interface is only a mental writing it manually. For example LLVM hides the problem model. These functions do not exist explicitly, they are only of register allocation by offering an unbounded number of used by the code generation. When compiling an SQL query, registers (albeit in Single Static Assignment form). We can the query is first processed as usual, i.e., the query is parsed, therefore pretend that we have a CPU register available for translated into algebra, and the algebraic expression is opti- every attribute in our tuple, which simplifies life considerably. mized. Only then do we deviate from the standard scheme. And the LLVM assembler is portable across machine architec- The final algebraic plan is not translated into physical al- tures, as only the LLVM JIT compiler translates the portable gebra that can be executed, but instead compiled into an LLVM assembler into architecture dependent machine code. imperative program. And only this compilation step uses the Furthermore, the LLVM assembler is strongly typed, which produce/consume interface internally to produce the required caught many bugs that were hidden in our original textual imperative code. This code generation model is illustrated C++ code generation. And finally LLVM is a full strength in Figure 5. It shows a very simple translation scheme that optimizing compiler, which produces extremely fast machine converts B, σ, and scans into pseudo-code. The readers can code, and usually requires only a few milliseconds for query convince themselves that applying the rules from Figure 5 to compilation, while C or C++ compilers would need seconds the operator tree in Figure 3 will produce the pseudo-code (see Section 6 and [6]). from Figure 4 (except for differences in variable names and Still, one does not want to implement the complete query memory initialization). The real translation code is signifi- processing logic in LLVM assembler. First, because writing cantly more complex, of course, as we have to keep track of assembler code is more tedious than using a high-level lan- the loaded attributes, the state of the operators involved, at- guage like C++, and second, because much of the database tribute dependencies in the case of correlated subqueries, etc., logic like index structures is written in C++ anyway. But but in principle this simple mapping already shows how we one can easily mix LLVM and C++, as C++ methods can can translate algebraic expressions into imperative code. We be called directly from LLVM and vice versa. (To the com- include a more detailed operator translation in Appendix A. piler, there is no difference between both types of code, as As these code fragments always operate on certain pieces of both result in native machine code and both have strongly data at a time, thus having very good locality, the resulting typed prototypes.) This results in a mixed execution model code proved to execute efficiently. which is metaphorically sketched in Figure 6. The complex part of the query processing (e.g., complex data structure 4. CODE GENERATION management or spilling to disk) is written in C++, and forms the cogwheels in Figure 6. The different operators are 4.1 Generating Machine Code connected together by LLVM code, which forms the chain in So far we have only discussed the translation of algebraic Figure 6. The C++ “cogwheels” are pre-compiled; only the expressions into pseudo-code, but in practice we want to LLVM “chain” for combining them is dynamically generated. compile the query into machine code. Initially we exper- Thereby we achieve very low query compilation times. In 542

5 .the concrete example, the complex part of the scan (e.g., data fragment (i.e., a sequence of tuples stored consecutively). locating data structures, figuring out what to scan next) The LLVM code first loads pointers to the columns that it is implemented in C++, and this C++ code “drives” the wants to access during processing. Then, it loops over all execution pipeline. But the tuple access itself and the further tuples contained in the current fragment (code omitted). For tuple processing (filtering, materialization in hash table) is each such tuple, it loads the attribute y into a register and implemented in LLVM assembler code. C++ code is called checks the predicate. If the predicate is false, it continues from time to time (like when allocating more memory), but looping. Otherwise, it loads the attribute z into a register and interaction of the C++ parts is controlled by LLVM. If com- computes a hash value. Using this hash value, it looks up the plex operators like sort are involved, control might go back corresponding hash entry (using the C++ data structures, fully into C++ at some point, but once the complex logic is which are visible in LLVM), and iterates over the entries over and tuples have to be processed in bulk, LLVM takes (code omitted). If no matching group is found, it checks if it over again. For optimal performance it is important that can assert sufficient free space to allocate a new group. If the hot path, i.e., the code that is executed for 99% of the not, it calls into a C++ function that provides new memory tuples, is pure LLVM. Calling C++ from time to time (e.g., and spills to disk as needed. This way, the hot code path when switching to a new page) is fine, the costs for that are remains purely within LLVM, and consists mainly of the code negligible, but the bulk of the processing has to be done in within the %then block plus the corresponding hash table LLVM. While staying in LLVM, we can keep the tuples in iteration. Note that the LLVM call directly calls the native CPU registers all the time, which is about as fast as we can C++ method (using the mangled name @ ZN...), there is expect to be. When calling an external function all registers no additional wrapper. Thus, C++ and LLVM can interact have to be spilled to memory, which is somewhat expensive. directly with each other without performance penalty. In absolute terms it is very cheap, of course, as the registers will be spilled on the stack, which is usually in cache, but if 4.3 Performance Tuning this is done millions of times it becomes noticeable. The LLVM code generated using the strategy sketched above is extremely fast. The main work is done in a tight 4.2 Complex Operators loop over the tuples, which allows for good memory pre- fetching and accurate branch prediction. In fact the code is While code generation for scans and selections is more or so fast that suddenly code fragments become a bottleneck less straightforward, some care is needed when generating that were relatively unimportant as long as the other code code for more complex operators like sort or join. The first was slow. One prominent example is hashing. For TPC-H thing to keep in mind is that contrary to the simple examples Query 1 (which is basically a single scan and a hash-based seen so far in the paper it is not possible or even desirable aggregation) for example more than 50% of the time of our to compile a complex query into a single function. This has initial plan was spent on hashing, even though we only hash multiple reasons. First, there is the pragmatic reason that two simple values. Another critical issue are branches. On the LLVM code will most likely call C++ code at some point modern CPUs, branches are very cheap as long as the branch that will take over the control flow. For example an external prediction works, i.e., as long as the branches are taken either sorting operator will produce the initial runs with LLVM, nearly never or nearly always. A branch that is taken with but will probably control the merge phase from within C++, a probability of 50% however ruins the branch prediction calling LLVM functions as needed. The second reason is that and is very expensive. Therefore the query compiler must inlining the complete query logic into a single function can produce code that allows for good branch prediction. lead to an exponential growth in code. For example outer These issues require some care when generating assembly joins will call their consumers in two different situations, first code. As mentioned before, we keep all tuple attributes in when they have found a match, and second, when producing (virtual) CPU registers. For strings we keep the length and NULL values. One could directly include the consumer code a pointer to the string itself in registers. In general we try to in both cases, but then a cascade of outer joins would lead to load attributes as late as possible, i.e., either in the moment an exponential growth in code. Therefore it makes sense to that we need that attribute or when we get it for free anyway define functions within LLVM itself, that can then be called because we have to access the corresponding memory. Similar from places within the LLVM code. Again, one has to make holds for computations of derived attributes. However when sure that the hot path does not cross a function boundary. these values are needed on the critical path (e.g., when Thus a pipelining fragment of the algebraic expression should accessing a hash table using a hash value), it makes sense result in one compact LLVM code fragment. to compute these values a bit earlier than strictly necessary This need for multiple functions affects the way that we in order to hide the latency of the computation. Similarly, generate code. In particular, we have to keep track of all branches should be laid out such that they are amenable attributes and remember if they are currently available in for ultra efficient CPU execution. For example the following registers. Materializing attributes in memory is a deliberate (high-level) code fragment is not very prediction friendly: decision, similar to spooling tuples to disk. Of course not from a performance point of view, materializing in memory is Entry* iter=hashTable[hash]; relatively fast, but from a code point of view materialization while (iter) { is a very complex step that should be avoided if possible. ... // inspect the entry Unfortunately the generated assembler code for real queries iter=iter->next; becomes complicated very quickly, which prevents us from } showing a complete plan here, but as illustration we include a tiny LLVM fragment that shows the main machinery for The problem is that the while mixes up two things, namely the Γz;count(∗) (σy=3 (R2 )) part of our running example in checking if an entry for this hash value exists at all, and Figure 7: The LLVM code is called by the C++ code for each checking if we reached the end of the collision list. The first 543

6 .define internal void @scanConsumer(%8∗ %executionState, %Fragment R2∗ %data) { body: ... %columnPtr = getelementptr inbounds %Fragment R2∗ %data, i32 0, i32 0 %column = load i32∗∗ %columnPtr, align 8 1. locate tuples in memory %columnPtr2 = getelementptr inbounds %Fragment R2∗ %data, i32 0, i32 1 %column2 = load i32∗∗ %columnPtr2, align 8 ... (loop over tuples , currently at %id, contains label %cont17) 2. loop over all tuples %yPtr = getelementptr i32∗ %column, i64 %id %y = load i32∗ %yPtr, align 4 3. filter y = 3 %cond = icmp eq i32 %y, 3 br i1 %cond, label %then, label %cont17 then: %zPtr = getelementptr i32∗ %column2, i64 %id 4. hash z %z = load i32∗ %zPtr, align 4 %hash = urem i32 %z, %hashTableSize %hashSlot = getelementptr %”HashGroupify::Entry”∗∗ %hashTable, i32 %hash %hashIter = load %”HashGroupify::Entry”∗∗ %hashSlot, align 8 %cond2 = icmp eq %”HashGroupify::Entry”∗ %hashIter, null 5. lookup in hash table (C++ data structure) br i1 %cond, label %loop20, label %else26 ... (check if the group already exists , starts with label %loop20) else26 : %cond3 = icmp le i32 %spaceRemaining, i32 8 6. not found, check space br i1 %cond, label %then28, label %else47 ... (create a new group, starts with label %then28) else47 : %ptr = call i8∗ @ ZN12HashGroupify15storeInputTupleEmj 7. full, call C++ to allocate mem or spill (%”HashGroupify”∗ %1, i32 hash, i32 8) ... (more loop logic) } Figure 7: LLVM fragment for the first steps of the query Γz;count(∗) (σy=3 (R2 )) case will nearly always be true, as we expect the hash table we have to process tuples linearly, one tuple at a time. Our to be filled, while the second case will nearly always be false, initial implementation pushes individual tuples, and this as our collision lists are very short. Therefore, the following already performs very well, but more advanced processing code fragment is more prediction friendly: techniques can be integrated very naturally in the general framework. We now look at several of them. Entry* iter=hashTable[hash]; Traditional block-wise processing [11] has the great disad- if (iter) do { vantage of creating additional memory accesses. However, ... // inspect the entry processing more than one tuple at once is indeed a very good iter=iter->next; idea, as long as we can keep the whole block in registers. In } while (iter); particular when using SIMD registers this is often the case. Processing more than one tuple at a time has several advan- Of course our code uses LLVM branches and not C++ tages: First, of course, it allows for using SIMD instructions loops, but the same is true there, branch prediction improves on modern CPUs [15], which can greatly speed up processing. significantly when producing code like this. And this code Second, it can help delay branching, as predicates can be layout has a noticeable impact on query processing, in our evaluated and combined without executing branches immedi- experiments just changing the branch structure improved ately [12, 14]. Strictly speaking the techniques from [14] are hash table lookups by more than 20%. very useful already for individual tuples, but the effect can be All these issues complicate code generation, of course. But even larger for blocks of tuples. This style of block process- overall the effort required to avoid these pitfalls is not too ing where values are packed into a (large) register fits very severe. The LLVM code is generated anyway, and spending naturally into our framework, as the operators always pass effort on the code generator once will pay off for all sub- register values to their consumers. LLVM directly allows for sequent queries. The code generator is relatively compact. modeling SIMD values as vector types, thus the impact on In our implementation the code generation for all algebraic the overall code generation framework are relatively minor. operators required for SQL-92 consists of about 11,000 lines SIMD instructions are a kind of inter-tuple parallelism, of code, which is not a lot. i.e., processing multiple tuples with one instruction. The second kind of parallelism relevant for modern CPUs is multi- 5. ADVANCED PARALLELIZATION TECH- core processing. Nearly all database systems will exploit NIQUES multi-core architectures for inter-query parallelism, but as In the previous sections we have discussed how to compile the number of cores available on modern CPUs increases, queries into data-centric execution programs. By organizing intra-query parallelism becomes more important. In prin- the data flow and the control flow such that tuples are ciple this is a well studied problem [10, 3], and is usually pushed directly from one pipeline breaker into another, and solved by partitioning the input of operators, processing by keeping data in registers as long as possible, we get each partition independently, and then merging the results excellent data locality. However, this does not mean that from all partitions. For our code generation framework this 544

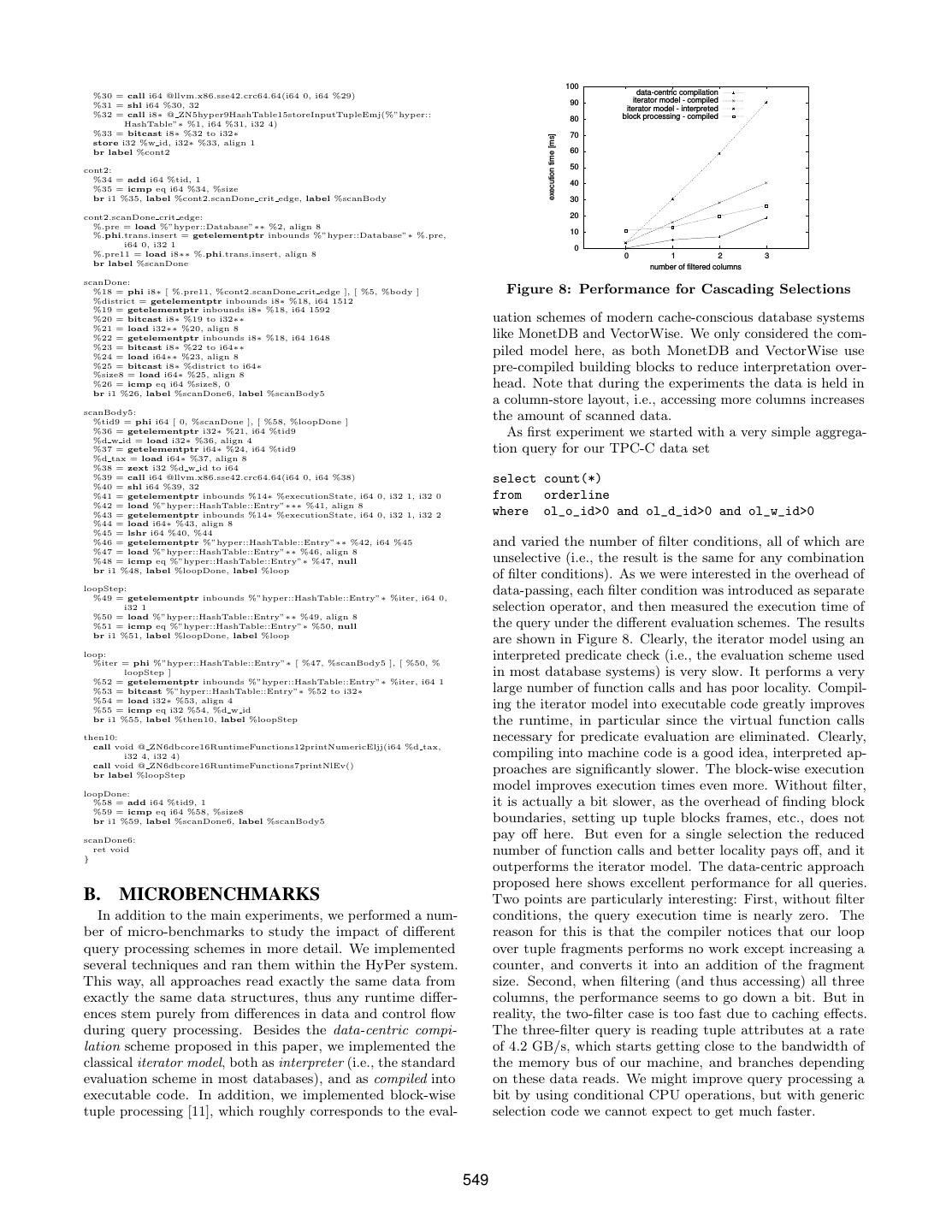

7 . HyPer + C++ HyPer + LLVM Q1 Q2 Q3 Q4 Q5 TPC-C [tps] 161,794 169,491 HyPer + C++ [ms] 142 374 141 203 1416 total compile time [s] 16.53 0.81 compile time [ms] 1556 2367 1976 2214 2592 HyPer + LLVM 35 125 80 117 1105 Table 1: OLTP Performance of Different Engines compile time [ms] 16 41 30 16 34 VectorWise [ms] 98 - 257 436 1107 kind of parallelism can be supported with nearly no code MonetDB [ms] 72 218 112 8168 12028 changes. As illustrated in Figure 7, the code always operates DB X [ms] 4221 6555 16410 3830 15212 on fragments of data, that are processed in a tight loop, and materialized into the next pipeline breaker. Usually, the Table 2: OLAP Performance of Different Engines fragments are determined by the storage system, but they could as well come from a parallelizing decision. Only some are shown in Table 1. As can be seen in the first row, the additional logic would be required to merge the individual performance, measured in transactions per second, of the results. Note that the “parallelizing decision” in itself is LLVM version is slightly better than performance of opti- a difficult problem! Spitting and merging data streams is mized C++ code. The difference is small, though, as most of expensive, and the optimizer has to be careful about intro- the TPC-C transactions are relatively simple and touch less ducing parallelism. This is beyond the scope of this paper. than 30 tuples. More interesting is the compile time, which But for future work it is a very relevant problem, as the covers all TPC-C scripts (in a PL/SQL style script language). number of cores is increasing. Compiling the generated C++ code is more than a factor of ten slower than using LLVM, and results in (slightly) 6. EVALUATION worse performance, which is a strong argument for our query compilation techniques. We have implemented the techniques proposed in this pa- For the OLAP part, we ran the TPC-CH queries as pre- per both in the HyPer main-memory database management pared queries and measured the warm execution time. The systems [5], and in a disk-based DBMS. We found that the results for the first five queries are shown in Table 2 (Q2 techniques work excellent, both when operating purely in triggered a bug in VectorWise). DB X is clearly much slower memory and when spooling to disk if needed. However it than the other systems, but this is not surprising, as it was de- is difficult to precisely measure the impact our compilation signed as a general purpose disk-based system (even though techniques have relative to other approaches, as query per- here the data fits into main memory and we measure warm formance is greatly affected by other effects like differences execution times). The other systems are all much faster, but in storage systems, too. The evaluation is therefore split into HyPer with the LLVM code generation is frequently another two parts: We include a full systems comparison here, includ- factor 2-4 faster, depending on the query. The comparison ing an analysis of the generated code. A microbenchmark between the C++ backend and the LLVM backend is par- for specific operator behavior is included in Appendix B. ticularly interesting here. First, while the C++ version is In the system comparison we include experiments run on reasonably fast, the compact code generated by the LLVM MonetDB 1.36.5, Ingres VectorWise 1.0, and a commercial backend is significantly faster. This is less noticeable for Q5, database system we shall call DB X. All experiments were which is dominated by joins, but for the other queries, in conducted on a Dual Intel X5570 Quad-Core-CPU with particular the aggregation query Q1, the differences are large. 64GB main memory, Red Hat Enterprise Linux 5.4. Our Q1 highlights this very well, as in principle the query is very C++ code was compiled using gcc 4.5.2, and the machine simple, just one scan and an aggregation. The corresponding code was produced using LLVM 2.8. The optimization levels C++ code therefore looks very natural and efficient, but are explained in more detail in Appendix C. simply cannot compete with the LLVM version that tries to keep everything in registers. The second observation is 6.1 Systems Comparison that even though the queries are reasonably fast when cross- The HyPer system in which we integrated our query com- compiled into C++, the compile time itself is unacceptable, pilation techniques is designed as a hybrid OLTP and OLAP which was part of the reason why we looked at alternatives system, i.e., it can handle both kinds of workloads concur- for generating C++ code. The compile time for the LLVM rently. We therefore used the TPC-CH benchmark from version however is reasonably modest (the numbers include [5] for experiments. For the OLTP side it runs a TPC-C all steps necessary for converting the SQL string into exe- benchmark, and for the OLAP side it executes the 22 TPC-H cutable machine code, not only the LLVM code generation). queries adapted to the (slightly extended) TPC-C schema. Changing the backend therefore clearly payed off for HyPer, The first five queries are included in Appendix D. As we are both due to query processing itself and due to compile times. mainly interested in an evaluation of raw query processing speed here, we ran a setup without concurrency, i.e., we 6.2 Code Quality loaded 12 warehouses, and then executed the TPC-C trans- Another interesting point is the quality of the generated actions single-threaded and without client wait times. Simi- LLVM code. As shown above the raw performance is obvi- larly the OLAP queries are executed on the 12 warehouses ously good, but it is interesting to see how the generated single-threaded and without concurrent updates. What is machine code performs regarding branching and cache ef- interesting for the comparison is that HyPer originally com- fects. To study these effects, we ran all five queries using piled the queries into C++ code using hand-written code the callgrind tool of valgrind 3.6.0, which uses binary instru- fragments, which allows us to estimate the impact LLVM mentation to observe branches and cache effects. We used has relative to C++ code. callgrind control to limit profiling to query processing itself. We ran the OLTP part only in HyPer, as the other sys- In the experiment we compared the LLVM version of HyPer tems were not designed for OLTP workloads. The results with MonetDB. MonetDB performs operations in compact, 545

8 . Q1 Q2 Q3 Q4 Q5 LLVM MonetDB LLVM MonetDB LLVM MonetDB LLVM MonetDB LLVM MonetDB branches 19,765,048 144,557,672 37,409,113 114,584,910 14,362,660 127,944,656 32,243,391 408,891,838 11,427,746 333,536,532 mispredicts 188,260 456,078 6,581,223 3,891,827 696,839 1,884,185 1,182,202 6,577,871 639 6,726,700 I1 misses 2,793 187,471 1,778 146,305 791 386,561 508 290,894 490 2,061,837 D1 misses 1,764,937 7,545,432 10,068,857 6,610,366 2,341,531 7,557,629 3,480,437 20,981,731 776,417 8,573,962 L2d misses 1,689,163 7,341,140 7,539,400 4,012,969 1,420,628 5,947,845 3,424,857 17,072,319 776,229 7,552,794 I refs 132 mil 1,184 mil 313 mil 760 mil 208 mil 944 mil 282 mil 3,140 mil 159 mil 2,089 mil Table 3: Branching and Cache Locality tight loops, and can therefore be expected to have a low 8. REFERENCES number of branch mispredictions. The results are shown in [1] P. A. Boncz, S. Manegold, and M. L. Kersten. Table 3. The first block shows the number of branches, the Database architecture evolution: Mammals flourished number of branch mispredictions, and the number of level 1 long before dinosaurs became extinct. PVLDB, instruction cache misses (I1). These numbers are indicators 2(2):1648–1653, 2009. for the control flow and the code locality of the query code. [2] P. A. Boncz, M. Zukowski, and N. Nes. Several things are noticeable. First, our generated LLVM MonetDB/X100: Hyper-pipelining query execution. In code contains far less branches than the MonetDB code. This CIDR, pages 225–237, 2005. is not really surprising, as we try to generate all code up to [3] J. Cieslewicz and K. A. Ross. Adaptive aggregation on the next pipeline breaker in one linear code fragment. Second, chip multiprocessors. In VLDB, pages 339–350, 2007. the number of branch mispredictions is significantly lower for [4] G. Graefe and W. J. McKenna. The Volcano optimizer the LLVM code. One exception is Q2, but this is basically generator: Extensibility and efficient search. In ICDE, a sub-optimal behavior of HyPer and not related to LLVM. pages 209–218. IEEE Computer Society, 1993. (Currently HyPer is very pessimistic about spooling to disc, [5] A. Kemper and T. Neumann. HyPer: A hybrid and copies strings around a lot, which causes more than 60% OLTP&OLAP main memory database system based on of the mispredictions. MonetDB avoids these copies). For virtual memory snapshots. In ICDE, pages 195–206, all other queries the LLVM code has far less mispredictions 2011. than MonetDB. Interestingly the relative misprediction rate [6] K. Krikellas, S. Viglas, and M. Cintra. Generating code of MonetDB is quite good, as can be expected from the for holistic query evaluation. In ICDE, pages 613–624, MonetDB architecture, but in total MonetDB executes far 2010. too many branches and thus has many mispredictions, too. [7] C. Lattner and V. S. Adve. LLVM: A compilation The next block shows the caching behavior, namely the framework for lifelong program analysis & level 1 data cache misses (D1) and level 2 data misses (L2d). transformation. In ACM International Symposium on For most queries these two numbers are very similar, which Code Generation and Optimization (CGO), pages means that if data is not in the level 1 cache it is probably not 75–88, 2004. in the level 2 cache either. This is expected behavior for very [8] R. A. Lorie. XRM - an extended (n-ary) relational large hash tables. Again the LLVM code shows a very good memory. IBM Research Report, G320-2096, 1974. data locality and has less cache misses than MonetDB. As [9] S. Manegold, P. A. Boncz, and M. L. Kersten. with branches, the string handling in Q2 degrades caching, Optimizing database architecture for the new but this will be fixed in future HyPer versions. For all bottleneck: memory access. VLDB J., 9(3):231–246, other queries the LLVM code has far less cache misses than 2000. MonetDB, up to a factor of ten. The last block shows the number of executed instructions. [10] M. Mehta and D. J. DeWitt. Managing intra-operator These numbers roughly follow the absolute execution times parallelism in parallel database systems. In VLDB, from Table 2 and thus are not surprising. However, they pages 382–394, 1995. show clearly that the generated LLVM code is much more [11] S. Padmanabhan, T. Malkemus, R. C. Agarwal, and compact than the MonetDB code. In a way that might stem A. Jhingran. Block oriented processing of relational from the architecture of MonetDB which always operates on database operations in modern computer architectures. Binary Association Tables (BATs), and thus has to touch In ICDE, pages 567–574, 2001. tuples multiple times. [12] V. Raman, G. Swart, L. Qiao, F. Reiss, V. Dialani, D. Kossmann, I. Narang, and R. Sidle. Constant-time 7. CONCLUSION query processing. In ICDE, pages 60–69, 2008. The experiments have shown that data-centric query pro- [13] J. Rao, H. Pirahesh, C. Mohan, and G. M. Lohman. cessing is a very efficient query execution model. By compil- Compiled query execution engine using JVM. In ICDE, ing queries into machine code using the optimizing LLVM page 23, 2006. compiler, the DBMS can achieve a query processing efficiency [14] K. A. Ross. Conjunctive selection conditions in main that rivals hand-written C++ code. memory. In PODS, pages 109–120, 2002. Our implementation of the compilation framework for com- [15] T. Willhalm, N. Popovici, Y. Boshmaf, H. Plattner, piling algebra into LLVM assembler is compact and maintain- A. Zeier, and J. Schaffner. SIMD-scan: Ultra fast able. Thereforem the data-centric compilation approach is in-memory table scan using on-chip vector processing promising for all new database projects. By relying on main- units. PVLDB, 2(1):385–394, 2009. stream compilation frameworks the DBMS automatically [16] M. Zukowski, P. A. Boncz, N. Nes, and S. H´eman. benefits from future compiler and processor improvements MonetDB/X100 - a DBMS in the CPU cache. IEEE without re-engineering the query engine. Data Eng. Bull., 28(2):17–22, 2005. 546

9 .APPENDIX void SelectTranslator::produce(CodeGen& codegen,Context& context) const { // Ask the input operator to produce tuples A. OPERATOR TRANSLATION AddRequired addRequired(context,getCondition().getUsed()); input−>produce(codegen,context); Due to space constraints we could only give a high-level } account of operator translation in Section 3, and include void SelectTranslator::consume(CodeGen& codegen,Context& context) const { a more detailed discussion here. We concentrate on the // Evaluate the predicate operators Scan, Select, Project, Map, and HashJoin here, as ResultValue value=codegen.deriveValue(getCondition(),context); these are sufficient to translate a wide class of queries. The // Pass tuple to parent if the predicate is satisfied CodeGen::If checkCond(codegen,value); first four operators are quite simple, and illustrate the basic { getParent()−>consume(codegen,context); produce/consume interaction, while the hash join is much } more involved. } The query compilation maintains quite a bit of infrastruc- ture that is passed around during operator translation. The Projection most important objects are codegen, which offers an interface The (bag) projection is nearly a no-op, and is compiled to the LLVM code generation (operator-> is overloaded to away during operator translation, as it only informs its input access IRBuilder from LLVM), context, which keeps track of about the required columns. The real effect occurs within available attributes (both from input operators and, for cor- the pipeline breakers, as they discard all columns that are related subqeueries, from “outside”), and getParent, which not required. returns the parent operator. In addition, helper objects are void ProjectTranslator::produce(CodeGen& codegen,Context& context) const used to automate LLVM code generation tasks. In particular { // Ask the input operator to produce tuples Loop and If are used to automate control flow. SetRequired setRequired(context,getOutput()); input−>produce(codegen,context); } Scan void ProjectTranslator::consume(CodeGen& codegen,Context& context) const { The scan uses the ScanConsumer helper class to access all // No code required here, pass to parent relation fragments, accesses all tuples contained in the current } getParent()−>consume(codegen,context); fragment, registers the required columns as available upon demand (they will be cached by the context), and passes the tuple to the consuming operator. Note that, depending Map on the relation type, the ScanConsumer logic might create The map operator computes new columns by evaluating func- calls to C++ functions (e.g., page accesses) to access data tions. Note that placement of computations, and ordering fragments. of maps and selections has already been done by the query optimizer. Therefore the translation is straight forward. void TableScanTranslator::produce(CodeGen& codegen,Context& context) const { void MapTranslator::produce(CodeGen& codegen,Context& context) const // Access all relation fragments { llvm::Value∗ dbPtr=codegen.getDatabasePointer(); // Figure out which columns have to be provided by the input operator llvm::Value∗ relationPtr=codegen.getPtr(dbPtr,db.layout.relations[table]); IUSet required=context.getRequired(); auto& required=scanConsumer.getPartitionPtr(); for (auto iter=functions.begin(),limit=functions.end();iter!=limit;++iter) { ScanConsumer scanConsumer(codegen,context) (∗iter).function−>getUsed(required); for (;db.relations[table]−>generateScan(codegen,relationPtr,scanConsumer);) { required.erase((∗iter).result); // Prepare accessing the current fragment } llvm::Value∗ partitionPtr=required; ColumnAccess columnAccess(partitionPtr,required); // Ask the input operator to produce tuples SetRequired setRequired(context,required); // Loop over all tuples input−>produce(codegen,context); llvm::Value∗ tid=codegen.const64(0); } llvm::Value∗ limit=codegen.load(partitionPtr,getLayout().size); Loop loop(codegen,codegen−>CreateICmpULT(tid,limit),{{tid,”tid”}}); void MapTranslator::consume(CodeGen& codegen,Context& context) const { { tid=loop.getLoopVar(0); // Offer new columns vector<ExpressionAccess> accessors; // Prepare column access code for (auto iter=functions.begin(),limit=functions.end();iter!=limit;++iter) PartitionAccess::ColumnAccess::Row rowAccess(columnAccess,tid); accessors.push back(ExpressionAccess(codegen,∗(∗iter).function)); vector<ValueAccess> access; for (unsigned index=0,limit=accessors.size();index<limit;index++) for (auto iter=required.begin(),limit=required.end();iter!=limit;++iter) context.registerIUProvider(functions[index].result,&accessors[index]); access.push back(rowAccess.loadAttribute(∗iter)); // Pass to parent // Register providers in new inner context getParent()−>consume(codegen,context); ConsumerContext consumerContext(context); } unsigned slot=0; for (auto iter=required.begin(),limit=required.end();iter!=limit;++iter,++slot) consumerContext.registerIUProvider(&(getOutput()[∗iter].iu),&access[slot]); // Push results to consuming operators Hash Join getParent()−>consume(codegen,consumerContext); The four operators shown above are relatively simple, as most // Go to the next tuple tid=codegen−>CreateAdd(tid,codegen.const64(1)); of the logic is handled by pure LLVM code. A hash join is loop.loopDone(codegen−>CreateICmpULT(tid,limit),{tid}); much more involved, as here control flow moves from LLVM } } into C++ and back. One could implement the hash join } using only LLVM, of course, and for a pure main-memory hash join that is even reasonable. But if the hash join is As a scan is a leaf operator, there is no consume part. expected to spool to disk if needed, it will have to call many methods that are query independent (for example I/O), and Selection in our implementation these parts are written in C++. For the selection the produce part is simple, it just adds We first sketch the C++ code, as it defines the code the attributes required for the predicate to the context and template that is then augmented with LLVM fragments. The calls its input operator. The consume part filters out all C++ code loads the build side into main memory, spooling non-satisfying tuples. to disk if needed. If data fits into main memory, it just joins 547

10 .with the probe side. Otherwise, it spools the probe side into // Compute size and hash value llvm::Value∗ size=matHelperLeft.computeSize(codegen,materializedValues); partitions, too, and joins the partitions. For simplicity we llvm::Value∗ hash=matHelperLeft.computeHash(codegen,materializedValues); limit ourselves to inner joins here, non-inner joins require // Materialize in hash table, spools to disk if needed additional bookkeeping to remember which tuples have been llvm::Value∗ ptr=codegen.callBase(HashJoinProxy::storeLeftInputTuple, {opPtr,size,hash}); joined. matHelperLeft.materialize(codegen,ptr,materializedValues); void HashJoin::Inner::produce() // Right side { } else { // Read the build side // Collect registers from the right side initMem(); vector<ResultValue> materializedValues; produceLeft(); matHelperRight.collectValues(codegen,context,materializedValues); if (inMem) { buildHashTable(); // Compute size and hash value } else { llvm::Value∗ size=matHelperRight.computeSize(codegen,materializedValues); // Spool remaining tuples to disk llvm::Value∗ hash=matHelperRight.computeHash(codegen,materializedValues); spoolLeft(); finishSpoolLeft(); // Materialize in memory, spools to disk if needed, implicitly joins } llvm::Value∗ ptr=codegen.callBase(HashJoinProxy::storeRightInputTuple, {opPtr,size}); // Is a in−memory join possible? matHelperRight.materialize(codegen,ptr,materializedValues); if (inMem) { codegen.call(HashJoinInnerProxy::storeRightInputTupleDone,{opPtr,hash}); produceRight(); } return; } } void HJTranslatorInner::join(CodeGen& codegen,Context& context) const // No, spool the right hand side, too { spoolRight(); llvm::Value∗ leftPtr=context.getLeftTuple(),∗rightPtr=context.getLeftTuple(); // Grace hash join // Load into registers. Actual load may be delayed by optimizer loadPartition(0); vector<ResultValue> leftValues,rightValues; while (true) { matHelperLeft.dematerialize(codegen,leftPtr,leftValues,context); // More tuples on the right? matHelperRight.dematerialize(codegen,rightPtr,rightValues,context); for (;rightScanRemaining;) { const void∗ rightTuple=nextRight(); // Check the join condition, return false for mismatches for (LookupHash lookup(rightTuple);lookup;++lookup) { llvm::BasicBlock∗ returnFalseBB=constructReturnFalseBB(codegen); join(lookup.data(),rightTuple); MaterializationHelper::testValues(codegen,leftValues,rightValues, } joinPredicateIs,returnFalseBB); } for (auto iter=residuals.begin(),limit=residuals.end();iter!=limit;++iter) { ResultValue v=codegen.deriveValue(∗∗iter,context); // Handle overflow in n:m case CodeGen::If checkCondition(codegen,v,0,returnFalseBB); if (overflow) { } loadPartitionLeft(); resetRightScan(); // Found a match, propagate up continue; getParent()−>consume(codegen,context); } } // Go to the next partition if ((++inMemPartition)>=partitionCount) { return; } else { Example } loadPartition(inMemPartition); As a small, illustrational example, we show the generated } LLVM code for the query } Thus the LLVM code has to provide three functions: pro- select d_tax from warehouse, district duce/consume as before, and an additional join function that where w_id=d_w_id and w_zip=’137411111’ the C++ code can call directly when joining tuples that had been spooled to disk. Note that in this case the hash below. It first scans warehouse, filters, and materializes into table lookups etc. are already implemented in C++, so join the hash table. Then it scans district and joins. Note that is only called for likely join candidates. The produce func- we “forced” a pure main-memory hash join to keep the code tion simply passes the control flow to the C++ code. The size reasonable. define void @planStart(%14∗ %executionState) { consume functions (one for each join side) hashes the join body: values, determines the relevant registers, and materializes %0 = getelementptr inbounds %14∗ %executionState, i64 0, i32 0, i32 1, i64 0 them into the hash table. Note that for performance reasons store i64 0, i64∗ %0, align 8 %1 = getelementptr inbounds %14∗ %executionState, i64 0, i32 1 the HyPer system skips the in-memory materialization of call void @ ZN5hyper9HashTable5resetEv(%”hyper::HashTable”∗ %1) %2 = bitcast %14∗ %executionState to %”hyper::Database”∗∗ the right hand side and directly probes the hash table if no %3 = load %”hyper::Database”∗∗ %2, align 8 data was spooled to disk, but this was omitted here due to %4 = getelementptr inbounds %”hyper::Database”∗ %3, i64 0, i32 1 %5 = load i8∗∗ %4, align 8 space constraints. %warehouse = getelementptr inbounds i8∗ %5, i64 5712 %6 = getelementptr inbounds i8∗ %5, i64 5784 void HJTranslatorInner::produce(CodeGen& codegen,Context& context) const %7 = bitcast i8∗ %6 to i32∗∗ { %8 = load i32∗∗ %7, align 8 // Construct functions that will be be called from the C++ code %9 = getelementptr inbounds i8∗ %5, i64 5832 { %10 = bitcast i8∗ %9 to %3∗∗ AddRequired addRequired(context,getCondiution().getUsed().limitTo(left)); %11 = load %3∗∗ %10, align 8 produceLeft=codegen.derivePlanFunction(left,context); %12 = bitcast i8∗ %warehouse to i64∗ } %size = load i64∗ %12, align 8 { %13 = icmp eq i64 %size, 0 AddRequired addRequired(context,getCondiution().getUsed().limitTo(right)); br i1 %13, label %scanDone, label %scanBody produceRight=codegen.derivePlanFunction(right,context); } scanBody: %tid = phi i64 [ 0, %body ], [ %34, %cont2 ] // Call the C++ code %14 = getelementptr i32∗ %8, i64 %tid codegen.call(HashJoinInnerProxy::produce.getFunction(codegen), %w id = load i32∗ %14, align 4 {context.getOperator(this)}); %15 = getelementptr inbounds %3∗ %11, i64 %tid, i32 0 } %16 = load i8∗ %15, align 1 %17 = icmp eq i8 %16, 9 void HJTranslatorInner::consume(CodeGen& codegen,Context& context) const br i1 %17, label %then, label %cont2 { llvm::Value∗ opPtr=context.getOperator(this); then: %w zip = getelementptr inbounds %3∗ %11, i64 %tid, i32 1, i64 0 // Left side %27 = call i32 @memcmp(i8∗ %w zip, i8∗ @”string 137411111”, i64 9) if (source==left) { %28 = icmp eq i32 %27, 0 // Collect registers from the left side br i1 %28, label %then1, label %cont2 vector<ResultValue> materializedValues; matHelperLeft.collectValues(codegen,context,materializedValues); then1: %29 = zext i32 %w id to i64 548

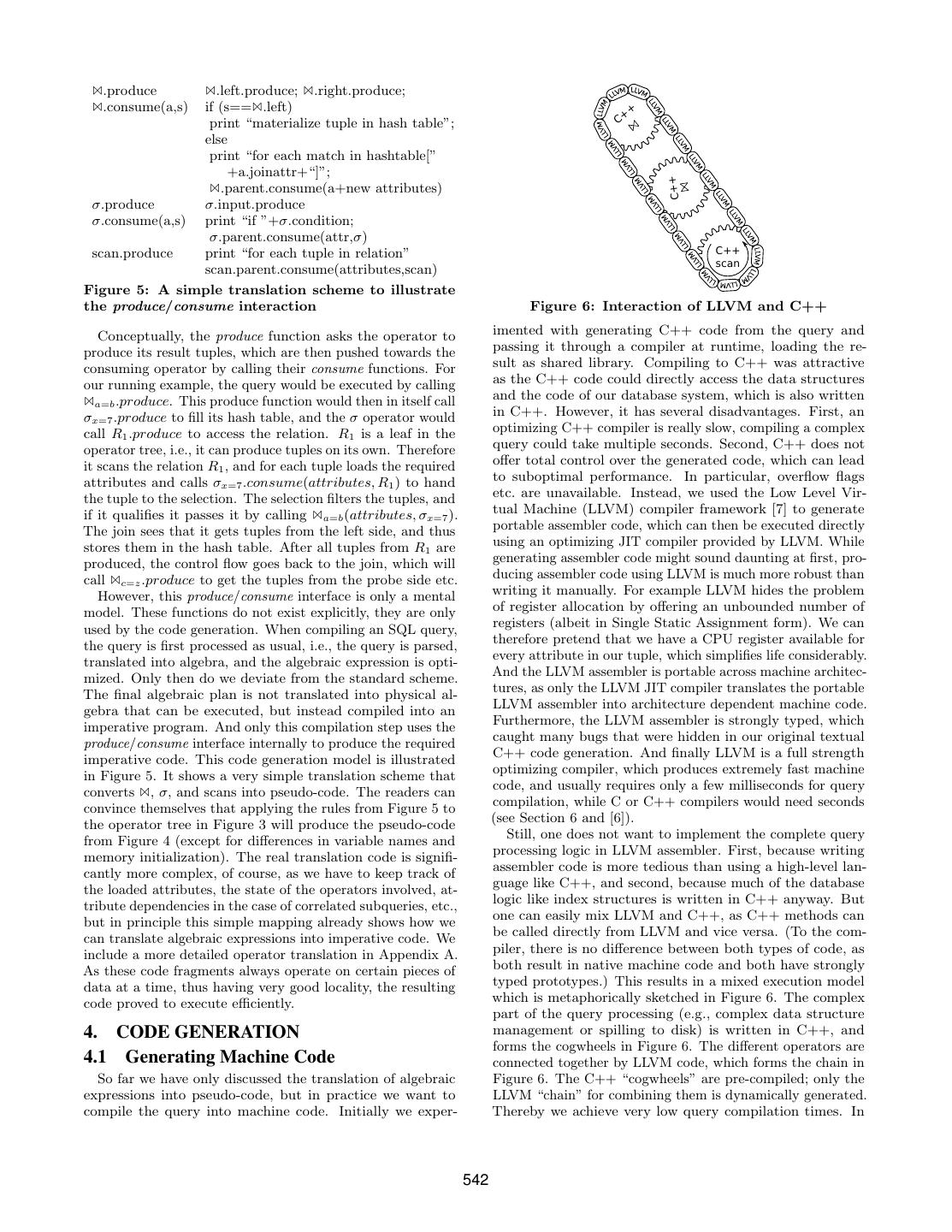

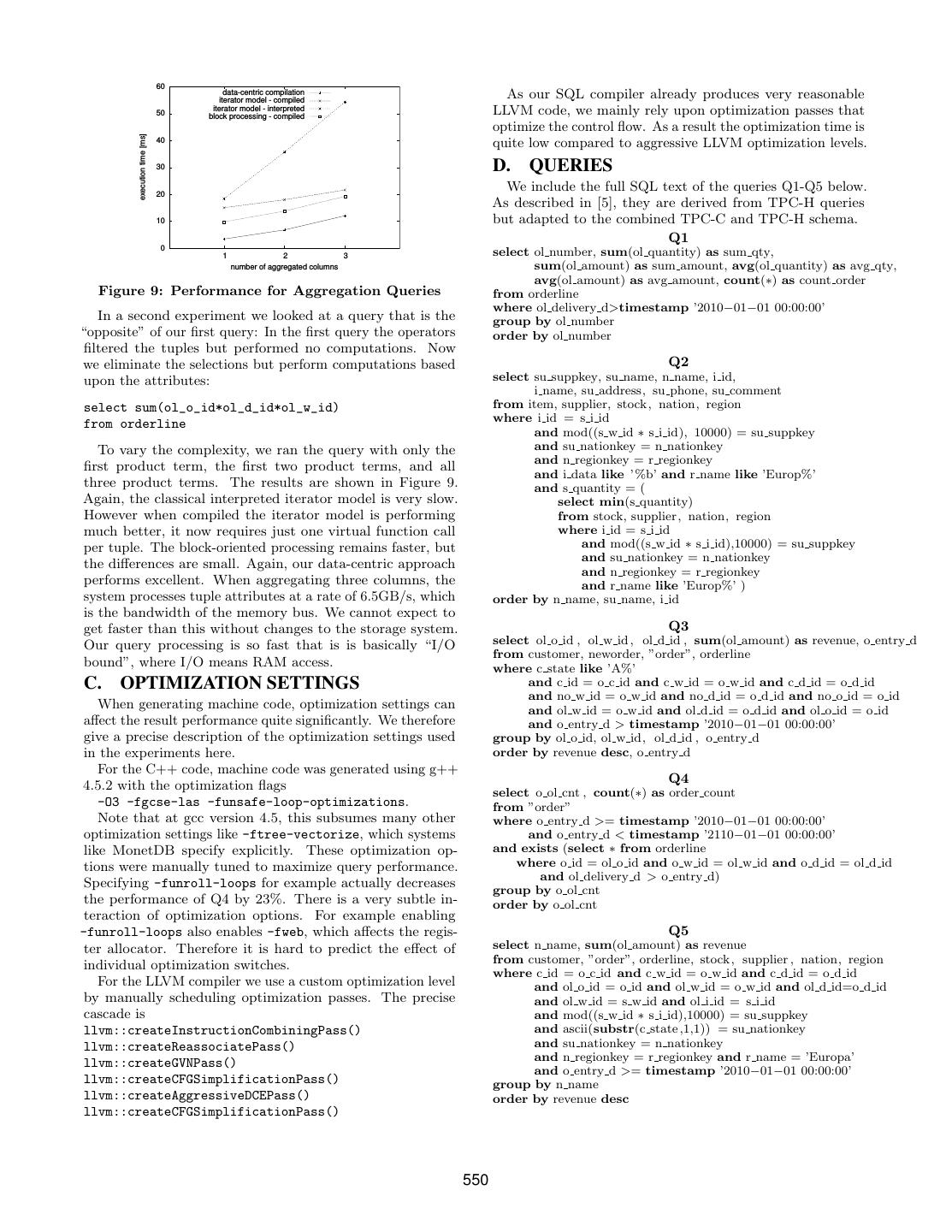

11 . 100 %30 = call i64 @llvm.x86.sse42.crc64.64(i64 0, i64 %29) data-centric compilation 90 iterator model - compiled %31 = shl i64 %30, 32 iterator model - interpreted %32 = call i8∗ @ ZN5hyper9HashTable15storeInputTupleEmj(%”hyper:: block processing - compiled 80 HashTable”∗ %1, i64 %31, i32 4) %33 = bitcast i8∗ %32 to i32∗ 70 execution time [ms] store i32 %w id, i32∗ %33, align 1 br label %cont2 60 cont2: 50 %34 = add i64 %tid, 1 40 %35 = icmp eq i64 %34, %size br i1 %35, label %cont2.scanDone crit edge, label %scanBody 30 cont2.scanDone crit edge: 20 %.pre = load %”hyper::Database”∗∗ %2, align 8 10 %.phi.trans.insert = getelementptr inbounds %”hyper::Database”∗ %.pre, i64 0, i32 1 0 %.pre11 = load i8∗∗ %.phi.trans.insert, align 8 0 1 2 3 br label %scanDone number of filtered columns scanDone: %18 = phi i8∗ [ %.pre11, %cont2.scanDone crit edge ], [ %5, %body ] Figure 8: Performance for Cascading Selections %district = getelementptr inbounds i8∗ %18, i64 1512 %19 = getelementptr inbounds i8∗ %18, i64 1592 %20 = bitcast i8∗ %19 to i32∗∗ uation schemes of modern cache-conscious database systems %21 = load i32∗∗ %20, align 8 %22 = getelementptr inbounds i8∗ %18, i64 1648 like MonetDB and VectorWise. We only considered the com- %23 = bitcast i8∗ %22 to i64∗∗ %24 = load i64∗∗ %23, align 8 piled model here, as both MonetDB and VectorWise use %25 = bitcast i8∗ %district to i64∗ %size8 = load i64∗ %25, align 8 pre-compiled building blocks to reduce interpretation over- %26 = icmp eq i64 %size8, 0 head. Note that during the experiments the data is held in br i1 %26, label %scanDone6, label %scanBody5 a column-store layout, i.e., accessing more columns increases scanBody5: %tid9 = phi i64 [ 0, %scanDone ], [ %58, %loopDone ] the amount of scanned data. %36 = getelementptr i32∗ %21, i64 %tid9 %d w id = load i32∗ %36, align 4 As first experiment we started with a very simple aggrega- %37 = getelementptr i64∗ %24, i64 %tid9 tion query for our TPC-C data set %d tax = load i64∗ %37, align 8 %38 = zext i32 %d w id to i64 %39 = call i64 @llvm.x86.sse42.crc64.64(i64 0, i64 %38) %40 = shl i64 %39, 32 select count(*) %41 = getelementptr inbounds %14∗ %executionState, i64 0, i32 1, i32 0 from orderline %42 = load %”hyper::HashTable::Entry”∗∗∗ %41, align 8 %43 = getelementptr inbounds %14∗ %executionState, i64 0, i32 1, i32 2 where ol_o_id>0 and ol_d_id>0 and ol_w_id>0 %44 = load i64∗ %43, align 8 %45 = lshr i64 %40, %44 %46 = getelementptr %”hyper::HashTable::Entry”∗∗ %42, i64 %45 and varied the number of filter conditions, all of which are %47 = load %”hyper::HashTable::Entry”∗∗ %46, align 8 %48 = icmp eq %”hyper::HashTable::Entry”∗ %47, null unselective (i.e., the result is the same for any combination br i1 %48, label %loopDone, label %loop of filter conditions). As we were interested in the overhead of loopStep: %49 = getelementptr inbounds %”hyper::HashTable::Entry”∗ %iter, i64 0, data-passing, each filter condition was introduced as separate i32 1 selection operator, and then measured the execution time of %50 = load %”hyper::HashTable::Entry”∗∗ %49, align 8 %51 = icmp eq %”hyper::HashTable::Entry”∗ %50, null the query under the different evaluation schemes. The results br i1 %51, label %loopDone, label %loop are shown in Figure 8. Clearly, the iterator model using an loop: %iter = phi %”hyper::HashTable::Entry”∗ [ %47, %scanBody5 ], [ %50, % interpreted predicate check (i.e., the evaluation scheme used loopStep ] in most database systems) is very slow. It performs a very %52 = getelementptr inbounds %”hyper::HashTable::Entry”∗ %iter, i64 1 %53 = bitcast %”hyper::HashTable::Entry”∗ %52 to i32∗ large number of function calls and has poor locality. Compil- %54 = load i32∗ %53, align 4 %55 = icmp eq i32 %54, %d w id ing the iterator model into executable code greatly improves br i1 %55, label %then10, label %loopStep the runtime, in particular since the virtual function calls then10: necessary for predicate evaluation are eliminated. Clearly, call void @ ZN6dbcore16RuntimeFunctions12printNumericEljj(i64 %d tax, i32 4, i32 4) compiling into machine code is a good idea, interpreted ap- call void @ ZN6dbcore16RuntimeFunctions7printNlEv() br label %loopStep proaches are significantly slower. The block-wise execution loopDone: model improves execution times even more. Without filter, %58 = add i64 %tid9, 1 it is actually a bit slower, as the overhead of finding block %59 = icmp eq i64 %58, %size8 br i1 %59, label %scanDone6, label %scanBody5 boundaries, setting up tuple blocks frames, etc., does not scanDone6: pay off here. But even for a single selection the reduced } ret void number of function calls and better locality pays off, and it outperforms the iterator model. The data-centric approach proposed here shows excellent performance for all queries. B. MICROBENCHMARKS Two points are particularly interesting: First, without filter In addition to the main experiments, we performed a num- conditions, the query execution time is nearly zero. The ber of micro-benchmarks to study the impact of different reason for this is that the compiler notices that our loop query processing schemes in more detail. We implemented over tuple fragments performs no work except increasing a several techniques and ran them within the HyPer system. counter, and converts it into an addition of the fragment This way, all approaches read exactly the same data from size. Second, when filtering (and thus accessing) all three exactly the same data structures, thus any runtime differ- columns, the performance seems to go down a bit. But in ences stem purely from differences in data and control flow reality, the two-filter case is too fast due to caching effects. during query processing. Besides the data-centric compi- The three-filter query is reading tuple attributes at a rate lation scheme proposed in this paper, we implemented the of 4.2 GB/s, which starts getting close to the bandwidth of classical iterator model, both as interpreter (i.e., the standard the memory bus of our machine, and branches depending evaluation scheme in most databases), and as compiled into on these data reads. We might improve query processing a executable code. In addition, we implemented block-wise bit by using conditional CPU operations, but with generic tuple processing [11], which roughly corresponds to the eval- selection code we cannot expect to get much faster. 549

12 . 60 data-centric compilation iterator model - compiled As our SQL compiler already produces very reasonable iterator model - interpreted 50 block processing - compiled LLVM code, we mainly rely upon optimization passes that optimize the control flow. As a result the optimization time is execution time [ms] 40 quite low compared to aggressive LLVM optimization levels. 30 D. QUERIES 20 We include the full SQL text of the queries Q1-Q5 below. As described in [5], they are derived from TPC-H queries 10 but adapted to the combined TPC-C and TPC-H schema. Q1 0 1 2 3 select ol number, sum(ol quantity) as sum qty, number of aggregated columns sum(ol amount) as sum amount, avg(ol quantity) as avg qty, avg(ol amount) as avg amount, count(∗) as count order Figure 9: Performance for Aggregation Queries from orderline where ol delivery d>timestamp ’2010−01−01 00:00:00’ In a second experiment we looked at a query that is the group by ol number “opposite” of our first query: In the first query the operators order by ol number filtered the tuples but performed no computations. Now we eliminate the selections but perform computations based Q2 upon the attributes: select su suppkey, su name, n name, i id, i name, su address, su phone, su comment select sum(ol_o_id*ol_d_id*ol_w_id) from item, supplier, stock, nation, region from orderline where i id = s i id and mod((s w id ∗ s i id), 10000) = su suppkey To vary the complexity, we ran the query with only the and su nationkey = n nationkey and n regionkey = r regionkey first product term, the first two product terms, and all and i data like ’%b’ and r name like ’Europ%’ three product terms. The results are shown in Figure 9. and s quantity = ( Again, the classical interpreted iterator model is very slow. select min(s quantity) However when compiled the iterator model is performing from stock, supplier, nation, region much better, it now requires just one virtual function call where i id = s i id per tuple. The block-oriented processing remains faster, but and mod((s w id ∗ s i id),10000) = su suppkey and su nationkey = n nationkey the differences are small. Again, our data-centric approach and n regionkey = r regionkey performs excellent. When aggregating three columns, the and r name like ’Europ%’ ) system processes tuple attributes at a rate of 6.5GB/s, which order by n name, su name, i id is the bandwidth of the memory bus. We cannot expect to get faster than this without changes to the storage system. Q3 Our query processing is so fast that is is basically “I/O select ol o id , ol w id , ol d id , sum(ol amount) as revenue, o entry d from customer, neworder, ”order”, orderline bound”, where I/O means RAM access. where c state like ’A%’ C. OPTIMIZATION SETTINGS and c id = o c id and c w id = o w id and c d id = o d id and no w id = o w id and no d id = o d id and no o id = o id When generating machine code, optimization settings can and ol w id = o w id and ol d id = o d id and ol o id = o id affect the result performance quite significantly. We therefore and o entry d > timestamp ’2010−01−01 00:00:00’ give a precise description of the optimization settings used group by ol o id, ol w id, ol d id , o entry d in the experiments here. order by revenue desc, o entry d For the C++ code, machine code was generated using g++ Q4 4.5.2 with the optimization flags select o ol cnt , count(∗) as order count -O3 -fgcse-las -funsafe-loop-optimizations. from ”order” Note that at gcc version 4.5, this subsumes many other where o entry d >= timestamp ’2010−01−01 00:00:00’ optimization settings like -ftree-vectorize, which systems and o entry d < timestamp ’2110−01−01 00:00:00’ like MonetDB specify explicitly. These optimization op- and exists (select ∗ from orderline tions were manually tuned to maximize query performance. where o id = ol o id and o w id = ol w id and o d id = ol d id and ol delivery d > o entry d) Specifying -funroll-loops for example actually decreases group by o ol cnt the performance of Q4 by 23%. There is a very subtle in- order by o ol cnt teraction of optimization options. For example enabling -funroll-loops also enables -fweb, which affects the regis- Q5 ter allocator. Therefore it is hard to predict the effect of select n name, sum(ol amount) as revenue individual optimization switches. from customer, ”order”, orderline, stock, supplier , nation, region where c id = o c id and c w id = o w id and c d id = o d id For the LLVM compiler we use a custom optimization level and ol o id = o id and ol w id = o w id and ol d id=o d id by manually scheduling optimization passes. The precise and ol w id = s w id and ol i id = s i id cascade is and mod((s w id ∗ s i id),10000) = su suppkey llvm::createInstructionCombiningPass() and ascii(substr(c state ,1,1)) = su nationkey llvm::createReassociatePass() and su nationkey = n nationkey llvm::createGVNPass() and n regionkey = r regionkey and r name = ’Europa’ and o entry d >= timestamp ’2010−01−01 00:00:00’ llvm::createCFGSimplificationPass() group by n name llvm::createAggressiveDCEPass() order by revenue desc llvm::createCFGSimplificationPass() 550