- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Fast Database Restarts at Facebook

展开查看详情

1 . Fast Database Restarts at Facebook ∗ Aakash Goel, Bhuwan Chopra, Ciprian Gerea, Dhrúv Mátáni, Josh Metzler, Fahim Ul Haq, and Janet L. Wiener Facebook, Inc. ABSTRACT 1. INTRODUCTION Facebook engineers query multiple databases to monitor and Facebook engineers query multiple database systems to analyze Facebook products and services. The fastest of monitor and analyze Facebook products and services. Scuba[5] these databases is Scuba, which achieves subsecond query is a very fast, distributed, in-memory database used exten- response time by storing all of its data in memory across sively for interactive, ad hoc, analysis queries. These queries hundreds of servers. We are continually improving the code typically run in under a second over GBs of data. Scuba pro- for Scuba and would like to push new software releases at cesses almost a million queries per day for over 1500 Face- least once a week. However, restarting a Scuba machine book employees. In addition, Scuba is the workhorse behind clears its memory. Recovering all of its data from disk — Facebook’s code regression analysis, bug report monitoring, about 120 GB per machine — takes 2.5-3 hours to read and ads revenue monitoring, and performance debugging. format the data per machine. Even 10 minutes is a long One significant source of downtime is software upgrades, downtime for the critical applications that rely on Scuba, yet upgrades are necessary to introduce new features and such as detecting user-facing errors. Restarting only 2% of apply bug fixes. At Facebook, we are accustomed to the the servers at a time mitigates the amount of unavailable agility that comes with frequent code deployments. New data, but prolongs the restart duration to about 12 hours, code is rolled out to our web product multiple times each during which users see only partial query results and one week [9]. The Facebook Android Alpha program also re- engineer needs to monitor the servers carefully. We need leases code multiple times a week [18, 17]. We would like to a faster, less engineer intensive, solution to enable frequent deploy new code to Scuba at least once a week as well. software upgrades. However, any downtime on Scuba’s part is a problem for In this paper, we show that using shared memory provides the many tools and users that depend on it. When a server a simple, effective, fast, solution to upgrading servers. Our process is shut down, it loses all of the data in its heap key observation is that we can decouple the memory lifetime memory. The new server process must then read all of its from the process lifetime. When we shutdown a server for data from the backup copy Scuba keeps on a local disk. a planned upgrade, we know that the memory state is valid However, Scuba machines have 144 GB of RAM, most of (unlike when a server shuts down unexpectedly). We can which is filled with data. Reading about 120 GB of data therefore use shared memory to preserve memory state from from disk takes 20-25 minutes; reading that data in its disk the old server process to the new process. Our solution does format and translating it to its in-memory format takes 2.5- not increase the server memory footprint and allows recov- 3 hours, a very long time — about 4 orders of magnitude ery at memory speeds, about 2-3 minutes per server. This longer than query response time. solution maximizes uptime and availability, which has led to Scuba can and does return partial query results when not much faster and more frequent rollouts of new features and all servers are available. We can mitigate the long downtime improvements. Furthermore, this technique can be applied by restarting only a handful of servers at a time, usually 2% to the in-memory state of any database, even if the memory of them, to minimize the impact on query results. The entire contains a cache of a much larger disk-resident data set, as system rollover then takes a lot longer, about 12 hours to in most databases. restart the entire Scuba cluster with hundreds of machines. Furthermore, an engineer needs to monitor the rollover for ∗Aakash is a graduate student at Georgia Institute of Tech- its entire duration. This time-consuming procedure discour- ages frequent deployment of new features and bug fixes. nology and was an intern at Facebook. We needed to reduce the total downtime significantly, since it prevented us from upgrading Scuba software as often Permission to make digital or hard copies of all or part of this work for personal or as we want. One possible solution keeps redundant copies of classroom use is granted without fee provided that copies are not made or distributed the data in memory on different servers. When one server is for profit or commercial advantage and that copies bear this notice and the full cita- being upgraded, queries are routed exclusively to the other tion on the first page. Copyrights for components of this work owned by others than server. We discarded that solution as too expensive in two ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or re- dimensions: first, it would require twice as many servers. publish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org. The hardware cost of hundreds of servers with 144 GB of SIGMOD 2014 Park City, UT USA RAM is significant. Second, replication code can be tricky to Copyright 2014 ACM 978-1-4503-2376-5/14/06 ...$15.00. get right: Which server should participate in which queries? http://dx.doi.org/10.1145/2588555.2595642. 541

2 . Data flow through Scuba Scuba backend FB Servers Query aggregator Web Tier queries Scribe distributed Leaf results messaging system Back-end Services add directly to leaf servers Data storage Scuba GUI User behavior + service logs transport Scuba: real-time analysis and trouble-shooting Figure 1: Scuba architecture: data flows from Facebook products and services through Scribe to Scuba. Users query Scuba and visualize the results in the Scuba GUI. How should we keep pairs of servers synchronized with mil- is now fully available 99.5% of the time — and that hour lions of row inserts per second? of downtime can be during offpeak hours (after typical Cal- Instead, we chose a different solution. We observed that ifornia office hours, when many Scuba users, i.e., Facebook when we shutdown a server for a planned upgrade, we know engineers, are not working). that the memory state is good (unlike when a server shuts We are now able to deploy new features and improvements down unexpectedly, which might or might not be due to much more frequently. We believe this restart technique can memory corruption). We decided to decouple the memory’s be applied to the in-memory state of any database, even if lifetime from the process’s lifetime. In this paper, we de- the memory contains a cache of a much larger disk-resident scribe how we use shared memory to persist data from one data set, as in most databases. process to the next. In the next section, we describe Scuba’s architecture. In Using shared memory to store data provides a simple, ef- Section 3, we show Scuba’s data layout in shared memory fective solution to upgrading servers fast. We were inspired and in Section 4 we describe the rollover procedure using by two other big, distributed systems at Facebook that use shared memory. We consider related work in database re- shared memory to keep data alive across software upgrades: covery and using shared memory for fast system restarts in TAO [6] and Memcache [20]. In our solution, we made two Section 5. Finally, we conclude in Section 6. key design decisions: 1. Scuba copies data from heap memory to shared mem- 2. SCUBA ARCHITECTURE ory at shutdown time and copies it back to the heap Figure 1 shows Scuba’s overall architecture. Data flows at startup. from log calls in Facebook products and services into Scribe [3]. 2. During the copy, data structures are translated from Scuba “tailer” processes pull the data for each table out of their heap format to a (very similar but not the same) Scribe and send it into Scuba. shared memory format. Every N rows or t seconds, the tailer chooses a new Scuba leaf server and sends it a batch of rows. How does it choose Copying data between heap and shared memory avoids a server? It picks two servers randomly and asks them both some of the pitfalls in writing a custom allocator in shared for their current state and how much free memory they have, memory, such as fragmentation and problems with thread as described previous [5]. If both are alive (see Figure 5(a)), safety and scalability. It also allows us to modify the in- it sends the data to the server with more free memory. If memory format (in heap memory) and rollover to the new only one is alive, that server gets the data. If neither server format using shared memory. We describe how to copy all is alive, the tailer will try two more servers until it finds of the data to shared memory and back without increasing one that is alive or (after enough tries) sends the data to a the memory footprint of the data. restarting server. Scuba’s new upgrade path is about 2-3 minutes per server, Each machine currently runs eight leaf servers and one rather than 2-3 hours. The entire cluster upgrade time is aggregator server. The leaf servers store the data. Having now under an hour, rather than lasting 12 hours. This path eight servers allows for greater parallelism during query exe- maximizes uptime and availability for Scuba users and mini- cution (without the complexity of multiple threads per query mizes monitoring time of the upgrade for our engineers. For per server). More importantly for recovery, eight servers example, instead of having 100% of the data available only mean that we can restart the servers one at a time, while the 93% of the time with a 12 hour rollover once a week, Scuba other seven servers continue to execute queries. We there- 542

3 . Heap Memory Layout Table Pointers Pointers Zoom In Leaf ... Map Table Data Row Block Data Row Block Column Data Table Table Table ... 0 1 m Row Block Pointers Header ... Table Name RB0 RB1 RB2 ... RBn Row Blocks Number of Row Blocks Row Block Column Pointers Header Schema ... Size Row count Name_0, Type_0 R R R R Min time Name_1, Type_1 B B B B Row Block ... Max time ... C C C C Columns Creation timestamp Name_k, Type_k 0 1 2 k Figure 2: Heap memory layout for tables in Scuba. Each Table has a vector of Row Blocks. A Row Block contains all data for a set of rows. Each Row Block has a header, a schema, and a vector of Row Block Columns. Each Row Block Column contains the values for one column, for all rows in the Row Block. fore maximize the number of disks in use for recovery while Figure 2 depicts the memory layout of a leaf. There is a limiting the amount of offline data to 2% of the total. For leaf map containing a vector of pointers, one pointer to each example, suppose there are 100 machines. With one server table. Each table has a vector of pointers to row blocks per machine, we could restart only two servers. With a total (RBs) plus a header. The table name and a count of the of 800 leaf servers, we can restart 16 leaf servers on 16 ma- row blocks are in the table header. Each row block contains chines at once and read from 16 disks. The full rollover thus 65,536 rows that arrived consecutively. (The row block is takes much less time to complete. This technique also ap- capped at 1 GB, pre-compression, even if there are fewer plies to parallelizing restarts using shared memory, although than 65K rows.) Within each row block, the data is orga- the critical resource is the memory bandwidth rather than nized into a header, a schema, and row block columns. Each the disk speed. row block column contains all of the column values for one The leaf servers both add new data as it arrives and pro- column, for every row in the row block. cess queries over their current data. They also delete data The header describes general properties of the row block: as it expires due to either age or size limits. its size in bytes, the number of rows in it (it may not be full), The aggregator servers distribute a query to all leaves and the minimum and maximum timestamps of rows it contains, then aggregate the results as they arrive from the leaves. and when the row block was first created. Every row in Our previous work [5] describes query processing in more Scuba has a required column called “time” that contains a detail. unix timestamp. These timestamps represent the time of the row-generating event. They are not unique, as many events happen on Facebook in the same second. Since rows flow 2.1 Storage layout into Scuba in roughly chronological order, the time column Within each leaf server, there is a fraction of most tables. is close to an index for each table. Nearly all queries contain Scuba’s storage engine is a column store (a change since [5]. predicates on time; the minimum and maximum timestamps A column layout provides better compression of the data are used to decide whether to even look at a row block when and enables faster query execution strategies, as described processing a query. by others for C-Store [23] and Vertica [14], MonetDB [12], The schema is a description of the columns in the row SAP Hana [22], Dremel [19], and Powerdrill [10]. 543

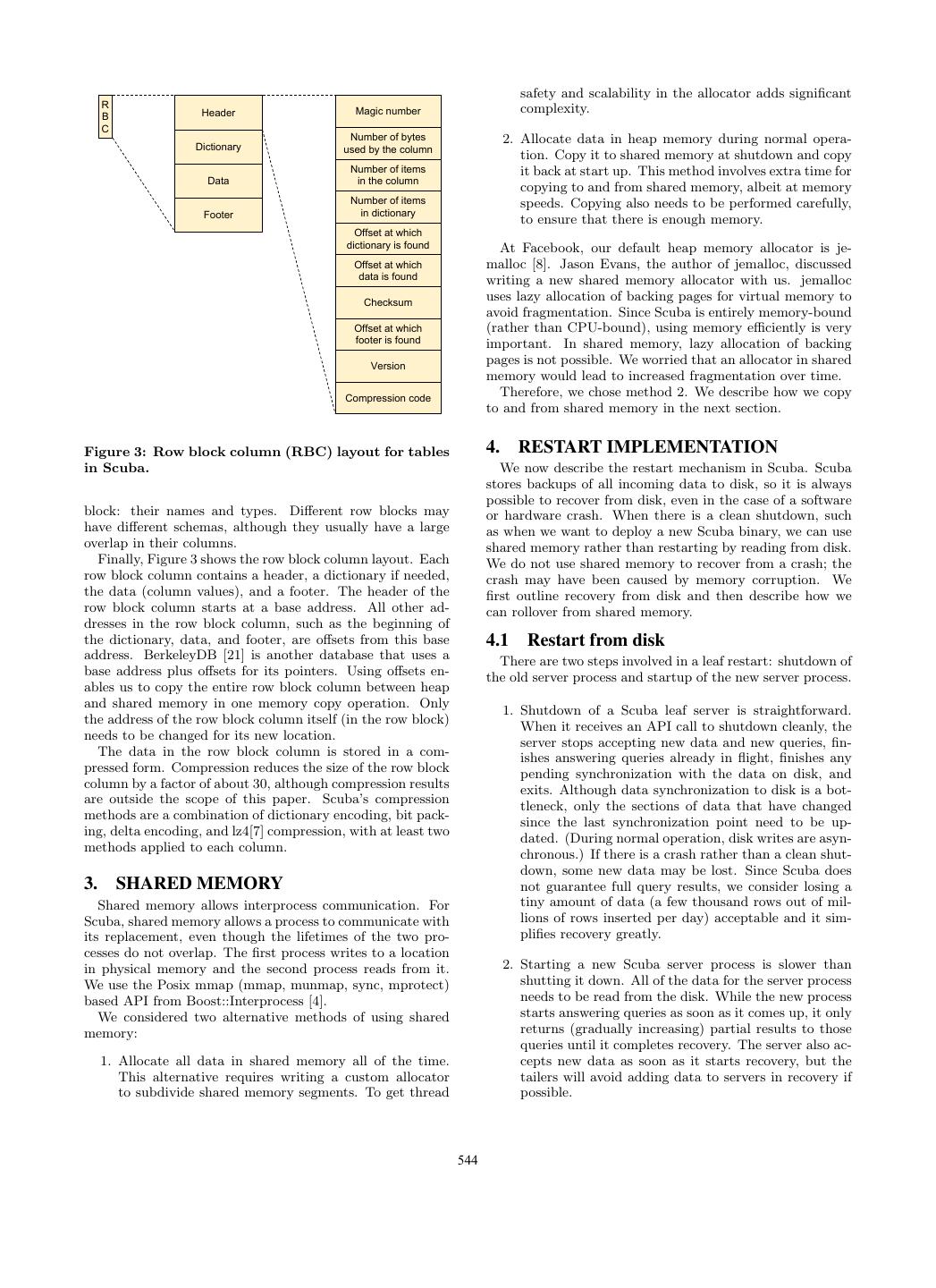

4 . safety and scalability in the allocator adds significant R Header Magic number complexity. B C Number of bytes 2. Allocate data in heap memory during normal opera- Dictionary used by the column tion. Copy it to shared memory at shutdown and copy Number of items it back at start up. This method involves extra time for Data in the column copying to and from shared memory, albeit at memory Number of items speeds. Copying also needs to be performed carefully, Footer in dictionary to ensure that there is enough memory. Offset at which dictionary is found At Facebook, our default heap memory allocator is je- Offset at which malloc [8]. Jason Evans, the author of jemalloc, discussed data is found writing a new shared memory allocator with us. jemalloc Checksum uses lazy allocation of backing pages for virtual memory to avoid fragmentation. Since Scuba is entirely memory-bound Offset at which (rather than CPU-bound), using memory efficiently is very footer is found important. In shared memory, lazy allocation of backing Version pages is not possible. We worried that an allocator in shared memory would lead to increased fragmentation over time. Compression code Therefore, we chose method 2. We describe how we copy to and from shared memory in the next section. Figure 3: Row block column (RBC) layout for tables 4. RESTART IMPLEMENTATION in Scuba. We now describe the restart mechanism in Scuba. Scuba stores backups of all incoming data to disk, so it is always possible to recover from disk, even in the case of a software block: their names and types. Different row blocks may or hardware crash. When there is a clean shutdown, such have different schemas, although they usually have a large as when we want to deploy a new Scuba binary, we can use overlap in their columns. shared memory rather than restarting by reading from disk. Finally, Figure 3 shows the row block column layout. Each We do not use shared memory to recover from a crash; the row block column contains a header, a dictionary if needed, crash may have been caused by memory corruption. We the data (column values), and a footer. The header of the first outline recovery from disk and then describe how we row block column starts at a base address. All other ad- can rollover from shared memory. dresses in the row block column, such as the beginning of the dictionary, data, and footer, are offsets from this base 4.1 Restart from disk address. BerkeleyDB [21] is another database that uses a There are two steps involved in a leaf restart: shutdown of base address plus offsets for its pointers. Using offsets en- the old server process and startup of the new server process. ables us to copy the entire row block column between heap and shared memory in one memory copy operation. Only 1. Shutdown of a Scuba leaf server is straightforward. the address of the row block column itself (in the row block) When it receives an API call to shutdown cleanly, the needs to be changed for its new location. server stops accepting new data and new queries, fin- The data in the row block column is stored in a com- ishes answering queries already in flight, finishes any pressed form. Compression reduces the size of the row block pending synchronization with the data on disk, and column by a factor of about 30, although compression results exits. Although data synchronization to disk is a bot- are outside the scope of this paper. Scuba’s compression tleneck, only the sections of data that have changed methods are a combination of dictionary encoding, bit pack- since the last synchronization point need to be up- ing, delta encoding, and lz4[7] compression, with at least two dated. (During normal operation, disk writes are asyn- methods applied to each column. chronous.) If there is a crash rather than a clean shut- down, some new data may be lost. Since Scuba does 3. SHARED MEMORY not guarantee full query results, we consider losing a Shared memory allows interprocess communication. For tiny amount of data (a few thousand rows out of mil- Scuba, shared memory allows a process to communicate with lions of rows inserted per day) acceptable and it sim- its replacement, even though the lifetimes of the two pro- plifies recovery greatly. cesses do not overlap. The first process writes to a location in physical memory and the second process reads from it. 2. Starting a new Scuba server process is slower than We use the Posix mmap (mmap, munmap, sync, mprotect) shutting it down. All of the data for the server process based API from Boost::Interprocess [4]. needs to be read from the disk. While the new process We considered two alternative methods of using shared starts answering queries as soon as it comes up, it only memory: returns (gradually increasing) partial results to those queries until it completes recovery. The server also ac- 1. Allocate all data in shared memory all of the time. cepts new data as soon as it starts recovery, but the This alternative requires writing a custom allocator tailers will avoid adding data to servers in recovery if to subdivide shared memory segments. To get thread possible. 544

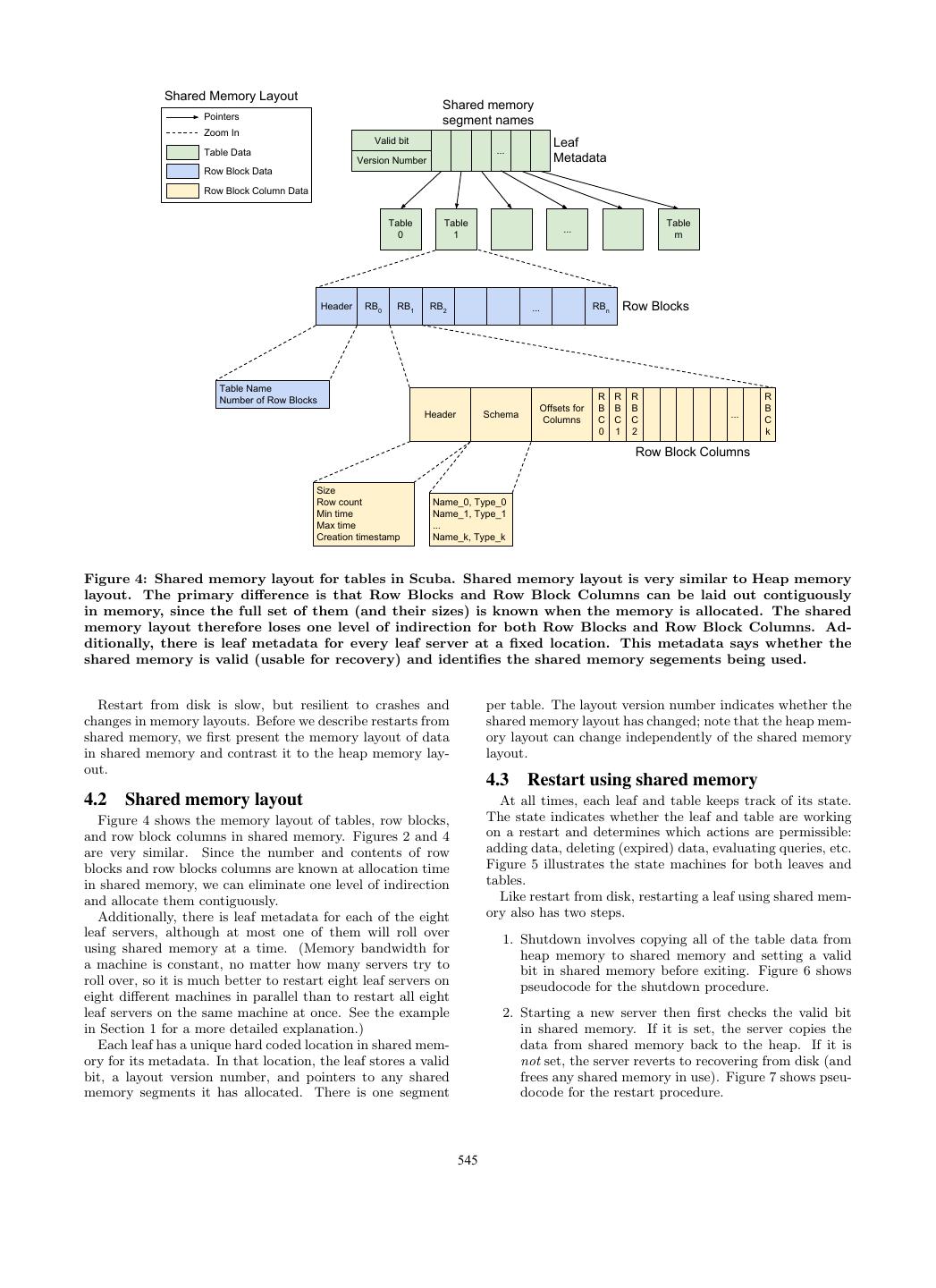

5 . Shared Memory Layout Shared memory Pointers segment names Zoom In Valid bit Leaf Table Data ... Version Number Metadata Row Block Data Row Block Column Data Table Table Table ... 0 1 m Header RB0 RB1 RB2 ... RBn Row Blocks Table Name Number of Row Blocks R R R R Offsets for B B B B Header Schema ... Columns C C C C 0 1 2 k Row Block Columns Size Row count Name_0, Type_0 Min time Name_1, Type_1 Max time ... Creation timestamp Name_k, Type_k Figure 4: Shared memory layout for tables in Scuba. Shared memory layout is very similar to Heap memory layout. The primary difference is that Row Blocks and Row Block Columns can be laid out contiguously in memory, since the full set of them (and their sizes) is known when the memory is allocated. The shared memory layout therefore loses one level of indirection for both Row Blocks and Row Block Columns. Ad- ditionally, there is leaf metadata for every leaf server at a fixed location. This metadata says whether the shared memory is valid (usable for recovery) and identifies the shared memory segements being used. Restart from disk is slow, but resilient to crashes and per table. The layout version number indicates whether the changes in memory layouts. Before we describe restarts from shared memory layout has changed; note that the heap mem- shared memory, we first present the memory layout of data ory layout can change independently of the shared memory in shared memory and contrast it to the heap memory lay- layout. out. 4.3 Restart using shared memory 4.2 Shared memory layout At all times, each leaf and table keeps track of its state. Figure 4 shows the memory layout of tables, row blocks, The state indicates whether the leaf and table are working and row block columns in shared memory. Figures 2 and 4 on a restart and determines which actions are permissible: are very similar. Since the number and contents of row adding data, deleting (expired) data, evaluating queries, etc. blocks and row blocks columns are known at allocation time Figure 5 illustrates the state machines for both leaves and in shared memory, we can eliminate one level of indirection tables. and allocate them contiguously. Like restart from disk, restarting a leaf using shared mem- Additionally, there is leaf metadata for each of the eight ory also has two steps. leaf servers, although at most one of them will roll over 1. Shutdown involves copying all of the table data from using shared memory at a time. (Memory bandwidth for heap memory to shared memory and setting a valid a machine is constant, no matter how many servers try to bit in shared memory before exiting. Figure 6 shows roll over, so it is much better to restart eight leaf servers on pseudocode for the shutdown procedure. eight different machines in parallel than to restart all eight leaf servers on the same machine at once. See the example 2. Starting a new server then first checks the valid bit in Section 1 for a more detailed explanation.) in shared memory. If it is set, the server copies the Each leaf has a unique hard coded location in shared mem- data from shared memory back to the heap. If it is ory for its metadata. In that location, the leaf stores a valid not set, the server reverts to recovering from disk (and bit, a layout version number, and pointers to any shared frees any shared memory in use). Figure 7 shows pseu- memory segments it has allocated. There is one segment docode for the restart procedure. 545

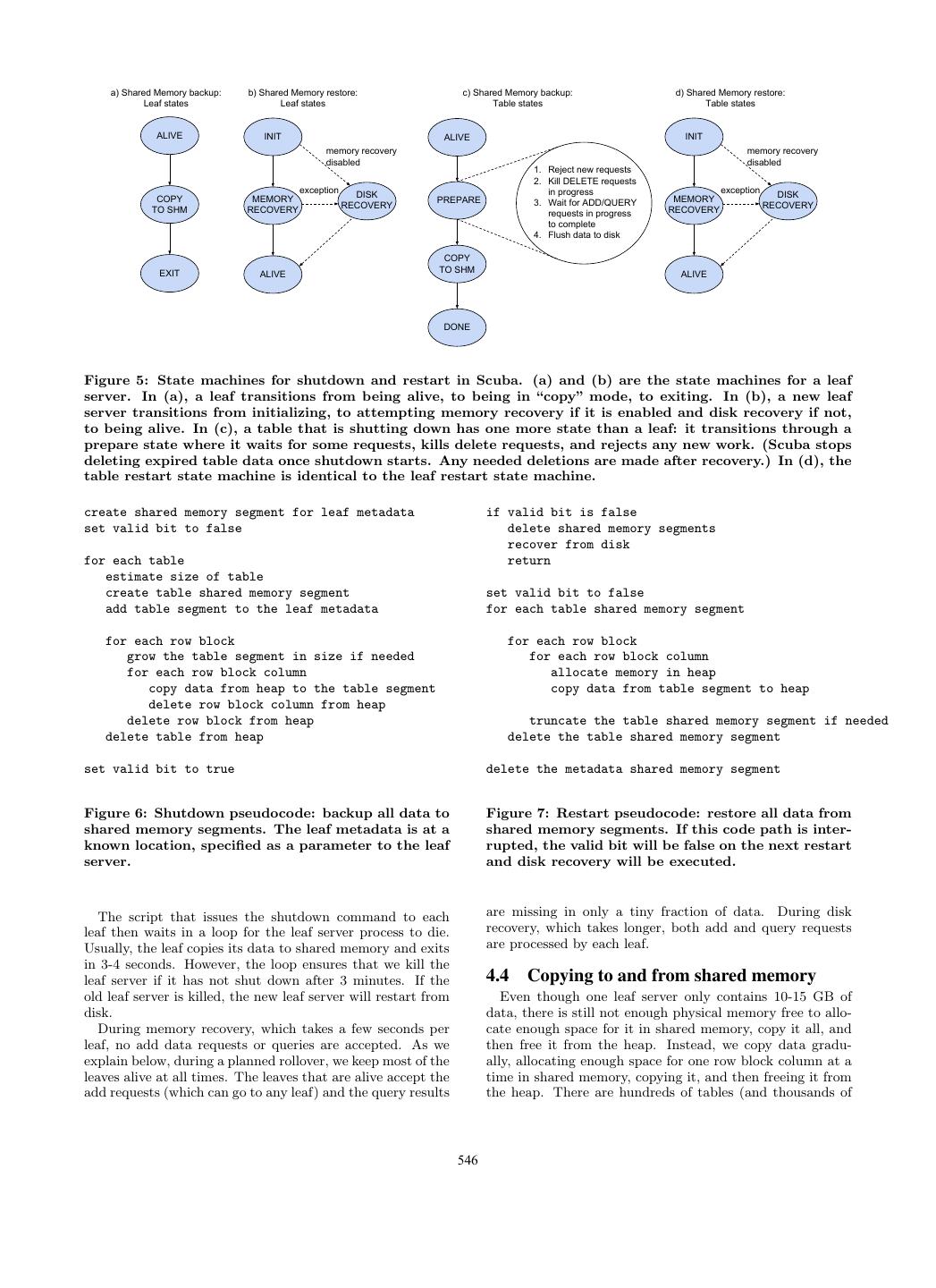

6 . a) Shared Memory backup: b) Shared Memory restore: c) Shared Memory backup: d) Shared Memory restore: Leaf states Leaf states Table states Table states ALIVE INIT ALIVE INIT memory recovery memory recovery disabled disabled 1. Reject new requests 2. Kill DELETE requests exception DISK in progress exception DISK COPY MEMORY PREPARE 3. Wait for ADD/QUERY MEMORY RECOVERY RECOVERY TO SHM RECOVERY requests in progress RECOVERY to complete 4. Flush data to disk COPY EXIT TO SHM ALIVE ALIVE DONE Figure 5: State machines for shutdown and restart in Scuba. (a) and (b) are the state machines for a leaf server. In (a), a leaf transitions from being alive, to being in “copy” mode, to exiting. In (b), a new leaf server transitions from initializing, to attempting memory recovery if it is enabled and disk recovery if not, to being alive. In (c), a table that is shutting down has one more state than a leaf: it transitions through a prepare state where it waits for some requests, kills delete requests, and rejects any new work. (Scuba stops deleting expired table data once shutdown starts. Any needed deletions are made after recovery.) In (d), the table restart state machine is identical to the leaf restart state machine. create shared memory segment for leaf metadata if valid bit is false set valid bit to false delete shared memory segments recover from disk for each table return estimate size of table create table shared memory segment set valid bit to false add table segment to the leaf metadata for each table shared memory segment for each row block for each row block grow the table segment in size if needed for each row block column for each row block column allocate memory in heap copy data from heap to the table segment copy data from table segment to heap delete row block column from heap delete row block from heap truncate the table shared memory segment if needed delete table from heap delete the table shared memory segment set valid bit to true delete the metadata shared memory segment Figure 6: Shutdown pseudocode: backup all data to Figure 7: Restart pseudocode: restore all data from shared memory segments. The leaf metadata is at a shared memory segments. If this code path is inter- known location, specified as a parameter to the leaf rupted, the valid bit will be false on the next restart server. and disk recovery will be executed. The script that issues the shutdown command to each are missing in only a tiny fraction of data. During disk leaf then waits in a loop for the leaf server process to die. recovery, which takes longer, both add and query requests Usually, the leaf copies its data to shared memory and exits are processed by each leaf. in 3-4 seconds. However, the loop ensures that we kill the leaf server if it has not shut down after 3 minutes. If the 4.4 Copying to and from shared memory old leaf server is killed, the new leaf server will restart from Even though one leaf server only contains 10-15 GB of disk. data, there is still not enough physical memory free to allo- During memory recovery, which takes a few seconds per cate enough space for it in shared memory, copy it all, and leaf, no add data requests or queries are accepted. As we then free it from the heap. Instead, we copy data gradu- explain below, during a planned rollover, we keep most of the ally, allocating enough space for one row block column at a leaves alive at all times. The leaves that are alive accept the time in shared memory, copying it, and then freeing it from add requests (which can go to any leaf) and the query results the heap. There are hundreds of tables (and thousands of 546

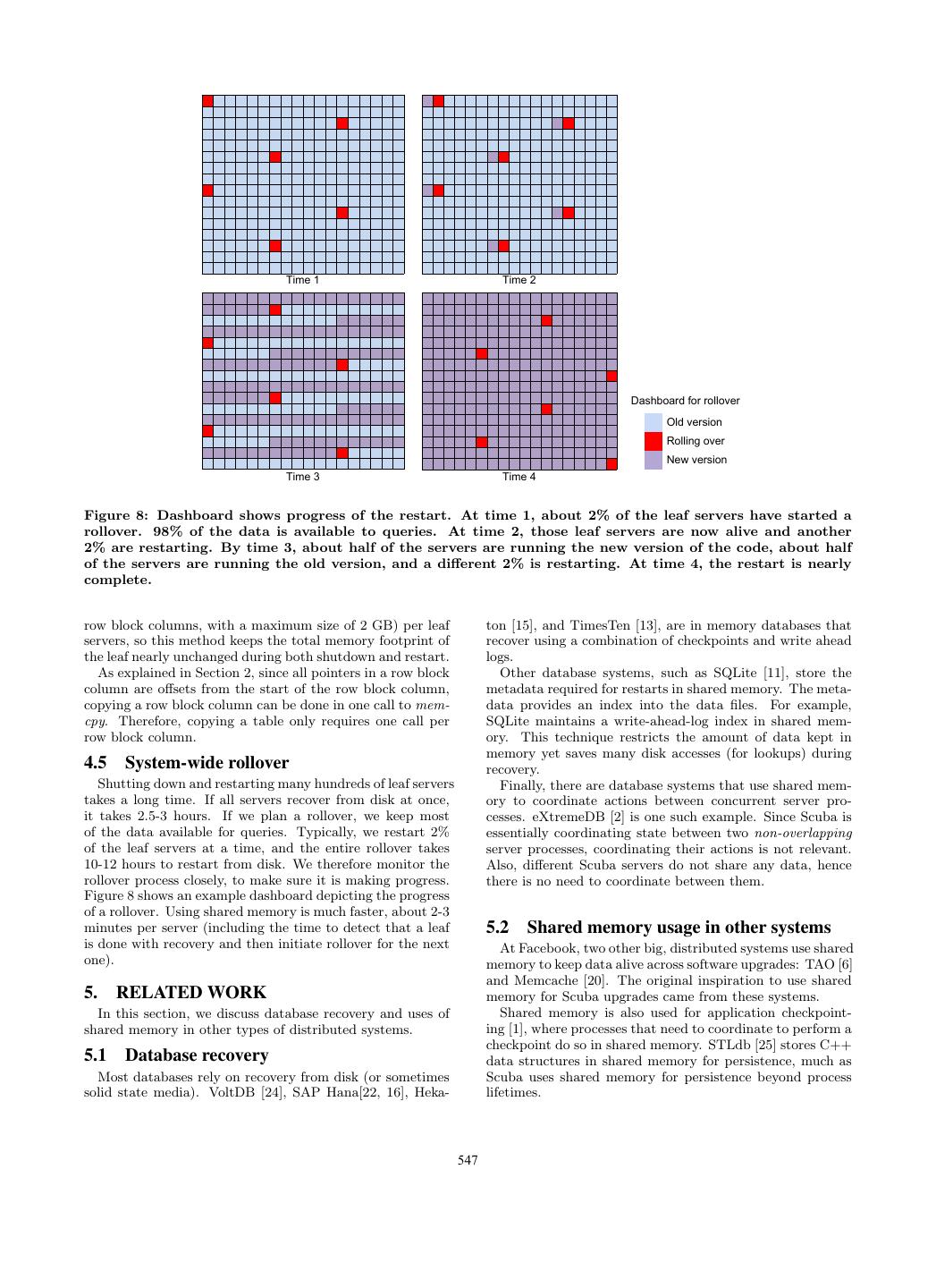

7 . Time 1 Time 2 Dashboard for rollover Old version Rolling over New version Time 3 Time 4 Figure 8: Dashboard shows progress of the restart. At time 1, about 2% of the leaf servers have started a rollover. 98% of the data is available to queries. At time 2, those leaf servers are now alive and another 2% are restarting. By time 3, about half of the servers are running the new version of the code, about half of the servers are running the old version, and a different 2% is restarting. At time 4, the restart is nearly complete. row block columns, with a maximum size of 2 GB) per leaf ton [15], and TimesTen [13], are in memory databases that servers, so this method keeps the total memory footprint of recover using a combination of checkpoints and write ahead the leaf nearly unchanged during both shutdown and restart. logs. As explained in Section 2, since all pointers in a row block Other database systems, such as SQLite [11], store the column are offsets from the start of the row block column, metadata required for restarts in shared memory. The meta- copying a row block column can be done in one call to mem- data provides an index into the data files. For example, cpy. Therefore, copying a table only requires one call per SQLite maintains a write-ahead-log index in shared mem- row block column. ory. This technique restricts the amount of data kept in memory yet saves many disk accesses (for lookups) during 4.5 System-wide rollover recovery. Shutting down and restarting many hundreds of leaf servers Finally, there are database systems that use shared mem- takes a long time. If all servers recover from disk at once, ory to coordinate actions between concurrent server pro- it takes 2.5-3 hours. If we plan a rollover, we keep most cesses. eXtremeDB [2] is one such example. Since Scuba is of the data available for queries. Typically, we restart 2% essentially coordinating state between two non-overlapping of the leaf servers at a time, and the entire rollover takes server processes, coordinating their actions is not relevant. 10-12 hours to restart from disk. We therefore monitor the Also, different Scuba servers do not share any data, hence rollover process closely, to make sure it is making progress. there is no need to coordinate between them. Figure 8 shows an example dashboard depicting the progress of a rollover. Using shared memory is much faster, about 2-3 minutes per server (including the time to detect that a leaf 5.2 Shared memory usage in other systems is done with recovery and then initiate rollover for the next At Facebook, two other big, distributed systems use shared one). memory to keep data alive across software upgrades: TAO [6] and Memcache [20]. The original inspiration to use shared 5. RELATED WORK memory for Scuba upgrades came from these systems. In this section, we discuss database recovery and uses of Shared memory is also used for application checkpoint- shared memory in other types of distributed systems. ing [1], where processes that need to coordinate to perform a checkpoint do so in shared memory. STLdb [25] stores C++ 5.1 Database recovery data structures in shared memory for persistence, much as Most databases rely on recovery from disk (or sometimes Scuba uses shared memory for persistence beyond process solid state media). VoltDB [24], SAP Hana[22, 16], Heka- lifetimes. 547

8 .6. CONCLUSIONS [2] eXtremeDB Embedded In-Memory Database System. Using shared memory to store data between database server http://www.mcobject.com/standardedition.shtml. process lifetimes provides a fast rollover solution for Scuba. [3] Scribe. https://github.com/facebook/scribe. No extra memory or machines are needed, since we allocate, [4] Sharing memory between processes - 1.54.0. copy, and free data in chunks of one row block column (at http://www.boost.org/doc/libs/1 54 0/, 2013. most 1 GB) at a time. We can restart one Scuba machine in [5] L. Abraham, J. Allen, O. Barykin, V. Borkar, 2-3 minutes using shared memory versus 2-3 hours from disk. B. Chopra, C. Gerea, D. Merl, J. Metzler, D. Reiss, These numbers also apply to restarts of all of the machines S. Subramanian, et al. Scuba: diving into data at at the same time. facebook. In VLDB, pages 1057–1067, 2013. Copying data between heap and shared memory has sev- [6] N. Bronson, Z. Amsden, G. Cabrera, P. Chakka, eral advantages. Allocating and freeing heap memory during P. Dimov, H. Ding, J. Ferris, A. Giardullo, normal operation remains simple and uses well-tested code S. Kulkarni, H. Li, M. Marchukov, D. Petrov, paths. The copying code is simple and, even though it is L. Puzar, Y. J. Song, and V. Venkataramani. Tao: used infrequently, less likely to have bugs. Finally, separat- Facebook’s distributed data store for the social graph. ing the heap data structures from the shared memory data In USENIX, 2013. structures means that we can modify the heap data format [7] Y. Collet. Lz4: Extremely fast compression algorithm. and restart using shared memory. code.google.com, 2013. Furthermore, this fast rollover path allows us to deploy ex- [8] J. Evans. A scalable concurrent malloc (3) perimental software builds on a handful of machines, which implementation for FreeBSD. In BSDCan, 2006. we could not do if took longer. We can add more logging, [9] D. G. Feitelson, E. Frachtenberg, and K. L. Beck. test bug fixes, and try new software designs — and then Development and deployment at Facebook. IEEE revert the changes if we wish. This use of shared memory Internet Computing, 17(4):8–17, 2013. rollovers as a software development tool is common in the [10] A. Hall, O. Bachmann, R. B¨ ussow, S. G˘anceanu, and Memcache and TAO teams at Facebook. M. Nunkesser. Processing a trillion cells per mouse To maintain high availability of data without replication, click. PVLDB, 5(11):1436–1446, July 2012. we typically restart only 2% of Scuba servers at a time. By running N leaf servers on each machine (instead of only one [11] D. R. Hipp. Sqlite: Write-ahead log. leaf server), we increase the number of restarting servers by http://www.sqlite.org/draft/wal.html. a factor of N . Restarting only one leaf server per machine [12] S. Idreos, F. Groffen, N. Nes, S. Manegold, K. S. at a time then means that N times as many machines are Mullender, and M. L. Kersten. Monetdb: Two decades active in the rollover — and we get close to N times as much of research in column-oriented database architectures. disk bandwidth (for disk recovery) and memory bandwidth IEEE Data Eng. Bull., 35(1):40–45, 2012. (for shared memory recovery). We can restart the entire [13] T. Lahiri, M.-A. Neimat, and S. Folkman. Oracle cluster of Scuba machines in under an hour by using shared TimesTen: An In-Memory Database for Enterprise memory, with 98% of data online and available to queries. Applications. IEEE Data Eng. Bull., 36(2):6–13, 2013. In contrast, disk recovery takes about 12 hours. (The de- [14] A. Lamb, M. Fuller, R. Varadarajan, N. Tran, ployment software is responsible for about 40 minutes of B. Vandier, L. Doshi, and C. Bear. The Vertica overhead.) Analytic Database: C-Store 7 Years Later . PVLDB, One large overhead in Scuba’s disk recovery is translat- 5(12):1790–1801, 2012. ing from the disk format to the heap memory format. This [15] P.-˚A. Larson, M. Zwilling, and K. Farlee. The Hekaton translation overhead is both time-consuming and CPU-intensive. Memory-Optimized OLTP Engine. IEEE Data Eng. We are planning to use the shared memory format described Bull., 36(2):34–40, 2013. in this paper as the disk format, instead. We expect that the [16] J. Lee, M. Muehle, N. May, F. Faerber, V. Sikka, much simpler translation to heap memory format will speed H. Plattner, J. Krueger, and M. Grund. up disk recovery significantly. We still need to recover from High-Performance Transaction Processing in SAP disk in case of software or hardware failures and hardware HANA. IEEE Data Eng. Bull., 36(2):28–33, 2013. upgrades. [17] C. Legnitto. 1m people try to help Facebook spruce We also expect that replacing disks with solid state drives up Android. will speed up recovery from persistent storage, but writing http://news.cnet.com/8301-1023 3-57614540-93/1m- to and reading back from memory will still be faster. people-try-to-help-facebook-spruce-up-android/. [18] C. Legnitto. Update on the Facebook for Android beta 7. ACKNOWLEDGMENTS testing program. https://m.facebook.com/notes/facebook- Jay Parikh first suggested using shared memory for recov- engineering/update-on-the-facebook-for-android-beta- ery. Jason Evans convinced us not to write a custom alloca- testing-program/10151729114953920. tor in shared memory. Ryan McElroy and Nathan Bronson explained how Facebook’s Memcache and TAO, respectively, [19] S. Melnik, A. Gubarev, J. J. Long, G. Romer, use shared memory to make recovery faster. S. Shivakumar, M. Tolton, and T. Vassilakis. Dremel: Interactive analysis of web-scale datasets. PVLDB, 3(1):330–339, 2010. 8. REFERENCES [20] R. Nishtala, H. Fugal, S. Grimm, M. Kwiatkowski, H. Lee, H. C. Li, R. McElroy, M. Paleczny, D. Peek, [1] Application checkpointing. P. Saab, D. Stafford, T. Tung, and V. Venkataramani. http://en.wikipedia.org/wiki/Application checkpointing. 548

9 . Scaling Memcache at Facebook. In NSDI, pages [23] M. Stonebraker, D. J. Abadi, A. Batkin, X. Chen, 385–398. USENIX Association, 2013. M. Cherniack, M. Ferreira, E. Lau, A. Lin, S. Madden, [21] M. A. Olson, K. Bostic, and M. I. Seltzer. Berkeley E. O’Neil, P. O’Neil, A. Rasin, N. Tran, and DB. In USENIX, pages 183–191, 1999. S. Zdonik. C-Store: A Column-Oriented DBMS. In [22] V. Sikka, F. F¨ arber, W. Lehner, S. K. Cha, T. Peh, VLDB, pages 553–564, 2005. and C. Bornh¨ ovd. Efficient transaction processing in [24] M. Stonebraker and A. Weisberg. The VoltDB Main SAP HANA database: the end of a column store Memory DBMS. IEEE Data Eng. Bull., 36(2):21–27, myth. In SIGMOD, pages 731–742, 2012. 2013. [25] B. Walters. STLdb. http://sourceforge.net/apps/trac/stldb/. 549