- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Online Transaction Processing databases

展开查看详情

1 .OLTP Through the Looking Glass, and What We Found There Stavros Harizopoulos Daniel J. Abadi Samuel Madden Michael Stonebraker HP Labs Yale University Massachusetts Institute of Technology Palo Alto, CA New Haven, CT Cambridge, MA stavros@hp.com dna@cs.yale.edu {madden, stonebraker}@csail.mit.edu ABSTRACT 1. INTRODUCTION Online Transaction Processing (OLTP) databases include a suite Modern general purpose online transaction processing (OLTP) of features — disk-resident B-trees and heap files, locking-based database systems include a standard suite of features: a collection concurrency control, support for multi-threading — that were of on-disk data structures for table storage, including heap files optimized for computer technology of the late 1970’s. Advances and B-trees, support for multiple concurrent queries via locking- in modern processors, memories, and networks mean that today’s based concurrency control, log-based recovery, and an efficient computers are vastly different from those of 30 years ago, such buffer manager. These features were developed to support trans- that many OLTP databases will now fit in main memory, and action processing in the 1970’s and 1980’s, when an OLTP data- most OLTP transactions can be processed in milliseconds or less. base was many times larger than the main memory, and when the Yet database architecture has changed little. computers that ran these databases cost hundreds of thousands to millions of dollars. Based on this observation, we look at some interesting variants of conventional database systems that one might build that exploit Today, the situation is quite different. First, modern processors recent hardware trends, and speculate on their performance are very fast, such that the computation time for many OLTP- through a detailed instruction-level breakdown of the major com- style transactions is measured in microseconds. For a few thou- ponents involved in a transaction processing database system sand dollars, a system with gigabytes of main memory can be (Shore) running a subset of TPC-C. Rather than simply profiling purchased. Furthermore, it is not uncommon for institutions to Shore, we progressively modified it so that after every feature own networked clusters of many such workstations, with aggre- removal or optimization, we had a (faster) working system that gate memory measured in hundreds of gigabytes — sufficient to fully ran our workload. Overall, we identify overheads and opti- keep many OLTP databases in RAM. mizations that explain a total difference of about a factor of 20x Second, the rise of the Internet, as well as the variety of data in raw performance. We also show that there is no single “high intensive applications in use in a number of domains, has led to a pole in the tent” in modern (memory resident) database systems, rising interest in database-like applications without the full suite but that substantial time is spent in logging, latching, locking, B- of standard database features. Operating systems and networking tree, and buffer management operations. conferences are now full of proposals for “database-like” storage systems with varying forms of consistency, reliability, concur- Categories and Subject Descriptors rency, replication, and queryability [DG04, CDG+06, GBH+00, H.2.4 [Database Management]: Systems — transaction process- SMK+01]. ing; concurrency. This rising demand for database-like services, coupled with dra- General Terms matic performance improvements and cost reduction in hard- ware, suggests a number of interesting alternative systems that Measurement, Performance, Experimentation. one might build with a different set of features than those pro- vided by standard OLTP engines. Keywords Online Transaction Processing, OLTP, main memory transaction processing, DBMS architecture. 1.1 Alternative DBMS Architectures Obviously, optimizing OLTP systems for main memory is a good idea when a database fits in RAM. But a number of other data- base variants are possible; for example: Permission to make digital or hard copies of all or part of this work for per- sonal or classroom use is granted without fee provided that copies are not • Logless databases. A log-free database system might either made or distributed for profit or commercial advantage and that copies bear not need recovery, or might perform recovery from other sites this notice and the full citation on the first page. To copy otherwise, or in a cluster (as was proposed in systems like Harp [LGG+91], republish, to post on servers or to redistribute to lists, requires prior spe- Harbor [LM06], and C-Store [SAB+05]). cific permission and/or a fee. SIGMOD’08, June 9–12, 2008, Vancouver, BC, Canada. • Single threaded databases. Since multi-threading in OLTP Copyright 2008 ACM 978-1-60558-102-6/08/06...$5.00. databases was traditionally important for latency hiding in the

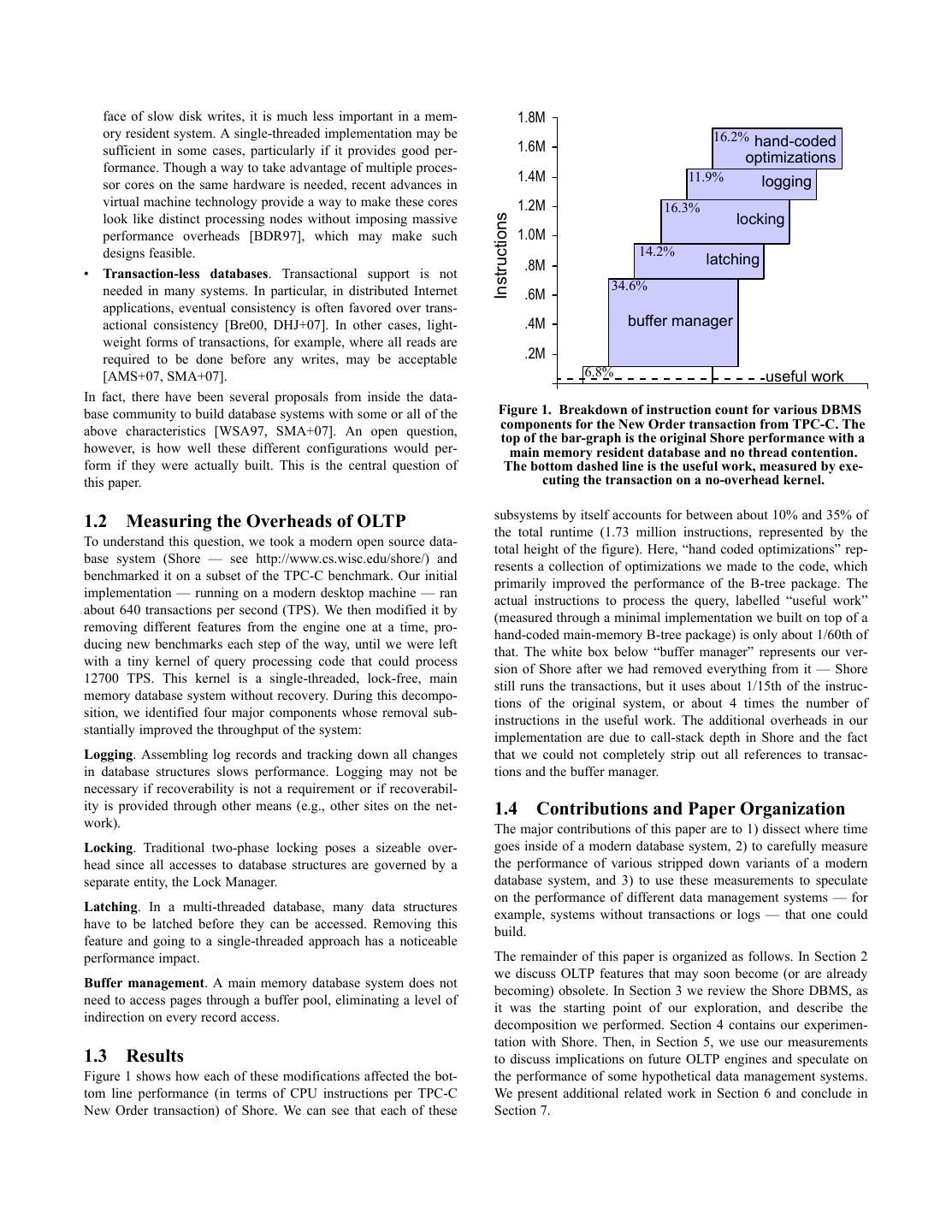

2 . face of slow disk writes, it is much less important in a mem- 1.8M ory resident system. A single-threaded implementation may be 16.2% hand-coded sufficient in some cases, particularly if it provides good per- 1.6M optimizations formance. Though a way to take advantage of multiple proces- 1.4M 11.9% logging sor cores on the same hardware is needed, recent advances in virtual machine technology provide a way to make these cores 1.2M 16.3% look like distinct processing nodes without imposing massive locking Instructions performance overheads [BDR97], which may make such 1.0M designs feasible. 14.2% .8M latching • Transaction-less databases. Transactional support is not needed in many systems. In particular, in distributed Internet 34.6% .6M applications, eventual consistency is often favored over trans- actional consistency [Bre00, DHJ+07]. In other cases, light- .4M buffer manager weight forms of transactions, for example, where all reads are required to be done before any writes, may be acceptable .2M [AMS+07, SMA+07]. 6.8% useful work .0M In fact, there have been several proposals from inside the data- base community to build database systems with some or all of the Figure 1. Breakdown of instruction count for various DBMS above characteristics [WSA97, SMA+07]. An open question, components for the New Order transaction from TPC-C. The top of the bar-graph is the original Shore performance with a however, is how well these different configurations would per- main memory resident database and no thread contention. form if they were actually built. This is the central question of The bottom dashed line is the useful work, measured by exe- this paper. cuting the transaction on a no-overhead kernel. subsystems by itself accounts for between about 10% and 35% of 1.2 Measuring the Overheads of OLTP the total runtime (1.73 million instructions, represented by the To understand this question, we took a modern open source data- total height of the figure). Here, “hand coded optimizations” rep- base system (Shore — see http://www.cs.wisc.edu/shore/) and resents a collection of optimizations we made to the code, which benchmarked it on a subset of the TPC-C benchmark. Our initial primarily improved the performance of the B-tree package. The implementation — running on a modern desktop machine — ran actual instructions to process the query, labelled “useful work” about 640 transactions per second (TPS). We then modified it by (measured through a minimal implementation we built on top of a removing different features from the engine one at a time, pro- hand-coded main-memory B-tree package) is only about 1/60th of ducing new benchmarks each step of the way, until we were left that. The white box below “buffer manager” represents our ver- with a tiny kernel of query processing code that could process sion of Shore after we had removed everything from it — Shore 12700 TPS. This kernel is a single-threaded, lock-free, main still runs the transactions, but it uses about 1/15th of the instruc- memory database system without recovery. During this decompo- tions of the original system, or about 4 times the number of sition, we identified four major components whose removal sub- instructions in the useful work. The additional overheads in our stantially improved the throughput of the system: implementation are due to call-stack depth in Shore and the fact Logging. Assembling log records and tracking down all changes that we could not completely strip out all references to transac- in database structures slows performance. Logging may not be tions and the buffer manager. necessary if recoverability is not a requirement or if recoverabil- ity is provided through other means (e.g., other sites on the net- 1.4 Contributions and Paper Organization work). The major contributions of this paper are to 1) dissect where time Locking. Traditional two-phase locking poses a sizeable over- goes inside of a modern database system, 2) to carefully measure head since all accesses to database structures are governed by a the performance of various stripped down variants of a modern separate entity, the Lock Manager. database system, and 3) to use these measurements to speculate on the performance of different data management systems — for Latching. In a multi-threaded database, many data structures example, systems without transactions or logs — that one could have to be latched before they can be accessed. Removing this build. feature and going to a single-threaded approach has a noticeable performance impact. The remainder of this paper is organized as follows. In Section 2 we discuss OLTP features that may soon become (or are already Buffer management. A main memory database system does not becoming) obsolete. In Section 3 we review the Shore DBMS, as need to access pages through a buffer pool, eliminating a level of it was the starting point of our exploration, and describe the indirection on every record access. decomposition we performed. Section 4 contains our experimen- tation with Shore. Then, in Section 5, we use our measurements 1.3 Results to discuss implications on future OLTP engines and speculate on Figure 1 shows how each of these modifications affected the bot- the performance of some hypothetical data management systems. tom line performance (in terms of CPU instructions per TPC-C We present additional related work in Section 6 and conclude in New Order transaction) of Shore. We can see that each of these Section 7.

3 .2. TRENDS IN OLTP cessors as multiple nodes in a shared-nothing cluster, perhaps As mentioned in the introduction, most popular relational managed by a virtual machine monitor that dynamically allocates RDBMSs trace their roots to systems developed in the 1970’s, resources between these logical nodes [BDR97]. and include features like disk-based indexing and heap files, log- Another concern is that networks will become the new disks, based transactions, and locking-based concurrency control. How- introducing latency into distributed transactions and requiring the ever, 30 years have passed since these architectural decisions re-introduction of transactions. This is certainly true in the gen- were made. At the present time, the computing world is quite dif- eral case, but for many transaction applications, it is possible to ferent from when these traditional systems were designed; the partition the workload to be “single-sited” [Hel07, SMA+07], purpose of this section is to explore the impact of these differ- such that all transactions can be run entirely on a single node in a ences. We made a similar set of observations in [SMA+07]. cluster. Hence, certain classes of database applications will not need sup- 2.1 Cluster Computing port for multi-threading; in such systems, legacy locking and Most current generation RDBMSs were originally written for latching code becomes unnecessary overhead. shared memory multi-processors in the 1970’s. Many vendors added support for shared disk architectures in the 1980’s. The last 2.4 High Availability vs. Logging two decades have seen the advent of Gamma-style shared nothing Production transaction processing systems require 24x7 availabil- databases [DGS+90] and the rise of clusters of commodity PCs ity. For this reason, most systems use some form of high avail- for many large scale computing tasks. Any future database system ability, essentially using two (or more) times the hardware to must be designed from the ground up to run on such clusters. ensure that there is an available standby in the event of a failure. 2.2 Memory Resident Databases Recent papers [LM06] have shown that, at least for warehouse systems, it is possible to exploit these available standbys to facili- Given the dramatic increase in RAM sizes over the past several tate recovery. In particular, rather than using a REDO log, recov- decades, there is every reason to believe that many OLTP systems ery can be accomplished by copying missing state from other already fit or will soon fit into main memory, especially the database replicas. In our previous work we have claimed that this aggregate main memory of a large cluster. This is largely because can be done for transaction systems as well [SMA+07]. If this is the sizes of most OTLP systems are not growing as dramatically in fact the case, then the recovery code in legacy databases as RAM capacity, as the number of customers, products, and becomes also unnecessary overhead. other real world entities they record information about does not scale with Moore’s law. Given this observation, it makes sense for database vendors to create systems that optimize for the com- 2.5 Transaction Variants mon case of a memory resident system. In such systems, opti- Although many OLTP systems clearly require transactional mized indices [RR99, RR00] as well as eschewing disk-optimized semantics, there have recently been proposals — particularly in tuple formats and page layouts (or lack thereof) [GS92] are the Internet domain — for data management systems with relaxed important to consider. consistency. Typically, what is desired is some form of eventual consistency [Bre00, DHJ+07] in the belief that availability is 2.3 Single Threading in OLTP Systems more important than transactional semantics. Databases for such environments are likely to need little of the machinery developed All modern databases include extensive support for multi-thread- for transactions (e.g., logs, locks, two-phase commit, etc.). ing, including a collection of transactional concurrency control protocols as well as extensive infiltration of their code with latch- Even if one requires some form of strict consistency, many ing commands to support multiple threads accessing shared struc- slightly relaxed models are possible. For example, the widespread tures like buffer pools and index pages. The traditional adoption of snapshot isolation (which is non-transactional) sug- motivations for multi-threading are to allow transaction process- gests that many users are willing to trade transactional semantics ing to occur on behalf of one transaction while another waits for for performance (in this case, due to the elimination of read data to come from disk, and to prevent long-running transactions locks). from keeping short transactions from making progress. And finally, recent research has shown that there are limited We claim that neither of these motivations is valid any more. forms of transactions that require substantially less machinery First, if databases are memory resident, then there are never any than standard database transactions. For example, if all transac- disk waits. Furthermore, production transaction systems do not tions are “two-phase” — that is, they perform all of their reads include any user waits — transactions are executed almost exclu- before any of their writes and are guaranteed not to abort after sively through stored procedures. Second, OLTP workloads are completing their reads — then UNDO logging is not necessary very simple. A typical transaction consists of a few index lookups [AMS+07, SMA+07]. and updates, which, in a memory resident system, can be com- pleted in hundreds of microseconds. Moreover, with the bifurca- 2.6 Summary tion of the modern database industry into a transaction processing As our references suggest, several research groups, including and a warehousing market, long running (analytical) queries are Amazon [DHJ+07], HP [AMS+07], NYU [WSA97], and MIT now serviced by warehouses. [SMA+07] have demonstrated interest in building systems that One concern is that multi-threading is needed to support differ substantially from the classic OTLP design. In particular, machines with multiple processors. We believe, however, that this the MIT H-Store [SMA+07] system demonstrates that removing can be addressed by treating one physical node with multiple pro- all of the above features can yield a two-order-of-magnitude

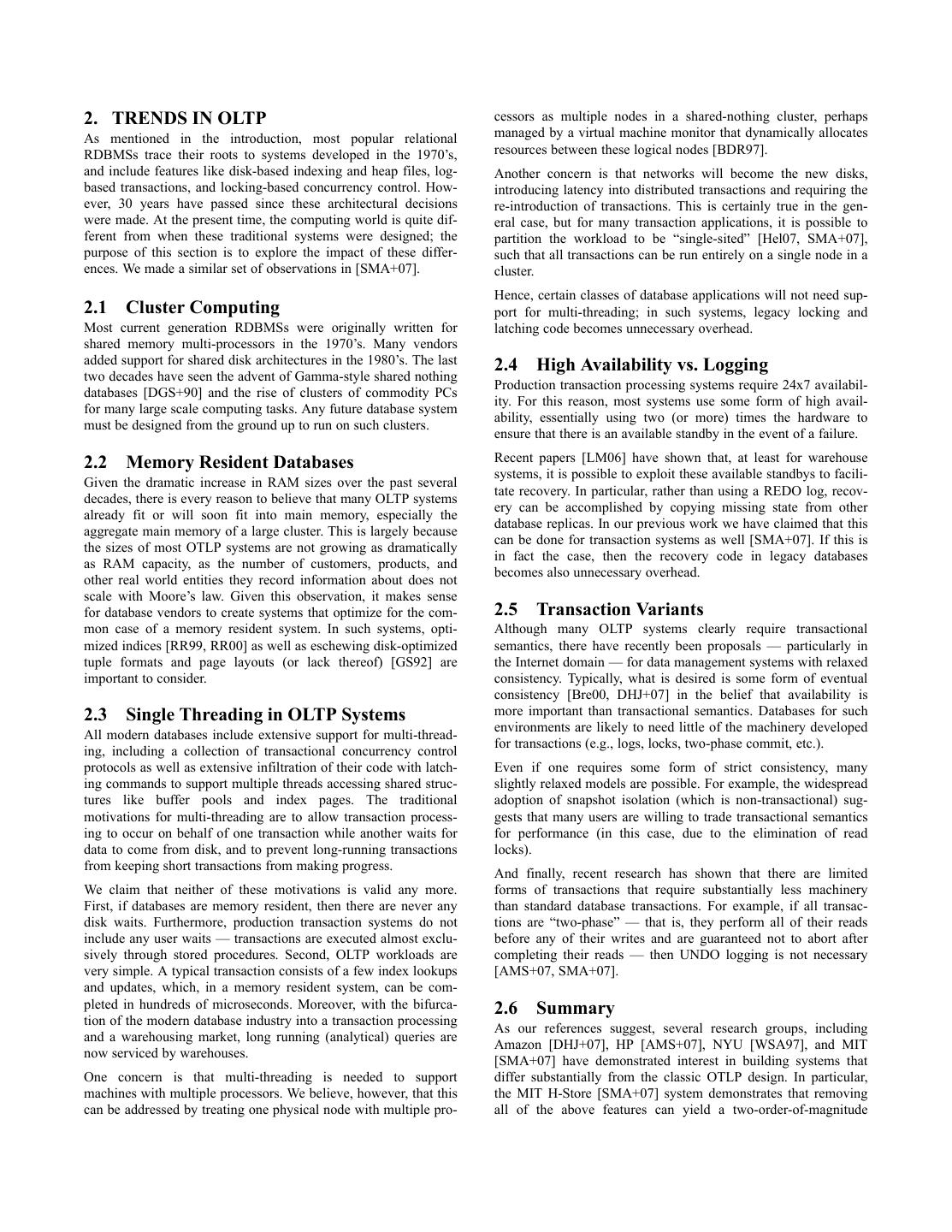

4 .speedup in transaction throughput, suggesting that some of these databases variants are likely to provide remarkable performance. Transaction Manager Hence, it would seem to behoove the traditional database vendors Lock Manager to consider producing products with some of these features Log Manager Buffer Manager explicitly disabled. With the goal of helping these implementers understand the performance impact of different variants they may consider building, we proceed with our detailed performance concurrency control study of Shore and the variants of it we created. storage structures 3. SHORE (indexes, files, directory) Shore (Scalable Heterogeneous Object Repository) was devel- oped at the University of Wisconsin in the early 1990’s and was Application designed to be a typed, persistent object system borrowing from Transactions both file system and object-oriented database technologies (one per thread) [CDF+94]. It had a layered architecture that allowed users to choose the appropriate level of support for their application from several components. These layers (type system, unix compatibil- Figure 2. Basic components in Shore (see text for detailed description). ity, language heterogeneity) were provided on top of the Shore Storage Manager (SSM). The storage manager provided features that are found in all modern DBMS: full concurrency control and Inside the transaction body (enclosed by begin and commit state- recovery (ACID transaction properties) with two-phase locking ments) the application programmer uses Shore’s methods to and write-ahead logging, along with a robust implementation of access the storage structures: the file and indexes, along with a B-trees. Its basic design comes from ideas described in Gray’s directory to find them. All the storage structures use slotted pages and Reuter’s seminal book on transaction processing [GR93], to store information. Shore’s methods run under the transaction with many algorithms implemented straight from the ARIES manager which closely interacts with all other components. papers [MHL+92, Moh89, ML89]. Accessing the storage structures involves calls to the Log Man- ager, the Lock Manager, and the Buffer Pool Manager. These Support for the project ended in the late 1990’s, but continued for invocations always happen through a concurrency control layer, the Shore Storage Manager; as of 2007, SSM version 5.0 is avail- which oversees shared and mutually exclusive accesses to the able for Linux on Intel x86 processors. Throughout the paper we various resources. This is not a separate module; rather, through- use “Shore” to refer to the Shore Storage Manager. Information out the code, all accesses to shared structures happen by acquiring and source code of Shore is available online1. In the rest of this a latch. Latches are similar to database locks (in that they can be section we discuss the key components of Shore, its code struc- shared or exclusive), but they are lightweight and come with no ture, the characteristics of Shore that affect end-to-end perfor- deadlock detection mechanisms. The application programmers mance, along with our set of modifications and the effect of these need to ensure that latching will not lead to deadlock. modifications to the code line. Next, we discuss the thread architecture and give more details on 3.1 Shore Architecture locking, logging, and the buffer pool management. There are several features of Shore that we do not describe as Thread support. Shore provides its own user-level, non-preemp- they are not relevant to this paper. These include disk volume tive thread package that was derived from NewThreads (origi- management (we pre-load the entire database in main memory), nally developed at the University of Washington), providing a recovery (we do not examine application crashes), distributed portable OS interface API. The choice of the thread package had transactions, and access methods other than B-trees (such as R- implications for the code design and behavior of Shore. Since trees). The remaining features can be organized roughly into the threads are user-level, the application runs as a single process, components shown in Figure 2. multiplexing all Shore threads. Shore avoids blocking for I/O by Shore is provided as a library; the user code (in our case, the spawning separate processes responsible for I/O devices (all pro- implementation of the TPC-C benchmark) is linked against the cesses communicate through shared memory). However, applica- library and must use the threads library that Shore also uses. Each tions cannot take direct advantage of multicore (or SMP) systems, transaction runs inside a Shore thread, accessing both local user- unless they are built as part of a distributed application; that, space variables and Shore-provided data structures and methods. however, would add unnecessary overhead for multicore CPUs, The methods relevant to OLTP are those needed to create and when simple, non-user level threading would be sufficient. populate a database file, load it into the buffer pool, begin, com- Consequently, for the results reported throughout this paper, we mit, or abort a transaction, and perform record-level operations use single-threaded operation. A system that uses multithreaded such as fetch, update, create, and delete, along with the associated operation would consume a larger number of instructions and operations on primary and secondary B-tree indexes. CPU cycles per transaction (since thread code would need to be executed in addition to transactional code). Since the primary goal of the paper is to look at the cost in CPU instructions of var- ious database system components, the lack of a full multi-thread- 1. http:// www.cs.wisc.edu/shore/ ing implementation in Shore only affects our results in that we

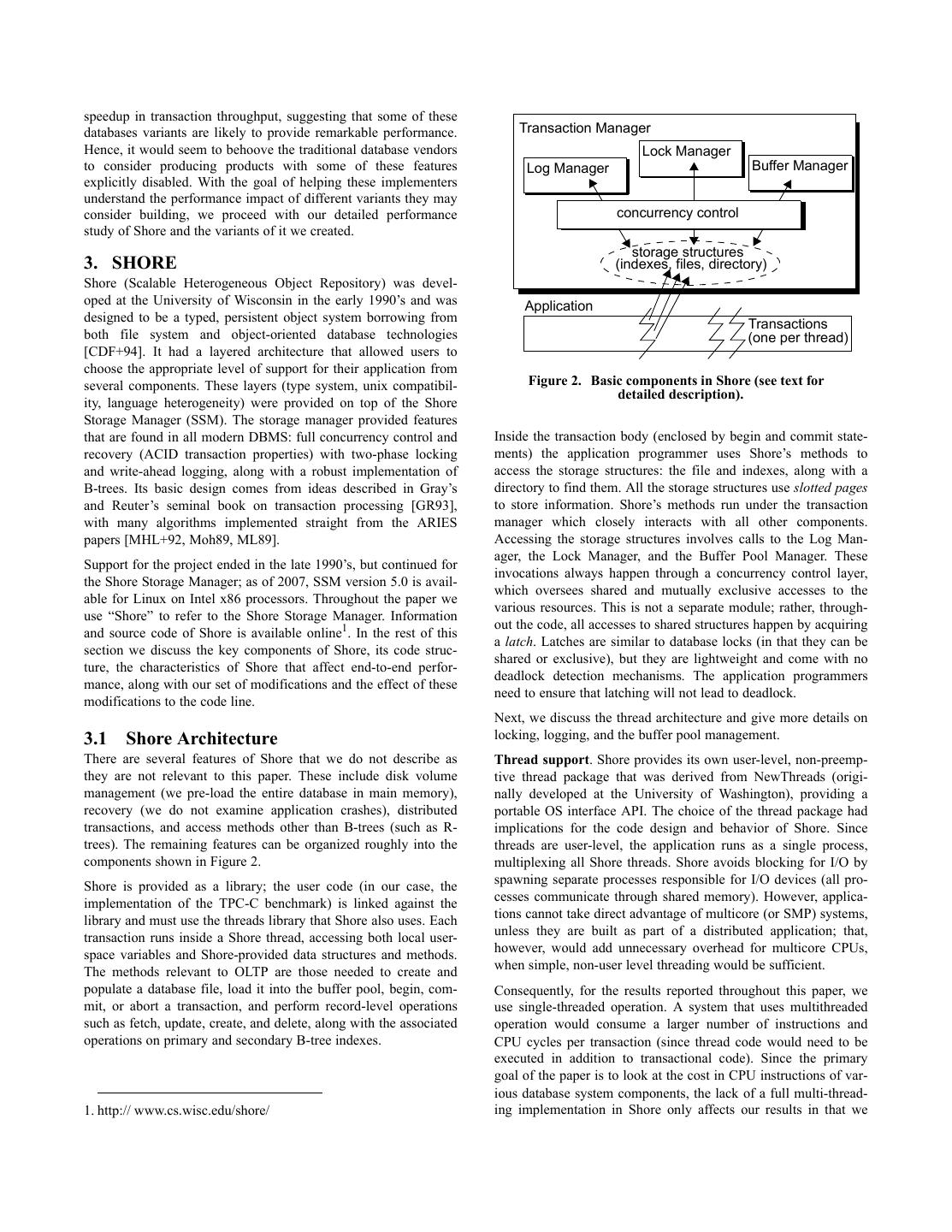

5 . Table 1: Possible set of optimizations for OLTP performing the update there, and handing the new data to the stor- age manager. OLTP properties and DBMS modification More details on the architecture of Shore can be found at the new platforms project’s web site. Some additional mechanisms and features are logless architectures remove logging also described in the following paragraphs, where we discuss our own modifications to Shore. remove locking partitioning, commutativity (when applicable) 3.2 Removing Shore Components single thread, Table 1 summarizes the properties and characteristics of modern one transaction at a time remove locking, OLTP systems (left column) that allow us to strip certain func- remove latching tionality from a DBMS (right column). We use these optimiza- remove buffer manager, tions as a guideline for modifying Shore. Due to the tight main memory resident integration of all managers in Shore, it was not possible to cleanly directory separate all components so that they could be removed in an arbi- transaction-less databases avoid transaction bookkeeping trary order. The next best thing was to remove features in an order dictated by the structure of the code, allowing for flexibility whenever possible. That order was the following: begin at a lower starting point in total CPU instructions when we begin removing system components. 1. Removing logging. Locking and logging. Shore implements standard two-phase 2. Removing locking OR latching. locking, with transactions having standard ACID properties. It 3. Removing latching OR locking. supports hierarchical locking with the lock manager escalating up the hierarchy by default (record, page, store, volume). Each trans- 4. Removing code related to the buffer manager. action keeps a list of the locks it holds, so that the locks can be In addition, we found that the following optimizations could be logged when the transaction enters the prepared state and released performed at any point: at the end of the transaction. Shore also implements write ahead logging (WAL), which requires a close interaction between the • Streamline and hardcode the B-tree key evaluation logic, as is log manager and the buffer manager. Before a page can be presently done in most commercial systems. flushed from the buffer pool, the corresponding log record might • Accelerate directory lookups. have to be flushed. This also requires a close interaction between • Increase page size to avoid frequent allocations (subsumed by the transaction manager and the log manager. All three managers step 4 above). understand log sequence numbers (LSNs), which serve to identify • Remove transactional sessions (begin, commit, various and locate log records in the log, timestamp pages, identify the checks). last update performed by a transaction, and find the last log Our approach to implementing the above-mentioned actions is record written by a transaction. Each page bears the LSN of the described next. In general, to remove a certain component from last update that affected that page. A page cannot be written to the system, we either add a few if-statements to avoid executing disk until the log record with that page’s LSN has been written to code belonging to that component, or, if we find that if-statements stable storage. add a measurable overhead, we rewrite entire methods to avoid Buffer Manager. The buffer manager is the means by which all invoking that component altogether. other modules (except the log manager) read and write pages. A Remove logging. Removing logging consists of three steps. The page is read by issuing a fix method call to the buffer manager. first is to avoid generating I/O requests along with the time asso- For a database that fits in main memory, the page is always found ciated to perform these requests (later, in Figure 7, we label this in the buffer pool (in the non-main memory case, if the requested modification “disk log”). We achieve this by allowing group com- page is not in the buffer pool, the thread gives up the CPU and mit and then increasing the log buffer size so that it is not flushed waits for the process responsible for I/O to place the page in the to disk during our experiments. Then, we comment out all func- buffer pool). The fix method updates the mapping between page tions that are used to prepare and write log records (labeled “main IDs and buffer frames and usage statistics. To ensure consistency log” in Figure 7). The last step was to add if-statements through- there is a latch to control access to the fix method. Reading a out the code to avoid processing Log Sequence Numbers (labeled record (once a record ID has been found through an index “LSN” in Figure 7). lookup) involves Remove locking (interchangeable with removing latching). In 1. locking the record (and page, per hierarchical locking), our experiments we found that we could safely interchange the 2. fixing the page in the buffer pool, and order of removing locking and latching (once logging was already removed). Since latching is also performed inside locking, remov- 3. computing the offset within the page of the record’s tag. ing one also reduces the overhead of the other. To remove locking Reading a record is performed by issuing a pin / unpin method we first changed all Lock Manager methods to return immedi- call. Updates to records are accomplished by copying out part or ately, as if the lock request was successful and all checks for all of the record from the buffer pool to the user’s address space, locks were satisfied. Then, we modified methods related to pin-

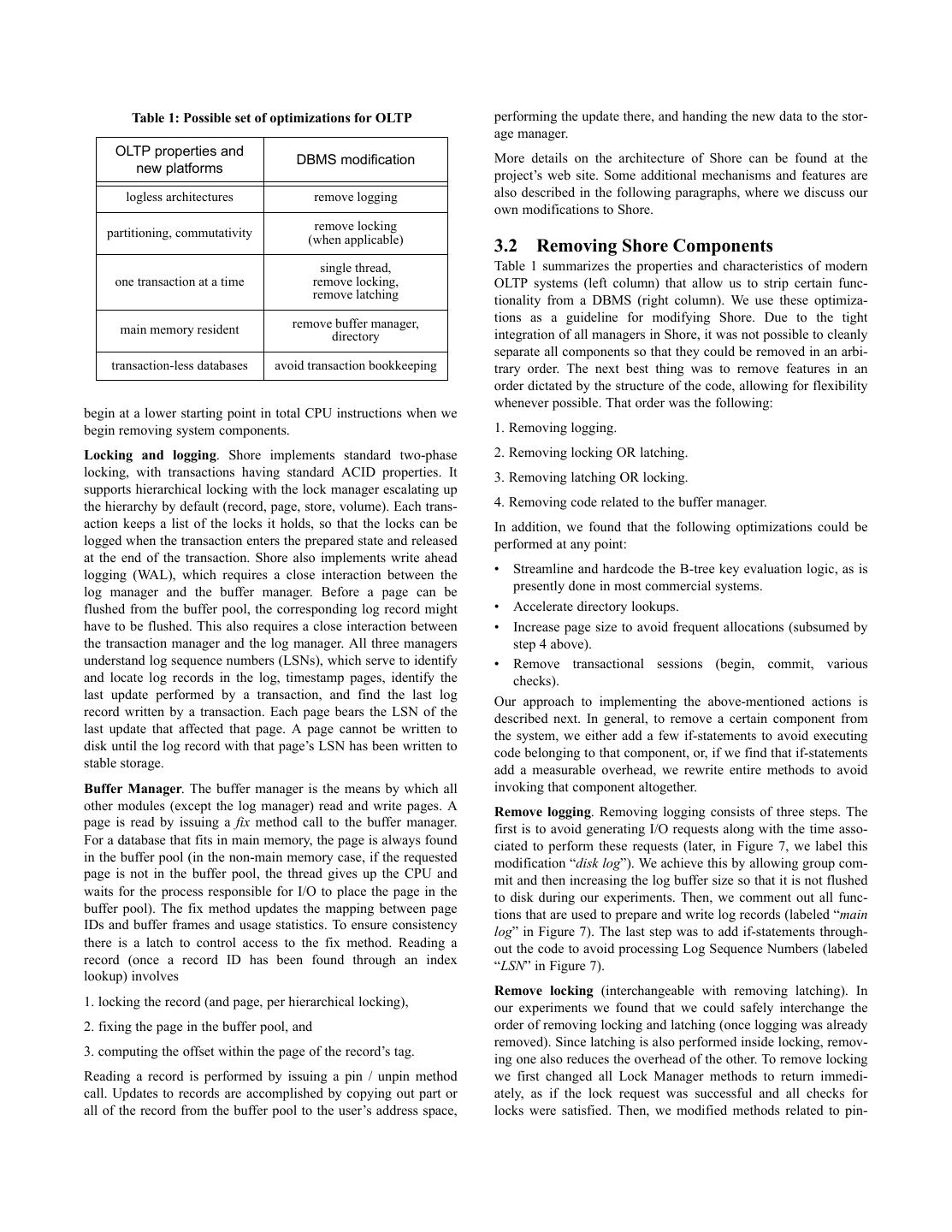

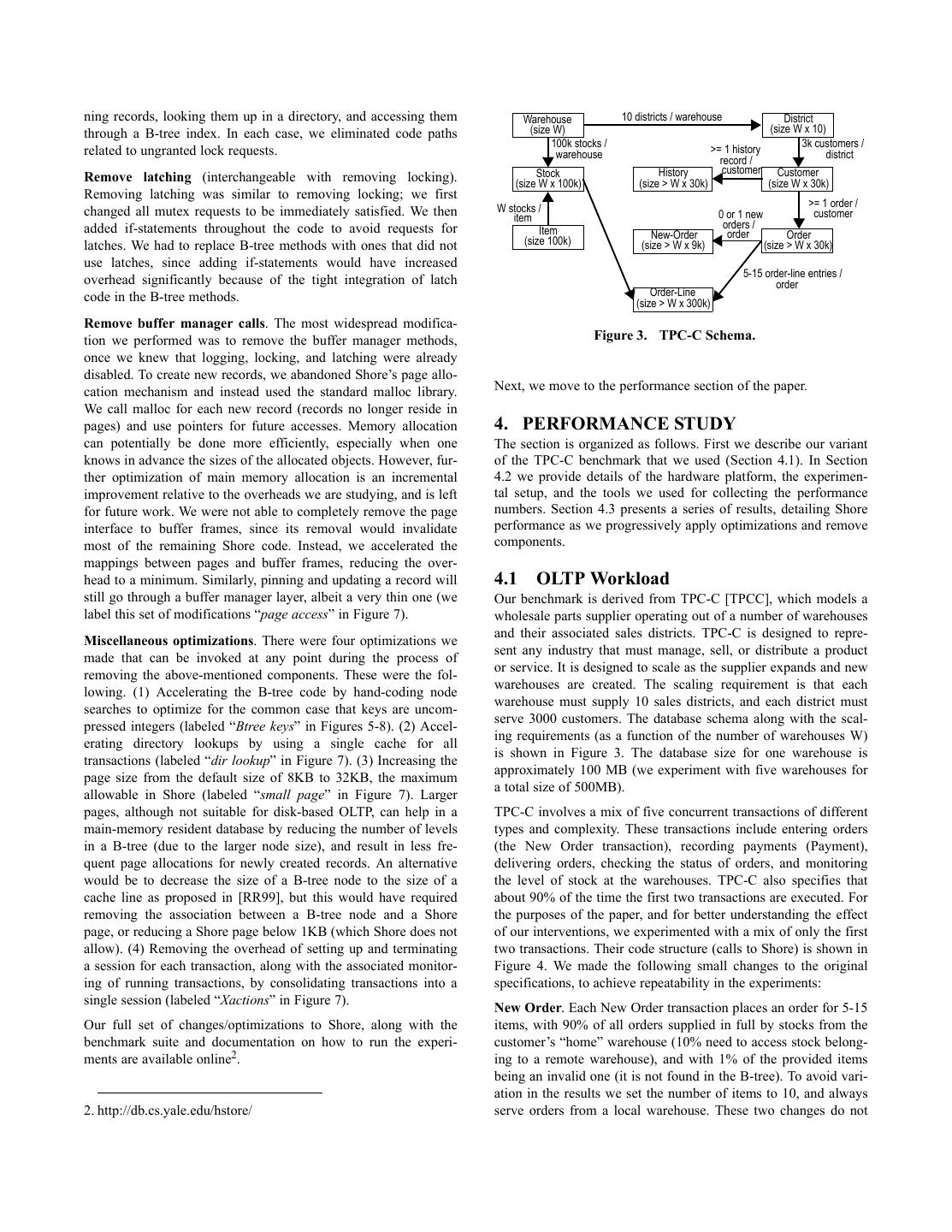

6 .ning records, looking them up in a directory, and accessing them Warehouse 10 districts / warehouse District through a B-tree index. In each case, we eliminated code paths (size W) (size W x 10) 100k stocks / 3k customers / related to ungranted lock requests. warehouse >= 1 history district record / Stock History customer Customer Remove latching (interchangeable with removing locking). (size W x 100k) (size > W x 30k) (size W x 30k) Removing latching was similar to removing locking; we first W stocks / >= 1 order / changed all mutex requests to be immediately satisfied. We then item 0 or 1 new customer added if-statements throughout the code to avoid requests for orders / Item New-Order order Order latches. We had to replace B-tree methods with ones that did not (size 100k) (size > W x 9k) (size > W x 30k) use latches, since adding if-statements would have increased 5-15 order-line entries / overhead significantly because of the tight integration of latch order code in the B-tree methods. Order-Line (size > W x 300k) Remove buffer manager calls. The most widespread modifica- tion we performed was to remove the buffer manager methods, Figure 3. TPC-C Schema. once we knew that logging, locking, and latching were already disabled. To create new records, we abandoned Shore’s page allo- cation mechanism and instead used the standard malloc library. Next, we move to the performance section of the paper. We call malloc for each new record (records no longer reside in pages) and use pointers for future accesses. Memory allocation 4. PERFORMANCE STUDY can potentially be done more efficiently, especially when one The section is organized as follows. First we describe our variant knows in advance the sizes of the allocated objects. However, fur- of the TPC-C benchmark that we used (Section 4.1). In Section ther optimization of main memory allocation is an incremental 4.2 we provide details of the hardware platform, the experimen- improvement relative to the overheads we are studying, and is left tal setup, and the tools we used for collecting the performance for future work. We were not able to completely remove the page numbers. Section 4.3 presents a series of results, detailing Shore interface to buffer frames, since its removal would invalidate performance as we progressively apply optimizations and remove most of the remaining Shore code. Instead, we accelerated the components. mappings between pages and buffer frames, reducing the over- head to a minimum. Similarly, pinning and updating a record will 4.1 OLTP Workload still go through a buffer manager layer, albeit a very thin one (we Our benchmark is derived from TPC-C [TPCC], which models a label this set of modifications “page access” in Figure 7). wholesale parts supplier operating out of a number of warehouses and their associated sales districts. TPC-C is designed to repre- Miscellaneous optimizations. There were four optimizations we sent any industry that must manage, sell, or distribute a product made that can be invoked at any point during the process of or service. It is designed to scale as the supplier expands and new removing the above-mentioned components. These were the fol- warehouses are created. The scaling requirement is that each lowing. (1) Accelerating the B-tree code by hand-coding node warehouse must supply 10 sales districts, and each district must searches to optimize for the common case that keys are uncom- serve 3000 customers. The database schema along with the scal- pressed integers (labeled “Btree keys” in Figures 5-8). (2) Accel- ing requirements (as a function of the number of warehouses W) erating directory lookups by using a single cache for all is shown in Figure 3. The database size for one warehouse is transactions (labeled “dir lookup” in Figure 7). (3) Increasing the approximately 100 MB (we experiment with five warehouses for page size from the default size of 8KB to 32KB, the maximum a total size of 500MB). allowable in Shore (labeled “small page” in Figure 7). Larger pages, although not suitable for disk-based OLTP, can help in a TPC-C involves a mix of five concurrent transactions of different main-memory resident database by reducing the number of levels types and complexity. These transactions include entering orders in a B-tree (due to the larger node size), and result in less fre- (the New Order transaction), recording payments (Payment), quent page allocations for newly created records. An alternative delivering orders, checking the status of orders, and monitoring would be to decrease the size of a B-tree node to the size of a the level of stock at the warehouses. TPC-C also specifies that cache line as proposed in [RR99], but this would have required about 90% of the time the first two transactions are executed. For removing the association between a B-tree node and a Shore the purposes of the paper, and for better understanding the effect page, or reducing a Shore page below 1KB (which Shore does not of our interventions, we experimented with a mix of only the first allow). (4) Removing the overhead of setting up and terminating two transactions. Their code structure (calls to Shore) is shown in a session for each transaction, along with the associated monitor- Figure 4. We made the following small changes to the original ing of running transactions, by consolidating transactions into a specifications, to achieve repeatability in the experiments: single session (labeled “Xactions” in Figure 7). New Order. Each New Order transaction places an order for 5-15 Our full set of changes/optimizations to Shore, along with the items, with 90% of all orders supplied in full by stocks from the benchmark suite and documentation on how to run the experi- customer’s “home” warehouse (10% need to access stock belong- ments are available online2. ing to a remote warehouse), and with 1% of the provided items being an invalid one (it is not found in the B-tree). To avoid vari- ation in the results we set the number of items to 10, and always 2. http://db.cs.yale.edu/hstore/ serve orders from a local warehouse. These two changes do not

7 . New Order Payment and they are deterministic. Equal instruction counts among differ- begin begin ent components can of course result in different wall clock execu- for loop(10) Btree lookup(D), pin tion times (CPU cycles), because of different microarchitectural .....Btree lookup(I), pin Btree lookup (W), pin behavior (cache misses, TLB misses, etc.). In Section 4.3.4 we Btree lookup(D), pin Btree lookup (C), pin compare instruction counts to CPU cycles, illustrating the compo- Btree lookup (W), pin update rec (C) nents where there is high micro-architectural efficiency that can Btree lookup (C), pin update rec (D) update rec (D) update rec (W) be attributed to issues like few L2 cache misses and good instruc- for loop (10) create rec (H) tion-level parallelism. .....Btree lookup(S), pin commit Cycle count, however, is susceptible to various parameters, rang- .....update rec (S) .....create rec (O-L) ing from CPU characteristics, such as cache size/associativity, .....insert Btree (O-L) branch predictors, TLB operation, to run-time variables such as create rec (O) concurrent processes. Therefore it should be treated as indicative insert Btree (O) of relative time breakdown. We do not expand on the issue of create rec (N-O) CPU cache performance in this paper, as our focus is to identify insert Btree (N-O) the set of DBMS components to remove that can produce up to insert Btree 2ndary(N-O) commit two orders of magnitude better performance for certain classes of OLTP workloads. More information on the micro-architectural Figure 4. Calls to Shore’s methods for New Order and behavior of database workloads can be found elsewhere [Ail04]. Payment transactions. Next, we begin the presentation of our results. 4.3 Experimental Results affect the throughput. The code in Figure 4 shows the two-phase In all experiments, our baseline Shore platform is a memory-resi- optimization mentioned in Section 2.5, which allows us to avoid dent database that is never flushed to disk (the only disk I/O that aborting a transaction; we read all items at the beginning, and if might be performed is from the Log Manager). There is only a we find an invalid one we abort without redoing changes in the single thread executing one transaction at a time. Masking I/O (in database. the case of disk-based logging) is not a concern as it only adds to Payment. This is a lightweight transaction; it updates the cus- overall response time and not to the instructions or cycles that the tomer’s balance and warehouse/district sales fields, and generates transaction has actually run. a history record. Again, there is a choice of home and remote We placed 11 different switches in Shore to allow us to remove warehouse which we always set to the home one. Another ran- functionality (or perform optimizations), which, during the pre- domly set input is whether a customer is looked up by name or sentation of the results, we organize into six components. For a ID, and we always use ID. list of the 11 switches (and the corresponding components) and the order we apply them, see Figure 7. These switches were 4.2 Setup and Measurement Methodology described in more detail in Section 3.2 above. The last switch is All experiments are performed on a single-core Pentium 4 to bypass Shore completely and run our own, minimal-overhead 3.2GHz, with 1MB L2 cache, hyperthreading disabled, 1GB kernel, which we call “optimal” in our results. This kernel is basi- RAM, running Linux 2.6. We compiled with gcc version 3.4.4 cally a memory-resident, hand-built B-tree package with no addi- and O2 optimizations. We use the standard linux utility iostat to tional transaction or query processing functionality. monitor disk activity and verify in the main memory-resident experiments there is no generated disk traffic. In all experiments 4.3.1 Effect on Throughput we pre-load the entire database into the main memory. Then we After all of these deletions and optimizations, Shore is left with a run a large number of transactions (40,000). Throughput is mea- code residue, which is all CPU cycles since there is no I/O what- sured directly by dividing wall clock time by the number of com- soever; specifically, an average of about 80 microseconds per pleted transactions. transaction (for a 50-50 mix of New Order and Payment transac- For detailed instruction and cycle counts we instrument the tions), or about 12,700 transactions per second. benchmark application code with calls to the PAPI library In comparison, the useful work in our optimal system was about [MBD+99] http://icl.cs.utk.edu/papi/, which provides access to 22 microseconds per transaction, or about 46,500 transactions per the CPU performance counters. Since we make a call to PAPI second. The main causes of this difference are a deeper call stack after every call to Shore, we have to compensate for the cost of depth in our kernel, and our inability to remove some of the trans- PAPI calls when reporting the final numbers. These had an action set up and buffer pool calls without breaking Shore. As a instruction count of 535-537 and were taking between 1350 and point of reference, “out of the box” Shore, with logging enabled 1500 cycles in our machine. We measure each call to Shore for but with the database cached in main memory, provides about 640 all 40,000 transactions and report the average numbers. transactions per second (1.6 milliseconds per transaction), Most of the graphs reported in the paper are based on CPU whereas Shore running in main memory, but without log flushing instruction counts (as measured through the CPU performance provides about 1,700 transactions per second, or about 588 micro- counters) and not wall clock time. The reason is that instruction seconds per transaction. Hence, our modifications yield a factor counts are representative of the total run-time code path length, of 20 improvement in overall throughput.

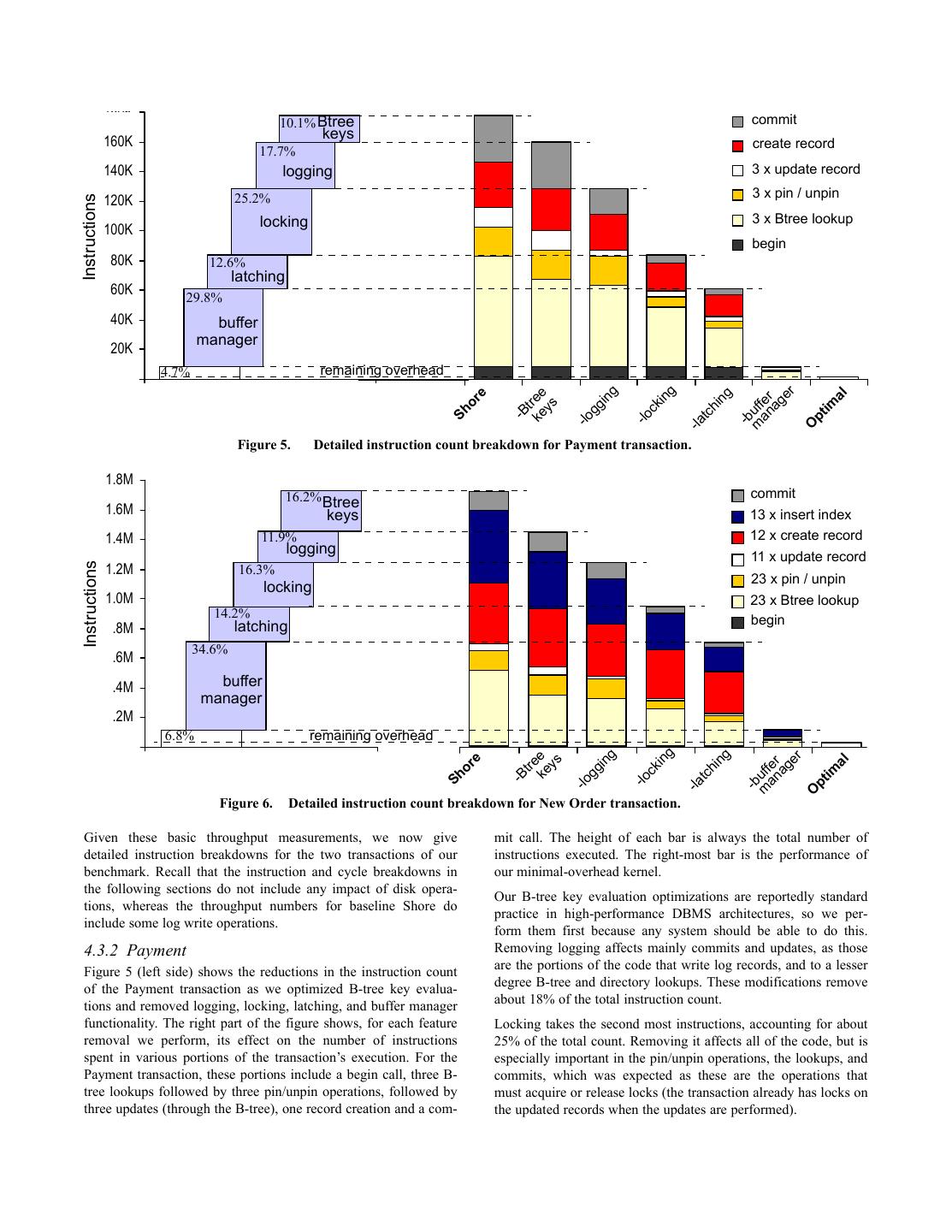

8 . 180K 10.1% Btree commit 160K keys create record 17.7% 140K logging 3 x update record 25.2% 3 x pin / unpin 120K Instructions locking 3 x Btree lookup 100K begin 80K 12.6% latching 60K 29.8% 40K buffer manager 20K 4.7% remaining overhead K g r g ke ree e ng al e in a n er in or ag im ys ck hi gg m uff t Sh -B tc pt -lo -lo -b -la O Figure 5. Detailed instruction count breakdown for Payment transaction. 1.8M 16.2% Btree commit 1.6M keys 13 x insert index 1.4M 11.9% 12 x create record logging 11 x update record 1.2M Instructions 16.3% 23 x pin / unpin locking 1.0M 23 x Btree lookup 14.2% begin .8M latching 34.6% .6M .4M buffer manager .2M 6.8% remaining overhead .0M g g g er ke e e in ys al in n an er tre or ag hi ck im gg m uff -B tc Sh -lo -lo pt -b -la Figure 6. Detailed instruction count breakdown for New Order transaction. O Given these basic throughput measurements, we now give mit call. The height of each bar is always the total number of detailed instruction breakdowns for the two transactions of our instructions executed. The right-most bar is the performance of benchmark. Recall that the instruction and cycle breakdowns in our minimal-overhead kernel. the following sections do not include any impact of disk opera- Our B-tree key evaluation optimizations are reportedly standard tions, whereas the throughput numbers for baseline Shore do practice in high-performance DBMS architectures, so we per- include some log write operations. form them first because any system should be able to do this. 4.3.2 Payment Removing logging affects mainly commits and updates, as those Figure 5 (left side) shows the reductions in the instruction count are the portions of the code that write log records, and to a lesser of the Payment transaction as we optimized B-tree key evalua- degree B-tree and directory lookups. These modifications remove tions and removed logging, locking, latching, and buffer manager about 18% of the total instruction count. functionality. The right part of the figure shows, for each feature Locking takes the second most instructions, accounting for about removal we perform, its effect on the number of instructions 25% of the total count. Removing it affects all of the code, but is spent in various portions of the transaction’s execution. For the especially important in the pin/unpin operations, the lookups, and Payment transaction, these portions include a begin call, three B- commits, which was expected as these are the operations that tree lookups followed by three pin/unpin operations, followed by must acquire or release locks (the transaction already has locks on three updates (through the B-tree), one record creation and a com- the updated records when the updates are performed).

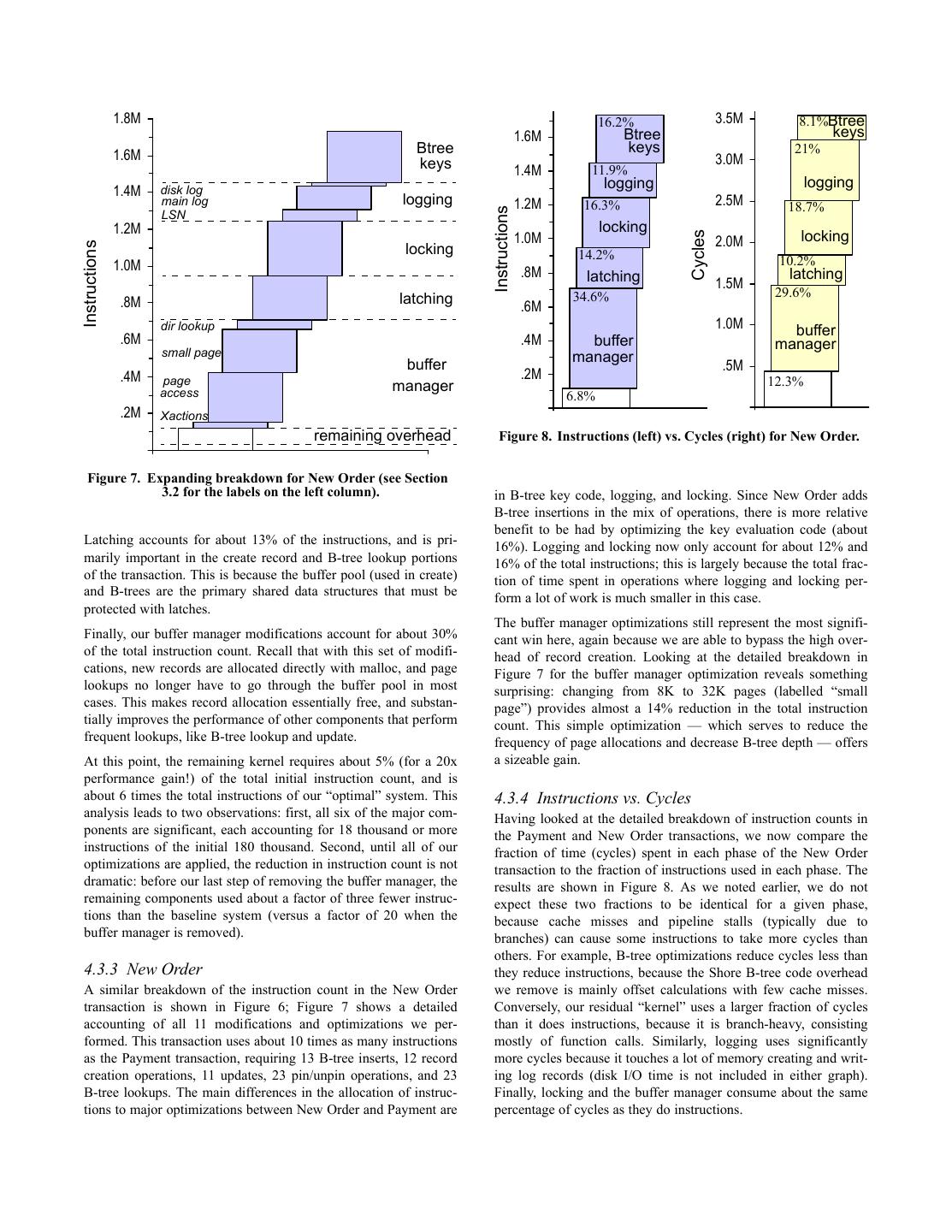

9 . 1.8M 16.2% 3.5M 8.1%Btree 1.6M Btree keys 1.6M Btree keys 21% keys 3.0M 1.4M 11.9% logging logging 1.4M disk log main log logging 1.2M 16.3% 2.5M 18.7% Instructions LSN 1.2M locking 1.0M locking Cycles locking 2.0M Instructions 14.2% 10.2% 1.0M .8M latching latching 1.5M latching 34.6% 29.6% .8M .6M dir lookup 1.0M buffer .6M .4M buffer manager small page manager buffer .5M .4M page .2M 12.3% access manager 6.8% .2M .0M .0M Xactions remaining overhead Figure 8. Instructions (left) vs. Cycles (right) for New Order. .0M Figure 7. Expanding breakdown for New Order (see Section 3.2 for the labels on the left column). in B-tree key code, logging, and locking. Since New Order adds B-tree insertions in the mix of operations, there is more relative benefit to be had by optimizing the key evaluation code (about Latching accounts for about 13% of the instructions, and is pri- 16%). Logging and locking now only account for about 12% and marily important in the create record and B-tree lookup portions 16% of the total instructions; this is largely because the total frac- of the transaction. This is because the buffer pool (used in create) tion of time spent in operations where logging and locking per- and B-trees are the primary shared data structures that must be form a lot of work is much smaller in this case. protected with latches. The buffer manager optimizations still represent the most signifi- Finally, our buffer manager modifications account for about 30% cant win here, again because we are able to bypass the high over- of the total instruction count. Recall that with this set of modifi- head of record creation. Looking at the detailed breakdown in cations, new records are allocated directly with malloc, and page Figure 7 for the buffer manager optimization reveals something lookups no longer have to go through the buffer pool in most surprising: changing from 8K to 32K pages (labelled “small cases. This makes record allocation essentially free, and substan- page”) provides almost a 14% reduction in the total instruction tially improves the performance of other components that perform count. This simple optimization — which serves to reduce the frequent lookups, like B-tree lookup and update. frequency of page allocations and decrease B-tree depth — offers At this point, the remaining kernel requires about 5% (for a 20x a sizeable gain. performance gain!) of the total initial instruction count, and is about 6 times the total instructions of our “optimal” system. This 4.3.4 Instructions vs. Cycles analysis leads to two observations: first, all six of the major com- Having looked at the detailed breakdown of instruction counts in ponents are significant, each accounting for 18 thousand or more the Payment and New Order transactions, we now compare the instructions of the initial 180 thousand. Second, until all of our fraction of time (cycles) spent in each phase of the New Order optimizations are applied, the reduction in instruction count is not transaction to the fraction of instructions used in each phase. The dramatic: before our last step of removing the buffer manager, the results are shown in Figure 8. As we noted earlier, we do not remaining components used about a factor of three fewer instruc- expect these two fractions to be identical for a given phase, tions than the baseline system (versus a factor of 20 when the because cache misses and pipeline stalls (typically due to buffer manager is removed). branches) can cause some instructions to take more cycles than others. For example, B-tree optimizations reduce cycles less than 4.3.3 New Order they reduce instructions, because the Shore B-tree code overhead A similar breakdown of the instruction count in the New Order we remove is mainly offset calculations with few cache misses. transaction is shown in Figure 6; Figure 7 shows a detailed Conversely, our residual “kernel” uses a larger fraction of cycles accounting of all 11 modifications and optimizations we per- than it does instructions, because it is branch-heavy, consisting formed. This transaction uses about 10 times as many instructions mostly of function calls. Similarly, logging uses significantly as the Payment transaction, requiring 13 B-tree inserts, 12 record more cycles because it touches a lot of memory creating and writ- creation operations, 11 updates, 23 pin/unpin operations, and 23 ing log records (disk I/O time is not included in either graph). B-tree lookups. The main differences in the allocation of instruc- Finally, locking and the buffer manager consume about the same tions to major optimizations between New Order and Payment are percentage of cycles as they do instructions.

10 .5. IMPLICATIONS FOR FUTURE OLTP grams on multicore machines mature and find their way into products, it will be very interesting to revisit new implementa- ENGINES tions for latching and reassess the overhead of multithreading in Given the performance results in the previous section, we revisit OLTP. our discussion of future OLTP designs from Section 2. Before going into the detailed implications of our results for the design A second option is to use virtualization, either at the operating of various database subsystems, we make two high level observa- system or DBMS level, to make it appear that each core is a sin- tions from our numbers: gle-threaded machine. It is unclear what the performance implica- tions of that approach would be, warranting a careful study of • First, the benefit of stripping out any one of the components such virtualization. A third option, complementary to the other of the system has a relatively small benefit on overall perfor- two, is to attempt to exploit intra-query parallelism, which may mance. For example, our main memory optimizations be feasible even if the system only runs one transaction at a time. improved the performance of Shore by about 30%, which is However, the amount of intra-query parallelism available in a significant but unlikely to motivate the major database ven- typical OLTP transaction is likely to be limited. dors to re-engineer their systems. Similar gains would be obtained by eliminating just latching or switching to a single- 5.3 Replication Management threaded, one-transaction-at-a-time approach. The traditional database wisdom is to support replication through • The most significant gains are to be had when multiple opti- a log-shipping based active-passive scheme; namely, every object mizations are applied. A fully stripped down system provides has an “active” primary copy, to which all updates are first a factor of twenty or more performance gain over out-of-the- directed. The log of changes is then spooled over the network to box Shore, which is truly significant. Note that such a system one or more “passive” backup sites. Recovery logic rolls the can still provide transactional semantics, if only one transac- remote database forward from the log. This scheme has several tion is run at a time, all transactions are two phase, and recov- disadvantages. First, unless a form of two-phase commit is used, ery is implemented by copying state from other nodes in the the remote copies are not transactionally consistent with the pri- network. Such a system is very, very different from what any mary. Hence, reads cannot be directed to replicas if transaction- of the vendors currently offers, however. consistent reads are required. If reads are directed to replicas, nothing can be said about the accuracy of the answers. A second 5.1 Concurrency Control disadvantage is that failover is not instantaneous. Hence, the stall during failures is longer than it needs to be. Third, it requires the Our experiments showed a significant contribution (about 19% of availability of a log; our experiments show that maintaining a log cycles) of dynamic locking to total overhead. This suggests that takes about 20% of total cycles. Hence, we believe it is interest- there is a large gain to be had by identifying scenarios, such as ing to consider alternatives to active-passive replication, such as application commutativity, or transaction-at-a-time processing, an active-active approach. that allow concurrency control to be turned off. However, there are many DBMS applications which are not sufficiently well- The main reason that active-passive replication with log shipping behaved or where running only one transaction at a time per site has been used in the past is that the cost of rolling the log forward will not work. In such cases, there is an interesting question as to has been assumed to be far lower than the cost of performing the what concurrency control protocol is best. Twenty years ago, var- transaction logic on the replica. However, in a main memory ious researchers [KR81, ACL87] performed exhaustive simula- DBMS, the cost of a transaction is typically less than 1 msec, tions that clearly showed the superiority of dynamic locking requiring so few cycles that it is likely not much slower than relative to other concurrency control techniques. However, this playing back a log. In this case, an alternate active-active archi- work assumed a disk-based load with disk stalls, which obviously tecture appears to make sense. In this case, all replicas are impacts the results significantly. It would be highly desirable to “active” and the transaction is performed synchronously on all redo these sorts of simulation studies with a main memory work- replicas. The advantage of this approach is nearly instantaneous load. We strongly suspect that some sort of optimistic concur- failover and there is no requirement that updates be directed to a rency control would be the prevailing option. primary copy first. Of course, in such a scenario, two-phase com- mit will introduce substantial additional latency, suggesting that 5.2 Multi-core Support techniques to avoid it are needed — perhaps by performing trans- actions in timestamp order. Given the increasing prevalence of many-core computers, an interesting question is how future OLTP engines should deal with multiple cores. One option is to run multiple transactions concur- 5.4 Weak Consistency rently on separate cores within a single site (as it is done today); Most large web-oriented OLTP shops insist on replicas, usually of course, such parallelism requires latching and implies a num- over a WAN, to achieve high availability and disaster recovery. ber of resource allocation issues. Our experiments show that However, seemingly nobody is willing to pay for transactional although the performance overhead of latching is not particularly consistency over a WAN. As noted in Section 2, the common high (10% of cycles in the dominant transaction, New Order), it refrain in web applications is “eventual consistency” [Bre00, still remains an obstacle in achieving significant performance DHJ+07]. Typically, proponents of such approach advocate improvements in OLTP. As technologies (such as transactional resolving inconsistencies through non-technical means; for exam- memory [HM93]) for efficiently running highly concurrent pro- ple, it is cheaper to give a credit to a customer who complains

11 .than to ensure 100% consistency. In other words, the replicas 7. CONCLUSIONS eventually become consistent, presumably if the system is qui- We performed a performance study of Shore motivated by our esced. desire to understand where time is spent in modern database sys- It should be clear that eventual consistency is impossible without tems, and to help understand what the potential performance of transaction consistency under a general workload. For example, several recently proposed alternative database architectures might suppose transaction 1 commits at site 1 and aborts or is lost at site be. By stripping out components of Shore, we were able to pro- 2. Transaction 2 reads the result of transaction 1 and writes into duce a system that could run our modified TPC-C benchmark the database, causing the inconsistency to propagate and pollute about 20 times faster than the original system (albeit with sub- the system. That said, clearly, there must be workloads where stantially reduced functionality!). We found that buffer manage- eventual consistency is achievable, and it would be an interesting ment and locking operations are the most significant contributors exercise to look for them, since, as noted above, our results sug- to system overhead, but that logging and latching operations are gest that removing transactional support — locking and logging also significant. Based on these results, we make several interest- — from a main memory system could yield a very high perfor- ing observations. First, unless one strips out all of these compo- mance database. nents, the performance of a main memory-optimized database (or a database without transactions, or one without logging) is unlikely to be much better than a conventional database where 5.5 Cache-conscious B-trees most of the data fit into RAM. Second, when one does produce a In our study we did not convert Shore B-trees to a “cache-con- fully stripped down system — e.g., that is single threaded, imple- scious” format [RR99, RR00]. Such an alteration, at least on a ments recovery via copying state from other nodes in the net- system without all of the other optimizations we present, would work, fits in memory, and uses reduced functionality transactions have only a modest impact. Cache-conscious research on B-trees — the performance is orders of magnitude better than an unmodi- targets cache misses that result from accessing key values stored fied system. This suggests that recent proposals for stripped down in the B-tree nodes. Our optimizations removed between 80% to systems [WSA97, SMA+07] may be on to something. 88% of the time spent in B-tree operations, without changing the key access pattern. Switching from a stripped-down Shore to our minimal-overhead kernel — which still accesses the same data — 8. ACKNOWLEDGMENTS removed three quarters of the remaining time. In other words, it We thank the SIGMOD reviewers for their helpful comments. appears to be more important to optimize other components, such This work was partially supported by the National Science Foun- as concurrency control and recovery, than to optimize data struc- dation under Grants 0704424 and 0325525. tures. However, once we strip a system down to a very basic ker- nel, cache misses in the B-tree code may well be the new 9. REPEATABILITY ASSESSMENT bottleneck. In fact, it may be the case that other indexing struc- All the results in this paper were verified by the SIGMOD repeat- tures, such as hash tables, perform better in this new environ- ability committee. Code and/or data used in the paper are avail- ment. Again, these conjectures should be carefully tested. able at http://www.sigmod.org/codearchive/sigmod2008/ 6. RELATED WORK 10. REFERENCES There have been several studies of performance bottlenecks in [ACL87] Agrawal, R., Carey, M. J., and Livny, M. “Concurrency modern database systems. [BMK99] and [ADH+99] show the control performance modeling: alternatives and implications.” increasing contribution of main memory data stalls to database ACM Trans. Database Syst. 12(4), Dec. 1987. performance. [MSA+04] breaks down bottlenecks due to conten- tion for various resources (such as locks, I/O synchronization, or [AMS+07] Aguilera, M., Merchant, A., Shah, M., Veitch, A. C., CPU) from the client’s point of view (which includes perceived and Karamanolis, C. T. “Sinfonia: a new paradigm for building latency due to I/O stalls and preemptive scheduling of other con- scalable distributed systems.” In Proc. SOSP, 2007. current queries). Unlike the work presented here, these papers [AHU74] Aho, A. V., Hopcroft, J. E., and Ullman, J. D. “The analyze complete databases and do not analyze performance per Design and Analysis of Computer Algorithms.” Addison-Wesley database component. Benchmarking studies such as TPC-B Publishing Company, 1974. [Ano85] in the OLTP space and the Wisconsin Benchmark [BDT83] in general SQL processing, also characterize the perfor- [ADH+99] Ailamaki, A., DeWitt, D. J., Hill, M. .D., and Wood, mance of complete databases and not that of individual OLTP D. A. “DBMSs on a Modern Processor: Where Does Time Go?” components. In Proc. VLDB, 1999, 266-277. Additionally, there has been a large amount of work on main [Ail04] Ailamaki, A. “Database Architecture for New Hardware.” memory databases. Work on main memory indexing structures Tutorial. In Proc. VLDB, 2004. has included AVL trees [AHU74] and T-trees [LC86]. Other tech- [Ano85] Anon et al. “A Measure of Transaction Processing niques for main memory applicability appear in [BHT87]. Com- Power.” In Datamation, February 1985. plete systems include TimesTen [Tim07], DataBlitz [BBK+98], and MARS [Eic87]. A survey of this area appears in [GS92]. [BBK+98] Baulier, J. D., Bohannon, P., Khivesara, A., et al. “The However, none of this work attempts to isolate the components of DataBlitz Main-Memory Storage Manager: Architecture, Perfor- overhead, which is the major contribution of this paper. mance, and Experience.” In The VLDB Journal, 1998.

12 .[BDT83] Bitton, D., DeWitt, D. J., and Turbyfill, C. “Benchmark- [LM06] Lau, E. and Madden, S. “An Integrated Approach to ing Database Systems, a Systematic Approach.” In Proc. VLDB, Recovery and High Availability in an Updatable, Distributed Data 1983. Warehouse.” In Proc. VLDB, 2006. [BHT87] Bitton, D., Hanrahan, M., and Turbyfill, C. “Perfor- [LC86] Lehman, T. J. and Carey, M. J. “A study of index struc- mance of Complex Queries in Main Memory Database Sys- tures for main memory database management systems.” In Proc. tems.” In Proc. ICDE, 1987. VLDB, 1986. [BMK99] Boncz, P. A., Manegold, S., and Kersten, M. L. “Data- [LGG+91] Liskov, B., Ghemawat, S., Gruber, R., Johnson, P., base Architecture Optimized for the New Bottleneck: Memory Shrira, L., and Williams, M. “Replication in the harp file system.” Access.” In Proc. VLDB, 1999. In Proc. SOSP, pages 226-238, 1991. [Bre00] Brewer, E. A. “Towards robust distributed systems [MSA+04] McWherter, D. T., Schroeder, B., Ailamaki, A., and (abstract).” In Proc. PODC, 2000. Harchol-Balter, M. “Priority Mechanisms for OLTP and Transac- tional Web Applications.” In Proc.ICDE, 2004. [BDR97] Bugnion, E., Devine, S., and Rosenblum, M. “Disco: running commodity operating systems on scalable multiproces- [MHL+92] Mohan, C., Haderle, D., Lindsay, B., Pirahesh, H., sors.” In Proc. SOSP, 1997. and Schwarz, P. “ARIES: a transaction recovery method support- ing fine-granularity locking and partial rollbacks using write- [CDF+94] Carey, M. J., DeWitt, D. J., Franklin, M. J. et al. ahead logging.” ACM Trans. Database Syst. 17(1):94-162, 1992. “Shoring up persistent applications.” In Proc. SIGMOD, 1994. [Moh89] Mohan, C. “ARIES/KVL: A Key-Value Locking [CDG+06] Chang, F., Dean, J., Ghemawat, S., Hsieh, W. C., Method for Concurrency Control of Multiaction Transactions Wallach, D. A., Burrows, M., Chandra, T., Fikes, A., and Gruber, Operating on B-Tree Indexes.” 1989, Research Report RJ 7008, R. E. “Bigtable: A Distributed Storage System for Structured Data Base Technology Institute, IBM Almaden Research Center. Data.” In Proc. OSDI, 2006. [ML89] Mohan, C. and Levine, F. “ARIES/IM: An Efficient and [DG04] Dean, J. and Ghemawat, S. “MapReduce: Simplified High Concurrency Index Management Method Using Write- Data Processing on Large Clusters.” In Proc. OSDI, 2004. Ahead Logging.” 1989, Research Report RJ 6846, Data Base [DHJ+07] DeCandia, G., Hastorun, D., Jampani, M., Kakulapati, Technology Institute, IBM Almaden Research Center. G., Lakshman, A., Pilchin, A., Sivasubramanian, S., Vosshall, P., [MBD+99] Mucci, P. J., Browne, S., Deane, C., and Ho, G. and Vogels, W. “Dynamo: amazon’s highly available key-value “PAPI: A Portable Interface to Hardware Performance Counters.” store.” In Proc. SOSP, 2007. In Proc. Department of Defense HPCMP Users Group Confer- [DGS+90] DeWitt, D. J., Ghandeharizadeh, S., Schneider, D. A., ence, Monterey, CA, June 1999. Bricker, A., Hsiao, H., and Rasmussen, R. “The Gamma Database [RR99] Rao, J. and Ross, K. A. “Cache Conscious Indexing for Machine Project.” IEEE Transactions on Knowledge and Data Decision-Support in Main Memory.” In Proc. VLDB, 1999. Engineering 2(1):44-62, March 1990. [RR00] Rao, J. and Ross, K. A. “Making B+- trees cache con- [Eic87] Eich, M. H. “MARS: The Design of A Main Memory scious in main memory.” In SIGMOD Record, 29(2):475-486, Database Machine.” In Proc. of the 1987 International workshop June 2000. on Database Machines, October, 1987. [SMK+01] Stoica, I., Morris, R., Karger, D. R., Kaashoek, M. F., [GS92] Garcia-Molina, H. and Salem, K. “Main Memory Data- and Balakrishnan, H. “Chord: A Scalable Peer-to-peer Lookup base Systems: An Overview.” IEEE Trans. Knowl. Data Eng. Protocol for Internet Applications.” In Proc. SIGCOMM, 2001. 4(6): 509-516 (1992). [SAB+05] Stonebraker, M., Abadi, D. J., Batkin, A., Chen, X., [GR93] Gray, J. and Reuter, A. “Transaction Processing: Con- Cherniack, M., Ferreira, M., Lau, E., Lin, A., Madden, S., O’Neil, cepts and Techniques.” Morgan Kaufmann Publishers, Inc., 1993. E., O’Neil, P., Rasin, A., Tran, N., and Zdonik, S. “C-Store: A [GBH+00] Gribble, S. D., Brewer, E. A., Hellerstein, J. M., and Column-oriented DBMS.” In Proc. VLDB, 2005. Culler, D .E. “Scalable, Distributed Data Structures for Internet [SMA+07] Stonebraker, M., Madden, S., Abadi, D. J., Harizopou- Service Construction.” In Proc. OSDI, 2000. los, S., Hachem, N., and Helland, P. “The End of an Architectural [Hel07] Helland, P. “Life beyond Distributed Transactions: an Era (It's Time for a Complete Rewrite).” In Proc. VLDB, 2007. Apostate’s Opinion.” In Proc. CIDR, 2007. [Tim07] Oracle TimesTen. http://www.oracle.com/timesten/ [HM93] Herlihy, M. P. and Moss, J. E. B. “Transactional Mem- index.html. 2007. ory: architectural support for lock-free data structures.” In Proc. [TPCC] The Transaction Processing Council. TPC-C Benchmark ISCA, 1993. (Rev. 5.8.0), 2006. http://www.tpc.org/tpcc/spec/tpcc_current.pdf [KR81] Kung, H. T. and Robinson, J. T. “On optimistic methods [WSA97] Whitney, A., Shasha, D., and Apter, S. “High Volume for concurrency control.” ACM Trans. Database Syst. 6(2):213- Transaction Processing Without Concurrency Control, Two Phase 226, June 1981. Commit, SQL or C.” In Proc. HPTPS, 1997.