- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/u227/Rethinking_SIMD_Vectorization_for_In_Memory_Databases?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

Rethinking SIMD Vectorization for In-Memory Databases

展开查看详情

1 . Rethinking SIMD Vectorization for In-Memory Databases Orestis Polychroniou∗ Arun Raghavan Kenneth A. Ross† Columbia University Oracle Labs Columbia University orestis@cs.columbia.edu arun.raghavan@oracle.com kar@cs.columbia.edu ABSTRACT The advent of large main-memory capacity is one of the Analytical databases are continuously adapting to the un- reasons that blink-of-an-eye analytical query execution has derlying hardware in order to saturate all sources of par- become possible. Query optimization used to measure blocks allelism. At the same time, hardware evolves in multiple fetched from disk as the primary unit of query cost. Today, directions to explore different trade-offs. The MIC architec- the entire database can often remain main-memory resident ture, one such example, strays from the mainstream CPU and the need for efficient in-memory algorithms is apparent. design by packing a larger number of simpler cores per chip, The prevalent shift in database design for the new era relying on SIMD instructions to fill the performance gap. are column stores [19, 28, 37]. They allow for higher data Databases have been attempting to utilize the SIMD ca- compression in order to reduce the data footprint, minimize pabilities of CPUs. However, mainstream CPUs have only the number of columns accessed per tuple, and use column recently adopted wider SIMD registers and more advanced oriented execution coupled with late materialization [9] to instructions, since they do not rely primarily on SIMD for eliminate unnecessary accesses to RAM resident columns. efficiency. In this paper, we present novel vectorized designs Hardware provides performance through three sources of and implementations of database operators, based on ad- parallelism: thread parallelism, instruction level parallelism, vanced SIMD operations, such as gathers and scatters. We and data parallelism. Analytical databases have evolved to study selections, hash tables, and partitioning; and com- take advantage of all sources of parallelism. Thread par- bine them to build sorting and joins. Our evaluation on the allelism is achieved, for individual operators, by splitting MIC-based Xeon Phi co-processor as well as the latest main- the input equally among threads [3, 4, 5, 8, 14, 31, 40], stream CPUs shows that our vectorization designs are up to and in the case of queries that combine multiple operators, an order of magnitude faster than the state-of-the-art scalar by using the pipeline breaking points of the query plan to and vector approaches. Also, we highlight the impact of ef- split the materialized data in chunks that are distributed to ficient vectorization on the algorithmic design of in-memory threads dynamically [18, 28]. Instruction level parallelism is database operators, as well as the architectural design and achieved by applying the same operation to a block of tu- power efficiency of hardware, by making simple cores com- ples [6] and by compiling into tight machine code [16, 22]. parably fast to complex cores. This work is applicable to Data parallelism is achieved by implementing each operator CPUs and co-processors with advanced SIMD capabilities, to use SIMD instructions effectively [7, 15, 26, 30, 39, 41]. using either many simple cores or fewer complex cores. The different sources of parallelism were developed as a means to deliver more performance within the same power budget available per chip. Mainstream CPUs have evolved 1. INTRODUCTION on all sources of parallelism, featuring massively superscalar Real time analytics are the steering wheels of big data pipelines, out-of-order execution of tens of instructions, and driven business intelligence. Database customer needs have advanced SIMD capabilities, all replicated on multiple cores extended beyond OLTP with high ACID transaction through- per CPU chip. For example, the latest Intel Haswell ar- put, to interactive OLAP query execution across the entire chitecture issues up to 8 micro-operations per cycle with database. As a consequence, vendors offer fast OLAP solu- 192 reorder buffer entries for out-of-order execution, 256-bit tions, either by rebuilding a new DBMS for OLAP [28, 37], SIMD instructions, two levels of private caches per core and or by improving within the existing OLTP-focused DBMS. a large shared cache, and scales up to 18 cores per chip. ∗ Work partly done when first author was at the Oracle Labs. Concurrently with the evolution of mainstream CPUs, a † Supported by NSF grant IIS-1422488 and an Oracle gift. new approach on processor design has surfaced. The design, named the many-integrated-cores (MIC) architecture, uses Permission to make digital or hard copies of all or part of this work for personal or cores with a smaller area (transistors) and power footprint classroom use is granted without fee provided that copies are not made or distributed by removing the massively superscalar pipeline, out-of-order for profit or commercial advantage and that copies bear this notice and the full cita- execution, and the large L3 cache. Each core is based on a tion on the first page. Copyrights for components of this work owned by others than Pentium 1 processor with a simple in-order pipeline, but ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or re- is augmented with large SIMD registers, advanced SIMD publish, to post on servers or to redistribute to lists, requires prior specific permission instructions, and simultaneous multithreading to hide load and/or a fee. Request permissions from permissions@acm.org. SIGMOD’15, May 31–June 4, 2015, Melbourne, Victoria, Australia. and instruction latency. Since each core has a smaller area Copyright 2015 ACM 978-1-4503-2758-9/15/05 ...$15.00. and power footprint, more cores can be packed in the chip. http://dx.doi.org/10.1145/2723372.2747645. 1493

2 . The MIC design was originally intended as a GPU [33], available as a co-processor, and will support more advanced but now targets high performance computing applications. SIMD instructions (AVX 3), also supported by the next gen- Using a high FLOPS machine to execute compute-intensive eration of mainstream CPUs.2 Our work does not focus on algorithms with superlinear complexity is self-evident. Ex- evaluating Xeon Phi as a co-processing accelerator, such as ecuting analytical queries in memory, however, consists of GPUs, that would also be bound by the PCI-e bandwidth, data-intensive linear algorithms that mostly “move” rather but as an alternative CPU design that is suitable and more than “process” data. Previous work to add SIMD in databases power efficient for executing analytical database workloads. has optimized sequential access operators such as index [41] We summarize our contributions: or linear scans [39], built multi-way trees with nodes that • We introduce design principles for efficient vectoriza- match the SIMD register layout [15, 26], and optimized spe- tion of in-memory database operators and define fun- cific operators, such as sorting [7, 11, 26], by using ad-hoc damental vector operations that are frequently reused. vectorization techniques, useful only for a specific problem. In this paper, we present good design principles for SIMD • We design and implement vectorized selection scans, vectorization of main-memory database operators, without hash tables, and partitioning, that are combined to modifying the logic or the data layout of the baseline scalar design and build sorting and multiple join variants. algorithm. The baseline algorithm is defined here as the most straightforward scalar implementation. Formally, as- • We compare our implementations against state-of-the- sume an algorithm that solves a problem with optimal com- art scalar and vectorized techniques. We achieve up plexity, its simplest scalar implementation, and a vectorized to an order of magnitude speedups by evaluating on implementation. We say that the algorithm can be fully vec- Xeon Phi as well as on the latest mainstream CPUs. torized, if the vector implementation executes O(f (n)/W ) • We show the impact of vectorization on the algorith- vector instructions instead of O(f (n)) scalar instructions mic design of in-memory operators, as well as the ar- where W is the vector length, excluding random memory chitectural design and power efficiency of hardware, by accesses that are by definition not data-parallel. We define making simple cores comparably fast to complex cores. fundamental vector operations that are frequently reused in the vectorizations and are implemented using advanced The rest of the paper is organized as follows. Section 2 SIMD instructions, such as non-contiguous loads (gathers) presents related work. Sections 4, 5, 6, and 7 discuss the and stores (scatters). The fundamental operations that are vectorization of selection scans, hash tables, Bloom filters, not directly supported by specific instructions can be imple- and partitioning. Sections 8 and 9 discuss algorithmic de- mented using simpler instructions at a performance penalty. signs for sorting and hash join. We present our experimental We implement vectorized operators in the context of main- evaluation in Section 10, we discuss how SIMD vectorization memory databases: selection scans, hash tables, and parti- relates to GPUs in Section 11, and conclude in Section 12. tioning, which are combined to build more advanced opera- Implementation details are provided in the Appendix. tors: sorting and joins. These operators cover a large por- tion of the time needed to execute analytical queries in main 2. RELATED WORK memory. For selection scans, we show branchless tuple selec- Previous work that added SIMD instructions in database tion and in-cache buffering. For hash tables, we study both operators is briefly summarized. Zhou et al. used SIMD for building and probing across using multiple hashing schemes. linear scans, index scans, and nested loop joins [41]. Ross For partitioning, we describe histogram generation, includ- proposed probing multiple keys per bucket for cuckoo hash- ing all partitioning function types: radix, hash, and range. ing [30]. Willhalm et al. optimized decompression of bit We also describe data shuffling, including inputs larger than packed data [39]. Inoue et al. proposed data-parallel comb- the cache. All of the above are combined to build radixsort sort and merging [11]. Chhugani et al. optimized bitonic and multiple hash join variants that highlight the impact of merging for mergesort [7]. Kim et al. designed multi-way vectorization on determining the best algorithmic design. trees tailored to the SIMD layout [15]. Polychroniou et We compare our vectorized implementations of in-memory al. discussed trade-offs for updating heavy hitter aggregates database operators against the respective state-of-the-art [25], fast range partitioning via a range function index [26], scalar and vector techniques, by evaluating on the Intel Xeon and Bloom filter probing using gathers [27]. Schlegel et al. Phi co-processor and on the latest mainstream CPUs. Xeon described scalable frequent itemset mining on Xeon Phi [32]. Phi is currently the only available hardware based on the Database operators have been extensively optimized for MIC architecture and is also the only generally available modern processors. Manegold et al. introduced hash joins hardware that supports gathers and scatters, while the lat- with cache-conscious partitioning [19], which Kim et al. ex- est mainstream CPUs (Intel Haswell) support gathers. tended to multi-core CPUs and compared against SIMD We use the sorting and join operator to compare a Xeon sort-merge joins [14]. Cieslewicz et al. and Ye et al. studied Phi 7120P co-processor (61 P54C cores at 1.238 GHz, 300 contention-free aggregation [8, 40]. Blanas et al. and Balke- Watts TDP) against four high-end Sandy Bridge CPUs (4×8 sen et al. evaluated hardware-tuned hash joins [3, 5]. Albu- Sandy Bridge cores at 2.2 GHz, 4 × 130 Watts TDP), and tiu et al. introduced NUMA-aware joins and Balkesen et al. found that they have similar performance, but on a different evaluated join variants on multiple CPUs [1, 4]. Satish et al. power budget, since Xeon Phi spends almost half the energy. and Wassenberg et al. introduced buffered partitioning for The next generation of Xeon Phi will also be available as radixsort [31, 38]. Polychroniou et al. introduced in-place a standalone CPU,1 even if the current generation is only and range partitioning for sorting variants [26]. Jha et al. 1 optimized hash joins on Xeon Phi, but used SIMD partially newsroom.intel.com/community/intel_newsroom/blog/2014/ only to compute hash functions and load hash buckets [12]. 06/23/intel-re-architects-the-fundamental-building- 2 block-for-high-performance-computing software.intel.com/blogs/2013/avx-512-instructions 1494

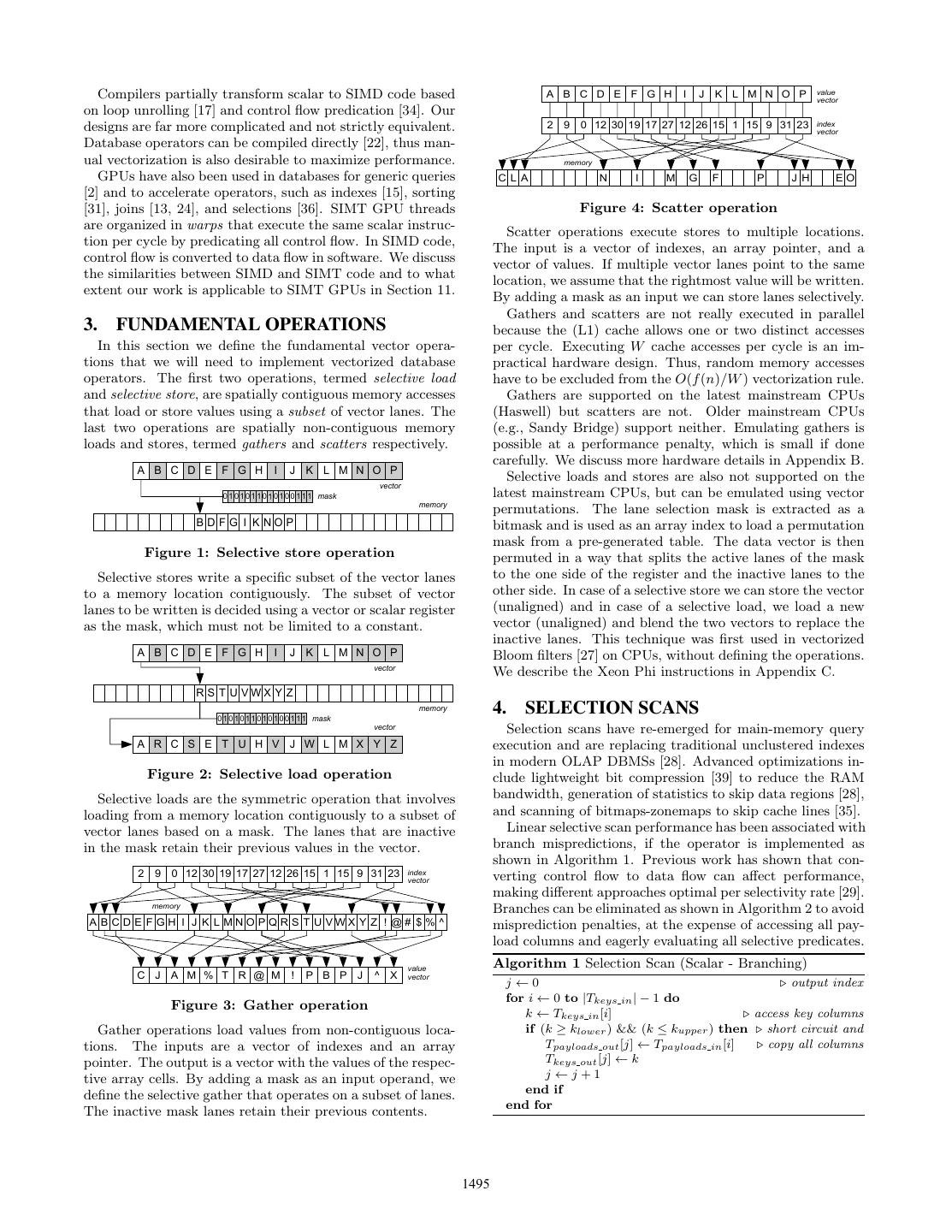

3 . Compilers partially transform scalar to SIMD code based A B C D E F G H I J K L M N O P value vector on loop unrolling [17] and control flow predication [34]. Our designs are far more complicated and not strictly equivalent. 2 9 0 12 30 19 17 27 12 26 15 1 15 9 31 23 index vector Database operators can be compiled directly [22], thus man- ual vectorization is also desirable to maximize performance. memory GPUs have also been used in databases for generic queries C L A N I M G F P J H EO [2] and to accelerate operators, such as indexes [15], sorting [31], joins [13, 24], and selections [36]. SIMT GPU threads Figure 4: Scatter operation are organized in warps that execute the same scalar instruc- Scatter operations execute stores to multiple locations. tion per cycle by predicating all control flow. In SIMD code, The input is a vector of indexes, an array pointer, and a control flow is converted to data flow in software. We discuss vector of values. If multiple vector lanes point to the same the similarities between SIMD and SIMT code and to what location, we assume that the rightmost value will be written. extent our work is applicable to SIMT GPUs in Section 11. By adding a mask as an input we can store lanes selectively. Gathers and scatters are not really executed in parallel 3. FUNDAMENTAL OPERATIONS because the (L1) cache allows one or two distinct accesses In this section we define the fundamental vector opera- per cycle. Executing W cache accesses per cycle is an im- tions that we will need to implement vectorized database practical hardware design. Thus, random memory accesses operators. The first two operations, termed selective load have to be excluded from the O(f (n)/W ) vectorization rule. and selective store, are spatially contiguous memory accesses Gathers are supported on the latest mainstream CPUs that load or store values using a subset of vector lanes. The (Haswell) but scatters are not. Older mainstream CPUs last two operations are spatially non-contiguous memory (e.g., Sandy Bridge) support neither. Emulating gathers is loads and stores, termed gathers and scatters respectively. possible at a performance penalty, which is small if done carefully. We discuss more hardware details in Appendix B. A B C D E F G H I J K L M N O P Selective loads and stores are also not supported on the vector 0 1 0 1 0 1 1 0 0 1 0 0 1 1 1 mask latest mainstream CPUs, but can be emulated using vector memory permutations. The lane selection mask is extracted as a BD FG I KNOP bitmask and is used as an array index to load a permutation mask from a pre-generated table. The data vector is then Figure 1: Selective store operation permuted in a way that splits the active lanes of the mask Selective stores write a specific subset of the vector lanes to the one side of the register and the inactive lanes to the to a memory location contiguously. The subset of vector other side. In case of a selective store we can store the vector lanes to be written is decided using a vector or scalar register (unaligned) and in case of a selective load, we load a new as the mask, which must not be limited to a constant. vector (unaligned) and blend the two vectors to replace the inactive lanes. This technique was first used in vectorized A B C D E F G H I J K L M N O P Bloom filters [27] on CPUs, without defining the operations. vector We describe the Xeon Phi instructions in Appendix C. R S T U VWX Y Z 0 1 0 1 0 1 1 0 1 0 1 0 0 1 1 1 mask memory 4. SELECTION SCANS vector Selection scans have re-emerged for main-memory query A R C S E T U H V J W L M X Y Z execution and are replacing traditional unclustered indexes in modern OLAP DBMSs [28]. Advanced optimizations in- Figure 2: Selective load operation clude lightweight bit compression [39] to reduce the RAM Selective loads are the symmetric operation that involves bandwidth, generation of statistics to skip data regions [28], loading from a memory location contiguously to a subset of and scanning of bitmaps-zonemaps to skip cache lines [35]. vector lanes based on a mask. The lanes that are inactive Linear selective scan performance has been associated with in the mask retain their previous values in the vector. branch mispredictions, if the operator is implemented as shown in Algorithm 1. Previous work has shown that con- 2 9 0 12 30 19 17 27 12 26 15 1 15 9 31 23 index verting control flow to data flow can affect performance, vector making different approaches optimal per selectivity rate [29]. memory Branches can be eliminated as shown in Algorithm 2 to avoid A B C D E F G H I J K L MNO P Q R S T U VWX Y Z ! @# $ % ^ misprediction penalties, at the expense of accessing all pay- load columns and eagerly evaluating all selective predicates. value Algorithm 1 Selection Scan (Scalar - Branching) C J A M % T R @ M ! P B P J ^ X vector j←0 output index for i ← 0 to |Tkeys in | − 1 do Figure 3: Gather operation k ← Tkeys in [i] access key columns Gather operations load values from non-contiguous loca- if (k ≥ klower ) && (k ≤ kupper ) then short circuit and tions. The inputs are a vector of indexes and an array Tpayloads out [j] ← Tpayloads in [i] copy all columns pointer. The output is a vector with the values of the respec- Tkeys out [j] ← k tive array cells. By adding a mask as an input operand, we j ←j+1 define the selective gather that operates on a subset of lanes. end if end for The inactive mask lanes retain their previous contents. 1495

4 .Algorithm 2 Selection Scan (Scalar - Branchless) 5. HASH TABLES j←0 output index Hash tables are used in database systems to execute joins for i ← 0 to |Tkeys in | − 1 do and aggregations since they allow constant time key lookups. k ← Tkeys in [i] copy all columns In hash join, one relation is used to build the hash table and Tpayloads out [j] ← Tpayloads in [i] the other relation probes the hash table to find matches. In Tkeys out [j] ← k group-by aggregation they are used either to map tuples to m ←(k ≥ klower ? 1 : 0) & (k ≤ kupper ? 1 : 0) unique group ids or to insert and update partial aggregates. j ←j+m if-then-else expressions use conditional ... Using SIMD instructions in hash tables has been proposed end for ... flags to update the index without branching as a way to build bucketized hash tables. Rather than com- Vectorized selection scans use selective stores to store the paring against a single key, we place multiple keys per bucket lanes that satisfy the selection predicates. We use SIMD in- and compare them to the probing key using SIMD vector structions to evaluate the predicates resulting in a bitmask of comparisons. We term the approach of comparing a single the qualifying lanes. Partially vectorized selection extracts input (probing) key with multiple hash table keys, horizontal one bit at a time from the bitmask and accesses the corre- vectorization. Some hash table variants such as bucketized sponding tuple. Instead, we use the bitmask to selectively cuckoo hashing [30] can support much higher load factors. store the qualifying tuples to the output vector at once. Loading a single 32-bit word is as fast as loading an entire When the selection has a very low selectivity, it is de- vector, thus, the cost of bucketized probing diminishes to sirable to avoid accessing the payload columns due to the extracting the correct payload, which requires log W steps. performance drop caused by the memory bandwidth. Fur- Horizontal vectorization, if we expect to search fewer than thermore, when the branch is speculatively executed, we is- W buckets on average per probing key, is wasteful. For sue needless loads to payloads. To avoid reducing the band- example, a 50% full hash table with one match per key needs width, we use a small cache resident buffer that stores in- to access ≈ 1.5 buckets on average to find the match using dexes of qualifiers rather than the actual values. When the linear probing. In such a case, comparing one input key buffer is full, we reload the indexes from the buffer, gather against multiple table keys cannot yield high improvement the actual values from the columns, and flush them to the and takes no advantage of the increasing SIMD register size. output. This variant is shown in Algorithm 3. Appendix A In this paper, we propose a generic form of hash table vec- describes the notation used in the algorithmic descriptions. torization termed vertical vectorization that can be applied When we materialize data on RAM without intent to reuse to any hash table variant without altering the hash table them soon, we use streaming stores. Mainstream CPUs pro- layout. The fundamental principle is to process a different vide non-temporal stores that bypass the higher cache levels input key per vector lane. All vector lanes process different and increase the RAM bandwidth for storing data. Xeon keys from the input and access different hash table locations. Phi does not support scalar streaming stores, but provides The hash table variants we discuss are linear probing (Sec- an instruction to overwrite a cache line with data from a tion 5.1), double hashing (Section 5.2), and cuckoo hashing vector without first loading it. This technique requires the (Section 5.3). For the hash function, we use multiplicative vector length to be equal to the cache line and eliminates the hashing, which requires two multiplications, or for 2n buck- need for write-combining buffers used in mainstream CPUs. ets, one multiplication and a shift. Multiplication costs very All operators that write the output to memory sequentially, few cycles in mainstream CPUs and is supported in SIMD. use buffering, which we omit in the algorithmic descriptions. 5.1 Linear Probing Algorithm 3 Selection Scan (Vector) Linear probing is an open addressing scheme that, to ei- i, j, l ← 0 input, output, and buffer indexes ther insert an entry or terminate the search, traverses the r ← {0, 1, 2, 3, ..., W − 1} input indexes in vector for i ← 0 to |Tkeys in | − 1 step W do # of vector lanes table linearly until an empty bucket is found. The hash ta- ble stores keys and payloads but no pointers. The scalar k ← Tkeys in [i] load vectors of key columns code for probing the hash table is shown in Algorithm 4. m ← (k ≥ klower ) & (k ≤ kupper ) predicates to mask if m = false then optional branch Algorithm 4 Linear Probing - Probe (Scalar) B[l] ←m r selectively store indexes j←0 output index l ← l + |m| update buffer index for i ← 0 to |Skeys | − 1 do outer (probing) relation if l > |B| − W then flush buffer k ← Skeys [i] for b ← 0 to |B| − W step W do v ← Spayloads [i] p ← B[b] load input indexes h ← (k · f ) × ↑ |T | “× ↑”: multiply & keep upper half k ← Tkeys in [p] dereference values while Tkeys [h] = kempty do until empty bucket v ← Tpayloads in [p] if k = Tkeys [h] then Tkeys out [b + j] ← k flush to output with ... RSR payloads [j] ← Tpayloads [h] inner payloads Tpayloads out [b + j] ← v ... streaming stores RSS payloads [j] ← v outer payloads end for RSkeys [j] ← k join keys p ← B[|B| − W ] move overflow ... j ←j+1 B[0] ← p ... indexes to start end if j ← j + |B| − W update output index h←h+1 next bucket l ← l − |B| + W update buffer index if h = |T | then reset if last bucket end if h←0 end if end if r ←r+W update index vector end while end for flush last items after the loop end for 1496

5 .Algorithm 5 Linear Probing - Probe (Vector) Algorithm 7 Linear Probing - Build (Vector) i, j ← 0 input & output indexes (scalar register) l ← {1, 2, 3, ..., W } any vector with unique values per lane o←0 linear probing offsets (vector register) i, j ← 0 , m ← true input & output index & bitmask m ← true boolean vector register o←0 linear probing offset while i + W ≤ |Skeys in | do W : # of vector lanes while i + W ≤ |Rkeys | do k ←m Skeys [i] selectively load input tuples k ←m Rkeys [i] selectively load input tuples v ←m Spayloads [i] v ←m Rpayloads [i] i ← i + |m| i ← i + |m| h ← (k · f ) × ↑ |T | multiplicative hashing h ← o + (k · f ) × ↑ |T | multiplicative hashing h←h+o add offsets & fix overflows h ← (h < |T |) ? h : (h − |T |) fix overflows h ← (h < |T |) ? h : (h − |T |) “m ? x : y”: vector blend kT ← Tkeys [h] gather buckets kT ← Tkeys [h] gather buckets m ← kT = kempty find empty buckets vT ← Tpayloads [h] T [h] ←m l detect conflicts m ← kT = k lback ←m Tkeys [h] RSkeys [j] ←m k selectively store matching tuples m ← m & (l = lback ) RSS payloads [j] ←m v Tkeys [h] ←m k scatter to buckets ... RSR payloads [j] ←m vT Tpayloads [h] ←m v ... if not conflicting j ← j + |m| o ← m ? 0 : (o + 1) increment or reset offsets m ← kT = kempty discard finished tuples end while o ← m ? 0 : (o + 1) increment or reset offsets end while The basics of vectorized probe and build of linear probing The vectorized implementation of probing a hash table hash tables are the same. We process different input keys per using a linear probing scheme is shown in Algorithm 5. Our SIMD lane and on top of gathers, we now also use scatters to vectorization principle is to process a different key per SIMD store the keys non-contiguously. We access the input “out-of- lane using gathers to access the hash table. Assuming W order” to reuse lanes as soon as keys are inserted. To insert vector lanes, we process W different input keys on each loop. tuples, we first gather to check if the buckets are empty Instead of using a nested loop to find all matches for the W and then scatter the tuples only if the bucket is empty. The keys before loading the next W keys, we reuse vector lanes tuples that accessed a non-empty bucket increment an offset as soon as we know there are no more matches in the table, vector in order to search the next bucket in the next loop. by selectively loading new keys from the input to replace In order to ensure that multiple tuples will not try to fill finished keys. Thus, each key executes the same number of the same empty bucket, we add a conflict detection step loops as in scalar code. Every time a match is found, we use before scattering the tuples. Two lanes are conflicting if selective stores to write to the output the vector lanes that they point to the same location. However, we do not need have matches. In order to support each key having executed to identify both lanes but rather the leftmost one that would an arbitrary number of loops already, we keep a vector of get its value overwritten by the rightmost during the scatter. offsets that maintain how far each key has searched in the To identify these lanes, we scatter arbitrary values using a table. When a key is overwritten, the offset is reset to zero. vector with unique values per lane (e.g., [1,2,3,...,W ]). Then, A simpler approach is to process W keys at a time and use we gather using the same index vector. If the scattered a nested loop to find all matches. However, the inner loop matches the gather value, the lane can scatter safely. The would be executed as many times as the maximum number conflicting lanes search the next bucket in the next loop. of buckets accessed by any one of the W keys, underutiliz- Future SIMD instruction sets include special instructions ing the SIMD lanes, because the average number of accessed that can support this functionality (vpconflictd in AVX buckets of W keys can be significantly smaller than the max- 3), thus saving the need for the extra scatter and gather imum. By reusing vector lanes dynamically, we are reading to detect conflicts. Nevertheless, these instructions are not the probing input “out-of-order”. Thus, the probing algo- supported on mainstream CPUs or the Xeon Phi as of yet. rithm is no longer stable, i.e., the order of the output does If the input keys are unique (e.g., join on a candidate key), not always match the previous order of the probing input. we can scatter the keys to the table and gather them back to Building a linear probing table is similar. We need to find the conflicting lanes instead of a constant vector with reach an empty bucket to insert a new tuple. The scalar code unique values per lane. Thus, we save one scatter operation. is shown in Algorithm 6 and the vector code in Algorithm 7. The algorithmic descriptions show the keys and values of the hash table on separate arrays. In practice, the hash table Algorithm 6 Linear Probing - Build (Scalar) uses an interleaved key-value layout. To halve the number for i ← 0 to |Rkeys | − 1 do inner (building) relation of cache accesses, we pack multiple gathers into fewer wider k ← Rkeys [i] gathers. For example, when using 32-bit keys and 32-bit h ← (k · f ) × ↑ |T | multiplicative hashing payloads, the two consecutive 16-way 32-bit gathers of the while Tkeys [h] = kempty do until empty bucket above code can be replaced with two 8-way 64-bit gathers h←h+1 next bucket and a few shuffle operations to split keys and payloads. The if h = |T | then same applies to scatters (see Appendix E for details). h←0 reset if last For both probing and building, selective loads and stores end if assume there are enough items in the input to saturate the end while vector register. To process the last items in the input, we Tkeys [h] ← k set empty bucket switch to scalar code. The last items are bounded in number Tpayloads [h] ← Rpayloads [i] by 2 · W , which is negligible compared to the total number end for of input tuples. Thus, the overall throughput is unaffected. 1497

6 .5.2 Double Hashing Vectorized cuckoo table probing is shown in Algorithm 9. Duplicate keys in hash tables can be handled by stor- No inner loop is required since we have only two choices. We ing the payloads in a separate table, or by repeating the load W keys with an aligned vector load and gather the first keys. The first approach works well when most matching bucket per key. For the keys that do not match, we gather keys are repeated. The second approach works well with the second bucket. Cuckoo tables do not directly support mostly unique keys, but suffers from clustering duplicate key repeats. Probing is stable by reading the input “in- keys in the same region, if linear probing is used. Double order”, but accesses remote buckets when out of the cache. hashing uses a second hash function to distribute collisions Building a cuckoo hashing table is more complicated. If so that the number of accessed buckets is close to the num- both bucket choices are not empty, we create space by dis- ber of true matches. Thus, we can use the second approach placing the tuple of one bucket to its alternate location. This for both cases. Comparing multiple hash table layouts based process may be repeated until an empty bucket is reached. on the number of repeats is out of the scope of this paper. Algorithm 10 Cuckoo Hashing - Building Algorithm 8 Double Hashing Function i, j ← 0 , m ← true while i + W ≤ |R| do f L ← m ? f1 : f2 pick multiplicative hash factor k ←m Rkeys in [i] selectively load new ... fH ← m ? |T | : (|T | − 1) the collision bucket ... v ←m Rpayloads in [i] ... tuples from the input h ← m ? 0 : (h + 1) ... is never repeated i ← i + |m| h ← h + ((k × ↓ fL ) × ↑ fH ) multiplicative hashing h1 ← (k · f1 ) × ↑ |B| 1st hash function h ← (h < |T |) ? h : (h − |T |) fix overflows (no modulo) h2 ← (k · f2 ) × ↑ |B| 2nd hash function Algorithm 8 shows the double hashing scheme that we h ← h1 + h2 − h use other function if old propose. Here, m is the subset of vector lanes that have h ← m ? h1 : h use 1st function if new probed at least one bucket. If the primary hash function h1 kT ← Tkeys [h] gather buckets for ... is in [0, |T |), the collision hash function h2 is in [1, |T |), and vT ← Tpayloads [h] ... new & old tuples |T | is prime, then h = h1 +N ·h2 modulo |T | (double hashing) m ← m & (kT = kempty ) use 2nd function if new ... never repeats the same bucket for N < |T | collisions. To h ← m ? h2 : h ... & 1st is non-matching avoid the expensive modulos, we use h − |T | when h ≥ |T |. kT ←m Tkeys [h] selectively (re)gather ... vT ←m Tpayloads [h] ... for new using 2nd 5.3 Cuckoo Hashing Tkeys [h] ← k scatter all tuples ... Cuckoo hashing [23] is another hashing scheme that uses Tpayloads [h] ← v ... to store or swap multiple hash functions. and is the only hash table scheme kback ← Tkeys [h] gather (unique) keys ... that has been vectorized in previous work [30], as a means m ← k = kback ... to detect conflicts to allow multiple keys per bucket (horizontal vectorization). k ← m ? kT : k conflicting tuples are ... Here, we study cuckoo hashing to compare our (vertical vec- v ← m ? vT : v ... kept to be (re)inserted torization) approach against previous work [30, 42]. We also m ← k = kempty inserted tuples are replaced show that complicated control flow logic, such as cuckoo ta- end while ble building, can be transformed to data flow vector logic. Vectorized cuckoo table building, shown in Algorithm 10, The scalar code for cuckoo table probing, which we omit reuses vector lanes to load new tuples from the input. The due to space requirements, can be written in two ways. In remaining lanes are either previously conflicting or displaced the simple way, we check the second bucket only if the first tuples. The newly loaded tuples gather buckets using one bucket does not match. The alternative way is to always or both hash functions to find an empty bucket. The tuples access both buckets and blend their results using bitwise that were carried from the previous loop use the alternative operations [42]. The latter approach eliminates branching hash function compared to the previous loop. We scatter the at the expense of always accessing both buckets. Still, it has tuples to the hash table and gather back the keys to detect been shown to be faster than other variants on CPUs [42]. conflicts. The lanes with newly displaced tuples, which were Algorithm 9 Cuckoo Hashing - Probing gathered earlier in this loop, and the conflicting lanes are j←0 passed through to the next loop. The other lanes are reused. for i ← 0 to |S| − 1 step W do k ← Skeys [i] load input tuples 6. BLOOM FILTERS v ← Spayloads [i] Bloom filters are an essential data structure for applying h1 ← (k · f1 ) × ↑ |T | 1st hash function selective conditions across tables before joining them, a semi h2 ← (k · f2 ) × ↑ |T | 2nd hash function join. A tuple qualifies from the Bloom filter, if k specific bits kT ← Tkeys [h1 ] gather 1st function bucket are set in the filter, based on k hash functions. Aborting a vT ← Tpayloads [h1 ] tuple as soon as one bit-test fails is essential to achieve high m ← k = kT performance, because most tuples fail after a few bit tests. kT ←m Tkeys [h2 ] gather 2nd function bucket ... Vectorized Bloom filter probing was recently shown to get vT ←m Tpayloads [h2 ] ... if 1st is not matching a significant performance boost over scalar code on the lat- m ← k = kT est mainstream CPUs, especially when the Bloom filter is RSkeys [j] ←m k selectively store matches cache resident [27]. The design and implementation follows RSS payloads [j] ←m v the principle of processing different input keys per lane and RSR payloads [j] ←m vT is one of our influences for this paper. However, no fun- j ← j + |m| damental vector opeations were explicitly defined. Here, we end for evaluate the vectorized Bloom filter design [27] on Xeon Phi. 1498

7 .7. PARTITIONING Recently, a range index was proposed where each node Partitioning is a ubiquitous operation for modern hard- has multiple splitters that are compared against one input ware query execution as a way to split large inputs into key using SIMD comparisons [26]. Each node is at least as cache-conscious non-overlapping sub-problems. For exam- wide as a vector and scalar code is used for index arithmetic ple, join and aggregation operators can use hash partitioning and to access the nodes (without gathers), relying on the to split the input into small partitions that are distributed superscalar pipeline to hide the cost of scalar instructions. among threads and now fit in the cache [3, 4, 5, 14, 19, 26]. The SIMD index can be seen as horizontal vectorization for We study all types of partitioning: radix, hash, and range. binary search and is evaluated on simple and complex cores. 7.1 Radix & Hash Histogram 7.3 Shuffling Prior to moving any data, in order to partition into con- The data shuffling phase of partitioning involves the actual tiguous segments, we use a histogram to set the boundaries. movement of tuples. To generate the output partitions in To compute the histogram, we increment a count based on contiguous space, we maintain an array of partition offsets, the partition function of each key. By using multiplicative initialized by the prefix sum of the histogram. The offset hashing, hash partitioning becomes equally fast to radix. array is updated for every tuple transferred to the output. Vectorized shuffling uses gathers and scatters to increment Algorithm 11 Radix Partitioning - Histogram the offset array and scatters the tuples to the output. How- o ← {0, 1, 2, 3, ..., W − 1} ever, if multiple vector lanes have tuples that belong to the Hpartial [P × W ] ← 0 initialize replicated histograms same partition, the offset would be incremented by one and for i ← 0 to |Tkeys in | − 1 step W do these tuples would be (over)written to the same location. k ← Tkeys in [i] We compute a vector of conflict offsets, by using gathers h ← (k << bL ) >> bR radix function and scatters to detect conflicts iteratively, as shown in Algo- h ← o + (h · W ) index for multiple histograms rithm 13. First, we scatter unique values per lane to an array c ← Hpartial [h] increment W counts with P entries. Then, we gather using the same indexes and Hpartial [h] ← c + 1 compare against the scattered vector to find conflicts. We end for increment the conflicting lanes and repeat the process for for i ← 0 to P − 1 do these lanes only until no lanes conflict. Even if W iterations c ← Hpartial [i · W ] load W counts of partition are executed, the total number of accesses to distinct mem- H[i] ← sum across(c) reduce into single result ory locations is always W , i.e., if ai is the number of accesses end for to distinct memory locations in iteration i, then ai = W . Vectorized histogram generation, shown in Algorithm 11, uses gathers and scatters to increment counts. However, Algorithm 13 Conflict Serialization Function (h, A) if multiple lanes scatter to the same histogram count, the l ← {W − 1, W − 2, W − 3, ..., 0} reversing mask count will still be incremented by 1 and all items (over)written h ← permute(h, l) reverse hashes to the same location. To avoid conflicts, we replicate the c ← 0 , m ← true serialization offsets & conflict mask histogram to isolate each lane. Thus, lane j increments repeat H [i · W + j] instead of H[i]. In the end, the W histograms A[h] ←m l detect conflicts are reduced into one. If the histograms do not fit in the lback ←m A[h] fastest cache, we use 1-byte counts and flush on overflow. m ← m & (l = lback ) update conflicting lanes c ← m ? (c + 1) : c increment offsets ... 7.2 Range Histogram until m = false ... for conflicting lanes return permute(c, l) reverse to original order Radix and hash partitioning functions are significantly faster than range partitioning functions. In range function, Since the rightmost lane is written during conflicts, tuples we execute a binary search over a sorted array of splitters. of the same partition in the same vector are written in re- Although the array is cache resident, the number of accesses verse order. Also, per group of k conflicting lanes, the right- is logarithmic and all accesses are dependent on each other, most lane will incorrectly increment the offset by 1, not by thus the cache hit latency in the critical path is exposed [26]. k. By reversing the index vector during serialization, we up- Branch elimination only marginally improves performance. date the offsets correctly and also maintain the input order. Binary search can be vectorized using gather instructions Stable partitioning is essential for algorithms such as LSB to load the splitters from the sorted array, as shown in Al- radixsort. Vectorized shuffling is shown in Algorithm 14. gorithm 12, by processing W keys in parallel. The search Algorithm 14 Radix Partitioning - Shuffling path is computed by blending low and high pointers. We O ← prefix sum(H) partition offsets from histogram can assume without loss of generality that P = 2n , since we for i ← 0 to |Tkeys in | − 1 step W do can always patch the splitter array with maximum values. k ← Tkeys in [i] load input tuples Algorithm 12 Range Partitioning Function v ← Tpayloads in [i] l←0,h←P l is also the output vector h ← (k << bL ) >> bR radix function for i ← 0 to log P − 1 do o ← O[h] gather partition offsets a ← (l + h) >> 1 compute middle c ← serialize conflicts(h, O) serialize conflicts d ← D[a − 1] gather splitters o←o+c add serialization offsets m←k>d compare with splitters O[h] ← o + 1 scatter incremented offsets l←m? a: l select upper half Tkeys out [o] ← k scatter tuples h←m? h: a select lower half Tpayloads out [o] ← v end for end for 1499

8 .7.4 Buffered Shuffling Since multiple tuples can be written to the buffer for Shuffling, as described so far, is fast if the input is cache the same partition on each loop, we identify which vector resident, but falls into certain performance pitfalls when lanes will not cause overflow and scatter them selectively larger than the cache. First, it suffers from TLB thrash- before flushing the buffers that are full. After the buffers ing when the partitioning fanout exceeds the TLB capacity are flushed, we scatter the remaining tuples to the buffer. [20]. Second, it generates many cache conflicts [31] and in We identify which vector lanes point to partitions that the worst case, may be bound by the size of the cache as- have filled their buffers using the output index. Given that sociativity set. Third, using normal stores, we trigger cache tuples in multiple vector lanes can belong to the same par- loads to execute the stores and reduce the bandwidth due tition, we identify the lanes that wrote to the last partition to loading cache lines that will only be overwritten [38]. slot and ensure that we will not flush the same data twice in The vectorized implementation of simple non-buffered shuf- the same loop. Flushing occurs “horizontally” one partition fling improves performance, but suffers from the same per- at a time, after selectively storing the partitions in the stack. formance pitfalls as the scalar version. In general, vector- Flushing data from the buffers to the output is done by ization improves performance compared to its scalar coun- streaming stores to avoid polluting the cache with output terpart, but does not overcome algorithmic inefficiencies. data [38]. Note that we are streaming to multiple outputs, To solve these problems, recent work proposed keeping thus, single output buffering (Section 4) does not apply. the data in buffers and flushing them in groups [31]. If the Hash partitioning is used to split the data into groups with buffers are small and packed together, they will not cause non-overlapping keys and has no need to be stable. Instead TLB or cache misses. Thus, with W buffer slots per parti- of conflict serialization, we detect and process conflicting tion, we reduce cache and TLB misses to 1/W . If the buffers lanes during the next loop. Performance is slightly increased are flushed with non-temporal stores, we facilitate hardware because very few conflicts normally occur per loop if P > W . write combining and avoid polluting the cache with output As in hash tables, if the tuples are keys and rids stored data [38]. The fanout is bounded by the cache capacity to on separate arrays, we do fewer and wider scatters by inter- keep the buffer cache resident. The scalar code for buffered leaving the two columns before storing to the buffers. shuffling is thoroughly described in previous work [4, 26]. To partition multiple columns of payloads, we can either The improvement of vectorized buffered shuffling shown in shuffle all the columns together as a unified tuple or shuf- Algorithm 15 over vectorized unbuffered shuffling shown in fle one column at a time. Shuffling unified tuples should Algorithm 14, is scattering the tuples to the cache resident optimally compile specific code for each query at run time. buffer rather than directly to the output. For each vector Otherwise, we can process one column at a time using pre- of tuples, once the tuples are scattered, we iterate over the compiled type-specialized code. In the latter approach, which partitions that the current W input tuples belong to, and we use here, during histogram generation, we store partition flush to the output when all available buffer slots are filled. destinations alongside the conflict serialization offsets in a temporary array. Thus, we avoid reloading the wider keys Algorithm 15 Radix Partitioning - Buffered Shuffling as well as redoing conflict serialization for each column. The O ← prefix sum(H) partition offsets from histogram temporary array must be log P + log W bits wide per entry. for i ← 0 to |Tkeys in | − 1 step W do k ← Tkeys in [i] load input tuples 8. SORTING v ← Tpayloads in [i] Sorting is used in databases as a subproblem for join and h ← (k << bL ) >> bR radix function o ← O[h] gather partition offsets aggregation. Sorting is also used for declustering, index c ← serialize conflicts(h, O) serialize conflicts building, compression, and duplicate elimination. Recent o←o+c add serialization offsets work showed that large-scale sorting is synonymous to par- O[h] ← o + 1 scatter incremented offsets titioning. Radixsort and comparison sorting based on range oB ← o & (W − 1) buffer offsets in partition partitioning have comparable performance, by maximizing m ← oB < W find non-overflowing lanes the fanout to minimize the number of partition passes [26]. m ← !m Here, we implement least-significant-bit (LSB) radixsort, o B ← o B + (h · W ) buffer offsets across partitions which is the fastest method for 32-bit keys [26]. We do not Bkeys [oB ] ←m k scatter tuples to buffer ... evaluate larger keys as Xeon Phi only supports 32-bit inte- Bpayloads [oB ] ←m v ... for non-overflowing lanes ger arithmetic in vector code. Parallel LSB radixsort splits m ← oB = (W − 1) find lanes to be flushed the input equally among threads and uses the prefix sum of if m = false then the histograms from all threads to interleave the partition H[0] ←m h pack partitions to be flushed outputs. Histogram generation and shuffling operate shared- for j ← 0 to |m| − 1 do nothing maximizing thread parallelism. By using vectorized h ← H[j] buffered partitioning, we also maximize data parallelism. o ← (O[h] & −W ) − W output location kB ← Bkeys [h · W ] load tuples from buffer vB ← Bpayloads [h · W ] 9. HASH JOIN Tkeys out [o] ← kB flush tuples to output ... Joins are one of the most frequent operators in analytical Tpayloads out [o] ← vB ... using streaming stores queries that can be expensive enough to dominate query ex- end for ecution time. Recent work has focused on comparing main- Bkeys [oB − W ] ←m k scatter tuples to buffer ... memory equi-joins, namely sort-merge join and hash join. Bpayloads [oB − W ] ←m v ... for overflowing lanes The former is dominated by sorting [4, 14]. In the baseline end if hash join, the inner relation is built into a hash table and end for cleanup the buffers after the loop the outer relation probes the hash table to find matches. 1500

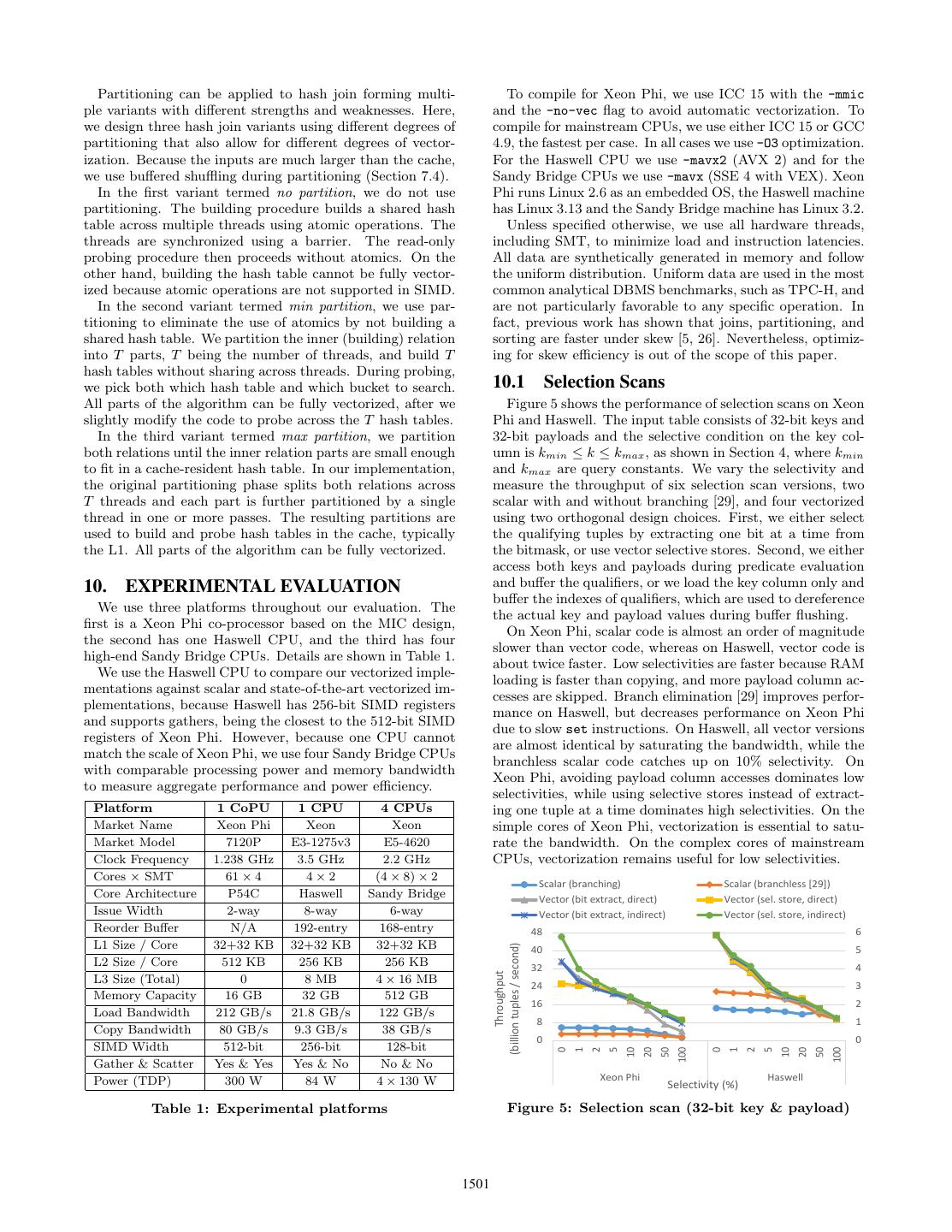

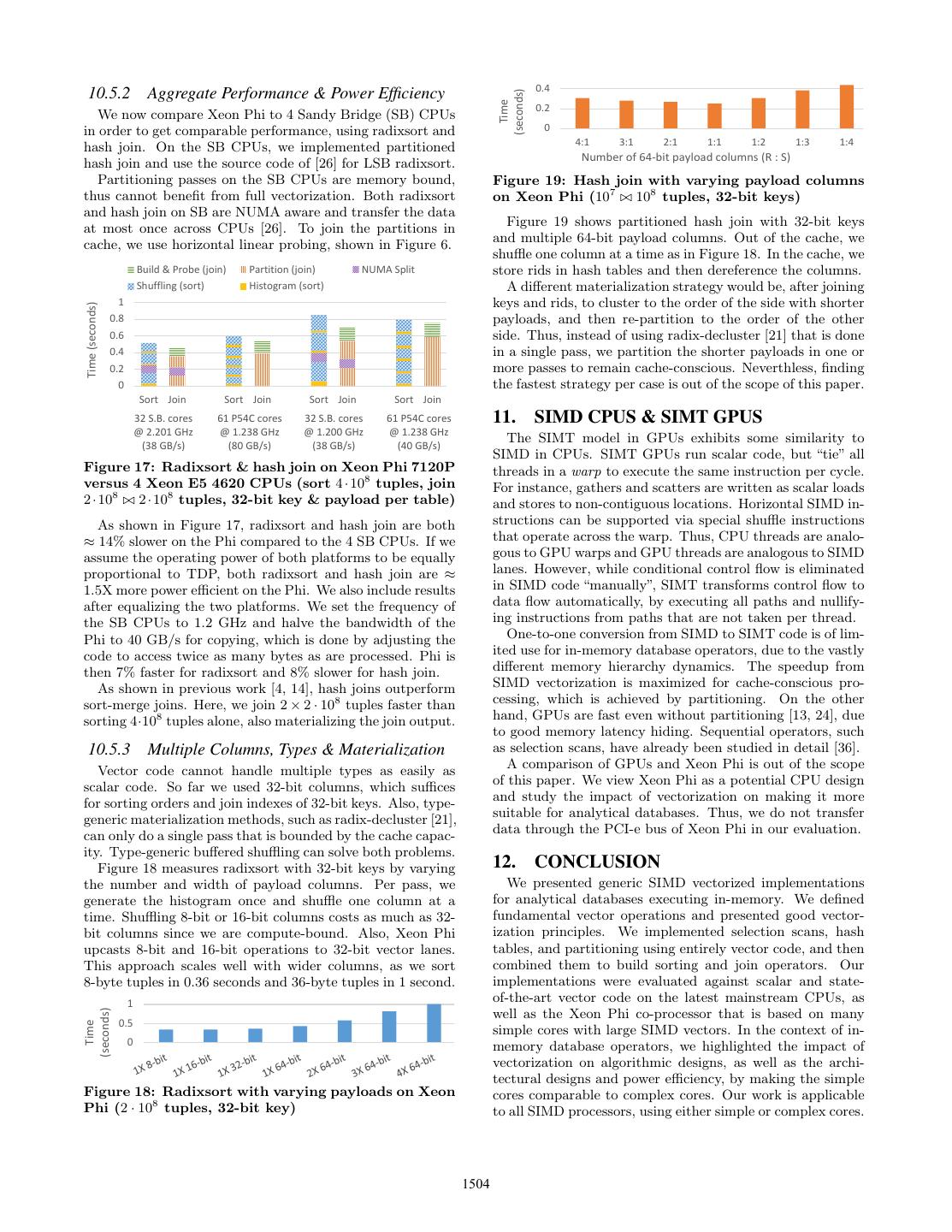

9 . Partitioning can be applied to hash join forming multi- To compile for Xeon Phi, we use ICC 15 with the -mmic ple variants with different strengths and weaknesses. Here, and the -no-vec flag to avoid automatic vectorization. To we design three hash join variants using different degrees of compile for mainstream CPUs, we use either ICC 15 or GCC partitioning that also allow for different degrees of vector- 4.9, the fastest per case. In all cases we use -O3 optimization. ization. Because the inputs are much larger than the cache, For the Haswell CPU we use -mavx2 (AVX 2) and for the we use buffered shuffling during partitioning (Section 7.4). Sandy Bridge CPUs we use -mavx (SSE 4 with VEX). Xeon In the first variant termed no partition, we do not use Phi runs Linux 2.6 as an embedded OS, the Haswell machine partitioning. The building procedure builds a shared hash has Linux 3.13 and the Sandy Bridge machine has Linux 3.2. table across multiple threads using atomic operations. The Unless specified otherwise, we use all hardware threads, threads are synchronized using a barrier. The read-only including SMT, to minimize load and instruction latencies. probing procedure then proceeds without atomics. On the All data are synthetically generated in memory and follow other hand, building the hash table cannot be fully vector- the uniform distribution. Uniform data are used in the most ized because atomic operations are not supported in SIMD. common analytical DBMS benchmarks, such as TPC-H, and In the second variant termed min partition, we use par- are not particularly favorable to any specific operation. In titioning to eliminate the use of atomics by not building a fact, previous work has shown that joins, partitioning, and shared hash table. We partition the inner (building) relation sorting are faster under skew [5, 26]. Nevertheless, optimiz- into T parts, T being the number of threads, and build T ing for skew efficiency is out of the scope of this paper. hash tables without sharing across threads. During probing, we pick both which hash table and which bucket to search. 10.1 Selection Scans All parts of the algorithm can be fully vectorized, after we Figure 5 shows the performance of selection scans on Xeon slightly modify the code to probe across the T hash tables. Phi and Haswell. The input table consists of 32-bit keys and In the third variant termed max partition, we partition 32-bit payloads and the selective condition on the key col- both relations until the inner relation parts are small enough umn is kmin ≤ k ≤ kmax , as shown in Section 4, where kmin to fit in a cache-resident hash table. In our implementation, and kmax are query constants. We vary the selectivity and the original partitioning phase splits both relations across measure the throughput of six selection scan versions, two T threads and each part is further partitioned by a single scalar with and without branching [29], and four vectorized thread in one or more passes. The resulting partitions are using two orthogonal design choices. First, we either select used to build and probe hash tables in the cache, typically the qualifying tuples by extracting one bit at a time from the L1. All parts of the algorithm can be fully vectorized. the bitmask, or use vector selective stores. Second, we either access both keys and payloads during predicate evaluation 10. EXPERIMENTAL EVALUATION and buffer the qualifiers, or we load the key column only and buffer the indexes of qualifiers, which are used to dereference We use three platforms throughout our evaluation. The the actual key and payload values during buffer flushing. first is a Xeon Phi co-processor based on the MIC design, On Xeon Phi, scalar code is almost an order of magnitude the second has one Haswell CPU, and the third has four slower than vector code, whereas on Haswell, vector code is high-end Sandy Bridge CPUs. Details are shown in Table 1. about twice faster. Low selectivities are faster because RAM We use the Haswell CPU to compare our vectorized imple- loading is faster than copying, and more payload column ac- mentations against scalar and state-of-the-art vectorized im- cesses are skipped. Branch elimination [29] improves perfor- plementations, because Haswell has 256-bit SIMD registers mance on Haswell, but decreases performance on Xeon Phi and supports gathers, being the closest to the 512-bit SIMD due to slow set instructions. On Haswell, all vector versions registers of Xeon Phi. However, because one CPU cannot are almost identical by saturating the bandwidth, while the match the scale of Xeon Phi, we use four Sandy Bridge CPUs branchless scalar code catches up on 10% selectivity. On with comparable processing power and memory bandwidth Xeon Phi, avoiding payload column accesses dominates low to measure aggregate performance and power efficiency. selectivities, while using selective stores instead of extract- Platform 1 CoPU 1 CPU 4 CPUs ing one tuple at a time dominates high selectivities. On the Market Name Xeon Phi Xeon Xeon simple cores of Xeon Phi, vectorization is essential to satu- Market Model 7120P E3-1275v3 E5-4620 rate the bandwidth. On the complex cores of mainstream Clock Frequency 1.238 GHz 3.5 GHz 2.2 GHz CPUs, vectorization remains useful for low selectivities. Cores × SMT 61 × 4 4×2 (4 × 8) × 2 Scalar (branching) Scalar (branchless [29]) Core Architecture P54C Haswell Sandy Bridge Vector (bit extract, direct) Vector (sel. store, direct) Issue Width 2-way 8-way 6-way Vector (bit extract, indirect) Vector (sel. store, indirect) Reorder Buffer N/A 192-entry 168-entry 48 6 L1 Size / Core 32+32 KB 32+32 KB 32+32 KB (billion tuples / second) 40 5 L2 Size / Core 512 KB 256 KB 256 KB 32 4 Throughput L3 Size (Total) 0 8 MB 4 × 16 MB 24 3 Memory Capacity 16 GB 32 GB 512 GB 16 2 Load Bandwidth 212 GB/s 21.8 GB/s 122 GB/s 8 1 Copy Bandwidth 80 GB/s 9.3 GB/s 38 GB/s 0 0 SIMD Width 512-bit 256-bit 128-bit 0 1 2 5 10 20 50 100 0 1 2 5 10 20 50 100 Gather & Scatter Yes & Yes Yes & No No & No Power (TDP) 300 W 84 W 4 × 130 W Xeon Phi Haswell Selectivity (%) Table 1: Experimental platforms Figure 5: Selection scan (32-bit key & payload) 1501

10 . 5 10.2 Hash Tables 4 Scalar Vector (billion tuples / In this section we evaluate hash table vectorization. The 3 Throughput second) first set of experiments measures the probing throughput 2 and compares against state-of-the-art scalar and vectorized 1 approaches, on both Xeon Phi and Haswell. The second set 0 evaluates iterative building and probing of shared-nothing LP DH CH LP DH CH LP DH CH hash tables on Xeon Phi only since Haswell has no scatters. L1 cache L2 cache RAM Figure 6 shows linear probing (LP) and double hashing Figure 8: Build & probe linear probing, double (DH). The input is a 32-bit column with 109 keys, the output hashing, & cuckoo hashing on Xeon Phi (1:1 build– is a 32-bit column with the payloads of matches, almost all probe, shared-nothing, 2X 32-bit key & payload) keys match, and the hash table is half full. The horizontal vector code uses bucketized hash table where each input Figure 8 interleaves building and probing of shared-nothing key is compared against multiple table keys [30]. In our tables, as done in the last phase of partitioned hash join, approach, vertical vector code, we compare multiple input using Xeon Phi. The build to probe ratio is 1:1, all keys keys against one hash table key each using gathers. We are match, and we vary the hash table size. The hash tables up to 6X faster than everything else on Xeon Phi, and gain are ≈ 4 KB in L1, 64 KB in L2, and 1 MB out of cache. a smaller speedup for cache resident hash tables on Haswell. Both inputs have 32-bit keys and payloads, the output has LP Scalar DH Scalar the matching keys and the two payloads, the load factor is LP Vector (horizontal) DH Vector (horizontal) 50%, and we saturate Phi’s memory. Throughput is defined LP Vector (vertical) DH Vector (vertical) as (|R| + |S|)/t. The speedup is 2.6–4X when the hash table 14 3.5 resides in L1, 2.4–2.7X in L2, and 1.2–1.4X out of the cache. 12 3 7 (billion tuples / second) (billion tuples / second) 10 2.5 6 8 2 Scalar Vector 5 Throughput Throughput 6 1.5 4 4 1 2 0.5 3 0 0 2 4 KB 64 KB 1 MB 4 KB 64 KB 1 MB 16 KB 256 KB 4 MB 16 KB 16 MB 256 KB 4 MB 64 MB 16 MB 64 MB 1 0 Xeon Phi Haswell LP DH CH LP DH LP DH LP DH Hash table size no repeats allowed 1.25 repeats 2.5 repeats 5 repeats Figure 6: Probe linear probing & double hashing 100% of keys match 80% match 40% match 20% match tables (shared, 32-bit key → 32-bit probed payload) Figure 9: Build & probe linear probing, double Figure 7 shows the probing throughput of cuckoo hashing, hashing, & cuckoo hashing on Xeon Phi (1:10 build– with the same setting as Figure 6. The scalar versions are probe, L1, shared-nothing, 2X 32-bit key & payload) either branching or branchless [42], and the vector versions are either horizontal (bucketized) [30] or vertical, where we In Figure 9, we modify the experiment of Figure 8 by evaluate two vertical techniques, loading both buckets and varying the number of key repeats with the same output blending them, or loading the second bucket selectively. The size. All tables are in L1 and the build to probe ratio is branchless scalar version [42] is slower than branching scalar 1:10. The other settings are as in Figure 8. With no key code on both Xeon Phi and Haswell. Also, branching scalar repeats, the speedup is 6.6–7.7X, higher than Figure 8, since code is faster than branchless on Haswell using ICC, while building is more expensive than probing and also detects GCC produces very slow branching code. Vertically vector- conflicts. Here, building alone gets a speedup of 2.5–2.7X. ized code is 5X faster on Xeon Phi and 1.7X on Haswell. With 5 key repeats, the speedup is 4.1X for DH and 2.7X Bucketized hash table probing is faster by using 128-bit for LP. Thus, DH is more resilient to key repeats than LP. SIMD (SSE 4) to probe 4 key, rather than use 256-bit SIMD 10.3 Bloom Filters (AVX 2) to probe 8 keys, due to faster in-register broadcasts. In Figure 10, we measure Bloom filter probing throughput Scalar (branching) Scalar (branchless [42]) using the design of [27] with selective loads and stores for Vector (horizontal [30]) Vector (vertical blend) Xeon Phi. We disable loop unrolling and add buffering. The Vector (vertical select) speedup is 3.6–7.8X on Xeon Phi and 1.3–3.1X on Haswell 14 3.5 12 3 12 Scalar 3 (billion tuples / second) 10 2.5 10 2.5 (billion tuples / second) Vector 8 2 8 2 Throughput 6 1.5 Throughput 6 1.5 4 1 4 1 2 0.5 2 0.5 0 0 0 0 4 KB 16 KB 64 KB 256 KB 1 MB 4 MB 4 KB 16 KB 64 KB 256 KB 1 MB 4 MB 16 MB 64 MB 16 MB 64 MB 4 KB 16 KB 64 KB 256 KB 4 KB 1 MB 16 KB 64 KB 256 KB 1 MB 4 MB 16 MB 64 MB 4 MB 16 MB 64 MB Xeon Phi Haswell Xeon Phi Haswell Hash table size Bloom filter size Figure 7: Probe cuckoo hashing table (2 functions, Figure 10: Bloom filter probing (5 functions, shared, shared, 32-bit key → 32-bit probed payload) 10 bits / item, 5% selectivity, 32-bit key & payload) 1502

11 .10.4 Partitioning 10.5 Sorting & Hash Join Figure 11 shows radix and hash histogram generation on We now evaluate sorting and hash joins in three stages: Xeon Phi. On mainstream CPUs, the memory load band- first, we measure performance on Xeon Phi and highlight the width is saturated [26]. Scalar code is dominated by the impact of vectorization on algorithmic designs; second, we partition function. By replicating each count W times, we compare against 4 high-end CPUs to highlight the impact get a 2.55X speedup over scalar radix. A slowdown occurs on power efficiency; and third, we study the cost of a generic when we exceed the L1, but we can increase P by compress- implementation and materialization of multiple columns. ing to 8-bit counts. Conflict serialization does not replicate but is slower, especially if P ≤ W . If P is too large, both 10.5.1 Vectorization Speedup & Algorithmic Designs count replication and conflict serialization are too expensive. Figure 14 shows the performance of LSB radixsort on Xeon Phi. The vectorization speedup is 2.2X over state- 25 Vector hash/radix (repl. & compress) of-the-art scalar code and time scales with size. In main- (billion tuples / 20 stream CPUs, we are already almost saturated, since each Throughput Vector hash/radix second) 15 (replicate counts) partitioning pass runs close to the bandwidth [31, 26, 38]. 10 Vector hash/radix 2.5 Scalar (serialize conflicts) Time (seconds) 5 2 Vector Scalar radix 0 1.5 3 4 5 6 7 8 9 10 11 12 13 Scalar hash 1 Fanout (log # of partitions) 0.5 0 Figure 11: Radix & hash histogram on Xeon Phi 100 200 400 800 100 200 400 800 Figure 12 shows the performance of computing the range 32-bit key 32-bit key & payload partition function. The binary search vectorization speedup Tuples (in millions) is 7–15X on Xeon Phi and 2.4–2.8X on Haswell. SIMD range Figure 14: Radixsort on Xeon Phi (LSB) indexes [26] are even faster on Haswell but are slower on Xeon Phi, where the pipeline is saturated by scalar instruc- Figure 15 shows the performance of the three hash join tions. Each node has W keys and the root stays in registers. variants as described in Section 9, on Xeon Phi. We assume a foreign key join but our implementation is generic. The “no- Scalar (branching) Scalar (branchless) partition” and the “min-partition” methods get small 1.05X Vector (binary search) Vector (tree index [26]) (billion tuples / second) 24 6 and 1.25X speedups, while the fully partitioned gets a 3.3X 9 speedup and becomes the fastest overall by a 2.25X gap, 20 5 Throughput 16 17 9x9 4 which is too large to justify hardware-oblivious joins [12]. 12 9x9x9 3 Hash join is faster than sort-merge join [4, 14], since we sort 17x17 17x17x17 9x9x9x9 2 8 4·108 in 0.6 seconds and join 2×2·108 tuples in 0.54 seconds. 4 1 0 0 Build & Probe Probe Build Partition 2 8 16 32 64 128 256 512 8 16 32 64 128 256 512 1024 2048 4096 8192 1024 2048 4096 8192 Time (seconds) 1.5 Xeon Phi Haswell Fanout 1 Figure 12: Range function on Xeon Phi (32-bit key) 0.5 0 Figure 13 measures shuffling on Xeon Phi using inputs Scalar Vector Scalar Vector Scalar Vector larger than the cache. On mainstream CPUs, shuffling can- No partition Min partition Max partition not be fully vectorized without scatters, but saturates the memory copy bandwidth at higher fanout [4, 26]. On Xeon Figure 15: Multiple hash join variants on Xeon Phi Phi, among the unbuffered versions, vectorization achieves (2 · 108 2 · 108 32-bit key & payload) up to 1.95X speedup. Among the scalar versions, buffering Figure 16 shows the thread scalability of radixsort and achieves up to 1.8X speedup. Among the buffered versions, partitioned hash join on Xeon Phi. The speedup is almost vectorization achieves up to 2.85X speedup, using up to 60% linear, even when using 2-way and 4-way SMT due to hiding of the bandwidth. The optimal fanout, maximizing through- load and instruction latencies. On Xeon Phi, using 4-way put × bits, is 5–8 radix bits per pass. Unstable hash parti- SMT is essential to hide the 4-cycle vector instruction la- tioning, shown here, is up to 17% faster than stable radix. tencies. On mainstream CPUs, LSB radixsort is again satu- 7 Vector hash rated, gaining only marginal speedup from 2-way SMT [26]. 6 (buffered) Scalar Vector (billion tuples / 500 Throughput 5 Vector radix Time (seconds) second) 4 (buffered) [logscale] 50 3 Vector radix 5 2 (unbuffered) 1 Scalar radix 0.5 1 3 7 15 30 61 122 244 1 3 7 15 30 61 122 244 0 (buffered) 3 4 5 6 7 8 9 10 11 12 13 Scalar radix Radixsort Hash Join Fanout (log # of partitions) (unbuffered) Threads Figure 13: Radix shuffling on Xeon Phi (shared- Figure 16: Radixsort & hash join scalability (4 · 108 nothing, out-of-cache, 32-bit key & payload) & 2 · 108 2 · 108 32-bit key & payload, log/log scale) 1503

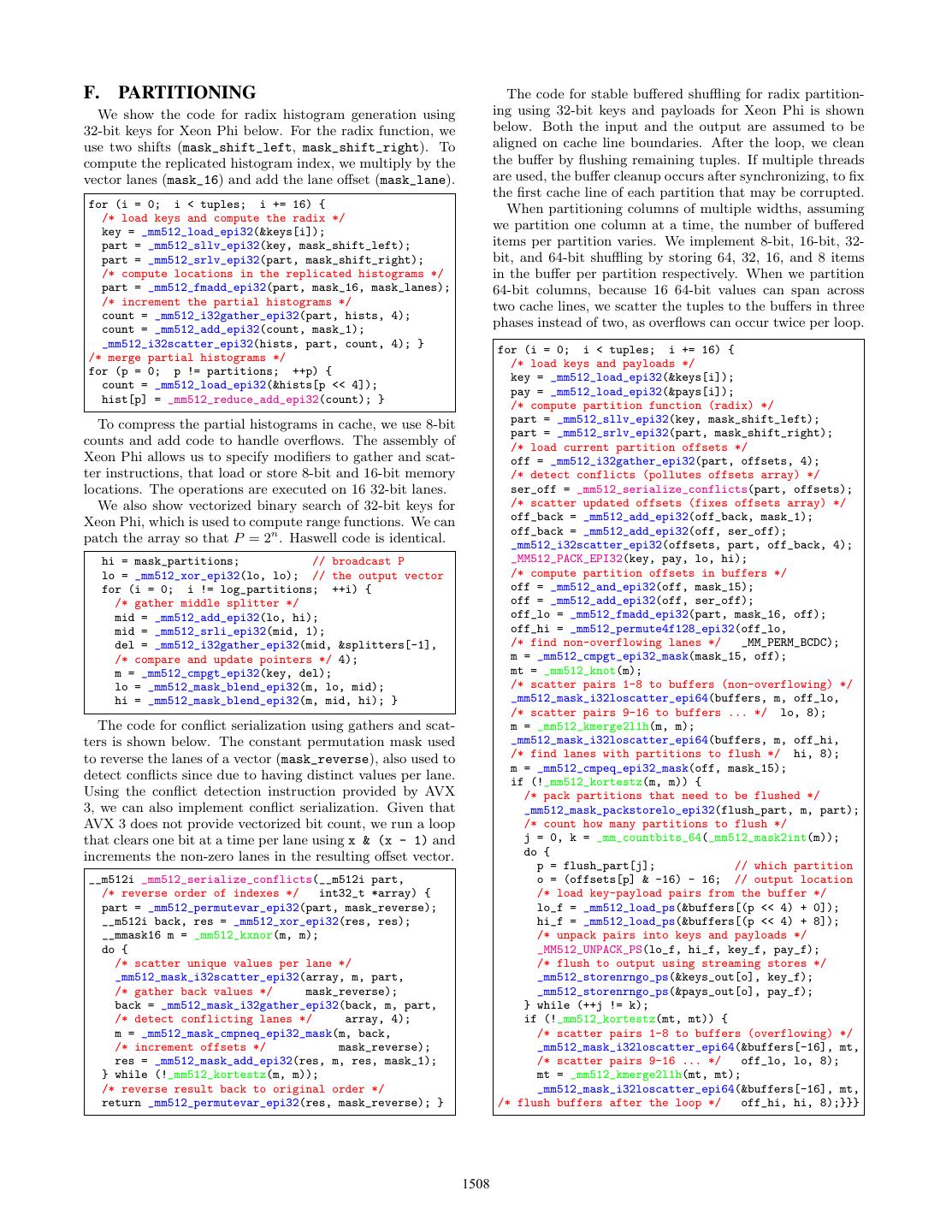

12 . 0.4 10.5.2 Aggregate Performance & Power Efficiency (seconds) Time 0.2 We now compare Xeon Phi to 4 Sandy Bridge (SB) CPUs in order to get comparable performance, using radixsort and 0 4:1 3:1 2:1 1:1 1:2 1:3 1:4 hash join. On the SB CPUs, we implemented partitioned Number of 64-bit payload columns (R : S) hash join and use the source code of [26] for LSB radixsort. Partitioning passes on the SB CPUs are memory bound, Figure 19: Hash join with varying payload columns thus cannot benefit from full vectorization. Both radixsort on Xeon Phi (107 108 tuples, 32-bit keys) and hash join on SB are NUMA aware and transfer the data Figure 19 shows partitioned hash join with 32-bit keys at most once across CPUs [26]. To join the partitions in and multiple 64-bit payload columns. Out of the cache, we cache, we use horizontal linear probing, shown in Figure 6. shuffle one column at a time as in Figure 18. In the cache, we Build & Probe (join) Partition (join) NUMA Split store rids in hash tables and then dereference the columns. Shuffling (sort) Histogram (sort) A different materialization strategy would be, after joining 1 keys and rids, to cluster to the order of the side with shorter Time (seconds) 0.8 payloads, and then re-partition to the order of the other 0.6 side. Thus, instead of using radix-decluster [21] that is done 0.4 in a single pass, we partition the shorter payloads in one or 0.2 more passes to remain cache-conscious. Neverthless, finding 0 the fastest strategy per case is out of the scope of this paper. Sort Join Sort Join Sort Join Sort Join 32 S.B. cores 61 P54C cores 32 S.B. cores 61 P54C cores 11. SIMD CPUS & SIMT GPUS @ 2.201 GHz @ 1.238 GHz @ 1.200 GHz @ 1.238 GHz (38 GB/s) (80 GB/s) (38 GB/s) (40 GB/s) The SIMT model in GPUs exhibits some similarity to SIMD in CPUs. SIMT GPUs run scalar code, but “tie” all Figure 17: Radixsort & hash join on Xeon Phi 7120P threads in a warp to execute the same instruction per cycle. versus 4 Xeon E5 4620 CPUs (sort 4 · 108 tuples, join For instance, gathers and scatters are written as scalar loads 2 · 108 2 · 108 tuples, 32-bit key & payload per table) and stores to non-contiguous locations. Horizontal SIMD in- As shown in Figure 17, radixsort and hash join are both structions can be supported via special shuffle instructions ≈ 14% slower on the Phi compared to the 4 SB CPUs. If we that operate across the warp. Thus, CPU threads are analo- assume the operating power of both platforms to be equally gous to GPU warps and GPU threads are analogous to SIMD proportional to TDP, both radixsort and hash join are ≈ lanes. However, while conditional control flow is eliminated 1.5X more power efficient on the Phi. We also include results in SIMD code “manually”, SIMT transforms control flow to after equalizing the two platforms. We set the frequency of data flow automatically, by executing all paths and nullify- the SB CPUs to 1.2 GHz and halve the bandwidth of the ing instructions from paths that are not taken per thread. Phi to 40 GB/s for copying, which is done by adjusting the One-to-one conversion from SIMD to SIMT code is of lim- code to access twice as many bytes as are processed. Phi is ited use for in-memory database operators, due to the vastly then 7% faster for radixsort and 8% slower for hash join. different memory hierarchy dynamics. The speedup from As shown in previous work [4, 14], hash joins outperform SIMD vectorization is maximized for cache-conscious pro- sort-merge joins. Here, we join 2 × 2 · 108 tuples faster than cessing, which is achieved by partitioning. On the other sorting 4·108 tuples alone, also materializing the join output. hand, GPUs are fast even without partitioning [13, 24], due to good memory latency hiding. Sequential operators, such 10.5.3 Multiple Columns, Types & Materialization as selection scans, have already been studied in detail [36]. Vector code cannot handle multiple types as easily as A comparison of GPUs and Xeon Phi is out of the scope scalar code. So far we used 32-bit columns, which suffices of this paper. We view Xeon Phi as a potential CPU design for sorting orders and join indexes of 32-bit keys. Also, type- and study the impact of vectorization on making it more generic materialization methods, such as radix-decluster [21], suitable for analytical databases. Thus, we do not transfer can only do a single pass that is bounded by the cache capac- data through the PCI-e bus of Xeon Phi in our evaluation. ity. Type-generic buffered shuffling can solve both problems. Figure 18 measures radixsort with 32-bit keys by varying 12. CONCLUSION the number and width of payload columns. Per pass, we We presented generic SIMD vectorized implementations generate the histogram once and shuffle one column at a for analytical databases executing in-memory. We defined time. Shuffling 8-bit or 16-bit columns costs as much as 32- fundamental vector operations and presented good vector- bit columns since we are compute-bound. Also, Xeon Phi ization principles. We implemented selection scans, hash upcasts 8-bit and 16-bit operations to 32-bit vector lanes. tables, and partitioning using entirely vector code, and then This approach scales well with wider columns, as we sort combined them to build sorting and join operators. Our 8-byte tuples in 0.36 seconds and 36-byte tuples in 1 second. implementations were evaluated against scalar and state- 1 of-the-art vector code on the latest mainstream CPUs, as (seconds) well as the Xeon Phi co-processor that is based on many 0.5 Time simple cores with large SIMD vectors. In the context of in- 0 memory database operators, we highlighted the impact of vectorization on algorithmic designs, as well as the archi- tectural designs and power efficiency, by making the simple Figure 18: Radixsort withPayload columnspayloads on Xeon varying cores comparable to complex cores. Our work is applicable Phi (2 · 108 tuples, 32-bit key) to all SIMD processors, using either simple or complex cores. 1504

13 .13. REFERENCES [29] K. A. Ross. Selection conditions in main memory. ACM [1] M.-C. Albutiu et al. Massively parallel sort-merge joins in Trans. Database Systems, 29(1):132–161, Mar. 2004. main memory multi-core database systems. PVLDB, [30] K. A. Ross. Efficient hash probes on modern processors. In 5(10):1064–1075, June 2012. ICDE, pages 1297–1301, 2007. [2] P. Bakkum et al. Accelerating SQL database operations on [31] N. Satish et al. Fast sort on CPUs and GPUs: a case for a GPU with CUDA. In GPGPU, pages 94–103, 2010. bandwidth oblivious SIMD sort. In SIGMOD, pages [3] C. Balkesen et al. Main-memory hash joins on multi-core 351–362, 2010. CPUs: Tuning to the underlying hardware. In ICDE, pages [32] B. Schlegel et al. Scalable frequent itemset mining on 362–373, 2013. many-core processors. In DaMoN, 2013. [4] C. Balkesen et al. Multicore, main-memory joins: Sort vs. [33] L. Seiler et al. Larrabee: A many-core x86 architecture for hash revisited. PVLDB, 7(1):85–96, Sept. 2013. visual computing. ACM Trans. Graphics, 27(3), Aug. 2008. [5] S. Blanas et al. Design and evaluation of main memory [34] J. Shin. Introducing control flow into vectorized code. In hash join algorithms for multi-core CPUs. In SIGMOD, PACT, pages 280–291, 2007. pages 37–48, 2011. [35] L. Sidirourgos et al. Column imprints: A secondary index [6] P. Boncz et al. MonetDB/X100: Hyper-pipelining query structure. In SIGMOD, pages 893–904, 2013. execution. In CIDR, 2005. [36] E. A. Sitaridi et al. Optimizing select conditions on GPUs. [7] J. Chhugani et al. Efficient implementation of sorting on In DaMoN, 2013. multi-core SIMD CPU architecture. In VLDB, pages [37] M. Stonebraker et al. C-store: A column-oriented DBMS. 1313–1324, 2008. In VLDB, pages 553–564, 2005. [8] J. Cieslewicz et al. Automatic contention detection and [38] J. Wassenberg et al. Engineering a multi core radix sort. In amelioration for data-intensive operations. In SIGMOD, EuroPar, pages 160–169, 2011. pages 483–494, 2010. [39] T. Willhalm et al. SIMD-scan: ultra fast in-memory table [9] D. J. DeWitt et al. Materialization strategies in a scan using on-chip vector processing units. PVLDB, column-oriented DBMS. In ICDE, pages 466–475, 2007. 2(1):385–394, Aug. 2009. [10] J. Hofmann et al. Comparing the performance of different [40] Y. Ye et al. Scalable aggregation on multicore processors. x86 SIMD instruction sets for a medical imaging In DaMoN, 2011. application on modern multi- and manycore chips. CoRR, [41] J. Zhou et al. Implementing database operations using arXiv:1401.7494, 2014. SIMD instructions. In SIGMOD, pages 145–156, 2002. [11] H. Inoue et al. AA-sort: A new parallel sorting algorithm [42] M. Zukowski et al. Architecture-conscious hashing. In for multi-core SIMD processors. In PACT, pages 189–198, DaMoN, 2006. 2007. [12] S. Jha et al. Improving main memory hash joins on Intel Xeon Phi processors: An experimental approach. PVLDB, APPENDIX 8(6):642–653, Feb. 2015. A. NOTATION [13] T. Kaldewey et al. GPU join processing revisited. In DaMoN, 2012. We now explain the notation of the vectorized algorithmic [14] C. Kim et al. Sort vs. hash revisited: fast join descriptions. Boolean vector operations result in boolean implementation on modern multicore CPUs. In VLDB, vector bitmasks that are shown as scalar variables. For ex- pages 1378–1389, 2009. ample, m ← x < y results in the bitmask m. Assignments [15] C. Kim et al. Fast: fast architecture sensitive tree search on such as m ← true, set the W bits of m. Vectors as array in- modern CPUs and GPUs. In SIGMOD, pages 339–350, 2010. dexes denote a gather or a scatter. For example, x ← A[y] is [16] K. Krikellas et al. Generating code for holistic query a vector load, while x ← A[y] is a gather. Selective loads and evaluation. In ICDE, pages 613–624, 2010. stores as well as selective gathers and scatters use a bitmask [17] S. Larsen et al. Exploiting superword level parallelism with as a subscript in the assignment. For example, x ←m A[y] multimedia instruction sets. In PLDI, pages 145–156, 2000. is a selective load, while x ←m A[y] is a selective gather. [18] V. Leis et al. Morsel-driven parallelism: A NUMA-aware |m| is the bit population count of m and |T | is the size of query evaluation framework for the many-core age. In SIGMOD, pages 743–754, 2014. array T . If-then-else statements, such as x ← m ? y : z, use [19] S. Manegold et al. Optimizing database architecture for the vector blending. Finally, scalar values in vector expressions new bottleneck: Memory access. J. VLDB, 9(3):231–246, use vectors generated before the main loop. For example, Dec. 2000. x ← x + k and m ← x > k use a vector with k in all lanes. [20] S. Manegold et al. What happens during a join? dissecting CPU and memory optimization effects. In VLDB, pages B. GATHER & SCATTER 339–350, 2000. [21] S. Manegold et al. Cache-conscious radix-decluster In the latest mainstream CPUs (AVX 2), gathers and scat- projections. In VLDB, pages 684–695, 2004. ters are executed in one instruction. On Xeon Phi, each in- [22] T. Neumann. Efficiently compiling efficient query plans for struction call accesses one cache line and is called repeatedly modern hardware. PVLDB, 4(9):539–550, June 2011. until all W lanes are processed. If N distinct cache lines are [23] R. Pagh et al. Cuckoo hashing. J. Algorithms, accessed, the instructions are issued N times with N ≤ W . 51(2):122–144, May 2004. [24] H. Pirk et al. Accelerating foreign-key joins using An implementation for 16-way gather on Xeon Phi is shown asymmetric memory channels. In ADMS, 2011. below. The rax scalar register holds the array pointer. The [25] O. Polychroniou et al. High throughput heavy hitter 512-bit zmm0 vector holds the indexes and zmm1 vector holds aggregation for modern SIMD processors. In DaMoN, 2013. the loaded values. k1 is a 16-bit boolean vector set by kxnor. [26] O. Polychroniou et al. A comprehensive study of Each call to vpgatherdd finds the leftmost set bit in k1, ex- main-memory partitioning and its application to large-scale tracts the index from zmm0, identifies other lanes pointing to comparison- and radix-sort. In SIGMOD, pages 755–766, 2014. the same cache line, loads the values in zmm1, and resets the [27] O. Polychroniou et al. Vectorized Bloom filters for bits in k1. The jknzd branches back if k1 has more set bits. advanced SIMD processors. In DaMoN, 2014. kxnor %k1, %k1 [28] V. Raman et al. DB2 with BLU acceleration: So much loop: vpgatherdd (%rax, %zmm0, 4), %zmm1 {%k1} more than just a column store. PVLDB, 6(11):1080–1091, jknzd loop, %k1 Aug. 2013. 1505