- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/u231/AVL_Trees?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

AVL Trees

展开查看详情

1 .CSE 373: Data Structures & Algorithms Wrap up Amortized Analysis; A VL Trees Riley Porter Winter 2017 Winter 2017 CSE373: Data Structures & Algorithms

2 .Course Logistics Symposium offered by CSE department today HW2 released, Big-O, Heaps (lecture slides have pseudocode that will help a lot) Weekly summaries out soon 2 CSE373: Data Structures & Algorithms Winter 2017

3 .Review: Amortized Complexity For a Stack implemented with an array can we claim push is O ( 1 ) time if resizing is O ( n ) time? We can’t , but we can claim it’s an O ( 1 ) amortized operation Why is this good? Why do we care? If amortized is good enough for our problem, then it’s great to be able to say O(1) instead of O(n) 3 CSE373: Data Structures & Algorithms Winter 2017

4 .Review: Amortized Complexity Like an Average . N inserts at 1 unit cost + 1 insert at N unit cost = N * 1 + 1 * N 2N overall cost for N + 1 insertions = 2N cost / (N + 1) insertions = O( 2N/(N+1) ) = O(1) amortized cost 4 CSE373: Data Structures & Algorithms Winter 2017

5 .Not-Amortized Complexity What if we only add 3 slots instead of N slots? 3 inserts at 1 unit cost + 1 insert at N unit cost = 3 * 1 + 1 * N = N + 3 overall cost for N + 1 insertions = N + 3 cost / (3 + 1) insertions = O( (N+3)/ (3+ 1) ) = O (N) Not Amortized! Didn’t build up enough “credit” 5 CSE373: Data Structures & Algorithms Winter 2017

6 .Example #1: Resizing stack A stack implemented with an array where we double the size of the array if it becomes full Claim: Any sequence of push / pop / isEmpty is amortized O ( 1 ) Need to show any sequence of M operations takes time O ( M ) Recall the non-resizing work is O ( M ) (i.e., M* O ( 1 )) The resizing work is proportional to the total number of element copies we do for the resizing So it suffices to show that: After M operations, we have done < 2M total element copies (So average number of copies per operation is bounded by a constant) 6 CSE373: Data Structures & Algorithms Winter 2017

7 .Amount of copying After M operations, we have done < 2M total element copies Let n be the size of the array after M operations Then we have done a total of: n/2 + n/4 + n/8 + … INITIAL_SIZE < n element copies Because we must have done at least enough push operations to cause resizing up to size n : M n/2 So 2M n > number of element copies 7 CSE373: Data Structures & Algorithms Winter 2017

8 .Have to be careful If array grows by a constant amount (say 1000), operations are not amortized O ( 1 ) After O ( M ) operations, you may have done ( M 2 ) copies If array doubles when full and shrinks when 1/2 empty, operations are not amortized O ( 1 ) Terrible case : pop once and shrink, push once and grow, pop once and shrink, … If array doubles when full and shrinks when 3/4 empty, it is amortized O ( 1 ) Proof is more complicated, but basic idea remains: by the time an expensive operation occurs, many cheap ones occurred 8 CSE373: Data Structures & Algorithms Winter 2017

9 .Amortized Complexity Summary Like an average Y ou’re building up “credit” with N cheap tasks proportional to 1 expensive N task Sometimes hard to prove, but useful if your application doesn’t require every single operation to be cheap. There’s another example on slides from Wednesday involving Queues, if you’re curious 9 CSE373: Data Structures & Algorithms Winter 2017

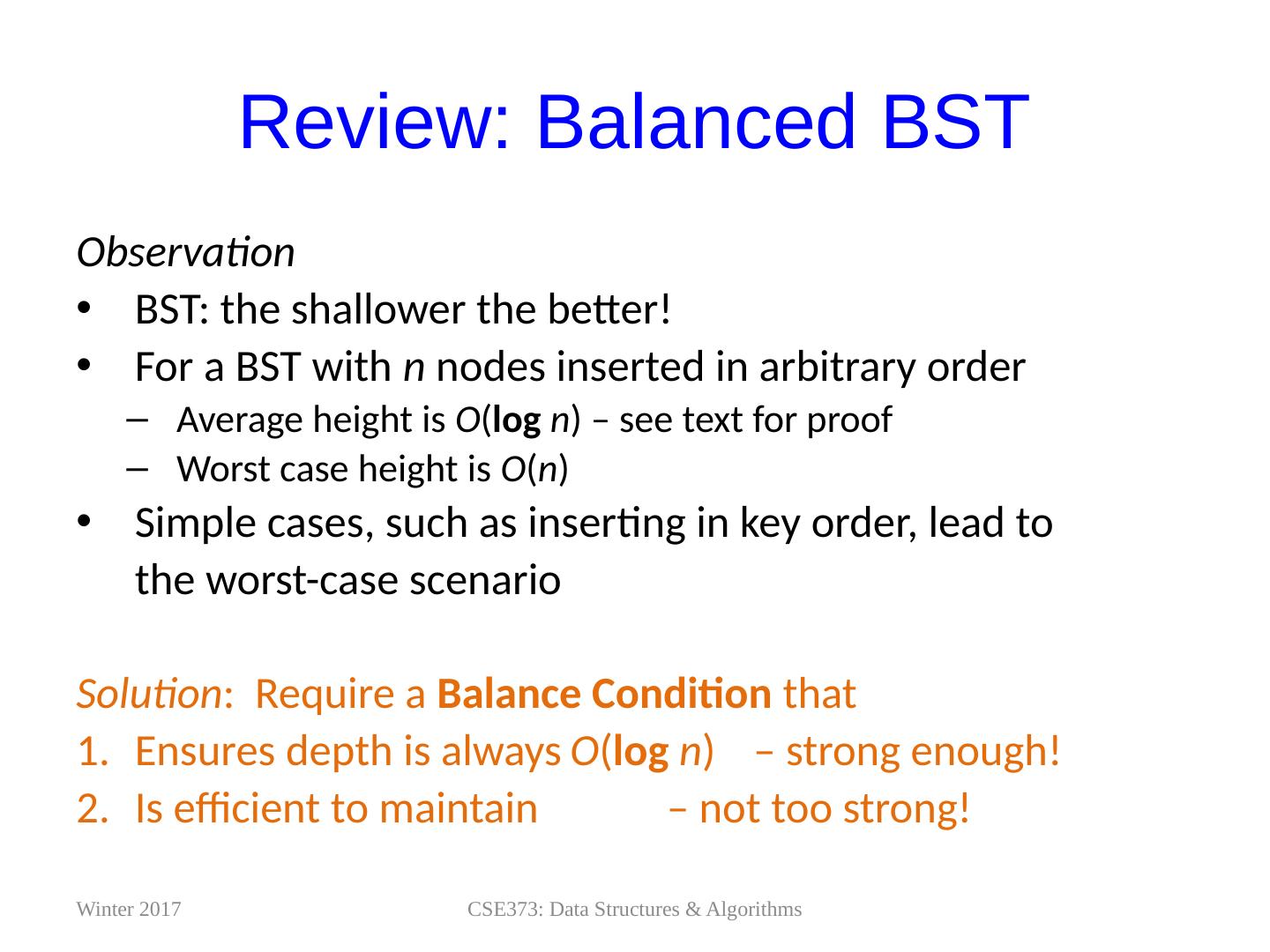

10 .Review: Balanced BST Observation BST: the shallower the better! For a BST with n nodes inserted in arbitrary order Average height is O ( log n ) – see text for proof Worst case height is O ( n ) Simple cases, such as inserting in key order, lead to the worst-case scenario Solution : Require a Balance Condition that E nsures depth is always O ( log n ) – strong enough! I s efficient to maintain – not too strong! CSE373: Data Structures & Algorithms Winter 2017

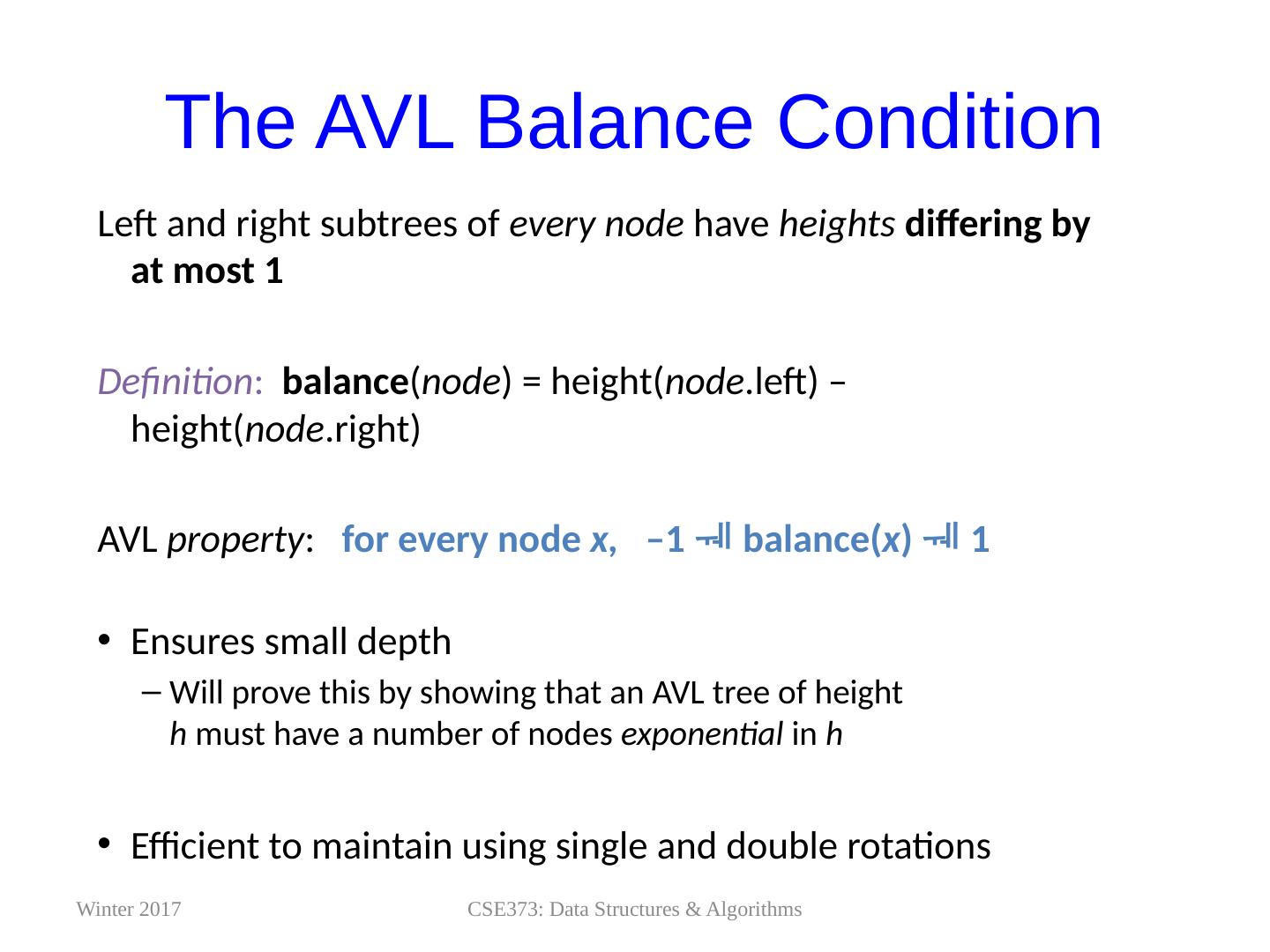

11 .The AVL Balance Condition Left and right subtrees of every node have heights differing by at most 1 Definition : balance ( node ) = height( node .left ) – height( node .right ) AVL property : for every node x, –1 balance( x ) 1 Ensures small depth Will prove this by showing that an AVL tree of height h must have a number of nodes exponential in h Efficient to maintain using single and double rotations Adelson-Velskii and Landis CSE373: Data Structures & Algorithms Winter 2017

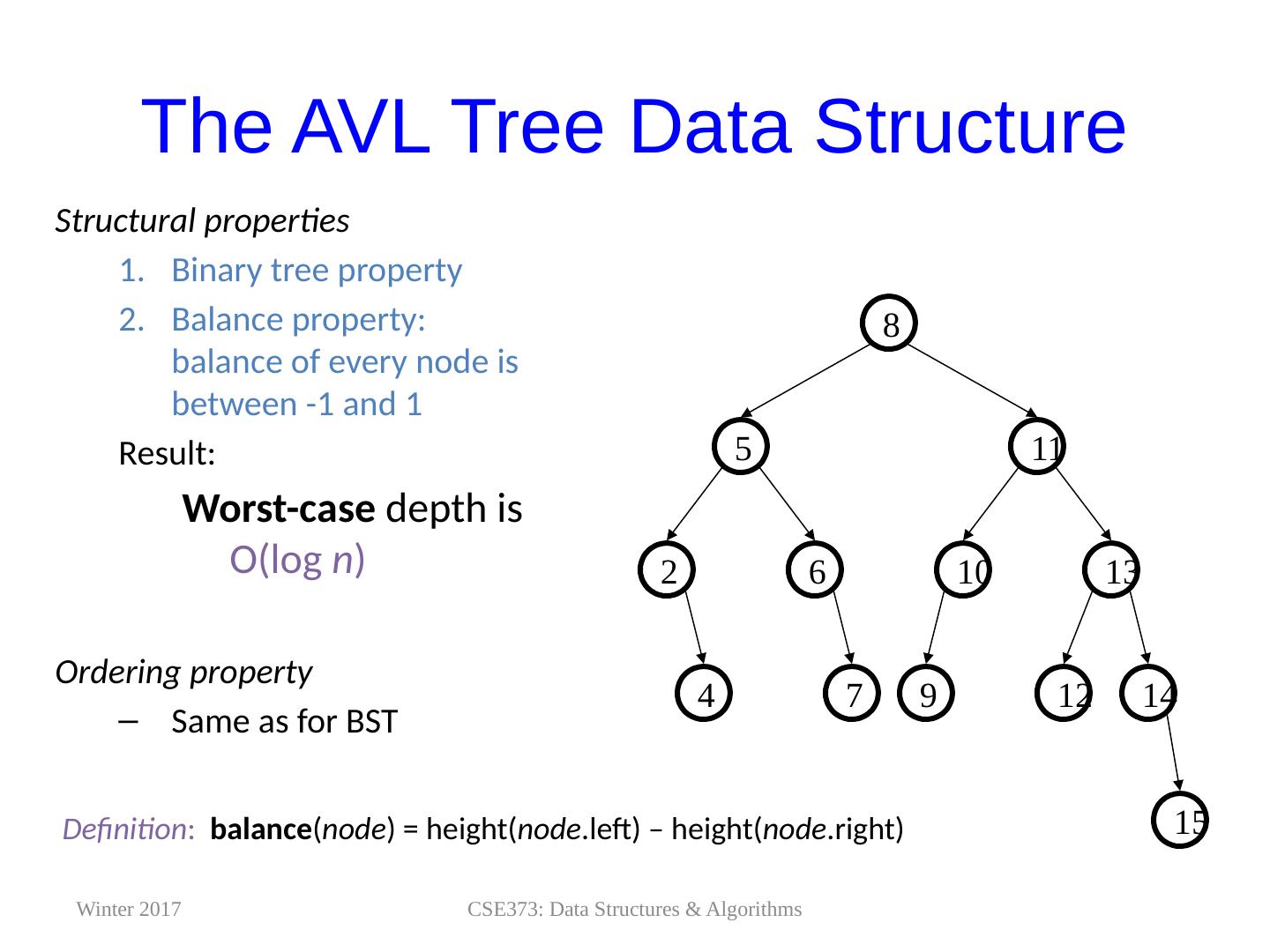

12 .The AVL Tree Data Structure 4 13 10 6 2 11 5 8 14 12 7 9 Structural properties Binary tree property Balance property: balance of every node is between -1 and 1 Result: Worst-case depth is O(log n ) Ordering property Same as for BST 15 CSE373: Data Structures & Algorithms Definition : balance ( node ) = height( node .left ) – height( node .right ) Winter 2017

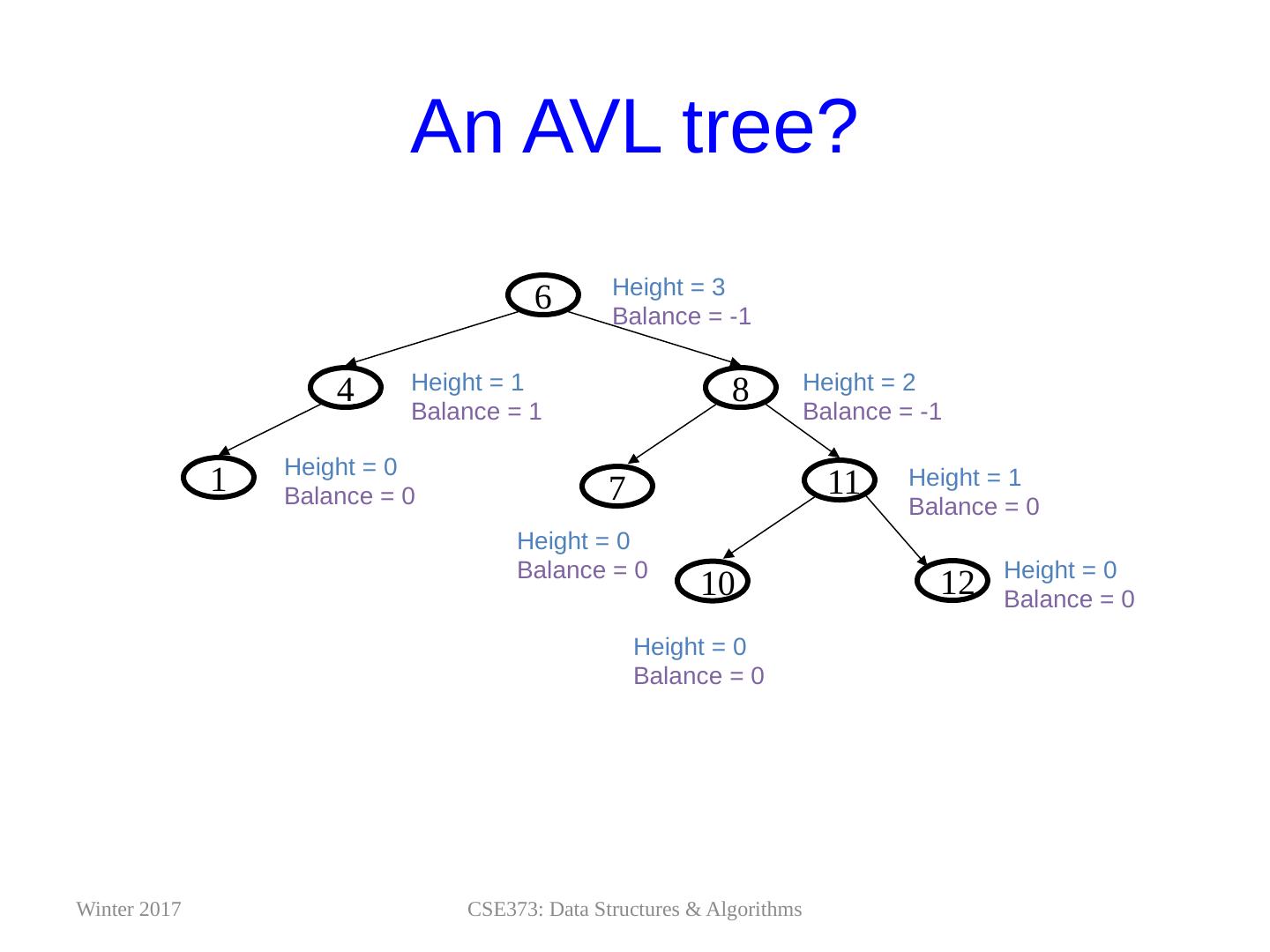

13 .11 1 8 4 6 10 12 7 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 1 Balance = 1 Height = 1 Balance = 0 Height = 2 Balance = -1 Height = 3 Balance = -1 An AVL tree? CSE373: Data Structures & Algorithms Winter 2017

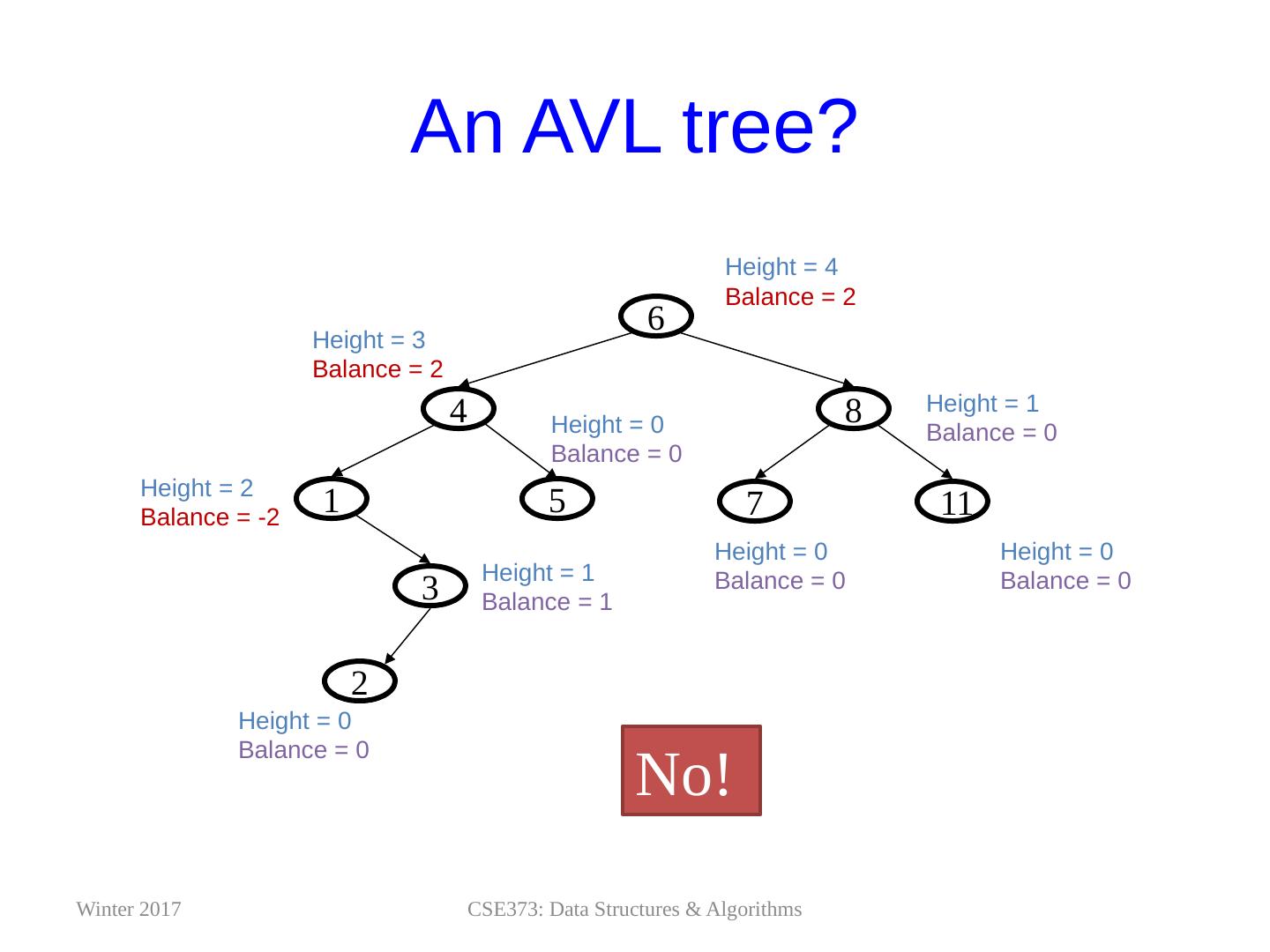

14 .3 11 7 1 8 4 6 2 5 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 0 Balance = 0 Height = 1 Balance = 1 Height = 1 Balance = 0 Height = 2 Balance = -2 Height = 3 Balance = 2 Height = 4 Balance = 2 An AVL tree? CSE373: Data Structures & Algorithms No! Winter 2017

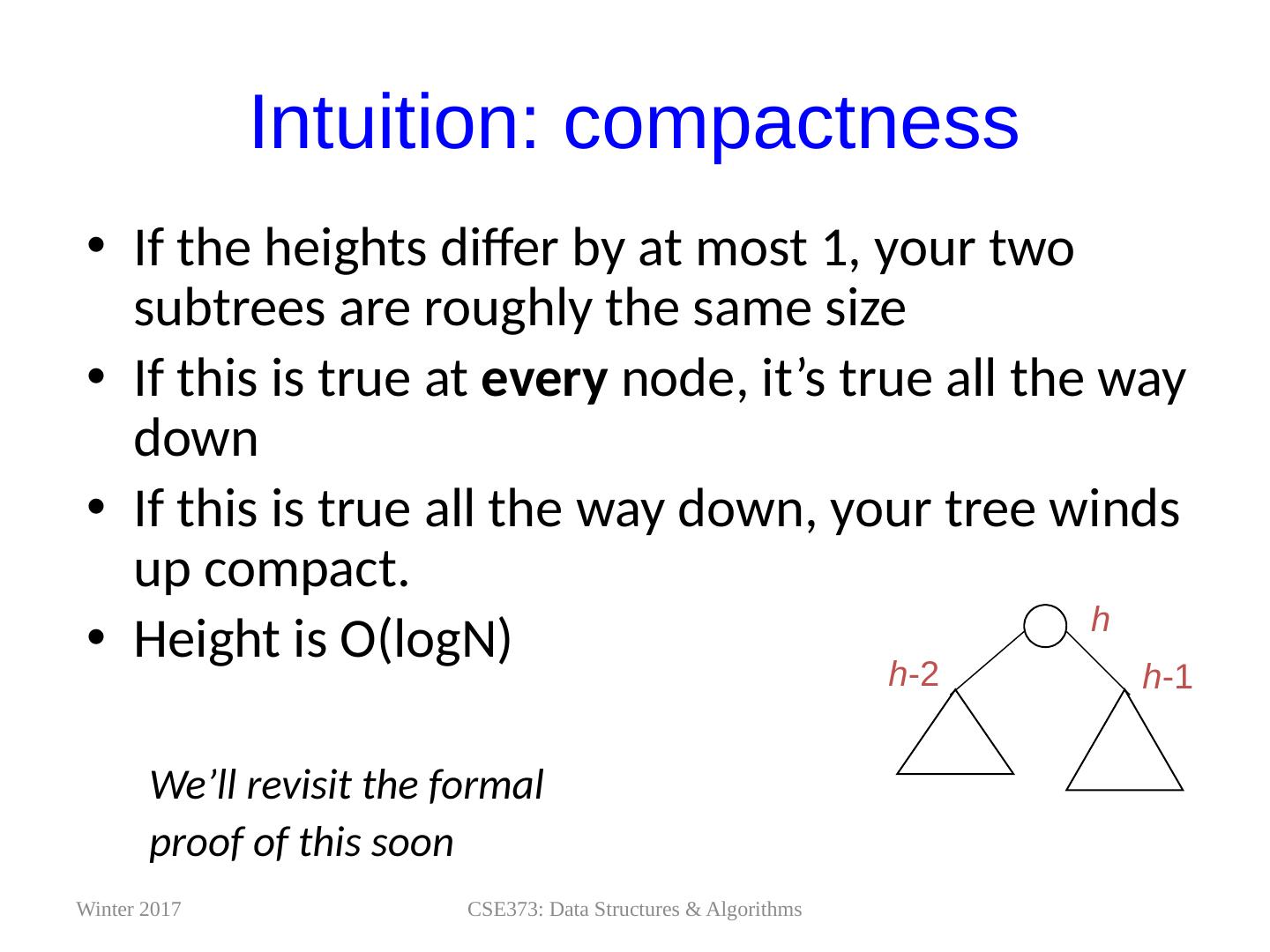

15 .Intuition: compactness If the heights differ by at most 1, your two subtrees are roughly the same size If this is true at every node, it’s true all the way down If this is true all the way down, your tree winds up compact. Height is O( logN ) We’ll revisit the formal proof of this soon h -1 h -2 h Proving O(log n) depth bound = m (h-1) + m (h-2) + 1 CSE373: Data Structures & Algorithms Winter 2017

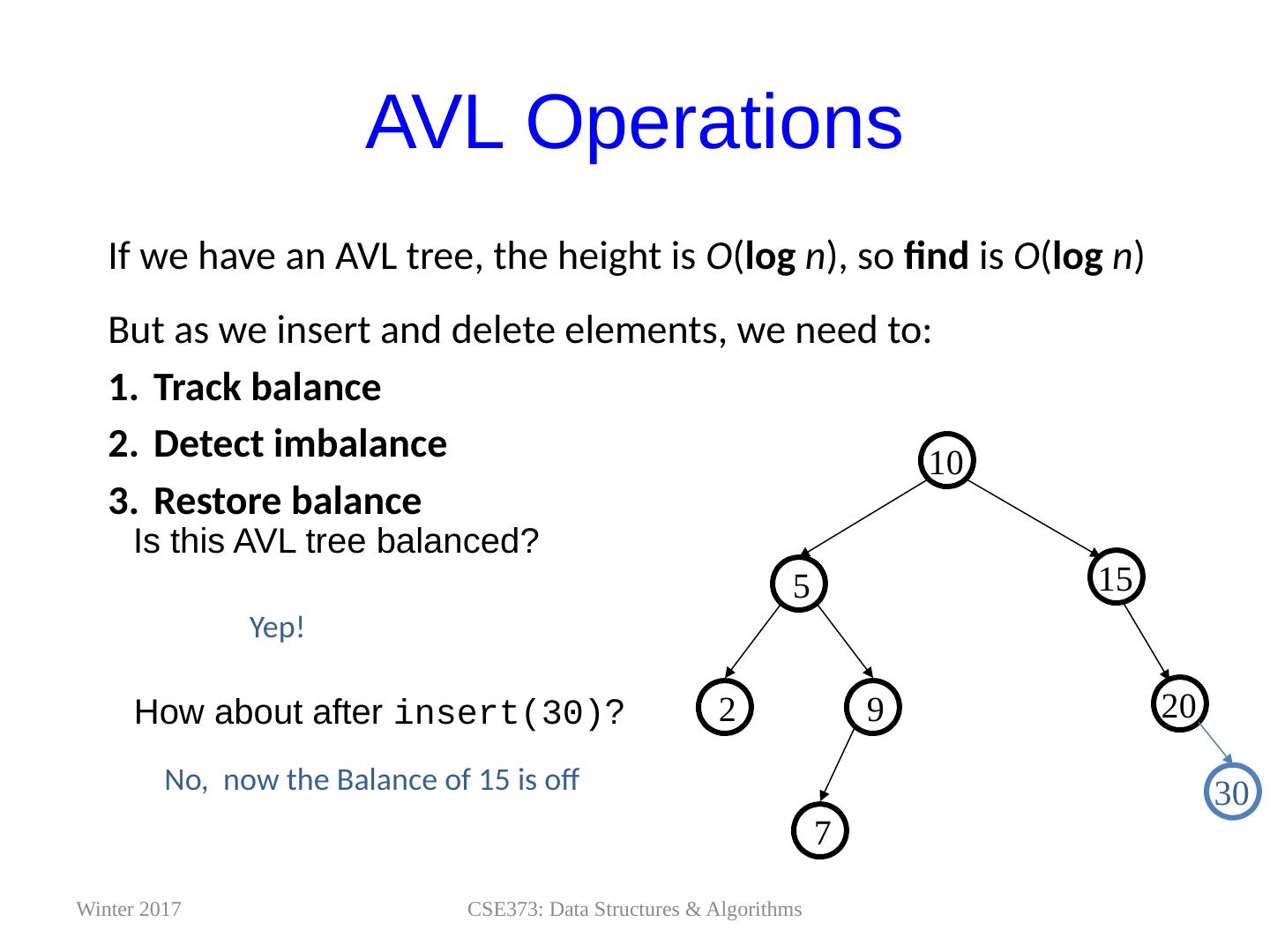

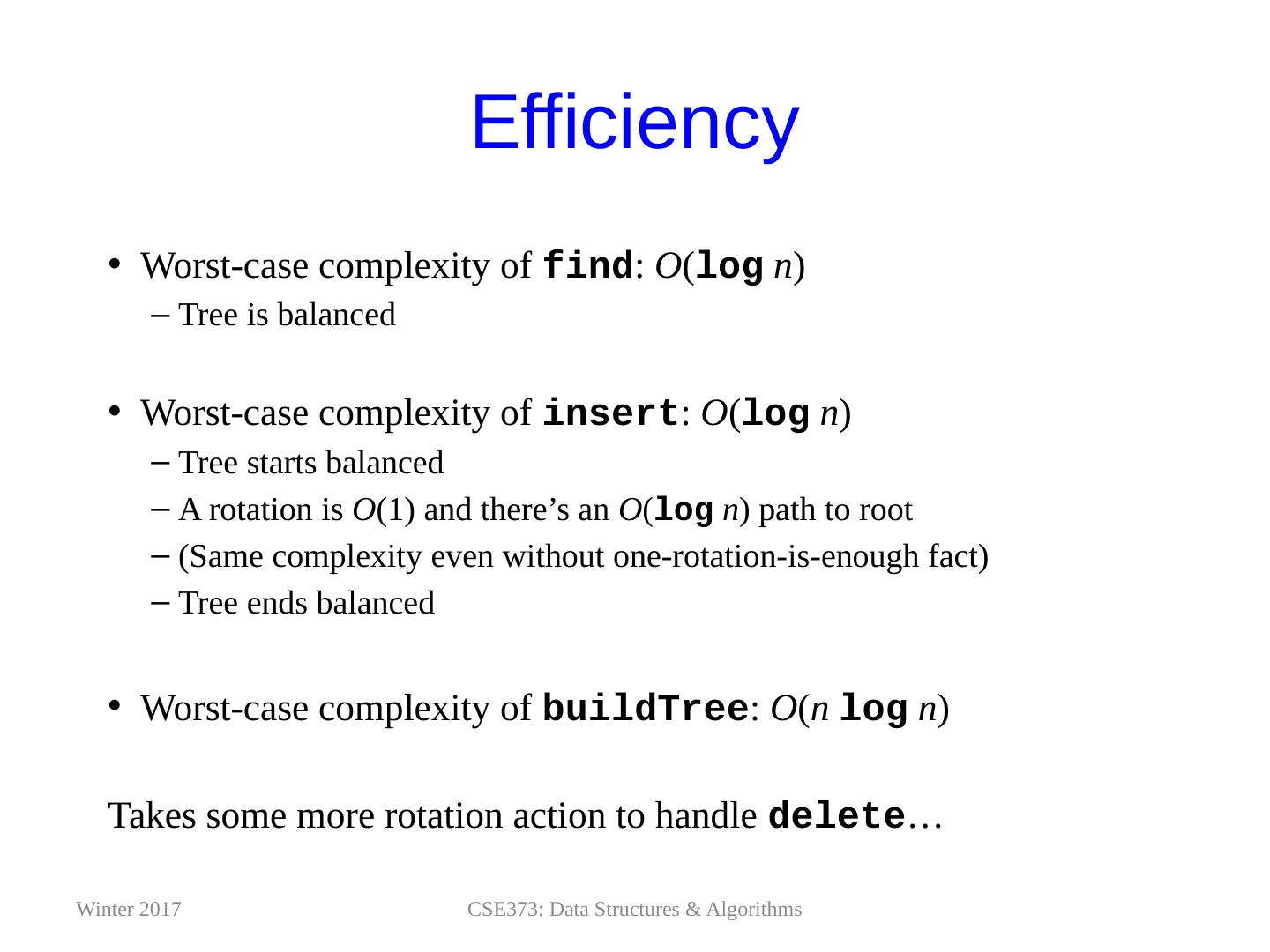

16 .AVL Operations If we have an AVL tree, the height is O ( log n ), so find is O ( log n ) But as we insert and delete elements, we need to: Track balance Detect imbalance Restore balance CSE373: Data Structures & Algorithms 9 2 5 10 7 Is this AVL tree balanced ? 15 20 Winter 2017 How about after insert(30) ? Yep! No, now the Balance of 15 is off 3 0

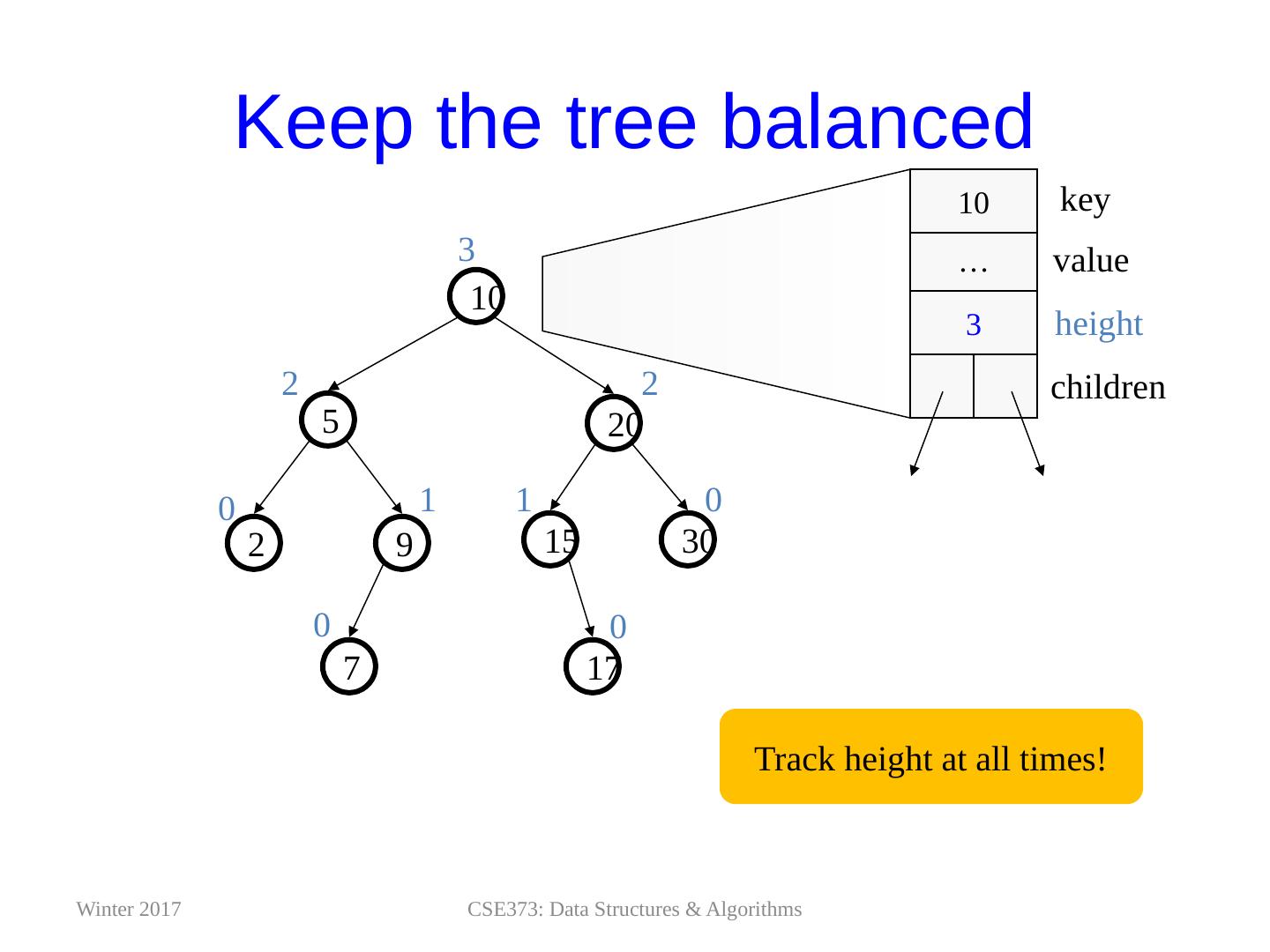

17 .Keep the tree balanced 20 9 2 15 5 10 30 17 7 0 0 0 0 1 1 2 2 3 … 3 value height children Track height at all times! 10 key CSE373: Data Structures & Algorithms Winter 2017

18 .AVL tree Operations AVL find : Same as BST find AVL insert : First BST insert , then check balance and potentially “ fix” the AVL tree Four different imbalance cases AVL delete : The “easy way” is lazy deletion Otherwise, do the deletion and then have several imbalance cases CSE373: Data Structures & Algorithms Winter 2017

19 .Insert: detect potential imbalance Insert the new node as in a BST (a new leaf) For each node on the path from the root to the new leaf, the insertion may (or may not) have changed the node’s height So after recursive insertion in a subtree , detect height imbalance and perform a rotation to restore balance at that node Type of rotation will depend on the location of the imbalance (if any) Facts about insert imbalances: If there’s an imbalance, there must be a deepest element that is imbalanced after the insert After rebalancing this deepest node, every node is balanced So at most one node needs to be rebalanced CSE373: Data Structures & Algorithms Winter 2017

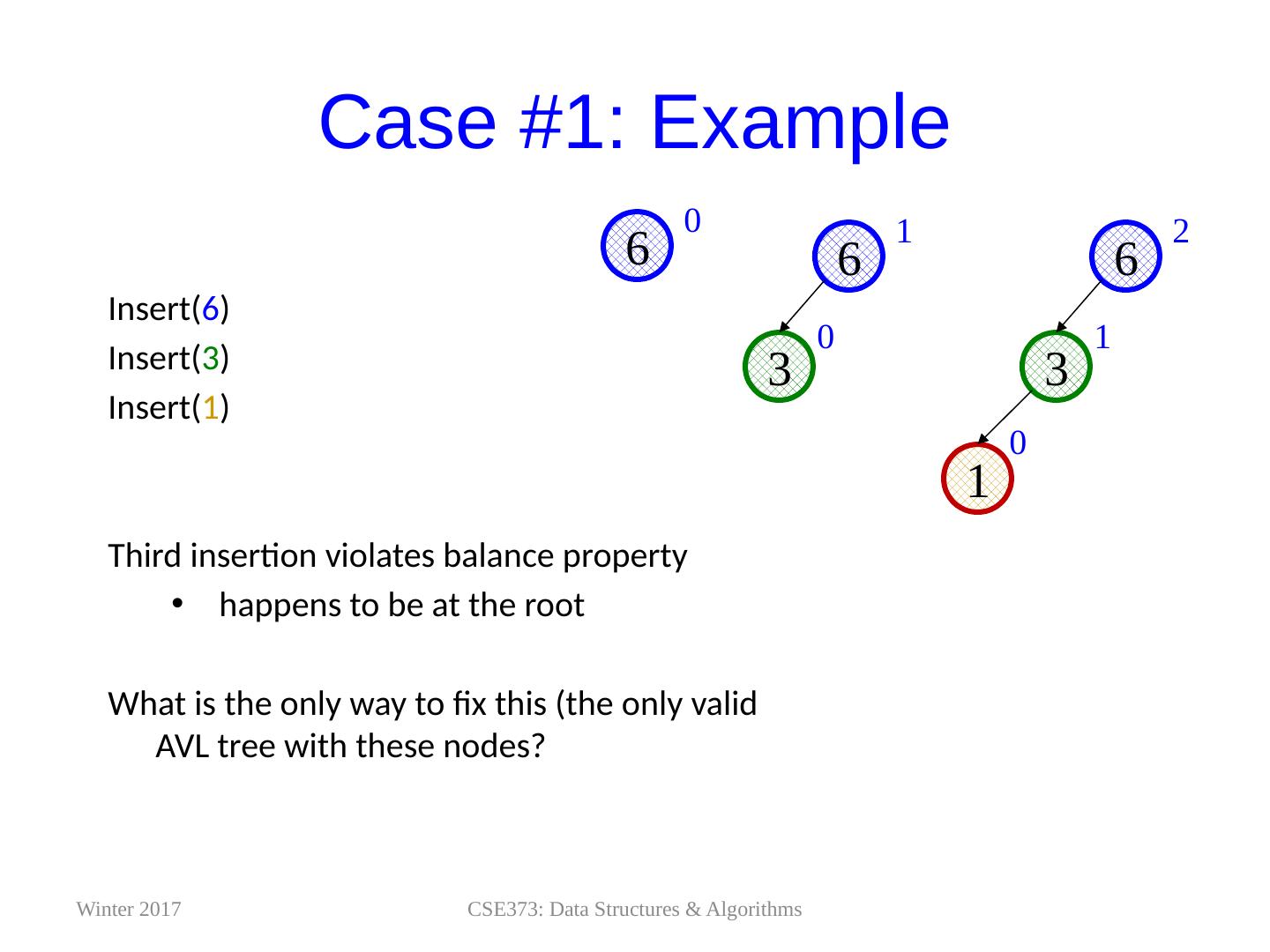

20 .Case #1: Example CSE373: Data Structures & Algorithms Insert( 6 ) Insert( 3 ) Insert( 1 ) Third insertion violates balance property happens to be at the root What is the only way to fix this (the only valid AVL tree with these nodes? 6 3 1 2 1 0 6 3 1 0 6 0 Winter 2017

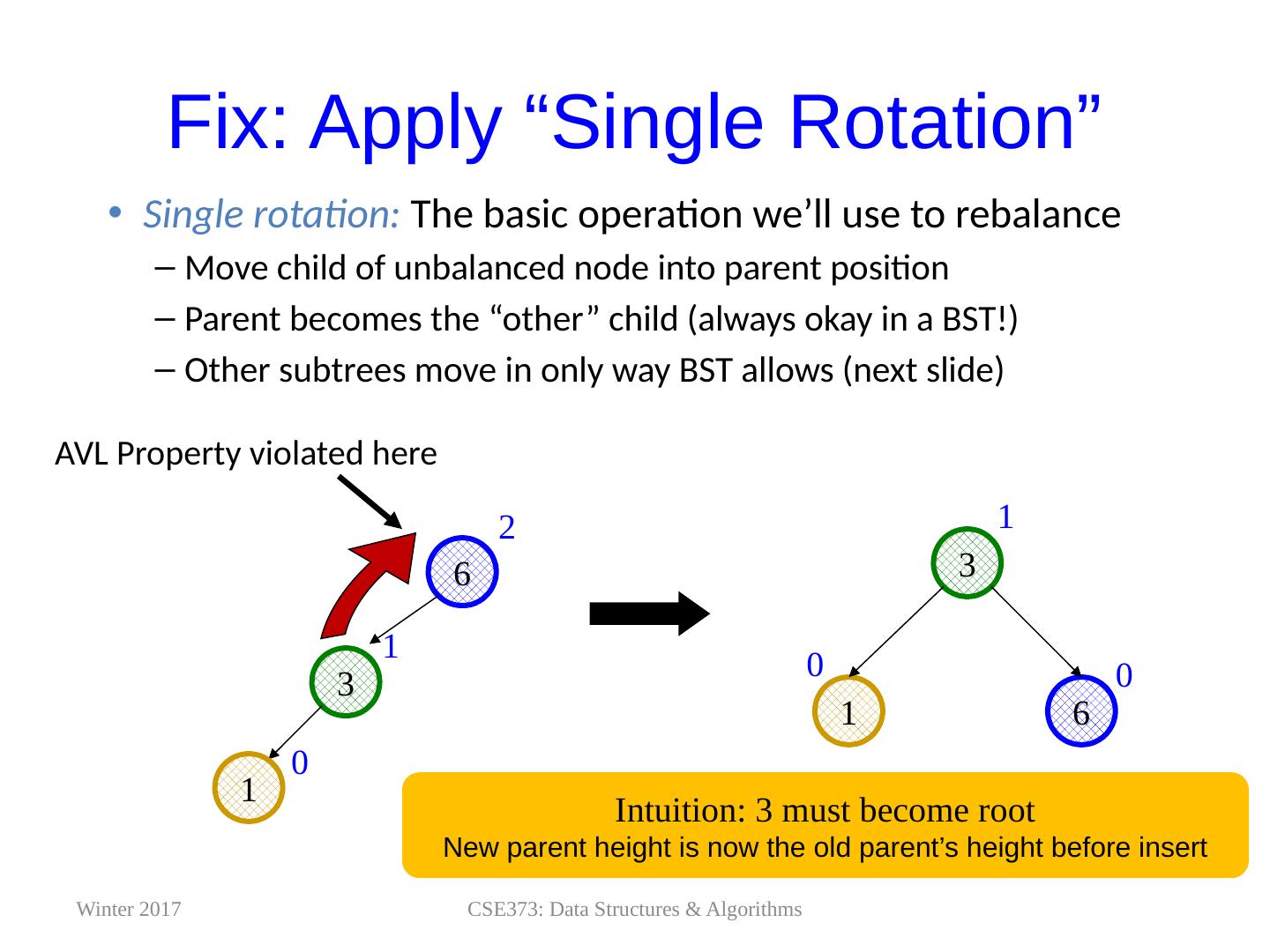

21 .Fix: Apply “Single Rotation” Single rotation: The basic operation we’ll use to rebalance Move child of unbalanced node into parent position Parent becomes the “other” child (always okay in a BST!) Other subtrees move in only way BST allows (next slide) CSE373: Data Structures & Algorithms 3 1 6 0 0 1 6 3 0 1 2 AVL Property violated here Intuition : 3 must become root New parent height is now the old parent’s height before insert 1 Winter 2017

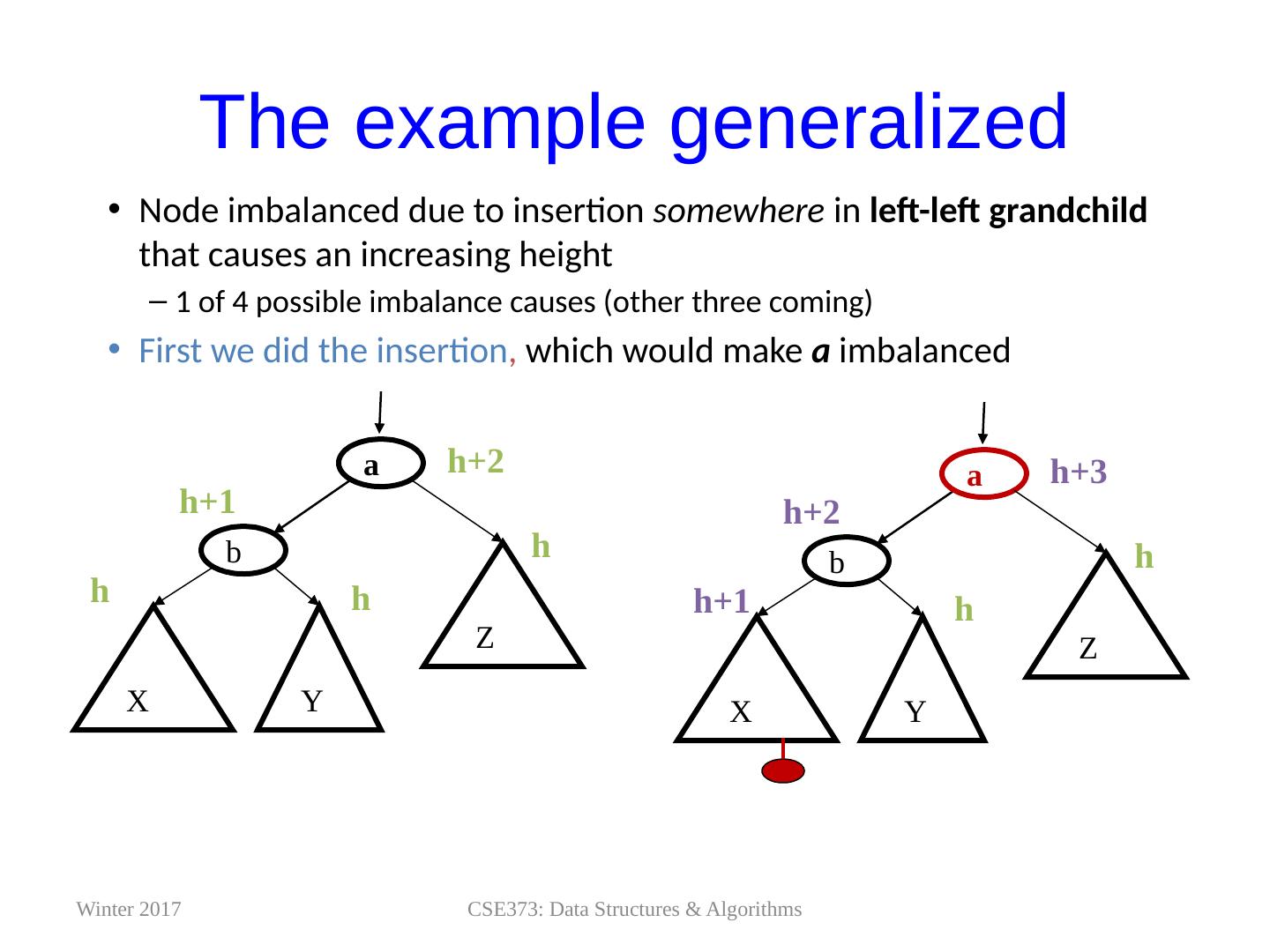

22 .The example generalized Node imbalanced due to insertion somewhere in left-left grandchild that causes an increasing height 1 of 4 possible imbalance causes (other three coming) First we did the insertion , which would make a imbalanced CSE373: Data Structures & Algorithms a Z Y b X h h h h+1 h+2 a Z Y b X h+1 h h h+2 h+3 Winter 2017

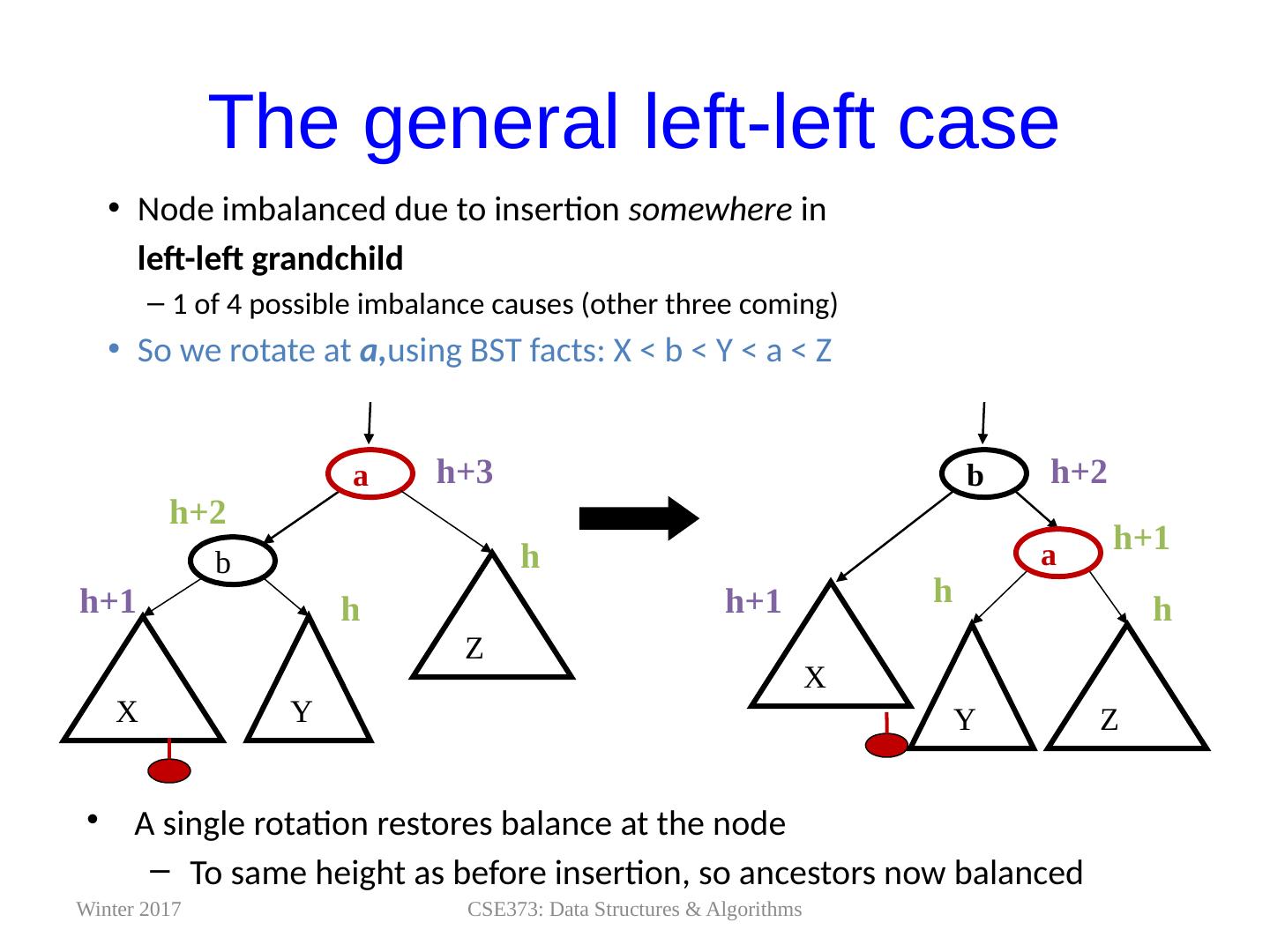

23 .The general left-left case Node imbalanced due to insertion somewhere in left-left grandchild 1 of 4 possible imbalance causes (other three coming) So we rotate at a, using BST facts: X < b < Y < a < Z CSE373: Data Structures & Algorithms A single rotation restores balance at the node To same height as before insertion, so ancestors now balanced a Z Y b X h+1 h h h+2 h+3 b Z Y h+1 h h h+1 h+2 X a Winter 2017

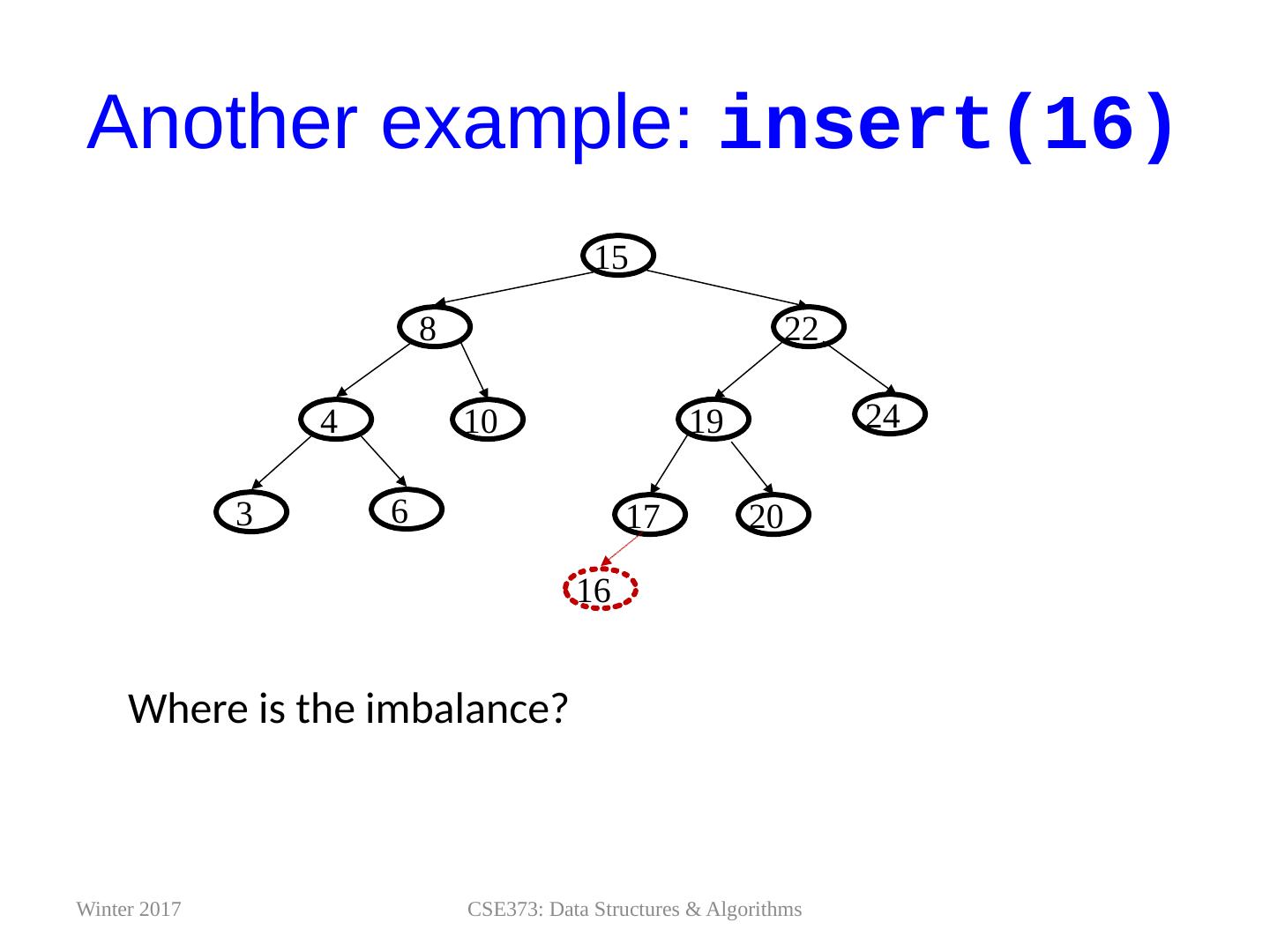

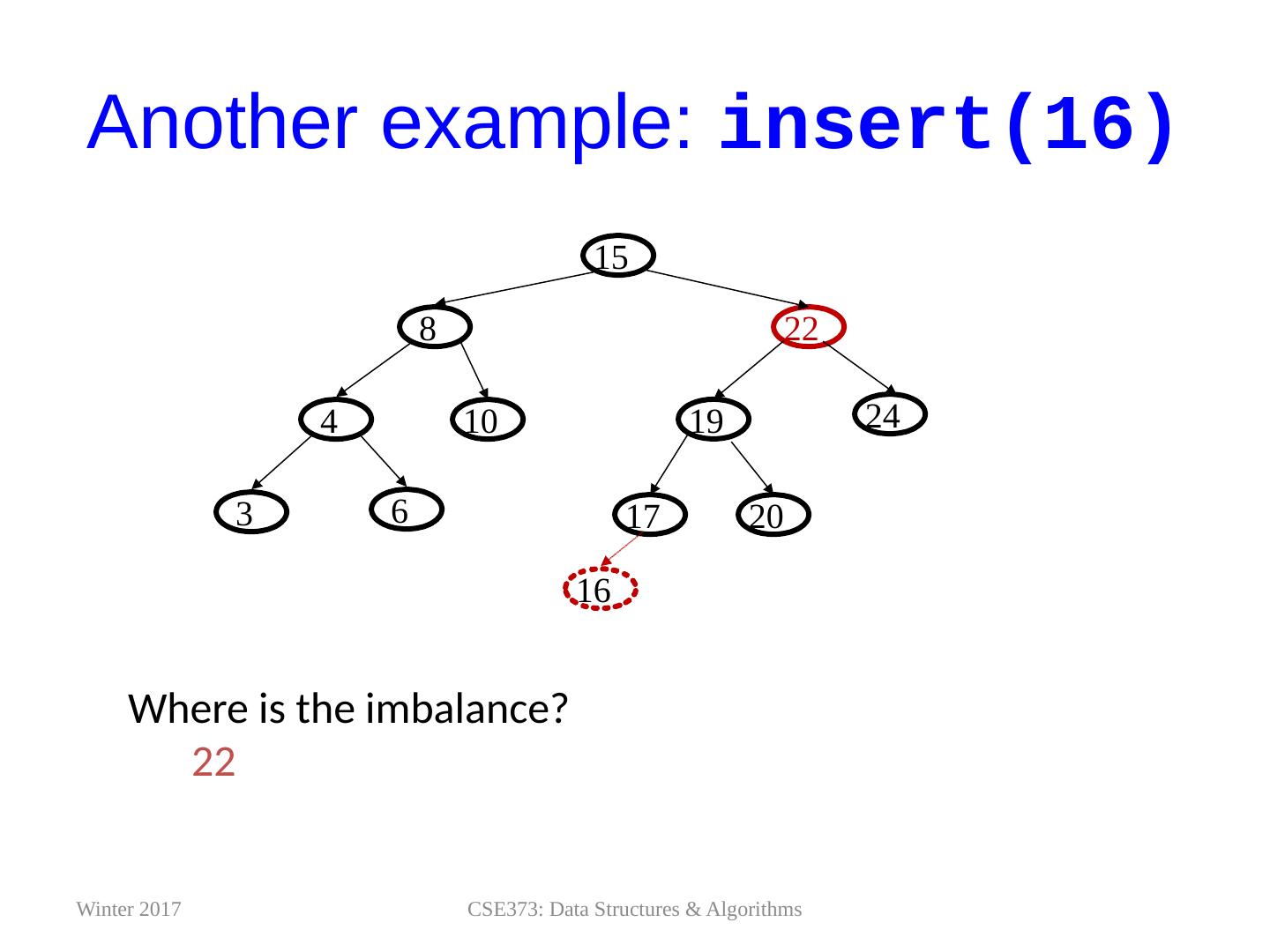

24 .Another example: insert(16) CSE373: Data Structures & Algorithms 10 4 22 8 15 3 6 19 17 20 24 16 Winter 2017 Where is the imbalance?

25 .Another example: insert(16) CSE373: Data Structures & Algorithms 10 4 22 8 15 3 6 19 17 20 24 16 Winter 2017 Where is the imbalance? 22

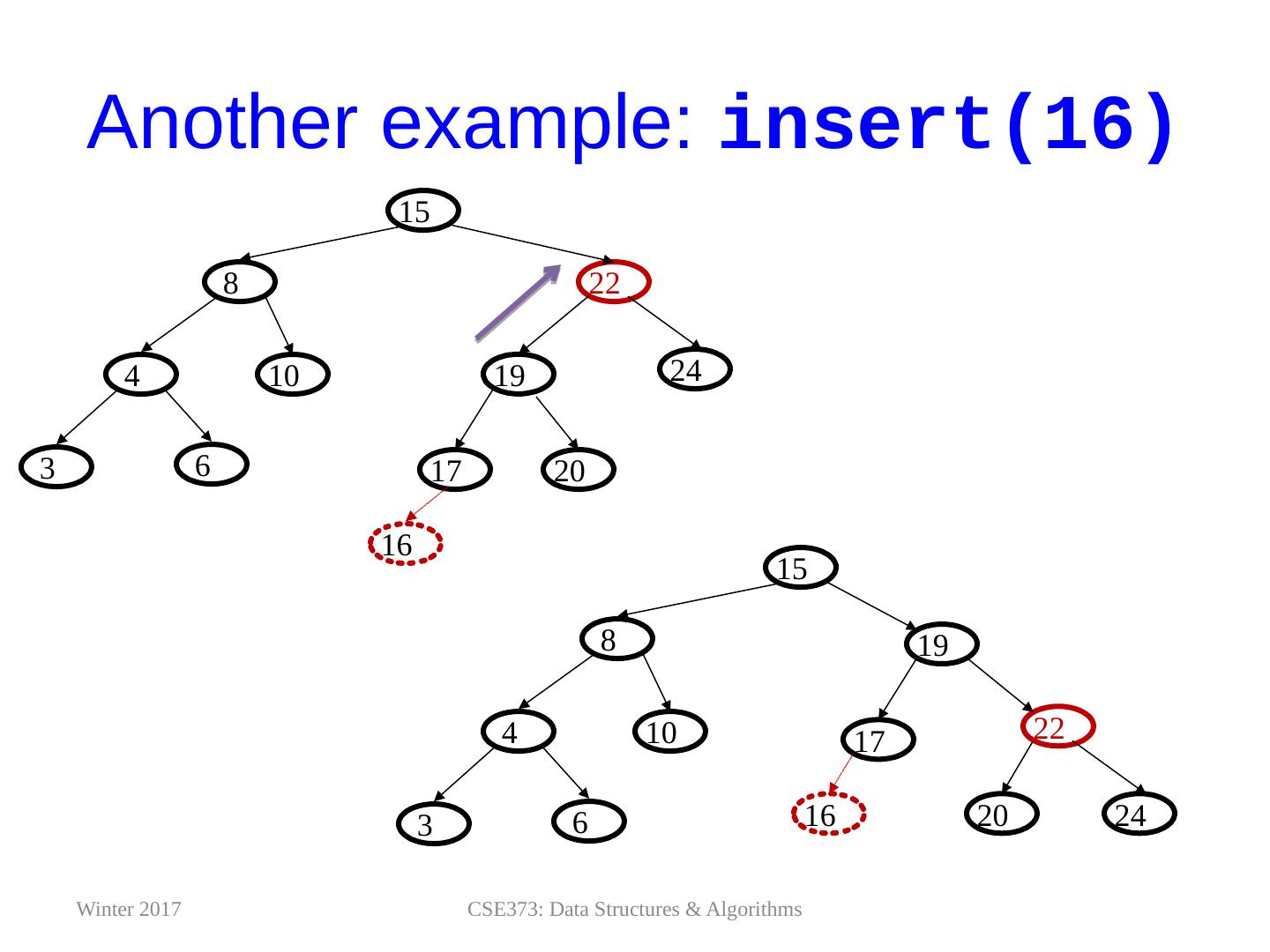

26 .Another example: insert(16) CSE373: Data Structures & Algorithms 10 4 22 8 15 3 6 19 17 20 24 16 10 4 8 15 3 6 19 17 20 16 22 24 Winter 2017

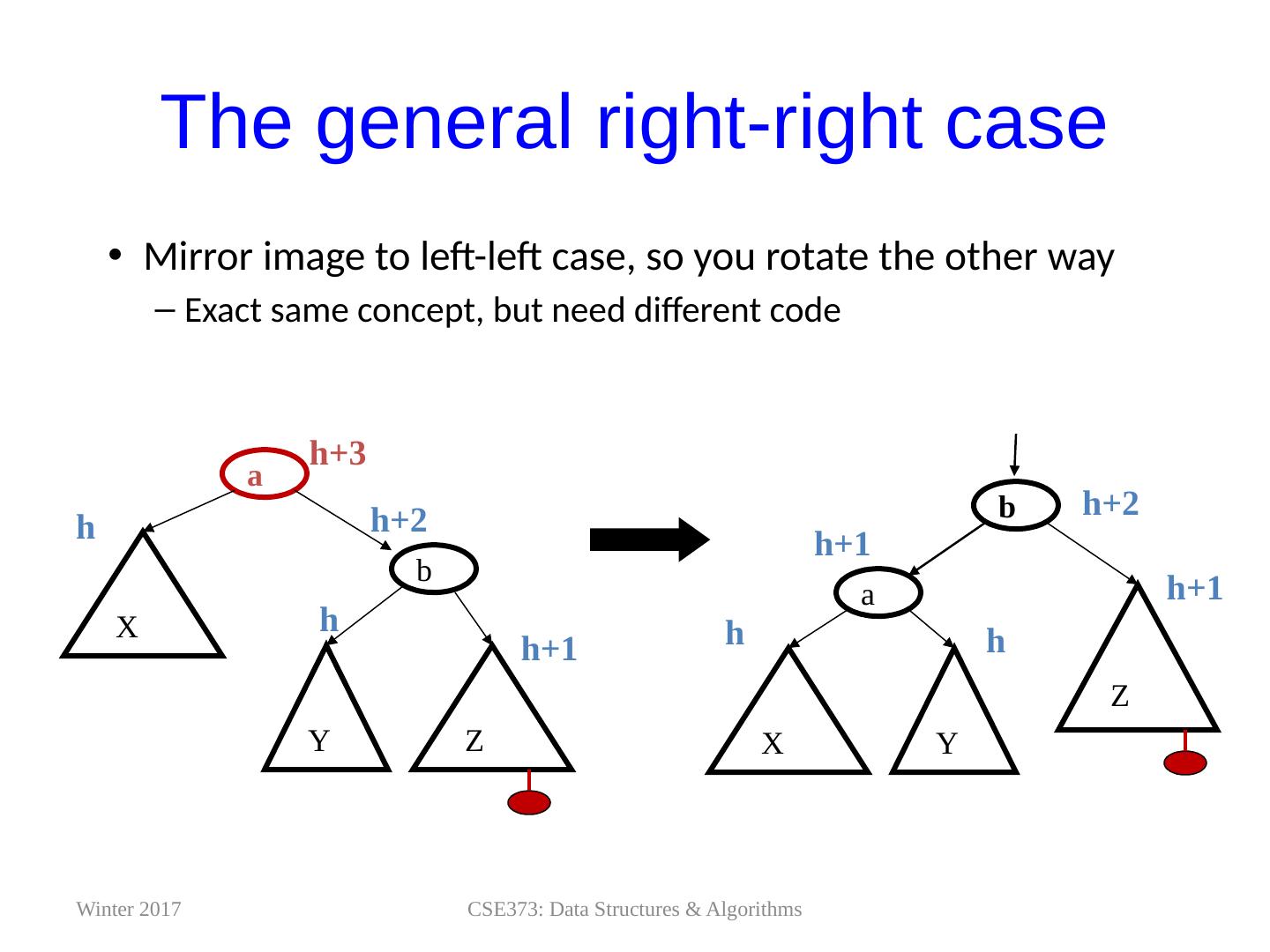

27 .The general right-right case Mirror image to left-left case, so you rotate the other way Exact same concept, but need different code CSE373: Data Structures & Algorithms a Z Y X h h h+1 h+3 b h+2 b Z Y a X h h h+1 h+1 h+2 Winter 2017

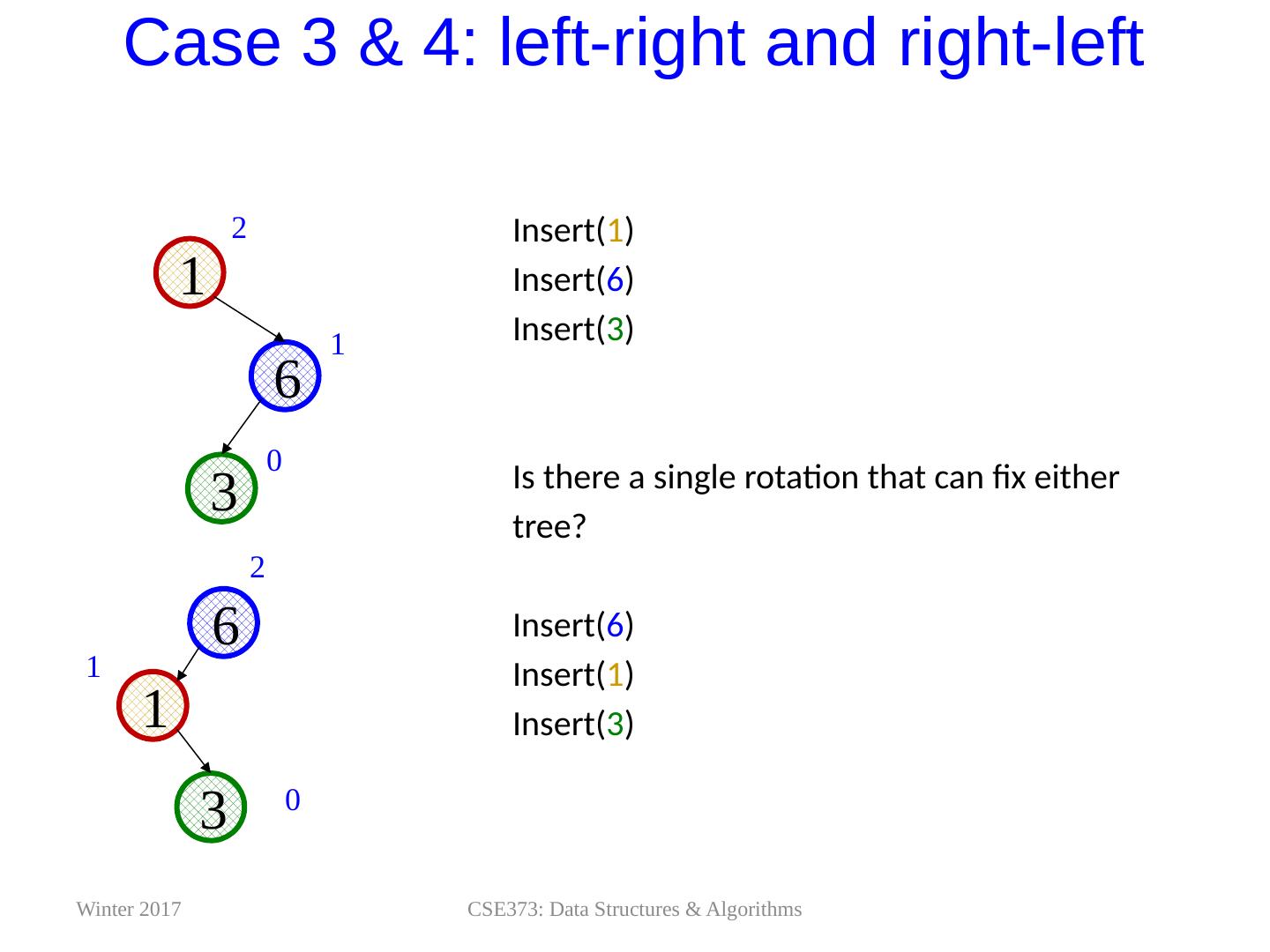

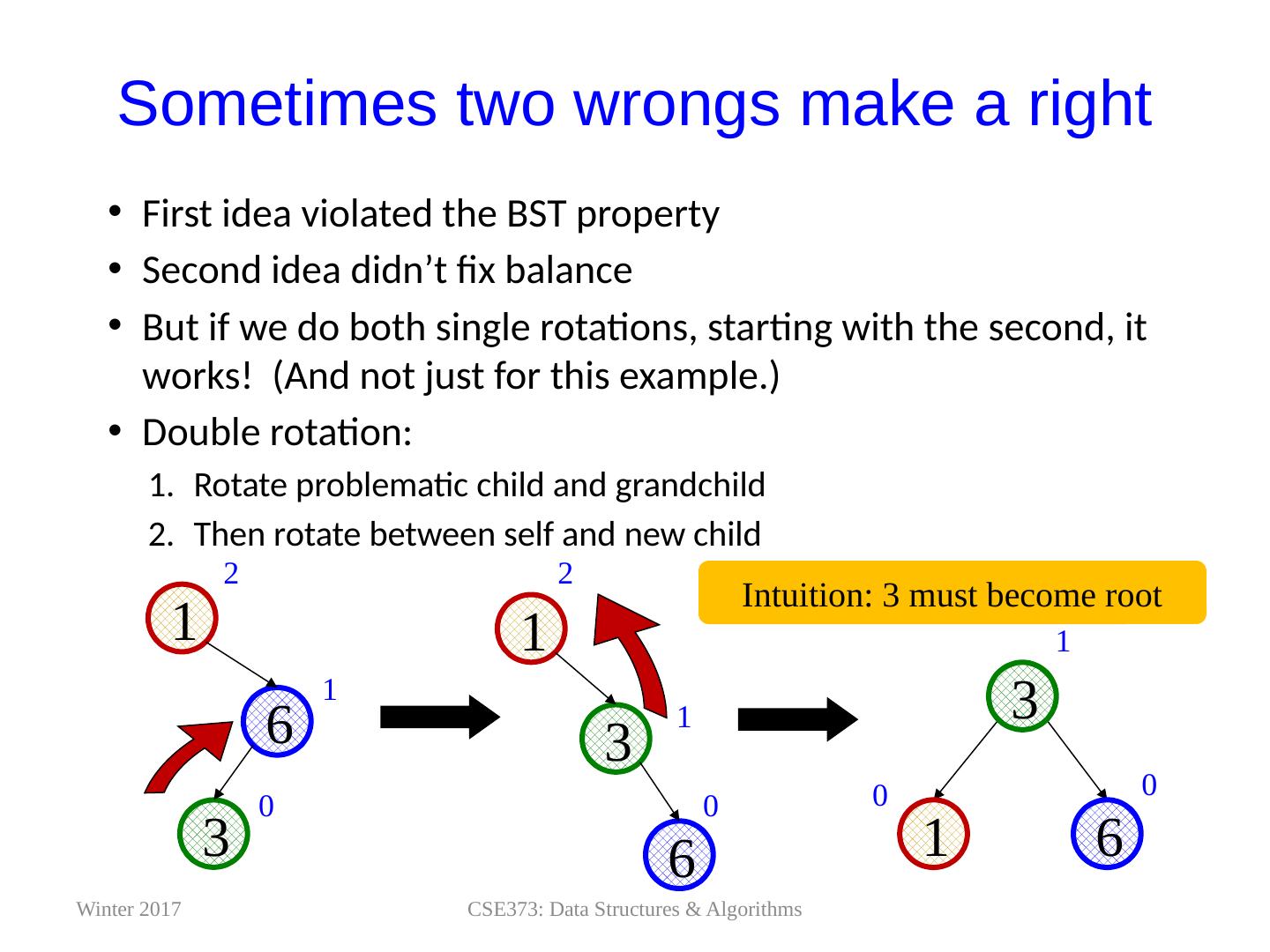

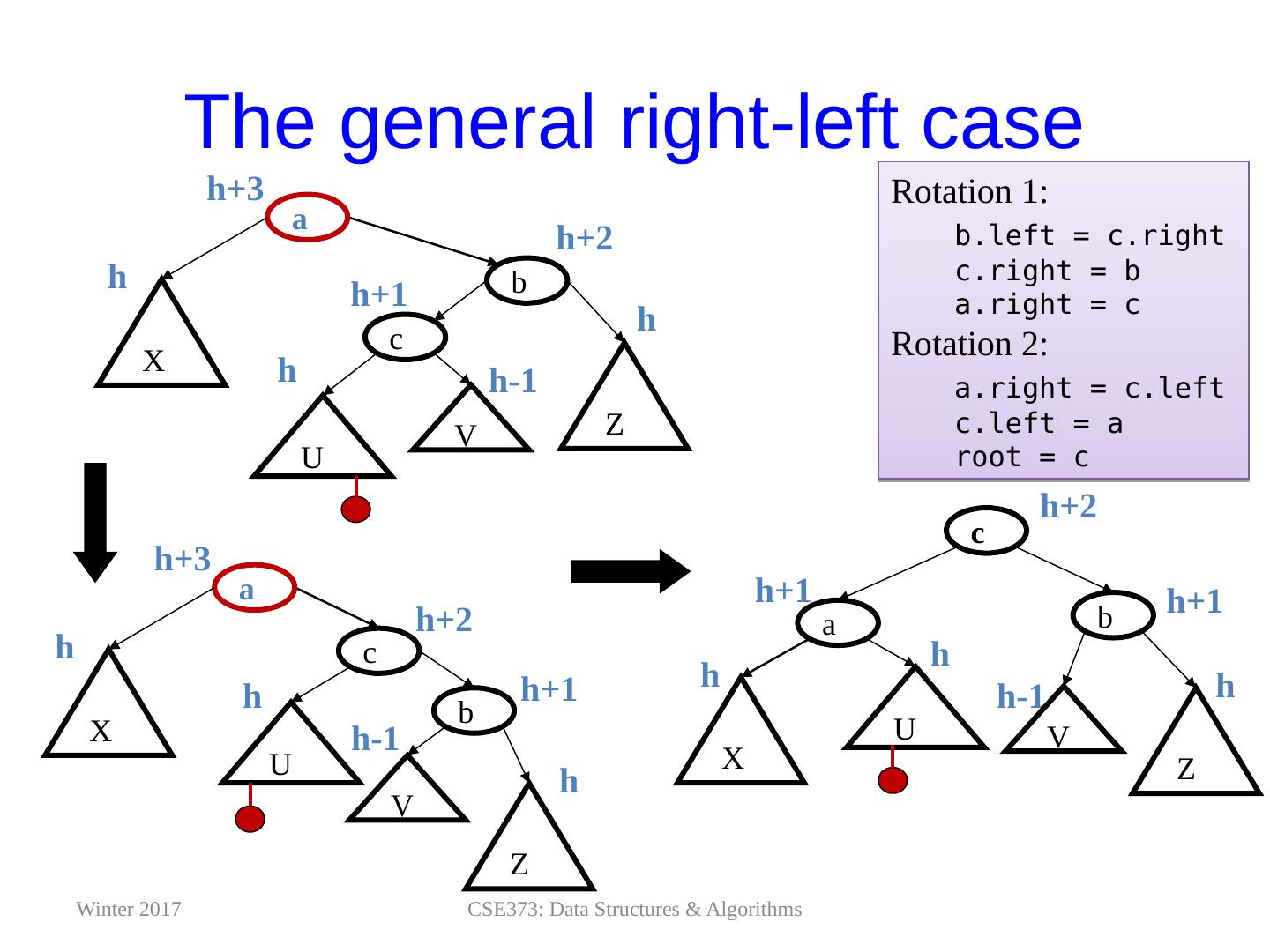

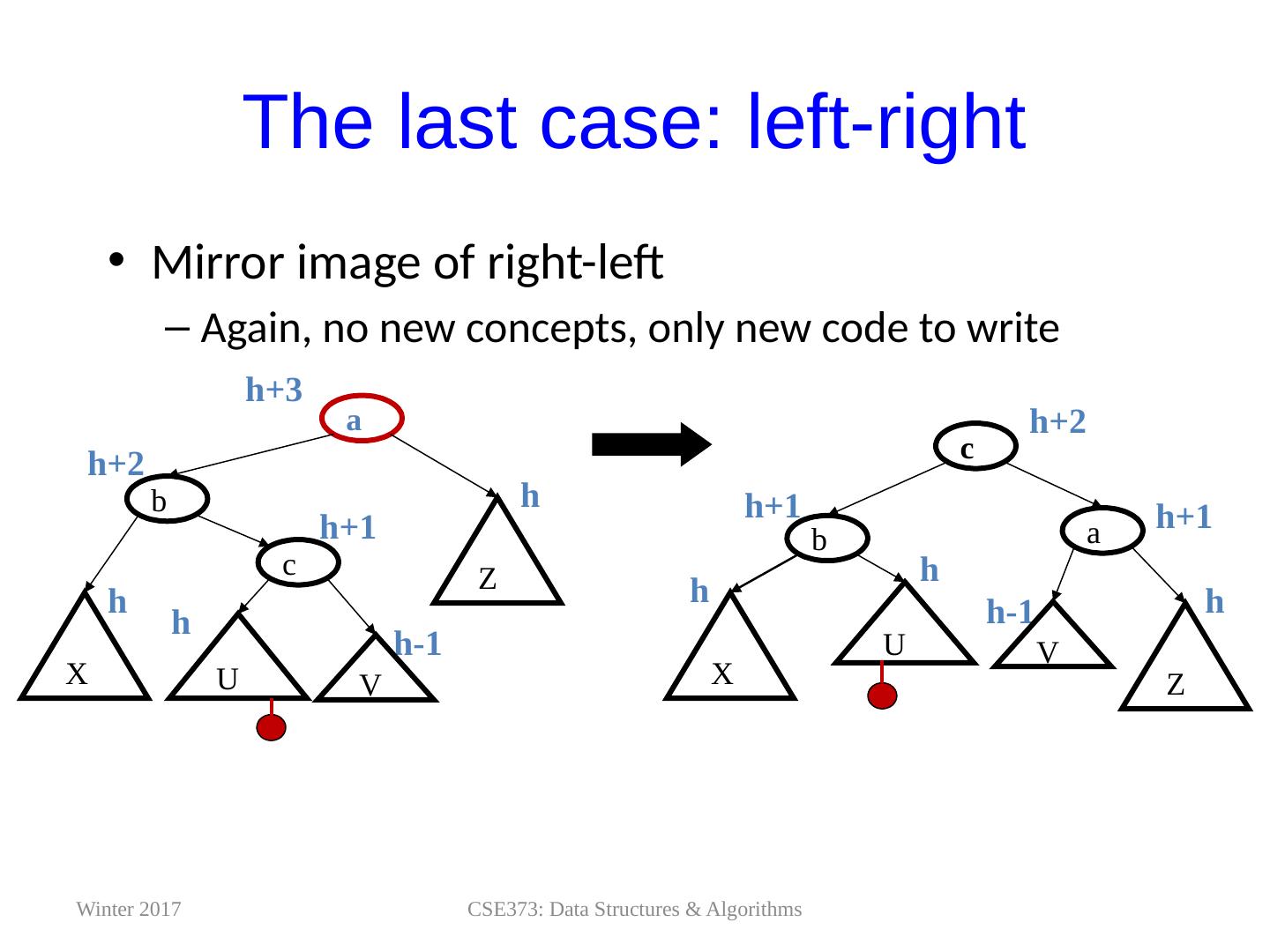

28 .Case 3 & 4: left-right and right-left CSE373: Data Structures & Algorithms Insert( 1 ) Insert( 6 ) Insert( 3 ) Is there a single rotation that can fix either tree? Insert ( 6 ) Insert( 1 ) Insert( 3 ) Winter 2017 3 6 1 0 1 2 3 6 1 0 1 2

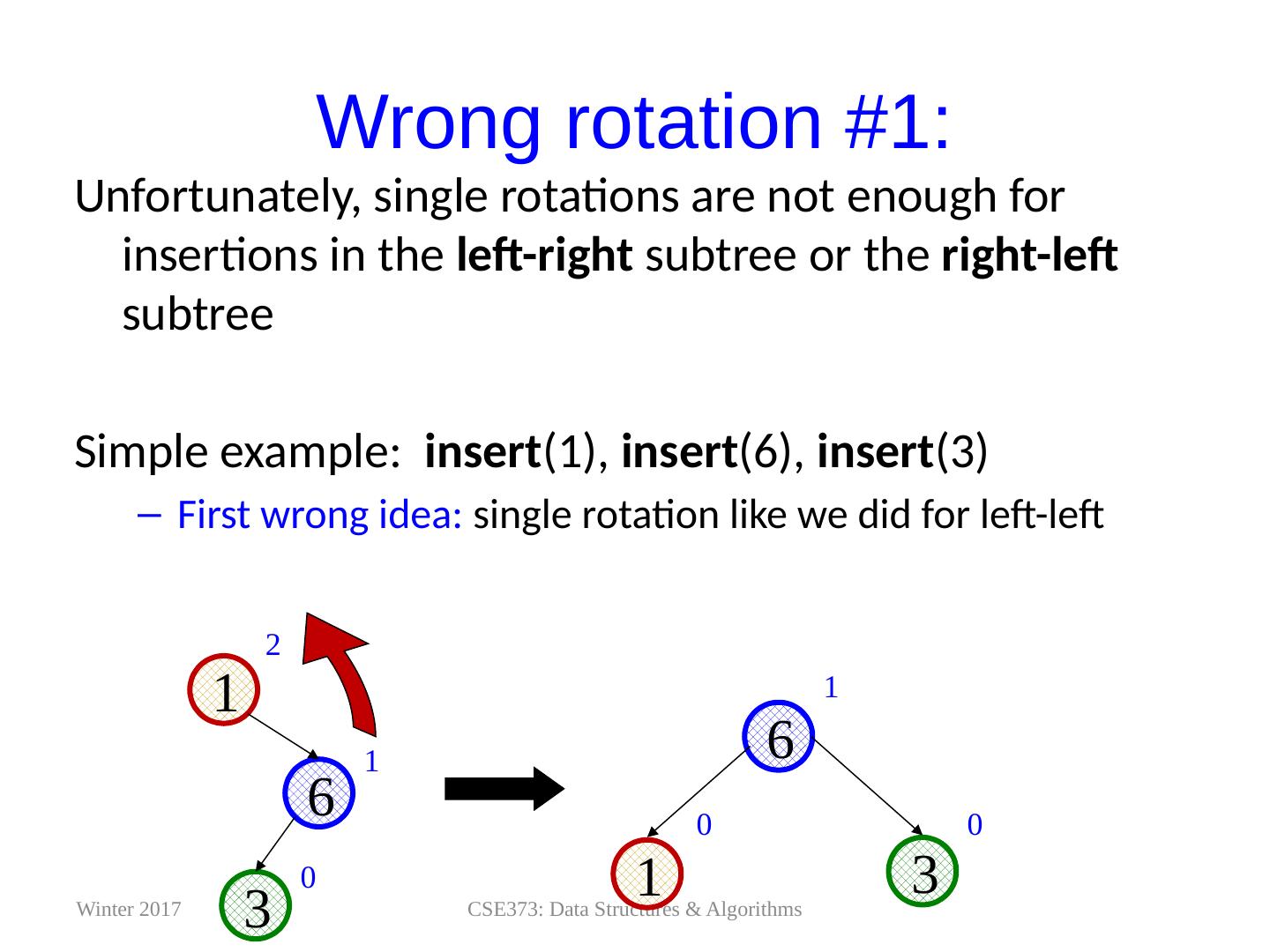

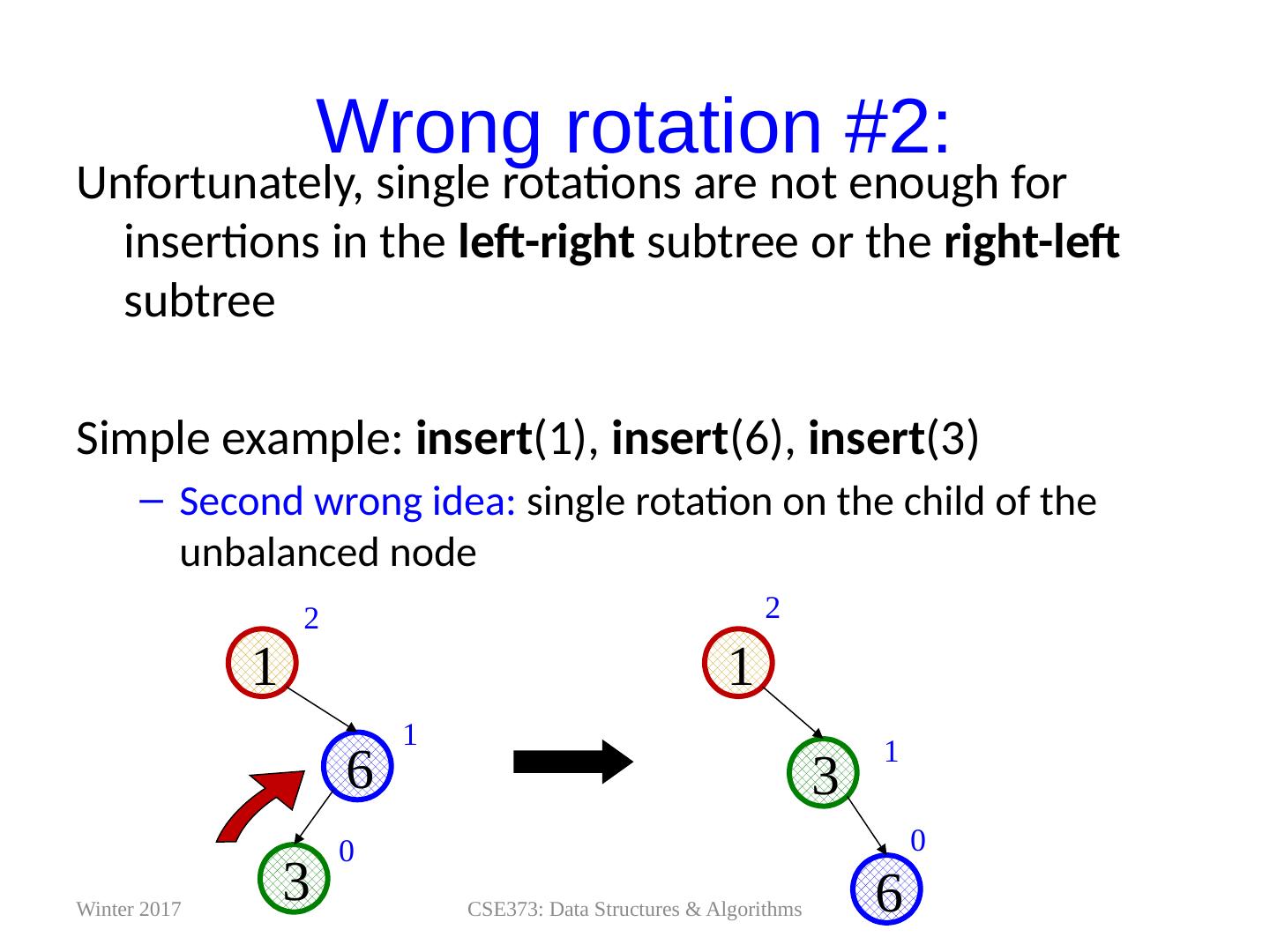

29 .Wrong rotation #1: Unfortunately, single rotations are not enough for insertions in the left-right subtree or the right-left subtree Simple example: insert (1), insert (6), insert (3) First wrong idea: single rotation like we did for left-left CSE373: Data Structures & Algorithms 3 6 1 0 1 2 6 1 3 1 0 0 Winter 2017