
Warehouse-Scale Computing


1. CS 61C: Great Ideas in Computer Architecture (Machine Structures)

Warehouse-Scale Computing, MapReduce, and Spark

Instructors: Krste Asanović & Randy H. Katz

http://inst.eecs.berkeley.edu/~cs61c/

11/8/17, Fall 2017 -- Lecture #21

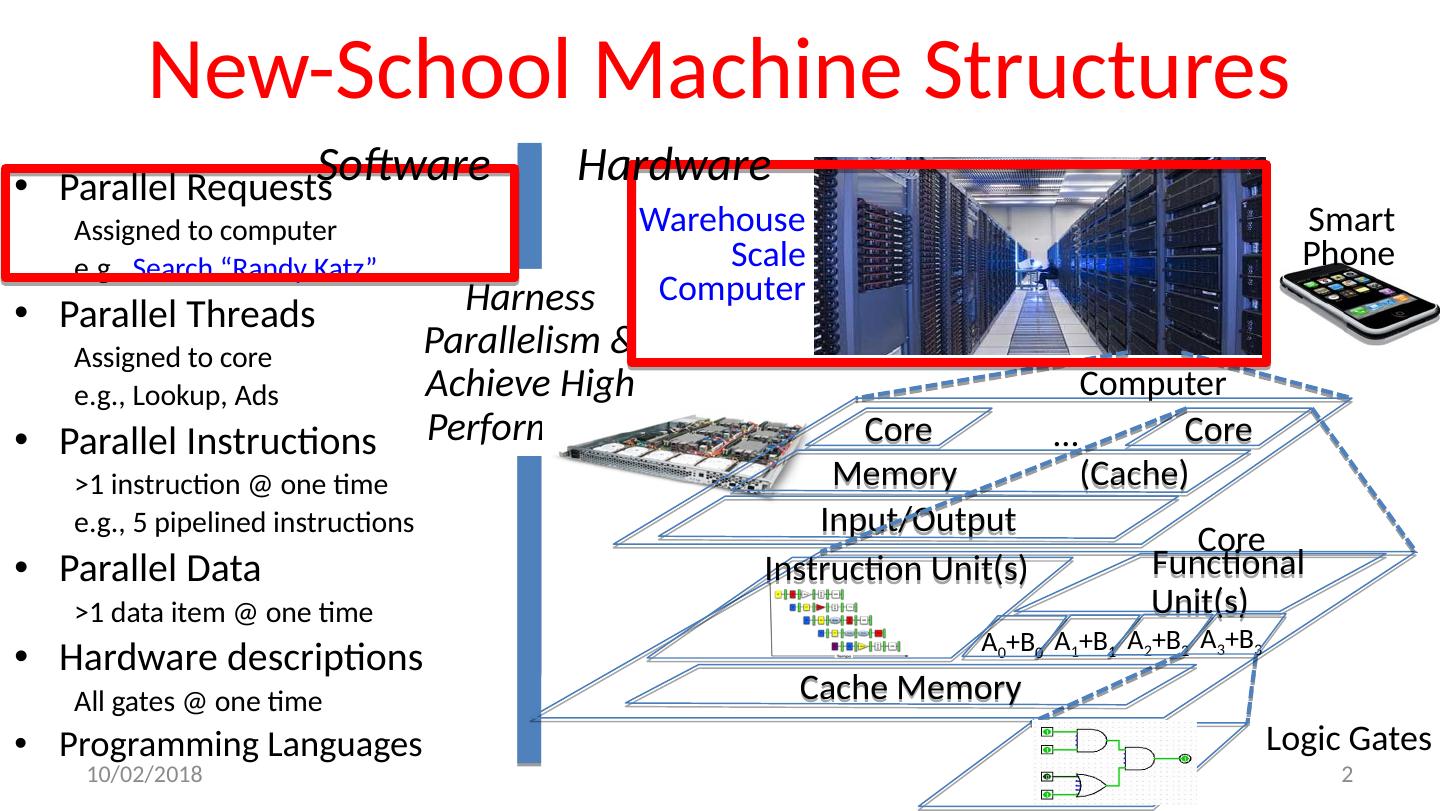

2. New-School Machine Structures

Goal: harness parallelism & achieve high performance

Software levels:
- Parallel Requests: assigned to computer, e.g., search "Randy Katz"
- Parallel Threads: assigned to core, e.g., lookup, ads
- Parallel Instructions: >1 instruction @ one time, e.g., 5 pipelined instructions
- Parallel Data: >1 data item @ one time, e.g., A0+B0, A1+B1, A2+B2, A3+B3
- Hardware descriptions: all gates @ one time
- Programming Languages

Hardware levels (figure): Warehouse Scale Computer and Smart Phone → Computer (Cores, Memory (Cache), Input/Output) → Core (Instruction Unit(s), Functional Unit(s), Cache) → Logic Gates

3. Agenda
- Warehouse-Scale Computing
- Cloud Computing
- Request-Level Parallelism (RLP)
- Map-Reduce Data Parallelism
- And, in Conclusion …


5. Google's WSCs

Ex: In Oregon

6. WSC Architecture
- 1U Server: 8 cores, 16 GiB DRAM, 4x1 TB disk
- Rack: 40-80 servers, local Ethernet (1-10 Gbps) switch ($30/1 Gbps/server)
- Array (aka cluster): 16-32 racks, expensive switch (10x bandwidth, 100x cost)
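The short calculation below gives a rough sense of the scale these numbers imply. It multiplies out the per-server figures for the smallest array (16 racks × 40 servers) and the largest (32 racks × 80 servers). It assumes every server is the 1U configuration listed above, although real arrays mix machine types.

```python
# Back-of-the-envelope WSC array capacity, using the per-server figures from the slide.
# Assumption: every server in the array is the same 1U configuration.
CORES_PER_SERVER = 8
DRAM_GIB_PER_SERVER = 16
DISK_TB_PER_SERVER = 4  # 4 x 1 TB disks


def array_capacity(racks: int, servers_per_rack: int) -> dict:
    """Return total servers, cores, DRAM (GiB) and disk (TB) for one array."""
    servers = racks * servers_per_rack
    return {
        "servers": servers,
        "cores": servers * CORES_PER_SERVER,
        "dram_gib": servers * DRAM_GIB_PER_SERVER,
        "disk_tb": servers * DISK_TB_PER_SERVER,
    }


if __name__ == "__main__":
    small = array_capacity(racks=16, servers_per_rack=40)
    large = array_capacity(racks=32, servers_per_rack=80)
    print("smallest array:", small)
    print("largest array: ", large)
```

With these inputs, one array ranges from 640 servers (5,120 cores, 10 TiB of DRAM) to 2,560 servers (20,480 cores, 40 TiB of DRAM).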

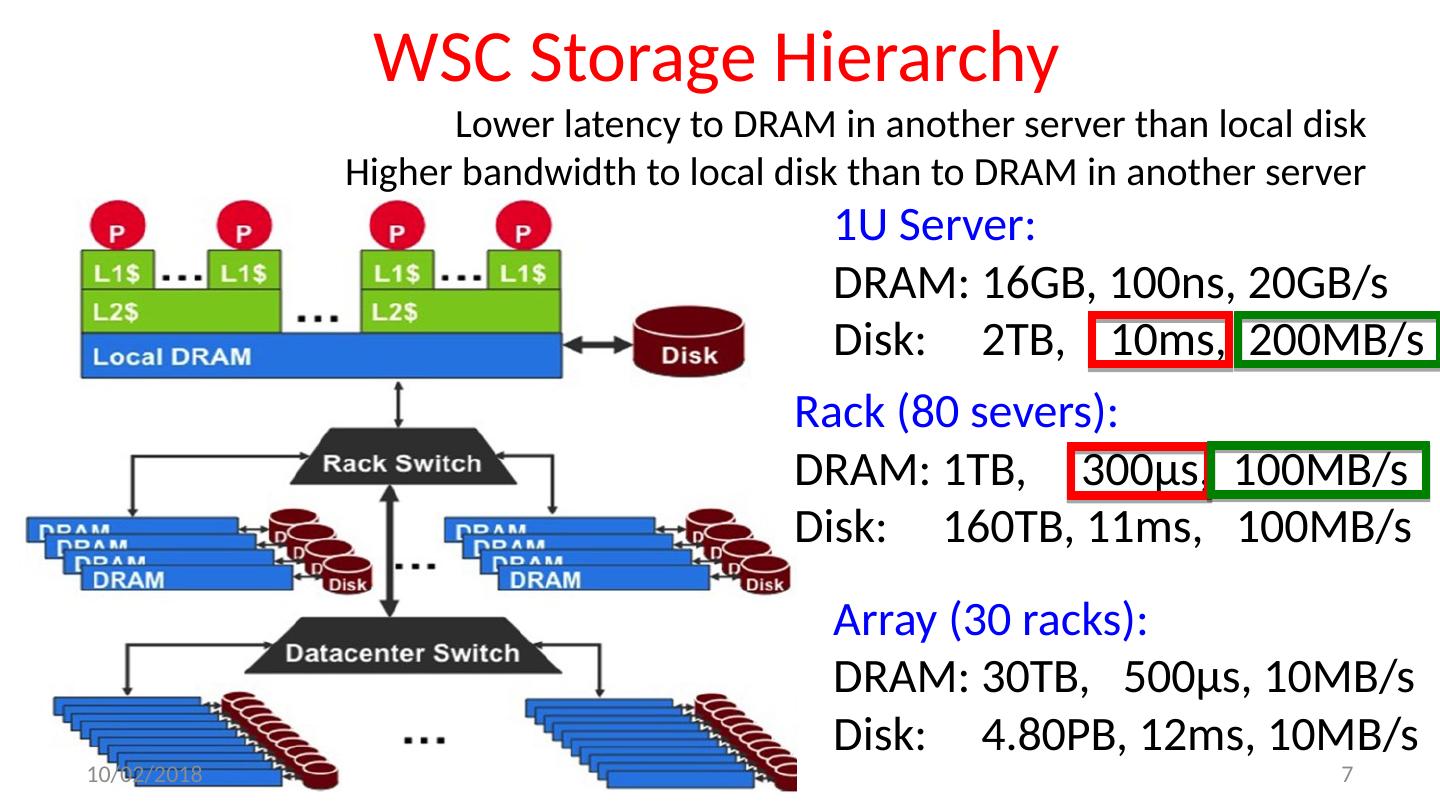

7. WSC Storage Hierarchy

| Level | DRAM capacity | DRAM latency | DRAM bandwidth | Disk capacity | Disk latency | Disk bandwidth |
|---|---|---|---|---|---|---|
| 1U server | 16 GB | 100 ns | 20 GB/s | 2 TB | 10 ms | 200 MB/s |
| Rack (80 servers) | 1 TB | 300 µs | 100 MB/s | 160 TB | 11 ms | 100 MB/s |
| Array (30 racks) | 30 TB | 500 µs | 10 MB/s | 4.80 PB | 12 ms | 10 MB/s |

- Lower latency to DRAM in another server than to local disk
- Higher bandwidth to local disk than to DRAM in another server

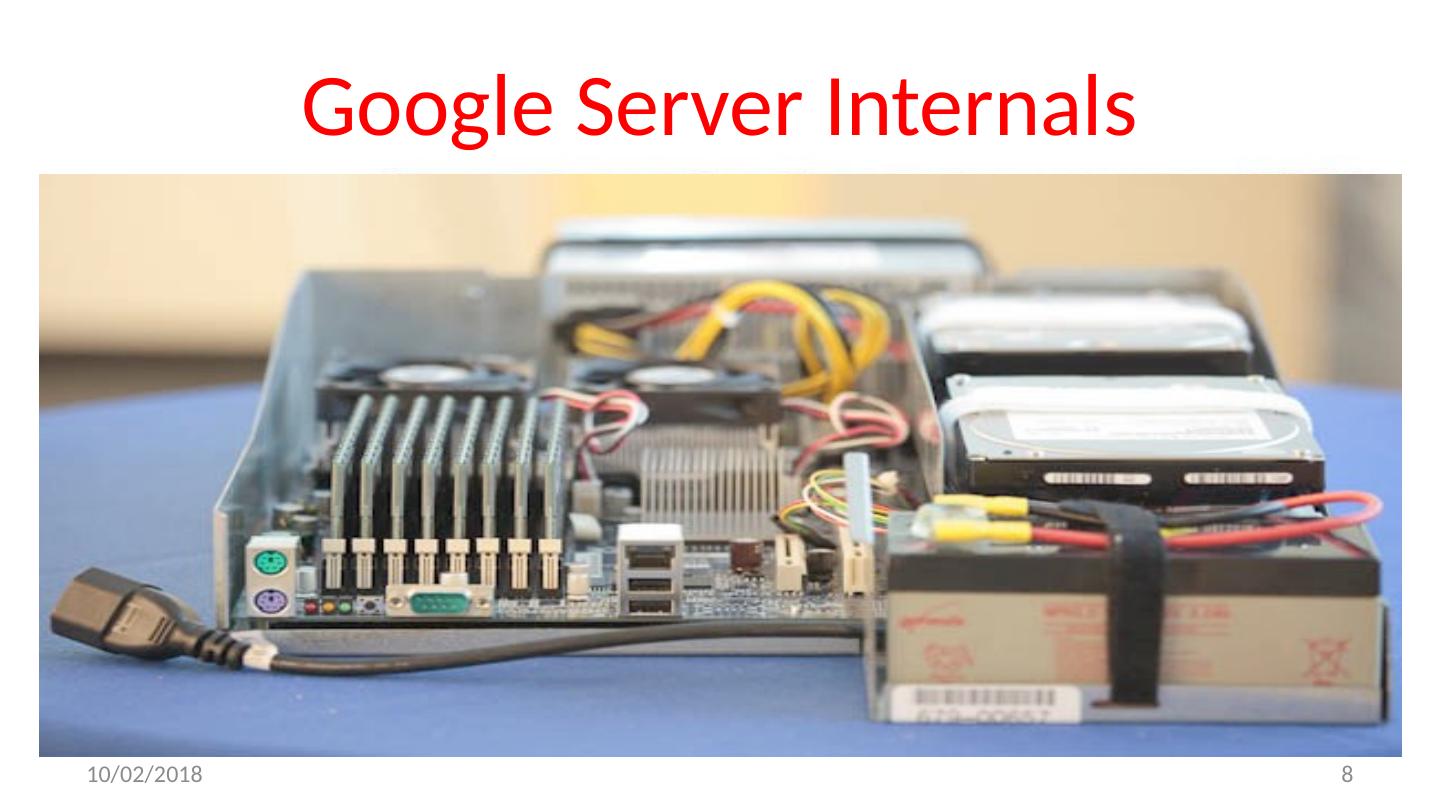
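Both observations at the bottom of the table follow from a simple transfer-time model: fetch time = access latency + size / bandwidth. The sketch below plugs in the figures from the table. The linear model is a simplifying assumption because it ignores queuing, contention, and caching.

```python
# Simple model: time to fetch `size_bytes` = access latency + size / bandwidth.
# Latency and bandwidth figures are the ones on the slide; the linear model
# itself is a simplifying assumption (it ignores queuing, contention, caching).
KB, MB, GB = 10**3, 10**6, 10**9

# (latency in seconds, bandwidth in bytes/second)
HIERARCHY = {
    "local DRAM": (100e-9, 20 * GB),
    "local disk": (10e-3, 200 * MB),
    "rack DRAM": (300e-6, 100 * MB),
    "rack disk": (11e-3, 100 * MB),
    "array DRAM": (500e-6, 10 * MB),
    "array disk": (12e-3, 10 * MB),
}


def fetch_time(level: str, size_bytes: int) -> float:
    """Estimated seconds to read size_bytes from the given storage level."""
    latency, bandwidth = HIERARCHY[level]
    return latency + size_bytes / bandwidth


if __name__ == "__main__":
    for size in (1 * KB, 1 * GB):
        print(f"--- {size} bytes ---")
        for level in HIERARCHY:
            print(f"{level:>10}: {fetch_time(level, size):.6f} s")
```

For a 1 KB read, latency dominates: DRAM in another server in the rack (about 0.31 ms) beats the local disk (about 10 ms). For a 1 GB read, bandwidth dominates: the local disk (about 5 s) beats rack DRAM (about 10 s).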

8. Google Server Internals

Google Server (photo)

9.

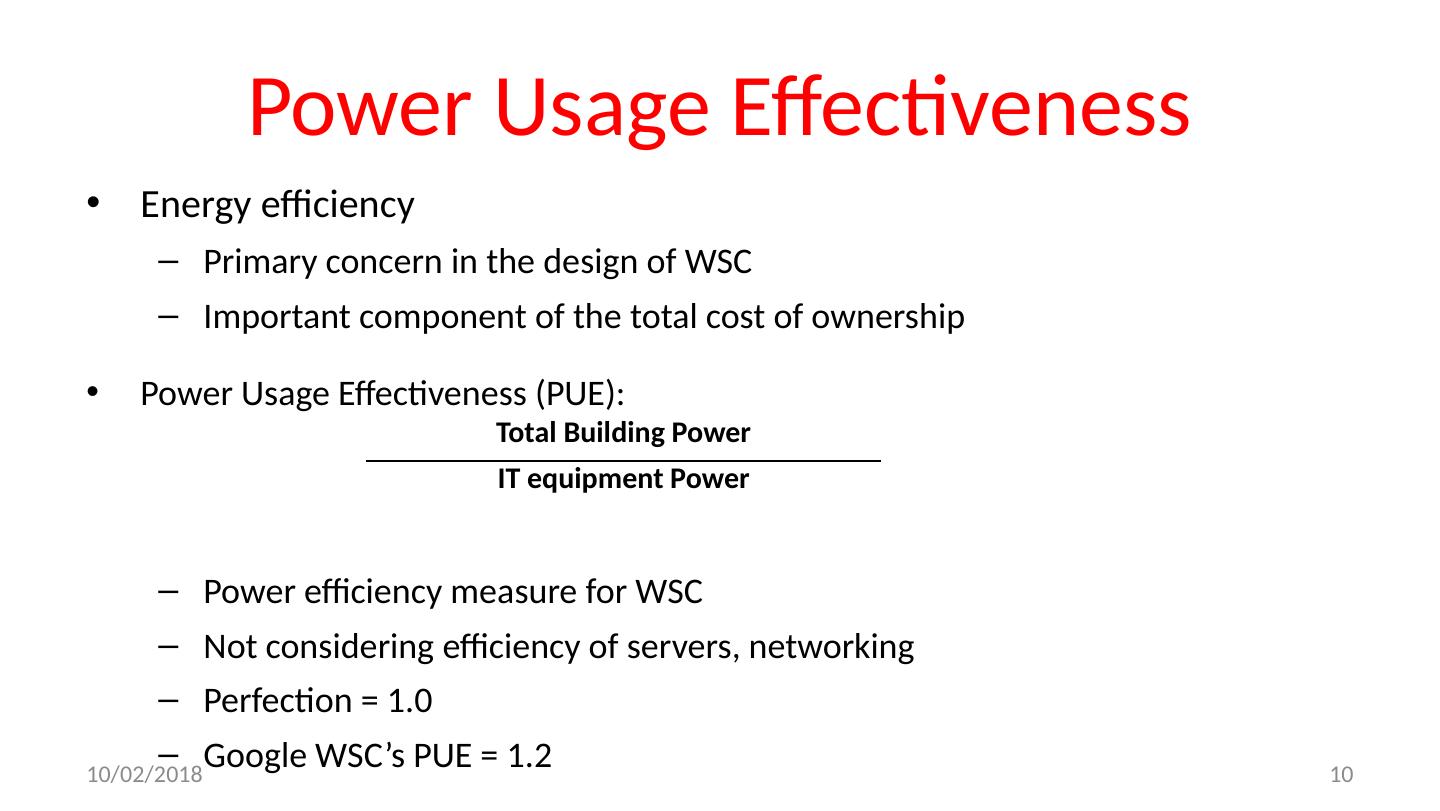

10. Power Usage Effectiveness
- Energy efficiency
  - Primary concern in the design of WSC
  - Important component of the total cost of ownership
- Power Usage Effectiveness (PUE): power efficiency measure for WSC
  - Does not consider the efficiency of servers or networking
  - Perfection = 1.0
  - Google WSC's PUE = 1.2

$$\text{PUE} = \frac{\text{Total Building Power}}{\text{IT Equipment Power}}$$

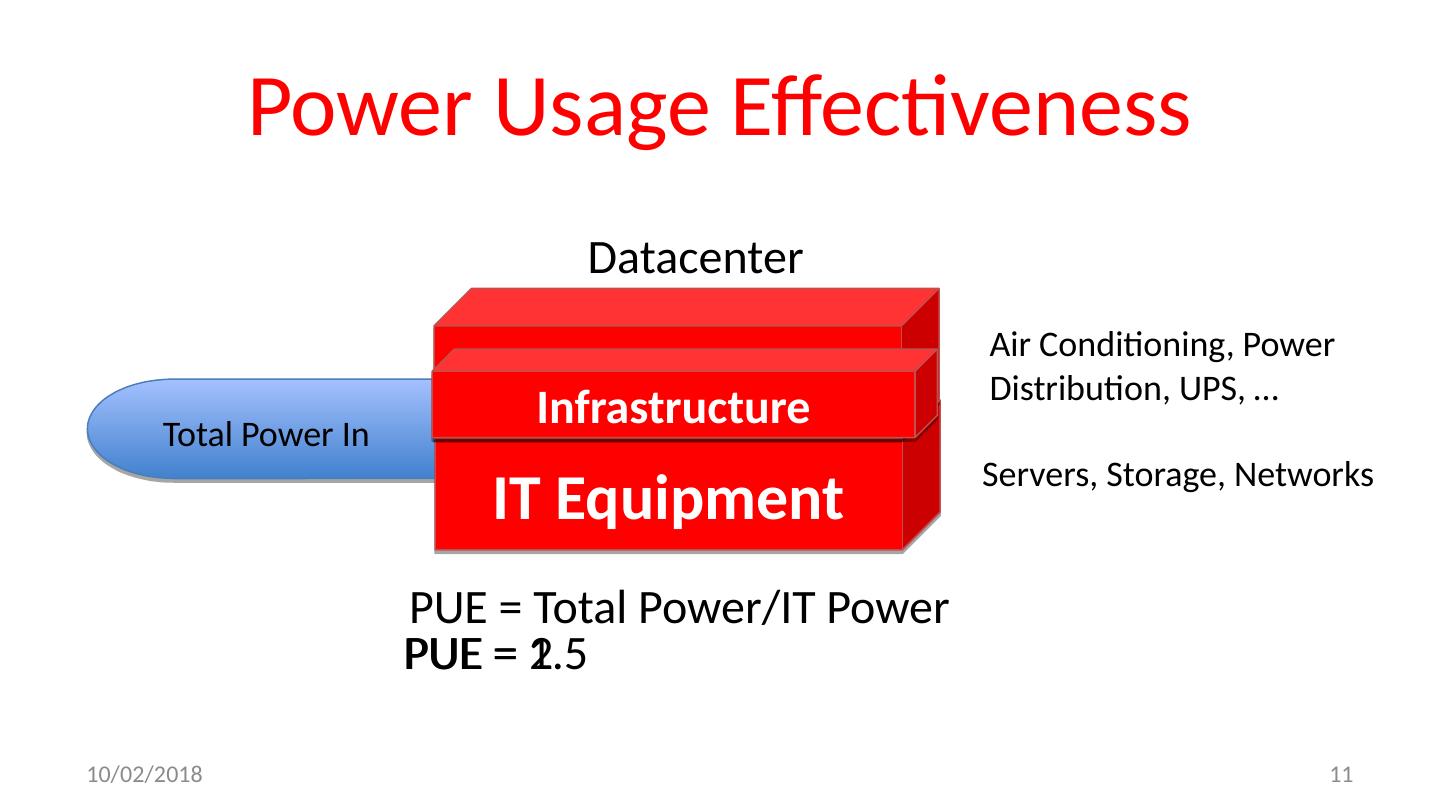

11. Power Usage Effectiveness
- Total power in datacenter = IT equipment (servers, storage, networks) + infrastructure (air conditioning, power distribution, UPS, …)
- PUE = Total Power / IT Power
- Figure: two example datacenters, one with PUE = 2 and one with PUE = 1.5

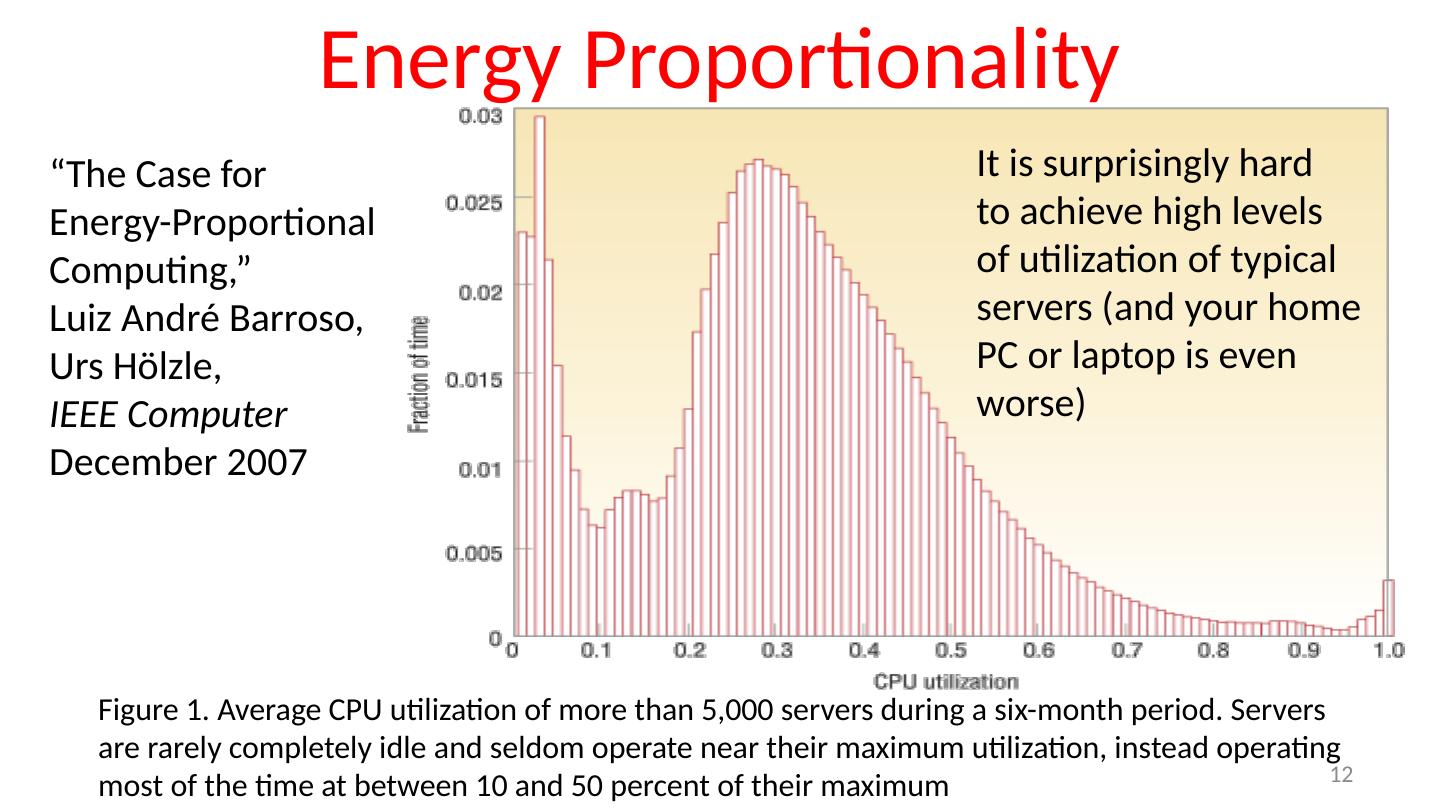

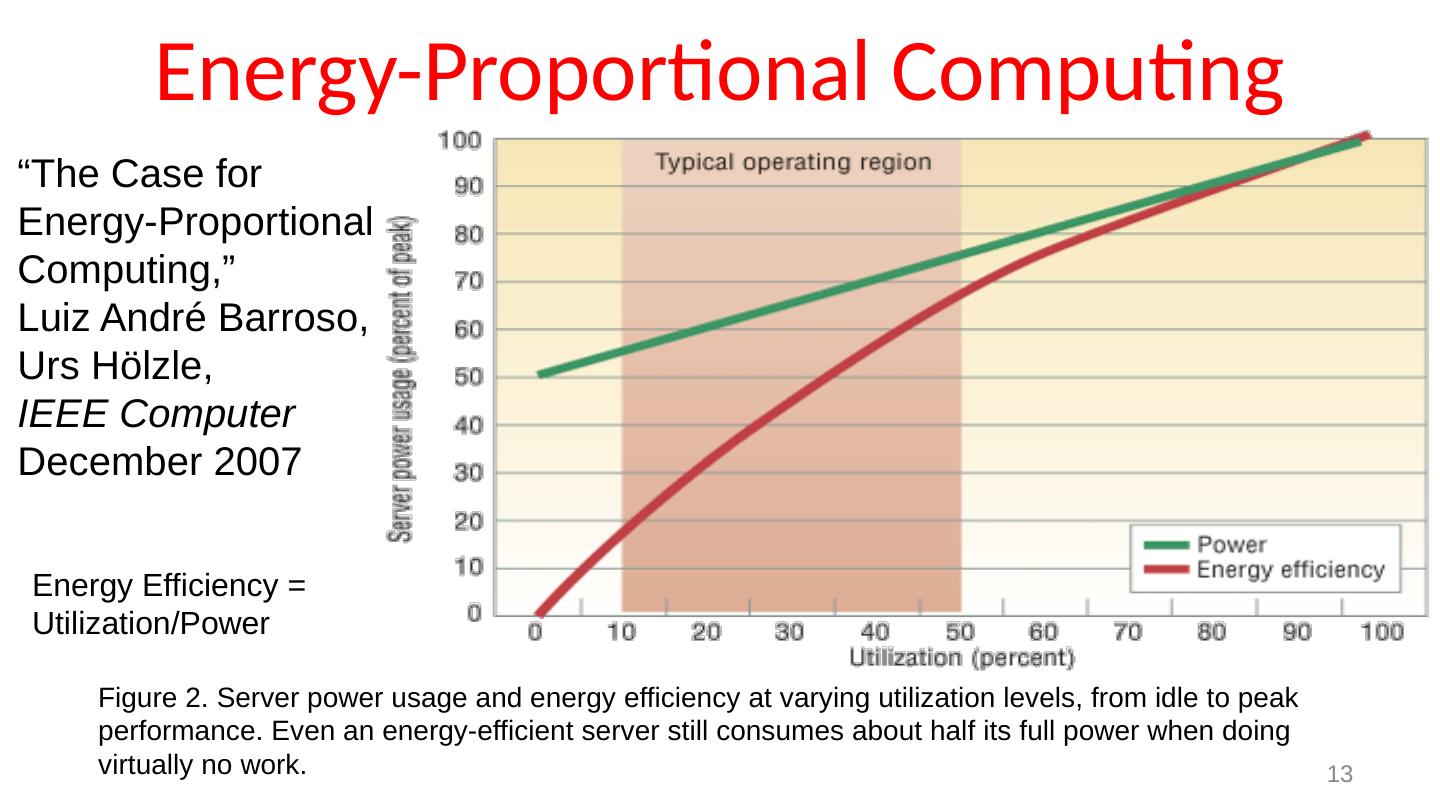
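The sketch below makes the ratio concrete. It computes PUE and, conversely, the infrastructure overhead that a given PUE implies for a hypothetical 1 MW of IT load. The 1 MW figure is an illustrative assumption. The PUE values 2, 1.5, and 1.2 come from the slides.

```python
def pue(total_facility_power_kw: float, it_equipment_power_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_equipment_power_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_power_kw / it_equipment_power_kw


def infrastructure_overhead_kw(pue_value: float, it_equipment_power_kw: float) -> float:
    """Power spent on cooling, power distribution, UPS, ... for a given PUE."""
    return (pue_value - 1.0) * it_equipment_power_kw


if __name__ == "__main__":
    it_kw = 1000.0  # hypothetical 1 MW of servers, storage, and networking
    for p in (2.0, 1.5, 1.2):
        print(f"PUE {p}: {infrastructure_overhead_kw(p, it_kw):.0f} kW of overhead")
```

At PUE 2, the infrastructure draws as much power as the IT equipment itself. At Google's reported 1.2, it draws only a fifth as much.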

12. Energy Proportionality

Figure 1. Average CPU utilization of more than 5,000 servers during a six-month period. Servers are rarely completely idle and seldom operate near their maximum utilization, instead operating most of the time at between 10 and 50 percent of their maximum.

- It is surprisingly hard to achieve high levels of utilization of typical servers (and your home PC or laptop is even worse)

Source: "The Case for Energy-Proportional Computing," Luiz André Barroso, Urs Hölzle, IEEE Computer, December 2007

13. Energy-Proportional Computing

Figure 2. Server power usage and energy efficiency at varying utilization levels, from idle to peak performance. Even an energy-efficient server still consumes about half its full power when doing virtually no work.

Energy Efficiency = Utilization/Power

Source: "The Case for Energy-Proportional Computing," Luiz André Barroso, Urs Hölzle, IEEE Computer, December 2007

14. Energy Proportionality

Figure 4. Power usage and energy efficiency in a more energy-proportional server. This server has a power efficiency of more than 80 percent of its peak value for utilizations of 30 percent and above, with efficiency remaining above 50 percent for utilization levels as low as 10 percent.

- Design for wide dynamic power range and active low power modes

Energy Efficiency = Utilization/Power

Source: "The Case for Energy-Proportional Computing," Luiz André Barroso, Urs Hölzle, IEEE Computer, December 2007
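A simple linear power model lets you compare the two servers directly. The sketch assumes power rises linearly from an idle level up to peak. An idle level of 50% of peak mirrors the "about half its full power" server in Figure 2. An idle level of 10% stands in for a hypothetical, more energy-proportional server. Neither curve is the measured data from the paper.

```python
# Energy efficiency = utilization / power, with power normalized to 1.0 at peak.
# Assumption: power grows linearly from an idle fraction of peak up to 1.0.
# The idle fractions used below are illustrative, not measured values.

def power(utilization: float, idle_fraction: float) -> float:
    """Normalized power draw (1.0 = peak) at a utilization between 0 and 1."""
    return idle_fraction + (1.0 - idle_fraction) * utilization


def relative_efficiency(utilization: float, idle_fraction: float) -> float:
    """Efficiency (utilization / power) as a fraction of its value at peak load."""
    # At utilization 1.0 the power is 1.0, so peak efficiency is exactly 1.0.
    return utilization / power(utilization, idle_fraction)


if __name__ == "__main__":
    for idle in (0.5, 0.1):
        row = ", ".join(
            f"{u:.0%}: {relative_efficiency(u, idle):.0%}" for u in (0.1, 0.3, 0.5, 1.0)
        )
        print(f"idle power = {idle:.0%} of peak -> {row}")
```

Under this model, the 10%-idle server stays above 80% of its peak efficiency at 30% utilization and above 50% at 10% utilization, which meets the thresholds quoted for Figure 4. The 50%-idle server reaches only about 18% of its peak efficiency at 10% utilization. That point lies inside the 10-50% band where Figure 1 shows servers spend most of their time.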

15. Agenda
- Warehouse-Scale Computing
- Cloud Computing
- Request-Level Parallelism (RLP)
- Map-Reduce Data Parallelism
- And, in Conclusion …

16. Scaled Communities, Processing, and Data

17. Quality and Freshness
- Today's cloud → future: better, faster, more timely results (increased freshness of results)
- Figure: accuracy of response (more accurate) vs. timeliness of response (more timely)
- Figure: Google Translation quality vs. corpora size. Test-data BLEU (about 0.34 to 0.44) vs. LM training data size (10 to 10^6 million tokens); series: target KN, +ldcnews KN, +webnews KN, target SB, +ldcnews SB, +webnews SB, +web SB

18. Cloud Distinguished by …
- Shared platform with illusion of isolation
  - Collocation with other tenants
  - Exploits technology of VMs and hypervisors (next lectures!)
  - At best "fair" allocation of resources, but not true isolation
- Attraction of low-cost cycles
  - Economies of scale driving move to consolidation
  - Statistical multiplexing to achieve high utilization/efficiency of resources
- Elastic service
  - Pay for what you need, get more when you need it
  - But no performance guarantees: assumes uncorrelated demand for resources
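A toy simulation illustrates statistical multiplexing. The sketch below generates random, independent, bursty demand for a set of hypothetical tenants. It then compares two ways to provision: give each tenant enough servers for its own peak, or size one shared pool for the peak of the combined demand. The tenant count, burst probability, and server counts are arbitrary assumptions.

```python
import random

# Statistical multiplexing sketch: several tenants with *uncorrelated* bursty
# demand share one pool. Demand values are randomly generated, not real traces.

def simulate(tenants: int, steps: int, seed: int = 61) -> tuple[int, int]:
    """Return (sum of each tenant's own peak, peak of the combined demand)."""
    rng = random.Random(seed)
    # demand[t][s] = servers tenant t needs at time step s (baseline 10, bursts to 50)
    demand = [
        [50 if rng.random() < 0.1 else 10 for _ in range(steps)]
        for _ in range(tenants)
    ]
    # Dedicated provisioning: each tenant buys enough for its own worst case.
    dedicated = sum(max(series) for series in demand)
    # Shared provisioning: the pool only needs the worst case of the total.
    shared = max(sum(step) for step in zip(*demand))
    return dedicated, shared


if __name__ == "__main__":
    dedicated, shared = simulate(tenants=20, steps=1000)
    print(f"dedicated capacity: {dedicated} servers, shared pool: {shared} servers")
```

The gap between the two numbers is the saving, and it exists only because the bursts are independent. If every tenant burst at the same time, the shared pool would need the full dedicated capacity. This is the correlated-demand case the slide warns about.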


20. Agenda
- Warehouse-Scale Computing
- Cloud Computing
- Request-Level Parallelism (RLP)
- Map-Reduce Data Parallelism
- And, in Conclusion …

21. Request-Level Parallelism (RLP)
- Hundreds of thousands of requests per second
  - Popular Internet services like web search, social networking, …
- Such requests are largely independent
  - Often involve read-mostly databases
  - Rarely involve read-write sharing or synchronization across requests
- Computation easily partitioned across different requests and even within a request

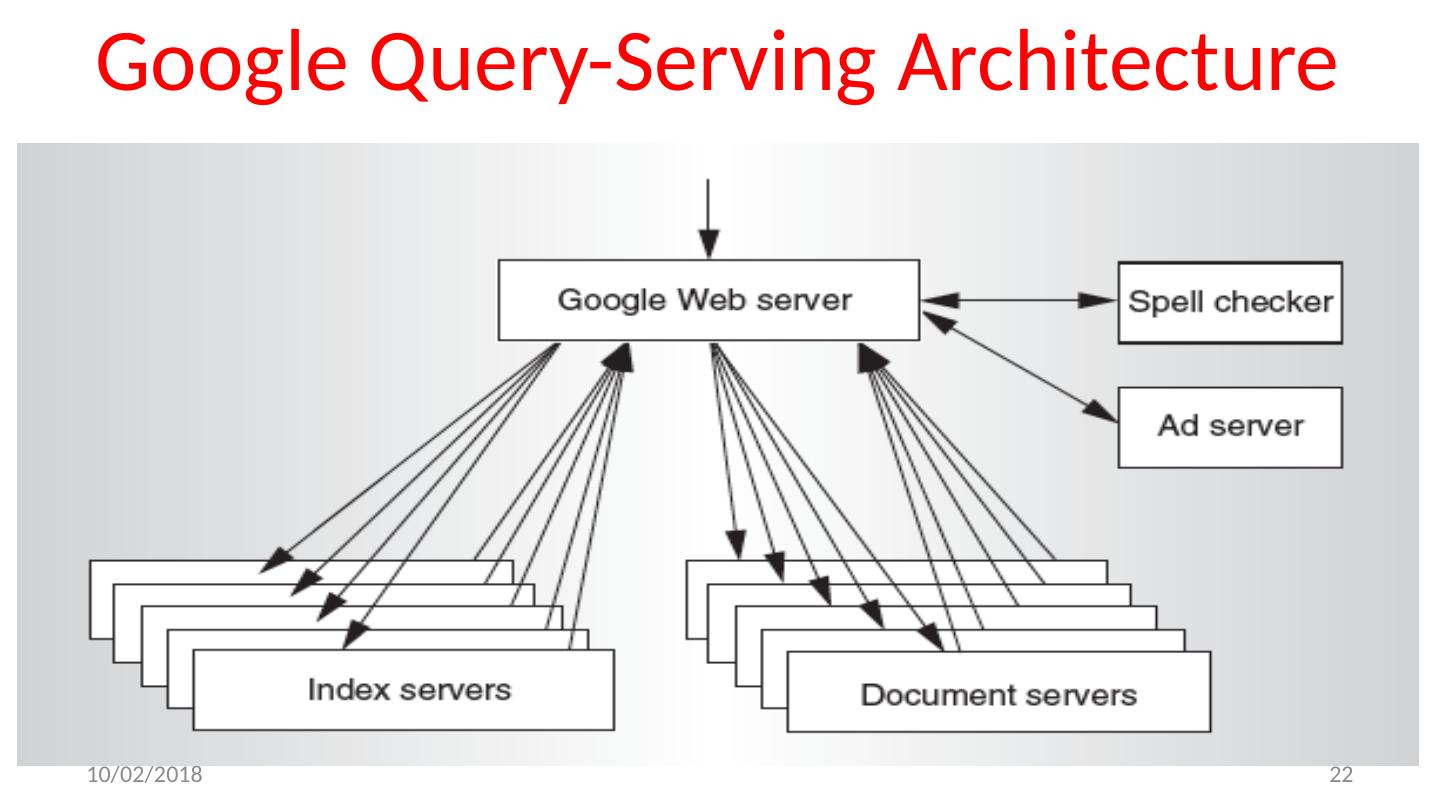
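Because these requests are independent and mostly read shared data, they can be dispatched to workers with no coordination between them. The Python sketch below uses a thread pool on one machine as a stand-in for many servers in a WSC. The profile database and request format are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Request-level parallelism sketch: independent, read-mostly requests can be
# handled concurrently with no locking, because no request writes shared state.
# The "database" and request format here are hypothetical.
PROFILE_DB = {  # read-only after startup
    "alice": {"friends": 120},
    "bob": {"friends": 87},
    "carol": {"friends": 301},
}


def handle_request(user: str) -> str:
    """Serve one request by reading (never writing) the shared database."""
    profile = PROFILE_DB.get(user)
    if profile is None:
        return f"{user}: not found"
    return f"{user}: {profile['friends']} friends"


def serve(requests: list[str], workers: int = 4) -> list[str]:
    """Fan independent requests out to a pool of workers; results keep request order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(handle_request, requests))


if __name__ == "__main__":
    for line in serve(["alice", "carol", "dave", "bob"]):
        print(line)
```

In a real WSC, each worker would be a separate server behind a load balancer. In CPython, threads would also not speed up CPU-bound work. The only point here is that no locks are needed when requests share nothing but read-only data.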

22. Google Query-Serving Architecture

23. Web Search Result

24. Anatomy of a Web Search (1/3)
- Google "Randy Katz"
  - Direct the request to the "closest" Google Warehouse-Scale Computer
  - A front-end load balancer directs the request to one of many clusters of servers within the WSC
  - Within the cluster, select one of many Google Web Servers (GWS) to handle the request and compose the response pages
  - The GWS communicates with Index Servers to find documents that contain the search words "Randy" and "Katz", also using the location of the search
  - Return a document list with associated relevance scores
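An inverted index is one way to sketch the index-server step. Each word maps to the documents that contain it, and a query returns the documents containing all of its words, each with a relevance score. The corpus, the all-words matching rule, and the term-count score below are assumptions made for illustration. They are not Google's ranking, and the location of the search is not modelled.

```python
from collections import defaultdict

# Hypothetical mini index server: maps each word to the docids containing it,
# intersects the posting sets for a query, and returns (docid, score) pairs.
# The corpus and the scoring rule (summed term counts) are illustrative only;
# a real search engine's ranking is far more involved.
DOCS = {
    1: "randy katz berkeley professor",
    2: "katz lecture on warehouse scale computing",
    3: "randy katz raid paper",
    4: "warehouse scale computing at google",
}


def build_index(docs: dict[int, str]) -> dict[str, dict[int, int]]:
    """word -> {docid: number of occurrences of word in that document}."""
    index: dict[str, dict[int, int]] = defaultdict(dict)
    for docid, text in docs.items():
        for word in text.lower().split():
            index[word][docid] = index[word].get(docid, 0) + 1
    return index


def search(index: dict[str, dict[int, int]], query: str) -> list[tuple[int, int]]:
    """Docids containing every query word, best score first (ties by docid)."""
    words = query.lower().split()
    if not words:
        return []
    # Only documents that contain all the query words qualify.
    matches = set(index.get(words[0], {}))
    for word in words[1:]:
        matches &= set(index.get(word, {}))
    scored = [(d, sum(index[w][d] for w in words)) for d in matches]
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))


if __name__ == "__main__":
    idx = build_index(DOCS)
    print(search(idx, "Randy Katz"))
```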

25. Anatomy of a Web Search (2/3)
- In parallel:
  - Ad system: books by Katz at Amazon.com
  - Images of Randy Katz
- Use docids (document IDs) to access indexed documents
- Compose the page
  - Result document extracts (with keyword in context) ordered by relevance score
  - Sponsored links (along the top) and advertisements (along the sides)
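The "in parallel" step is a fan-out within a single request. The minimal Python sketch below uses three hypothetical backend stubs for search results, ads, and images. It submits all three calls at once and composes the page from their answers.

```python
from concurrent.futures import ThreadPoolExecutor

# Within-request parallelism sketch: the web server queries several backends
# at once and composes the page when all have answered. The three backend
# functions are hypothetical stubs standing in for real services.

def search_results(query: str) -> list[str]:
    return [f"result for '{query}' #{i}" for i in (1, 2)]


def ads(query: str) -> list[str]:
    return [f"sponsored: books about '{query}'"]


def images(query: str) -> list[str]:
    return [f"image of '{query}'"]


def compose_page(query: str) -> dict[str, list[str]]:
    """Issue all backend calls in parallel, then assemble the response page."""
    backends = {"results": search_results, "ads": ads, "images": images}
    with ThreadPoolExecutor(max_workers=len(backends)) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in backends.items()}
        # .result() blocks until that backend finishes. When backends are
        # I/O-bound (waiting on the network), total latency is roughly that of
        # the slowest backend rather than the sum of all three.
        return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    page = compose_page("Randy Katz")
    for section, items in page.items():
        print(section, items)
```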

26. Anatomy of a Web Search (3/3)
- Implementation strategy
  - Randomly distribute the entries
  - Make many copies of data (aka "replicas")
  - Load balance requests across replicas
- Redundant copies of indices and documents
  - Breaks up hot spots, e.g., "Justin Bieber"
  - Increases opportunities for request-level parallelism
  - Makes the system more tolerant of failures
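The implementation strategy can be sketched in a few lines. The class below is a hypothetical, single-process model with four behaviors:
- It places entries on shards at random.
- It keeps several replicas per shard.
- It rotates requests round-robin across replicas.
- It skips replicas marked as failed.

Round-robin is just one simple load-balancing policy, chosen here for illustration.

```python
import random

# Sketch of the replication strategy: entries are spread randomly over shards,
# each shard has several replicas, and requests rotate across the live replicas
# of a shard. Shard/replica counts and server names are hypothetical.

class ReplicatedIndex:
    def __init__(self, num_shards: int, replicas_per_shard: int, seed: int = 0):
        self.rng = random.Random(seed)
        self.num_shards = num_shards
        # replicas[s] = names of the servers holding a copy of shard s
        self.replicas = [
            [f"shard{s}-replica{r}" for r in range(replicas_per_shard)]
            for s in range(num_shards)
        ]
        self.placement: dict[str, int] = {}  # entry -> shard, chosen at random
        self.next_replica = [0] * num_shards  # round-robin cursor per shard
        self.failed: set[str] = set()

    def add_entry(self, key: str) -> None:
        """Randomly assign an entry to a shard (spreads load across shards)."""
        self.placement[key] = self.rng.randrange(self.num_shards)

    def route(self, key: str) -> str:
        """Pick a live replica for the shard holding `key`, skipping failed servers."""
        shard = self.placement[key]
        candidates = self.replicas[shard]
        for _ in range(len(candidates)):
            server = candidates[self.next_replica[shard] % len(candidates)]
            self.next_replica[shard] += 1
            if server not in self.failed:
                return server
        raise RuntimeError(f"all replicas of shard {shard} are down")


if __name__ == "__main__":
    idx = ReplicatedIndex(num_shards=4, replicas_per_shard=3)
    idx.add_entry("justin bieber")
    # A hot query is spread over every replica of its shard ...
    print([idx.route("justin bieber") for _ in range(6)])
    # ... and keeps being served when one replica fails.
    idx.failed.add(idx.replicas[idx.placement["justin bieber"]][0])
    print([idx.route("justin bieber") for _ in range(4)])
```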

27. Administrivia
- Project 3: Performance has been released!
  - Due Monday, November 20
  - Compete in a performance contest (Proj5) for extra credit!
- HW5 was released; due Wednesday, Nov 15
- Regrade requests are open
  - Due this Friday (Nov 10)
  - Please consult the solutions and Gradescope rubric first

28. Agenda
- Warehouse-Scale Computing
- Cloud Computing
- Request-Level Parallelism (RLP)
- Map-Reduce Data Parallelism
- And, in Conclusion …

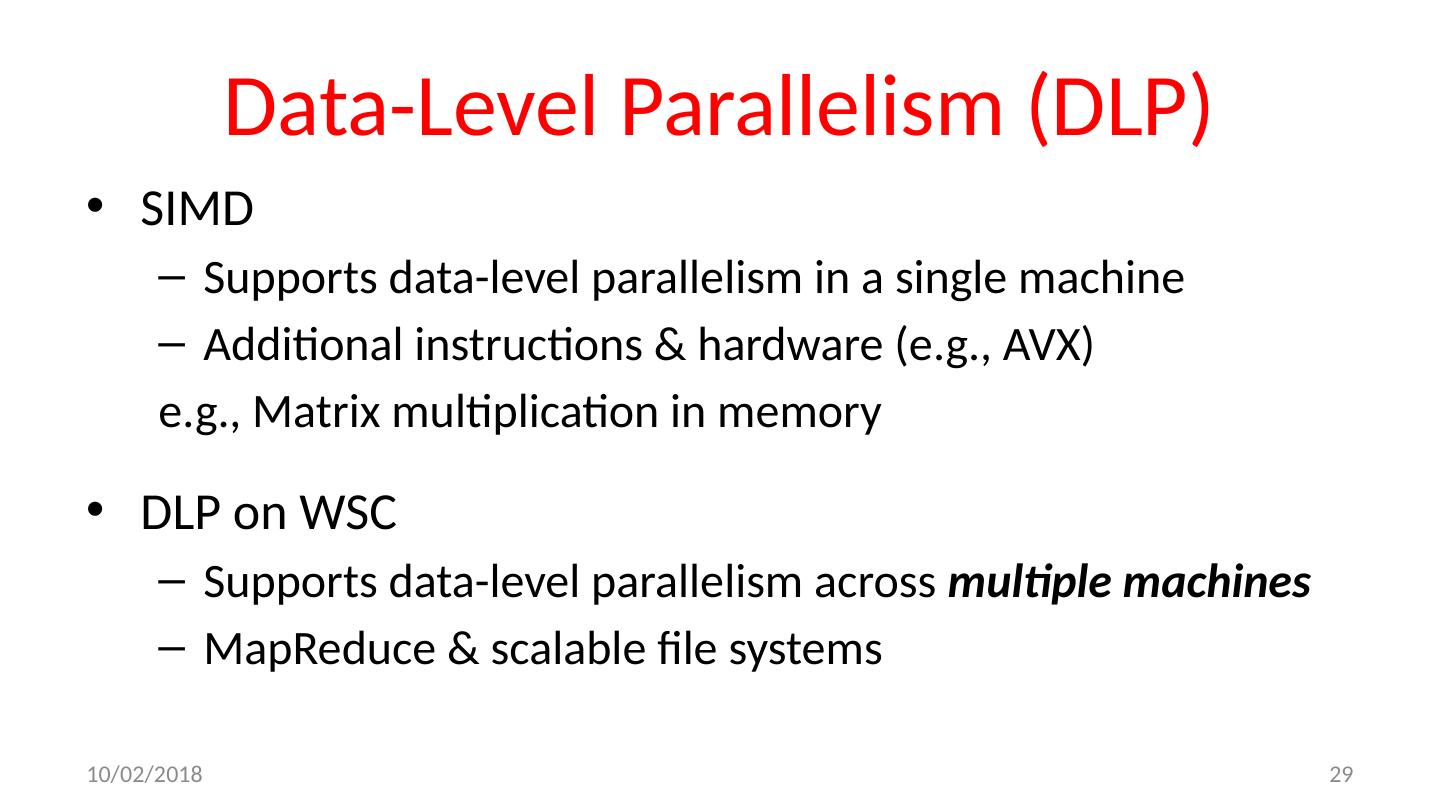

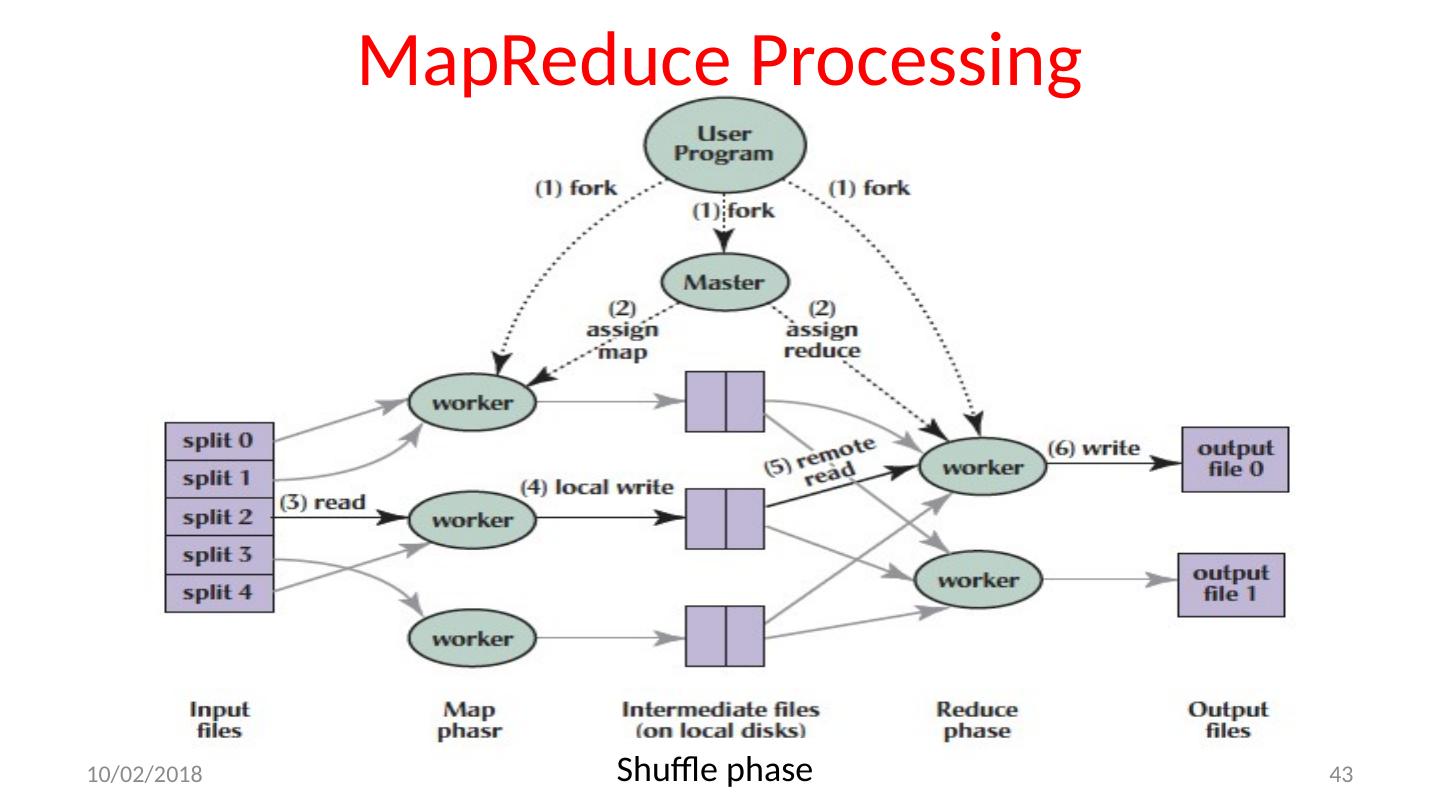

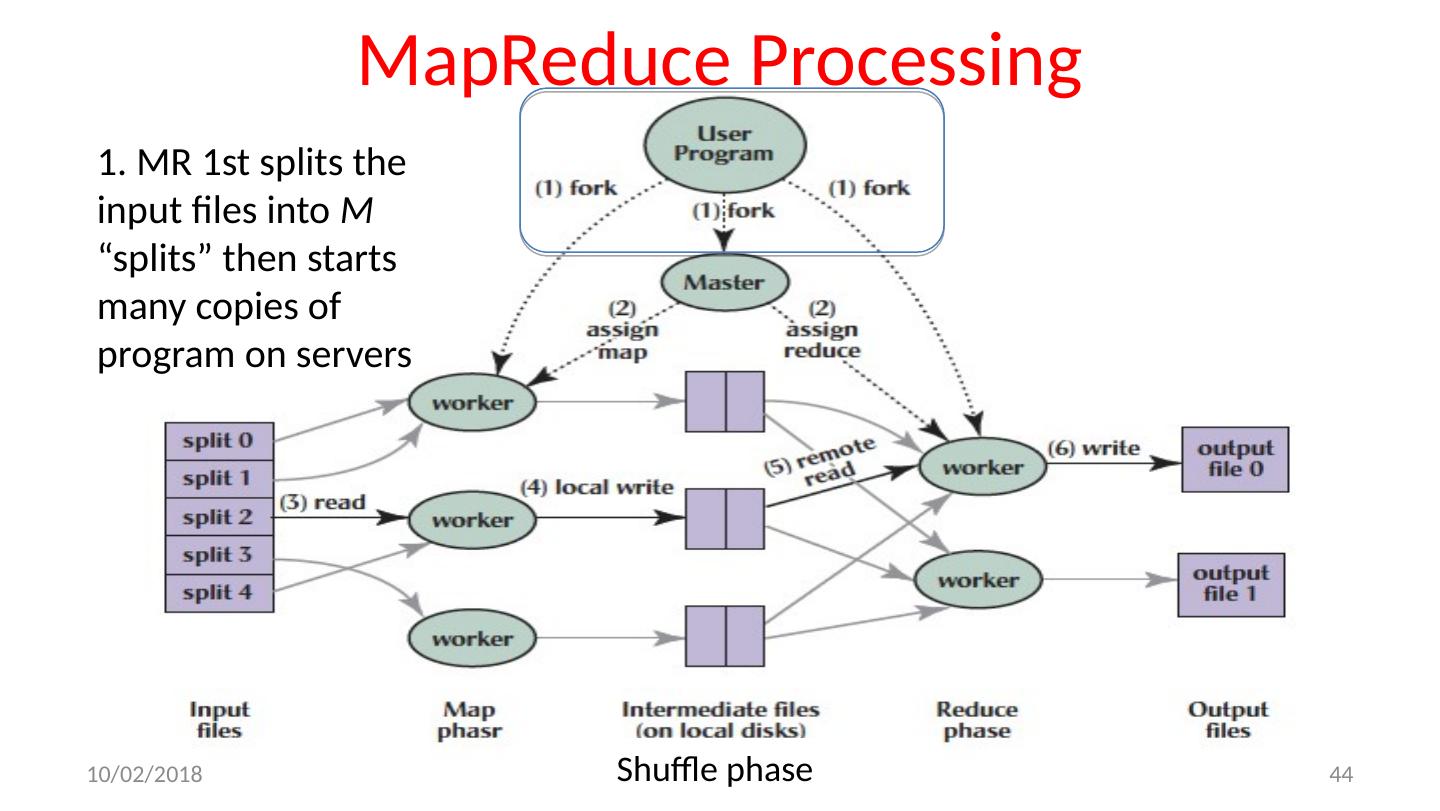

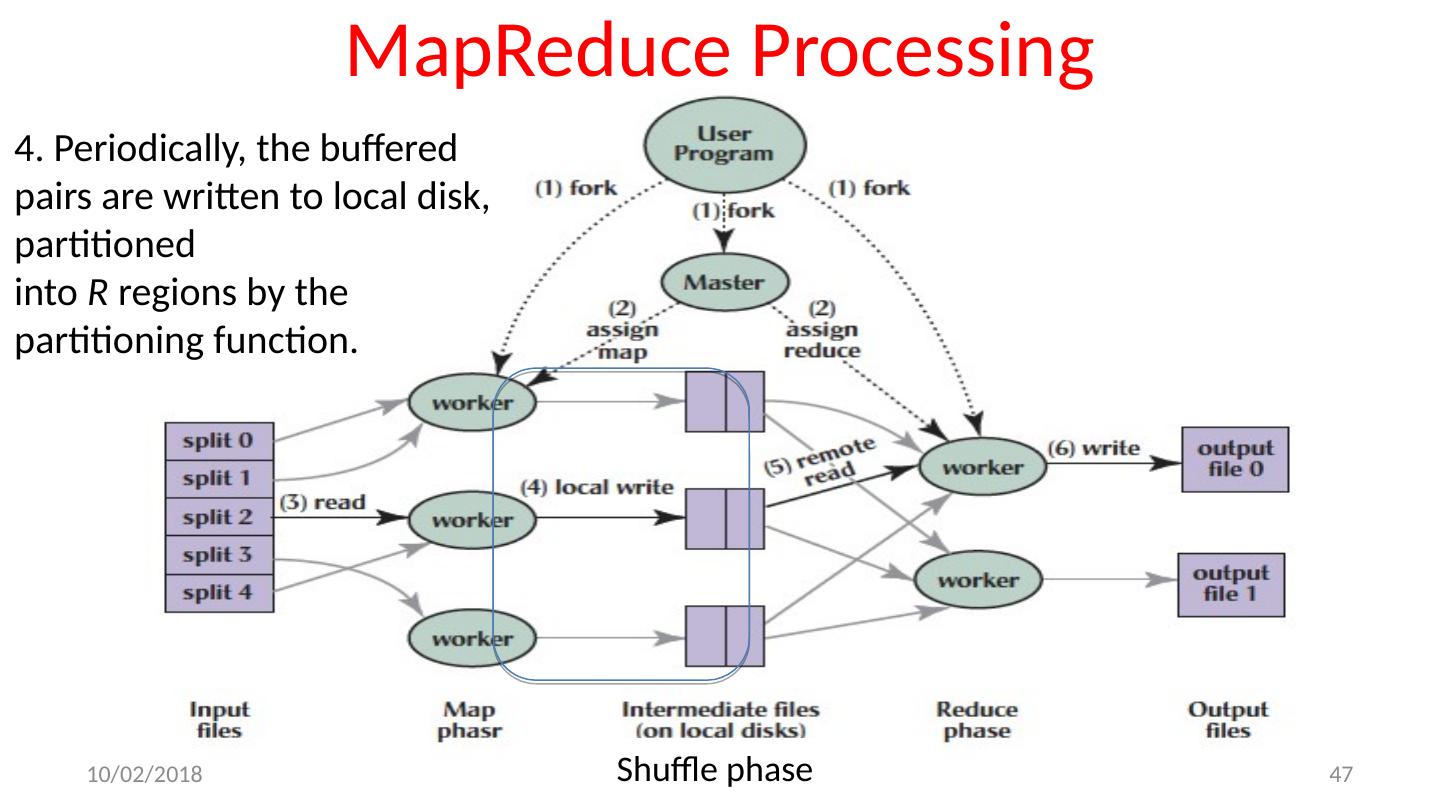

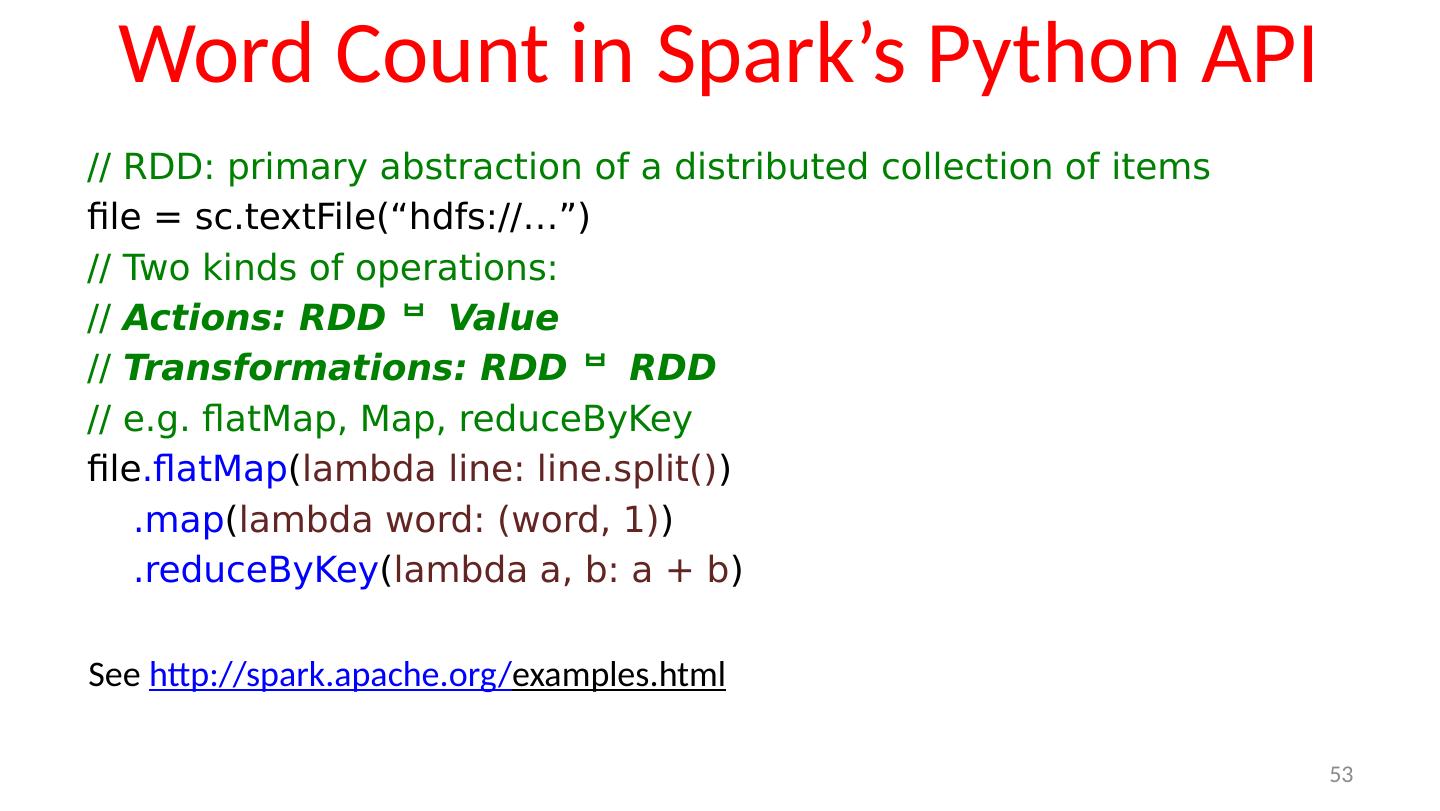

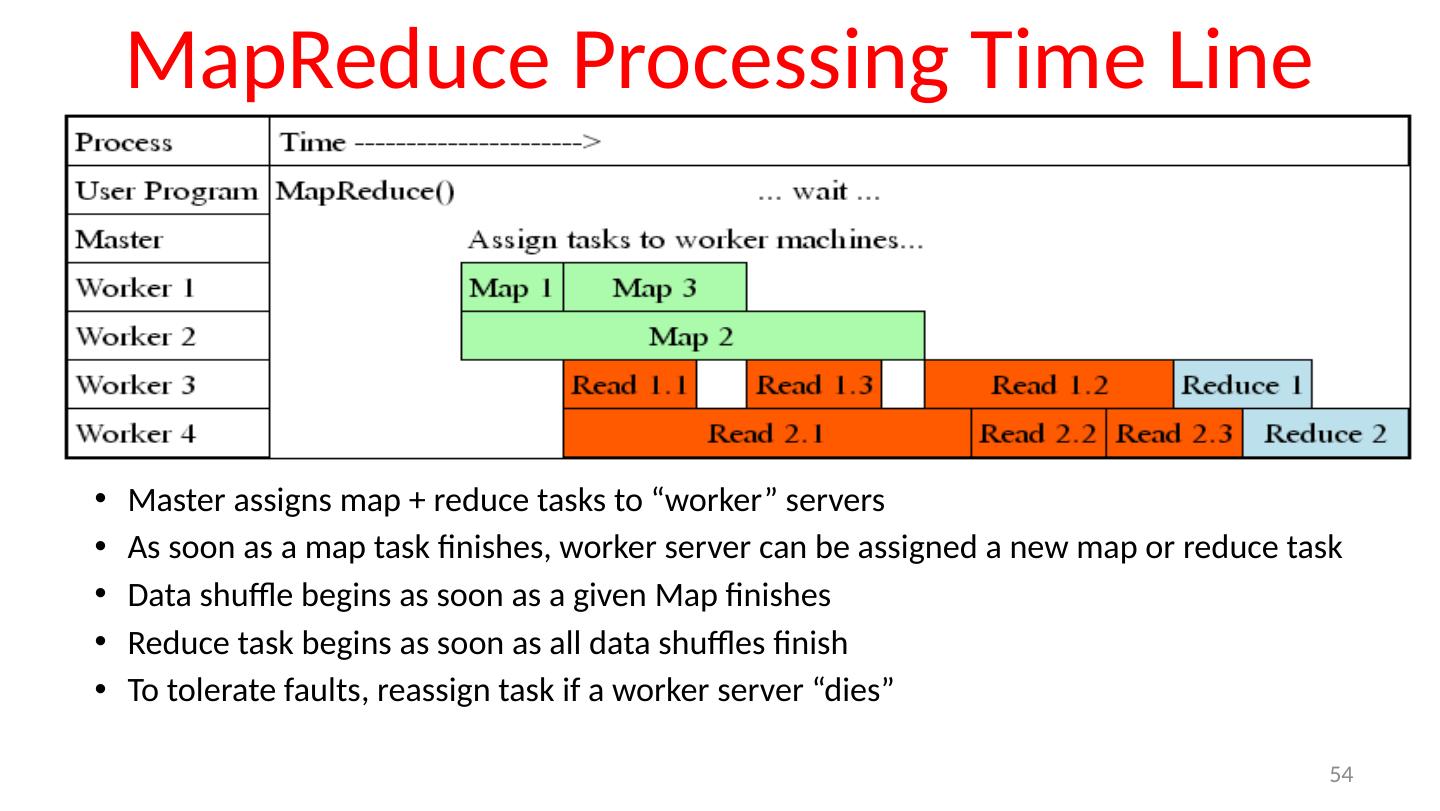

29. Data-Level Parallelism (DLP)
- SIMD
  - Supports data-level parallelism in a single machine
  - Additional instructions & hardware (e.g., AVX)
  - e.g., Matrix multiplication in memory
- DLP on WSC
  - Supports data-level parallelism across multiple machines
  - MapReduce & scalable file systems
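The classic word-count example previews MapReduce, which the slide names as the WSC counterpart of SIMD. The sketch below runs the map, shuffle, and reduce phases in one Python process. It shows the programming model only, and the function names are illustrative rather than any framework's API.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Single-process MapReduce word-count sketch. In a WSC the map tasks, the
# shuffle, and the reduce tasks run on many machines over a distributed file
# system; here each phase is an ordinary function so the data flow is visible.

def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all intermediate values by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key."""
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    """Run map over every split, shuffle by word, then reduce each group."""
    intermediate = (pair for doc in splits for pair in map_phase(doc))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    splits = ["that that is is", "that that is not is not", "is that it it is"]
    print(word_count(splits))
```

Each map call touches only its own split, and each reduce call touches only one key's values. That independence lets a framework spread both phases across many machines.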