GraphLab: A New Framework For Parallel Machine Learning

1. GraphLab: A New Framework For Parallel Machine Learning. Name: Siyu Bian. NetID: siyub2

2. Background: Recent developments in computer architecture have shifted the focus away from frequency scaling and towards parallel scaling, threatening the future of sequential ML algorithms. Existing high-level parallel abstractions like MapReduce are insufficiently expressive, while low-level tools like MPI and Pthreads leave ML experts repeatedly solving the same design challenges.

3. Goal: GraphLab enables ML experts to easily design and implement efficient, scalable parallel algorithms by composing problem-specific computation, data dependencies, and scheduling. Main Contributions: 1. A graph-based data model which simultaneously represents data and computational dependencies. 2. A set of concurrent access models which provide a range of sequential-consistency guarantees. 3. A sophisticated modular scheduling mechanism. 4. An aggregation framework to manage global state. 5. GraphLab implementations and experimental evaluations of parameter learning and inference in graphical models, Gibbs sampling, CoEM, Lasso, and compressed sensing on real-world problems.

4. Existing Frameworks: MapReduce: Consists of a Map operation and a Reduce operation. The Map operation is a function applied independently and in parallel to each datum (e.g., a webpage) in a large data set (e.g., computing the word count). The Reduce operation is an aggregation function that combines the Map outputs (e.g., computing the total word count). Good when: the algorithm is embarrassingly parallel and can be decomposed into a large number of independent computations. Struggles when: there are computational dependencies in the data.
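The word-count example on this slide can be sketched in a few lines of plain Python. This is only an illustration of the Map/Reduce split, not Hadoop code; the function names and the toy corpus are made up for the example.

```python
from collections import Counter
from functools import reduce


def map_word_count(page: str) -> Counter:
    """Map: count the words of one document, independently of all others."""
    return Counter(page.lower().split())


def reduce_counts(left: Counter, right: Counter) -> Counter:
    """Reduce: combine two partial counts into one."""
    return left + right


# Made-up toy corpus; each "page" is one datum.
pages = ["the cat sat", "the dog sat", "the end"]

# Each map call touches only its own page, so these calls could run in parallel.
partial_counts = [map_word_count(page) for page in pages]

# The reduce step aggregates the independent map outputs.
total = reduce(reduce_counts, partial_counts, Counter())
```

No map call needs the result of another, which is exactly the property that breaks down once the data carries computational dependencies.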

5. Existing Frameworks: DAG Abstraction: Parallel computation is represented as a directed acyclic graph with data flowing along edges between vertices. Vertices correspond to functions which receive information on inbound edges and output results to outbound edges. Good: permits rich computational dependencies. Bad: does not naturally express iterative algorithms, and cannot express dynamically prioritized computation.

6. Existing Frameworks: Systolic: The Systolic abstraction extends the DAG framework to the iterative setting and forces the computation to be decomposed into small atomic components with limited communication between them. It uses a directed graph G = (V, E), which is not necessarily acyclic, where each vertex represents a processor and each edge represents a communication link. Good: can express iterative computation. Bad: unable to express the wide variety of update schedules used in ML algorithms (for example, it cannot implement coordinate descent, which requires the more sequential Gauss-Seidel schedule), and cannot express dynamic and specialized structured schedules.

7. GraphLab Abstraction 1. Data Model: The GraphLab data model consists of two parts: a directed data graph and a shared data table. Data graph: The data graph G = (V, E) encodes both the problem-specific sparse computational structure and directly modifiable program state. The user can associate arbitrary blocks of data (or parameters) with each vertex and directed edge in G. Shared Data Table (SDT): To support globally shared state, GraphLab provides a shared data table (SDT), which is an associative map, T[Key] → Value, between keys and arbitrary blocks of data.
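To make the two parts of the data model concrete, here is a minimal Python sketch. It is not the GraphLab API: the `DataGraph` class, its method names, and the example keys are all hypothetical, and the SDT is modeled as an ordinary dictionary.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set, Tuple


@dataclass
class DataGraph:
    """Directed data graph: arbitrary data on every vertex and directed edge."""
    vertex_data: Dict[str, Any] = field(default_factory=dict)
    edge_data: Dict[Tuple[str, str], Any] = field(default_factory=dict)

    def add_vertex(self, v: str, data: Any) -> None:
        self.vertex_data[v] = data

    def add_edge(self, src: str, dst: str, data: Any) -> None:
        # Both endpoints must already exist so every edge joins two vertices.
        if src not in self.vertex_data or dst not in self.vertex_data:
            raise KeyError("add both endpoints before adding the edge")
        self.edge_data[(src, dst)] = data

    def neighbors(self, v: str) -> Set[str]:
        """Vertices joined to v by an inbound or an outbound edge."""
        return ({d for s, d in self.edge_data if s == v}
                | {s for s, d in self.edge_data if d == v})


graph = DataGraph()
graph.add_vertex("a", {"belief": 0.5})
graph.add_vertex("b", {"belief": 0.1})
graph.add_edge("a", "b", {"weight": 2.0})

# Shared data table: an associative map T[Key] -> Value for global state.
sdt: Dict[str, Any] = {"learning_rate": 0.01}
```

The graph carries both the sparse structure (who depends on whom) and the mutable state, while the SDT holds the few values every vertex may need.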

8. GraphLab Abstraction 2. User Defined Computation: Computation in GraphLab can be performed either through an update function, which defines the local computation, or through the sync mechanism, which defines global aggregation. The update function is analogous to the Map in MapReduce, but unlike in MapReduce, update functions are permitted to access and modify overlapping contexts in the graph. The sync mechanism is analogous to the Reduce operation, but unlike in MapReduce, the sync mechanism runs concurrently with the update functions.

9. 2.1 Update Function: Definition: A GraphLab update function is a stateless user-defined function which operates on the data associated with small neighborhoods in the graph and represents the core element of computation. Details: For every vertex v, we define S_v as the neighborhood of v, which consists of v, its adjacent edges (both inbound and outbound), and its neighboring vertices. We define D_{S_v} as the data corresponding to the neighborhood S_v. In addition to D_{S_v}, update functions also have read-only access to the shared data table T. We define the application of the update function f to the vertex v as the state-mutating computation: D_{S_v} ← f(D_{S_v}, T).
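A small Python sketch may help fix the idea of an update function acting on a scope S_v with read-only access to T. Everything here is an assumption made for illustration: the `scope` helper, the `smooth_update` function, the `alpha` key, and the use of `MappingProxyType` to model read-only access are not part of GraphLab.

```python
from types import MappingProxyType


def scope(v, edge_data):
    """Return S_v as (adjacent edges, neighboring vertices) of v."""
    adjacent = [e for e in edge_data if v in e]          # inbound and outbound
    neighbors = {u for e in adjacent for u in e if u != v}
    return adjacent, neighbors


def smooth_update(v, vertex_data, edge_data, sdt):
    """Hypothetical update f: pull v toward the mean of its neighbors."""
    _, neighbors = scope(v, edge_data)
    if not neighbors:
        return
    alpha = sdt["alpha"]  # read-only global parameter taken from T
    mean = sum(vertex_data[u] for u in neighbors) / len(neighbors)
    # State mutation: only data inside the scope of v is touched.
    vertex_data[v] = (1 - alpha) * vertex_data[v] + alpha * mean


vertex_data = {0: 0.0, 1: 1.0, 2: 3.0}
edge_data = {(0, 1): None, (2, 0): None}   # directed edges 0->1 and 2->0
sdt = MappingProxyType({"alpha": 0.5})     # read-only view of the table

smooth_update(0, vertex_data, edge_data, sdt)
```

The function keeps no state between calls; all state lives in the graph, which is what lets a scheduler apply it to any vertex in any order.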

10. 2.2 Sync Mechanism: Definition: The sync mechanism aggregates data across all vertices in the graph. The result of the sync operation is associated with a particular entry in the Shared Data Table (SDT). Details: The user provides a key k, a fold function, an apply function, an initial value r_k^(0) for the SDT entry, and an optional merge function used to construct parallel tree reductions.
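The roles of fold, apply, and merge can be sketched as follows. This is a sequential toy, assuming that fold visits every vertex once, apply finalizes the accumulated value before it is stored under key k, and merge combines partial results from separately folded chunks; the `sync` function and its parameters are hypothetical names, not the GraphLab interface.

```python
from functools import reduce


def sync(vertex_data, fold, apply, initial, merge=None, num_chunks=2):
    """Aggregate over all vertex data and return the value to store in T[k]."""
    values = list(vertex_data.values())
    if merge is None:
        # Sequential fold: r <- fold(D_v, r) for every vertex v.
        acc = initial
        for d in values:
            acc = fold(d, acc)
    else:
        # Tree-style reduction: fold each chunk on its own, then merge.
        # Assumes `initial` is an identity element for `merge`.
        partials = []
        for c in range(num_chunks):
            part = initial
            for d in values[c::num_chunks]:
                part = fold(d, part)
            partials.append(part)
        acc = reduce(merge, partials)
    return apply(acc)


# Example: maintain the mean vertex value under the key "mean".
vertex_data = {0: 1.0, 1: 2.0, 2: 3.0, 3: 6.0}


def fold_mean(d, r):
    return (r[0] + d, r[1] + 1)          # running (sum, count)


def merge_mean(a, b):
    return (a[0] + b[0], a[1] + b[1])    # combine two partial (sum, count)


def apply_mean(r):
    return r[0] / r[1]                   # finalize: sum / count


sdt = {}
sdt["mean"] = sync(vertex_data, fold_mean, apply_mean, (0.0, 0), merge_mean)
```

In this sketch the chunks are folded one after another; a real implementation would fold them on different processors, which is the point of supplying merge.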

11. GraphLab Abstraction 3. Data Consistency: Since scopes may overlap, the simultaneous execution of two update functions can lead to race conditions, resulting in data inconsistency and even corruption. GraphLab provides a choice of three data consistency models which enable the user to balance performance and data consistency.

12. 3. Data Consistency: Three consistency models. The full consistency model ensures that during the execution of f(v) no other function will read or modify data within S_v. Therefore, parallel execution may only occur on vertices that do not share a common neighbor. The slightly weaker edge consistency model ensures that during the execution of f(v) no other function will read or modify any of the data on v or any of the edges adjacent to v. Under the edge consistency model, parallel execution may only occur on non-adjacent vertices. Finally, the weakest vertex consistency model only ensures that during the execution of f(v) no other function will be applied to v.
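The parallelism each model allows can be expressed as a predicate on pairs of vertices. The sketch below encodes one reading of the three rules on an undirected adjacency map; the function names and the path graph are assumptions for illustration. Under the full model it also treats adjacent vertices as conflicting, since each lies inside the other's scope.

```python
def closed_neighborhood(v, adj):
    """v together with its neighboring vertices."""
    return {v} | adj[v]


def can_run_concurrently(u, v, adj, model):
    """True if f(u) and f(v) may execute at the same time under `model`."""
    if u == v:
        return False                      # never two updates on one vertex
    if model == "vertex":
        return True                       # only the vertex itself is protected
    if model == "edge":
        return v not in adj[u]            # non-adjacent vertices only
    if model == "full":
        # Scopes must not overlap: not adjacent and no common neighbor.
        return closed_neighborhood(u, adj).isdisjoint(closed_neighborhood(v, adj))
    raise ValueError(f"unknown consistency model: {model}")


# Path graph 0 - 1 - 2 - 3, stored as an undirected adjacency map.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}

adjacent_pair = [can_run_concurrently(0, 1, adj, m) for m in ("vertex", "edge", "full")]
shared_neighbor = [can_run_concurrently(0, 2, adj, m) for m in ("vertex", "edge", "full")]
far_apart = [can_run_concurrently(0, 3, adj, m) for m in ("vertex", "edge", "full")]
```

Moving from vertex to edge to full consistency shrinks the set of vertex pairs that may run together, which is the performance side of the trade-off.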

13. 3. Data Consistency: Definition 3.1 (Sequential Consistency). A GraphLab program is sequentially consistent if for every parallel execution, there exists a sequential execution of update functions that produces an equivalent result. Proposition 3.1. GraphLab guarantees sequential consistency under the following three conditions: 1. The full consistency model is used. 2. The edge consistency model is used and update functions do not modify data in adjacent vertices. 3. The vertex consistency model is used and update functions only access local vertex data.

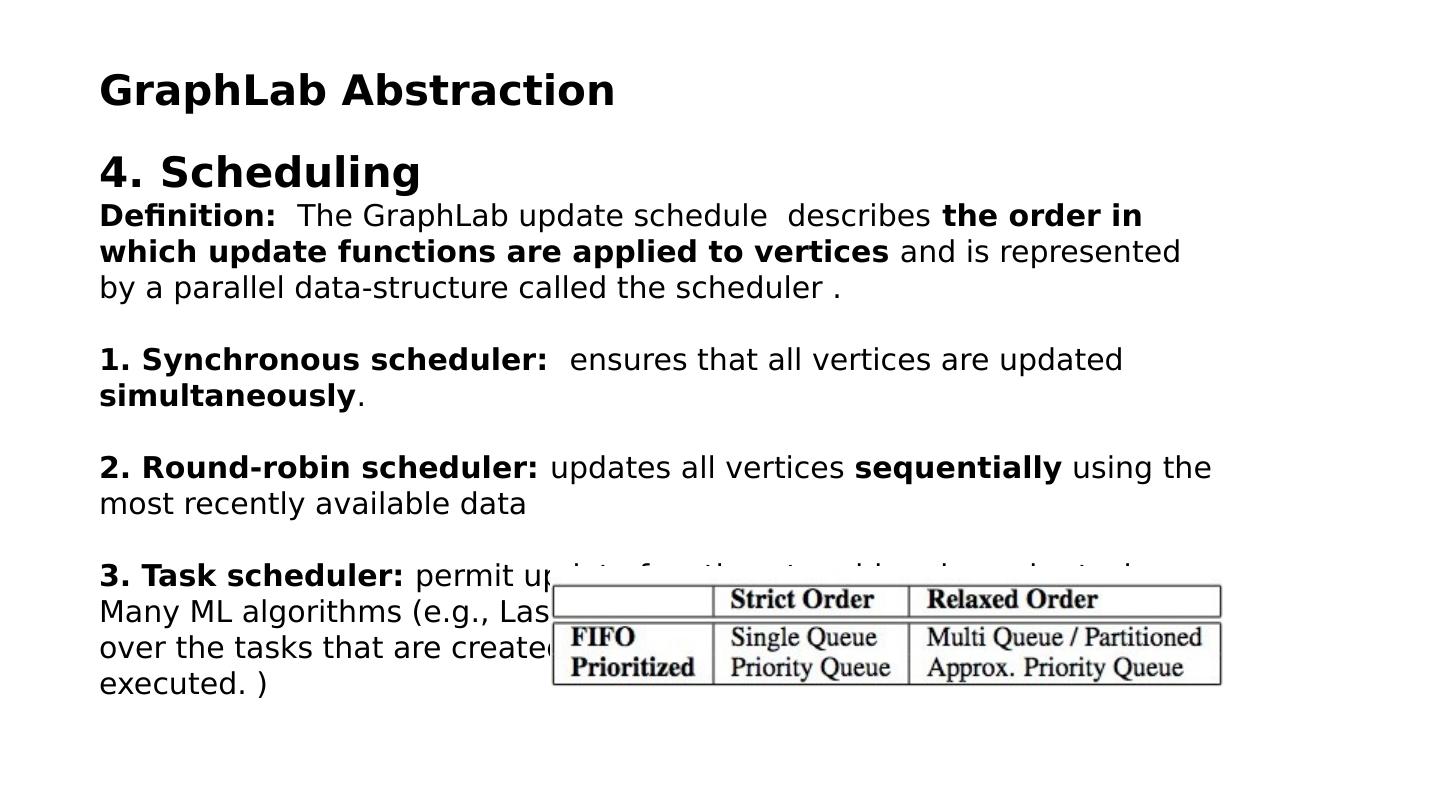

14. GraphLab Abstraction 4. Scheduling: Definition: The GraphLab update schedule describes the order in which update functions are applied to vertices and is represented by a parallel data structure called the scheduler. 1. Synchronous scheduler: ensures that all vertices are updated simultaneously. 2. Round-robin scheduler: updates all vertices sequentially using the most recently available data. 3. Task schedulers: permit update functions to add and reorder tasks, since many ML algorithms (e.g., Lasso, CoEM, Residual BP) require more control over the tasks that are created and the order in which they are executed. 4. Splash scheduler: executes tasks along spanning trees.
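The difference between the synchronous and the round-robin scheduler is the same as the difference between a Jacobi and a Gauss-Seidel sweep. The toy below shows it on a three-vertex path with a hypothetical averaging update; none of the names come from GraphLab, and both sweeps run sequentially here.

```python
def new_value(v, values, adj):
    """Hypothetical update: the average of the neighbors' current values."""
    return sum(values[u] for u in adj[v]) / len(adj[v])


def synchronous_sweep(values, adj):
    """All vertices are updated 'simultaneously' from the same old snapshot."""
    old = dict(values)
    return {v: new_value(v, old, adj) for v in values}


def round_robin_sweep(values, adj):
    """Vertices are updated one by one, each seeing the latest values."""
    current = dict(values)
    for v in sorted(current):
        current[v] = new_value(v, current, adj)
    return current


# Path graph 0 - 1 - 2 with made-up starting values.
adj = {0: [1], 1: [0, 2], 2: [1]}
start = {0: 0.0, 1: 0.0, 2: 4.0}

sync_result = synchronous_sweep(start, adj)
rr_result = round_robin_sweep(start, adj)
```

Vertex 2 ends at a different value in the two sweeps because the round-robin sweep lets it read the value vertex 1 just received, while the synchronous sweep still uses the old one.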

15. GraphLab Abstraction 4.1 Set Scheduler: GraphLab provides a scheduler construction framework called the set scheduler, which enables users to safely and easily compose custom update schedules. To use the set scheduler, the user specifies a sequence of vertex set and update function pairs ((S1, f1), (S2, f2), ..., (Sk, fk)), where Si ⊆ V and fi is an update function. This sequence implies the following execution semantics: for i = 1, ..., k, execute fi on all vertices in Si in parallel, then wait for all updates to complete. The amount of parallelism depends on the size of each set; the procedure is highly sequential if the set sizes are small.
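The execution semantics of a set schedule (run one set in parallel, wait for it to finish, then move to the next set) can be sketched with a thread pool. This is an assumption-laden toy: `run_set_schedule`, `double`, and `add_one` are hypothetical, and the example updates write only to their own vertex so that no consistency model is needed.

```python
from concurrent.futures import ThreadPoolExecutor


def run_set_schedule(schedule, vertex_data, max_workers=4):
    """Run (vertex set, update function) pairs one set at a time."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for vertex_set, update in schedule:
            # Apply `update` to every vertex of the set in parallel.
            # list() consumes all results, so it acts as the barrier:
            # the next set starts only after this one has finished.
            list(pool.map(lambda v: update(v, vertex_data), sorted(vertex_set)))


def double(v, data):
    data[v] = data[v] * 2


def add_one(v, data):
    data[v] = data[v] + 1


vertex_data = {0: 1, 1: 2, 2: 3}
schedule = [({0, 1}, double), ({1, 2}, add_one)]
run_set_schedule(schedule, vertex_data)
```

With sets of size two, at most two workers are ever busy, which illustrates why small sets make the procedure nearly sequential.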

16. GraphLab Abstraction 4.1 Set Scheduler: Executing the schedule in the manner described above can lead to the majority of the processors waiting for a few processors to complete the current set. However, by leveraging the causal data dependencies encoded in the graph structure, we are able to construct an execution plan which identifies tasks in future sets that can be executed early while still producing an equivalent result. The set scheduler compiles an execution plan by rewriting the execution sequence as a Directed Acyclic Graph (DAG), where each vertex in the DAG represents an update task in the execution sequence and edges represent execution dependencies.
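One way to picture the execution plan is the sketch below. It assumes a simple conflict rule that is not stated on the slide: a task depends on every earlier task on the same vertex or on an adjacent vertex. The function names and the rule itself are hypothetical; the actual GraphLab planner may decide dependencies differently.

```python
def compile_plan(vertex_sets, adj):
    """Build the DAG: map each task (set index, vertex) to the tasks it waits on."""
    tasks = [(i, v) for i, s in enumerate(vertex_sets) for v in sorted(s)]
    deps = {task: set() for task in tasks}
    for i, v in tasks:
        for j, u in tasks:
            # Assumed conflict rule: same vertex or adjacent vertex, earlier set.
            if j < i and (u == v or u in adj[v]):
                deps[(i, v)].add((j, u))
    return deps


def earliest_wave(deps):
    """Earliest parallel wave in which each task can run."""
    wave = {}
    # Sorting by set index guarantees dependencies are resolved first.
    for task in sorted(deps):
        wave[task] = 1 + max((wave[d] for d in deps[task]), default=-1)
    return wave


# Path graph 0 - 1 - 2 - 3 and a schedule with two sets.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
vertex_sets = [{0}, {1, 3}]

deps = compile_plan(vertex_sets, adj)
wave = earliest_wave(deps)
```

Here the task on vertex 3 belongs to the second set but has no dependency on the first, so it can run in the first wave instead of waiting at the barrier.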

17. GraphLab Abstraction 5. Termination Assessment: Determines when to stop. Two approaches: The first method relies on the scheduler, which signals termination when there are no remaining tasks. The second method relies on user-provided termination functions, which examine the SDT and signal when the algorithm has converged.

18. GraphLab Abstraction 6. Summary: A GraphLab program is composed of the following parts: 1. A data graph which represents the data and computational dependencies. 2. Update functions which describe local computation. 3. A sync mechanism for aggregating global state. 4. A data consistency model (i.e., Fully Consistent, Edge Consistent, or Vertex Consistent), which determines the extent to which computation can overlap. 5. Scheduling primitives which express the order of computation and may depend dynamically on the data.

19. Case Study CO-EM: To illustrate how GraphLab scales in settings with large structured models, we designed and implemented a parallel version of Co-EM, a semi-supervised learning algorithm for named entity recognition (NER). Given a list of noun phrases (NP) (e.g., “big apple”), contexts (CT) (e.g., “citizen of”), and co-occurrence counts for each NP-CT pair in a training corpus, CoEM tries to estimate the probability (belief) that each entity (NP or CT) belongs to a particular class (e.g., “country” or “person”).

20. Case Study CO-EM: Data Model: 1. Data graph: a bipartite graph with each NP and CT represented as a vertex, connected by edges with weights corresponding to the co-occurrence counts. Each vertex stores the current estimate of the belief for the corresponding entity. 2. Shared data table: not used. Computation: Update function: recomputes the local belief by taking a weighted average of the adjacent vertex beliefs. Scheduling: the Partitioned scheduler and the MultiQueue FIFO scheduler, evaluated on both the small and the large datasets.
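The CoEM update described here, a weighted average of adjacent beliefs with co-occurrence counts as weights, can be sketched as below. The code simplifies the belief to a single probability for one class, and the function name, the tiny graph, and all counts and beliefs are made up for illustration.

```python
def coem_update(v, belief, adj, count):
    """Set belief[v] to the count-weighted average of its neighbors' beliefs."""
    total = sum(count[frozenset((v, u))] for u in adj[v])
    if total == 0:
        return  # isolated vertex: nothing to average
    weighted = sum(count[frozenset((v, u))] * belief[u] for u in adj[v])
    belief[v] = weighted / total


# Bipartite toy graph: two noun phrases, one context (made-up data).
adj = {
    "big apple": ["lives in"],
    "new york": ["lives in"],
    "lives in": ["big apple", "new york"],
}
# Edge weights: co-occurrence counts for each NP-CT pair.
count = {
    frozenset(("big apple", "lives in")): 1,
    frozenset(("new york", "lives in")): 3,
}
# Current belief that each entity belongs to one class of interest.
belief = {"big apple": 0.5, "new york": 1.0, "lives in": 0.0}

coem_update("lives in", belief, adj, count)
```

The update reads neighbor beliefs and edge weights and writes only the vertex's own belief, so it touches nothing outside the scope of that vertex.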

21. Case Study CO-EM: Result: With 16 parallel processors, GraphLab could complete three full round-robin iterations on the large dataset in less than 30 minutes. As a comparison, a comparable Hadoop implementation took approximately 7.5 hours to complete the exact same task, executing on an average of 95 CPUs. Analysis: Our large performance gain can be attributed to data persistence in the GraphLab framework. Data persistence allows us to avoid the extensive data copying and synchronization required by the Hadoop implementation of MapReduce.

22. Conclusions and Future Work: Unlike existing parallel abstractions, GraphLab supports the representation of 1. structured data dependencies, 2. iterative computation, and 3. flexible scheduling. We developed an optimized shared-memory implementation of GraphLab and demonstrated its performance and flexibility through a series of case studies. Our ongoing research includes extending the GraphLab framework to the distributed setting, allowing for computation on even larger datasets.