Unsupervised Learning: Deep Auto-encoder

1. Unsupervised Learning: Deep Auto-encoder

2. Unsupervised Learning

"We expect unsupervised learning to become far more important in the longer term. Human and animal learning is largely unsupervised: we discover the structure of the world by observing it, not by being told the name of every object." (LeCun, Bengio, Hinton, Nature 2015)

"As I've said in previous statements: most of human and animal learning is unsupervised learning. If intelligence was a cake, unsupervised learning would be the cake, supervised learning would be the icing on the cake, and reinforcement learning would be the cherry on the cake. We know how to make the icing and the cherry, but we don't know how to make the cake." (Yann LeCun, March 14, 2016, Facebook)

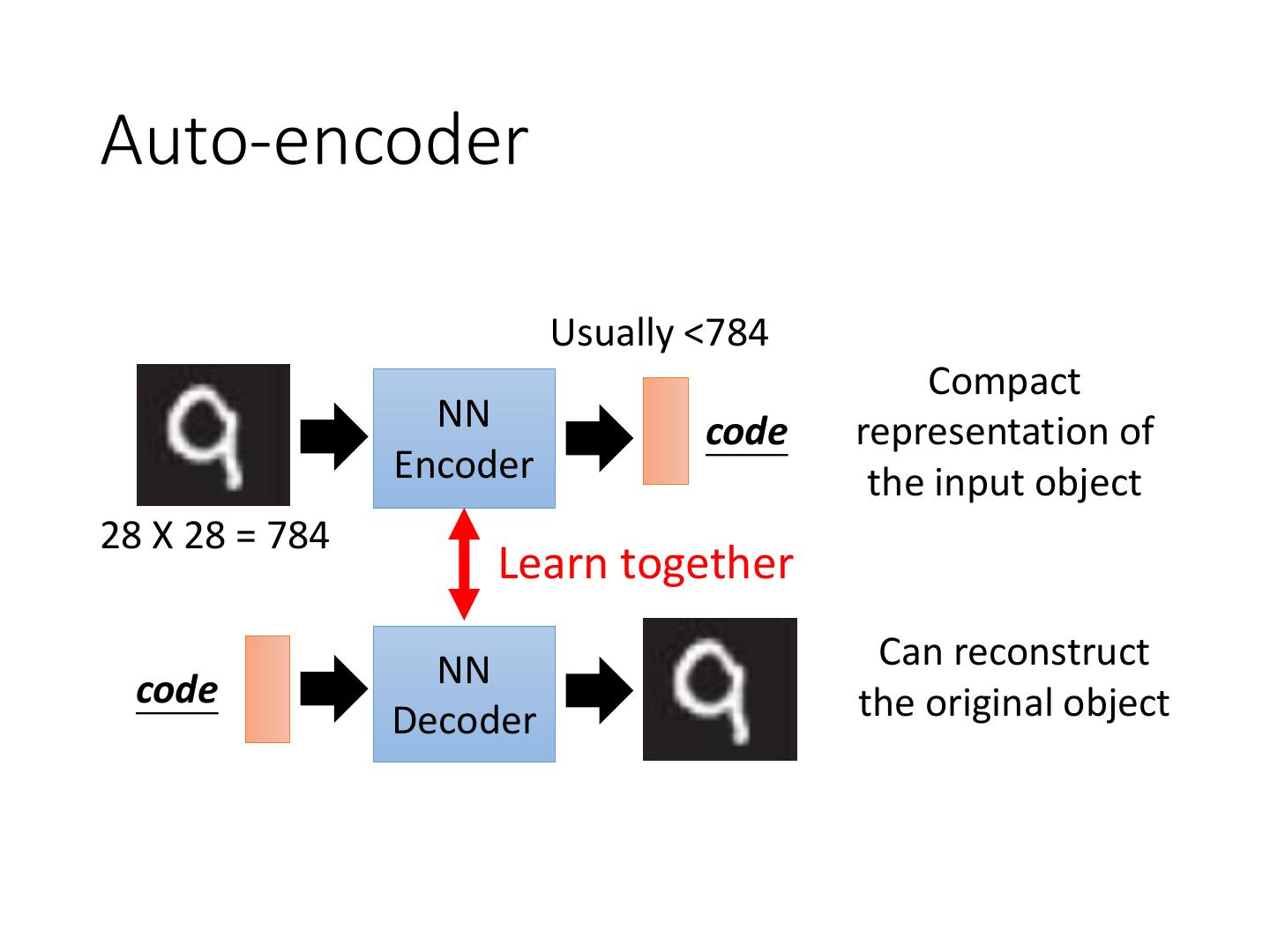

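3. Auto-encoder

An NN encoder maps the input object (for example, a 28 x 28 = 784-pixel image) to a code: a compact representation of the input object, usually with fewer than 784 dimensions. An NN decoder can reconstruct the original object from the code. The encoder and the decoder are learned together.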

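4. Recap: PCA

Minimize $\lVert x - \hat{x} \rVert^2$, so that $\hat{x}$ is as close as possible to $x$.

- Encode: $x \rightarrow c$ through $W$ (input layer to hidden layer).
- Decode: $c \rightarrow \hat{x}$ through $W^T$ (hidden layer to output layer).

The hidden layer is linear and acts as the bottleneck layer. The output of the hidden layer is the code.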
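The PCA recap can be tried on toy data. The following is a minimal NumPy sketch, not the lecture's own code: the function names, the toy data, and the explicit mean subtraction are assumptions added for illustration. It encodes with $W$, decodes with $W^T$, and measures the squared reconstruction error.

```python
import numpy as np

def pca_autoencoder(X, code_dim):
    """Return (mean, W); the rows of W are the top principal directions."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:code_dim]

def encode(x, mean, W):
    return W @ (x - mean)        # c = W (x - mean)

def decode(c, mean, W):
    return W.T @ c + mean        # x_hat = W^T c + mean

rng = np.random.default_rng(0)
# Toy data (assumption): 200 points in 5-D lying close to a 2-D subspace.
basis = rng.normal(size=(2, 5))
X = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 5))

mean, W = pca_autoencoder(X, code_dim=2)
x = X[0]
c = encode(x, mean, W)           # the code: output of the linear hidden layer
x_hat = decode(c, mean, W)
reconstruction_error = float(np.sum((x - x_hat) ** 2))
```

Because the toy data is almost 2-dimensional, a 2-dimensional code reconstructs it with a very small error; on real images the error depends on how much variance the kept directions capture.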

5. Deep Auto-encoder

- Of course, the auto-encoder can be deep.
- Structure: input layer $x$ → layer → layer → … → bottleneck layer (code) → … → layer → layer → output layer $\hat{x}$, with $\hat{x}$ as close as possible to $x$.
- The encoder weights are $W_1, W_2, \dots$ and the decoder weights are $\dots, W_2^T, W_1^T$. This symmetry is not necessary.
- Initialize by RBM, layer by layer.

Reference: Hinton, Geoffrey E., and Ruslan R. Salakhutdinov. "Reducing the dimensionality of data with neural networks." Science 313.5786 (2006): 504-507.

6. Deep Auto-encoder

Reconstructing the original image (784 pixels):

- PCA: 784 → 30 → 784
- Deep auto-encoder: 784 → 1000 → 500 → 250 → 30 → 250 → 500 → 1000 → 784
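To make the layer sizes on this slide concrete, here is a rough forward-pass sketch in NumPy. The sigmoid activation, the random initialization, and all function names are assumptions; the network is untrained, so the reconstruction is meaningless and only the shapes are of interest.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_layers(sizes, rng):
    """One (weights, bias) pair per consecutive pair of layer sizes."""
    return [(rng.normal(scale=0.01, size=(n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(x, layers):
    a = x
    for W, b in layers:
        a = sigmoid(W @ a + b)
    return a

rng = np.random.default_rng(0)
encoder_sizes = [784, 1000, 500, 250, 30]   # sizes taken from the slide
decoder_sizes = encoder_sizes[::-1]         # mirrored sizes; weights are not tied here
encoder = init_layers(encoder_sizes, rng)
decoder = init_layers(decoder_sizes, rng)

x = rng.random(784)                         # stand-in for a flattened 28 x 28 image
code = forward(x, encoder)                  # 30-dimensional code
x_hat = forward(code, decoder)              # 784-dimensional reconstruction
loss = float(np.mean((x - x_hat) ** 2))     # the quantity training would minimize
```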

7. Layer sizes with a 2-dimensional code:

- 784 → 2 → 784
- 784 → 1000 → 500 → 250 → 2 → 250 → 500 → 1000 → 784

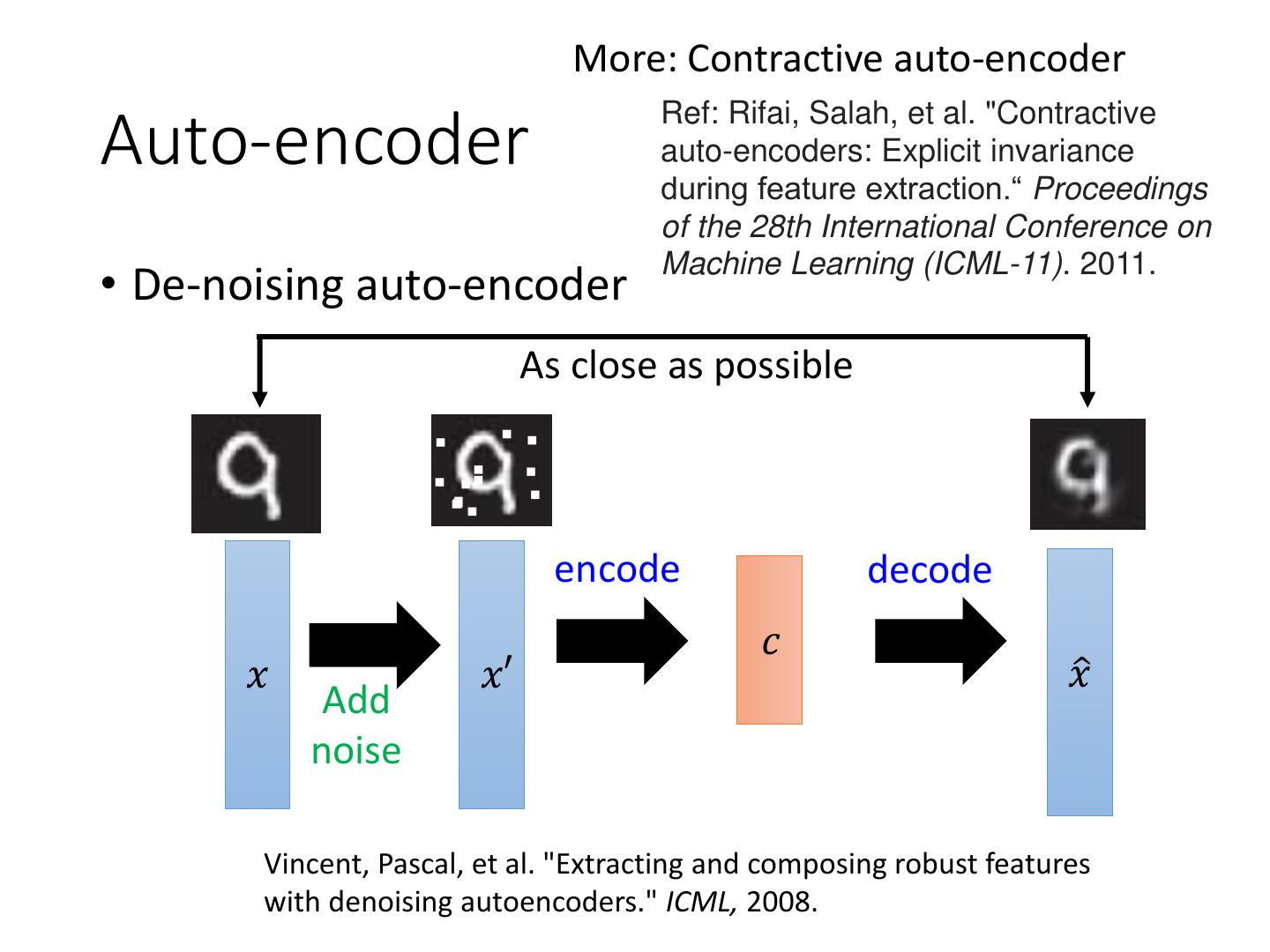

8. More

- De-noising auto-encoder: add noise to the input $x$ to get $x'$, encode $x'$ into the code $c$, and decode $c$ into $\hat{x}$. The output $\hat{x}$ should be as close as possible to the original $x$, not to $x'$. Ref: Vincent, Pascal, et al. "Extracting and composing robust features with denoising autoencoders." ICML, 2008.
- Contractive auto-encoder. Ref: Rifai, Salah, et al. "Contractive auto-encoders: Explicit invariance during feature extraction." Proceedings of the 28th International Conference on Machine Learning (ICML-11). 2011.
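The de-noising objective differs from the plain auto-encoder only in what is fed to the encoder. A small sketch under stated assumptions: masking noise is one possible corruption, and `add_noise`, `denoising_loss`, and the identity "network" are illustrative names, not part of the lecture.

```python
import numpy as np

def add_noise(x, rng, drop_prob=0.3):
    """Masking noise (an assumed choice): zero each component with probability drop_prob."""
    keep = rng.random(x.shape) >= drop_prob
    return x * keep

def denoising_loss(x, encode, decode, rng, drop_prob=0.3):
    """Squared error between the clean x and the reconstruction of the noisy x'."""
    x_noisy = add_noise(x, rng, drop_prob)     # x'
    x_hat = decode(encode(x_noisy))            # x' -> c -> x_hat
    return float(np.sum((x - x_hat) ** 2))     # the target is x, not x'

# Toy usage with an identity "network": the loss then counts the dropped inputs.
identity = lambda v: v
x = np.ones(10)
loss = denoising_loss(x, identity, identity, np.random.default_rng(0))
```

With a real encoder and decoder, minimizing this loss forces the code to carry enough information to undo the corruption.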

9. Deep Auto-encoder: Example

Labels in the figure: NN encoder producing the code $c$; PCA, reduced to 32-dim; pixel → t-SNE.

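10. Auto-encoder: Text Retrieval

Vector space model: the query and every document are represented as vectors.

Bag-of-word: a word string such as "This is an apple" becomes a vector with one entry per vocabulary word: this 1, is 1, a 0, an 1, apple 1, pen 0, …

Semantics are not considered.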
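The bag-of-word example on this slide can be reproduced in a few lines. This sketch assumes whitespace tokenization, lowercasing, and a fixed six-word vocabulary; `bag_of_words` is an illustrative name.

```python
def bag_of_words(text, vocabulary):
    """1 if the vocabulary word occurs in the text, else 0 (binary bag-of-word)."""
    tokens = text.lower().split()
    return [1 if word in tokens else 0 for word in vocabulary]

vocabulary = ["this", "is", "a", "an", "apple", "pen"]
query_vector = bag_of_words("This is an apple", vocabulary)
# query_vector is [1, 1, 0, 1, 1, 0], matching the slide
```

Every word is an independent dimension, so "apple" and "pen" are as far apart as any other two words; this is the sense in which semantics are not considered.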

11. Auto-encoder: Text Retrieval

- The bag-of-word vector (document or query) goes through an encoder with layer sizes 2000 → 500 → 250 → 125 → 2.
- Documents talking about the same thing will have close codes.
- LSA: project documents to 2 latent topics.

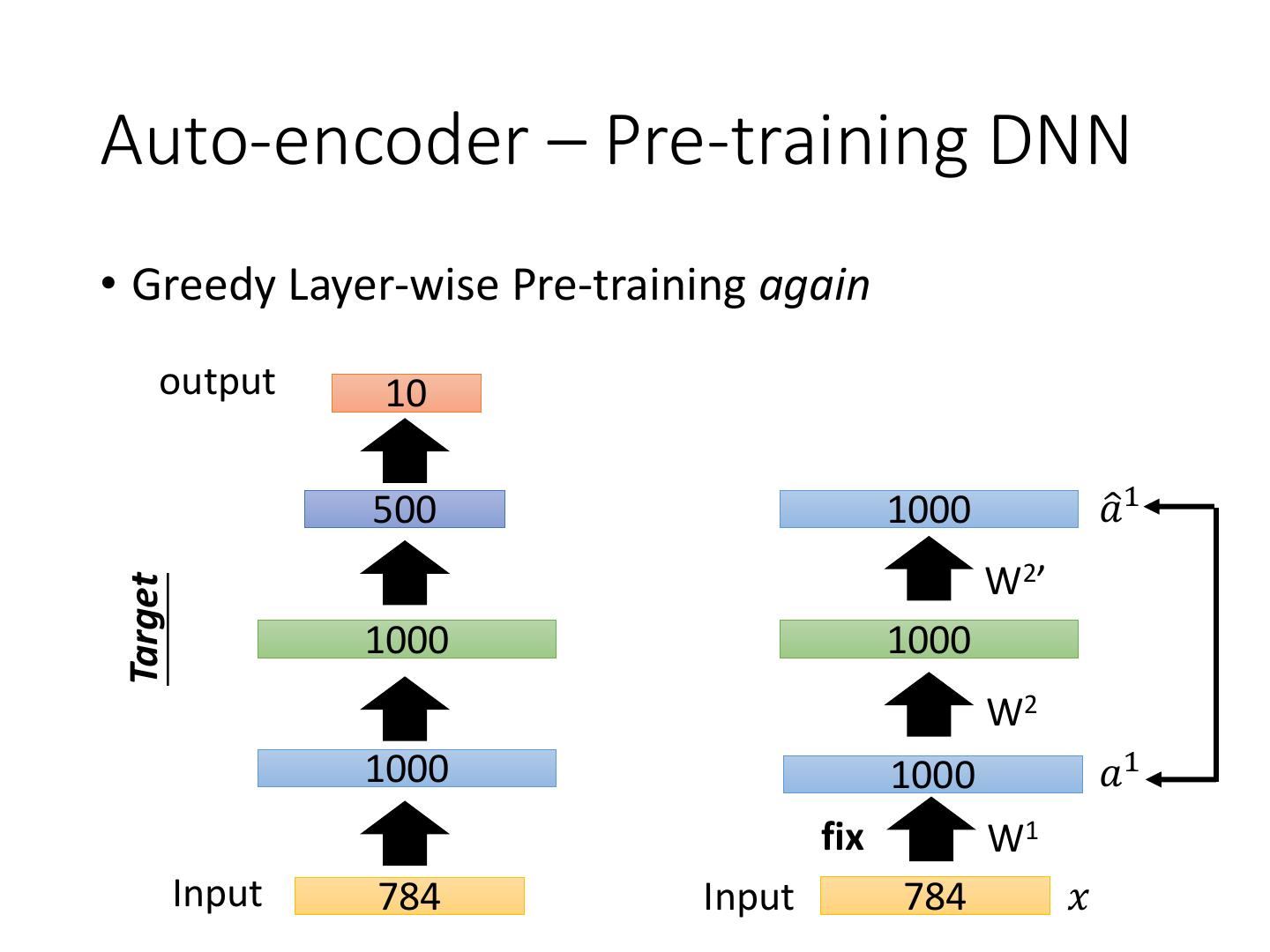

12. Auto-encoder: Similar Image Search

Retrieved using Euclidean distance in pixel intensity space. (Images from Hinton's slides on Coursera)

Reference: Krizhevsky, Alex, and Geoffrey E. Hinton. "Using very deep autoencoders for content-based image retrieval." ESANN. 2011.

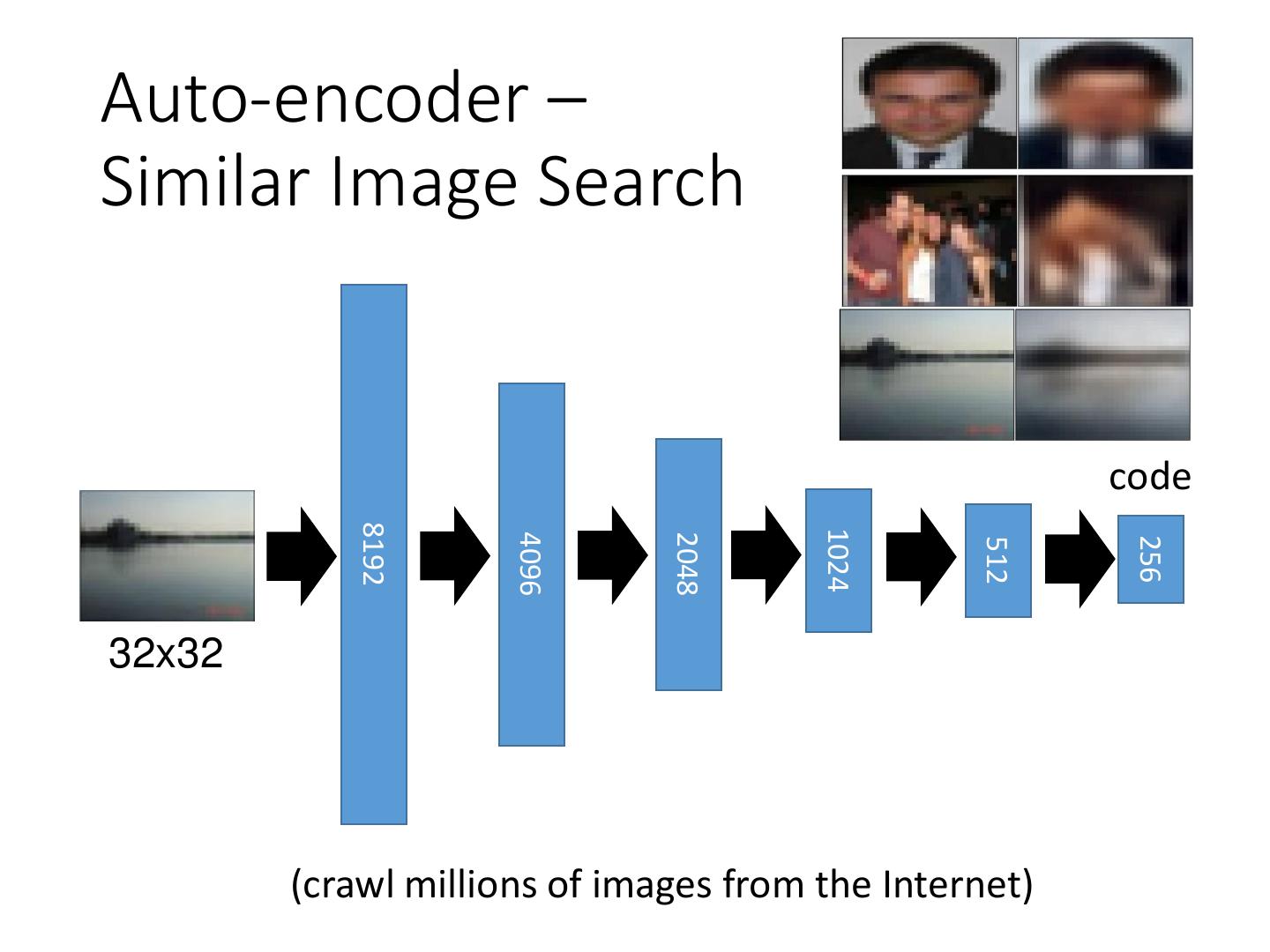

13. Auto-encoder: Similar Image Search

Encoder layer sizes: 32x32 → 8192 → 4096 → 2048 → 1024 → 512 → 256 (code).

(Crawl millions of images from the Internet.)

14. Two retrieval results are compared:

- Retrieved using Euclidean distance in pixel intensity space
- Retrieved using the 256-dimensional codes
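Both retrieval variants use the same nearest-neighbour search; only the vectors differ (raw pixel intensities or encoder codes). A minimal sketch, with `nearest_neighbors` and the toy vectors as illustrative assumptions:

```python
import numpy as np

def nearest_neighbors(query, database, k=3):
    """Indices of the k rows of `database` closest to `query` in Euclidean distance."""
    distances = np.linalg.norm(database - query, axis=1)
    return np.argsort(distances)[:k]

# Toy vectors (assumption): each row stands for one image, as pixels or as a code.
database = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
query = np.array([0.9, 0.0])
top2 = nearest_neighbors(query, database, k=2)
```

To search in code space, the same function would be called on the encoder outputs of the query and of the database images instead of on their pixels.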

15. Auto-encoder for CNN

Convolution → Pooling → Convolution → Pooling → code → Deconvolution → Unpooling → Deconvolution → Unpooling → Deconvolution

The output should be as close as possible to the input.

16. CNN: Unpooling

- Unpooling enlarges a feature map, for example from 14 x 14 to 28 x 28.
- Alternative: simply repeat the values.

Source of image: https://leonardoaraujosantos.gitbooks.io/artificial-inteligence/content/image_segmentation.html
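The "simply repeat the values" alternative is easy to express with `np.repeat`. This is a sketch of that alternative only; `unpool_repeat` is an illustrative name and the input values are arbitrary.

```python
import numpy as np

def unpool_repeat(feature_map, factor=2):
    """Enlarge a 2-D feature map by repeating every value factor x factor times."""
    rows_repeated = np.repeat(feature_map, factor, axis=0)
    return np.repeat(rows_repeated, factor, axis=1)

small = np.arange(14 * 14, dtype=float).reshape(14, 14)
large = unpool_repeat(small)     # 14 x 14 -> 28 x 28
```

Each value of the small map fills one 2 x 2 block of the large map, so no record of the max locations from the pooling step is needed.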

17. CNN: Deconvolution

Actually, deconvolution is convolution.
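The claim can be checked numerically in one dimension. The sketch below assumes stride 1 and uses illustrative function names: it computes a deconvolution by letting each input value add a scaled copy of the kernel to the output, then computes a sliding-window convolution over the zero-padded input with the reversed kernel, and the two results agree.

```python
import numpy as np

def deconv1d(x, kernel):
    """Deconvolution (stride 1): each input value adds a scaled kernel copy to the output."""
    out = np.zeros(len(x) + len(kernel) - 1)
    for i, value in enumerate(x):
        out[i:i + len(kernel)] += value * kernel
    return out

def conv1d_on_padded(x, kernel):
    """Sliding-window convolution over the zero-padded input, with the kernel reversed."""
    pad = len(kernel) - 1
    padded = np.pad(x, pad)              # zeros on both sides
    flipped = kernel[::-1]
    n_windows = len(padded) - len(kernel) + 1
    return np.array([padded[i:i + len(kernel)] @ flipped for i in range(n_windows)])

x = np.array([1.0, 2.0, 3.0])
kernel = np.array([1.0, 0.0, -1.0])
same = np.allclose(deconv1d(x, kernel), conv1d_on_padded(x, kernel))
```

So a deconvolution layer can be implemented as an ordinary convolution layer applied to a padded input.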

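18. Auto-encoder: Pre-training DNN

- Greedy layer-wise pre-training again
- Target network: input 784 → 1000 → 1000 → 500 → output 10
- First layer: train an auto-encoder 784 → 1000 → 784 with weights $W_1$ and $W_1'$, so that the input $x$ is reconstructed as $\hat{x}$.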

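19. Auto-encoder: Pre-training DNN

- Greedy layer-wise pre-training again
- Target network: input 784 → 1000 → 1000 → 500 → output 10
- Second layer: fix $W_1$ and compute the 1000-dim activation $a^1$ from $x$. Train an auto-encoder 1000 → 1000 → 1000 with weights $W_2$ and $W_2'$, so that $a^1$ is reconstructed as $\hat{a}^1$.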

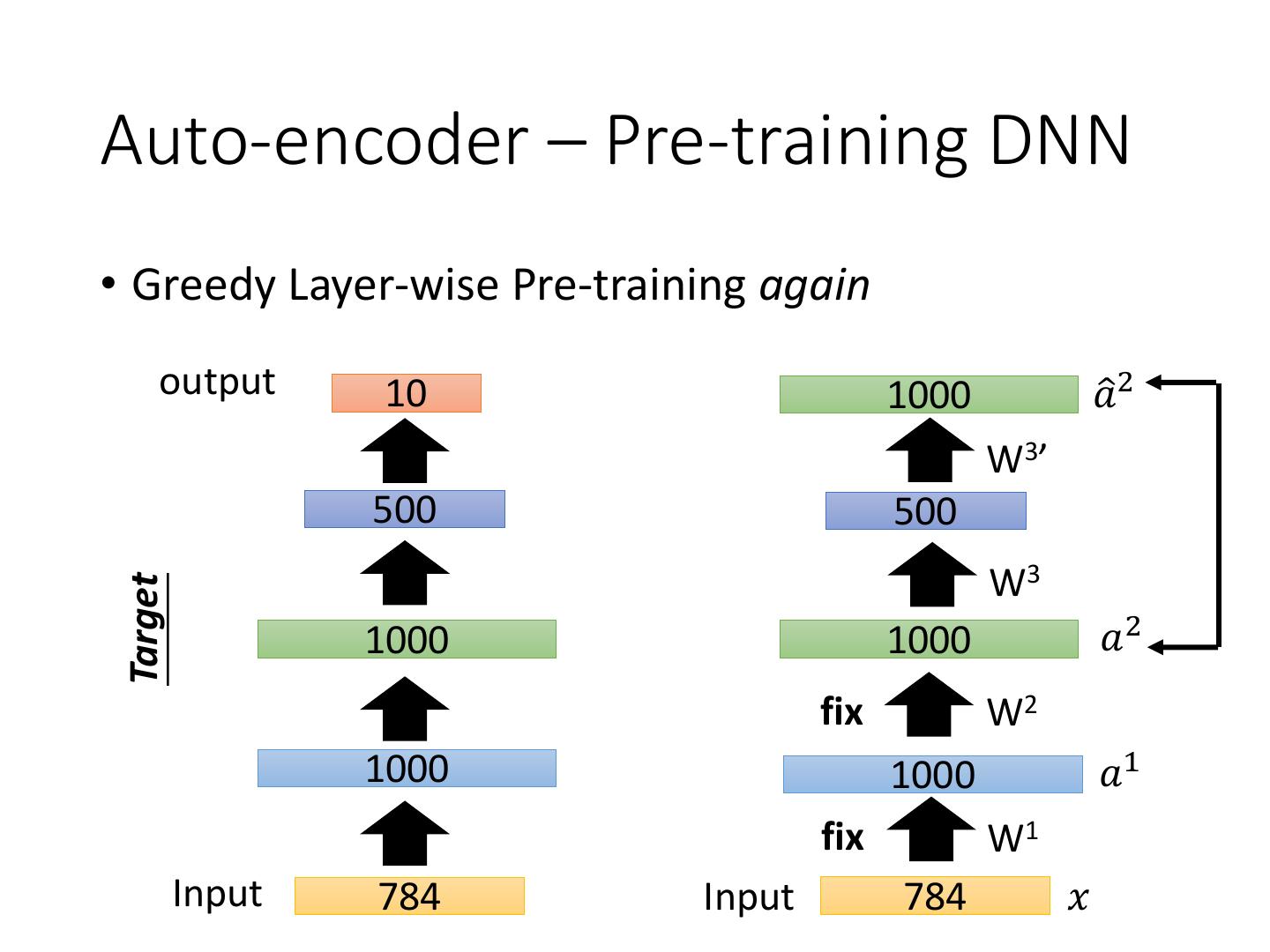

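20. Auto-encoder: Pre-training DNN

- Greedy layer-wise pre-training again
- Target network: input 784 → 1000 → 1000 → 500 → output 10
- Third layer: fix $W_1$ and $W_2$ and compute the 1000-dim activation $a^2$. Train an auto-encoder 1000 → 500 → 1000 with weights $W_3$ and $W_3'$, so that $a^2$ is reconstructed as $\hat{a}^2$.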

21. Auto-encoder: Pre-training DNN

- Greedy layer-wise pre-training again
- Target network: input 784 → 1000 → 1000 → 500 → output 10
- $W_1$, $W_2$, and $W_3$ come from pre-training; $W_4$ (500 → output 10) is randomly initialized.
- Fine-tune the whole network by backpropagation.
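The greedy layer-wise procedure on these slides can be sketched as a loop that trains one small auto-encoder per layer and then freezes it. Everything concrete here is an assumption made to keep the example small and fast: the tanh hidden units, the linear decoder, plain gradient descent, the learning rate, and the toy sizes (20, 16, 16, 8) standing in for 784, 1000, 1000, 500. Fine-tuning by backpropagation is not shown.

```python
import numpy as np

def train_autoencoder(A, hidden_dim, rng, epochs=300, lr=0.01):
    """Train input -> hidden -> input on the rows of A; return encoder weights and losses."""
    n, d = A.shape
    W = rng.normal(scale=0.1, size=(d, hidden_dim))       # encoder weights (W_k)
    W_dec = rng.normal(scale=0.1, size=(hidden_dim, d))   # decoder weights (W_k')
    losses = []
    for _ in range(epochs):
        H = np.tanh(A @ W)                  # hidden activations
        err = H @ W_dec - A                 # reconstruction minus target
        losses.append(0.5 * float(np.sum(err ** 2)) / n)
        grad_dec = H.T @ err / n
        grad_W = A.T @ ((err @ W_dec.T) * (1.0 - H ** 2)) / n
        W_dec -= lr * grad_dec
        W -= lr * grad_W
    return W, losses

def greedy_pretrain(X, hidden_dims, rng):
    """Pre-train one layer at a time; each trained layer is fixed before the next."""
    weights, A = [], X
    for hidden_dim in hidden_dims:
        W, _ = train_autoencoder(A, hidden_dim, rng)
        weights.append(W)
        A = np.tanh(A @ W)                  # fixed layer output feeds the next auto-encoder
    return weights

rng = np.random.default_rng(0)
X = rng.random((64, 20))                            # toy stand-in for 784-dim inputs
W1, W2, W3 = greedy_pretrain(X, [16, 16, 8], rng)   # stand-ins for 1000, 1000, 500
W4 = rng.normal(scale=0.1, size=(8, 10))            # output layer: random init
_, first_layer_losses = train_autoencoder(X, 16, rng)
```

After this, `W1` to `W4` would serve as the initial weights of the full network, which is then fine-tuned on labelled data.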

22. Learning More: Restricted Boltzmann Machine

- Neural networks [5.1] : Restricted Boltzmann machine - definition: https://www.youtube.com/watch?v=p4Vh_zMw-HQ&index=36&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH
- Neural networks [5.2] : Restricted Boltzmann machine - inference: https://www.youtube.com/watch?v=lekCh_i32iE&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH&index=37
- Neural networks [5.3] : Restricted Boltzmann machine - free energy: https://www.youtube.com/watch?v=e0Ts_7Y6hZU&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH&index=38

23. Learning More: Deep Belief Network

- Neural networks [7.7] : Deep learning - deep belief network: https://www.youtube.com/watch?v=vkb6AWYXZ5I&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH&index=57
- Neural networks [7.8] : Deep learning - variational bound: https://www.youtube.com/watch?v=pStDscJh2Wo&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH&index=58
- Neural networks [7.9] : Deep learning - DBN pre-training: https://www.youtube.com/watch?v=35MUlYCColk&list=PL6Xpj9I5qXYEcOhn7TqghAJ6NAPrNmUBH&index=59

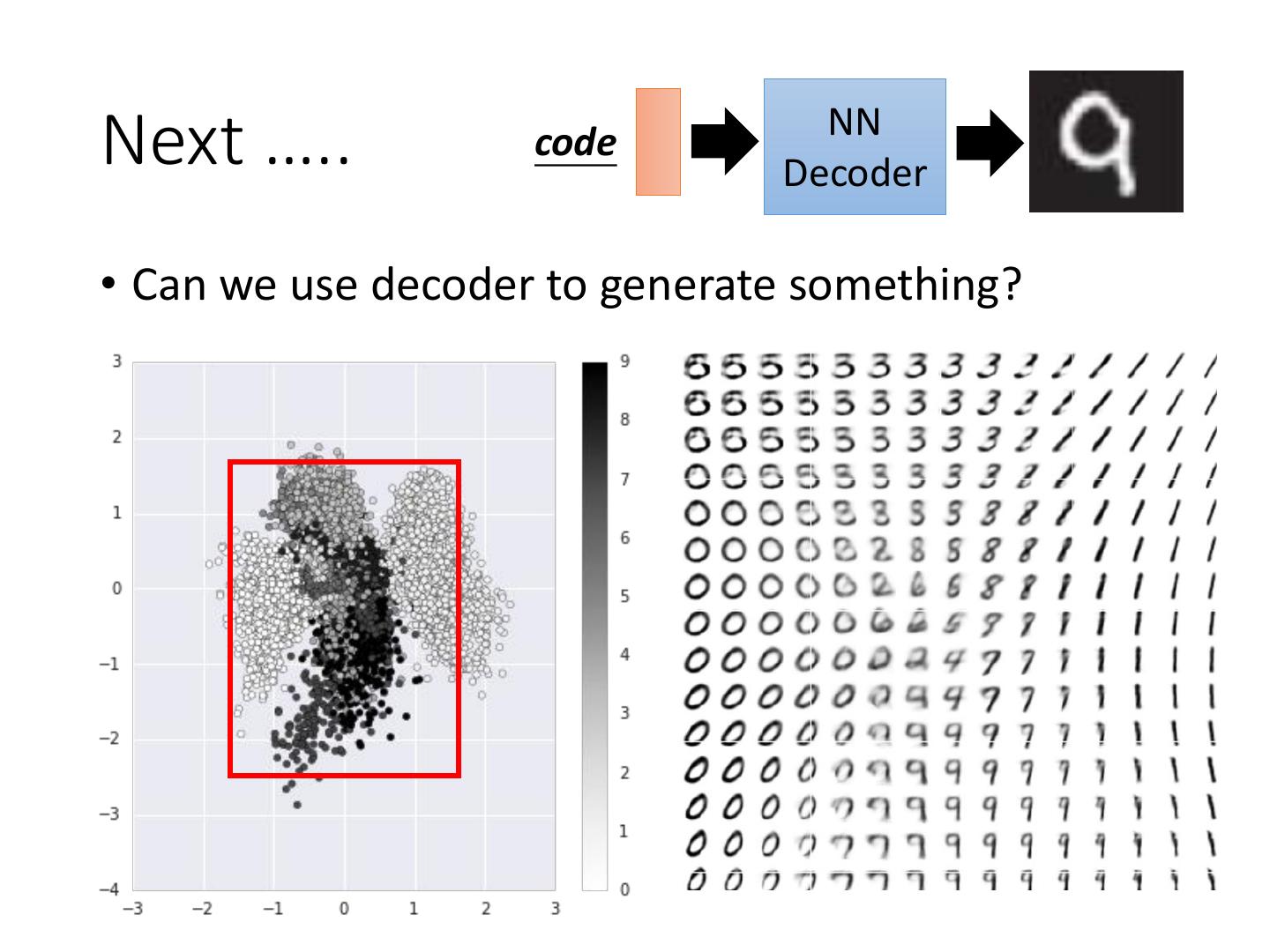

24. Next …

code → NN Decoder

- Can we use the decoder to generate something?

26. Appendix

27. Pokémon

- http://140.112.21.35:2880/~tlkagk/pokemon/pca.html
- http://140.112.21.35:2880/~tlkagk/pokemon/auto.html
- The code is modified from http://jkunst.com/r/pokemon-visualize-em-all/

28. Add: Ladder Network

- http://rinuboney.github.io/2016/01/19/ladder-network.html
- https://mycourses.aalto.fi/pluginfile.php/146701/mod_resource/content/1/08%20semisup%20ladder.pdf
- https://arxiv.org/abs/1507.02672
