- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Why Deep Learning?

展开查看详情

1 .Why Deep Learning?

2 .Deeper is Better? Word Error Word Error Layer X Size Layer X Size Rate (%) Rate (%) 1 X 2k 24.2 2 X 2k 20.4 Not surprised, more 3 X 2k 18.4 parameters, better 4 X 2k 17.8 performance 5 X 2k 17.2 1 X 3772 22.5 7 X 2k 17.1 1 X 4634 22.6 1 X 16k 22.1 Seide, Frank, Gang Li, and Dong Yu. "Conversational Speech Transcription Using Context-Dependent Deep Neural Networks." Interspeech. 2011.

3 .Fat + Short v.s. Thin + Tall The same number of parameters Which one is better? …… x1 x2 …… xN x1 x2 …… xN Shallow Deep

4 .Fat + Short v.s. Thin + Tall Word Error Word Error Layer X Size Layer X Size Rate (%) Rate (%) 1 X 2k 24.2 2 X 2k 20.4 Why? 3 X 2k 18.4 4 X 2k 17.8 5 X 2k 17.2 1 X 3772 22.5 7 X 2k 17.1 1 X 4634 22.6 1 X 16k 22.1 Seide, Frank, Gang Li, and Dong Yu. "Conversational Speech Transcription Using Context-Dependent Deep Neural Networks." Interspeech. 2011.

5 .Modularization • Deep → Modularization Don’t put everything in your main function. http://rinuboney.github.io/2015/10/18/theoretical-motivations-deep-learning.html

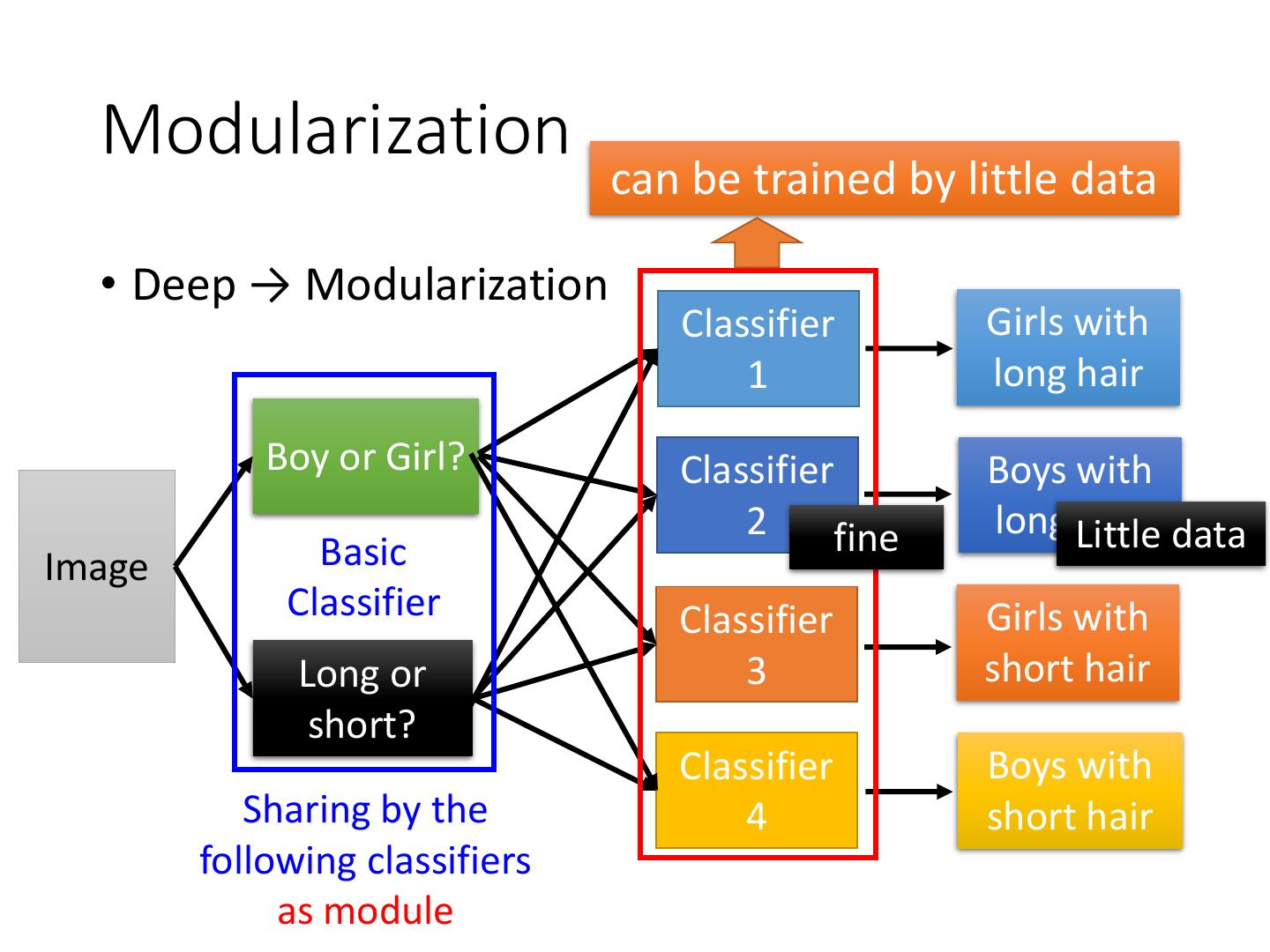

6 .Modularization • Deep → Modularization Classifier Girls with 長髮 長髮 1 long hair 女 女長髮長髮 女女 Classifier Boys with 長髮 2 weak long hair 男 examples Little Image Classifier Girls with 短髮短髮 3 short hair 女 女短髮短髮 女女 Classifier Boys with 短髮短髮 4 short hair 男 男短髮短髮 男男

7 . Each basic classifier can have Modularization sufficient training examples. • Deep → Modularization 長髮 長髮 長髮長髮 男 短髮 女 短髮 女 長髮 Boy or Girl? 女 女短髮 女 v.s. 短髮短髮 短髮 女 男 男短髮 女女 短髮 Basic 男男 Image Classifier 長髮長髮 短髮短髮 Long or 女 女長髮長髮 女 女短髮短髮 short? 女女 v.s. 女女 長髮 短髮短髮 Classifiers for the 男 男 男短髮短髮 attributes 男男

8 . Modularization can be trained by little data • Deep → Modularization Classifier Girls with 1 long hair Boy or Girl? Classifier Boys with 2 fine long Little hair data Image Basic Classifier Classifier Girls with Long or 3 short hair short? Classifier Boys with Sharing by the 4 short hair following classifiers as module

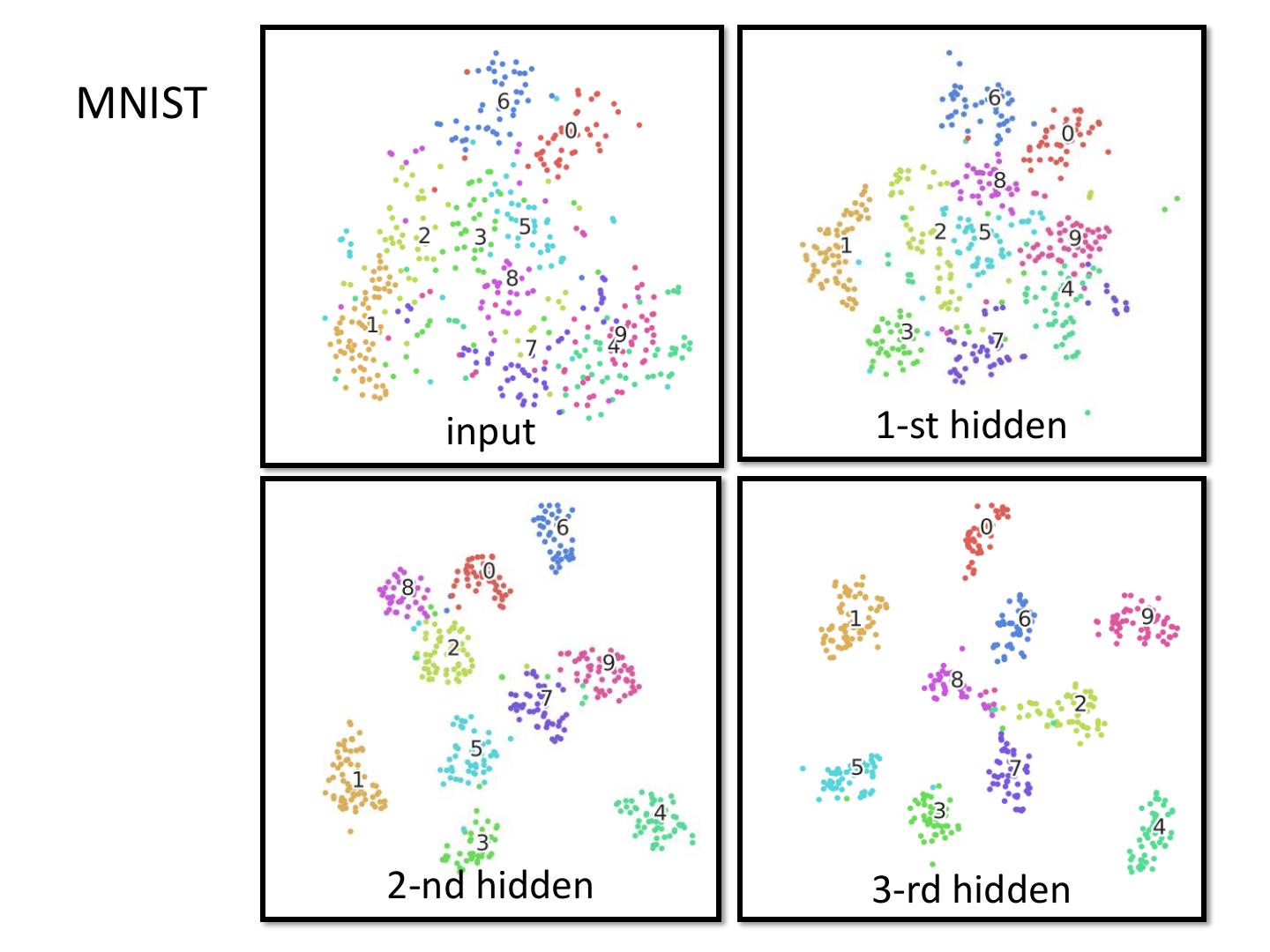

9 .Modularization • Deep → Modularization → Less training data? x1 …… x2 The modularization is …… automatically learned from data. …… …… …… …… xN …… The most basic Use 1st layer as module Use 2nd layer as classifiers to build classifiers module ……

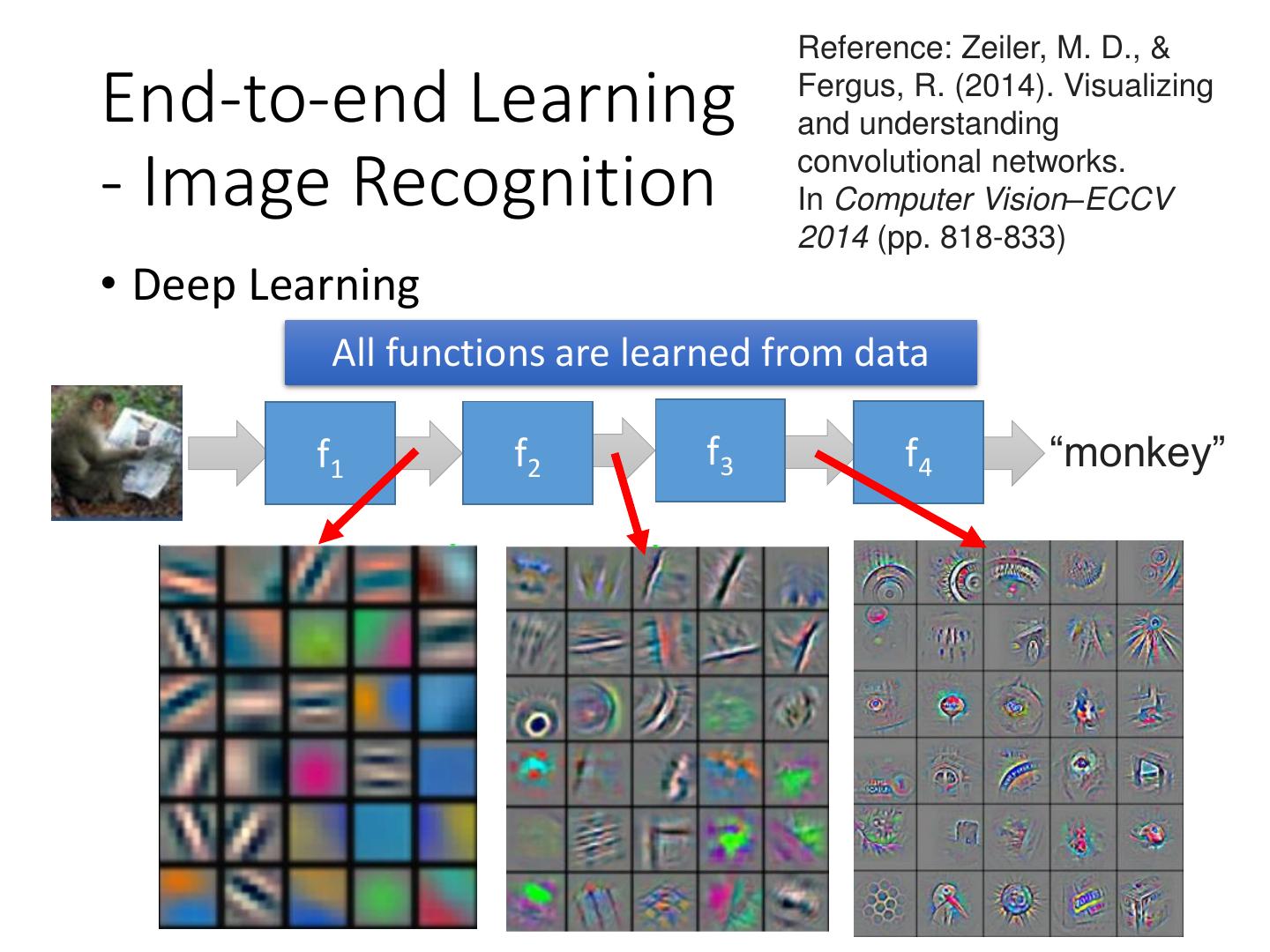

10 .Modularization - Image • Deep → Modularization x1 …… x2 …… …… …… …… …… xN …… The most basic Use 1st layer as module Use 2nd layer as classifiers to build classifiers module …… Reference: Zeiler, M. D., & Fergus, R. (2014). Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014 (pp. 818-833)

11 . Modularization - Speech • The hierarchical structure of human languages what do you think Phoneme: hh w aa t d uw y uw th ih ng k Tri-phone: …… t-d+uw d-uw+y uw-y+uw y-uw+th …… t-d+uw1 t-d+uw2 t-d+uw3 d-uw+y1 d-uw+y2 d-uw+y3 State:

12 .Modularization - Speech • The first stage of speech recognition • Classification: input → acoustic feature, output → state Determine the state each acoustic feature …… belongs to Acoustic feature States: a a a b b c c

13 .Modularization - Speech • Each state has a stationary distribution for acoustic features Gaussian Mixture Model (GMM) t-d+uw1 P(x|”t-d+uw1”) d-uw+y3 P(x|”d-uw+y3”)

14 .Modularization - Speech • Each state has a stationary distribution for acoustic features Tied-state pointer pointer P(x|”d-uw+y3”) P(x|”y-uw+th3”) Same Address

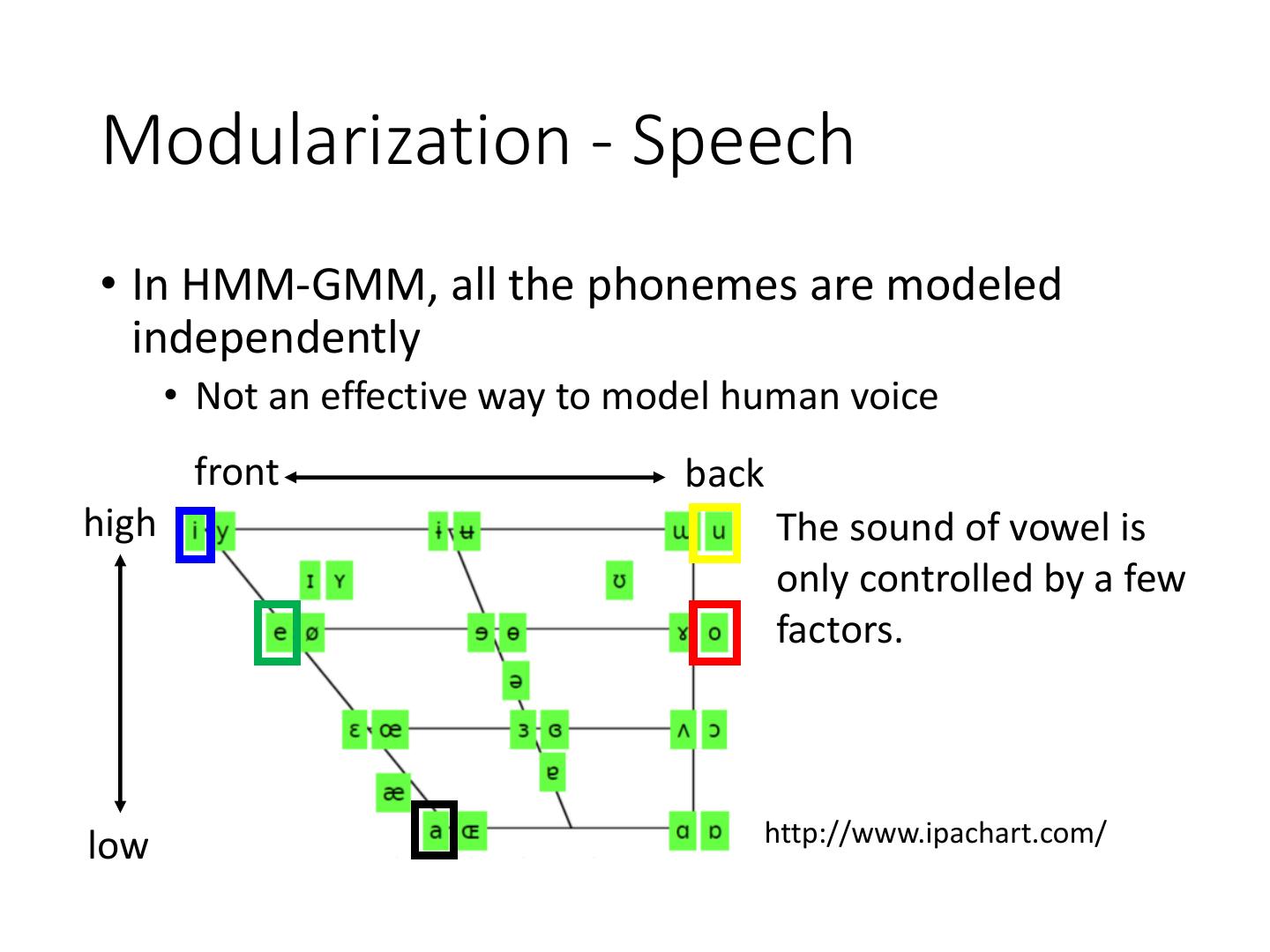

15 .Modularization - Speech • In HMM-GMM, all the phonemes are modeled independently • Not an effective way to model human voice front back high The sound of vowel is only controlled by a few factors. low http://www.ipachart.com/

16 . Modularization - Speech ➢ DNN input: One acoustic feature P(a|xi) P(b|xi) P(c|xi) …… ➢ DNN output: …… Probability of each state Size of output layer = No. of states All the states use DNN the same DNN …… …… xi

17 . front back Modularization high Vu, Ngoc Thang, Jochen Weiner, and Tanja Schultz. "Investigating the Learning Effect of Multilingual Bottle-Neck Features for ASR." Interspeech. 2014. Output of hidden layer reduce to two dimensions low /i/ ➢ The lower layers detect the /u/ manner of articulation ➢ All the phonemes share the results from the same /e/ /o/ set of detectors. /a/ ➢ Use parameters effectively

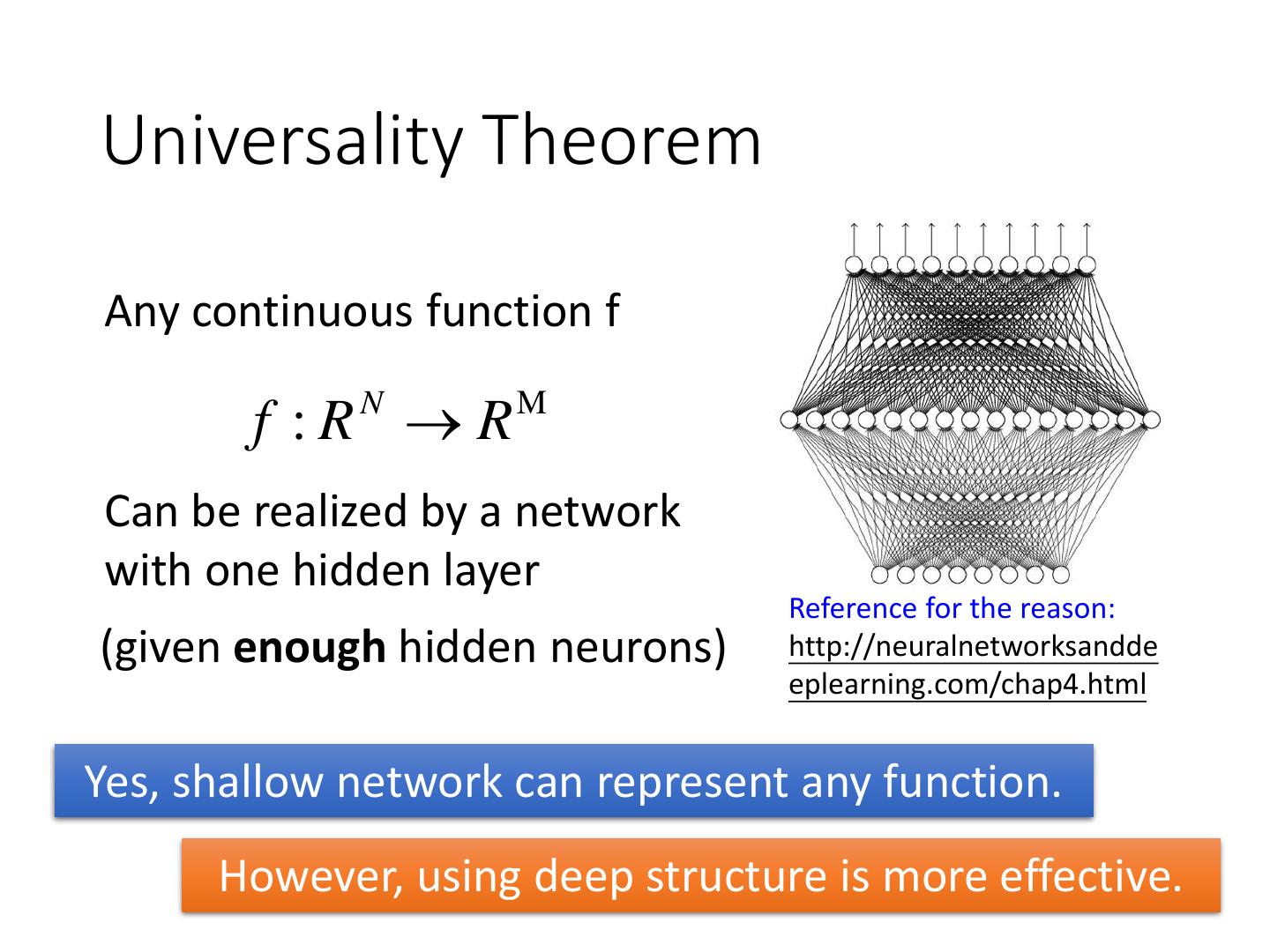

18 .Universality Theorem Any continuous function f f :R R N M Can be realized by a network with one hidden layer Reference for the reason: (given enough hidden neurons) http://neuralnetworksandde eplearning.com/chap4.html Yes, shallow network can represent any function. However, using deep structure is more effective.

19 .Analogy Logic circuits Neural network • Logic circuits consists of • Neural network consists of gates neurons • A two layers of logic gates • A hidden layer network can can represent any Boolean represent any continuous function. function. • Using multiple layers of • Using multiple layers of logic gates to build some neurons to represent some functions are much simpler functions are much simpler less gates needed less less parameters data? This page is for EE background.

20 .Analogy • E.g. parity check For input sequence 1 0 1 0 Circuit 1 (even) with d bits, Two-layer circuit 0 0 0 1 Circuit 0 (odd) need O(2d) gates. XNOR 1 0 0 1 0 1 0 With multiple layers, we need only O(d) gates.

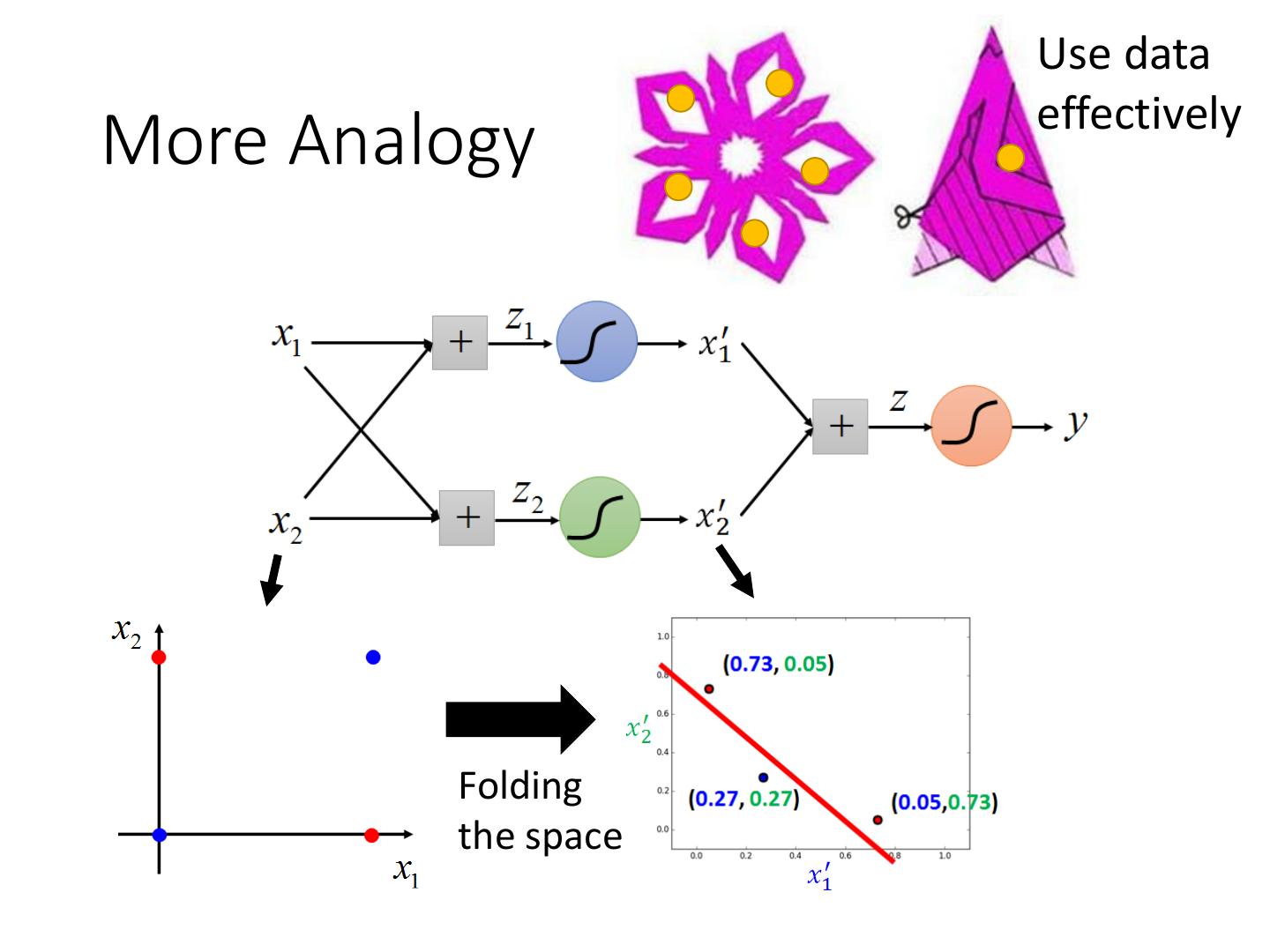

21 .More Analogy

22 . Use data effectively More Analogy Folding the space

23 .More Analogy - Experiment Different numbers of training examples 10,0000 2,0000 1 hidden layer 3 hidden layers

24 .End-to-end Learning • Production line Hypothesis Model Functions Simple Simple …… Simple “Hello” Function 1 Function 2 Function N A very complex function End-to-end training: What each function should do is learned automatically

25 . End-to-end Learning - Speech Recognition • Shallow Approach DFT Waveform spectrogram … “Hello” GMM DCT log Filter bank MFCC Each box is a simple function in the production line: :hand-crafted :learned from data

26 .End-to-end Learning - Speech Recognition • Deep Learning f1 f2 All functions are learned from data f3 “Hello” f5 f4 Less engineering labor, but machine learns more

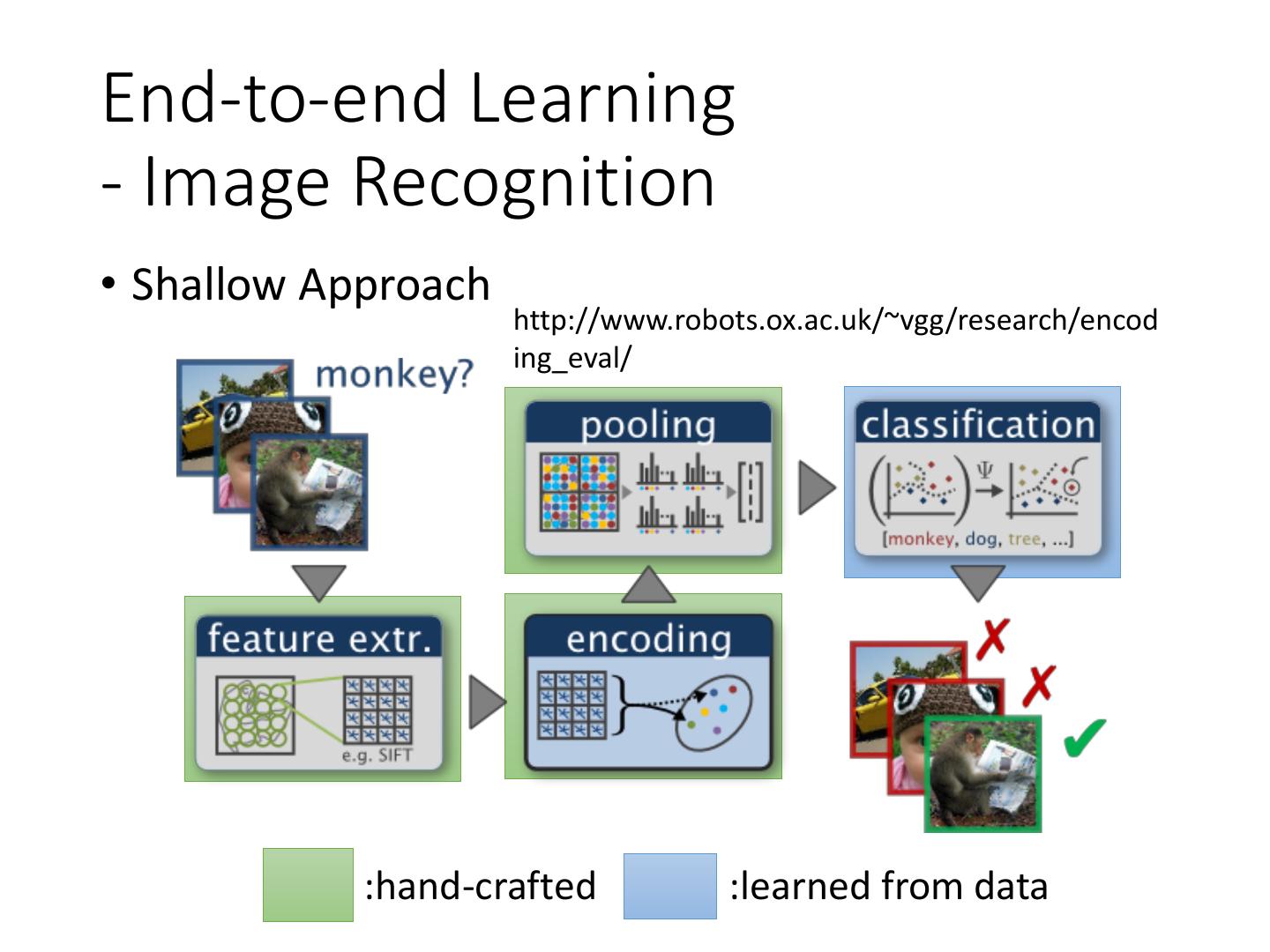

27 .End-to-end Learning - Image Recognition • Shallow Approach http://www.robots.ox.ac.uk/~vgg/research/encod ing_eval/ :hand-crafted :learned from data

28 . Reference: Zeiler, M. D., & End-to-end Learning Fergus, R. (2014). Visualizing and understanding - Image Recognition convolutional networks. In Computer Vision–ECCV 2014 (pp. 818-833) • Deep Learning All functions are learned from data f1 f2 f3 f4 “monkey”

29 .Complex Task … • Very similar input, different output System dog System bear • Very different input, similar output System train System train