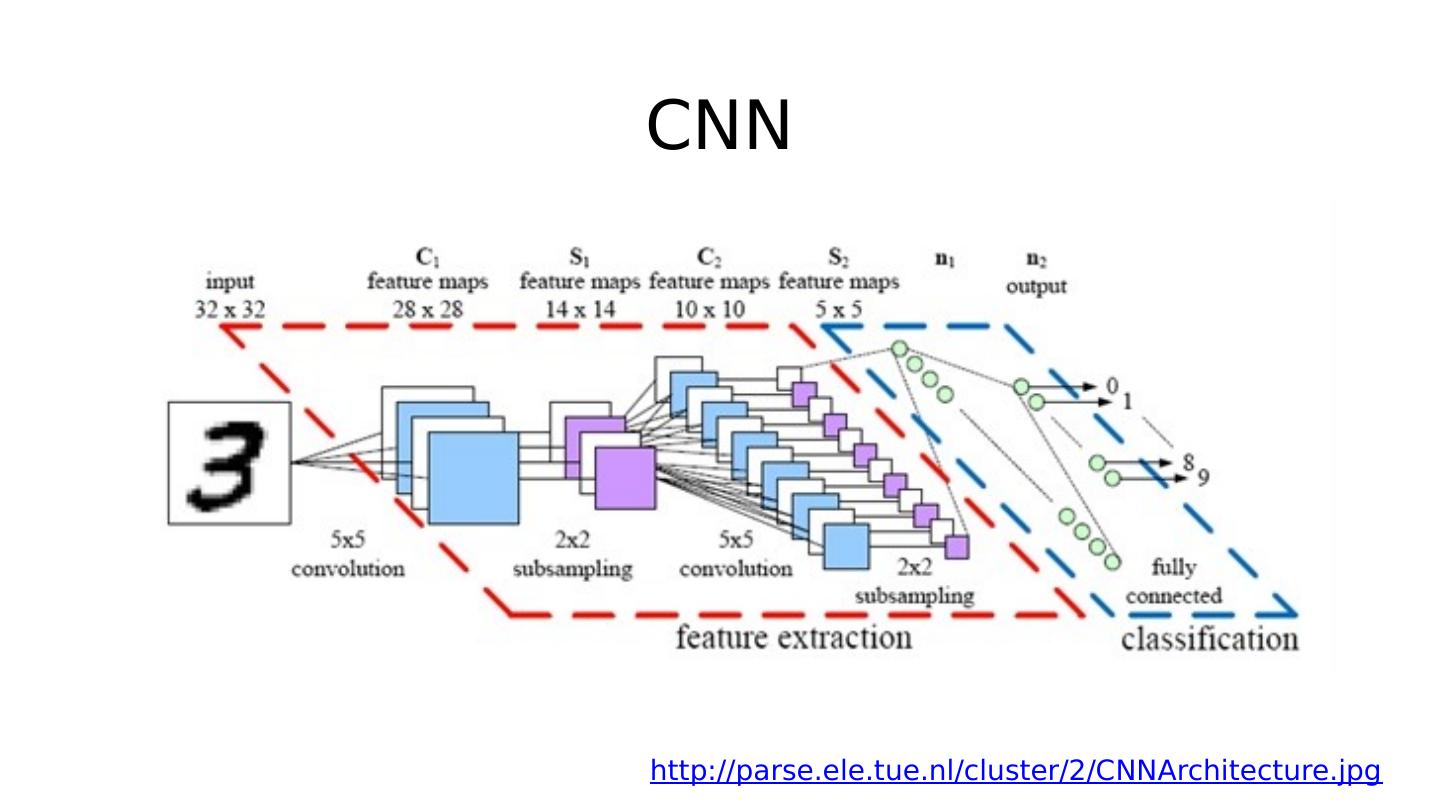

10_ Basic CNN

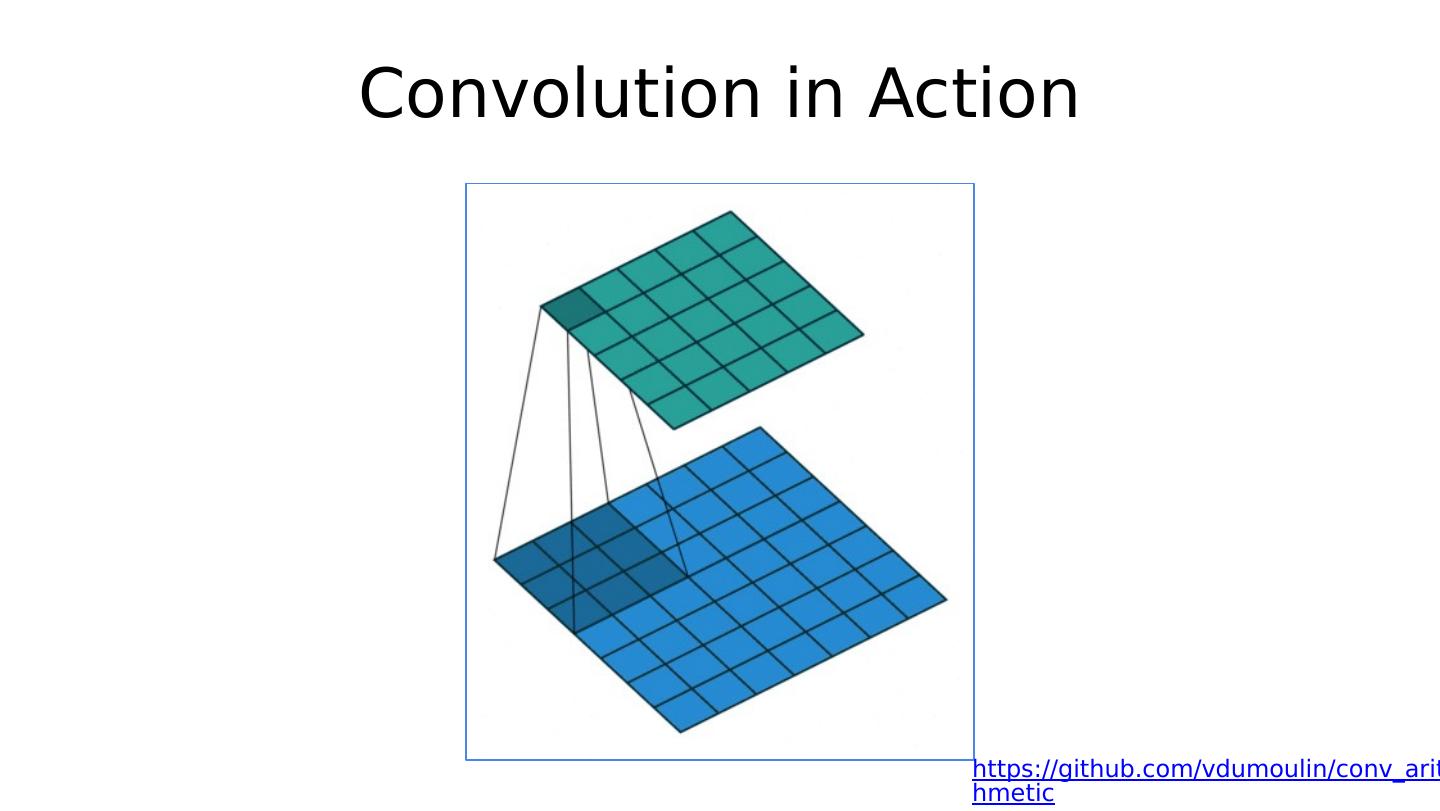

14 .Convolution in Action https://github.com/vdumoulin/conv_arithmetic

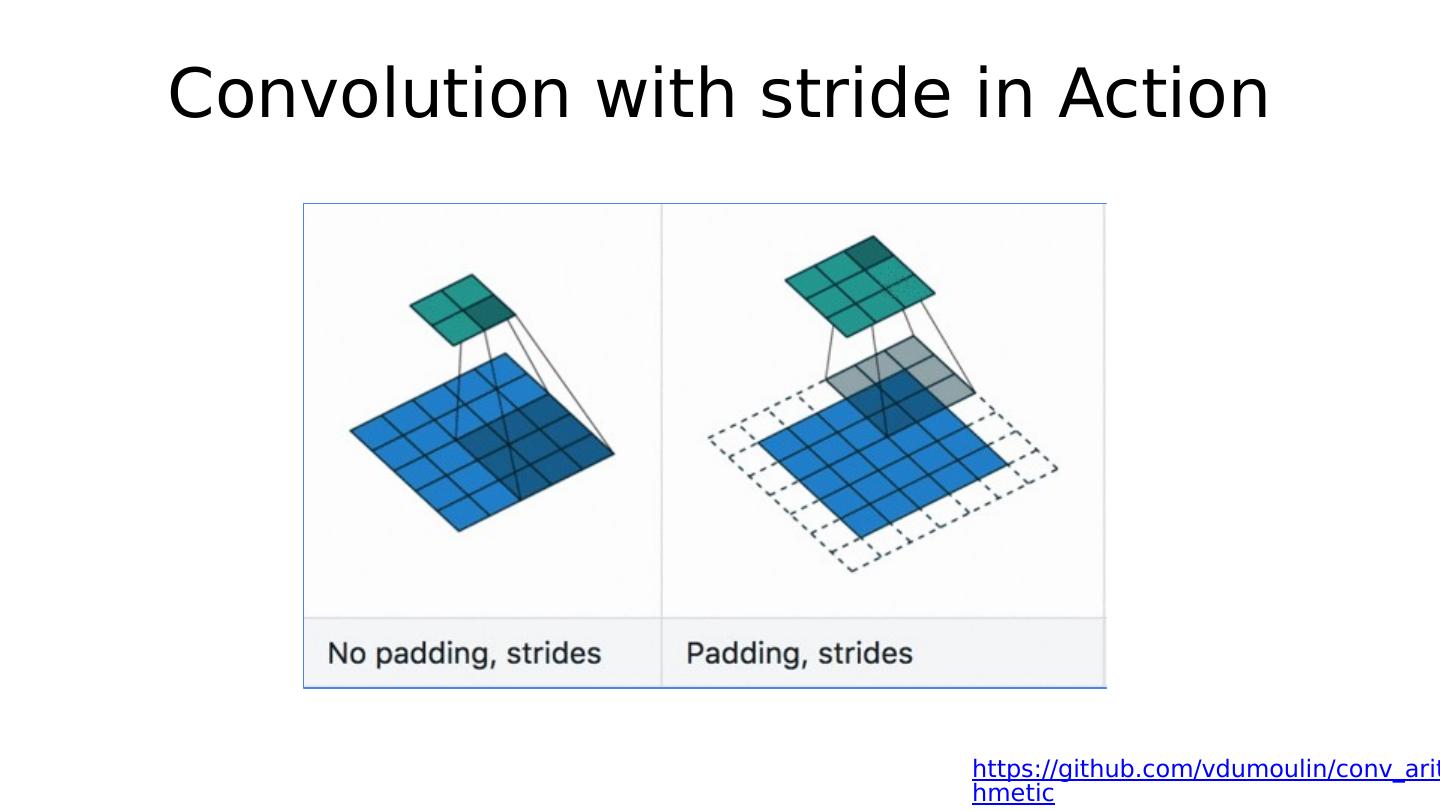

15 .Convolution with stride in Action https://github.com/vdumoulin/conv_arithmetic

16 .Convolution with stride in Action https://github.com/vdumoulin/conv_arithmetic
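The animations referenced above show how kernel size, stride, and padding determine the size of the output feature map. As a rough sketch of that arithmetic along one spatial dimension, the helper below (the name `conv_output_size` is hypothetical, not from the lecture code) applies the usual floor formula `(n + 2*padding - kernel) // stride + 1`:

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Output length along one spatial dimension of a convolution.

    n: input length, kernel: kernel length,
    stride: step between windows, padding: zeros added on each side.
    """
    if n + 2 * padding < kernel:
        raise ValueError("kernel is larger than the padded input")
    return (n + 2 * padding - kernel) // stride + 1


# 4x4 input, 3x3 kernel, stride 1, no padding
print(conv_output_size(4, 3))                       # 2
# 5x5 input, 3x3 kernel, stride 2, no padding
print(conv_output_size(5, 3, stride=2))             # 2
# 5x5 input, 3x3 kernel, stride 2, padding 1
print(conv_output_size(5, 3, stride=2, padding=1))  # 3
```

The same formula is applied independently to height and width, so a square input with a square kernel gives a square output.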

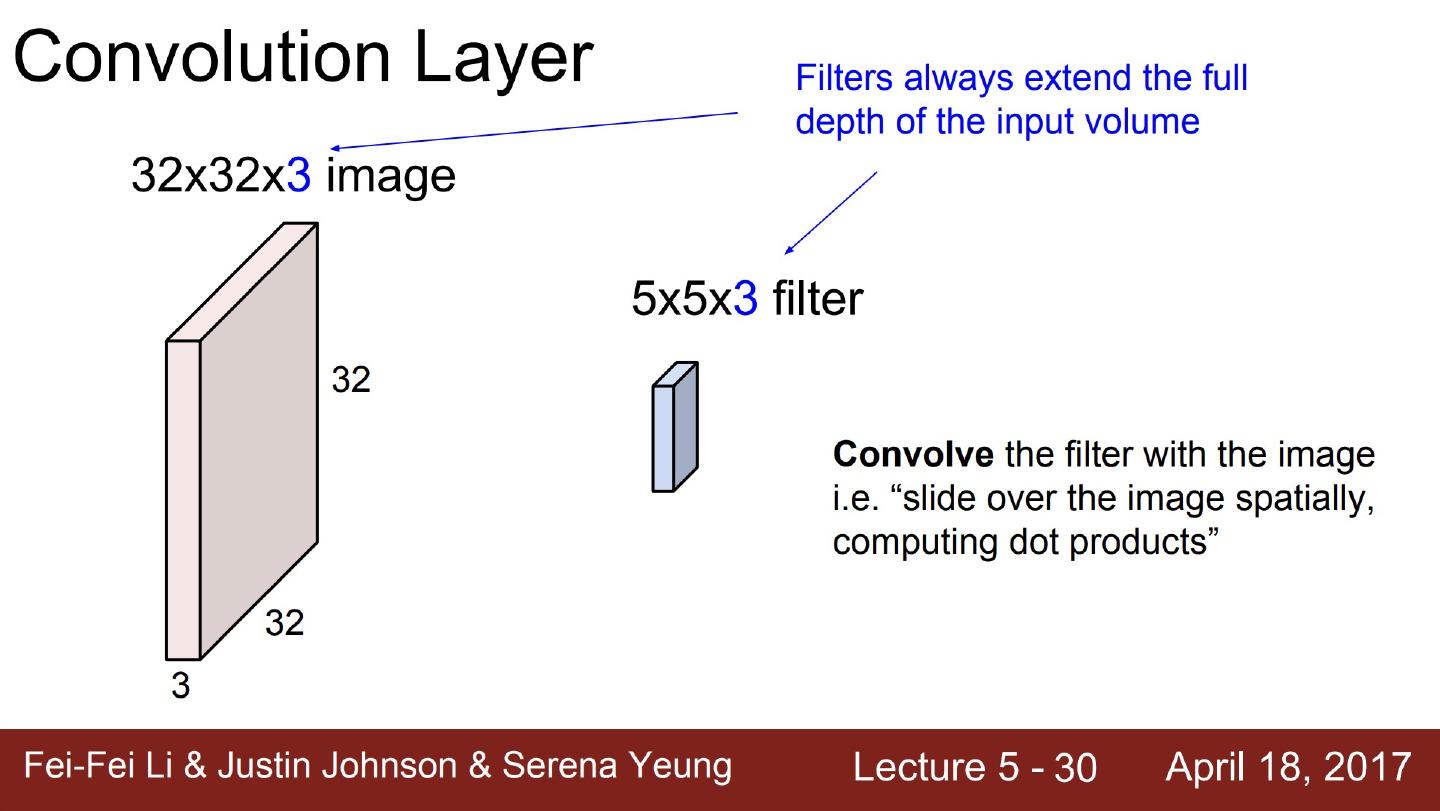

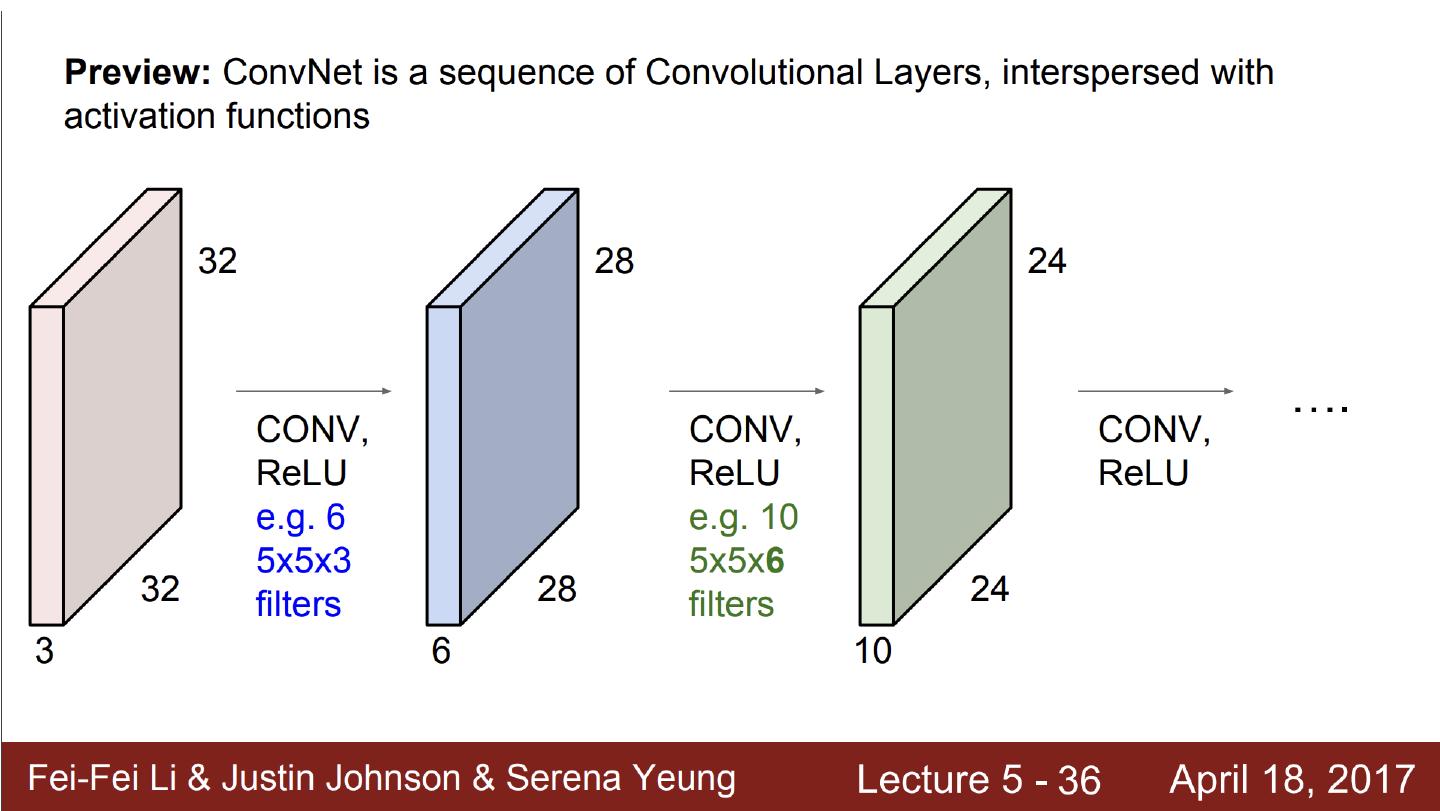

17 .ML/DL for Everyone with PyTorch
Lecture 10: Basic CNN
Sung Kim <hunkim+ml@gmail.com>, HKUST
Code: https://github.com/hunkim/PyTorchZeroToAll
Slides: http://bit.ly/PyTorchZeroAll
Videos: http://bit.ly/PyTorchVideo

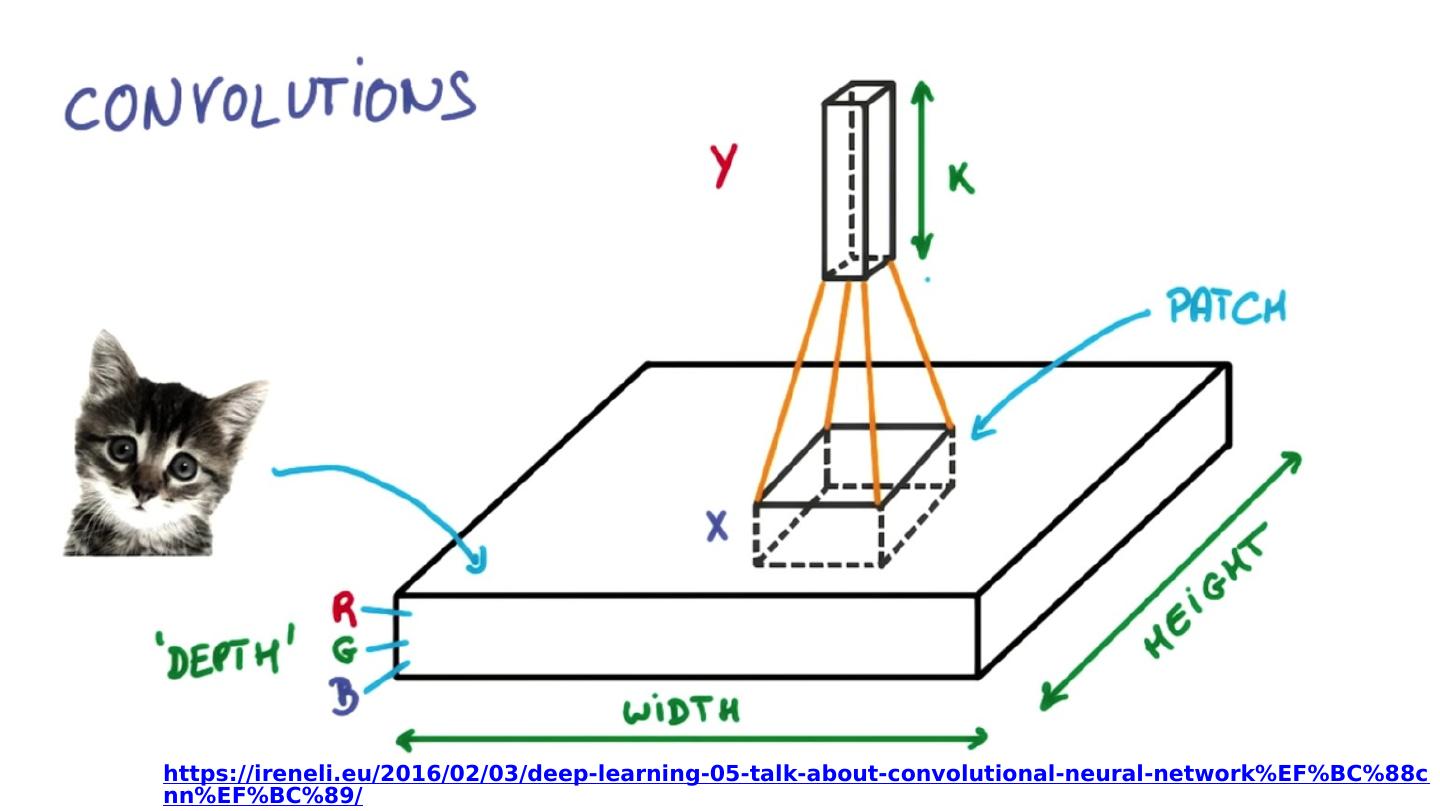

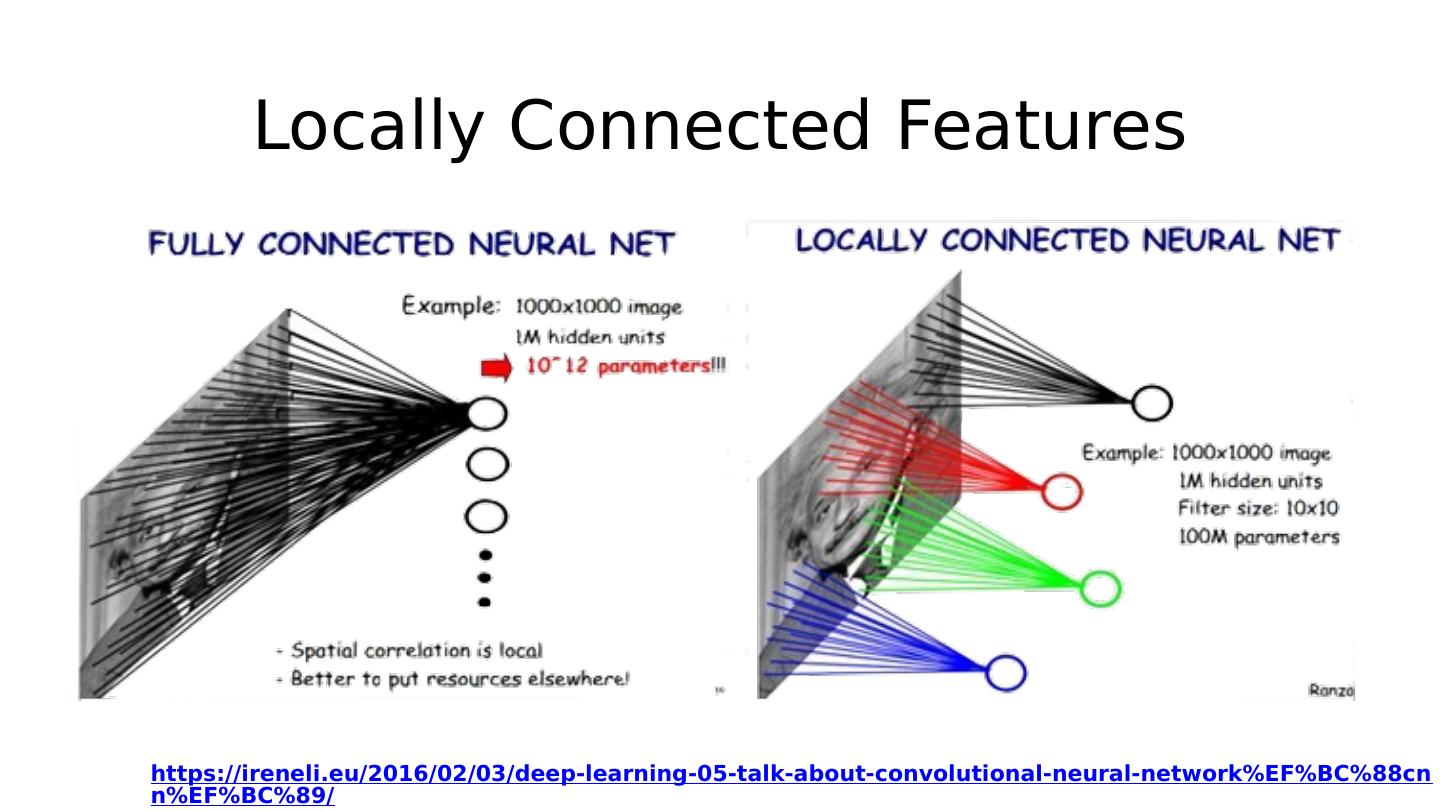

18 .Locally Connected Features https://ireneli.eu/2016/02/03/deep-learning-05-talk-about-convolutional-neural-network%EF%BC%88cnn%EF%BC%89/

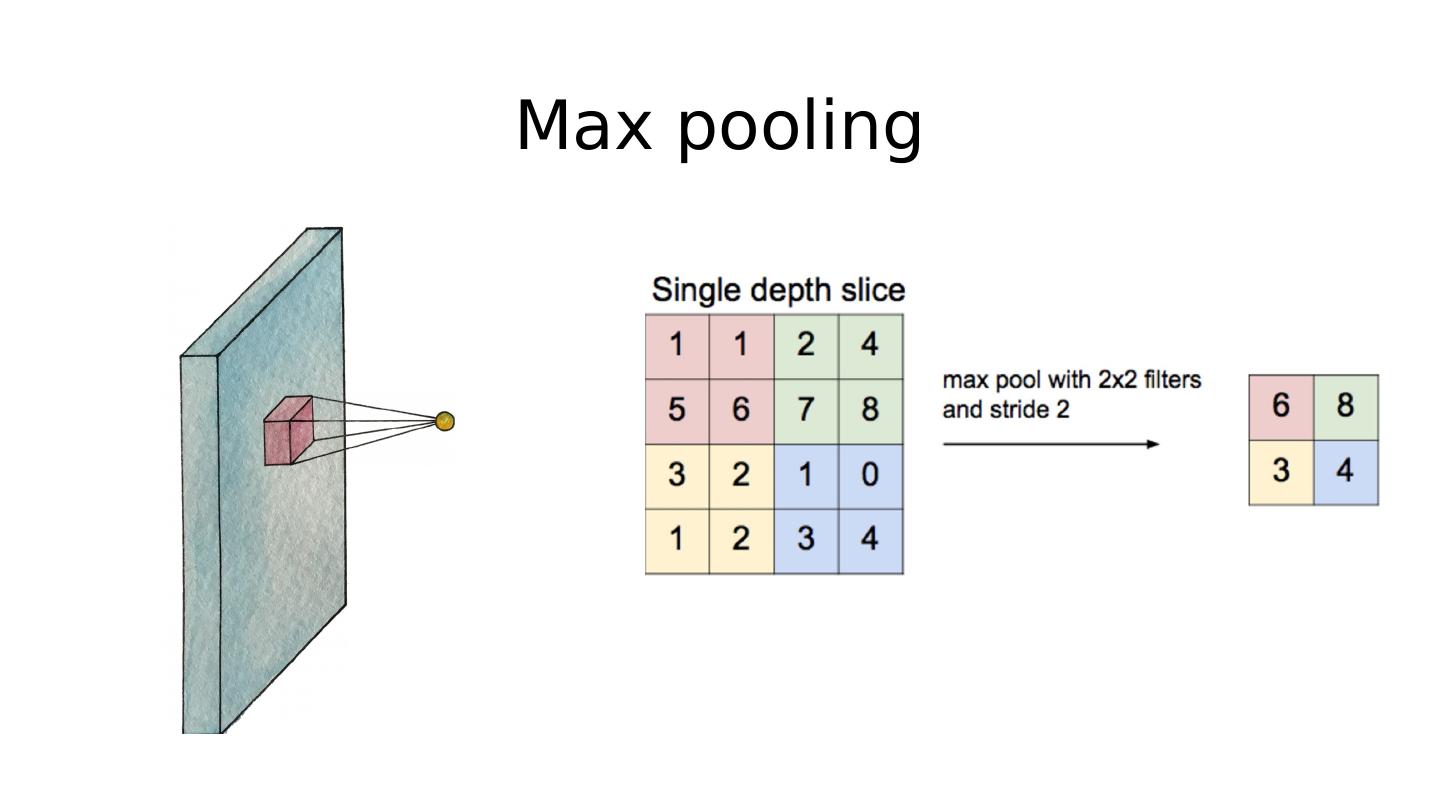

19 .Max Pooling in Action https://github.com/vdumoulin/conv_arithmetic
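Max pooling keeps only the largest value in each window of the feature map. The sketch below is a plain-Python illustration, not the lecture's code: the function name `max_pool2d` and the sample `feature_map` values are made up for the example, and the stride is assumed to equal the window size (the default behaviour of `nn.MaxPool2d(2)`).

```python
def max_pool2d(image, size=2, stride=None):
    """Max pooling over a 2D list of numbers, with no padding."""
    stride = stride or size  # assumption: stride defaults to the window size
    rows = (len(image) - size) // stride + 1
    cols = (len(image[0]) - size) // stride + 1
    return [
        [
            max(
                image[r * stride + i][c * stride + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Illustrative 4x4 feature map (values invented for this example)
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 4], [8, 9]]
```

A 2x2 window with stride 2 halves each spatial dimension, which is why pooling is commonly used to shrink feature maps between convolution layers.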

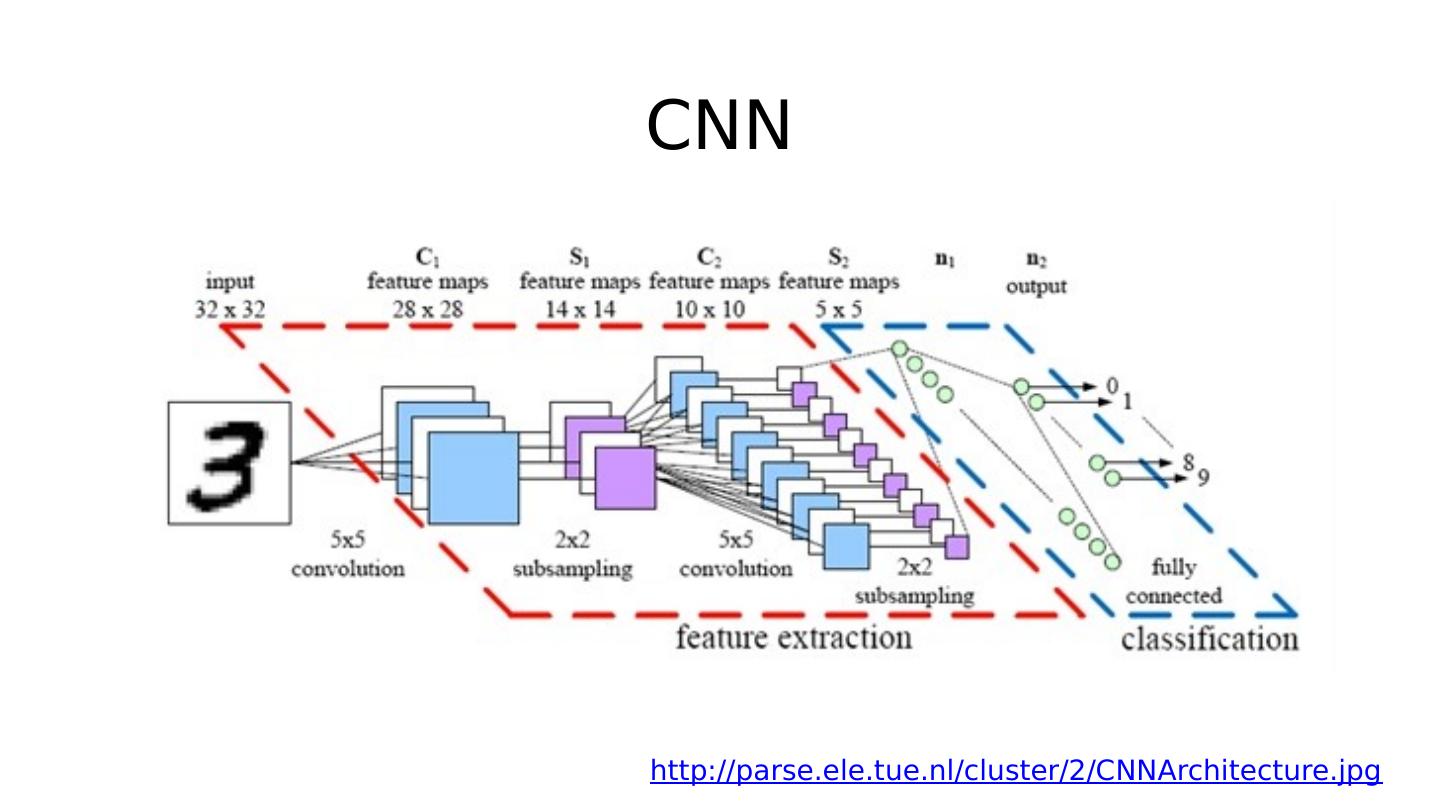

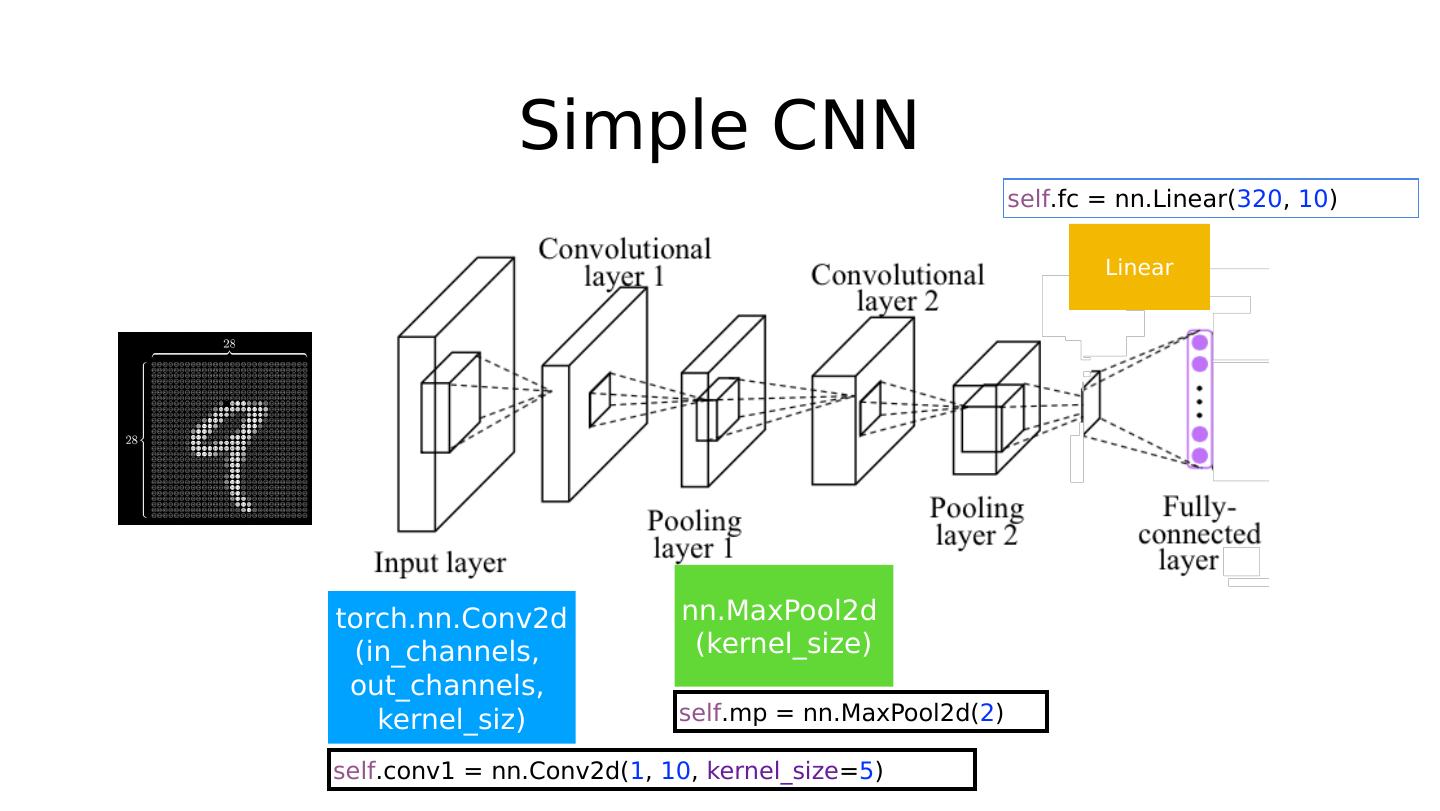

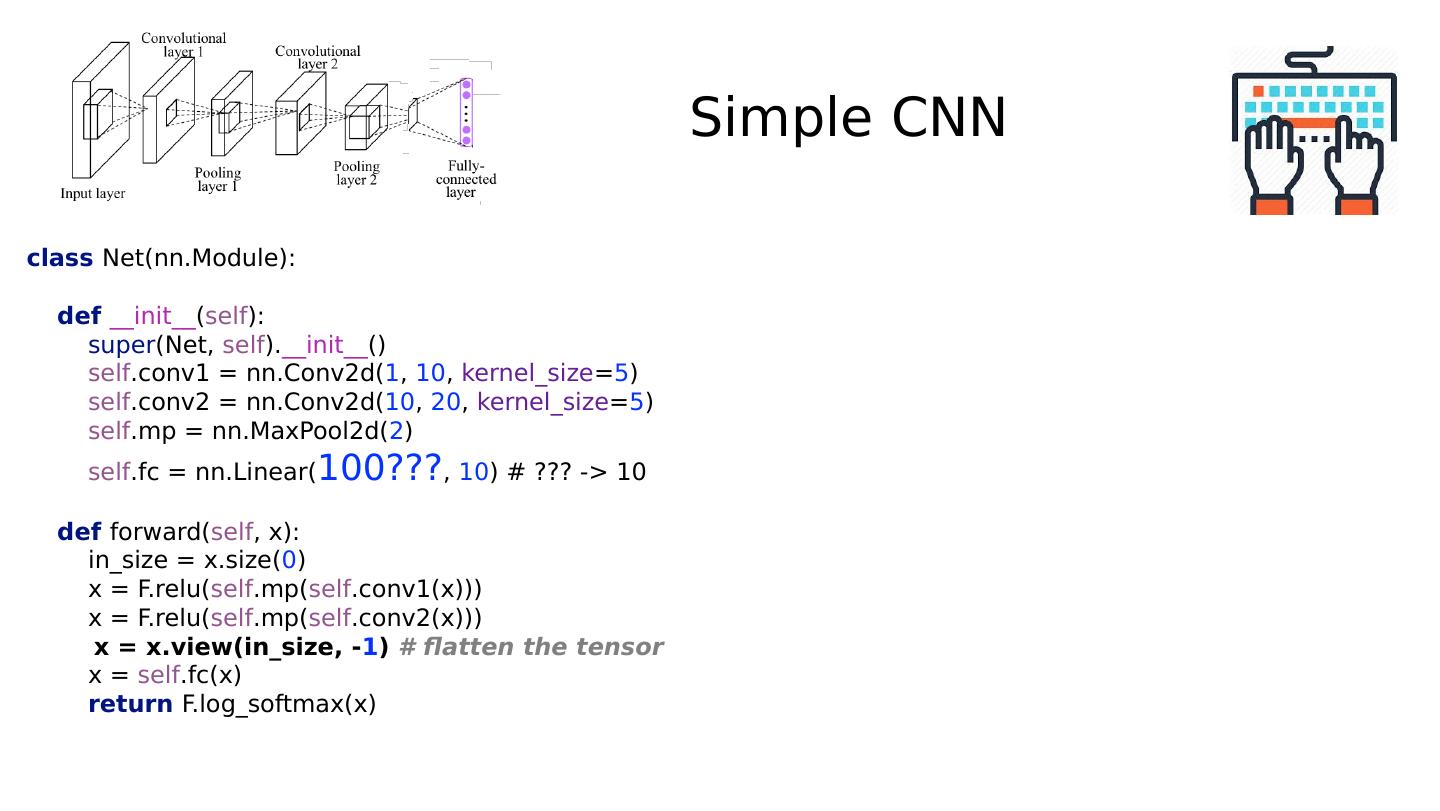

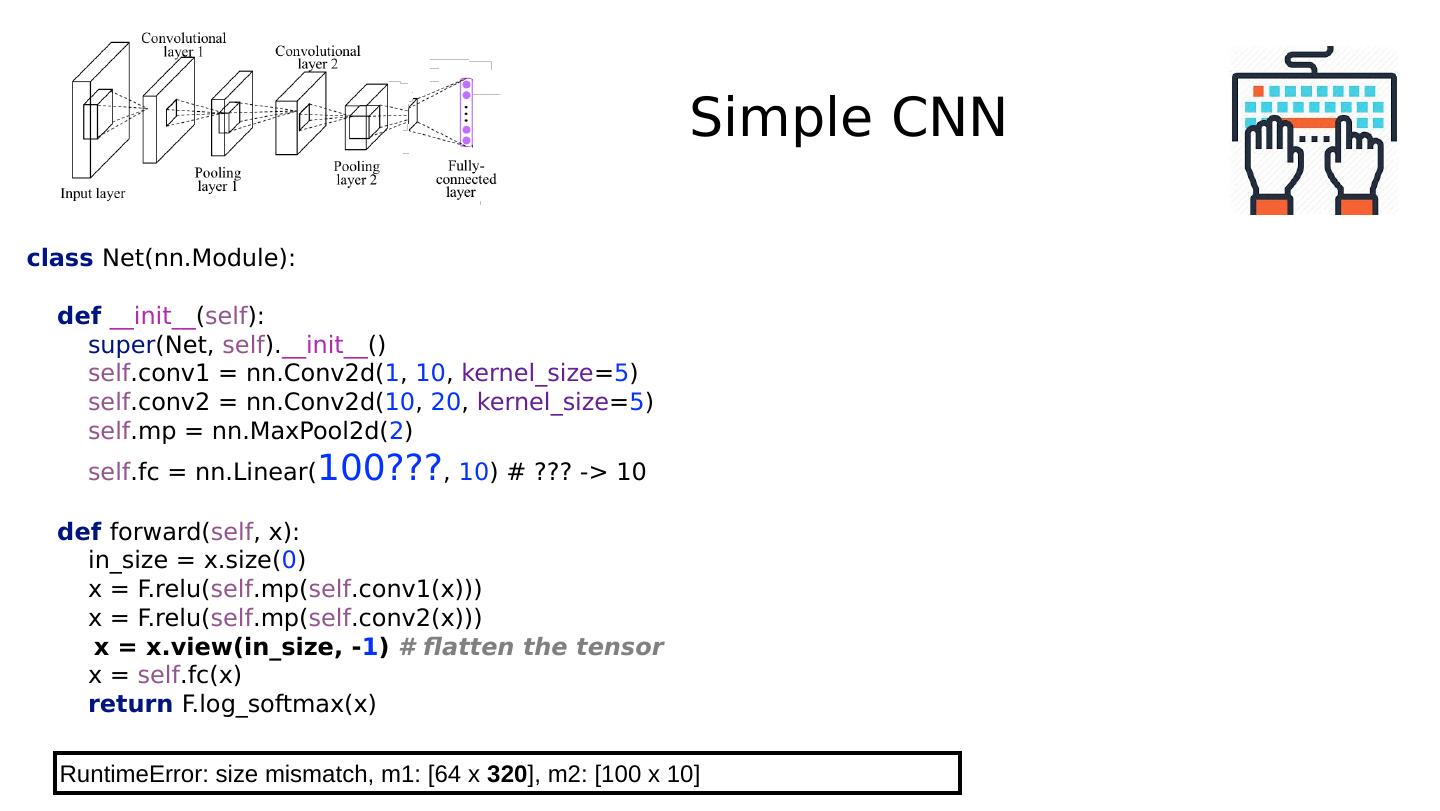

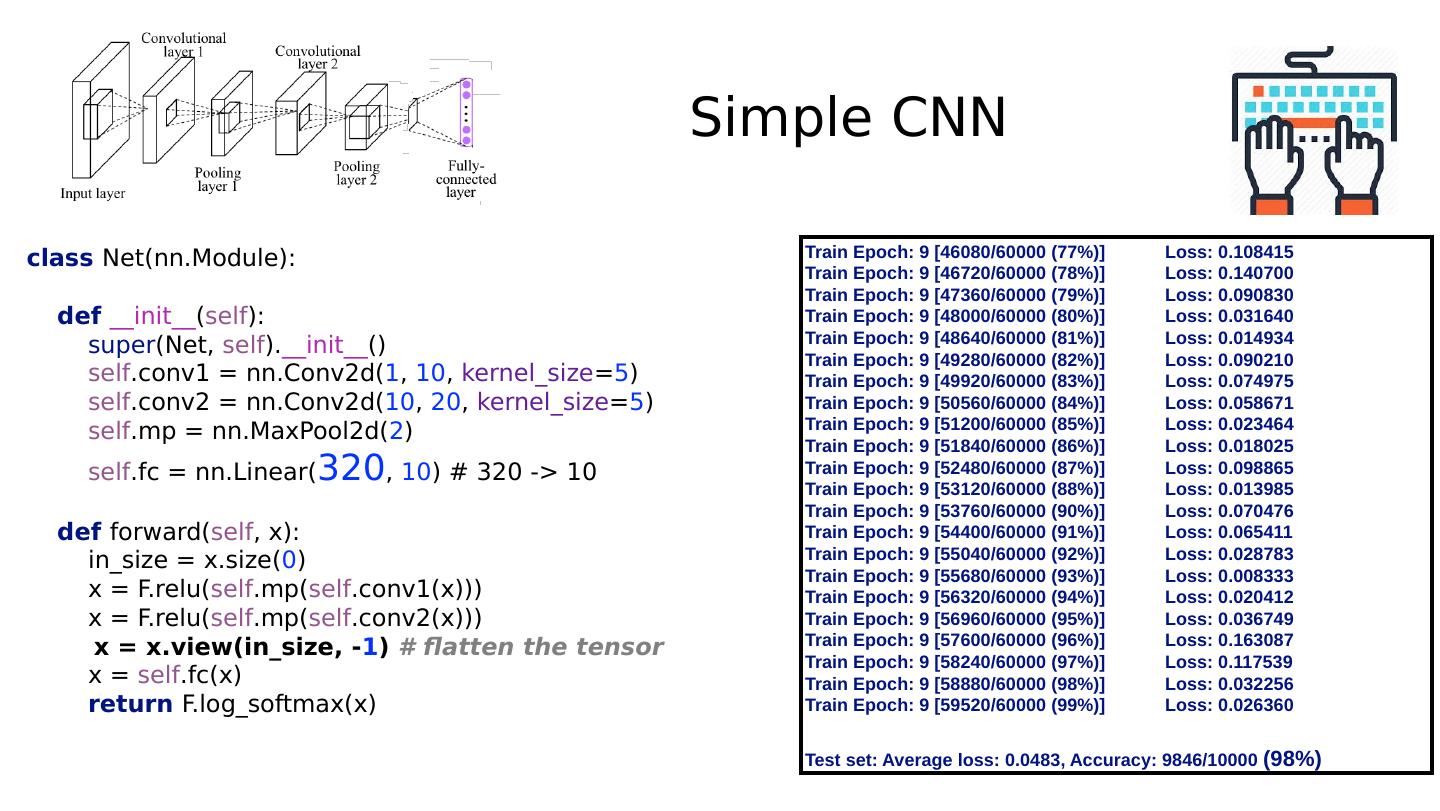

20 .Simple CNN

```python
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.mp = nn.MaxPool2d(2)
        # The slide deliberately leaves the input size open as a question;
        # this line is a placeholder, not valid Python.
        self.fc = nn.Linear(100???, 10)  # ??? -> 10

    def forward(self, x):
        in_size = x.size(0)  # batch size
        x = F.relu(self.mp(self.conv1(x)))
        x = F.relu(self.mp(self.conv2(x)))
        x = x.view(in_size, -1)  # flatten the tensor
        x = self.fc(x)
        return F.log_softmax(x, dim=1)  # normalize over the class dimension
```

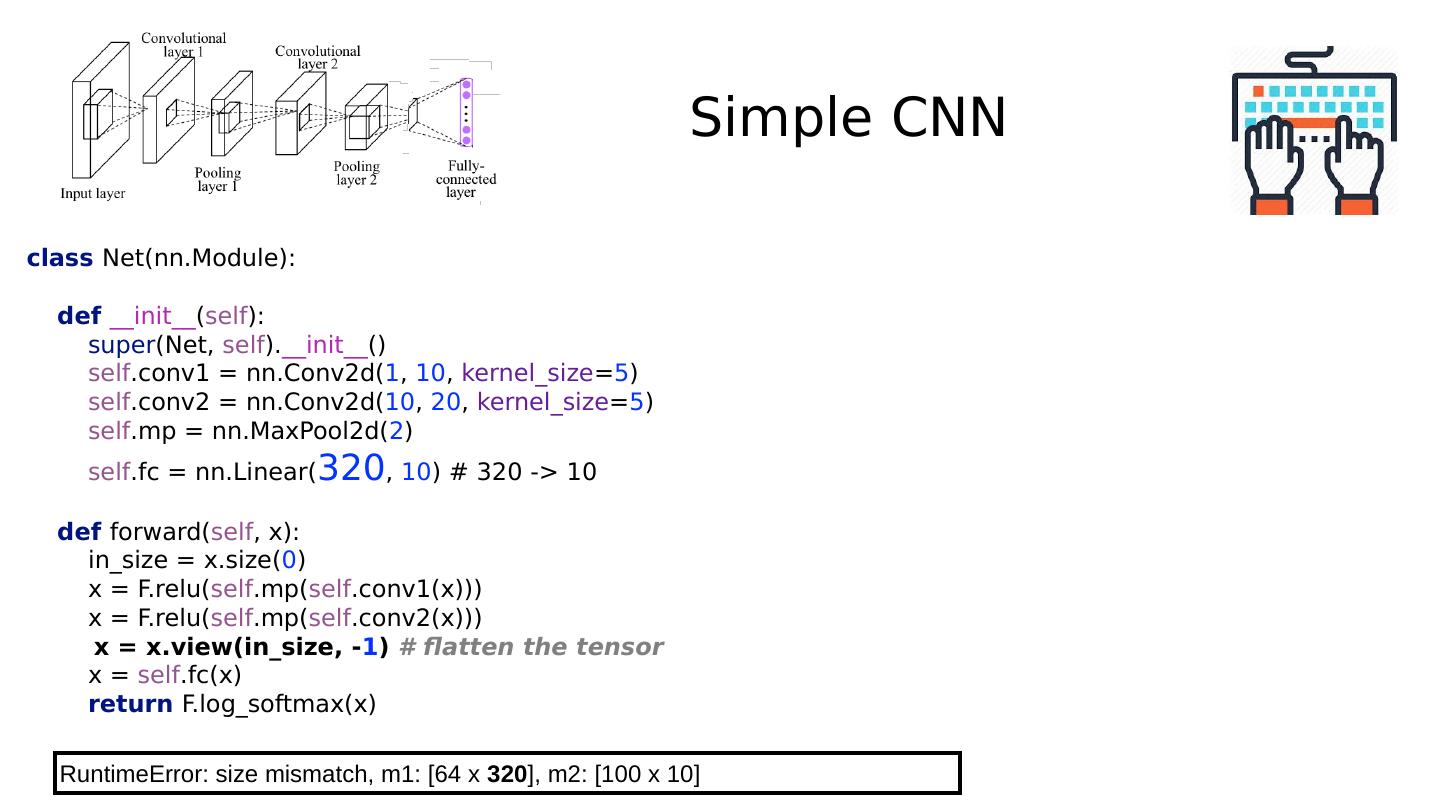

21 .Simple CNN

```python
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(320, 10)  # 320 -> 10

    def forward(self, x):
        in_size = x.size(0)  # batch size
        x = F.relu(self.mp(self.conv1(x)))
        x = F.relu(self.mp(self.conv2(x)))
        x = x.view(in_size, -1)  # flatten the tensor
        x = self.fc(x)
        return F.log_softmax(x, dim=1)  # normalize over the class dimension
```

Training log (end of epoch 9) and test result:

```
Train Epoch: 9 [46080/60000 (77%)] Loss: 0.108415
Train Epoch: 9 [46720/60000 (78%)] Loss: 0.140700
Train Epoch: 9 [47360/60000 (79%)] Loss: 0.090830
Train Epoch: 9 [48000/60000 (80%)] Loss: 0.031640
Train Epoch: 9 [48640/60000 (81%)] Loss: 0.014934
Train Epoch: 9 [49280/60000 (82%)] Loss: 0.090210
Train Epoch: 9 [49920/60000 (83%)] Loss: 0.074975
Train Epoch: 9 [50560/60000 (84%)] Loss: 0.058671
Train Epoch: 9 [51200/60000 (85%)] Loss: 0.023464
Train Epoch: 9 [51840/60000 (86%)] Loss: 0.018025
Train Epoch: 9 [52480/60000 (87%)] Loss: 0.098865
Train Epoch: 9 [53120/60000 (88%)] Loss: 0.013985
Train Epoch: 9 [53760/60000 (90%)] Loss: 0.070476
Train Epoch: 9 [54400/60000 (91%)] Loss: 0.065411
Train Epoch: 9 [55040/60000 (92%)] Loss: 0.028783
Train Epoch: 9 [55680/60000 (93%)] Loss: 0.008333
Train Epoch: 9 [56320/60000 (94%)] Loss: 0.020412
Train Epoch: 9 [56960/60000 (95%)] Loss: 0.036749
Train Epoch: 9 [57600/60000 (96%)] Loss: 0.163087
Train Epoch: 9 [58240/60000 (97%)] Loss: 0.117539
Train Epoch: 9 [58880/60000 (98%)] Loss: 0.032256
Train Epoch: 9 [59520/60000 (99%)] Loss: 0.026360

Test set: Average loss: 0.0483, Accuracy: 9846/10000 (98%)
```
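The value 320 passed to `nn.Linear` can be checked by tracing the feature-map size through the network. The sketch below assumes 28x28 single-channel inputs (MNIST-sized images, which the 60000/10000 split in the log suggests but the slide does not state); the helper name `out_size` is hypothetical.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output length along one dimension for a convolution or pooling window."""
    return (n + 2 * padding - kernel) // stride + 1


side = 28                            # assumption: 28x28 input images
side = out_size(side, 5)             # conv1, kernel_size=5      -> 24
side = out_size(side, 2, stride=2)   # MaxPool2d(2)              -> 12
side = out_size(side, 5)             # conv2, kernel_size=5      -> 8
side = out_size(side, 2, stride=2)   # MaxPool2d(2)              -> 4

channels = 20                        # output channels of conv2
flat_features = channels * side * side
print(flat_features)                 # 320
```

With a different input resolution the flattened size changes, so the first argument of `nn.Linear` would need to be recomputed in the same way.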

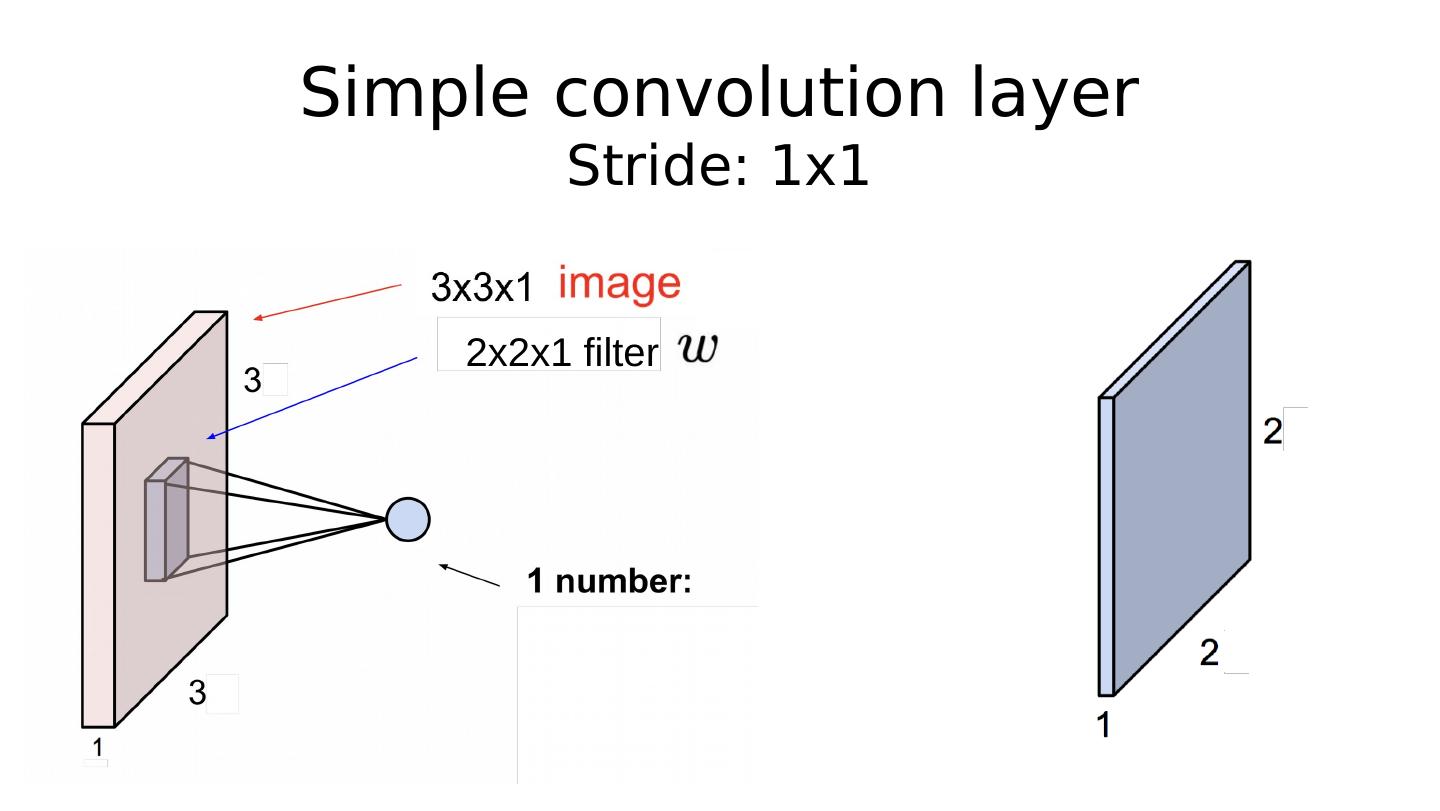

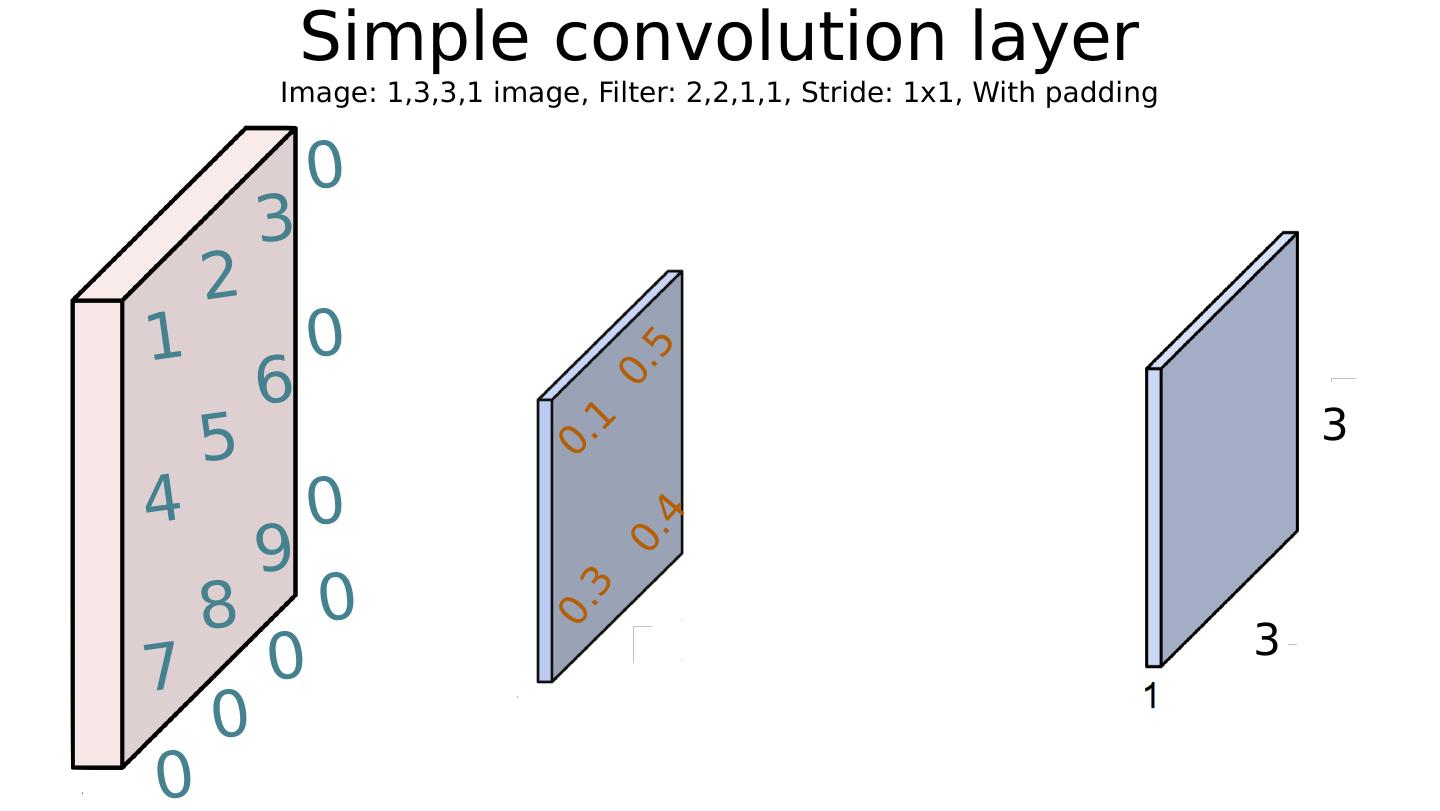

22 .Simple convolution layer
Image: 1,3,3,1 image, Filter: 2,2,1,1, Stride: 1x1, No Padding

Values shown on the slide: 0.1, 0.5, 0.3, 0.4 and 1, 2, 3, 4, 5, 6, 7, 8, 9
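To make the setup on this slide concrete, here is a minimal plain-Python sketch of a stride-1, no-padding convolution. It rests on two assumptions that the extracted slide text does not confirm: that the numbers 1 to 9 are the 3x3 image in row-major order, and that 0.1, 0.5, 0.3, 0.4 are the 2x2 filter in row-major order. The function name `conv2d_valid` is hypothetical. As in most deep learning libraries, the kernel is not flipped, so this is technically a cross-correlation.

```python
def conv2d_valid(image, kernel, stride=1):
    """Slide the kernel over the image with no padding and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    rows = (len(image) - kh) // stride + 1
    cols = (len(image[0]) - kw) // stride + 1
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


# Assumed layout of the slide's numbers (see the note above)
image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [0.1, 0.5],
    [0.3, 0.4],
]

result = conv2d_valid(image, kernel)
print([[round(v, 2) for v in row] for row in result])  # [[4.3, 5.6], [8.2, 9.5]]
```

A 3x3 image with a 2x2 filter, stride 1, and no padding yields a 2x2 output, since (3 - 2) / 1 + 1 = 2 along each dimension.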