- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/u3807/LiveBot?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

LiveBot: Generating Live Video Comments Based on Visual and Textual Contexts

展开查看详情

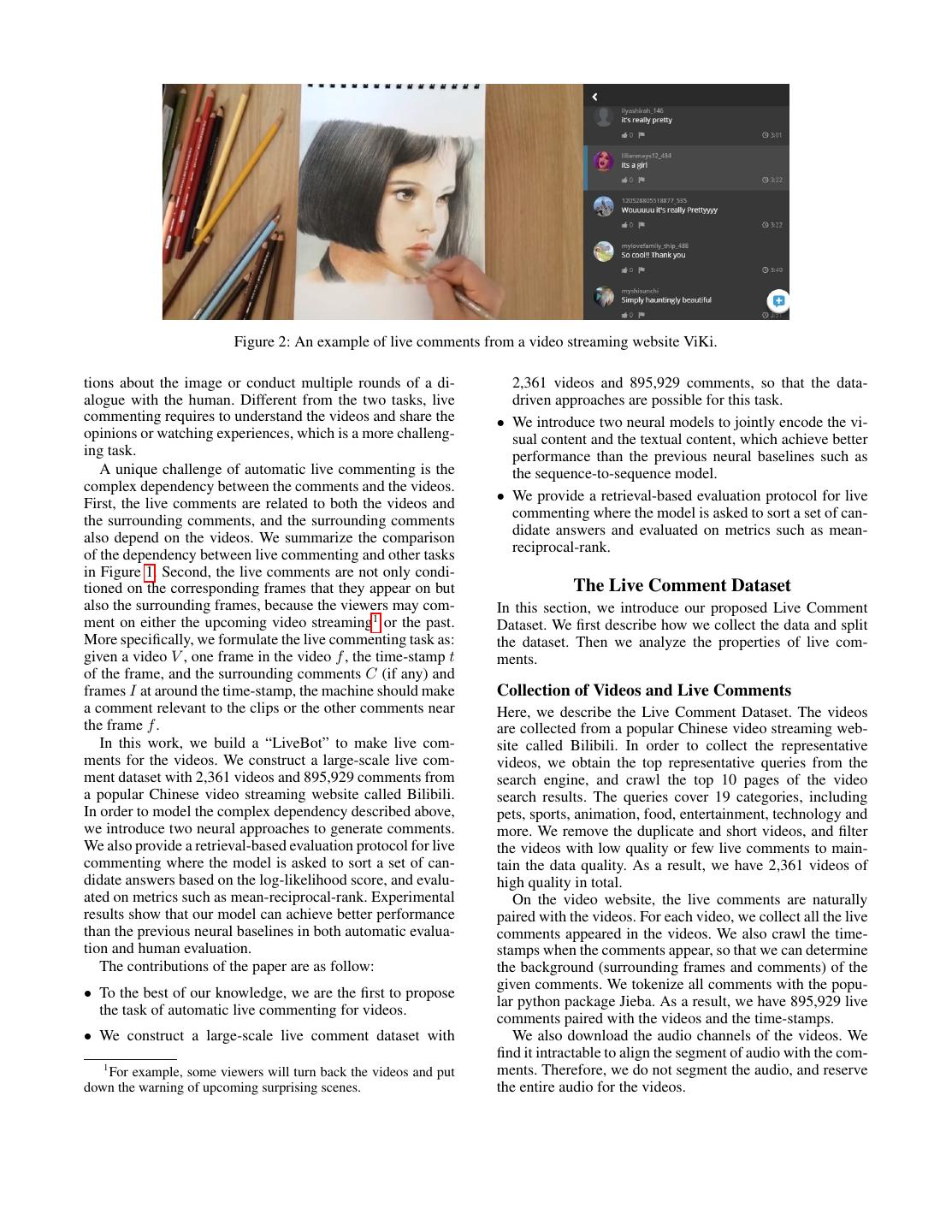

1 . LiveBot: Generating Live Video Comments Based on Visual and Textual Contexts Shuming Ma1∗ , Lei Cui2 , Damai Dai1 , Furu Wei2 , Xu Sun1 1 MOE Key Lab of Computational Linguistics, School of EECS, Peking University 2 Microsoft Research Asia {shumingma,daidamai,xusun}@pku.edu.cn {lecu,fuwei}@microsoft.com arXiv:1809.04938v2 [cs.CL] 29 Nov 2018 Abstract Vision Vision Language We introduce the task of automatic live commenting. Live commenting, which is also called “video barrage”, is an emerging feature on online video sites that allows real-time Language Language comments from viewers to fly across the screen like bullets Image captioning Visual question answering or roll at the right side of the screen. The live comments are a mixture of opinions for the video and the chit chats with other comments. Automatic live commenting requires Language Vision Language AI agents to comprehend the videos and interact with hu- man viewers who also make the comments, so it is a good testbed of an AI agent’s ability to deal with both dynamic vi- sion and language. In this work, we construct a large-scale Language Language live comment dataset with 2,361 videos and 895,929 live Machine translation Live commenting comments. Then, we introduce two neural models to gener- ate live comments based on the visual and textual contexts, Figure 1: The relationship between vision and language in which achieve better performance than previous neural base- lines such as the sequence-to-sequence model. Finally, we different tasks. provide a retrieval-based evaluation protocol for automatic live commenting where the model is asked to sort a set of in the comment section. These features have a tremendously candidate comments based on the log-likelihood score, and positive effect on the number of users, video clicks, and us- evaluated on metrics such as mean-reciprocal-rank. Putting it age duration. all together, we demonstrate the first “LiveBot”. The datasets and the codes can be found at https://github.com/ Motivated by the advantages of live comments for the lancopku/livebot. videos, we propose a novel task: automatic live comment- ing. The live comments are a mixture of the opinions for the video and the chit chats with other comments, so the task Introduction of living commenting requires AI agents to comprehend the The comments of videos bring many viewers fun and new videos and interact with human viewers who also make the ideas. Unfortunately, on many occasions, the videos and the comments. Therefore, it is a good testbed of an AI agent’s comments are separated, forcing viewers to make a trade- ability to deal with both dynamic vision and language. off between the two key elements. To address this problem, With the rapid progress at the intersection of vision and some video sites provide a new feature: viewers can put language, there are some tasks to evaluate an AI’s ability down the comments during watching videos, and the com- of dealing with both vision and language, including image ments will fly across the screen like bullets or roll at the right captioning (Donahue et al. 2017; Fang et al. 2015; Karpathy side of the screen. We show an example of live comments in and Fei-Fei 2017), video description (Rohrbach et al. 2015; Figure 2. The video is about drawing a girl, and the view- Venugopalan et al. 2015a; Venugopalan et al. 2015b), vi- ers share their watching experience and opinions with the sual question answering (Agrawal, Batra, and Parikh 2016; live comments, such as “simply hauntingly beautiful”. The Antol et al. 2015), and visual dialogue (Das et al. 2017). Live live comments make the video more interesting and appeal- commenting is different from all these tasks. Image caption- ing. Besides, live comments can also better engage viewers ing is to generate the textual description of an image, and and create a direct link among viewers, making their opin- video description aims to assign a description for a video. ions and responses more visible than the average comments Both the two tasks only require the machine to see and un- ∗ Joint work between Microsoft Research Asia and Peking Uni- derstand the images or the videos, rather than communicate versity with the human. Visual question answering and visual dia- Copyright c 2019, Association for the Advancement of Artificial logue take a significant step towards human-machine inter- Intelligence (www.aaai.org). All rights reserved. action. Given an image, the machine should answer ques-

2 . Figure 2: An example of live comments from a video streaming website ViKi. tions about the image or conduct multiple rounds of a di- 2,361 videos and 895,929 comments, so that the data- alogue with the human. Different from the two tasks, live driven approaches are possible for this task. commenting requires to understand the videos and share the • We introduce two neural models to jointly encode the vi- opinions or watching experiences, which is a more challeng- sual content and the textual content, which achieve better ing task. performance than the previous neural baselines such as A unique challenge of automatic live commenting is the the sequence-to-sequence model. complex dependency between the comments and the videos. First, the live comments are related to both the videos and • We provide a retrieval-based evaluation protocol for live the surrounding comments, and the surrounding comments commenting where the model is asked to sort a set of can- also depend on the videos. We summarize the comparison didate answers and evaluated on metrics such as mean- of the dependency between live commenting and other tasks reciprocal-rank. in Figure 1. Second, the live comments are not only condi- tioned on the corresponding frames that they appear on but The Live Comment Dataset also the surrounding frames, because the viewers may com- In this section, we introduce our proposed Live Comment ment on either the upcoming video streaming1 or the past. Dataset. We first describe how we collect the data and split More specifically, we formulate the live commenting task as: the dataset. Then we analyze the properties of live com- given a video V , one frame in the video f , the time-stamp t ments. of the frame, and the surrounding comments C (if any) and frames I at around the time-stamp, the machine should make Collection of Videos and Live Comments a comment relevant to the clips or the other comments near Here, we describe the Live Comment Dataset. The videos the frame f . are collected from a popular Chinese video streaming web- In this work, we build a “LiveBot” to make live com- site called Bilibili. In order to collect the representative ments for the videos. We construct a large-scale live com- videos, we obtain the top representative queries from the ment dataset with 2,361 videos and 895,929 comments from search engine, and crawl the top 10 pages of the video a popular Chinese video streaming website called Bilibili. search results. The queries cover 19 categories, including In order to model the complex dependency described above, pets, sports, animation, food, entertainment, technology and we introduce two neural approaches to generate comments. more. We remove the duplicate and short videos, and filter We also provide a retrieval-based evaluation protocol for live the videos with low quality or few live comments to main- commenting where the model is asked to sort a set of can- tain the data quality. As a result, we have 2,361 videos of didate answers based on the log-likelihood score, and evalu- high quality in total. ated on metrics such as mean-reciprocal-rank. Experimental On the video website, the live comments are naturally results show that our model can achieve better performance paired with the videos. For each video, we collect all the live than the previous neural baselines in both automatic evalua- comments appeared in the videos. We also crawl the time- tion and human evaluation. stamps when the comments appear, so that we can determine The contributions of the paper are as follow: the background (surrounding frames and comments) of the given comments. We tokenize all comments with the popu- • To the best of our knowledge, we are the first to propose lar python package Jieba. As a result, we have 895,929 live the task of automatic live commenting for videos. comments paired with the videos and the time-stamps. • We construct a large-scale live comment dataset with We also download the audio channels of the videos. We find it intractable to align the segment of audio with the com- 1 ments. Therefore, we do not segment the audio, and reserve For example, some viewers will turn back the videos and put down the warning of upcoming surprising scenes. the entire audio for the videos.

3 . (a) 0:48 (b) 1:52 (c) 3:41 Time Stamp Comments 0:48 橙猫是短腿吗 (Is the orange cat short leg?) 1:06 根本停不下来 (Simply can’t stop) 1:09 哎呀好可爱啊 (Oh so cute) 1:52 天哪这么多,天堂啊 (OMG, so many kittens, what a paradise!) 1:56 这么多只猫 (So many kittens!) 2:39 我在想猫薄荷对老虎也有用吗 (I am wondering whether the catmint works for the tiger.) 2:41 猫薄荷对老虎也有用 (Catmint also works for the tiger.) 3:41 活得不如猫 (The cat lives even better than me) 3:43 两个猫头挤在一起超可爱 (It’s so cute that two heads are together) Figure 3: A data example of a video paired with selected live comments in the Live Comment Dataset. Above are three selected frames from the videos to demonstrate the content. Below is several selected live comments paired with the time stamps when the comments appear on the screen. Table 3 shows an example of our data. The pictures above Statistic Train Test Dev Total are three selected frames to demonstrate a video about feed- #Video 2,161 100 100 2,361 ing cats. The table below includes several selected live com- #Comment 818,905 42,405 34,609 895,929 #Word 4,418,601 248,399 193,246 4,860,246 ments with the corresponding time-stamps. It shows that the Avg. Words 5.39 5.85 5.58 5.42 live comments are related to the frames where the comments Duration (hrs) 103.81 5.02 5.01 113.84 appear. For example, the video describes an orange cat fed with the catmint at 0:48, while the live comment at the same Table 1: Statistics information on the training, testing, and frame is asking “is the orange cat short leg?”. The com- development sets. ment is also related to the surrounding frames. For example, the video introduces three cats playing on the floor at 1:52, while the live comment at 1:56 is saying “So many kittens!”. Moreover, the comment is related to the surrounding com- ments. For example, the comment at 2:39 asks “whether the catmint works for the tiger”, and the comment at 2:41 re- 2014) are two popular action description datasets, which fo- sponds that “catmint also works for the tiger”. cus on the cooking domain. M-VAD (Torabi et al. 2015) and MPII-MD (Rohrbach et al. 2015) are the movie description Dataset Split datasets, while MovieQA (Tapaswi et al. 2016) is a popular movie question answering dataset. MSVD (Chen and Dolan To split the dataset into training, development and testing 2011) is a dataset for the task of paraphrasing, and MSR- sets, we separate the live comments according to the corre- VTT (Xu et al. 2016) is for video captioning. A major limi- sponding videos. The comments from the same videos will tation for these datasets is limited domains (i.e. cooking and not appear solely in the training or testing set to avoid over- movie) and small size of data (in terms of videos and sen- fitting. We split the data into 2,161, 100 and 100 videos in tences). Compared with these datasets, our Bilibili dataset the training, testing and development sets, respectively. Fi- is derived from a wide variety of video categories (19 cate- nally, the numbers of live comments are 818,905, 42,405, gories), which can benefit the generalization capability of and 34,609 in the training, testing, development sets. Table 1 model learning. In addition, the previous datasets are de- presents some statistics for each part of the dataset. signed for the tasks of description, question answering, para- phrasing, and captioning, where the patterns between the Data Statistics videos and the language are clear and obvious. Our dataset Table 2 lists the statistics and comparison among dif- is for the task of commenting, where the patterns and re- ferent datasets and tasks. We will release more data in lationship between the videos and the language are latent the future. Our Bilibili dataset is among the large-scale and difficult to learn. In summary, our BiliBili dataset repre- dataset in terms of videos (2,361) and sentences (895,929). sents one of the most comprehensive, diverse, and complex YouCook (Das et al. 2013), TACos-M-L (Rohrbach et al. datasets for video-to-text learning.

4 . Dataset Task #Video #Sentence 18 14 16 YouCook Action Description 88 2,668 12 14 TACos-M-L Action Description 185 52,593 10 Percentage (%) Percentage (%) 12 M-VAD Movie Description 92 52,593 10 8 MPII-MD Movie Description 94 68,375 8 6 MovieQA Question Answering 140 150,000 6 4 MSVD Paraphrasing 1,970 70,028 4 MSR-VTT Captioning 7,180 200,000 2 2 Bilibili Commenting 2,361 895,929 0 0 1 3 5 7 9 11 13 15 17 19 1 4 7 10 13 16 19 22 25 28 # Words # Characters Table 2: Comparison of different video-to-text datasets. (a) (b) Interval Edit Distance TF-IDF Human 0-1s 11.74 0.048 4.3 Figure 4: Distribution of lengths for comments in terms of 1-3s 11.79 0.033 4.1 both word-level and character-level. 3-5s 12.05 0.028 3.9 5-10s 12.42 0.025 3.1 >10s 12.26 0.015 2.2 the comments based on the visual contexts (surrounding frames) and the textual contexts (surrounding comments). Table 3: The average similarity between two comments at The two approaches are based on two popular architectures different intervals (Edit distance: lower is more similar; Tf- for text generation: recurrent neural network (RNN) and idf: higher is more similar; Human: higher is more similar). transformer. We denote two approaches as Fusional RNN Model and Unified Transformer Model, respectively. Analysis of Live Comments Here, we analyze the live comments in our dataset. We Problem Formulation demonstrate some properties of the live comments. Here, we provide the problem formulation and some nota- Distribution of Lengths Figure 4 shows the distribution tions. Given a video V , a time-stamp t, and the surrounding of the lengths for live comments in the training set. We comments C near the time-stamp (if any), the model should can see that most live comments consist of no more than 5 generate a comment y relevant to the clips or the other com- words or 10 characters. One reason is that 5 Chinese words ments near the time-stamp. Since the video is often long or 10 Chinese characters have contained enough informa- and there are sometimes many comments, it is impossible tion to communicate with others. The other reason is that to take the whole videos and all the comments as input. the viewers often make the live comments during watching Therefore, we reserve the nearest m frames2 and n com- the videos, so they prefer to make short and quick comments ments from the time-stamp t. We denote the m frames as rather than spend much time making long and detailed com- I = {I1 , I2 , · · · , Im }, and we concatenate the n comments ments. as C = {C1 , C2 , · · · , Cn }. The model aims at generating a comment y = {y1 , y2 , · · · , yk }, where k is the number of Correlation between Neighboring Comments We also words in the sentence. validate the correlation between the neighboring comments. For each comment, we select its 20 neighboring comments to form 20 comment pairs. Then, we calculate the sentence Model I: Fusional RNN Model similarities of these pairs in terms of three metrics, which are Figure 5 shows the architecture of the Fusional RNN model. edit distance, tf-idf, and human scoring. For human scoring, The model is composed of three parts: a video encoder, a we ask three annotators to score the semantic relevance be- text encoder, and a comment decoder. The video encoder en- tween two comments, and the score ranges from 1 to 5. We codes m consecutive frames with an LSTM layer on the top group the comment pairs according to their time intervals: of the CNN layer, and the text encoder encodes m surround- 0-1s, 1-3s, 3-5s, 5-10s, and more than 10s. We take the av- ing live comments into the vectors with an LSTM layer. Fi- erage of the scores of all comment pairs, and the results are nally, the comment decoder generates the live comment. summarized in Table 3. It shows that the comments with a larger interval are less similar both literally and semantically. Video Encoder In the video encoding part, each frame Ii Therefore, it concludes that the neighboring comments have is first encoded into a vector vi by a convolution layer. We higher correlation than the non-neighboring ones. then use an LSTM layer to encode all the frame vectors into their hidden states hi : Approaches to Live Commenting A challenge of making live comments is the complex depen- vi = CN N (Ii ) (1) dency between the comments and the videos. The comments are related to the surrounding comments and video clips, and hi = LST M (vi , hi−1 ) (2) the surrounding comments also rely on the videos. To model 2 this dependency, we introduce two approaches to generate We set the interval between frames as 1 second.

5 . Text Encoder Comment Decoder Outputs Video Encoder 𝑥1 𝑥2 … 𝑥𝑛 Softmax 𝐼1 ℎ1 𝑔1 𝑔2 … 𝑔𝑛 Text Encoder Feed 𝐼2 ℎ2 Attention Attention Forward Feed Multi-head … … 𝑠1 𝑠2 … 𝑠𝑘 Video Encoder Forward Attention 𝐼𝑚 ℎ𝑚 𝑦1 𝑦2 … 𝑦𝑘 Feed Multi-head Multi-head Forward Attention Attention Comment Decoder Figure 5: An illustration of Fusional RNN Model. Multi-head Multi-head Masked Attention Attention Multi-head Text Encoder In the comment encoding part, each sur- rounding comment Ci is first encoded into a series of word- level representations, using a word-level LSTM layer: CNN Embedding Embedding rij = LST M (Cij , rij−1 ) (3) Image Text Comment (i) We use the last hidden state riL as the representation for Ci denoted as xi . Then we use a sentence-level LSTM layer Figure 6: An illustration of Unified Transformer Model. with the attention mechanism to encode all the comments into sentence-level representation gi : Video Encoder Similar to Fusional RNN model, the video gˆi = LST M (xi , gi−1 ) (4) encoder first encodes each frame Ii into a vector vi with a convolution layer. Then, it uses a transformer layer to encode gi = Attention(ˆgi , h) (5) all the frame vectors into the final representation hi : With the help of attention, the comment representation con- vi = CN N (Ii ) (10) tains the information from videos. hi = T ransf ormer(vi , v) (11) Comment Decoder The model generates the comment based on both the surrounding comments and frames. There- Inside the transformer, each frame’s representation vi at- fore, the probability of generating a sentence is defined as: tends to a collection of the other representations v = {v1 , v2 , · · · , vm }. T p(y0 , ..., yT |h, g) = p(yt |y0 , ..., yt−1 , h, g) (6) Text Encoder Different from Fusion RNN model, we t=1 concatenate the comments into a word sequence e = {e1 , e2 , · · · , eL } as the input of text encoder, so that each More specifically, the probability distribution of word wi is words can contribute to the self-attention component di- calculated as follows, rectly. The representation of each words in the comments sˆi = LST M (yi−1 , si−1 ) (7) can be written as: si = Attention(ˆ si , h, g) (8) gi = T ransf ormer(ei , e, h) (12) p(wi |w0 , ..., wi−1 , h) = Sof tmax(W si ) (9) Inside the text encoder, there are two multi-head attention components, where the first one attends to the text input e and the second attends to the outputs h of video encoder. Model II: Unified Transformer Model Different from the hierarchical structure of Fusional RNN Comment Decoder We use a transformer layer to gener- model, the unified transformer model uses a linear structure ate the live comment. The probability of generating a sen- to capture the dependency between the comments and the tence is defined as: videos. Similar to Fusional RNN model, the unified trans- T former model consists of three parts: the video encoder, the p(y0 , ..., yT |h, g) = p(wt |w0 , ..., wt−1 , h, g) (13) text encoder, and the comment decoder. Figure 6 shows the t=1 architecture of the unified transformer model. In this part, we More specifically, the probability distribution of word yi is omit the details of the inner computation of the transformer calculated as: block, and refer the readers to the related work (Vaswani et al. 2017). si = T ransf ormer(yi , y, h, g) (14)

6 . p(yi |y0 , ..., yi−1 , h, g) = Sof tmax(W si ) (15) Ba 2014) optimization method to train our models. For the Inside the comment decoder, there are three multi-head at- hyper-parameters of Adam optimizer, we set the learning tention components, where the first one attends to the com- rate α = 0.0003, two momentum parameters β1 = 0.9 and ment input y and the last two attend to the outputs of video β2 = 0.999 respectively, and = 1 × 10−8 . encoder h and text encoder g, respectively. Baselines Evaluation Metrics • S2S-I (Vinyals et al. 2015) applies the CNN to encode the frames, based on which the decoder generates the target The comments can be various for a video, and it is in- live comment. This model only uses the video as the input. tractable to find out all the possible references to be com- pared with the model outputs. Therefore, most of the • S2S-C (Sutskever, Vinyals, and Le 2014) applies an reference-based metrics for generation tasks like BLEU and LSTM to make use of the surrounding comments, based ROUGE are not appropriate to evaluate the comments. In- on which the decoder generates the comments. This spired by the evaluation methods of dialogue models (Das model can be regarded as the traditional sequence-to- et al. 2017), we formulate the evaluation as a ranking prob- sequence model, which only uses the surrounding com- lem. The model is asked to sort a set of candidate comments ments as input. based on the log-likelihood score. Since the model generates • S2S-IC is similar to (Venugopalan et al. 2015a) which the comments with the highest scores, it is reasonable to dis- makes use of both the visual and textual information. In criminate a good model according to its ability to rank the our implementation, the model has two encoders to en- correct comments on the top of the candidates. The candi- code the images and the comments respectively. Then, we date comment set consists of the following parts: concatenate the outputs of two encoders to decode the out- • Correct: The ground-truth comments of the correspond- put comments with an LSTM decoder. ing videos provided by the human. Results • Plausible: The 50 most similar comments to the title of the video. We use the title of the video as the query to re- At the training stage, we train S2S-IC, Fusional RNN, and trieval the comments that appear in the training set based Unified Transformer with both videos and comments. S2S- on the cosine similarity of their tf-idf values. We select I is trained with only videos, while S2S-C is trained with the top 30 comments that are not the correct comments as only comments. At the testing stage, we evaluate these mod- the plausible comments. els under three settings: video only, comment only, and both video and comment. Video only means that the model only • Popular: The 20 most popular comments from the uses the images as inputs (5 nearest frames), which simu- dataset. We count the frequency of each comment in lates the case when no surrounding comment is available. the training set, and select the 20 most frequent com- Comment only means that the model only takes input of the ments to form the popular comment set. The popular com- surrounding comments (5 nearest comments), which simu- ments are the general and meaningless comments, such lates the case when the video is of low quality. Both de- as “2333”, “Great”, “hahahaha”, and “Leave a comment”. notes the case when both the videos and the surrounding These comments are dull and do not carry any informa- comments are available for the models (5 nearest frames and tion, so they are regarded as incorrect comments. comments). • Random: After selecting the correct, plausible, and pop- Table 4 summarizes the results of the baseline models and ular comments, we fill the candidate set with randomly the proposed models under three settings. It shows that our selected comments from the training set so that there are proposed models outperform the baseline models in terms 100 unique comments in the candidate set. of all evaluation metrics under all settings, which demon- Following the previous work (Das et al. 2017), We mea- strates the effectiveness of our proposed models. Moreover, sure the rank in terms of the following metrics: Recall@k it concludes that given both videos and comments the same (the proportion of human comments found in the top-k rec- models can achieve better performance than those with only ommendations), Mean Rank (the mean rank of the human videos or comments. Finally, the models with only com- comments), Mean Reciprocal Rank (the mean reciprocal ments as input outperform the models with only videos as rank of the human comments). input, mainly because the surrounding comments can pro- vide more direct information for making the next comments. Experiments Human Evaluation Settings The retrieval evaluation protocol evaluates the ability to dis- For both models, the vocabulary is limited to the 30,000 criminate the good comments and the bad comments. We most common words in the training dataset. We use a shared also would like to evaluate the ability to generate human- embedding between encoder and decoder and set the word like comments. However, the existing generative evaluation embedding size to 512. For the encoding CNN, we use a metrics, such as BLEU and ROUGE, are not reliable, be- pretrained resnet with 18 layers provided by the Pytorch cause the reference comments can be various. Therefore, we package. For both models, the batch size is 64, and the conduct human evaluation to evaluate the outputs of each hidden dimension is 512. We use the Adam (Kingma and model.

7 . Model #I #C Recall@1 Recall@5 Recall@10 MR MRR S2S-I 5 0 4.69 19.93 36.46 21.60 0.1451 S2S-IC 5 0 5.49 20.71 38.35 20.15 0.1556 Video Only Fusional RNN 5 0 10.05 31.15 48.12 19.53 0.2217 Unified Transformer 5 0 11.40 32.62 50.47 18.12 0.2311 S2S-C 0 5 9.12 28.05 44.26 19.76 0.2013 S2S-IC 0 5 10.45 30.91 46.84 18.06 0.2194 Comment Only Fusional RNN 0 5 13.15 34.71 52.10 17.51 0.2487 Unified Transformer 0 5 13.95 34.57 51.57 17.01 0.2513 S2S-IC 5 5 12.89 33.78 50.29 17.05 0.2454 Both Fusional RNN 5 5 17.25 37.96 56.10 16.14 0.2710 Unified Transformer 5 5 18.01 38.12 55.78 16.01 0.2753 Table 4: The performance of the baseline models and the proposed models. (#I: the number of input frames used at the testing stage; #C: the number of input comments used at the testing stage; Recall@k, MRR: higher is better; MR: lower is better) Model Fluency Relevance Correctness (Sutskever, Vinyals, and Le 2014; Cho et al. 2014; Bah- S2S-IC 4.07 2.23 2.91 danau, Cho, and Bengio 2014), Vinyals et al. (2015) and Fusion 4.45 2.95 3.34 Mao et al. (2014) proposed to use a deep convolutional neu- Transformer 4.31 3.07 3.45 ral network to encode the image and a recurrent neural net- Human 4.82 3.31 4.11 work to generate the image captions. Xu et al. (2015) further Table 5: Results of human evaluation metrics on the test set proposed to apply attention mechanism to focus on certain (higher is better). All these models are trained and tested parts of the image when decoding. Using CNN to encode the give both videos and surrounding comments. image while using RNN to decode the description is natural and effective when generating textual descriptions. One task that is similar to live comment generation is im- We evaluate the generated comments in three aspects: age caption generation, which is an area that has been stud- Fluency is designed to measure whether the generated live ied for a long time. Farhadi et al. (2010) tried to generate comments are fluent setting aside the relevance to videos. descriptions of an image by retrieving from a big sentence Relevance is designed to measure the relevance between the pool. Kulkarni et al. (2011) proposed to generate descrip- generated live comments and the videos. Correctness is de- tions based on the parsing result of the image with a sim- signed to synthetically measure the confidence that the gen- ple language model. These systems are often applied in a erated live comments are made by humans in the context of pipeline fashion, and the generated description is not cre- the video. For all of the above three aspects, we stipulate the ative. More recent work is to use stepwise merging network score to be an integer in {1, 2, 3, 4, 5}. The higher the bet- to improve the performance (Liu et al. 2018). ter. The scores are evaluated by three human annotators and Another task which is similar to this work is video cap- finally we take the average of three raters as the final result. tion generation. Venugopalan et al. (2015a) proposed to use We compare our Fusional RNN model and Unified Trans- CNN to extract image features, and use LSTM to encode former model with the strong baseline model S2S-IC. All them and decode a sentence. Similar models(Shetty and these models are trained and tested give both videos and Laaksonen 2016; Jin et al. 2016; Ramanishka et al. 2016; surrounding comments. As shown in Table 5, our models Dong et al. 2016; Pasunuru and Bansal 2017; Shen et al. achieve higher scores over the baseline model in all three 2017) are also proposed to handle the task of video caption degrees, which demonstrates the effectiveness of the pro- generation. Das et al. (2017) introduce the task of Visual posed models. We also evaluate the reference comments in Dialog, which requires an AI agent to answer a question the test set, which are generated by the human. It shows that about an image when given the image and a dialogue history. the comments from human achieve high fluency and cor- Moreover, we are also inspired by the recent related work of rectness scores. However, the relevance score is lower than natural language generation models with the text inputs (Ma the fluency and correctness, mainly because the comments et al. 2018; Xu et al. 2018). are not always relevant to the videos, but with the surround- ing comments. According to the table, it also concludes that Conclusions the comments from unified transformer are almost near to those of real-world live comments. We use Spearman’s Rank We propose the tasks of automatic live commenting, and correlation coefficients to evaluate the agreement among the construct a large-scale live comment dataset. We also in- raters. The coefficients between any two raters are all near troduce two neural models to generate the comments which 0.6 and at an average of 0.63. These high coefficients show jointly encode the visual contexts and textual contexts. Ex- that our human evaluation scores are consistent and credible. perimental results show that our models can achieve better performance than the previous neural baselines. Related Work Acknowledgement Inspired by the great success achieved by the sequence- We thank the anonymous reviewers for their thoughtful com- to-sequence learning framework in machine translation ments. This work was supported in part by National Natural

8 . Science Foundation of China (No. 61673028). Xu Sun is the [Kingma and Ba 2014] Kingma, D. P., and Ba, J. 2014. corresponding author of this paper. Adam: A method for stochastic optimization. CoRR abs/1412.6980. References [Kulkarni et al. 2011] Kulkarni, G.; Premraj, V.; Dhar, S.; Li, [Agrawal, Batra, and Parikh 2016] Agrawal, A.; Batra, D.; S.; Choi, Y.; Berg, A. C.; and Berg, T. L. 2011. Baby and Parikh, D. 2016. Analyzing the behavior of visual ques- talk: Understanding and generating simple image descrip- tion answering models. In EMNLP 2016, 1955–1960. tions. 1601–1608. [Liu et al. 2018] Liu, F.; Ren, X.; Liu, Y.; Wang, H.; and [Antol et al. 2015] Antol, S.; Agrawal, A.; Lu, J.; Mitchell, Sun, X. 2018. Stepwise image-topic merging network for M.; Batra, D.; Zitnick, C. L.; and Parikh, D. 2015. VQA: generating detailed and comprehensive image captions. In visual question answering. In ICCV 2015, 2425–2433. EMNLP 2018. [Bahdanau, Cho, and Bengio 2014] Bahdanau, D.; Cho, K.; [Ma et al. 2018] Ma, S.; Sun, X.; Li, W.; Li, S.; Li, W.; and Bengio, Y. 2014. Neural machine translation by and Ren, X. 2018. Query and output: Generating words jointly learning to align and translate. arXiv preprint by querying distributed word representations for paraphrase arXiv:1409.0473. generation. In NAACL-HLT 2018, 196–206. [Chen and Dolan 2011] Chen, D., and Dolan, W. B. 2011. [Mao et al. 2014] Mao, J.; Xu, W.; Yang, Y.; Wang, J.; and Collecting highly parallel data for paraphrase evaluation. In Yuille, A. L. 2014. Explain images with multimodal recur- ACL 2011, 190–200. rent neural networks. arXiv: Computer Vision and Pattern [Cho et al. 2014] Cho, K.; Van Merri¨enboer, B.; Gulcehre, Recognition. C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; and Bengio, [Pasunuru and Bansal 2017] Pasunuru, R., and Bansal, M. Y. 2014. Learning phrase representations using rnn encoder- 2017. Multi-task video captioning with video and entail- decoder for statistical machine translation. arXiv preprint ment generation. arXiv preprint arXiv:1704.07489. arXiv:1406.1078. [Ramanishka et al. 2016] Ramanishka, V.; Das, A.; Park, [Das et al. 2013] Das, P.; Xu, C.; Doell, R. F.; and Corso, J. J. D. H.; Venugopalan, S.; Hendricks, L. A.; Rohrbach, M.; 2013. A thousand frames in just a few words: Lingual de- and Saenko, K. 2016. Multimodal video description. In scription of videos through latent topics and sparse object Proceedings of the 2016 ACM on Multimedia Conference, stitching. In CVPR 2013, 2634–2641. 1092–1096. ACM. [Das et al. 2017] Das, A.; Kottur, S.; Gupta, K.; Singh, A.; [Rohrbach et al. 2014] Rohrbach, A.; Rohrbach, M.; Qiu, Yadav, D.; Moura, J. M.; Parikh, D.; and Batra, D. 2017. W.; Friedrich, A.; Pinkal, M.; and Schiele, B. 2014. Co- Visual dialog. In CVPR 2017, 1080–1089. herent multi-sentence video description with variable level [Donahue et al. 2017] Donahue, J.; Hendricks, L. A.; of detail. In GCPR 2014, 184–195. Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, [Rohrbach et al. 2015] Rohrbach, A.; Rohrbach, M.; Tandon, K.; and Darrell, T. 2017. Long-term recurrent convolutional N.; and Schiele, B. 2015. A dataset for movie description. networks for visual recognition and description. IEEE In CVPR 2015, 3202–3212. Trans. Pattern Anal. Mach. Intell. 39(4):677–691. [Shen et al. 2017] Shen, Z.; Li, J.; Su, Z.; Li, M.; Chen, Y.; [Dong et al. 2016] Dong, J.; Li, X.; Lan, W.; Huo, Y.; and Jiang, Y.-G.; and Xue, X. 2017. Weakly supervised dense Snoek, C. G. 2016. Early embedding and late reranking video captioning. In CVPR 2017, volume 2, 10. for video captioning. In Proceedings of the 2016 ACM on [Shetty and Laaksonen 2016] Shetty, R., and Laaksonen, J. Multimedia Conference, 1082–1086. ACM. 2016. Frame-and segment-level features and candidate pool [Fang et al. 2015] Fang, H.; Gupta, S.; Iandola, F. N.; Srivas- evaluation for video caption generation. In Proceedings tava, R. K.; Deng, L.; Doll´ar, P.; Gao, J.; He, X.; Mitchell, of the 2016 ACM on Multimedia Conference, 1073–1076. M.; Platt, J. C.; Zitnick, C. L.; and Zweig, G. 2015. From ACM. captions to visual concepts and back. In CVPR 2015, 1473– [Sutskever, Vinyals, and Le 2014] Sutskever, I.; Vinyals, O.; 1482. and Le, Q. V. 2014. Sequence to sequence learning with neu- [Farhadi et al. 2010] Farhadi, A.; Hejrati, M.; Sadeghi, ral networks. In Advances in neural information processing M. A.; Young, P.; Rashtchian, C.; Hockenmaier, J.; and systems, 3104–3112. Forsyth, D. A. 2010. Every picture tells a story: generat- [Tapaswi et al. 2016] Tapaswi, M.; Zhu, Y.; Stiefelhagen, R.; ing sentences from images. 15–29. Torralba, A.; Urtasun, R.; and Fidler, S. 2016. Movieqa: Un- [Jin et al. 2016] Jin, Q.; Chen, J.; Chen, S.; Xiong, Y.; and derstanding stories in movies through question-answering. Hauptmann, A. 2016. Describing videos using multi-modal In CVPR 2016, 4631–4640. fusion. In Proceedings of the 2016 ACM on Multimedia [Torabi et al. 2015] Torabi, A.; Pal, C. J.; Larochelle, H.; and Conference, 1087–1091. ACM. Courville, A. C. 2015. Using descriptive video services [Karpathy and Fei-Fei 2017] Karpathy, A., and Fei-Fei, L. to create a large data source for video annotation research. 2017. Deep visual-semantic alignments for generating im- CoRR abs/1503.01070. age descriptions. IEEE Trans. Pattern Anal. Mach. Intell. [Vaswani et al. 2017] Vaswani, A.; Shazeer, N.; Parmar, N.; 39(4):664–676. Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, L.; and Polo-

9 . sukhin, I. 2017. Attention is all you need. In NIPS 2017, 6000–6010. [Venugopalan et al. 2015a] Venugopalan, S.; Rohrbach, M.; Donahue, J.; Mooney, R. J.; Darrell, T.; and Saenko, K. 2015a. Sequence to sequence - video to text. In ICCV 2015, 4534–4542. [Venugopalan et al. 2015b] Venugopalan, S.; Xu, H.; Don- ahue, J.; Rohrbach, M.; Mooney, R. J.; and Saenko, K. 2015b. Translating videos to natural language using deep re- current neural networks. In NAACL HLT 2015, 1494–1504. [Vinyals et al. 2015] Vinyals, O.; Toshev, A.; Bengio, S.; and Erhan, D. 2015. Show and tell: A neural image caption generator. In CVPR 2015, 3156–3164. [Xu et al. 2015] Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; and Bengio, Y. 2015. Show, attend and tell: Neural image caption generation with visual attention. In ICML 2015, 2048–2057. [Xu et al. 2016] Xu, J.; Mei, T.; Yao, T.; and Rui, Y. 2016. MSR-VTT: A large video description dataset for bridging video and language. In CVPR 2016, 5288–5296. [Xu et al. 2018] Xu, J.; Sun, X.; Zeng, Q.; Ren, X.; Zhang, X.; Wang, H.; and Li, W. 2018. Unpaired sentiment-to- sentiment translation: A cycled reinforcement learning ap- proach. In ACL 2018, 979–988.