- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

MemNet: A Persistent Memory Network for Image Restoration

展开查看详情

1 . MemNet: A Persistent Memory Network for Image Restoration Ying Tai∗ 1 , Jian Yang1 , Xiaoming Liu2 , and Chunyan Xu1 1 Department of Computer Science and Engineering, Nanjing University of Science and Technology 2 Department of Computer Science and Engineering, Michigan State University {taiying, csjyang, cyx}@njust.edu.cn, liuxm@cse.msu.edu arXiv:1708.02209v1 [cs.CV] 7 Aug 2017 Abstract (a) Plain structure Recently, very deep convolutional neural networks (CNNs) have been attracting considerable attention in im- age restoration. However, as the depth grows, the long-term (b) Skip connections dependency problem is rarely realized for these very deep models, which results in the prior states/layers having lit- tle influence on the subsequent ones. Motivated by the fact Gate Unit that human thoughts have persistency, we propose a very Recursive Unit deep persistent memory network (MemNet) that introduces a memory block, consisting of a recursive unit and a gate (c) Proposed memory block unit, to explicitly mine persistent memory through an adap- Figure 1. Prior network structures (a,b) and our memory block (c). tive learning process. The recursive unit learns multi-level The blue circles denote a recursive unit with an unfolded structure representations of the current state under different recep- which generates the short-term memory. The green arrow denotes tive fields. The representations and the outputs from the the long-term memory from the previous memory blocks that is previous memory blocks are concatenated and sent to the directly passed to the gate unit. gate unit, which adaptively controls how much of the pre- vious states should be reserved, and decides how much of the additive noise. With this mathematical model, extensive the current state should be stored. We apply MemNet to studies are conducted on many image restoration tasks, e.g., three image restoration tasks, i.e., image denosing, super- image denoising [2, 5, 9, 37], single-image super-resolution resolution and JPEG deblocking. Comprehensive exper- (SISR) [15, 38] and JPEG deblocking [18, 26]. iments demonstrate the necessity of the MemNet and its As three classical image restoration tasks, image de- unanimous superiority on all three tasks over the state of noising aims to recover a clean image from a noisy ob- the arts. Code is available at https://github.com/ servation, which commonly assumes additive white Gaus- tyshiwo/MemNet. sian noise with a standard deviation σ; single-image super- resolution recovers a high-resolution (HR) image from a low-resolution (LR) image; and JPEG deblocking removes 1. Introduction the blocking artifact caused by JPEG compression [7]. Image restoration [29] is a classical problem in low-level Recently, due to the powerful learning ability, very deep computer vision, which estimates an uncorrupted image convolutional neural network (CNN) is widely used to from a noisy or blurry one. A corrupted low-quality image tackle the image restoration tasks. Kim et al. construct a x can be represented as: x = D(˜ x) + n, where x ˜ is a high- 20-layer CNN structure named VDSR for SISR [20], and quality version of x, D is the degradation function and n is adopts residual learning to ease training difficulty. To con- trol the parameter number of very deep models, the authors ∗ This work was supported by the National Science Fund of China un- further introduce a recursive layer and propose a Deeply- der Grant Nos. 91420201, 61472187, 61502235, 61233011, 61373063 and 61602244, the 973 Program No. 2014CB349303, Program for Recursive Convolutional Network (DRCN) [21]. To mite- Changjiang Scholars, and partially sponsored by CCF-Tencent Open Re- gate training difficulty, Mao et al. [27] introduce symmetric search Fund. Jian Yang and Xiaoming Liu are corresponding authors. skip connections into a 30-layer convolutional auto-encoder

2 .network named RED for image denoising and SISR. More- connected structure helps compensate mid/high-frequency over, Zhang et al. [40] propose a denoising convolutional signals, and ensures maximum information flow between neural network (DnCNN) to tackle image denoising, SISR memory blocks as well. To the best of our knowledge, it is and JPEG deblocking simultaneously. by far the deepest network for image restoration. The conventional plain CNNs, e.g., VDSR [20], The same MemNet structure achieves the state-of-the- DRCN [21] and DnCNN [40] (Fig. 1(a)), adopt the single- art performance in image denoising, super-resolution and path feed-forward architecture, where one state is mainly in- JPEG deblocking. Due to the strong learning ability, our fluenced by its direct former state, namely short-term mem- MemNet can be trained to handle different levels of corrup- ory. Some variants of CNNs, RED [27] and ResNet [12] tion even using a single model. (Fig. 1(b)), have skip connections to pass information across several layers. In these networks, apart from the 2. Related Work short-term memory, one state is also influenced by a spe- The success of AlexNet [22] in ImageNet [31] starts the cific prior state, namely restricted long-term memory. In era of deep learning for vision, and the popular networks, essence, recent evidence suggests that mammalian brain GoogleNet [33], Highway network [32], ResNet [12], re- may protect previously-acquired knowledge in neocortical veal that the network depth is of crucial importance. circuits [4]. However, none of above CNN models has such As the early attempt, Jain et al. [17] proposed a simple mechanism to achieve persistent memory. As the depth CNN to recover a clean natural image from a noisy observa- grows, they face the issue of lacking long-term memory. tion and achieved comparable performance with the wavelet To address this issue, we propose a very deep persis- methods. As the pioneer CNN model for SISR, super- tent memory network (MemNet), which introduces a mem- resolution convolutional neural network (SRCNN) [8] pre- ory block to explicitly mine persistent memory through an dicts the nonlinear LR-HR mapping via a fully deep con- adaptive learning process. In MemNet, a Feature Extrac- volutional network, which significantly outperforms classi- tion Net (FENet) first extracts features from the low-quality cal shallow methods. The authors further proposed an ex- image. Then, several memory blocks are stacked with a tended CNN model, named Artifacts Reduction Convolu- densely connected structure to solve the image restoration tional Neural Networks (ARCNN) [7], to effectively handle task. Finally, a Reconstruction Net (ReconNet) is adopted JPEG compression artifacts. to learn the residual, rather than the direct mapping, to ease To incorporate task-specific priors, Wang et al. adopted the training difficulty. a cascaded sparse coding network to fully exploit the nat- As the key component of MemNet, a memory block con- ural sparsity of images [36]. In [35], a deep dual-domain tains a recursive unit and a gate unit. Inspired by neuro- approach is proposed to combine both the prior knowl- science [6, 25] that recursive connections ubiquitously ex- edge in the JPEG compression scheme and the practice of ist in the neocortex, the recursive unit learns multi-level dual-domain sparse coding. Guo et al. [10] also proposed representations of the current state under different recep- a dual-domain convolutional network that jointly learns a tive fields (blue circles in Fig. 1(c)), which can be seen as very deep network in both DCT and pixel domains. the short-term memory. The short-term memory generated Recently, very deep CNNs become popular for image from the recursive unit, and the long-term memory gener- restoration. Kim et al. [20] stacked 20 convolutional lay- ated from the previous memory blocks 1 (green arrow in ers to exploit large contextual information. Residual learn- Fig. 1(c)) are concatenated and sent to the gate unit, which ing and adjustable gradient clipping are used to speed up is a non-linear function to maintain persistent memory. Fur- the training. Zhang et al. [40] introduced batch normal- ther, we present an extended multi-supervised MemNet, ization into a DnCNN model to jointly handle several im- which fuses all intermediate predictions of memory blocks age restoration tasks. To reduce the model complexity, the to boost the performance. DRCN model introduced recursive-supervision and skip- In summary, the main contributions of this work include: connection to mitigate the training difficulty [21]. Using A memory block to accomplish the gating mechanism symmetric skip connections, Mao et al. [27] proposed a to help bridge the long-term dependencies. In each memory very deep convolutional auto-encoder network for image block, the gate unit adaptively learns different weights for denoising and SISR. Very Recently, Lai et al. [23] pro- different memories, which controls how much of the long- posed LapSRN to address the problems of speed and ac- term memory should be reserved, and decides how much of curacy for SISR, which operates on LR images directly and the short-term memory should be stored. progressively reconstruct the sub-band residuals of HR im- A very deep end-to-end persistent memory network (80 ages. Tai et al. [34] proposed deep recursive residual net- convolutional layers) for image restoration. The densely work (DRRN) to address the problems of model parameters 1 For the first memory block, its long-term memory comes from the and accuracy, which recursively learns the residual unit in a output of FENet. multi-path model.

3 . B0 B1 Bm BM FENet Memory block 1 ... Memory block m ... Memory block M ReconNet fext frec x y Skip connection from input Long path transmission to Short path transmission to the ReconNet the gate unit Figure 2. Basic MemNet architecture. The red dashed box represents multiple stacked memory blocks. 3. MemNet for Image Restoration we use a residual building block, which is introduced in 3.1. Basic Network Architecture ResNet [12] and shows powerful learning ability for object Our MemNet consists of three parts: a feature extraction recognition, as a recursion in the recursive unit. A residual net FENet, multiple stacked memory blocks and finally a building block in the m-th memory block is formulated as, reconstruction net ReconNet (Fig. 2). Let’s denote x and y r Hm r−1 = Rm (Hm r−1 ) = F(Hm r−1 , Wm ) + Hm , (5) as the input and output of MemNet. Specifically, a convo- lutional layer is used in FENet to extract the features from r−1 r where Hm , Hm are the input and output of the r-th resid- the noisy or blurry input image, 0 ual building block respectively. When r = 1, Hm = Bm−1 . F denotes the residual function, Wm is the weight set to B0 = fext (x), (1) be learned and R denotes the function of residual build- where fext denotes the feature extraction function and B0 ing block. Specifically, each residual function contains two is the extracted feature to be sent to the first memory block. convolutional layers with the pre-activation structure [13], Supposing M memory blocks are stacked to act as the fea- r−1 2 1 r−1 F(Hm , Wm ) = Wm τ (Wm τ (Hm )), (6) ture mapping, we have where τ denotes the activation function, including batch Bm = Mm (Bm−1 ) = Mm (Mm−1 (...(M1 (B0 ))...)), i normalization [16] followed by ReLU [30], and Wm ,i = (2) 1, 2 are the weights of the i-th convolutional layer. The bias where Mm denotes the m-th memory block function and terms are omitted for simplicity. Bm−1 and Bm are the input and output of the m-th mem- Then, several recursions are recursively learned to gen- ory block respectively. Finally, instead of learning the direct erate multi-level representations under different receptive mapping from the low-quality image to the high-quality im- fields. We call these representations as the short-term mem- age, our model uses a convolutional layer in ReconNet to ory. Supposing there are R recursions in the recursive unit, reconstruct the residual image [20, 21, 40]. Therefore, our the r-th recursion in recursive unit can be formulated as, basic MemNet can be formulated as, r Hm = R(r) m (Bm−1 ) = Rm (Rm (...(Rm (Bm−1 ))...)), (7) y = D(x) (3) r = frec (MM (MM −1 (...(M1 (fext (x)))...))) + x, where r-fold operations of Rm are performed and where frec denotes the reconstruction function and D de- {Hmr R }r=1 are the multi-level representations of the re- notes the function of our basic MemNet. cursive unit. These representations are concatenated as Given a training set {x(i) , x ˜ (i) }N i=1 , where N is the num- the short-term memory: Bm short = [Hm 1 2 , Hm R , ..., Hm ]. ber of training patches and x ˜ (i) is the ground truth high- In addition, the long-term memory coming from the pre- quality patch of the low-quality patch x(i) , the loss function vious memory blocks can be constructed as: Bm long = of our basic MemNet with the parameter set Θ, is [B0 , B1 , ..., Bm−1 ]. The two types of memories are then N concatenated as the input to the gate unit, 1 L(Θ) = ˜ (i) − D(x(i) ) 2 , x (4) gate short long 2N Bm = [Bm , Bm ]. (8) i=1 Gate Unit is used to achieve persistent memory through 3.2. Memory Block an adaptive learning process. In this paper, we adopt a 1 × We now present the details of our memory block. The 1 convolutional layer to accomplish the gating mechanism memory block contains a recursive unit and a gate unit. that can learn adaptive weights for different memories, Recursive Unit is used to model a non-linear function that gate gate gate gate acts like a recursive synapse in the brain [6, 25]. Here, Bm = fm (Bm ) = Wm τ (Bm ), (9)

4 . Output 1 w1 ... ... ReconNet wm Final Output m Output ... ... Input FENet Memory block 1 ... Memory block m ... Memory block M Output M wM Skip connection from input Long path transmission to Transmission from memory Short path transmission to the ReconNet the gate unit block to ReconNet Figure 3. Multi-supervised MemNet architecture. The outputs with purple color are supervised. gate MemNet_NL_4 MemNet_NL_6 MemNet_4 MemNet_6 where fm and Bm denote the function of the 1 × 1 con- gate volutional layer (parameterized by Wm ) and the output of the m-th memory block, respectively. As a result, the (a) weights for the long-term memory controls how much of the previous states should be reserved, and the weights for the short-term memory decides how much of the current 27.31/0.9078 27.45/0.9101 27.29/0.9070 27.71/0.9142 state should be stored. Therefore, the formulation of the m-th memory block can be written as, (b) Bm = Mm (Bm−1 ) = fgate ([Rm (Bm−1 ), ..., R(R) m (Bm−1 ), B0 , ..., Bm−1 ]). Low frequency High frequency (10) 3.3. Multi-Supervised MemNet (c) To further explore the features at different states, inspired by [21], we send the output of each memory block to the MemNet_NL_4-MemNet_NL_6 MemNet_4-MemNet_6 MemNet_4-MemNet_NL_4 MemNet_6-MemNet_NL_6 same reconstruction net fˆrec to generate Figure 4. (a) ×4 super-resolved images and PSNR/SSIMs of dif- ym = fˆrec (x, Bm ) = x + frec (Bm ), (11) ferent networks. (b) We convert 2-D power spectrums to 1-D spec- tral densities by integrating the spectrums along each concentric where {ym }M m=1 are the intermediate predictions. All of circle. (c) Differences of spectral densities of two networks. the predictions are supervised during training, and used to compute the final output via weighted averaging: y = high-frequency signals. To verify our intuition, we train M M m=1 wm · ym (Fig. 3). The optimal weights {wm }m=1 a 80-layer MemNet without long-term connections, which are automatically learned during training and the final out- is denoted as MemNet NL, and compare with the original put from the ensemble is also supervised. The loss function MemNet. Both networks have 6 memory blocks leading to of our multi-supervised MemNet can be formulated as, 6 intermediate outputs, and each memory block contains 6 N M recursions. Fig. 4(a) shows the 4th and 6th outputs of both α networks. We compute their power spectrums, center them, L(Θ) = ˜ (i) − x (i) wm · ym 2 2N m=1 estimate spectral densities for a continuous set of frequency i=1 (12) ranges from low to high by placing concentric circles, and M N 1−α (i) (i) 2 plot the densities of four outputs in Fig. 4(b). + ˜ x − ym , 2M N m=1 i=1 We further plot differences of these densities in Fig. 4(c). where α denotes the loss weight. From left to right, the first case indicates the earlier layer does contain some mid-frequency information that the latter 3.4. Dense Connections for Image Restoration layers lose. The 2nd case verifies that with dense connec- Now we analyze why the long-term dense connections tions, the latter layer absorbs the information carried from in MemNet may benefit the image restoration. In very the previous layers, and even generate more mid-frequency deep networks, some of the mid/high-frequency informa- information. The 3rd case suggests in earlier layers, the tion can get lost at latter layers during a typical feedfor- frequencies are similar between two models. The last case ward CNN process, and dense connections from previ- demonstrates the MemNet recovers more high frequency ous layers can compensate such loss and further enhance than the version without long-term connections.

5 .4. Discussions Methods MemNet NL MemNet NS MemNet ×2 37.68/0.9591 37.71/0.9592 37.78/0.9597 Difference to Highway Network First, we discuss how ×3 33.96/0.9235 34.00/0.9239 34.09/0.9248 the memory block accomplishes the gating mechanism and ×4 31.60/0.8878 31.65/0.8880 31.74/0.8893 present the difference between MemNet and Highway Net- Table 1. Ablation study on effects of long-term and short-term con- work – a very deep CNN model using a gate unit to regulate nections. Average PSNR/SSIMs for the scale factor ×2, ×3 and ×4 on dataset Set5. Red indicates the best performance. information flow [32]. To avoid information attenuation in very deep plain net- works, inspired by LSTM, Highway Network introduced the bypassing layers along with gate units, i.e., b = A(a) · T (a) + a · (1 − T (a)), (13) where a and b are the input and output, A and T are two (a) Image denoising non-linear transform functions. T is the transform gate to control how much information produced by A should be stored to the output; and 1 − T is the carry gate to decide how much of the input should be reserved to the output. In MemNet, the short-term and long-term memories are concatenated. The 1 × 1 convolutional layer adaptively learns the weights for different memories. Compared to (b) Super-resolution Highway Network that learns specific weight for each pixel, our gate unit learns specific weight for each feature map, which has two advantages: (1) to reduce model parameters and complexity; (2) to be less prone to overfitting. Difference to DRCN There are three main differences be- tween MemNet and DRCN [21]. The first is the design of the basic module in network. In DRCN, the basic module is a convolutional layer; while in MemNet, the basic mod- (c) JPEG deblocking ule is a memory block to achieve persistent memory. The Figure 5. The norm of filter weights vm l vs. feature map index l. second is in DRCN, the weights of the basic modules (i.e., For the curve of the mth block, the left (m × 64) elements denote the convolutional layers) are shared; while in MemNet, the long-term memories and the rest (Lm − m × 64) elements the weights of the memory blocks are different. The third denote the short-term memories. The bar diagrams illustrate the is there are no dense connections among the basic mod- average norm of long-term memories, short-term memories from ules in DRCN, which results in a chain structure; while in the first R − 1 recursions and from the last recursion, respectively. MemNet, there are long-term dense connections among the E.g., each yellow bar is the average norm of the short-term mem- memory blocks leading to the multi-path structure, which ories from the last recursion in the recursive unit (i.e., the last 64 not only helps information flow across the network, but elements in each curve). also encourages gradient backpropagation during training. Benefited from the good information flow ability, MemNet 5. Experiments could be easily trained without the multi-supervision strat- 5.1. Implementation Details egy, which is imperative for training DRCN [21]. Datasets For image denoising, we follow [27] to use Difference to DenseNet Another related work to MemNet 300 images from the Berkeley Segmentation Dataset is DenseNet [14], which also builds upon a densely con- (BSD) [28], known as the train and val sets, to generate nected principle. In general, DenseNet deals with object image patches as the training set. Two popular benchmarks, recognition, while MemNet is proposed for image restora- a dataset with 14 common images and the BSD test set with tion. In addition, DenseNet adopts the densely connected 200 images, are used for evaluation. We generate the input structure in a local way (i.e., inside a dense block), while noisy patch by adding Gaussian noise with one of the three MemNet adopts the densely connected structure in a global noise levels (σ = 30, 50 and 70) to the clean patch. way (i.e., across the memory blocks). In Secs. 3.4 and 5.2, For SISR, by following the experimental setting in [20], we analyze and demonstrate the long-term dense connec- we use a training set of 291 images where 91 images are tions in MemNet indeed play an important role in image from Yang et al. [38] and other 200 are from BSD train set. restoration tasks. For testing, four benchmark datasets, Set5 [1], Set14 [39],

6 . (sec.) Dataset VDSR [20] DRCN [21] RED [27] MemNet MemNet_M6 MemNet_M5 Depth 20 20 30 80 Filters 64 256 128 64 MemNet_M4 Parameters 665K 1, 774K 4, 131K 677K MemNet_M3 DRCN Traing images 291 91 300 91 91 291 VDSR Multi-supervision No Yes No No Yes Yes PSNR 33.66 33.82 33.82 33.92 33.98 34.09 Table 2. SISR comparisons with start-of-the-art networks for scale factor ×3 on Set5. Red indicates the fewest number or best performance. Figure 6. PSNR, complexity vs. speed. BSD100 [28] and Urban100 [15] are used. Three scale fac- 5.2. Ablation Study tors are evaluated, including ×2, ×3 and ×4. The input LR Tab. 1 presents the ablation study on the effects of long- image is generated by first bicubic downsampling and then term and short-term connections. Compared to MemNet, bicubic upsampling the HR image with a certain scale. MemNet NL removes the long-term connections (green For JPEG deblocking, the same training set for image de- curves in Fig. 3) and MemNet NS removes the short-term noising is used. As in [7], Classic5 and LIVE1 are adopted connections (black curves from the first R − 1 recursions to as the test datasets. Two JPEG quality factors are used, i.e., the gate unit in Fig. 1. Connection from the last recursion 10 and 20, and the JPEG deblocking input is generated by to the gate unit is reserved to avoid a broken interaction compressing the image with a certain quality factor using between recursive unit and gate unit). The three networks the MATLAB JPEG encoder. have the same depth (80) and filter number (64). We see Training Setting Following the method [27], for image that, long-term dense connections are very important since denoising, the grayscale image is used; while for SISR and MemNet significantly outperforms MemNet NL. Further, JPEG deblocking, the luminance component is fed into the MemNet achieves better performance than MemNet NS, model. The input image size can be arbitrary due to the which reveals the short-term connections are also useful for fully convolution architecture. Considering both the train- image restoration but less powerful than the long-term con- ing time and storage complexities, training images are split nections. The reason is that the long-term connections skip into 31 × 31 patches with a stride of 21. The output of much more layers than the short-term ones, which can carry MemNet is the estimated high-quality patch with the same some mid/high frequency signals from very early layers to resolution as the input low-quality patch. We follow [34] latter layers as described in Sec. 3.4. to do data augmentation. For each task, we train a single model for all different levels of corruption. E.g., for image 5.3. Gate Unit Analysis denoising, noise augmentation is used. Images with differ- We now illustrate how our gate unit affects different ent noise levels are all included in the training set. Similarly, kinds of memories. Inspired by [14], we adopt a weight for super-resolution and JPEG deblocking, scale and quality norm as an approximate for the dependency of the current augmentation are used, respectively. layer on its preceding layers, which is calculated by the cor- We use Caffe [19] to implement two 80-layer MemNet responding weights from all filters w.r.t. each feature map: networks, the basic and the multi-supervised versions. In l 64 gate 2 vm = i=1 (Wm (1, 1, l, i)) , l = 1, 2, ..., Lm , where both architectures, 6 memory blocks, each contains 6 recur- Lm is the number of the input feature maps for the m-th sions, are constructed (i.e., M6R6). Specifically, in multi- gate unit, l denotes the feature map index, Wm gate stores the supervised MemNet, 6 predictions are generated and used weights with the size of 1 × 1 × Lm × 64, and vm l is the to compute the final output. α balances different regulariza- weight norm of the l-th feature map for the m-th gate unit. tions, and is empirically set as α = 1/(M + 1). Basically, the larger the norm is, the stronger dependency The objective functions in Eqn. 4 and Eqn. 12 are opti- it has on this particular feature map. For better visualiza- mized via the mini-batch stochastic gradient descent (SGD) tion, we normalize the norms to the range of 0 to 1. Fig. 5 with backpropagation [24]. We set the mini-batch size of presents the norm of the filter weights {vm l 6 }m=1 vs. fea- SGD to 64, momentum parameter to 0.9, and weight decay ture map index l. We have three observations: (1) Different to 10−4 . All convolutional layer has 64 filters. Except the tasks have different norm distributions. (2) The average and 1 × 1 convolutional layers in the gate units, the kernel size variance of the weight norms become smaller as the mem- of other convolutional layers is 3 × 3. We use the method ory block number increases. (3) In general, the short-term in [11] for weight initialization. The initial learning rate is memories from the last recursion in recursive unit (the last set to 0.1 and then divided 10 every 20 epochs. Training a 64 elements in each curve) contribute most than the other 80-layer basic MemNet by 91 images [38] for SISR roughly two memories, and the long-term memories seem to play a takes 5 days using 1 Tesla P40 GPU. Due to space constraint more important role in late memory blocks to recover useful and more recent baselines, we focus on SISR in Sec. 5.2, signals than the short-term memories from the first R − 1 5.4 and 5.6, while all three tasks in Sec. 5.3 and 5.5. recursions.

7 . Dataset Noise BM3D [5] EPLL [41] PCLR [2] PGPD [37] WNNM [9] RED [27] MemNet 30 28.49/0.8204 28.35/0.8200 28.68/0.8263 28.55/0.8199 28.74/0.8273 29.17/0.8423 29.22/0.8444 14 images 50 26.08/0.7427 25.97/0.7354 26.29/0.7538 26.19/0.7442 26.32/0.7517 26.81/0.7733 26.91/0.7775 70 24.65/0.6882 24.47/0.6712 24.79/0.6997 24.71/0.6913 24.80/0.6975 25.31/0.7206 25.43/0.7260 30 27.31/0.7755 27.38/0.7825 27.54/0.7827 27.33/0.7717 27.48/0.7807 27.95/0.8019 28.04/0.8053 BSD200 50 25.06/0.6831 25.17/0.6870 25.30/0.6947 25.18/0.6841 25.26/0.6928 25.75/0.7167 25.86/0.7202 70 23.82/0.6240 23.81/0.6168 23.94/0.6336 23.89/0.6245 23.95/0.6346 24.37/0.6551 24.53/0.6608 Table 3. Benchmark image denoising results. Average PSNR/SSIMs for noise level 30, 50 and 70 on 14 images and BSD200. Red color indicates the best performance and blue color indicates the second best performance. Dataset Scale Bicubic SRCNN [8] VDSR [20] DRCN [21] DnCNN [40] LapSRN [23] DRRN [34] MemNet ×2 33.66/0.9299 36.66/0.9542 37.53/0.9587 37.63/0.9588 37.58/0.9590 37.52/0.959 37.74/0.9591 37.78/0.9597 Set5 ×3 30.39/0.8682 32.75/0.9090 33.66/0.9213 33.82/0.9226 33.75/0.9222 −/− 34.03/0.9244 34.09/0.9248 ×4 28.42/0.8104 30.48/0.8628 31.35/0.8838 31.53/0.8854 31.40/0.8845 31.54/0.885 31.68/0.8888 31.74/0.8893 ×2 30.24/0.8688 32.45/0.9067 33.03/0.9124 33.04/0.9118 33.03/0.9128 33.08/0.913 33.23/0.9136 33.28/0.9142 Set14 ×3 27.55/0.7742 29.30/0.8215 29.77/0.8314 29.76/0.8311 29.81/0.8321 −/− 29.96/0.8349 30.00/0.8350 ×4 26.00/0.7027 27.50/0.7513 28.01/0.7674 28.02/0.7670 28.04/0.7672 28.19/0.772 28.21/0.7721 28.26/0.7723 ×2 29.56/0.8431 31.36/0.8879 31.90/0.8960 31.85/0.8942 31.90/0.8961 31.80/0.895 32.05/0.8973 32.08/0.8978 BSD100 ×3 27.21/0.7385 28.41/0.7863 28.82/0.7976 28.80/0.7963 28.85/0.7981 −/− 28.95/0.8004 28.96/0.8001 ×4 25.96/0.6675 26.90/0.7101 27.29/0.7251 27.23/0.7233 27.29/0.7253 27.32/0.728 27.38/0.7284 27.40/0.7281 ×2 26.88/0.8403 29.50/0.8946 30.76/0.9140 30.75/0.9133 30.74/0.9139 30.41/0.910 31.23/0.9188 31.31/0.9195 Urban100 ×3 24.46/0.7349 26.24/0.7989 27.14/0.8279 27.15/0.8276 27.15/0.8276 −/− 27.53/0.8378 27.56/0.8376 ×4 23.14/0.6577 24.52/0.7221 25.18/0.7524 25.14/0.7510 25.20/0.7521 25.21/0.756 25.44/0.7638 25.50/0.7630 Table 4. Benchmark SISR results. Average PSNR/SSIMs for scale factor ×2, ×3 and ×4 on datasets Set5, Set14, BSD100 and Urban100. Dataset Quality JPEG ARCNN [7] TNRD [3] DnCNN [40] MemNet 10 27.82/0.7595 29.03/0.7929 29.28/0.7992 29.40/0.8026 29.69/0.8107 Classic5 20 30.12/0.8344 31.15/0.8517 31.47/0.8576 31.63/0.8610 31.90/0.8658 10 27.77/0.7730 28.96/0.8076 29.15/0.8111 29.19/0.8123 29.45/0.8193 LIVE1 20 30.07/0.8512 31.29/0.8733 31.46/0.8769 31.59/0.8802 31.83/0.8846 Table 5. Benchmark JPEG deblocking results. Average PSNR/SSIMs for quality factor 10 and 20 on datasets Classic5 and LIVE1. 5.4. Comparision with Non-Persistent CNN Models (sec.) when processing a 288 × 288 image on GPU P40. In this subsection, we compare MemNet with three Results of VDSR [20] and DRCN [21] are cited from their existing non-persistent CNN models, i.e., VDSR [20], papers. RED [27] is skipped here since its high number of DRCN [21] and RED [27], to demonstrate the superior- parameters may reduce the contrast among other methods. ity of our persistent memory structure. VDSR and DRCN We see that our MemNet already achieve comparable re- are two representative networks with the plain structure sult at the 3rd prediction using much fewer parameters, and and RED is representative for skip connections. Tab. 2 significantly outperforms the state of the arts by slightly in- presents the published results of these models along with creasing model complexity. their training details. Since the training details are differ- 5.5. Comparisons with State-of-the-Art Models ent among different work, we choose DRCN as a baseline, We compare multi-supervised 80-layer MemNet with the which achieves good performance using the least training state of the arts in three restoration tasks, respectively. images. But, unlike DRCN that widens its network to in- Image Denoising Tab. 3 presents quantitative results on crease the parameters (filter number: 256 vs. 64), we deepen two benchmarks, with results cited from [27]. For BSD200 our MemNet by stacking more memory blocks (depth: 20 dataset, by following the setting in RED [27], the origi- vs. 80). It can be seen that, using the fewest training images nal image is resized to its half size. As we can see, our (91), filter number (64) and relatively few model parameters MemNet achieves the best performance on all cases. It (667K), our basic MemNet already achieves higher PSNR should be noted that, for each test image, RED rotates and than the prior networks. Keeping the setting unchanged, mirror flips the kernels, and performs inference multiple our multi-supervised MemNet further improves the perfor- times. The outputs are then averaged to obtain the final mance. With more training images (291), our MemNet sig- result. They claimed this strategy can lead to better perfor- nificantly outperforms the state of the arts. mance. However, in our MemNet, we do not perform any Since we aim to address the long-term dependency prob- post-processing. For qualitative comparisons, we use public lem in networks, we intend to make our MemNet very deep. codes of PCLR [2], PGPD [37] and WNNM [9]. The results However, MemNet is also able to balance the model com- are shown in Fig. 7. As we can see, our MemNet handles plexity and accuracy. Fig. 6 presents the PSNR of different Gaussian noise better than the previous state of the arts. intermediate predictions in MemNet (e.g., MemNet M3 de- Super-Resolution Tab. 4 summarizes quantitative results notes the prediction of the 3rd memory block) for scale ×3 on four benchmarks, by citing the results of prior methods. on Set5, in which the colorbar indicates the inference time MemNet outperforms prior methods in almost all cases.

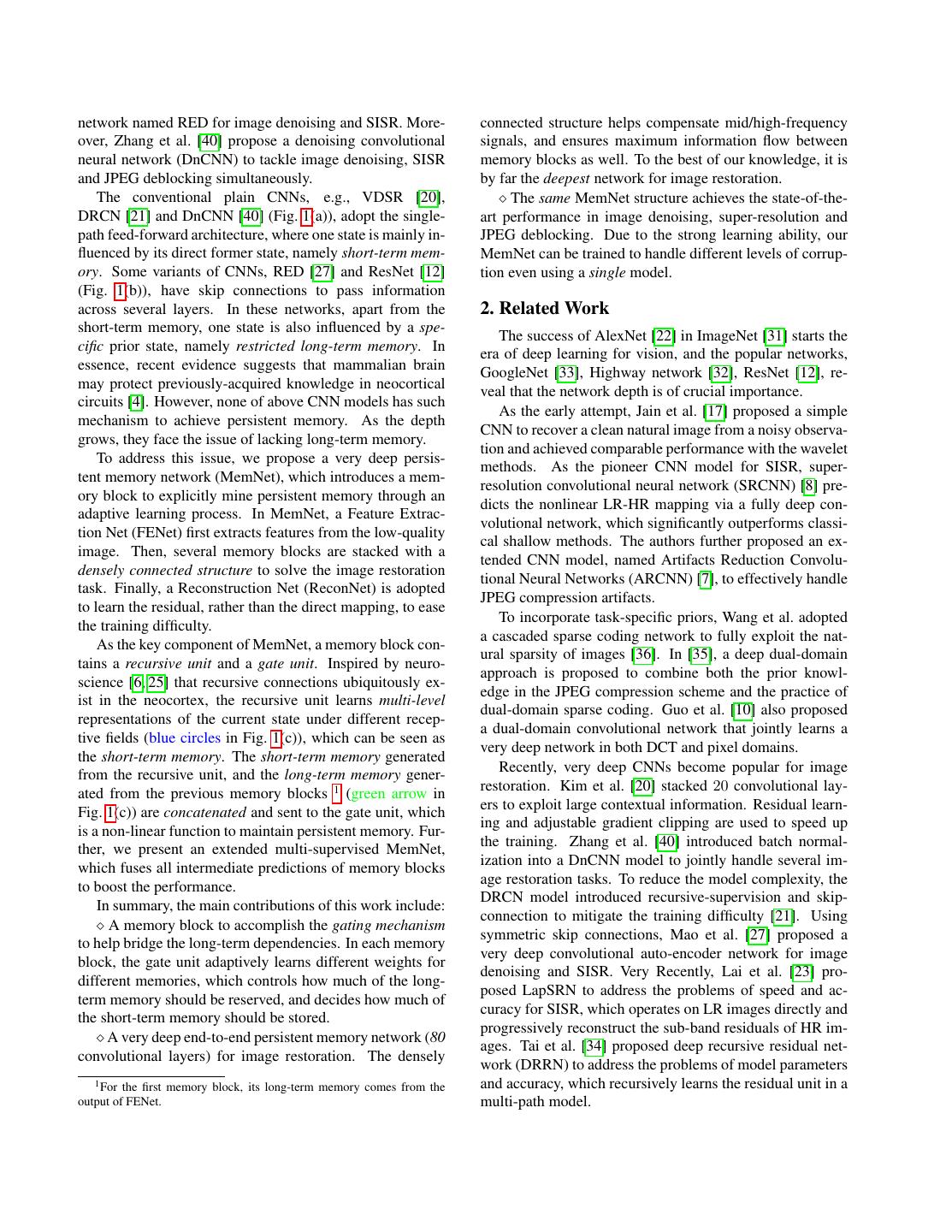

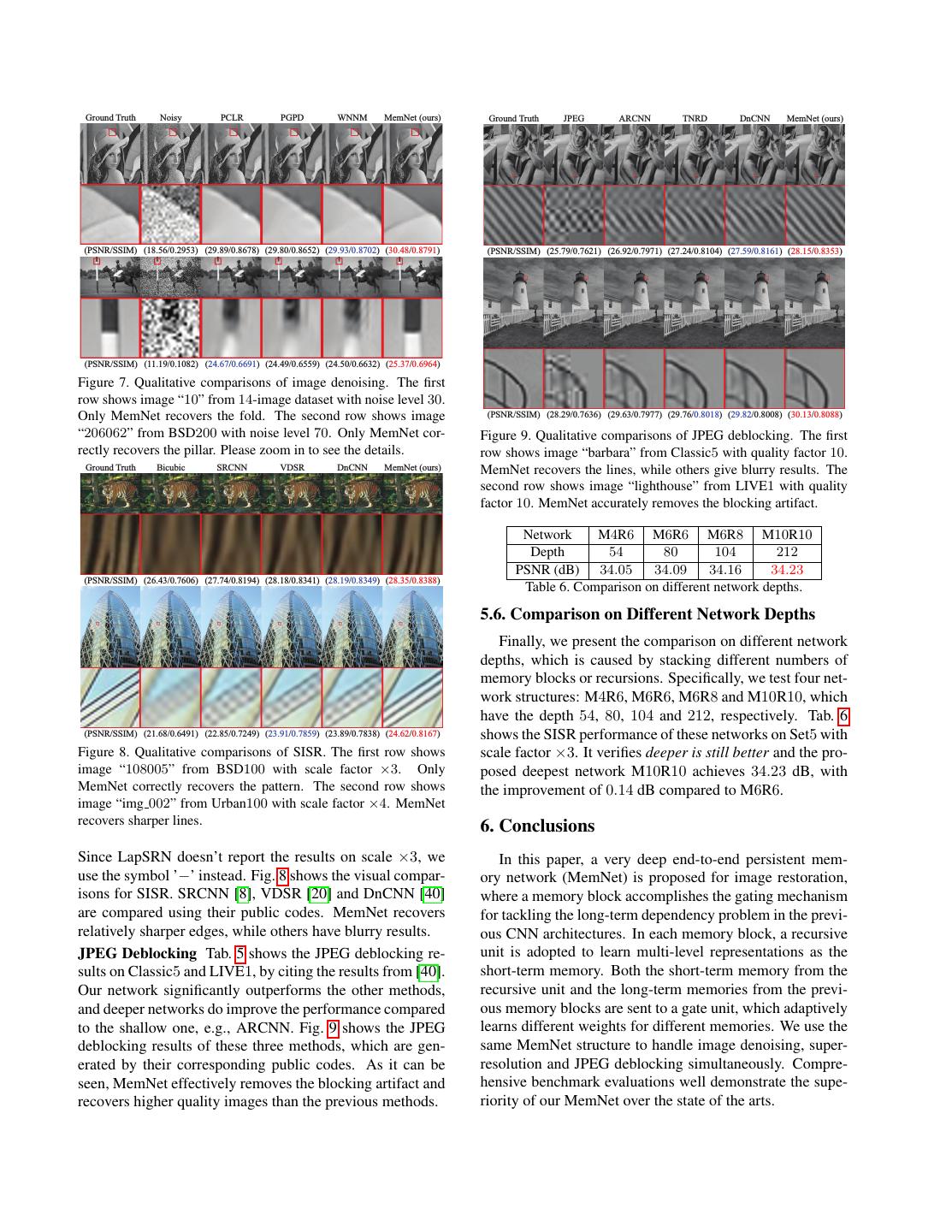

8 . Ground Truth Noisy PCLR PGPD WNNM MemNet (ours) Ground Truth JPEG ARCNN TNRD DnCNN MemNet (ours) (PSNR/SSIM) (18.56/0.2953) (29.89/0.8678) (29.80/0.8652) (29.93/0.8702) (30.48/0.8791) (PSNR/SSIM) (25.79/0.7621) (26.92/0.7971) (27.24/0.8104) (27.59/0.8161) (28.15/0.8353) (PSNR/SSIM) (11.19/0.1082) (24.67/0.6691) (24.49/0.6559) (24.50/0.6632) (25.37/0.6964) Figure 7. Qualitative comparisons of image denoising. The first row shows image “10” from 14-image dataset with noise level 30. Only MemNet recovers the fold. The second row shows image (PSNR/SSIM) (28.29/0.7636) (29.63/0.7977) (29.76/0.8018) (29.82/0.8008) (30.13/0.8088) “206062” from BSD200 with noise level 70. Only MemNet cor- Figure 9. Qualitative comparisons of JPEG deblocking. The first rectly recovers the pillar. Please zoom in to see the details. row shows image “barbara” from Classic5 with quality factor 10. Ground Truth Bicubic SRCNN VDSR DnCNN MemNet (ours) MemNet recovers the lines, while others give blurry results. The second row shows image “lighthouse” from LIVE1 with quality factor 10. MemNet accurately removes the blocking artifact. Network M4R6 M6R6 M6R8 M10R10 Depth 54 80 104 212 PSNR (dB) 34.05 34.09 34.16 34.23 (PSNR/SSIM) (26.43/0.7606) (27.74/0.8194) (28.18/0.8341) (28.19/0.8349) (28.35/0.8388) Table 6. Comparison on different network depths. 5.6. Comparison on Different Network Depths Finally, we present the comparison on different network depths, which is caused by stacking different numbers of memory blocks or recursions. Specifically, we test four net- work structures: M4R6, M6R6, M6R8 and M10R10, which have the depth 54, 80, 104 and 212, respectively. Tab. 6 (PSNR/SSIM) (21.68/0.6491) (22.85/0.7249) (23.91/0.7859) (23.89/0.7838) (24.62/0.8167) shows the SISR performance of these networks on Set5 with Figure 8. Qualitative comparisons of SISR. The first row shows scale factor ×3. It verifies deeper is still better and the pro- image “108005” from BSD100 with scale factor ×3. Only posed deepest network M10R10 achieves 34.23 dB, with MemNet correctly recovers the pattern. The second row shows the improvement of 0.14 dB compared to M6R6. image “img 002” from Urban100 with scale factor ×4. MemNet recovers sharper lines. 6. Conclusions Since LapSRN doesn’t report the results on scale ×3, we In this paper, a very deep end-to-end persistent mem- use the symbol ’−’ instead. Fig. 8 shows the visual compar- ory network (MemNet) is proposed for image restoration, isons for SISR. SRCNN [8], VDSR [20] and DnCNN [40] where a memory block accomplishes the gating mechanism are compared using their public codes. MemNet recovers for tackling the long-term dependency problem in the previ- relatively sharper edges, while others have blurry results. ous CNN architectures. In each memory block, a recursive JPEG Deblocking Tab. 5 shows the JPEG deblocking re- unit is adopted to learn multi-level representations as the sults on Classic5 and LIVE1, by citing the results from [40]. short-term memory. Both the short-term memory from the Our network significantly outperforms the other methods, recursive unit and the long-term memories from the previ- and deeper networks do improve the performance compared ous memory blocks are sent to a gate unit, which adaptively to the shallow one, e.g., ARCNN. Fig. 9 shows the JPEG learns different weights for different memories. We use the deblocking results of these three methods, which are gen- same MemNet structure to handle image denoising, super- erated by their corresponding public codes. As it can be resolution and JPEG deblocking simultaneously. Compre- seen, MemNet effectively removes the blocking artifact and hensive benchmark evaluations well demonstrate the supe- recovers higher quality images than the previous methods. riority of our MemNet over the state of the arts.

9 .References [22] A. Krizhevsky, I. Sutskever, and G. E. Hinton. Imagenet classification with deep convolutional neural networks. In [1] C. M. Bevilacqua, A. Roumy, and M. Morel. Low- NIPS, 2012. complexity single-image super-resolution based on nonneg- [23] W. Lai, J. Huang, N. Ahuja, and M. Yang. Deep laplacian ative neighbor embedding. In BMVC, 2012. pyramid networks for fast and accurate super-resolution. In [2] F. Chen, L. Zhang, and H. Yu. External patch prior guided CVPR, 2017. internal clustering for image denoising. In ICCV, 2015. [24] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Gradient- [3] Y. Chen and T. Pock. Trainable nonlinear reaction diffusion: based learning applied to document recognition. In Proceed- A flexible framework for fast and effective image restoration. ings of the IEEE, 1998. IEEE Trans. on PAMI, 2016. [25] M. Liang and X. Hu. Recurrent convolutional neural network [4] J. Cichon and W. Gan. Branch-specific dendritic ca2+ spikes for object recognition. In CVPR, 2015. cause persistent synaptic plasticity. Nature, 520(7546):180– [26] X. Liu, X. Wu, J. Zhou, and D. Zhao. Data-driven 185, 2015. sparsity-based restoration of jpeg-compressed images in dual [5] K. Dabov, A. Foi, V. Katkovnik, and K. O. Egiazarian. Im- transform-pixel domain. In CVPR, 2015. age denoising by sparse 3-D transform-domain collaborative [27] X. Mao, C. Shen, and Y. Yang. Image restoration using very filtering. IEEE Trans. on IP, 16(8):2080–2095, 2007. deep convolutional encoder-decoder networks with symmet- [6] P. Dayan and L. F. Abbott. Theoretical neuroscience. Cam- ric skip connections. In NIPS, 2016. bridge, MA: MIT Press, 2001. [28] D. Martin, C. Fowlkes, D. Tal, and J. Malik. A database [7] C. Dong, Y. Deng, C. C. Loy, and X. Tang. Compression ar- of human segmented natural images and its application to tifacts reduction by a deep convolutional network. In ICCV, evaluating segmentation algorithms and measuring ecologi- 2015. cal statistics. In ICCV, 2001. [8] C. Dong, C. Loy, K. He, and X. Tang. Image super-resolution [29] P. Milanfar. A tour of modern image filtering: new insights using deep convolutional networks. IEEE Trans. on PAMI, and methods, both practical and theoretical. IEEE Signal 38(2):295–307, 2016. Processing Magazine, 30(1):106–128, 2013. [9] S. Gu, L. Zhang, W. Zuo, and X. Feng. Weighted nuclear [30] V. Nair and G. Hinton. Rectified linear units improve re- norm minimization with application to image denoising. In stricted boltzmann machines. In ICML, 2010. CVPR, 2014. [31] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, [10] J. Guo and H. Chao. Building dual-domain representations S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, for compression artifacts reduction. In ECCV, 2016. and et al. Imagenet large scale visual recognition challenge. [11] K. He, X. Zhang, S. Ren, and J. Sun. Delving deep into IJCV, 115(3):211–252, 2015. rectifiers: Surpassing human-level performance on imagenet [32] R. K. Srivastava, K. Greff, and J. Schmidhuber. Highway classification. In ICCV, 2015. networks. In NIPS, 2015. [12] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning [33] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, and S. Reed. Going for image recognition. In CVPR, 2016. deeper with convolutions. In CVPR, 2015. [13] K. He, X. Zhang, S. Ren, and J. Sun. Identity mappings in [34] Y. Tai, J. Yang, and X. Liu. Image super-resolution via deep deep residual networks. In ECCV, 2016. recursive residual network. In CVPR, 2017. [14] G. Huang, Z. Liu, and K. Q. Weinberger. Densely connected [35] Z. Wang, D. Liu, S. Chang, Q. Ling, Y. Yang, and T. S. convolutional networks. In CVPR, 2017. Huang. D3 : Deep dual-domain based fast restoration of jpeg- compressed images. In CVPR, 2016. [15] J.-B. Huang, A. Singh, and N. Ahuja. Single image super- resolution from transformed self-exemplars. In CVPR, 2015. [36] Z. Wang, D. Liu, J. Yang, W. Han, and T. S. Huang. Deep networks for image super-resolution with sparse prior. In [16] S. Ioffe and C. Szegedy. Batch normalization: Accelerating ICCV, 2015. deep network training by reducing internal covariate shift. In ICML, 2015. [37] J. Xu, L. Zhang, W. Zuo, D. Zhang, and X. Feng. Patch group based nonlocal self-similarity prior learning for image [17] V. Jain and H. S. Seung. Natural image denoising with con- denoising. In ICCV, 2015. volutional networks. In NIPS, 2008. [38] J. Yang, J. Wright, T. S. Huang, and Y. Ma. Image super- [18] J. Jancsary, S. Nowozin, and C. Rother. Loss-specific train- resolution via sparse representation. IEEE Trans. on IP, ing of non-parametric image restoration models: A new state 19(11):2861–2873, 2010. of the art. In ECCV, 2012. [39] R. Zeyde, M. Elad, and M. Protter. On single image scale- [19] Y. Jia, E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Gir- up using sparse-representations. Curves and Surfaces, pages shick, S. Guadarrama, and T. Darrell. Caffe: Convolutional 711–730, 2012. architecture for fast feature embedding. In ACM MM, 2014. [40] K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang. Be- [20] J. Kim, J. K. Lee, and K. M. Lee. Accurate image super- yond a gaussian denoiser: Residual learning of deep CNN resolution using very deep convolutional networks. In CVPR, for image denoising. IEEE Trans. on IP, 2017. 2016. [41] D. Zoran and Y. Weiss. From learning models of natural [21] J. Kim, J. K. Lee, and K. M. Lee. Deeply-recursive convolu- image patches to whole image restoration. In ICCV, 2011. tional network for image super-resolution. In CVPR, 2016.