- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

5-Parallel Programming Patterns Overview and Map Pattern

展开查看详情

1 . Parallel Programming Patterns Overview and Map Pattern Parallel Computing CIS 410/510 Department of Computer and Information Science Lecture 5 – Parallel Programming Patterns - Map

2 . Outline q Parallel programming models q Dependencies q Structured programming patterns overview ❍ Serial / parallel control flow patterns ❍ Serial / parallel data management patterns q Map pattern ❍ Optimizations ◆ sequences of Maps ◆ code Fusion ◆ cache Fusion ❍ Related Patterns ❍ Example: Scaled Vector Addition (SAXPY) Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 2

3 . Parallel Models 101 q Sequential models Memory (RAM) ❍ von Neumann (RAM) model q Parallel model processor ❍ A parallel computer is simple a collection of processors interconnected in some manner to coordinate activities and exchange data ❍ Models that can be used as general frameworks for describing and analyzing parallel algorithms ◆ Simplicity: description, analysis, architecture independence ◆ Implementability: able to be realized, reflect performance q Three common parallel models ❍ Directed acyclic graphs, shared-memory, network Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 3

4 . Directed Acyclic Graphs (DAG) q Capturesdata flow parallelism q Nodes represent operations to be performed ❍ Inputsare nodes with no incoming arcs ❍ Output are nodes with no outgoing arcs ❍ Think of nodes as tasks q Arcs are paths for flow of data results q DAG represents the operations of the algorithm and implies precedent constraints on their order for (i=1; i<100; i++) a[0] a[1] … a[99] a[i] = a[i-1] + 100; Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 4

5 . Shared Memory Model q Parallel extension of RAM model (PRAM) ❍ Memory size is infinite P1 ❍ Number of processors in unbounded P2 ❍ Processors communicate via the memory P3 Shared . Memory ❍ Every processor accesses any memory . . location in 1 cycle PN ❍ Synchronous ◆ All processors execute same algorithm synchronously – READ phase – COMPUTE phase – WRITE phase ◆ Some subset of the processors can stay idle ❍ Asynchronous Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 5

6 . Network Model q G = (N,E) P11 P12 P1N ❍ N are processing nodes P21 P22 P2N ❍ E are bidirectional communication links P31 P32 P3N . . . q Each processor has its own memory . . . . . . q No shared memory is available PN1 PN2 PNN q Network operation may be synchronous or asynchronous q Requires communication primitives ❍ Send (X, i) ❍ Receive (Y, j) q Captures message passing model for algorithm design Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 6

7 . Parallelism q Abilityto execute different parts of a computation concurrently on different machines q Why do you want parallelism? ❍ Shorter running time or handling more work q What is being parallelized? ❍ Task: instruction, statement, procedure, … ❍ Data: data flow, size, replication ❍ Parallelism granularity ◆ Coarse-grain versus fine-grainded q Thinking about parallelism q Evaluation Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 7

8 . Why is parallel programming important? q Parallel programming has matured ❍ Standard programming models ❍ Common machine architectures ❍ Programmer can focus on computation and use suitable programming model for implementation q Increase portability between models and architectures q Reasonable hope of portability across platforms q Problem ❍ Performance optimization is still platform-dependent ❍ Performance portability is a problem ❍ Parallel programming methods are still evolving Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 8

9 . Parallel Algorithm q Recipe to solve a problem “in parallel” on multiple processing elements q Standard steps for constructing a parallel algorithm ❍ Identify work that can be performed concurrently ❍ Partition the concurrent work on separate processors ❍ Properly manage input, output, and intermediate data ❍ Coordinate data accesses and work to satisfy dependencies q Which are hard to do? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 9

10 . Parallelism Views q Where can we find parallelism? q Program (task) view ❍ Statement level ◆ Between program statements ◆ Which statements can be executed at the same time? ❍ Block level / Loop level / Routine level / Process level ◆ Larger-grained program statements q Data view ❍ How is data operated on? ❍ Where does data reside? q Resource view Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 10

11 . Parallelism, Correctness, and Dependence q Parallel execution, from any point of view, will be constrained by the sequence of operations needed to be performed for a correct result q Parallel execution must address control, data, and system dependences q A dependency arises when one operation depends on an earlier operation to complete and produce a result before this later operation can be performed q We extend this notion of dependency to resources since some operations may depend on certain resources ❍ For example, due to where data is located Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 11

12 . Executing Two Statements in Parallel q Want to execute two statements in parallel q On one processor: Statement 1; Statement 2; q On two processors: Processor 1: Processor 2: Statement 1; Statement 2; q Fundamental (concurrent) execution assumption ❍ Processors execute independent of each other ❍ No assumptions made about speed of processor execution Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 12

13 . Sequential Consistency in Parallel Execution q Case 1: Processor 1: Processor 2: 0me statement 1; statement 2; q Case 2: Processor 1: Processor 2: 0me statement 2; statement 1; q Sequential consistency ❍ Statements execution does not interfere with each other ❍ Computation results are the same (independent of order) Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 13

14 . Independent versus Dependent q In other words the execution of statement1; statement2; must be equivalent to statement2; statement1; q Their order of execution must not matter! q If true, the statements are independent of each other q Two statements are dependent when the order of their execution affects the computation outcome Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 14

15 . Examples q Example 1 r Statements are independent S1: a=1; S2: b=1; q Example 2 r Dependent (true (flow) dependence) S1: a=1; ¦ Second is dependent on first S2: b=a; ¦ Can you remove dependency? q Example 3 r Dependent (output dependence) S1: a=f(x); ¦ Second is dependent on first S2: a=b; ¦ Can you remove dependency? How? q Example 4 r Dependent (anti-dependence) S1: a=b; ¦ First is dependent on second S2: b=1; ¦ Can you remove dependency? How? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 15

16 . True Dependence and Anti-Dependence q Given statements S1 and S2, S1; S2; q S2 has a true (flow) dependence on S1 X = δ ... if and only if = X S2 reads a value written by S1 q S2 has a anti-dependence on S1 = X δ-‐1 ... if and only if X = S2 writes a value read by S1 Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 16

17 . Output Dependence q Given statements S1 and S2, S1; S2; q S2 has an output dependence on S1 X = if and only if δ0 ... S2 writes a variable written by S1 X = q Anti- and output dependences are “name” dependencies ❍ Are they “true” dependences? q How can you get rid of output dependences? ❍ Are there cases where you can not? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 17

18 . Statement Dependency Graphs q Canuse graphs to show dependence relationships q Example S1: a=1; S1 flow S2: b=a; output S2 an0 S3: a=b+1; S3 S4: c=a; S4 q S2 δ S3 : S3 is flow-dependent on S2 q S1 δ0 S3 : S3 is output-dependent on S1 q S2 δ-1 S3 : S3 is anti-dependent on S2 Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 18

19 . When can two statements execute in parallel? q Statements S1 and S2 can execute in parallel if and only if there are no dependences between S1 and S2 ❍ True dependences ❍ Anti-dependences ❍ Output dependences q Some dependences can be remove by modifying the program ❍ Rearranging statements ❍ Eliminating statements Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 19

20 . How do you compute dependence? q Data dependence relations can be found by comparing the IN and OUT sets of each node q The IN and OUT sets of a statement S are defined as: ❍ IN(S) : set of memory locations (variables) that may be used in S ❍ OUT(S) : set of memory locations (variables) that may be modified by S q Note that these sets include all memory locations that may be fetched or modified q As such, the sets can be conservatively large Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 20

21 . IN / OUT Sets and Computing Dependence q Assuming that there is a path from S1 to S2 , the following shows how to intersect the IN and OUT sets to test for data dependence out (S1 )∩ in(S 2 ) ≠ ∅ S1 δ S 2 flow dependence in( S1 ) ∩ out ( S 2 ) ≠ ∅ S1 δ −1 S 2 anti - dependence out ( S1 ) ∩ out ( S 2 ) ≠ ∅ S1 δ 0 S 2 output dependence Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 21

22 . Loop-Level Parallelism q Significant parallelism can be identified within loops for (i=0; i<100; i++) for (i=0; i<100; i++) { S1: a[i] = i; S1: a[i] = i; S2: b[i] = 2*i; } q Dependencies? What about i, the loop index? q DOALL loop (a.k.a. foreach loop) ❍ All iterations are independent of each other ❍ All statements be executed in parallel at the same time ◆ Is this really true? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 22

23 . Iteration Space q Unrollloop into separate statements / iterations q Show dependences between iterations for (i=0; i<100; i++) { for (i=0; i<100; i++) S1: a[i] = i; S1: a[i] = i; S2: b[i] = 2*i; } S10 S11 … S199 S10 S11 … S199 S20 S21 S299 Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 23

24 . Multi-Loop Parallelism q Significant parallelism can be identified between loops … for (i=0; i<100; i++) a[i] = i; a[0] a[1] a[99] … for (i=0; i<100; i++) b[i] = i; b[0] b[1] b[99] q Dependencies? q How much parallelism is available? q Given 4 processors, how much parallelism is possible? q What parallelism is achievable with 50 processors? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 24

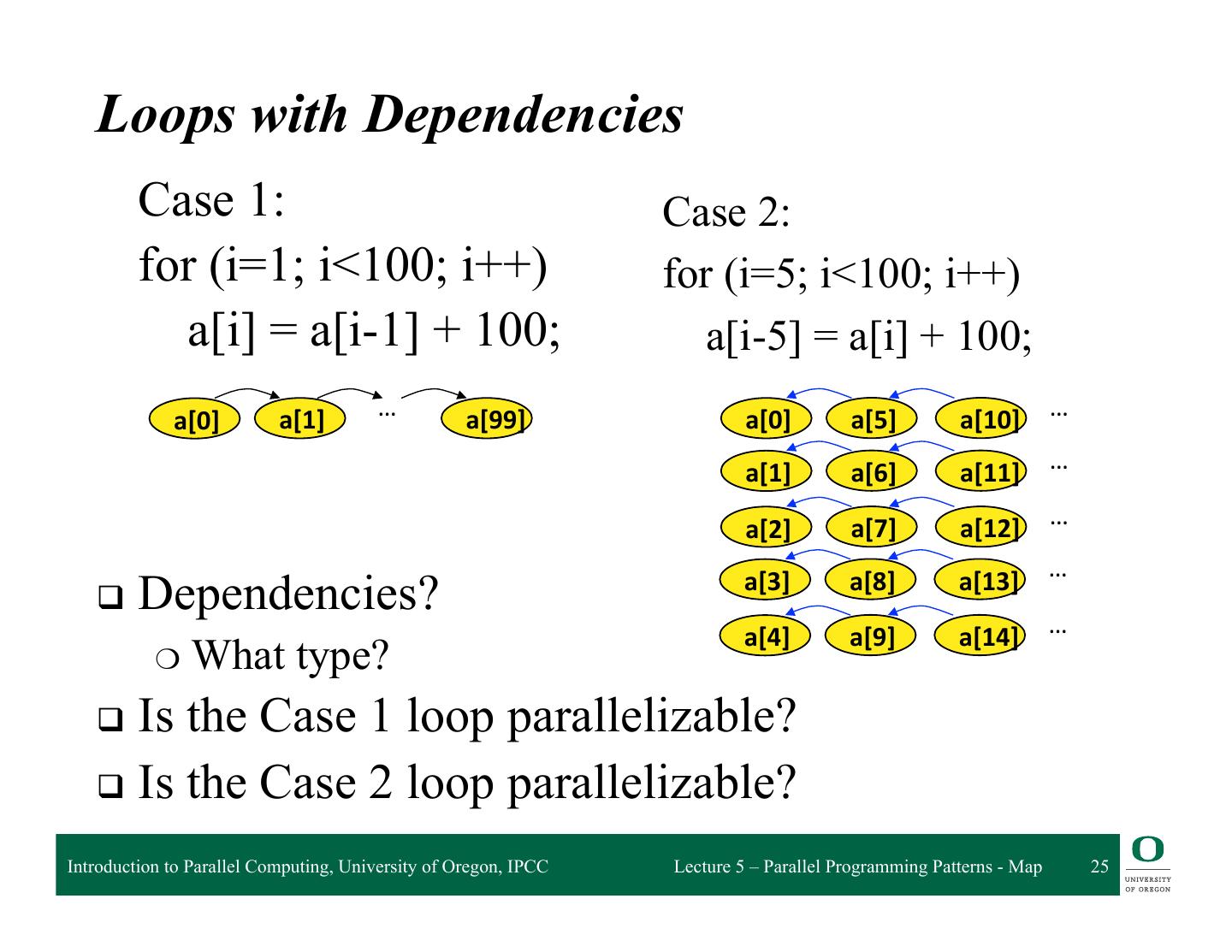

25 . Loops with Dependencies Case 1: Case 2: for (i=1; i<100; i++) for (i=5; i<100; i++) a[i] = a[i-1] + 100; a[i-5] = a[i] + 100; a[0] a[1] … a[99] a[0] a[5] a[10] … a[1] a[6] a[11] … a[2] a[7] a[12] … a[3] a[8] a[13] … q Dependencies? a[4] a[9] a[14] … ❍ What type? q Is the Case 1 loop parallelizable? q Is the Case 2 loop parallelizable? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 25

26 . Another Loop Example for (i=1; i<100; i++) a[i] = f(a[i-1]); q Dependencies? ❍ What type? q Loop iterations are not parallelizable ❍ Why not? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 26

27 . Loop Dependencies q A loop-carried dependence is a dependence that is present only if the statements are part of the execution of a loop (i.e., between two statements instances in two different iterations of a loop) q Otherwise, it is loop-independent, including between two statements instances in the same loop iteration q Loop-carried dependences can prevent loop iteration parallelization q The dependence is lexically forward if the source comes before the target or lexically backward otherwise ❍ Unroll the loop to see Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 27

28 . Loop Dependence Example for (i=0; i<100; i++) a[i+10] = f(a[i]); q Dependencies? ❍ Between a[10], a[20], … ❍ Between a[11], a[21], … q Some parallel execution is possible ❍ How much? Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 28

29 . Dependences Between Iterations for (i=1; i<100; i++) { S1: a[i] = …; S1 … S2 S2: … = a[i-1]; i } 1 2 3 4 5 6 q Dependencies? ❍ Between a[i] and a[i-1] q Is parallelism possible? ❍ Statements can be executed in “pipeline” manner Introduction to Parallel Computing, University of Oregon, IPCC Lecture 5 – Parallel Programming Patterns - Map 29