A comprehensive performance benchmark of several well-known CNI plugins


1 .Benchmarking Various CNI Plugins Giri Kuncoro & Vijay Dhama from

2 .Agenda
● Overview of Various CNI Plugins
● Experiments
  ○ Goals
  ○ Environment
  ○ Results
● Takeaways

3 .Overview of Various CNI Plugins

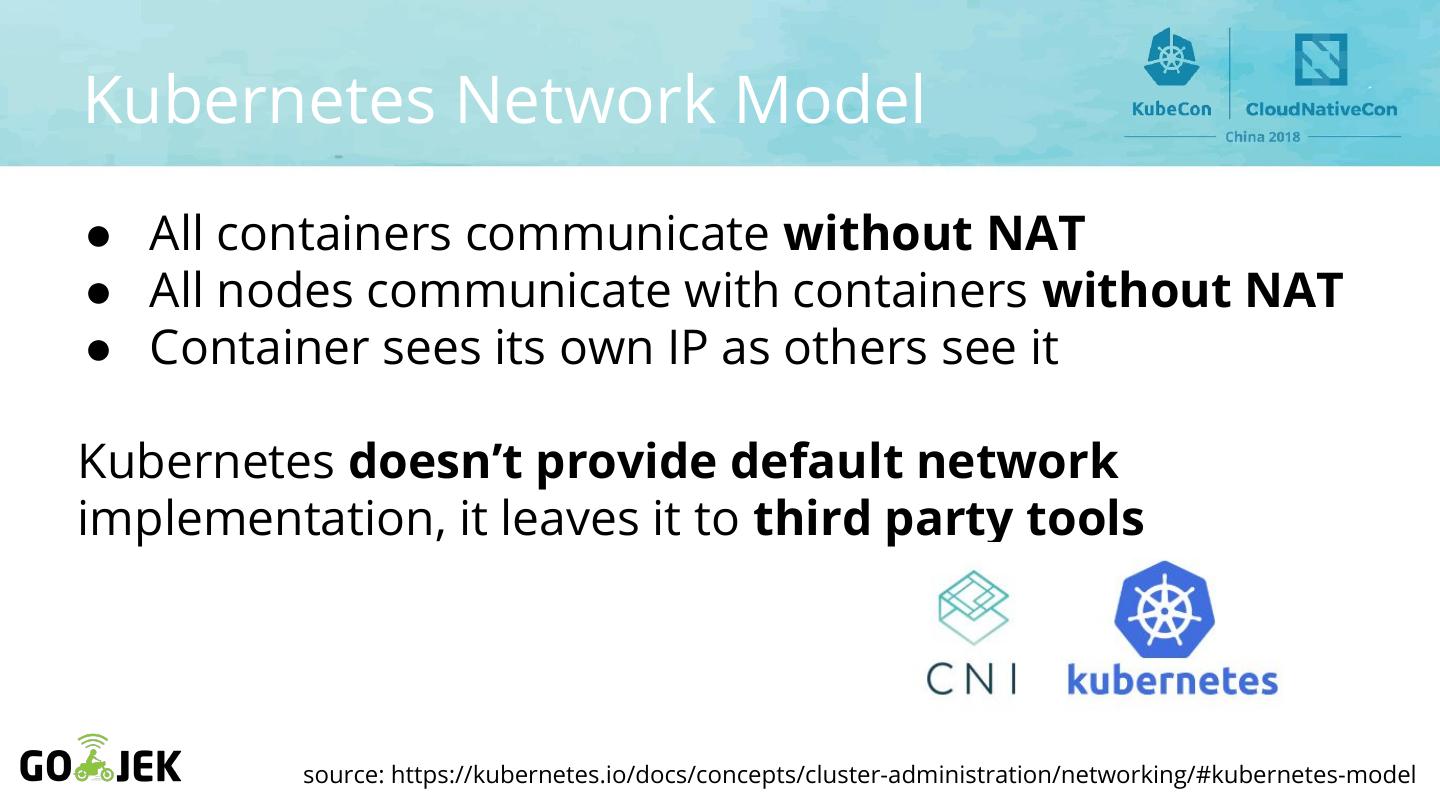

4 .Kubernetes Network Model
● All containers communicate without NAT
● All nodes communicate with containers without NAT
● A container sees its own IP as others see it
Kubernetes does not provide a default network implementation; it leaves that to third-party tools.
source: https://kubernetes.io/docs/concepts/cluster-administration/networking/#kubernetes-model
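As a rough illustration of the third rule (a container sees its own IP as others see it), the Python sketch below compares the address a client socket reports for itself with the address the server observes for that client. It is a minimal sketch that runs over loopback. The function names are hypothetical and are not part of Kubernetes or any CNI plugin.

```python
import socket
import threading


def observed_peer(listener, result):
    """Accept one connection and record the client address the server sees."""
    conn, peer = listener.accept()
    with conn:
        result.append(peer)


def sees_own_address(host="127.0.0.1"):
    """Return True if the client's own (ip, port) equals what the server observes.

    Assumption: run here over loopback only. Between two pods, a mismatch
    would suggest address translation somewhere on the path.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, 0))  # port 0 lets the OS pick a free port
    listener.listen(1)
    result = []
    server = threading.Thread(target=observed_peer, args=(listener, result))
    server.start()
    with socket.create_connection(listener.getsockname()) as client:
        local = client.getsockname()  # address as the client sees itself
    server.join()
    listener.close()
    return local == result[0]


if __name__ == "__main__":
    print("client address matches server view:", sees_own_address())
```

On loopback the two addresses match by construction, so this only shows the shape of the check, not a property of any particular plugin.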

5 .What does CNI do?
● Connectivity
● Reachability

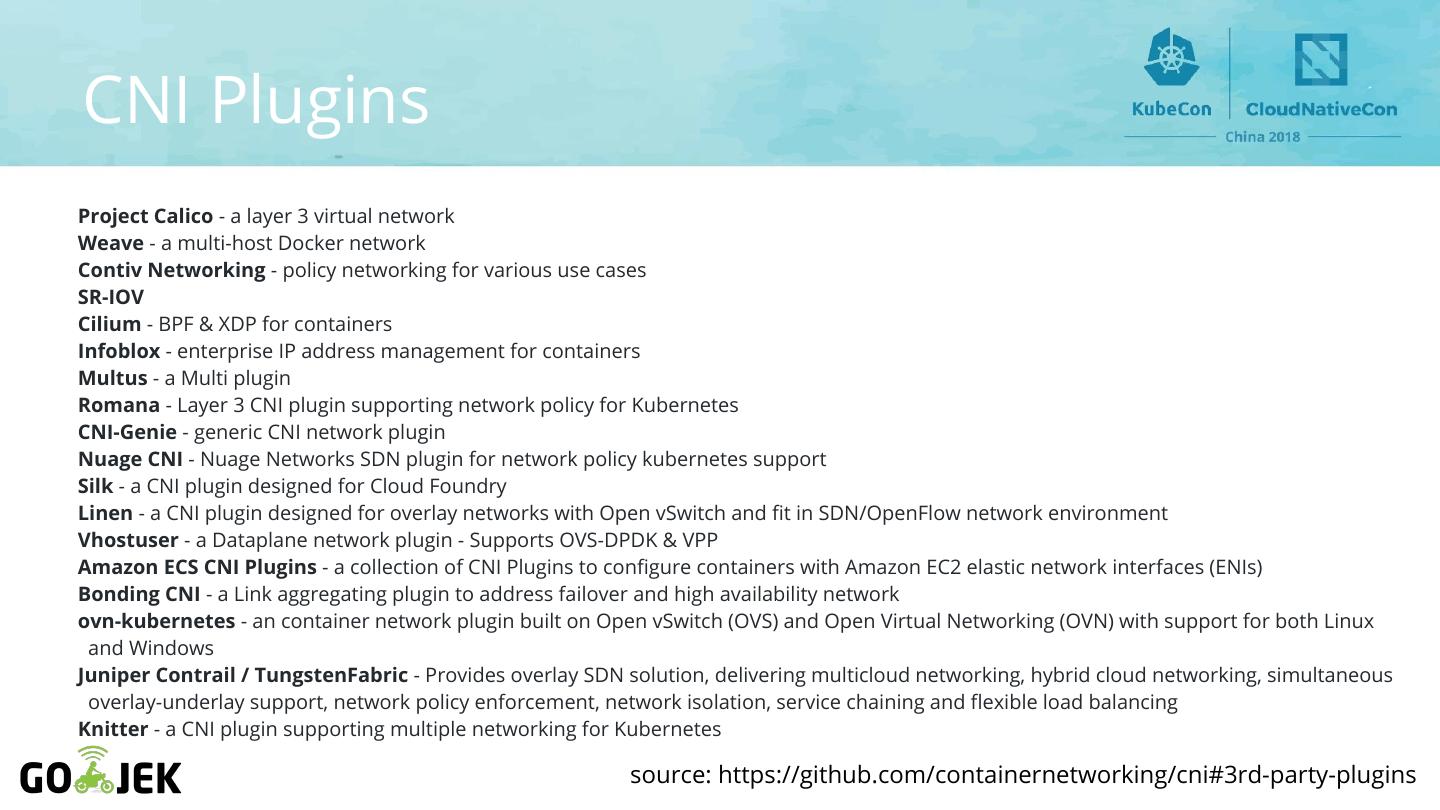

6 .CNI Plugins
● Project Calico - a layer 3 virtual network
● Weave - a multi-host Docker network
● Contiv Networking - policy networking for various use cases
● SR-IOV
● Cilium - BPF & XDP for containers
● Infoblox - enterprise IP address management for containers
● Multus - a multi-plugin
● Romana - layer 3 CNI plugin supporting network policy for Kubernetes
● CNI-Genie - generic CNI network plugin
● Nuage CNI - Nuage Networks SDN plugin with Kubernetes network policy support
● Silk - a CNI plugin designed for Cloud Foundry
● Linen - a CNI plugin designed for overlay networks with Open vSwitch, fitting SDN/OpenFlow network environments
● Vhostuser - a dataplane network plugin supporting OVS-DPDK & VPP
● Amazon ECS CNI Plugins - a collection of CNI plugins to configure containers with Amazon EC2 elastic network interfaces (ENIs)
● Bonding CNI - a link-aggregating plugin for failover and high-availability networking
● ovn-kubernetes - a container network plugin built on Open vSwitch (OVS) and Open Virtual Networking (OVN), with support for both Linux and Windows
● Juniper Contrail / TungstenFabric - provides an overlay SDN solution, delivering multicloud networking, hybrid cloud networking, simultaneous overlay-underlay support, network policy enforcement, network isolation, service chaining and flexible load balancing
● Knitter - a CNI plugin supporting multiple networking for Kubernetes
source: https://github.com/containernetworking/cni#3rd-party-plugins

7 .Scope
There are too many CNI plugins to test them all, so the scope is limited to:
● Flannel
● Kube-Router
● Calico
● AWS CNI
● Weave
● Kopeio
● Cilium
● Romana

8 .Flannel A simple way to configure an L3 network fabric, with VXLAN as the default source: https://github.com/coreos/flannel

9 .Calico Pure L3 approach which enables unencapsulated networks and BGP peering source: https://projectcalico.org

10 .Weave Supports overlay networks across different cloud network configurations source: https://www.weave.works/docs

11 .Cilium Based on Linux kernel technology called BPF source: https://cilium.readthedocs.io

12 .Kube-Router Built on standard Linux networking toolset: ipset, iptables, IPVS, LVS source: https://github.com/cloudnativelabs/kube-router

13 .AWS CNI Uses AWS elastic network interfaces (ENIs) for pod networking source: https://github.com/aws/amazon-vpc-cni-k8s

14 .Kopeio Simple VXLAN networking; also supports L2 with GRE and IPsec source: https://github.com/kopeio/networking

15 .Romana Uses standard L3 networking, with routes distributed via BGP or OSPF source: https://romana.io

16 .Experiments

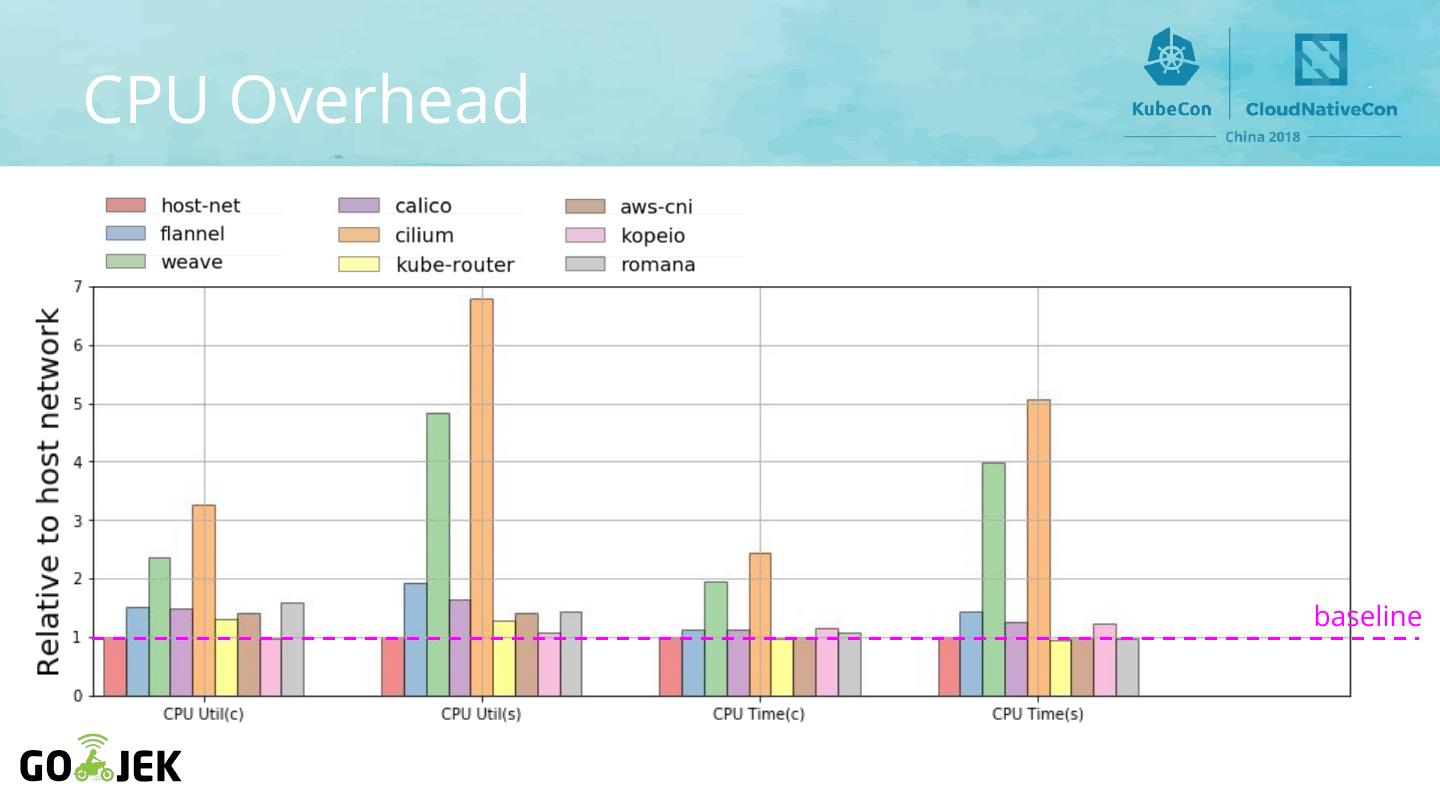

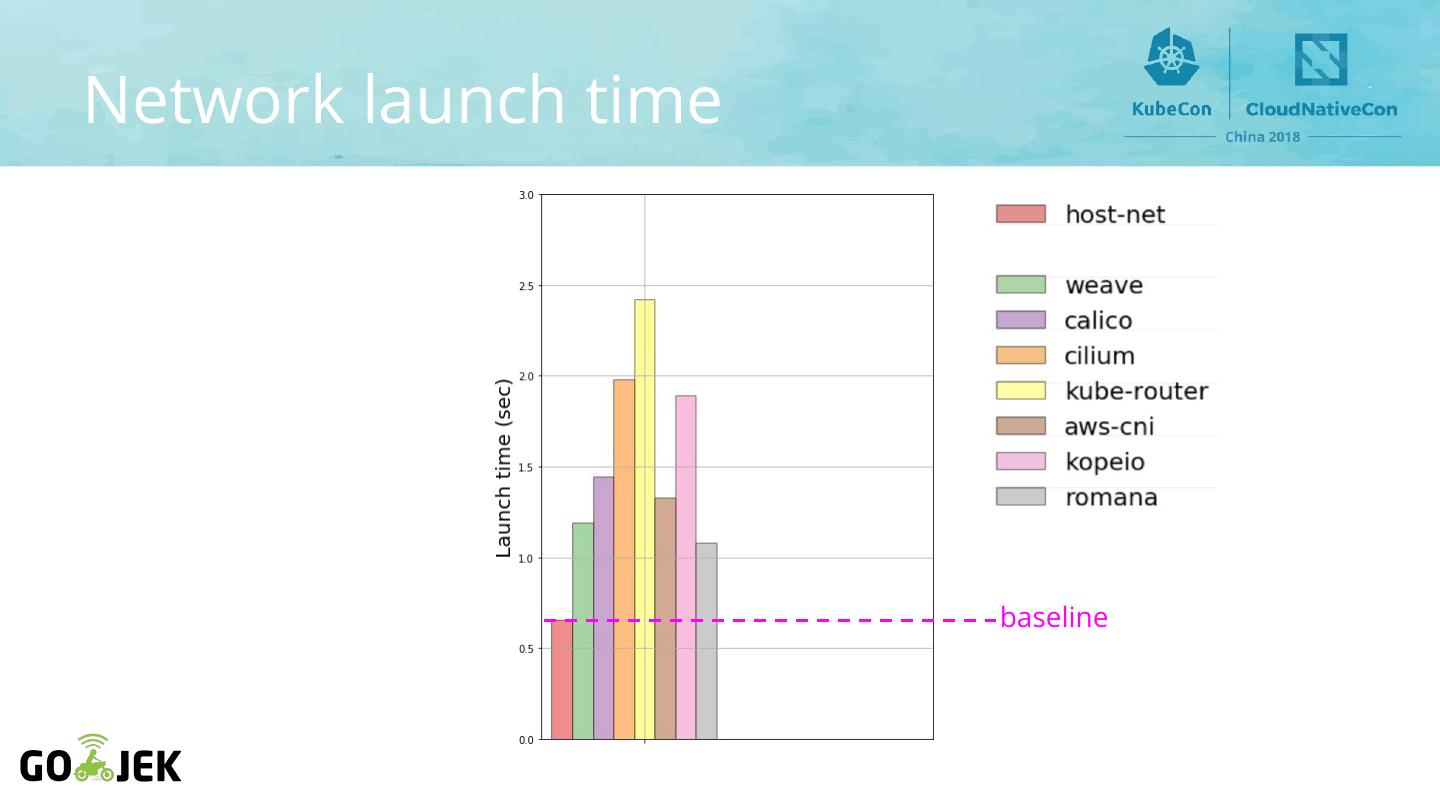

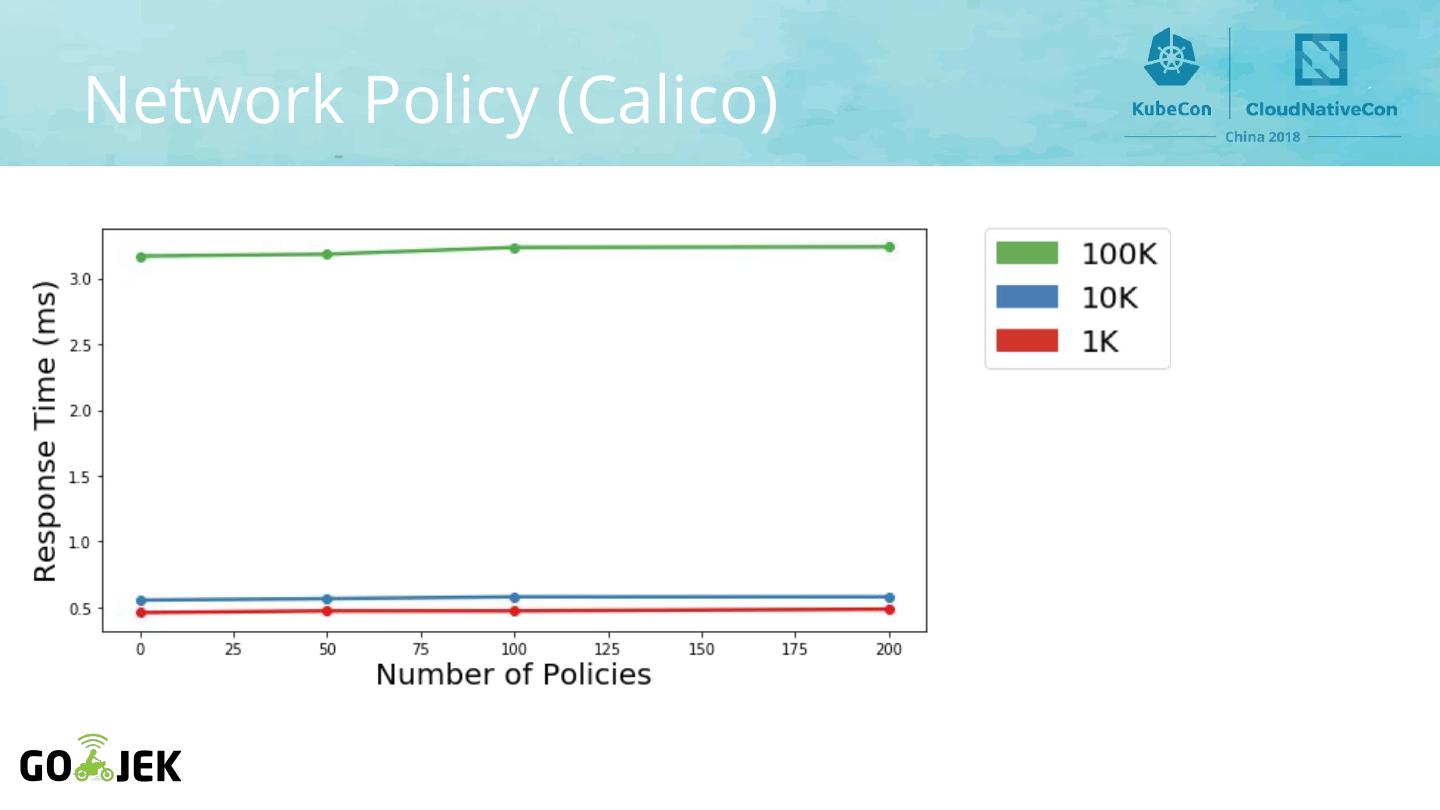

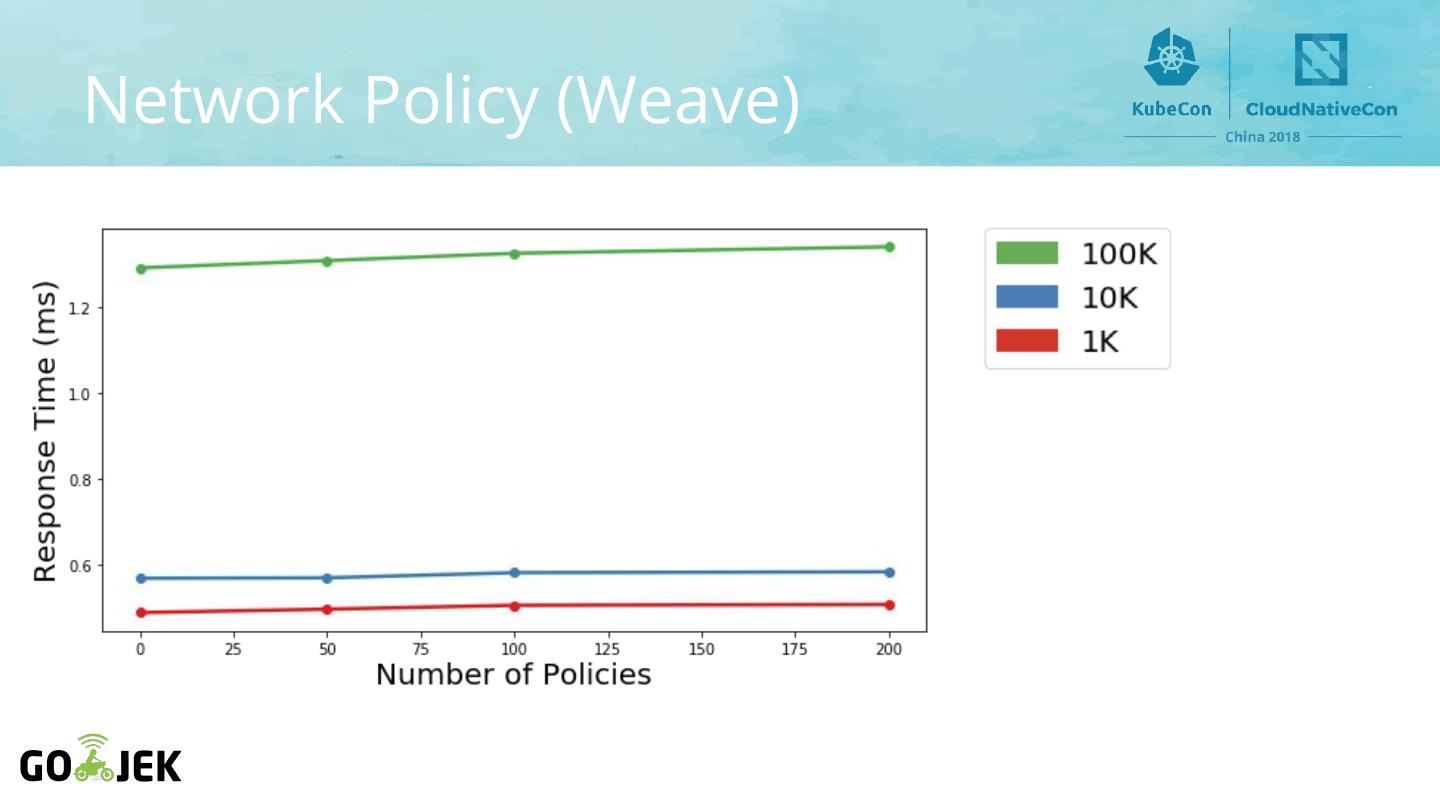

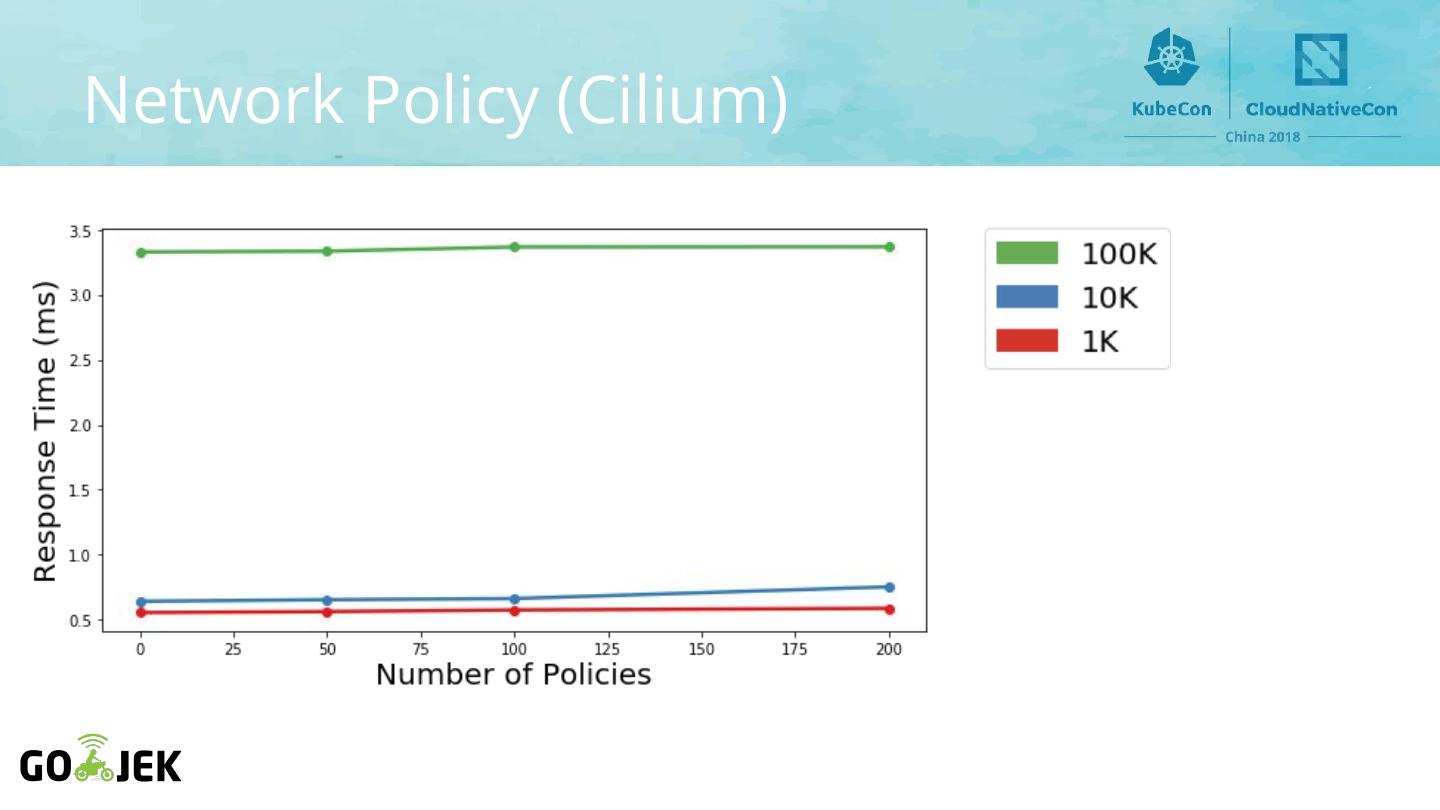

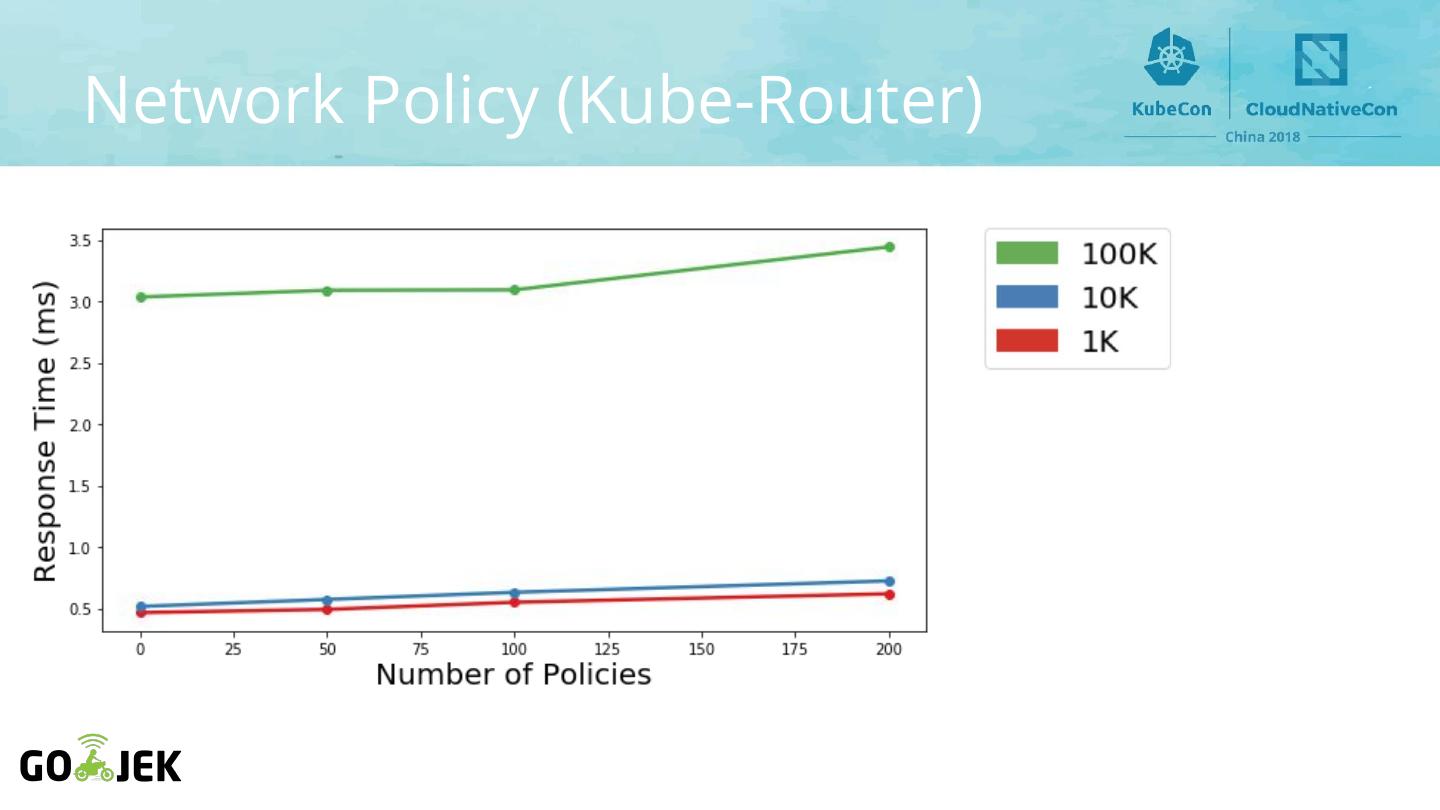

17 .Goals
● Lowest latency and highest throughput
● Different protocols and various packet sizes
● CPU consumption and launch time
● Kubernetes network policies

18 .Environment
● 8 Kubernetes clusters, each with a different CNI plugin
● Each cluster has 2 nodes of type m4.xlarge on Amazon EC2, running Debian 9 with kernel 4.9
● Kubernetes v1.10.9, deployed with Kops

19 .Environment
● All CNI plugins deployed with default config in Kops
  ○ No tuning or custom configuration
  ○ Flannel v0.10.0
  ○ Kube-Router v0.1.0
  ○ Calico v2.6.7
  ○ AWS CNI v1.0.0
  ○ Weave v2.4.0
  ○ Kopeio v1.0.20180319
  ○ Cilium v1.0
  ○ Romana v2.0.2

20 .Tools
● Sockperf (v3.5.0)
  ○ Utility built on the socket API for latency and throughput measurement
● Netperf (v2.6.0)
  ○ Unidirectional throughput and end-to-end latency measurement
● Tool from PaniNetworks
  ○ Generates HTTP workloads and measures responses

21 .Experiment #1 Throughput & Latency

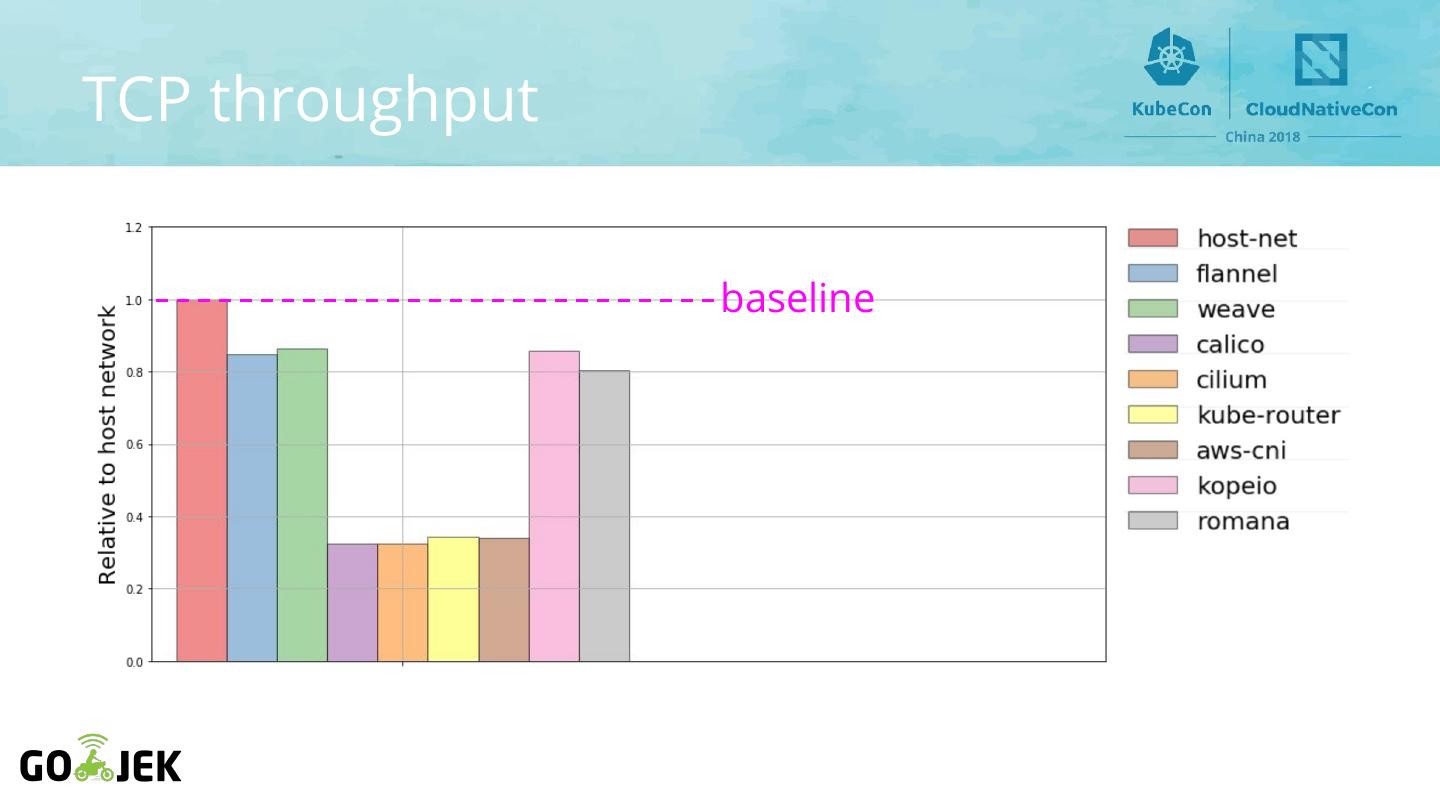

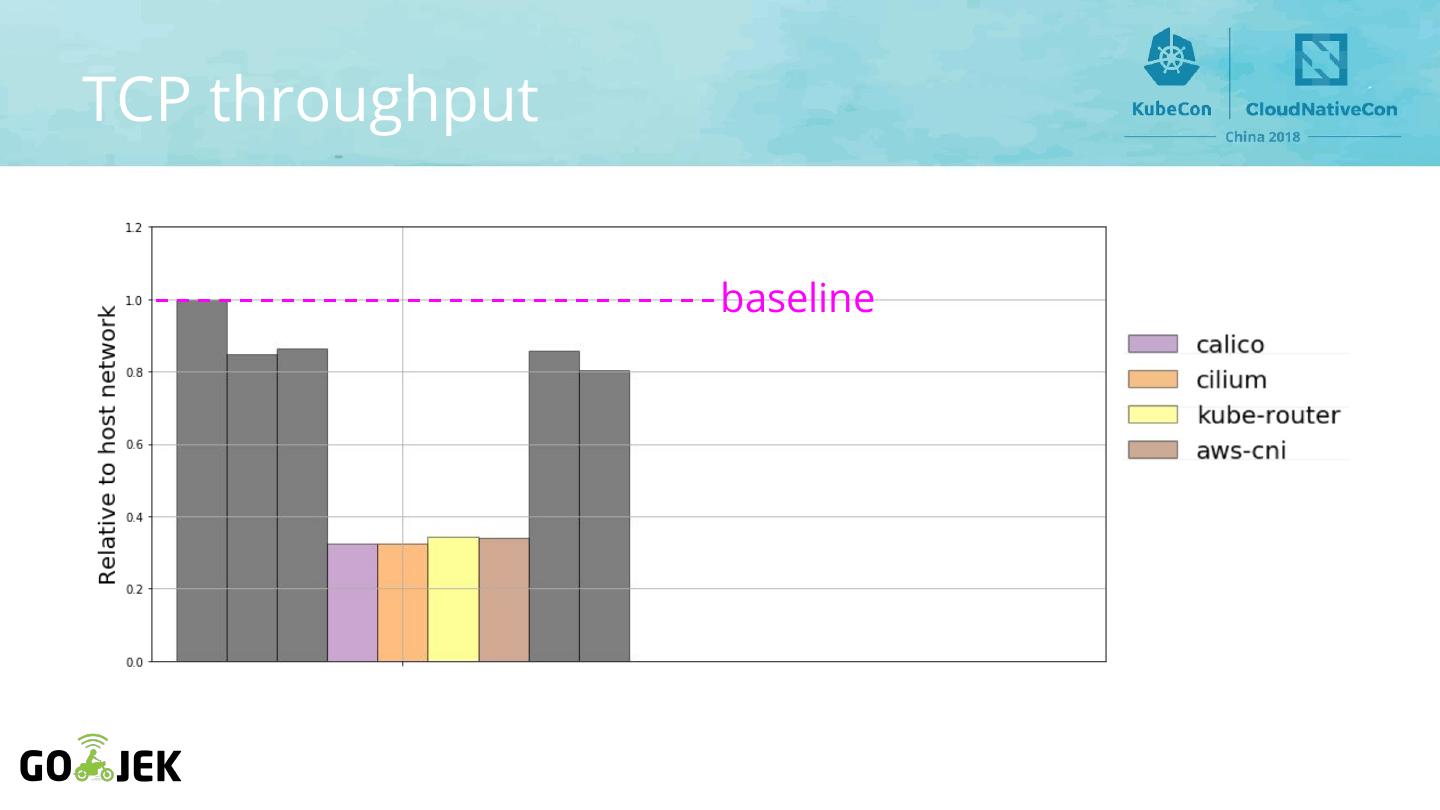

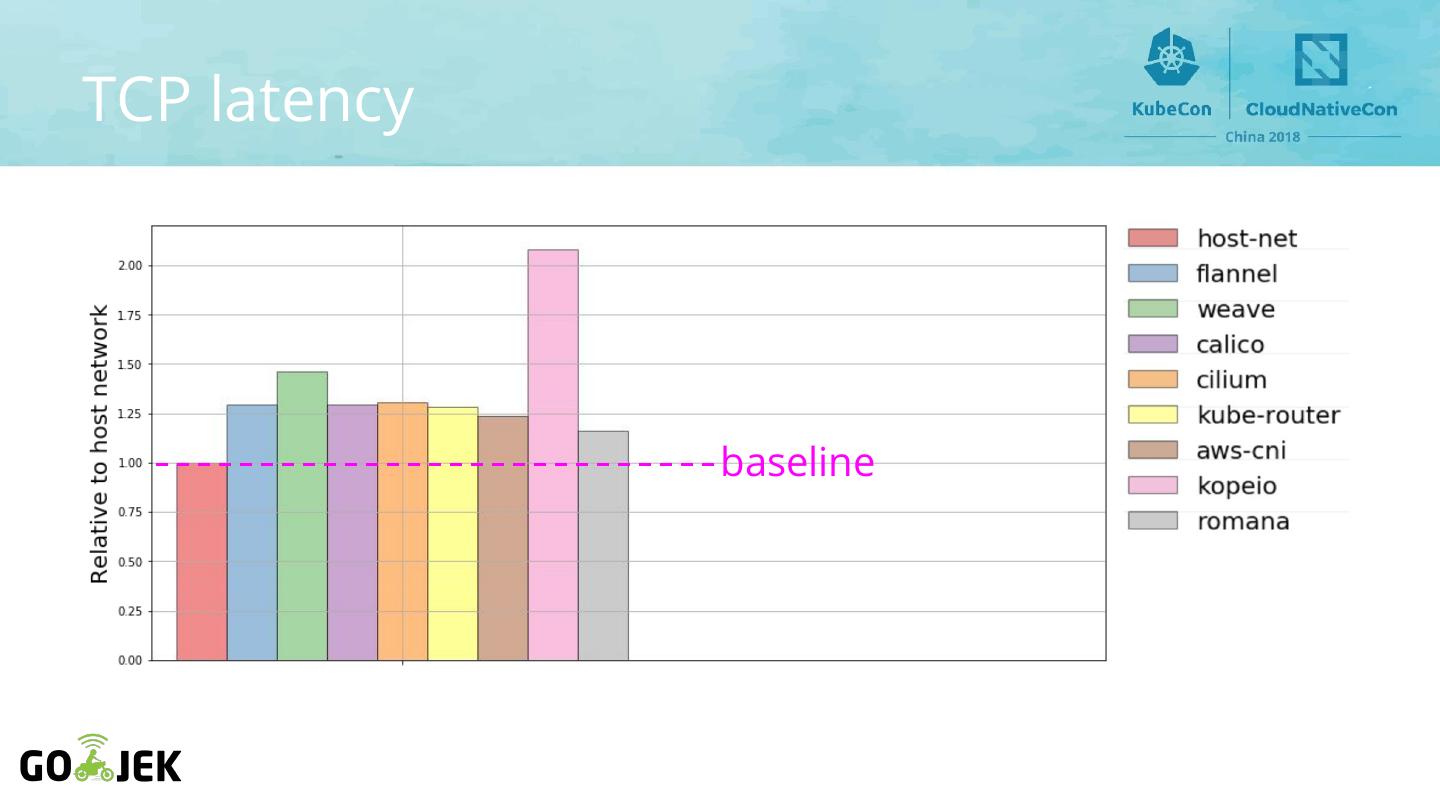

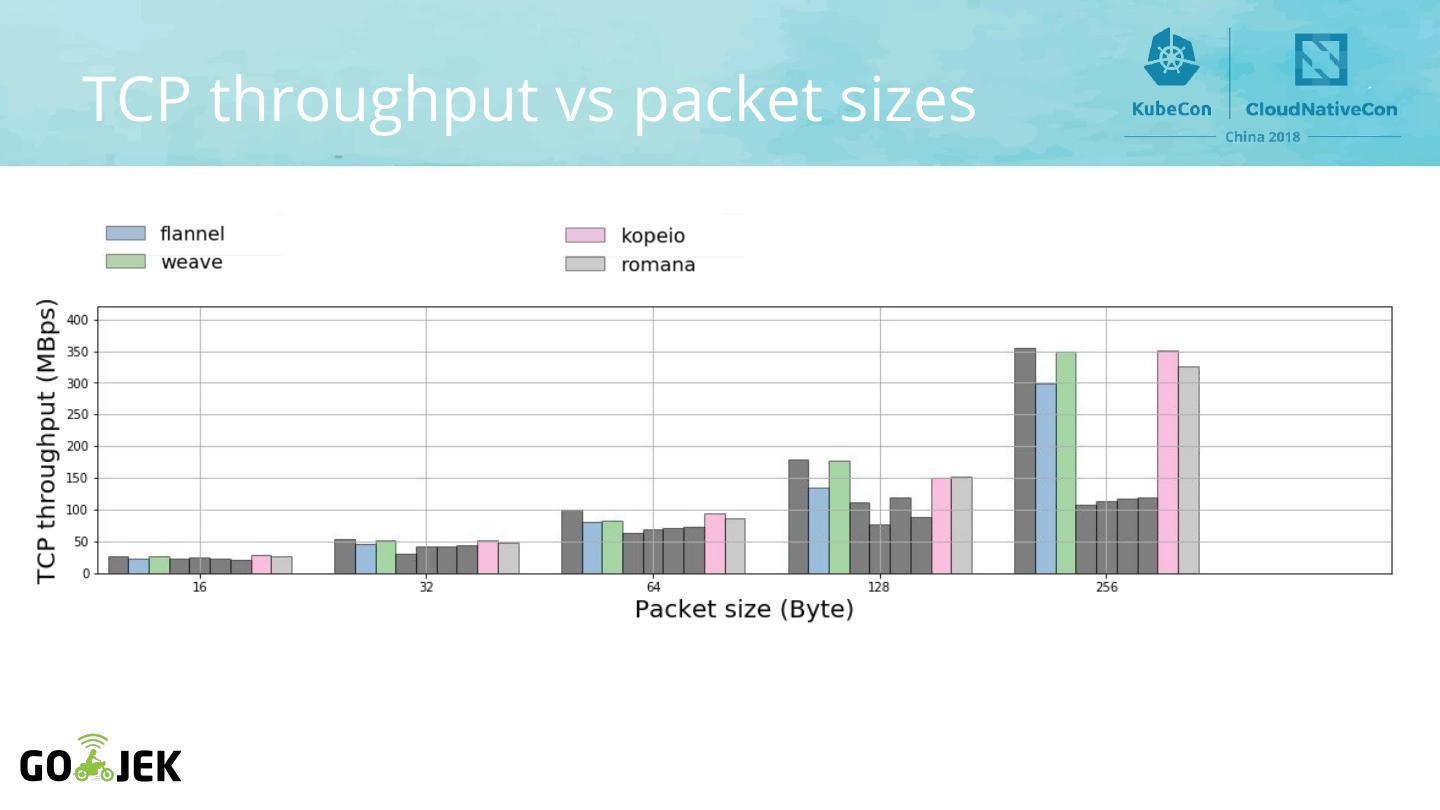

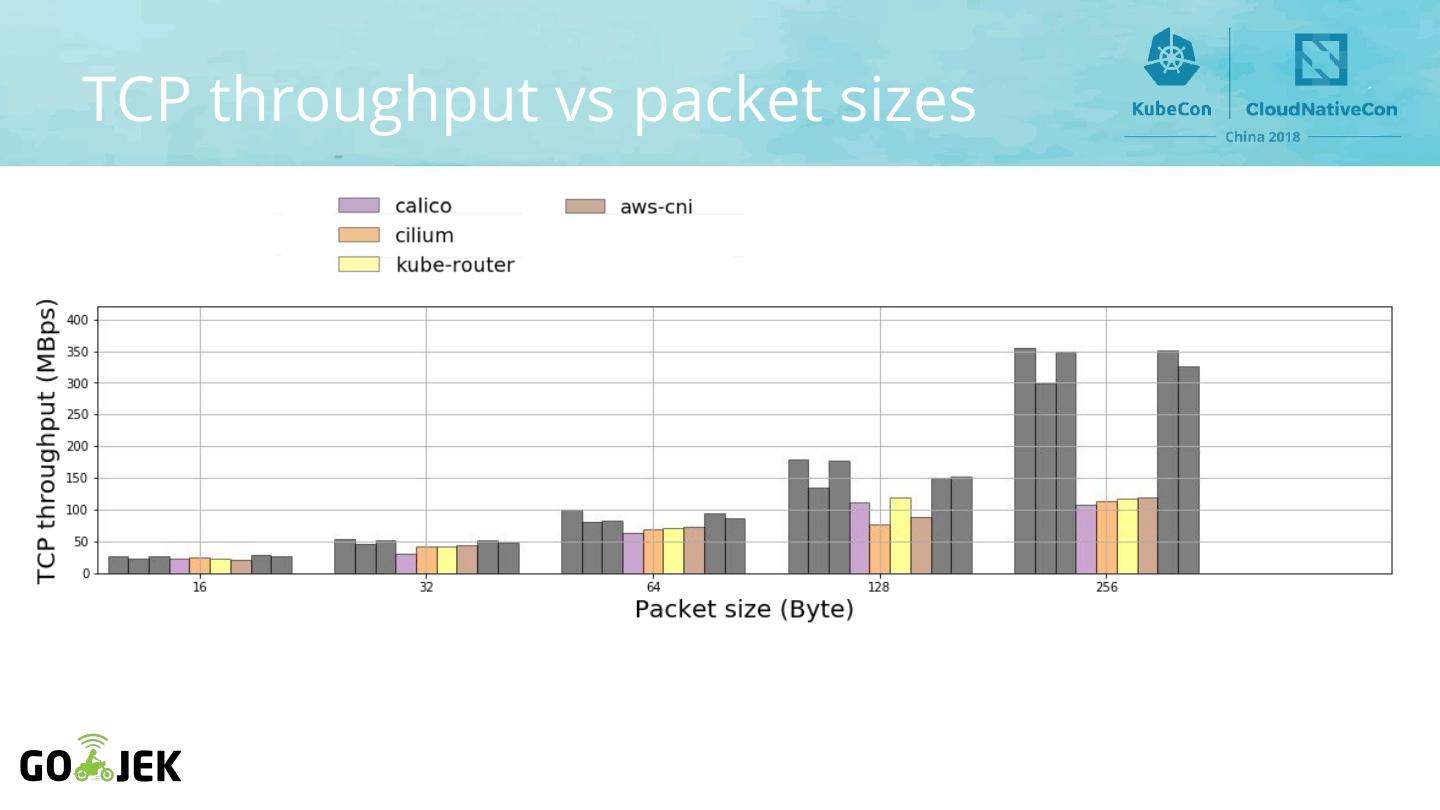

22 .Steps
Diagram: Sockperf client (c) pod on Node A, Sockperf server (s) pod on Node B
● Sockperf client pod in Node A
● Sockperf server pod in Node B
● 256 bytes for TCP throughput test
● 16 bytes for TCP latency test
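The measurement pattern in these steps can be sketched in plain Python: a ping-pong loop for latency and a one-way stream for throughput. This is a minimal sketch, not Sockperf itself. It assumes the 16 and 256 bytes on the slide are per-message payload sizes, it runs both ends on loopback instead of on two nodes, and all function names are hypothetical.

```python
import socket
import threading
import time

MSG_LATENCY = 16      # bytes per message in the latency test (assumed meaning)
MSG_THROUGHPUT = 256  # bytes per message in the throughput test (assumed meaning)


def recv_exact(conn, n):
    """Read exactly n bytes; return fewer only if the peer closed."""
    buf = bytearray()
    while len(buf) < n:
        chunk = conn.recv(n - len(buf))
        if not chunk:
            break
        buf.extend(chunk)
    return bytes(buf)


def echo_server(listener, msg_size):
    """Accept one client and echo each fixed-size message back."""
    conn, _ = listener.accept()
    with conn:
        while True:
            data = recv_exact(conn, msg_size)
            if len(data) < msg_size:
                break
            conn.sendall(data)


def sink_server(listener, counter):
    """Accept one client and count every byte received."""
    conn, _ = listener.accept()
    with conn:
        while True:
            data = conn.recv(65536)
            if not data:
                break
            counter[0] += len(data)


def start_listener():
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(1)
    return listener, listener.getsockname()[1]


def tcp_latency(n_messages=1000):
    """Return the mean round-trip time in microseconds for 16-byte ping-pong."""
    listener, port = start_listener()
    server = threading.Thread(target=echo_server, args=(listener, MSG_LATENCY))
    server.start()
    payload = b"x" * MSG_LATENCY
    with socket.create_connection(("127.0.0.1", port)) as client:
        # Disable Nagle's algorithm so small messages are sent immediately.
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        start = time.perf_counter()
        for _ in range(n_messages):
            client.sendall(payload)
            recv_exact(client, MSG_LATENCY)
        elapsed = time.perf_counter() - start
    server.join()
    listener.close()
    return elapsed / n_messages * 1e6


def tcp_throughput(n_messages=20000):
    """Send 256-byte messages one way; return (bytes received, Mbit/s)."""
    listener, port = start_listener()
    received = [0]
    server = threading.Thread(target=sink_server, args=(listener, received))
    server.start()
    payload = b"x" * MSG_THROUGHPUT
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port)) as client:
        for _ in range(n_messages):
            client.sendall(payload)
    server.join()  # the server exits once the client has closed the connection
    elapsed = time.perf_counter() - start
    listener.close()
    return received[0], received[0] * 8 / elapsed / 1e6


if __name__ == "__main__":
    print(f"mean RTT: {tcp_latency():.1f} us")
    total, mbps = tcp_throughput()
    print(f"received {total} bytes at {mbps:.1f} Mbit/s")
```

Loopback numbers only sanity-check the harness. To compare plugins, the server and client halves would run in pods on different nodes, as in the setup above.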

23 .TCP throughput baseline

24 .TCP throughput baseline

25 .TCP throughput baseline

26 .TCP latency baseline

27 .TCP latency baseline

28 .TCP latency baseline

29 .Experiment #2 Protocol & Packet Sizes