- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- <iframe src="https://www.slidestalk.com/u54/kubernetes_auto_scaling_on_azure?embed" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

- 微信扫一扫分享

kubernetes-autoscaling-on-azure

展开查看详情

1 .Kubernetes Autoscaling on Azure Pengfei Ni Microsoft Azure

2 .Abstract • Why autoscaling • Autoscaling in Kubernetes • Practice on Azure • Q&A

3 .Why autoscaling • Autoscaling – Adjust computational resources automatically • Benefits – Reduce cost – Increase service availability – Increase elasticity

4 .Cloud provider autoscaling • Horizontal – Scale number of virtual machines • Vertical – Scale resources of virtual machines • Drawbacks – Not aware of Kubernetes scheduler (e.g. multiple node groups) – May remove nodes with critical Pods – Hard to conform kubernetes evictions

5 .Autoscaling in Kubernetes • Horizontal Pod autoscaler (HPA) – Scale number of Pods • Vertical Pod autoscaler (VPA) – Scale resources of Pods • Cluster proportional autoscaler (CPA) – Scale replicas of Pods based on number of nodes • Cluster autoscaler (CA) – Scale number of nodes

6 .Cluster Autoscaler • Adjust number of Nodes automatically – Add nodes when there’re Pods failed to schedule – Remove nodes when they are underutilized for an extended period • Supported Cloud providers – Azure (VMAS/VMSS/AKS/ACS) – AWS – GCE/GKE

7 .How CA works KubeClient Simulator Lead Election Estimator Metrics Expander Cloud Provider Azure AWS GCE

8 .Scale Up Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod

9 .Scale Up • Get node groups from cloud provider • Build template nodes for each node groups • Check Pods with unschedulable condition • Check which template node fit the pending Pods – If more than one node groups, select by expander • random, most-pods, least-waste, price • Create Node by cloud provider • Wait for node ready

10 .Scale Down Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod Pod

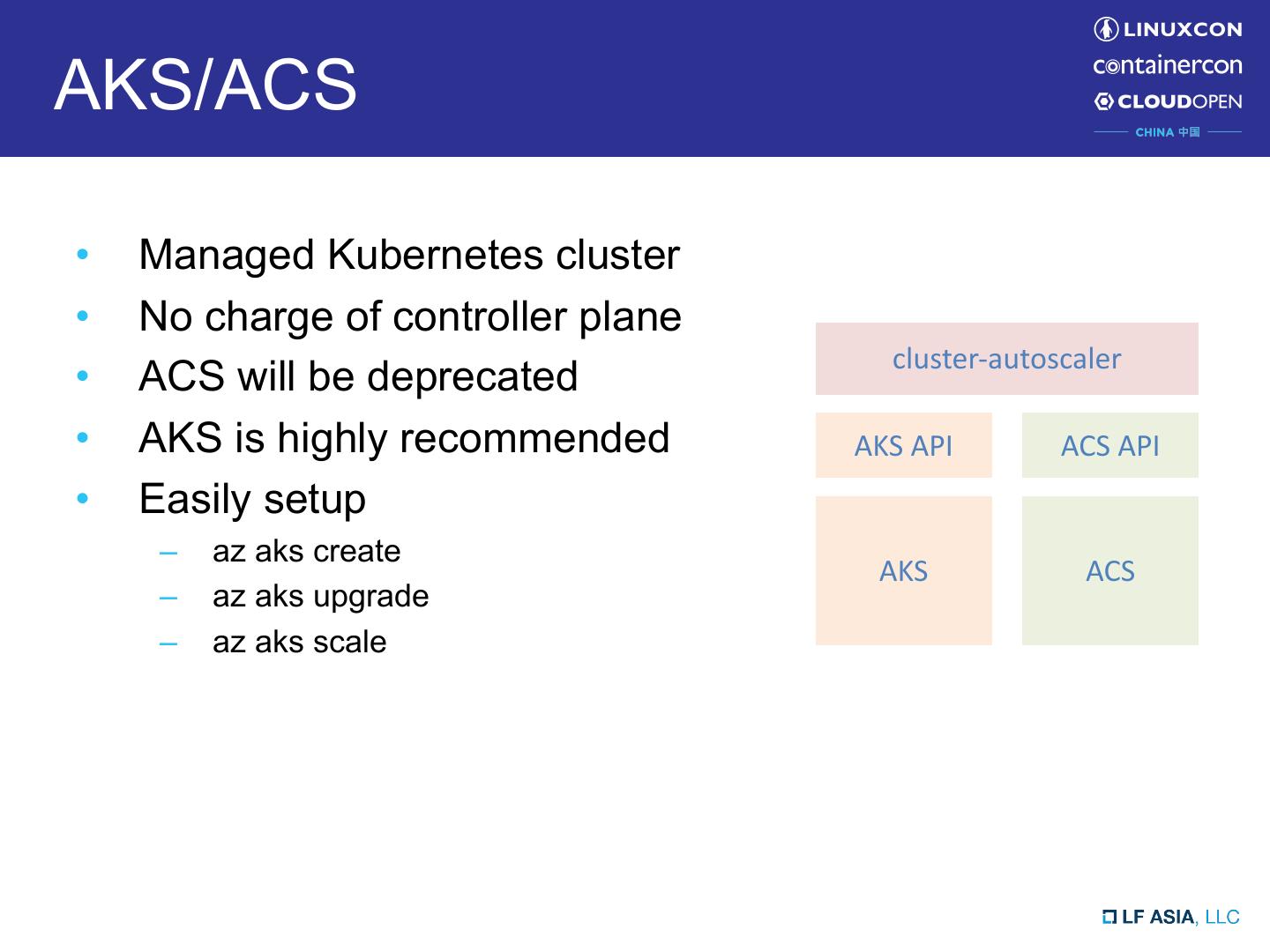

11 .Scale Down • Check unneeded nodes – Sum of CPU/Memory requests is less than 50% – All Pods on the node could be evicted • Managed by controllers • No restrictive PodDisruptionBudget • No constraints (e.g. node selector) preventing node moving – No scale down annotation • Wait a while (e.g. 10 min) • Evict, taint and then remove the node from cloud provider

12 .Avoid abrupt scaling • Mark node as unneeded and wait for a while (10 min) before removal • Evict and taint node first before removal • Stop scaling down for a while (10 min) after scaling up • Stop operating when unready nodes are more than 45% or 3 • Use PodDisruptionBudget

13 .Limitations • Up to 1000 nodes are supported • Up to 10 min graceful termination period • Not compatible with cpu-metrics based autoscalers

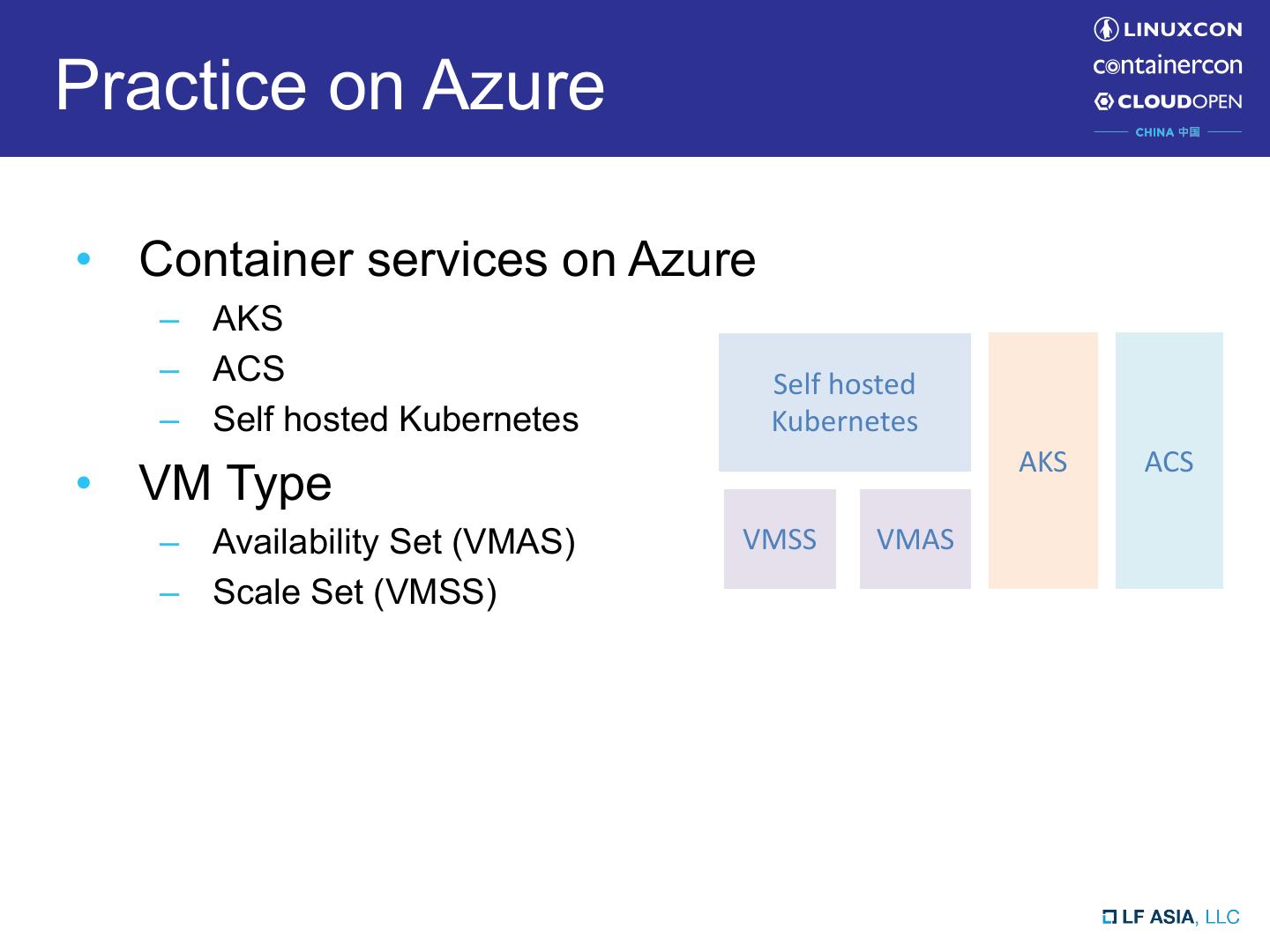

14 .Practice on Azure • Container services on Azure – AKS – ACS Self hosted – Self hosted Kubernetes Kubernetes AKS ACS • VM Type – Availability Set (VMAS) VMSS VMAS – Scale Set (VMSS)

15 .VMAS/VMSS VMSS VMAS VM configuration Identical Usually different VM creation Automated Manually Load Balancer Automated with ALB Manually Scaling Automated Manually High Availability Auto distribution across Isolated hardware, availability zones or manually setup availability sets availability zones

16 .VMAS/VMSS cluster-autoscaler Azure ARM API VMSS API VMAS VM VMSS VM VM VM VM VM

17 .AKS/ACS • Managed Kubernetes cluster • No charge of controller plane cluster-autoscaler • ACS will be deprecated • AKS is highly recommended AKS API ACS API • Easily setup – az aks create AKS ACS – az aks upgrade – az aks scale

18 .Best Practices • Run cluster-autoscaler with matched k8s version • Run containers with multiple replicas • Setup resource requests for containers • Use PodDisruptionBudgets to prevent Pods being removed abruptly • Do not manage node manually • Disable other virtual machine autoscalers (e.g. those from cloud provider) • Setup min/max nodes and ensure quota sufficient

19 .Thanks Q&A