vhost Dataplane in Qemu


1. Vhost dataplane in Qemu
Jason Wang, Red Hat

2. Agenda
● History & evolution of vhost
● Issues
● Vhost dataplane
● TODO

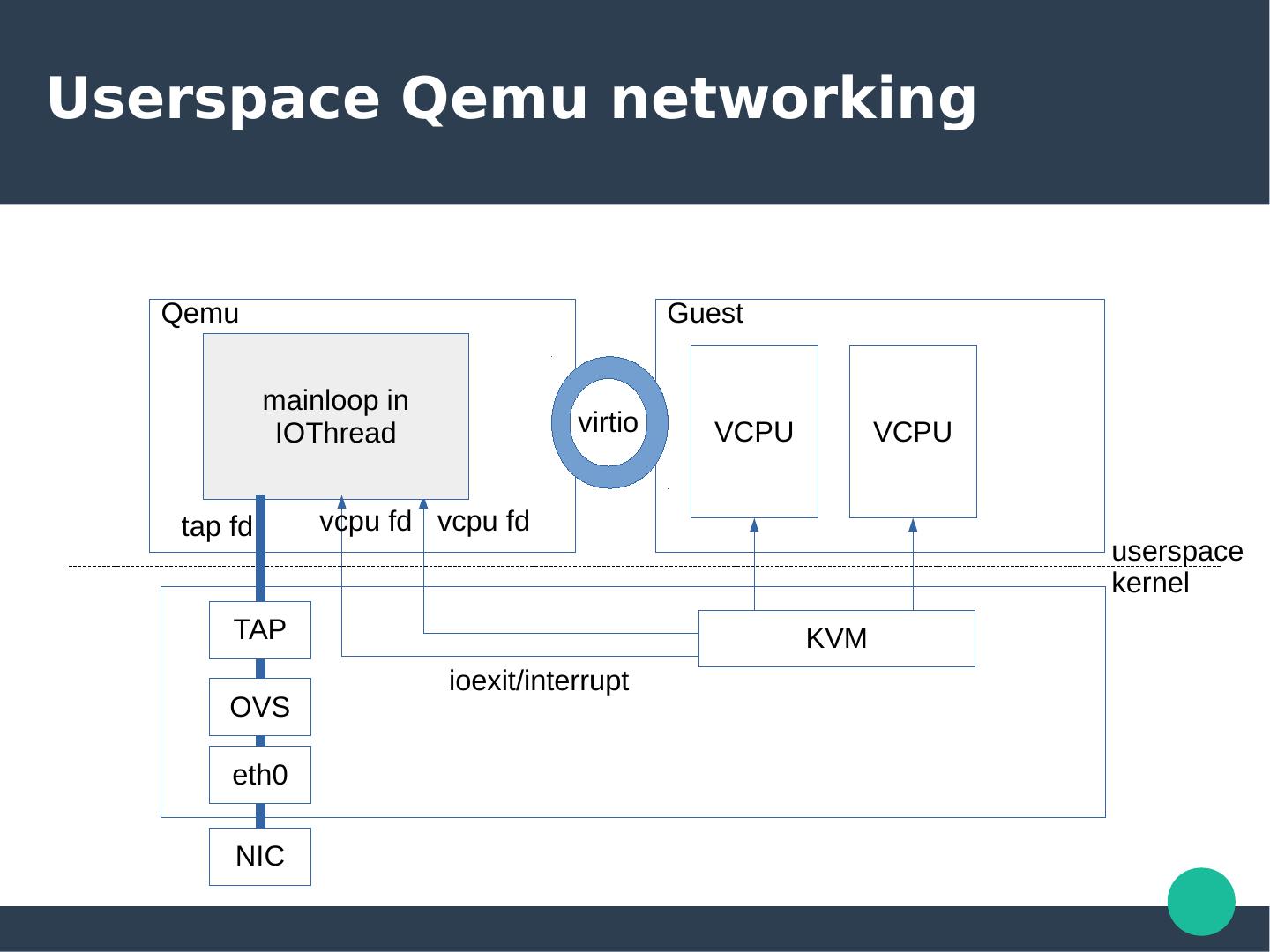

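3. Userspace Qemu networking
[Diagram: in userspace, Qemu runs the guest's virtio device in the mainloop (IOThread), which reads and writes the tap fd, while the VCPU threads use their vcpu fds; in the kernel, KVM handles ioexits and interrupts, and TAP connects through OVS to eth0 and the NIC.]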

4. Userspace Qemu networking is slow
● Limitations of both Qemu and the backend
  – Runs inside the mainloop
    ● No real multiqueue
    ● No dedicated thread, no busy polling
  – Extra data copy to an internal buffer
  – TAP
    ● A syscall to send/receive each message
  – IRQ/ioexit is slow
    ● The VCPU needs to be blocked
    ● Slow path
  – No burst/bulking

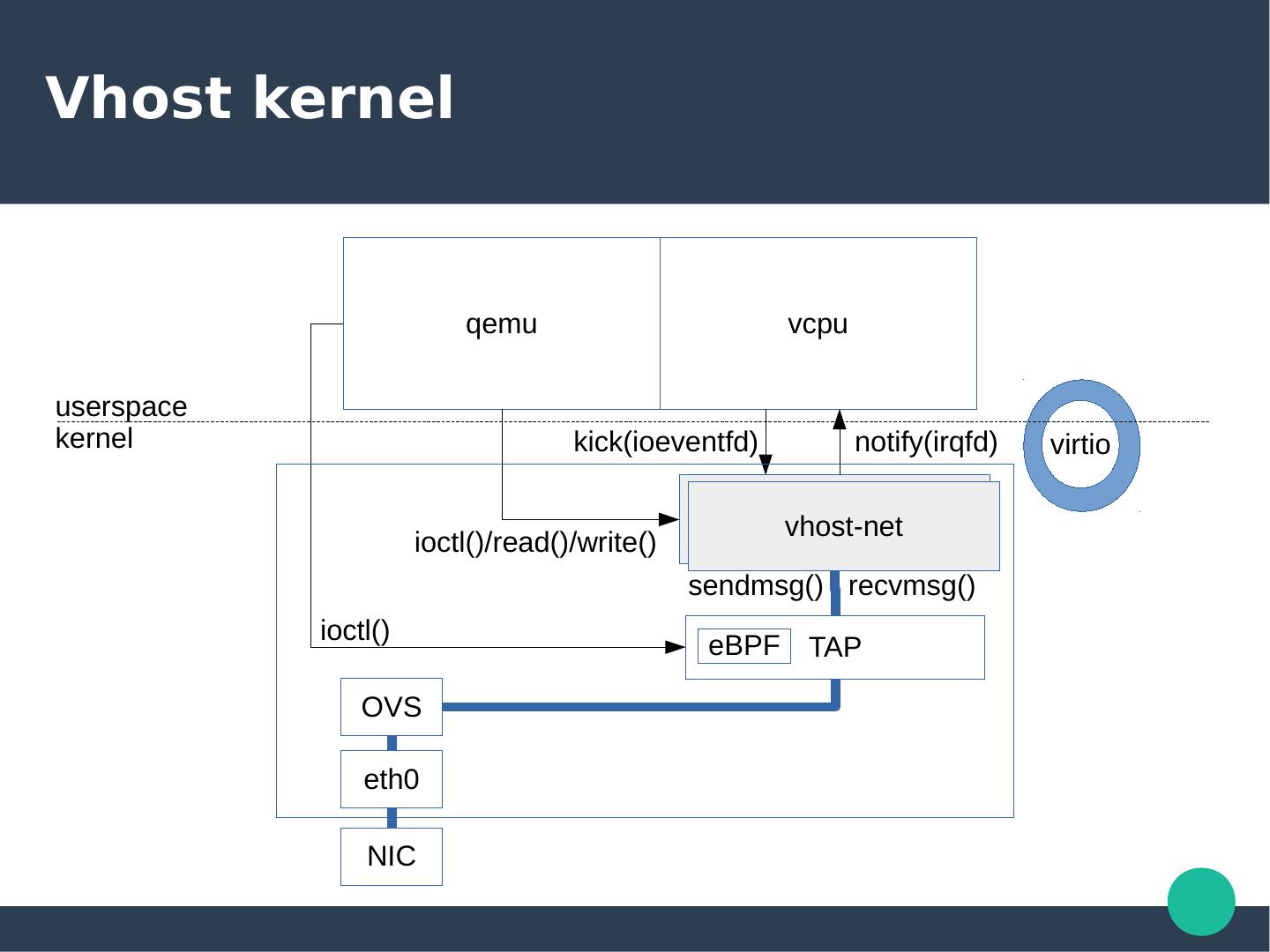

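5. Vhost kernel
[Diagram: Qemu (VCPU, virtio) in userspace configures vhost-net in the kernel through ioctl()/read()/write(); the guest kicks vhost-net through an ioeventfd and vhost-net notifies the guest through an irqfd; vhost-net calls sendmsg()/recvmsg() on TAP (with eBPF), which connects through OVS to eth0 and the NIC.]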
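The kick and notify paths above are eventfd counters: KVM signals an ioeventfd when the guest writes the queue notify register, and vhost-net writes an irqfd that KVM turns into a guest interrupt. The sketch below shows only the eventfd semantics both rely on, assuming Linux and the eventfd_read()/eventfd_write() wrappers from sys/eventfd.h; kick() and consume_kicks() are hypothetical helpers, and no KVM or vhost-net setup is involved.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Hypothetical helper: the "guest side" signals the eventfd, as KVM does
 * on an ioeventfd when the guest writes the queue notify register. */
static int kick(int efd)
{
    assert(efd >= 0);
    return eventfd_write(efd, 1); /* adds 1 to the eventfd counter */
}

/* Hypothetical helper: the "backend side" drains pending kicks.
 * Several kicks before a read coalesce into one counter value, and the
 * read resets the counter to zero. Returns 0 if nothing was pending
 * (the fd is assumed to be non-blocking). */
static uint64_t consume_kicks(int efd)
{
    eventfd_t value = 0;
    if (eventfd_read(efd, &value) < 0)
        return 0; /* EAGAIN: no kick pending */
    return value;
}
```

Several kicks before a read collapse into a single counter value, which is why a backend rescans the available ring after each wakeup instead of assuming one kick per buffer.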

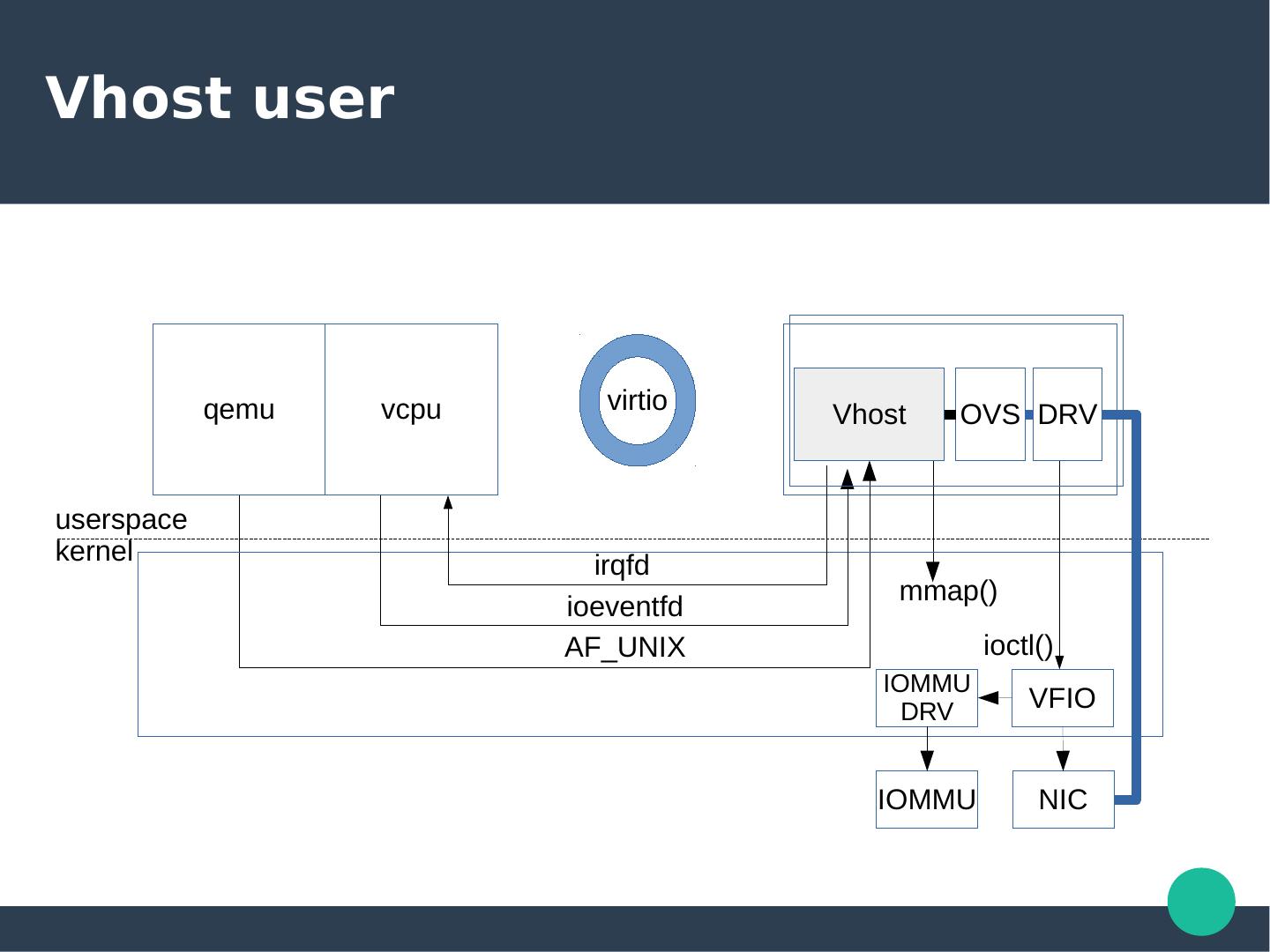

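6. Vhost user
[Diagram: Qemu (VCPU, virtio) talks to a userspace vhost backend (e.g. OVS with its own NIC driver) over AF_UNIX; the backend mmap()s guest memory and uses ioeventfd/irqfd for kicks and notifications; its NIC driver reaches the NIC through VFIO and the IOMMU driver in the kernel.]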

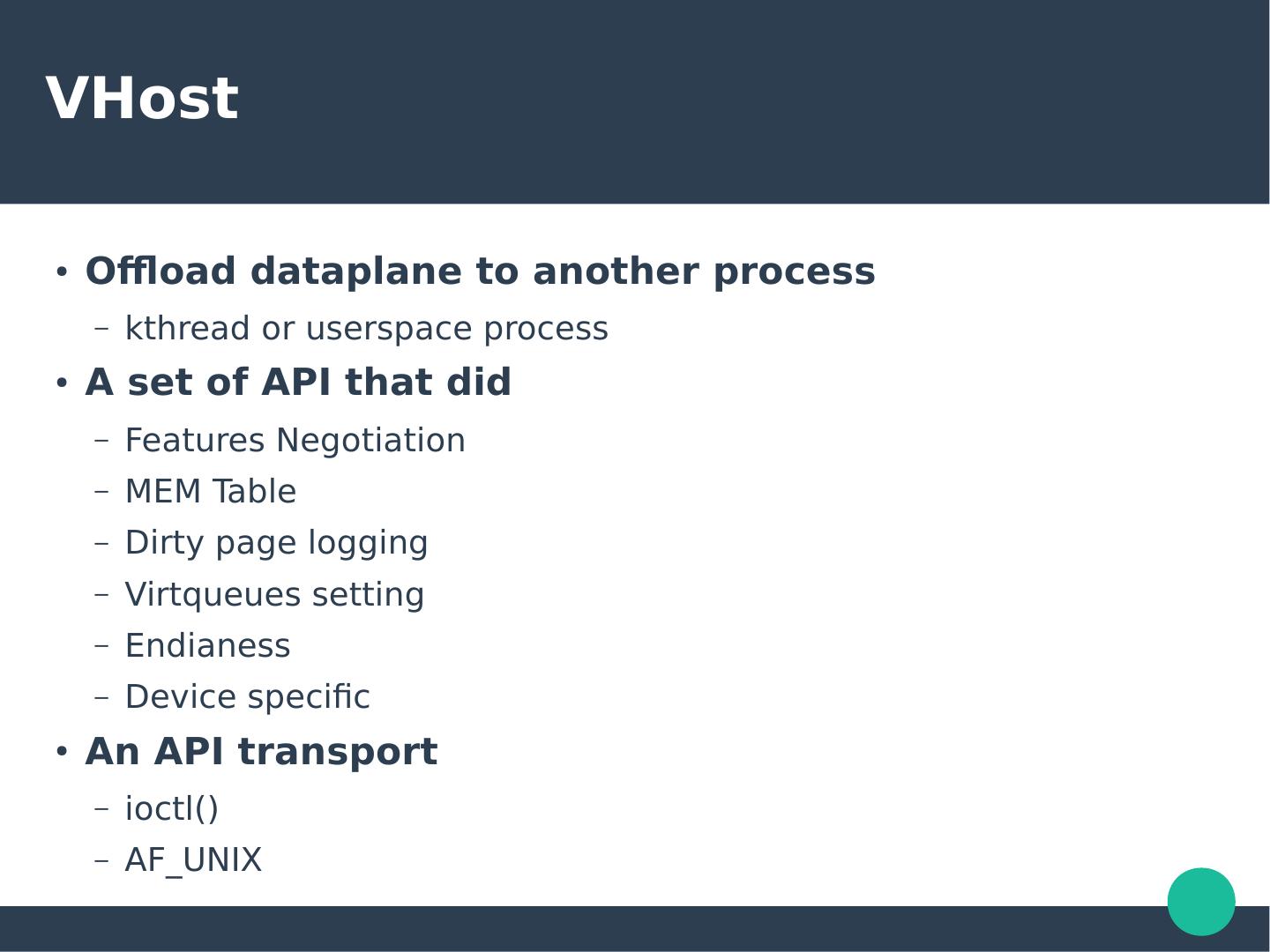

7. VHost
● Offload the dataplane to another process
  – A kthread or a userspace process
● A set of APIs for
  – Feature negotiation
  – Memory table
  – Dirty page logging
  – Virtqueue setup
  – Endianness
  – Device-specific operations
● A transport for the API
  – ioctl()
  – AF_UNIX
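Feature negotiation in the API list above amounts to intersecting feature bitmasks. A minimal sketch, assuming the offers of the guest driver, Qemu and the vhost backend are already known as 64-bit masks; the bit numbers are those defined by the virtio specification, while negotiate() and migration_compatible() are hypothetical helpers. The second function previews the migration problem raised later in the deck: every negotiated bit must still be supported by the destination backend.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Feature bit numbers from the virtio specification. */
#define VIRTIO_NET_F_MRG_RXBUF  15
#define VIRTIO_NET_F_MQ         22
#define VIRTIO_RING_F_EVENT_IDX 29
#define VIRTIO_F_VERSION_1      32

#define BIT(n) (UINT64_C(1) << (n))

/* Hypothetical helper: a feature is usable only if the guest driver,
 * Qemu and the vhost backend all offer it. */
static uint64_t negotiate(uint64_t driver, uint64_t qemu, uint64_t backend)
{
    return driver & qemu & backend;
}

/* Hypothetical helper: after migration, the destination backend must
 * provide every feature that was negotiated on the source host. */
static bool migration_compatible(uint64_t negotiated, uint64_t dst_backend)
{
    return (negotiated & ~dst_backend) == 0;
}
```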

8. So far so good?

9. How hard is it to add a new feature?
● Formalization in the virtio specification
● Code in Qemu's userspace virtio-net backend
● Vhost protocol extension:
  ● Vhost-kernel (uapi), vhost-user (has its own spec)
  ● Versions, feature negotiation, compatibility
● Vhost support code in Qemu (user and kernel)
● Features (and bugs) duplicated everywhere:
  – vhost_net, dpdk, TAP, macvtap, OVS, VPP, qemu

10. Even if we manage to do this

11. Device IOTLB
[Diagram: on an IOTLB miss, the vhost backend asks Qemu's vIOMMU for the translation, and Qemu answers with an IOTLB update.]
● Slow or even unreliable
● Minor impact for static mappings
● Poor performance for dynamic mappings
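The miss/update round-trip can be modelled as a small translation cache whose slow path asks Qemu's vIOMMU. A sketch under stated assumptions: a direct-mapped cache of 4 KiB pages, a plain callback standing in for the vhost IOTLB miss and update messages, and a toy vIOMMU (toy_viommu_lookup) invented for the example; none of these names come from Qemu or Linux.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_MASK  ((UINT64_C(1) << PAGE_SHIFT) - 1)
#define IOTLB_SIZE 64 /* hypothetical size, must be a power of two */

struct iotlb_entry {
    bool valid;
    uint64_t iova_pfn;
    uint64_t hva_pfn;
};

/* Slow path: stands in for "IOTLB miss" to Qemu plus "IOTLB update" back. */
typedef bool (*iotlb_miss_fn)(uint64_t iova_pfn, uint64_t *hva_pfn, void *opaque);

struct iotlb {
    struct iotlb_entry entries[IOTLB_SIZE];
    iotlb_miss_fn miss;
    void *opaque;
    unsigned misses; /* how many times the slow path was taken */
};

/* Translate an IOVA; returns false if the vIOMMU has no mapping (a fault). */
static bool iotlb_translate(struct iotlb *t, uint64_t iova, uint64_t *hva)
{
    uint64_t pfn = iova >> PAGE_SHIFT;
    struct iotlb_entry *e = &t->entries[pfn & (IOTLB_SIZE - 1)];

    if (!e->valid || e->iova_pfn != pfn) {
        uint64_t hva_pfn;
        t->misses++;
        if (!t->miss(pfn, &hva_pfn, t->opaque))
            return false;
        e->valid = true;
        e->iova_pfn = pfn;
        e->hva_pfn = hva_pfn;
    }
    *hva = (e->hva_pfn << PAGE_SHIFT) | (iova & PAGE_MASK);
    return true;
}

/* Drop a cached page, e.g. when the guest unmaps the IOVA. */
static void iotlb_invalidate(struct iotlb *t, uint64_t iova)
{
    uint64_t pfn = iova >> PAGE_SHIFT;
    struct iotlb_entry *e = &t->entries[pfn & (IOTLB_SIZE - 1)];
    if (e->valid && e->iova_pfn == pfn)
        e->valid = false;
}

/* Toy vIOMMU for the example: IOVA page n maps to host page n + 0x1000,
 * and nothing above page 0xFFFF is mapped. */
static bool toy_viommu_lookup(uint64_t iova_pfn, uint64_t *hva_pfn, void *opaque)
{
    (void)opaque;
    if (iova_pfn > 0xFFFF)
        return false;
    *hva_pfn = iova_pfn + 0x1000;
    return true;
}
```

With static mappings the cache warms up once and the slow path is rare; with dynamic mappings every unmap invalidates an entry and the next access pays the round-trip again, which is the performance gap shown on the slide.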

12. Issue: the datapath needs information from the control path, but the vhost control path is not designed for high performance.

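13. Receive Side Scaling
[Diagram: the guest (VCPU0 to VCPU3) configures RSS through the control virtqueue (cvq); Qemu must forward the indirection table and hash algorithm to the vhost-user slave over AF_UNIX so that the network backend can steer packets to queues q1/q2, which interrupt the VCPUs through MSI-X.]
● How is the indirection table passed to the backend?
● What about more kinds of steering policy?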
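RSS steering has two parts: a Toeplitz hash over packet header fields, and an indirection table that maps the low bits of the hash to a receive queue (virtio-net's RSS configuration carries the key, the table and its mask). A minimal sketch of both, assuming the caller has already extracted the header bytes to hash; rss_select_queue() is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Toeplitz hash: for every set input bit i (MSB first), XOR in the 32-bit
 * window of the key that starts at bit i. The key must be at least
 * len + 4 bytes long. */
static uint32_t toeplitz_hash(const uint8_t *key, size_t key_len,
                              const uint8_t *data, size_t len)
{
    uint32_t result = 0;
    /* window = key bits [i, i + 32) for the current input bit i */
    uint32_t window = ((uint32_t)key[0] << 24) | ((uint32_t)key[1] << 16) |
                      ((uint32_t)key[2] << 8) | key[3];

    assert(key_len >= len + 4);
    for (size_t i = 0; i < len; i++) {
        for (int b = 7; b >= 0; b--) {
            if (data[i] & (1u << b))
                result ^= window;
            /* slide the window: shift in key bit 8 * (i + 4) + (7 - b) */
            window = (window << 1) | ((key[i + 4] >> b) & 1u);
        }
    }
    return result;
}

/* Hypothetical helper: the masked hash indexes the indirection table,
 * whose entries are receive queue numbers. */
static uint16_t rss_select_queue(uint32_t hash, const uint16_t *table,
                                 uint16_t table_mask)
{
    return table[hash & table_mask];
}
```

All of this state is set by the guest through the control virtqueue, so with vhost-user it has to be delivered to the backend over AF_UNIX, which is the open question on the slide.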

14. Issue: with vhost-user, the networking backend is transparent to Qemu, so net-specific requests have to go through vhost-user.

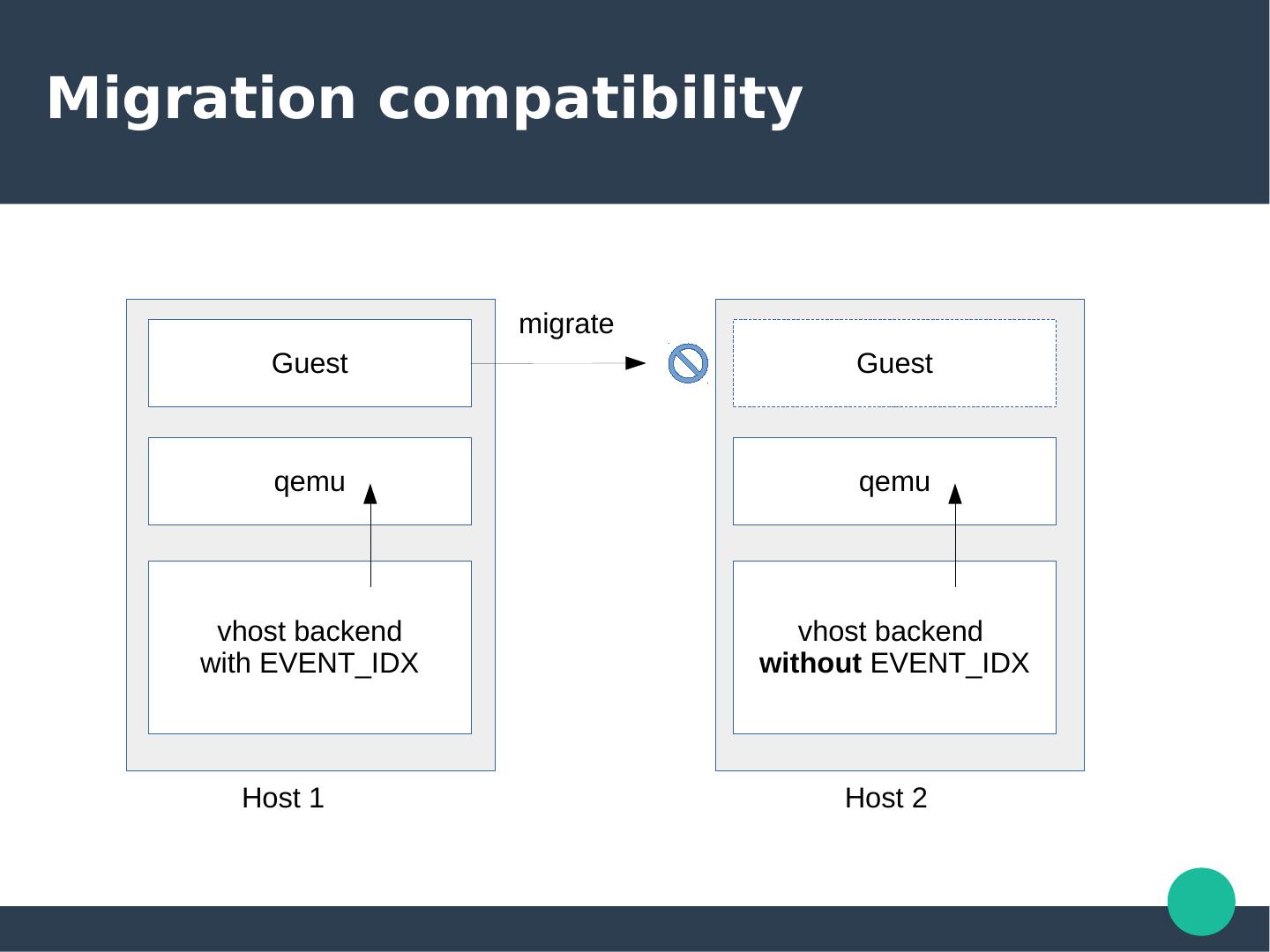

15. Migration compatibility
[Diagram: a guest is migrated from Host 1, whose vhost backend supports EVENT_IDX, to Host 2, whose vhost backend does not.]

16. Issue: although features are negotiated at startup, the backend must implement every feature to provide migration compatibility.
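Implementing EVENT_IDX means, among other things, suppressing notifications with the vring_need_event check defined in the virtio specification (Linux carries the same function in its virtio_ring.h uapi header). The sketch reproduces that check; the tests walk through a few index values, including wraparound.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* With VIRTIO_RING_F_EVENT_IDX, the other side publishes an event index
 * and wants a notification only when the ring index moves past it.
 * Returns true if advancing from old_idx to new_idx crosses event_idx.
 * Arithmetic is modulo 2^16 because ring indices wrap around. */
static bool vring_need_event(uint16_t event_idx, uint16_t new_idx, uint16_t old_idx)
{
    return (uint16_t)(new_idx - event_idx - 1) < (uint16_t)(new_idx - old_idx);
}
```

A guest that negotiated EVENT_IDX on Host 1 keeps relying on this behaviour after migration, so the backend on Host 2 has to implement it as well, even though negotiation happened only once at startup.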

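17. Attack surface
[Diagram: an untrusted userspace driver in the guest uses VFIO on top of the guest kernel's IOMMU driver; its DMA is translated through Qemu's vIOMMU (ATS request/reply, IOTLB) and faults (#DMARF) on illegal accesses. The vhost backend, by contrast, receives guest memory through MEM_TABLE with read/write access, so malicious code there can read and write it freely.]
The IOTLB can protect against a malicious guest userspace driver, but not against a malicious vhost backend.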

18. Issue: we don't want to trust the vhost-user backend, but we share (almost) all guest memory with it!

19. Issues with an external vhost process
● Engineering complexity
  – Hard to extend; duplicated code (and bugs) in many places
● Performance is not always good
  – The datapath cannot be offloaded completely
● Visibility of the networking backend
  – Reinventing the wheel in the vhost-user protocol
● Divergence of the protocol between vhost-kernel and vhost-user
  – Workarounds; how to deal with a third vhost transport?
● Increased attack surface

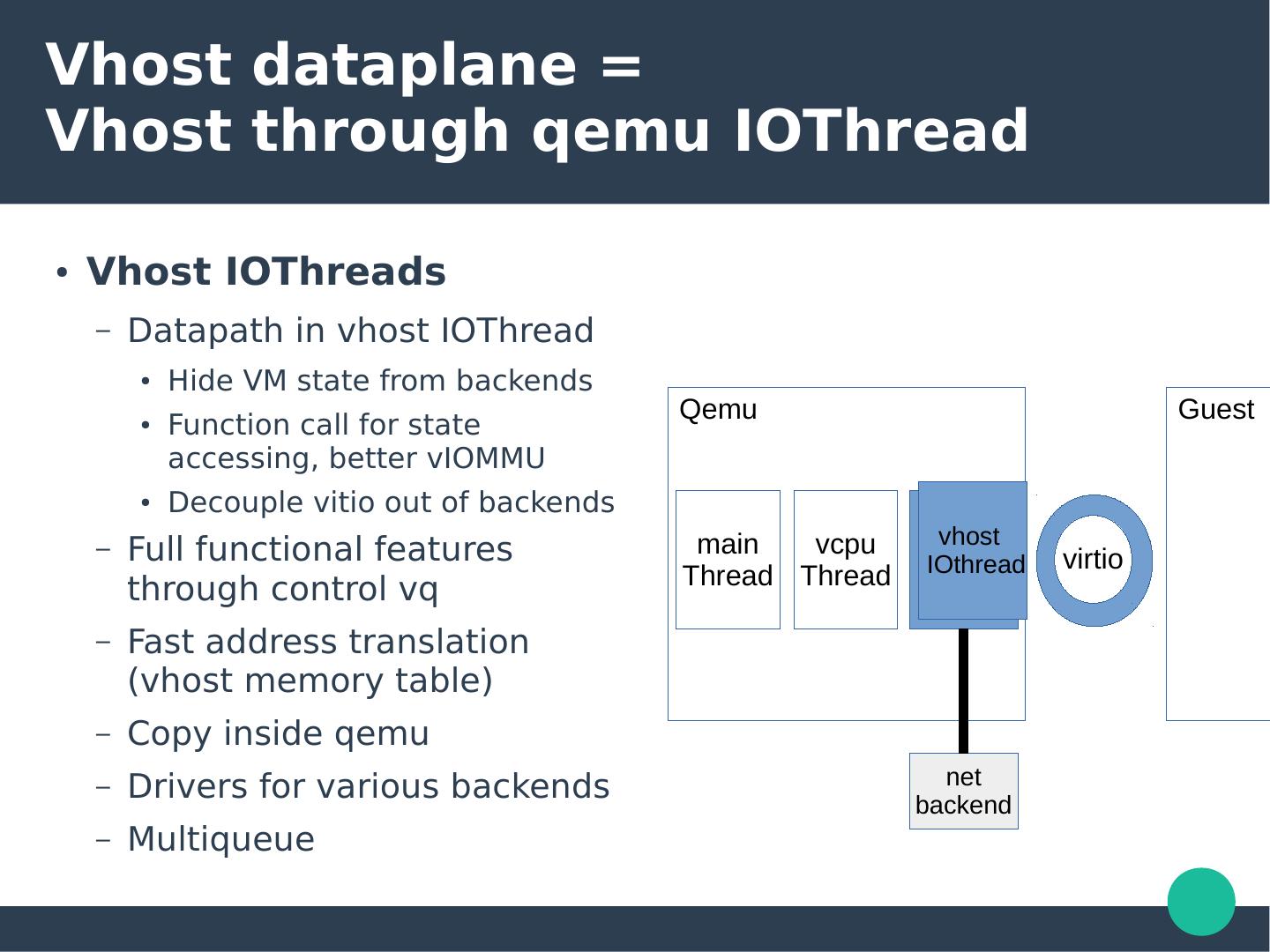

20. Vhost dataplane = vhost through a Qemu IOThread
● Vhost IOThreads
  – Datapath in the vhost IOThread
● Hide VM state from backends
● Function calls for state access; better vIOMMU support
● Decouple virtio from the backends
  – Fully functional features through the control vq
  – Fast address translation (vhost memory table)
  – Copy inside Qemu
  – Drivers for various backends
  – Multiqueue
[Diagram: inside Qemu, the main thread and VCPU threads run alongside vhost IOThreads; the guest's virtio device is served by a vhost IOThread that talks to the net backend.]
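Address translation through the vhost memory table is a lookup over a few (guest physical base, size, host virtual base) regions; in the vhost dataplane it is a plain function call inside Qemu. A sketch, assuming a linear scan over a small table; the struct reuses the field names of struct vhost_memory_region from the Linux uapi headers but is redefined here so it builds without them, and gpa_to_hva() is a hypothetical helper. The same table is what an external vhost-user backend receives, which is how it gets access to nearly all guest memory.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same fields as struct vhost_memory_region in the Linux uapi headers
 * (minus the padding), redefined here for a self-contained example. */
struct mem_region {
    uint64_t guest_phys_addr;
    uint64_t memory_size;
    uint64_t userspace_addr;
};

/* Hypothetical helper: translate the guest physical range [gpa, gpa + len)
 * to a host virtual address. Returns 0 unless the whole range lies inside
 * one region; the subtraction-based check cannot overflow, so hostile
 * guest-supplied addresses near UINT64_MAX are rejected too. */
static uint64_t gpa_to_hva(const struct mem_region *regions, size_t n,
                           uint64_t gpa, uint64_t len)
{
    for (size_t i = 0; i < n; i++) {
        const struct mem_region *r = &regions[i];
        if (gpa < r->guest_phys_addr)
            continue;
        uint64_t off = gpa - r->guest_phys_addr;
        if (off >= r->memory_size || len > r->memory_size - off)
            continue;
        return r->userspace_addr + off;
    }
    return 0;
}
```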

21. Vhost dataplane
[Diagram: inside Qemu, the vhost dataplane layer provides IOThreads, virtqueue manipulation helpers and vhost protocol helpers; a vhost dataplane API connects it to drivers such as AF_XDP/AF_PACKET, vhost_net/TAP, netmap, dpdk, shared memory, mdev/zerocopy, virtio-user, ...]
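The vhost dataplane API between the virtio side and the drivers could take the form of an operations table, so that each driver implements only TX/RX and never sees virtqueues. Everything below is hypothetical: the deck does not show the API, and vhost_dp_driver_ops and the toy loopback driver are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical driver interface: the virtio side (IOThreads + virtqueue
 * helpers) calls only these callbacks, so a TAP, AF_XDP or dpdk driver
 * needs no knowledge of virtio. None of these names exist in Qemu. */
struct vhost_dp_driver_ops {
    const char *name;
    /* Transmit one packet; returns bytes sent, or -1 on failure. */
    int (*tx)(void *opaque, const void *buf, size_t len);
    /* Receive one packet into buf; returns bytes received, or 0 if
     * nothing is pending or the packet does not fit in cap bytes. */
    int (*rx)(void *opaque, void *buf, size_t cap);
};

/* Toy loopback "driver" that holds at most one packet. */
struct loopback {
    char pkt[2048];
    size_t len;
};

static int loopback_tx(void *opaque, const void *buf, size_t len)
{
    struct loopback *lb = opaque;
    if (len > sizeof(lb->pkt) || lb->len != 0)
        return -1; /* too large, or previous packet not yet received */
    memcpy(lb->pkt, buf, len);
    lb->len = len;
    return (int)len;
}

static int loopback_rx(void *opaque, void *buf, size_t cap)
{
    struct loopback *lb = opaque;
    size_t n = lb->len;
    if (n == 0 || n > cap)
        return 0;
    memcpy(buf, lb->pkt, n);
    lb->len = 0;
    return (int)n;
}

static const struct vhost_dp_driver_ops loopback_ops = {
    .name = "loopback",
    .tx = loopback_tx,
    .rx = loopback_rx,
};
```

Under this split, supporting another backend would mean filling in another ops table, with virtqueue handling left to the shared helpers.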

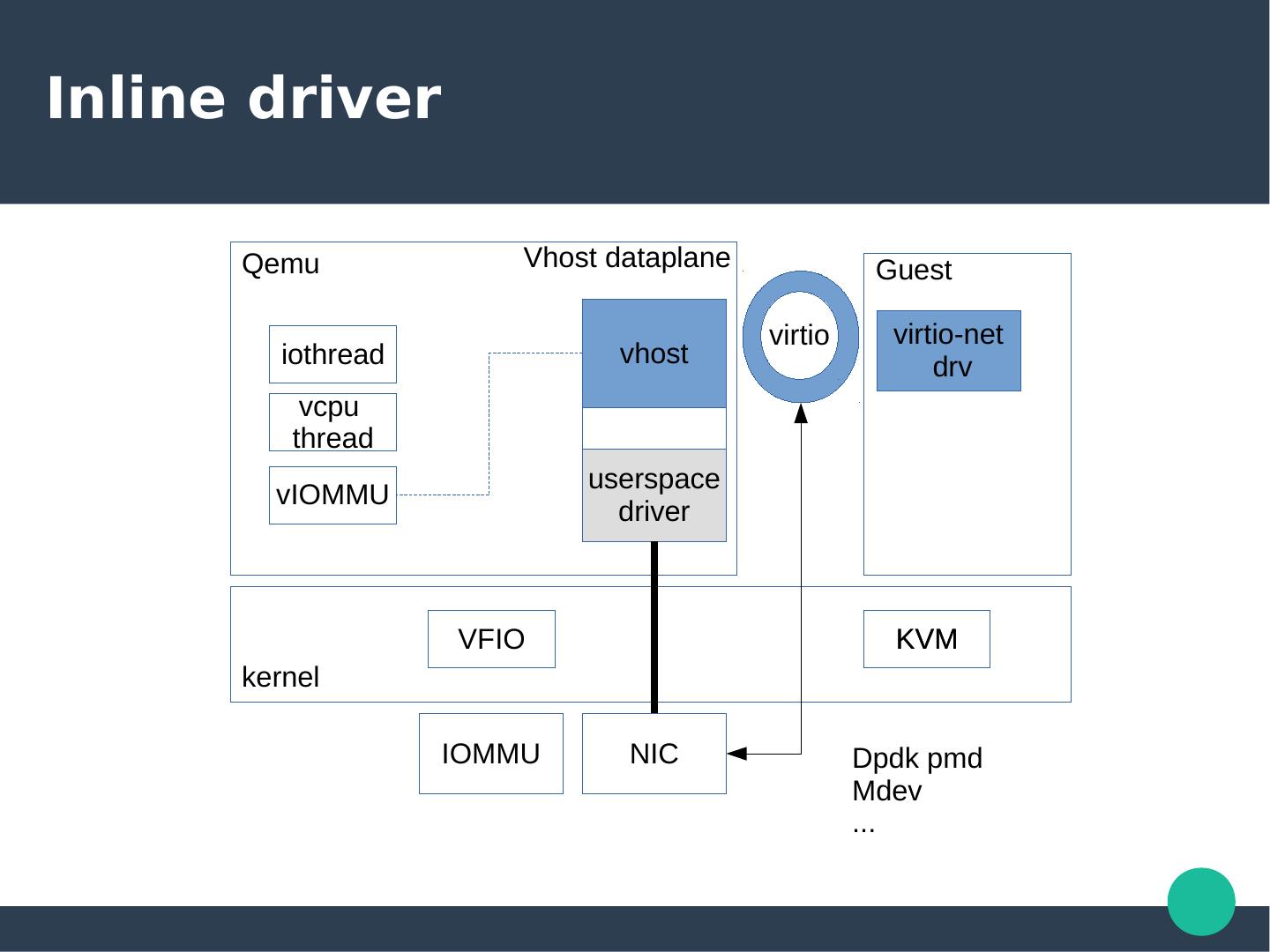

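22. Inline driver
[Diagram: the guest's virtio-net device is served by a vhost dataplane IOThread inside Qemu, next to the VCPU threads and the vIOMMU; the IOThread's driver (e.g. a dpdk PMD or an mdev) runs inline in Qemu and reaches the NIC through VFIO and the host IOMMU, with KVM in the kernel.]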

23. Multi-process cooperation
[Diagram: Qemu's vhost dataplane IOThread serves the guest's virtio-net and exchanges packets with OVS-dpdk through an rte_ring and a shared mempool; OVS-dpdk attaches the ring through a ring PMD as one of its ports.]
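Multi-process cooperation hinges on a lock-free ring shared between Qemu and OVS-dpdk. A minimal single-producer/single-consumer ring with C11 atomics illustrates the idea; it is not DPDK's rte_ring API, and it stores raw pointers in ordinary memory, whereas a real setup would place the ring in shared memory and pass mempool buffer references.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define RING_SIZE 8 /* must be a power of two */

/* Single-producer/single-consumer ring of pointers. head and tail are
 * free-running counters; unsigned wraparound keeps head - tail correct. */
struct spsc_ring {
    _Atomic size_t head; /* next slot to fill, written only by the producer */
    _Atomic size_t tail; /* next slot to drain, written only by the consumer */
    void *slots[RING_SIZE];
};

static bool ring_enqueue(struct spsc_ring *r, void *obj)
{
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_SIZE)
        return false; /* full */
    r->slots[head & (RING_SIZE - 1)] = obj;
    /* release: the slot write becomes visible before the new head */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

static bool ring_dequeue(struct spsc_ring *r, void **obj)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail)
        return false; /* empty */
    *obj = r->slots[tail & (RING_SIZE - 1)];
    /* release: hand the slot back to the producer */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

Restricting the ring to one producer and one consumer (for example one IOThread feeding one OVS-dpdk port) avoids compare-and-swap entirely; rte_ring also offers multi-producer and multi-consumer modes.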

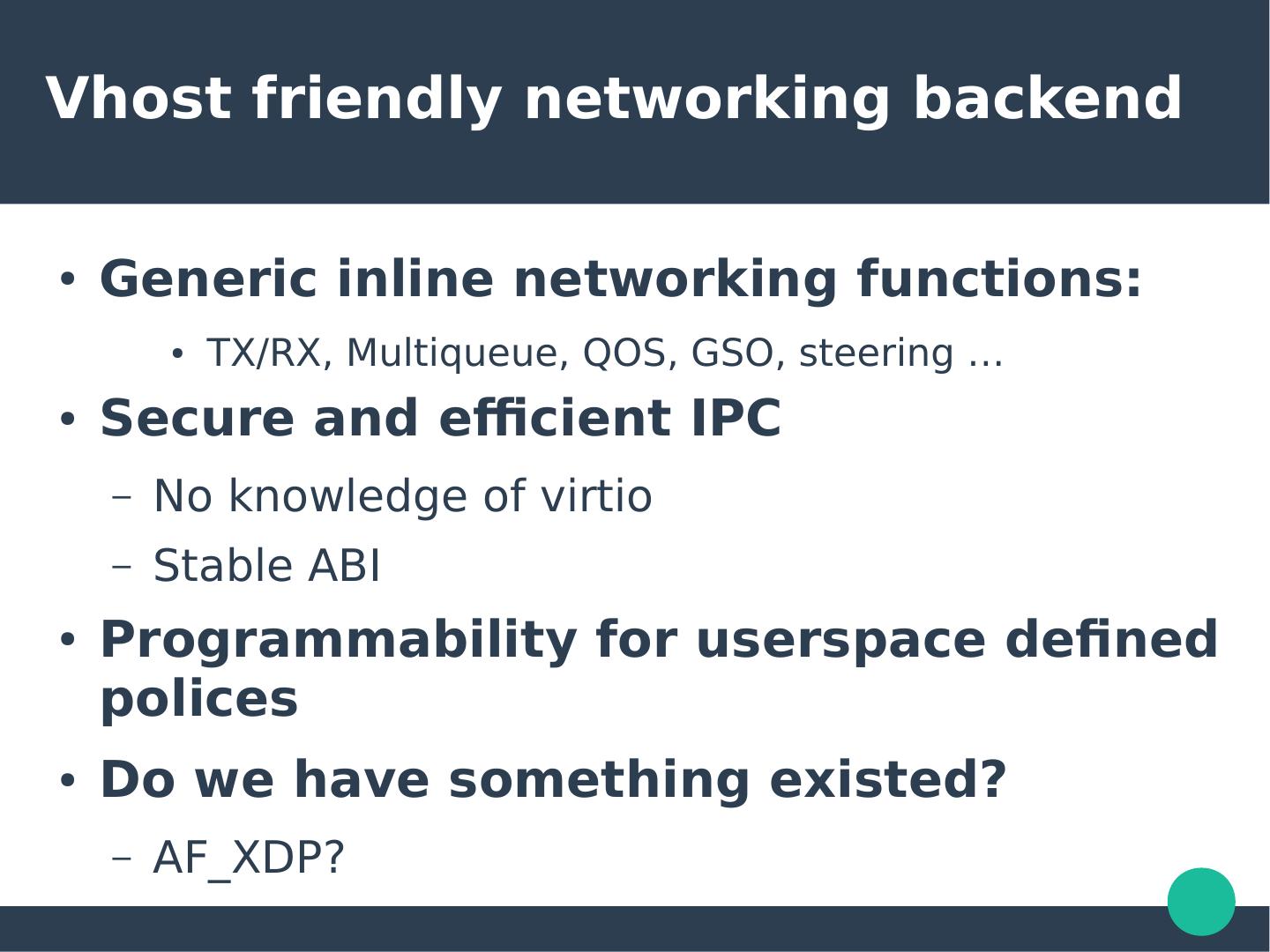

24. Vhost-friendly networking backend
● Generic inline networking functions:
  ● TX/RX, multiqueue, QoS, GSO, steering, ...
● Secure and efficient IPC
  – No knowledge of virtio
  – Stable ABI
● Programmability for userspace-defined policies
● Does something like this already exist?
  – AF_XDP?

25. External vs vhost-dataplane

| | Remote (external) dataplane | vhost-dataplane |
|---|---|---|
| VM metadata access | Slow, inter-process communication | Fast, function call |
| New feature development | Hard, new types of IPC | Easy, limited to Qemu (or programmability from the backend) |
| Compatibility | Complex, extra work in the backend | Easy, limited to Qemu |
| New backend integration | Hard, need to know all about virtio | Easy, no need to know virtio |
| Attack surface | Increased | Limited to Qemu |
| Backend visibility | May be transparent | Visible |

26. Virtio-net = virtio + networking
● Vhost dataplane
  – Virtio functions in the vhost IOThread
  – Networking functions in the backend
● Limitations
  – More cores needed for multi-process cooperation
  – The ideal networking backend does not exist in the real world
    ● Invent one?
  – ...

27. Status & TODO
● Status
  – Prototype
    ● Basic IOThreads / virtqueue helpers
    ● TAP driver
  – -device virtio-net-pci,netdev=vd0 -netdev vhost-dp,id=vd0,driver=tap-driver0 -object vhost-dp-tap,id=tap-driver0
  – RFC to be sent in the next few months
● TODO
  – Dpdk static linking
  – vIOMMU, multiqueue
  – Benchmarking

28. Thanks