Globalizing Player Accounts with MySQL at Riot Games

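The Player Accounts team at Riot Games needed to consolidate its player account infrastructure and provide a single, global account system for the League of Legends player base. To do so, the team migrated hundreds of millions of player accounts into a consolidated, globally replicated composite database cluster in AWS. This provides higher fault tolerance and lower-latency access to account data. In this talk, we discuss the effort to migrate eight disparate database clusters into AWS as a single composite MySQL database cluster that is replicated across four AWS regions, provisioned with Terraform, and managed and operated with Ansible.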

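This talk briefly covers the evolution of the player account service from legacy, isolated datacenter deployments to a globally replicated database cluster fronted by our account service, and outlines some of the growing pains and lessons learned that led us to where we are today.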

1 .Globalizing Player Accounts with MySQL at Riot Games Tyler Turk Riot Games, Inc.

2 .About Me
- Senior Infrastructure Engineer
- Player Platform at Riot Games
- Father of three
- Similar talk at re:Invent last year

3 .Accounts Team
- Responsible for account data
- Provides account management
- Ensures players can login
- Aims to mitigate account compromises

4 .Overview The old and the new

5 .League’s growth and shard deployment
- Launched in 2009
- Experienced rapid growth
- Deployed multiple game shards
- Each shard used their own MySQL DBs

6 .Some context
- Hundreds of millions of players worldwide
- Localized primary / secondary replication
- Data federated with each shard
- Account transfers were difficult

7 .Why MySQL?
- Widely used & adopted at Riot
- Used extensively by Tencent
- Ensures ACID compliance

8 .Catalysts for globalization
- General Data Protection Regulation
- Decoupling from game platform
- Single source of truth for accounts

9 .Globalization of Player Accounts Migrating from 10 isolated databases to a single globally replicated database

10 .Data deployment considerations
- Globally replicated, multi-master
- Globally replicated, single master
- Federated or sharded data
- To cache or not to cache

11 .Global database expectations
- Highly available
- Geographically distributed
- < 1 sec latency replication
- < 20ms read latency
- Enables a better player experience

12 .Continuent Tungsten
- Third-party vendor
- Provides cluster orchestration
- Manages data replication
- MySQL connector proxy

13 .Why Continuent Tungsten?
- Prior issues with Aurora
- RDS was not multi-region
- Preferred asynchronous replication
- Automated cluster management

14 .Explanation & tolerating failure

15 .Deployment
- Terraform & Ansible (docker initially)
- 4 AWS regions
- r4.8xlarge (10Gbps network)
- 5TB GP2 EBS for data
- 15TB for logs / backups

16 .Migrating the data
- Multi-step migration of data
- Consolidated data into 1 DB
- Multiple rows for a single account

17 .Load testing

18 .Chaos testing

19 .Monitoring

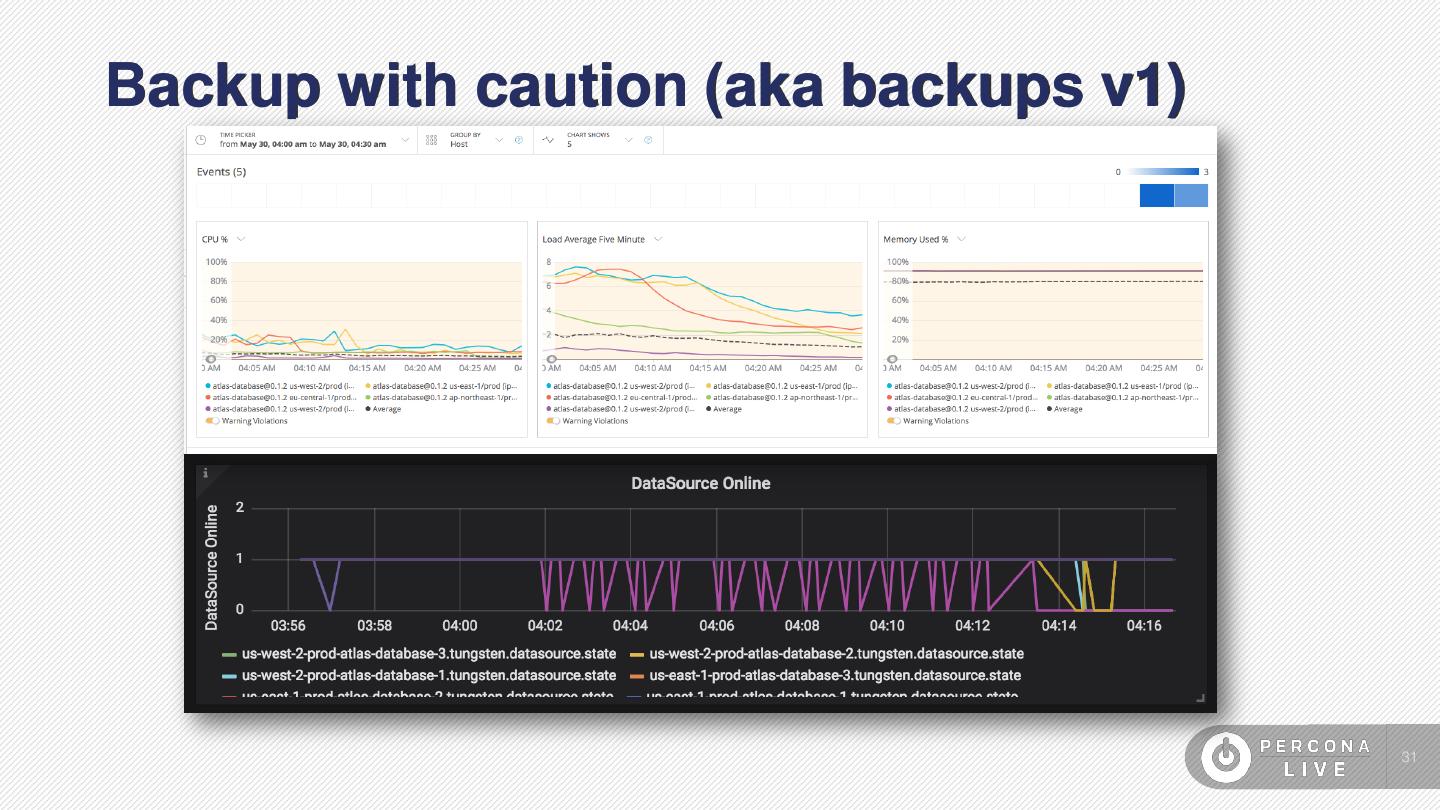

20 .Performing backups
- Leverage standalone replicator
- Backup with xtrabackup
- Compress and upload to S3
- Optional delay on replicator
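The backup flow on this slide (xtrabackup, compress, upload to S3) could be sketched as a single streaming pipeline. This is only an illustration, not the team's actual tooling: the bucket name, host name, key layout, temporary directory, and the choice of `gzip` as the compressor are all assumptions. The function only builds the command string, so it can be inspected before anything is run on the standalone replicator host.

```python
import shlex
from datetime import datetime


def backup_pipeline(bucket: str, host: str, now: datetime) -> str:
    """Build a shell pipeline that streams a compressed backup to S3.

    The bucket, host, and key layout are hypothetical placeholders.
    """
    # Hypothetical key layout: one object per host per backup timestamp.
    key = f"mysql-backups/{host}/{now:%Y-%m-%dT%H%M%SZ}.xbstream.gz"
    stages = [
        # Stream the backup to stdout in xbstream format.
        ["xtrabackup", "--backup", "--stream=xbstream",
         "--target-dir=/tmp/xtrabackup"],
        # Compress the stream (gzip is an assumption; the slide names no tool).
        ["gzip", "-c"],
        # "-" tells the AWS CLI to read the object body from stdin.
        ["aws", "s3", "cp", "-", f"s3://{bucket}/{key}"],
    ]
    return " | ".join(shlex.join(stage) for stage in stages)
```

Streaming avoids staging a full uncompressed copy on local disk, which matters at the multi-terabyte data sizes mentioned in the deployment slide.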

21 .Performing maintenance
- Cluster policies
- Offline and shun nodes
- Perform cluster switch

22 .Performing schema changes
Schema MUST be backwards compatible

The Process
- Offline node
- Wait for connections to drain
- Stop replicator
- Perform schema change
- Start replicator
- Wait for replication
- Online node

Order of operations for schema change:
1. Replicas in non-primary region
2. Cluster switch on relay
3. Perform change on former relay
4. Repeat steps 1-3 on all non-primary regions
5. Replicas in primary region
6. Cluster switch on write primary
7. Perform change on former write
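To make the ordering concrete, here is a minimal sketch that expands the per-node process and the order of operations into a flat, ordered plan. The topology format, region names, and node names are hypothetical, and the steps are plain descriptive strings rather than real cluster-management commands.

```python
from typing import Dict, List

# Per-node process from the slide, applied to every node in turn.
NODE_STEPS = [
    "offline node",
    "wait for connections to drain",
    "stop replicator",
    "perform schema change",
    "start replicator",
    "wait for replication",
    "online node",
]


def schema_change_plan(primary_region: str,
                       clusters: Dict[str, dict]) -> List[str]:
    """Return the ordered steps for a rolling schema change.

    clusters maps region -> {"lead": node, "replicas": [nodes]}, where
    "lead" is the relay (or the write primary in the primary region).
    """
    plan: List[str] = []

    def change(node: str) -> None:
        plan.extend(f"{node}: {step}" for step in NODE_STEPS)

    # Steps 1-4: non-primary regions first, replicas before the relay.
    for region, cluster in clusters.items():
        if region == primary_region:
            continue
        for replica in cluster["replicas"]:
            change(replica)
        plan.append(f"{region}: cluster switch on relay {cluster['lead']}")
        change(cluster["lead"])  # now the former relay

    # Steps 5-7: primary region last, write primary at the very end.
    primary = clusters[primary_region]
    for replica in primary["replicas"]:
        change(replica)
    plan.append(
        f"{primary_region}: cluster switch on write primary {primary['lead']}")
    change(primary["lead"])  # now the former write primary
    return plan
```

Because every node is changed while it is offline and the write primary goes last, nodes on the old and new schema coexist for the duration of the rollout, which is why the slide insists the schema be backwards compatible.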

23 .De-dockering
- Fully automated the process
- One server at a time
- Performed live
- Near zero downtime

24 .Current state
- Database deployed on host
- No docker for database / sidecars
- Accounts are distilled to a single row
- Servicing all game shards

25 .Lessons Learned Avoiding the same mistakes we made

26 .Databases in docker
- Partially immutable infrastructure
- Configuration divergence possible
- Upgrades required container restarts
- Pain in automating deploys

27 .Large data imports
- Consider removing indexes
- Perform daily delta syncs
- Migrate in chunks if possible
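One way to "migrate in chunks" is to walk the source table by primary-key range, so each batch is bounded and a failed batch can be retried on its own. The sketch below assumes an integer primary key; the slides do not describe the actual account schema, so the function name and parameters are illustrative only.

```python
from typing import Iterator, Tuple


def id_chunks(min_id: int, max_id: int,
              chunk_size: int) -> Iterator[Tuple[int, int]]:
    """Yield inclusive (start, end) key ranges covering [min_id, max_id]."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    start = min_id
    while start <= max_id:
        # The last chunk is clamped so it never runs past max_id.
        end = min(start + chunk_size - 1, max_id)
        yield start, end
        start = end + 1
```

Each range can then drive a bounded copy such as `WHERE id BETWEEN start AND end`, and recording the last completed range gives a natural resume point for the daily delta syncs.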

28 .Think about data needs
- Synchronous vs asynchronous
- Read heavy vs write heavy

29 .Impacts of replication latency
- Replication can take >1 second
- Impacts strongly consistent expectations
- Immediate read-backs can fail
- Think about “eventual” consistency
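A common way for a client to cope with failed read-backs is to retry the replica read with backoff and fall back to the write primary only if the row still has not arrived. The talk does not say how Riot's services handle this; the sketch below is one possible pattern, with hypothetical `read_replica` and `read_primary` callables that return `None` when the row is not visible yet.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def read_after_write(read_replica: Callable[[], Optional[T]],
                     read_primary: Callable[[], Optional[T]],
                     attempts: int = 3,
                     delay_s: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> Optional[T]:
    """Read a freshly written row, tolerating replication lag."""
    for attempt in range(attempts):
        row = read_replica()
        if row is not None:
            return row
        # Back off exponentially between replica reads, but not after the last.
        if attempt < attempts - 1:
            sleep(delay_s * (2 ** attempt))
    # Replica never caught up in time: pay the cross-region cost once.
    return read_primary()
```

With the defaults, the replica gets about 1.5 seconds to catch up before the primary is consulted, which matches the observation that replication can take more than a second.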