OpenVINO AI Application Development in Practice

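This talk covers hands-on AI application development with the Intel Distribution of OpenVINO toolkit. By demonstrating and introducing this AI application development kit, it shows users how to quickly deploy existing deep neural networks on a range of Intel hardware platforms and improve inference performance. Main topics: an introduction to OpenVINO, using the Model Optimizer (MO), developing AI applications with the Inference Engine (IE), and neural network quantization for better inference performance.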

1. Intel AI Practice Day. Intel® Distribution of OpenVINO™ toolkit: product and feature overview. Accelerating deep learning and computer vision development

2. AI is changing every market
- Emergency response: real-time emergency and crime response
- Energy: maximize production and uptime
- Education: transform the learning experience
- Cities: enhance safety, research, and more
- Finance: turn data into valuable intelligence
- Health: revolutionize patient outcomes
- Industrial: empower truly intelligent Industry 4.0
- Media: create thrilling experiences
- Retail: transform stores and inventory
- Smart homes: enable homes that see, hear, and respond
- Telecom: drive network and operational efficiency
- Smart cities: efficient and robust traffic systems

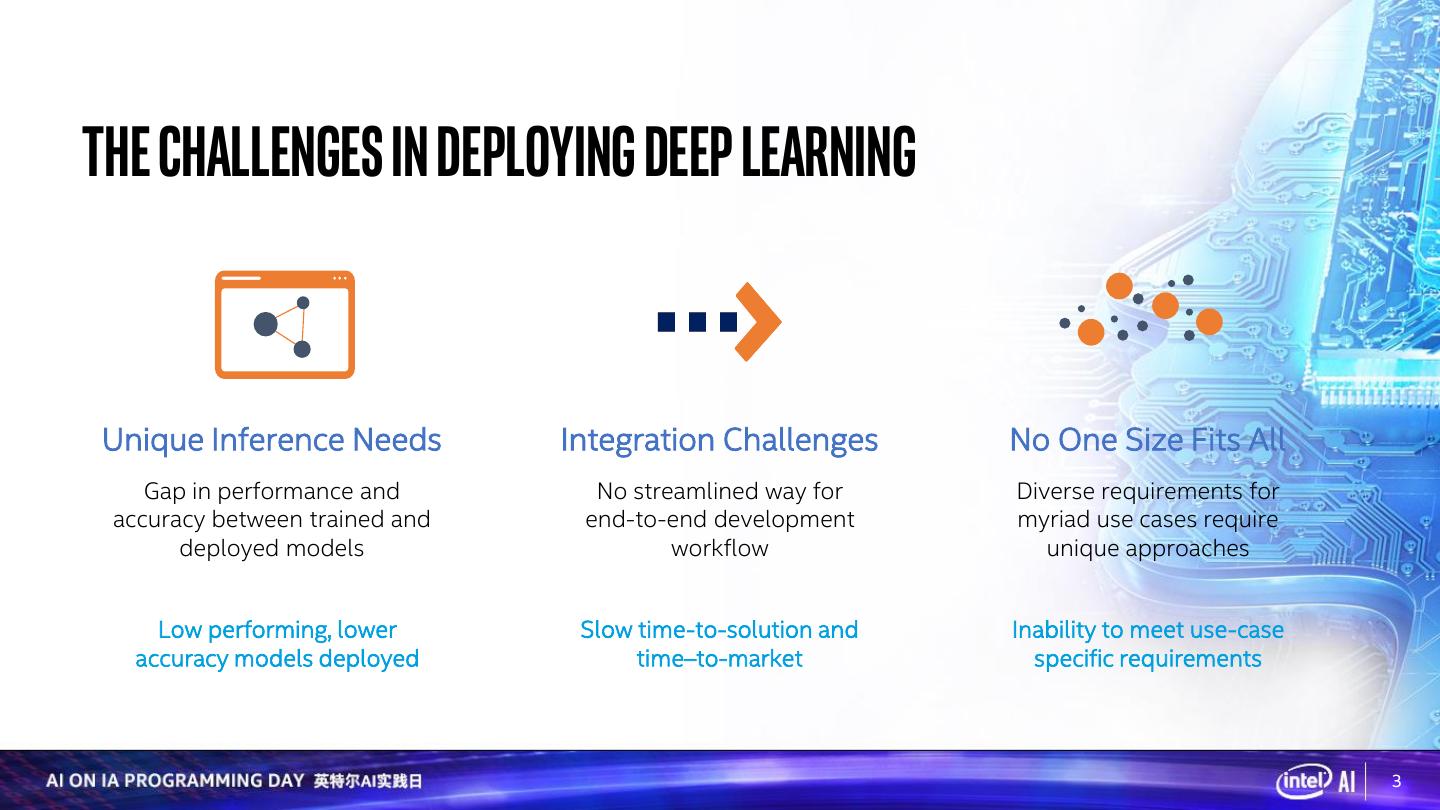

3. The challenges in deploying deep learning
- Unique inference needs: a gap in performance and accuracy between trained and deployed models leads to low-performing, lower-accuracy models in deployment
- Integration challenges: no streamlined end-to-end development workflow leads to slow time-to-solution and time-to-market
- No one size fits all: diverse requirements across myriad use cases require unique approaches; without them, use-case-specific requirements cannot be met

4. Intel® Distribution of OpenVINO™ toolkit
- A tool suite for high-performance deep learning inference
- Faster, more accurate real-world results using high-performance AI and computer vision inference, deployed into production across Intel® architecture from edge to cloud
- Key benefits: high-performance deep learning inference; streamlined development and ease of use; write once, deploy anywhere

5. The compounding effect of both hardware and software: improvements mean exponential performance
[Chart: baseline performance and additional software performance, rising from 1x to 2.1x and 25.6x across Release 2018 R1, Release 2019 R1 and Release 2019 R3, on 1st and 2nd Generation Intel® Xeon Scalable processors]

6. Build, optimize, deploy

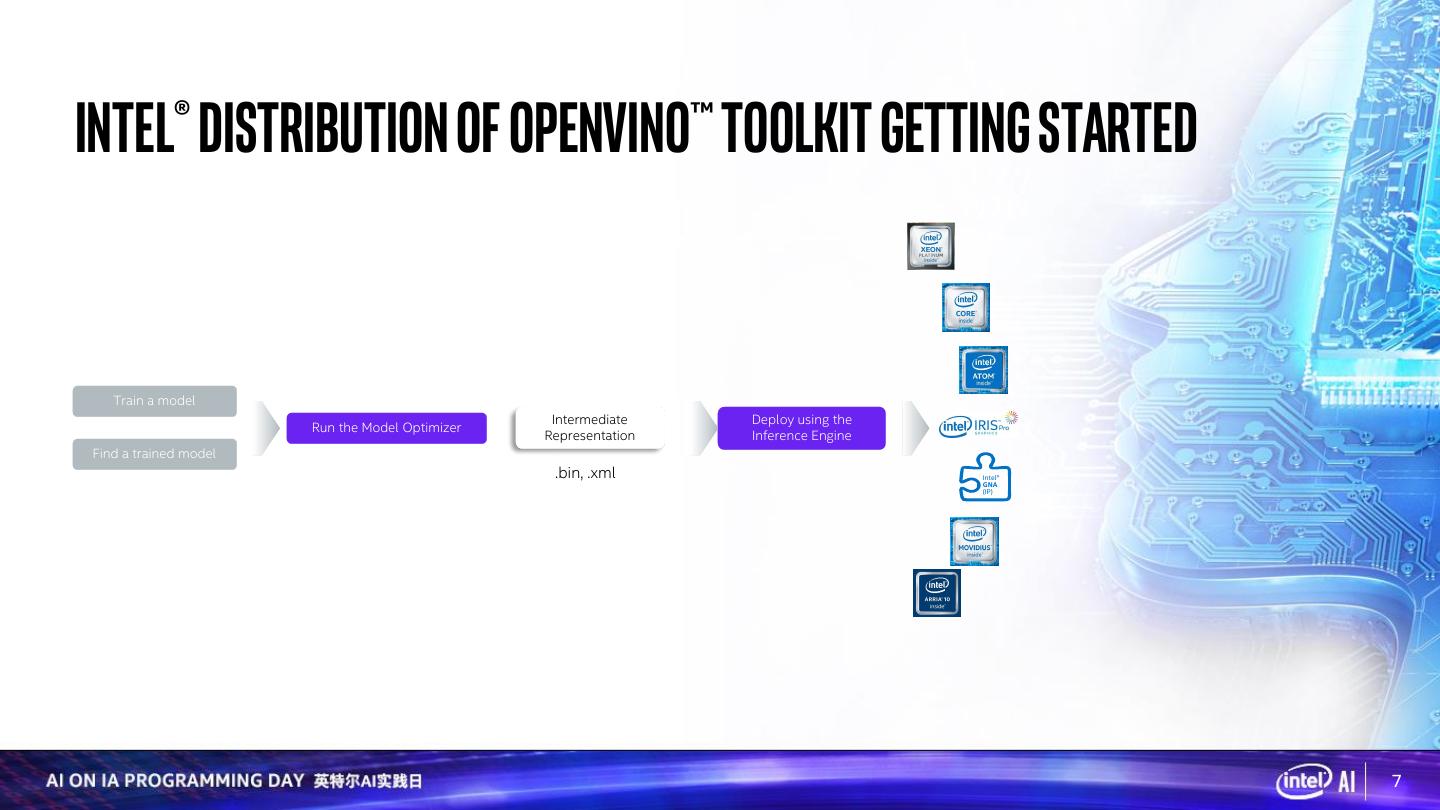

7. Intel® Distribution of OpenVINO™ toolkit: getting started
- Train a model, or find a trained model
- Run the Model Optimizer to produce the Intermediate Representation (.xml and .bin files)
- Deploy using the Inference Engine
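The Intermediate Representation is a pair of files sharing one base name: `.xml` for the topology and `.bin` for the weights. A minimal Python sketch, assuming that naming convention, of resolving both halves before handing them to the Inference Engine; `ir_pair` and `check_ir` are hypothetical helpers, not part of the toolkit:

```python
from __future__ import annotations

import os


def ir_pair(xml_path: str) -> tuple[str, str]:
    """Return (topology, weights) paths, assuming the .bin sits next to the .xml."""
    base, ext = os.path.splitext(xml_path)
    if ext.lower() != ".xml":
        raise ValueError(f"expected an .xml IR file, got {xml_path!r}")
    return xml_path, base + ".bin"


def check_ir(xml_path: str) -> tuple[str, str]:
    """Resolve the IR pair and fail early if either file is missing."""
    xml, weights = ir_pair(xml_path)
    for path in (xml, weights):
        if not os.path.isfile(path):
            raise FileNotFoundError(path)
    return xml, weights
```

The two returned paths map onto the `model=` and `weights=` arguments of `IECore.read_network` in the 2020/2021 Python API.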

8. Breadth of supported frameworks maximizes development
- Trained models from the supported frameworks (and from other tools via ONNX* conversion)
- Supported frameworks and formats: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_Introduction.html#SupportedFW
- Configure the Model Optimizer for your framework: https://docs.openvinotoolkit.org/latest/_docs_MO_DG_prepare_model_Config_Model_Optimizer.html
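The Model Optimizer is driven from the command line (`mo.py` in the 2019/2020 releases) with `--input_model`; it can usually infer the source framework from the file extension, and `--framework` makes the choice explicit. A sketch that builds such a command, assuming the extension table below for the frameworks supported at the time (Caffe, TensorFlow, MXNet, Kaldi, ONNX); `build_mo_command` is a hypothetical helper:

```python
from __future__ import annotations

import os

# Assumed mapping from model file extension to the Model Optimizer --framework value.
FRAMEWORK_BY_EXT = {
    ".caffemodel": "caffe",
    ".pb": "tf",
    ".params": "mxnet",
    ".nnet": "kaldi",
    ".onnx": "onnx",
}


def build_mo_command(input_model: str, output_dir: str, data_type: str = "FP32") -> list[str]:
    """Build an argv list for the Model Optimizer (run it with subprocess.run(cmd, check=True))."""
    ext = os.path.splitext(input_model)[1].lower()
    framework = FRAMEWORK_BY_EXT.get(ext)
    if framework is None:
        raise ValueError(f"unsupported model format: {input_model!r}")
    return [
        "python3", "mo.py",
        "--input_model", input_model,
        "--framework", framework,
        "--output_dir", output_dir,
        "--data_type", data_type,  # e.g. FP16 for VPU targets such as MYRIAD
    ]
```

Returning an argv list rather than a shell string avoids quoting problems when paths contain spaces.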

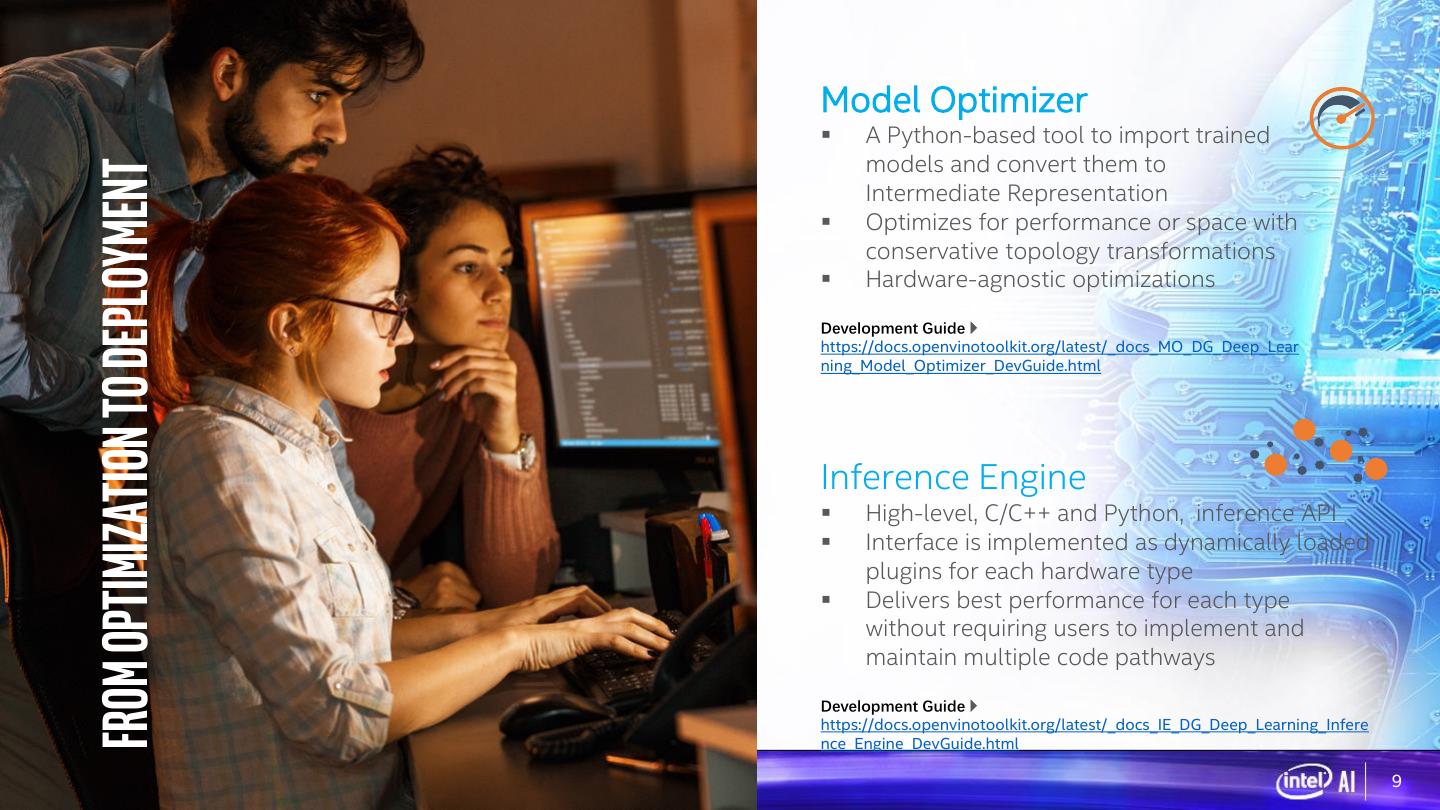

9. From optimization to deployment
- Model Optimizer: a Python-based tool to import trained models and convert them to Intermediate Representation. It optimizes for performance or space with conservative topology transformations, and its optimizations are hardware-agnostic. Development Guide: https://docs.openvinotoolkit.org/latest/_docs_MO_DG_Deep_Learning_Model_Optimizer_DevGuide.html
- Inference Engine: a high-level inference API for C/C++ and Python. The interface is implemented as dynamically loaded plugins for each hardware type, delivering the best performance for each type without requiring users to implement and maintain multiple code paths. Development Guide: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_Deep_Learning_Inference_Engine_DevGuide.html
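A typical Inference Engine flow in Python reads the IR, loads it onto a device plugin and runs a request. The sketch below assumes the 2021.x `openvino.inference_engine` API (`IECore.read_network`, `IECore.load_network`, `ExecutableNetwork.infer`) and a network with a single input expecting NCHW data; the OpenVINO import is deferred so the NumPy preprocessing helper works on its own:

```python
from __future__ import annotations

import numpy as np


def to_nchw(image_hwc: np.ndarray) -> np.ndarray:
    """Convert an HxWxC image into a 1xCxHxW float32 blob."""
    if image_hwc.ndim != 3:
        raise ValueError("expected an HxWxC array")
    return np.transpose(image_hwc, (2, 0, 1))[np.newaxis, ...].astype(np.float32)


def infer(xml_path: str, bin_path: str, image_hwc: np.ndarray, device: str = "CPU") -> dict:
    """Run one synchronous inference; assumes the OpenVINO 2021.x Python API is installed."""
    from openvino.inference_engine import IECore  # deferred: only needed for real inference

    ie = IECore()
    net = ie.read_network(model=xml_path, weights=bin_path)
    input_name = next(iter(net.input_info))  # assumes a single-input network
    exec_net = ie.load_network(network=net, device_name=device)
    # The image is assumed to be resized to the network's input height and width already.
    return exec_net.infer(inputs={input_name: to_nchw(image_hwc)})
```

Passing `"GPU"` or `"MYRIAD"` as `device` reuses the same code unchanged, which is what the per-device plugin design is for.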

10. Intel® Distribution of OpenVINO™ toolkit: write once, deploy anywhere
Layers, from top to bottom:
- Applications
- Inference Engine runtime: the Inference Engine (common API)
- Multi-device plugin (optional but recommended, for full system utilization)
- Plugin architecture, each plugin on its device interface: DNNL (mkl-dnn) plugin on intrinsics, clDNN plugin on OpenCL™, GNA plugin on the GNA API, Myriad & HDDL plugins on the Movidius API, FPGA plugin on DLA
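The multi-device and heterogeneous plugins are selected through the device name passed to `load_network`, e.g. `"MULTI:CPU,GPU"` or `"HETERO:FPGA,CPU"`, where the order sets device priority (MULTI) or fallback order (HETERO). A small sketch of composing those strings; the set of device names is an assumption based on the plugins on this slide, so check `IECore().available_devices` on the target machine:

```python
from __future__ import annotations

# Assumed plugin device names for the devices on this slide.
KNOWN_DEVICES = {"CPU", "GPU", "MYRIAD", "HDDL", "GNA", "FPGA"}


def _compose(prefix: str, devices: list[str]) -> str:
    if not devices:
        raise ValueError("at least one device is required")
    # Ignore index suffixes such as "GPU.0" when validating.
    unknown = [d for d in devices if d.split(".")[0] not in KNOWN_DEVICES]
    if unknown:
        raise ValueError(f"unknown devices: {unknown}")
    return f"{prefix}:{','.join(devices)}"


def multi_device(*devices: str) -> str:
    """MULTI spreads inference requests across devices, listed in priority order."""
    return _compose("MULTI", list(devices))


def hetero_device(*devices: str) -> str:
    """HETERO splits one network by layer, falling back to later devices for unsupported layers."""
    return _compose("HETERO", list(devices))
```

The result goes straight into `ie.load_network(network=net, device_name=multi_device("CPU", "GPU"))`.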

11. Write once, deploy anywhere: cross-platform flexibility on the Intel® Distribution of OpenVINO™ toolkit
- Write once, deploy across different platforms with the same API and framework-independent execution
- Consistent accuracy, performance and functionality across all target devices, with no re-training required
- [NEW] Full environment utilization through the multi-device plugin, across available hardware, for greater performance
- Edge to cloud
- Introduction: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_supported_plugins_HETERO.html

12. Tools to speed up test cycles and development
- Post-training Optimization [NEW]: reduces model size by converting to low-precision data types such as INT8, while also improving latency
- Deployment Manager: generates an optimal, minimized runtime package for deployment, with a smaller footprint than the development package
- Model Analyzer: provides theoretical data on models, including computational complexity (FLOPs), number of neurons and memory consumption
- Accuracy Checker: checks the accuracy of the model, both the original and after conversion to an IR file, using a known data set
- Benchmark App: measures the performance (throughput, latency) of a model, with metrics per layer and overall
- Model Downloader: provides an easy way of accessing a number of public models as well as a set of pre-trained Intel models
- Get started: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_Tools_Overview.html, or use the Deep Learning Workbench
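The idea behind reducing a model to INT8 can be illustrated with symmetric, per-tensor linear quantization: pick a scale from the largest magnitude, round each value to an 8-bit integer, and multiply back by the scale when needed. This is a generic textbook sketch, not the Post-training Optimization Tool's actual algorithm, which calibrates on a data set and supports more schemes:

```python
from __future__ import annotations


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization into the integer range [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric 8-bit range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the integers back to approximate real values."""
    return [x * scale for x in q]
```

Each weight shrinks from 4 bytes (FP32) to 1 byte, which is where the roughly 4x reduction in weight storage comes from; the reconstruction error per value is at most half a quantization step.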

13. Deep Learning Workbench
- Web-based UI extension tool of the Intel® Distribution of OpenVINO™ toolkit
- Visualizes performance data for topologies and layers to aid in model analysis
- Automates analysis for the optimal performance configuration (streams, batches, latency)
- Experiment with INT8 or Winograd calibration for optimal tuning
- Provides accuracy information through the Accuracy Checker
- Direct access to models from the public set of the Open Model Zoo
- Development Guide: https://docs.openvinotoolkit.org/latest/_docs_Workbench_DG_Introduction.html
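Searching over streams and batch sizes amounts to measuring each configuration and keeping the best one that meets a latency budget. A hypothetical sketch of that selection step; the `Measurement` record and `best_config` helper are illustrative and do not reflect how the Workbench is implemented:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    """One measured configuration (hypothetical record)."""
    streams: int
    batch: int
    fps: float
    latency_ms: float


def best_config(results: list[Measurement], max_latency_ms: float) -> Measurement | None:
    """Highest-throughput configuration whose latency stays within the budget, or None."""
    feasible = [r for r in results if r.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda r: r.fps, default=None)
```

Loosening the latency budget typically admits more streams and larger batches, trading latency for throughput.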

14. Speed up development using the Open Model Zoo
Open-source resources with pre-trained models, samples and demos
- Categories: computer vision; audio, speech, language; recommender; other (data generation, reinforcement learning)
- Use cases include object detection, object recognition, reidentification, semantic segmentation, instance segmentation, human pose estimation, image processing, text detection, text recognition, action recognition, image retrieval, compression models, and more
- Pre-trained models: https://github.com/opencv/open_model_zoo
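Open Model Zoo models are fetched with its Model Downloader script (`downloader.py`, with `--name`, `--output_dir` and `--precisions`). A sketch that builds the command and predicts where an Intel pre-trained IR lands, assuming the downloader's `intel/<name>/<precision>/` output layout; `face-detection-adas-0001` is only an example name and both helpers are hypothetical:

```python
from __future__ import annotations

import os


def downloader_command(name: str, output_dir: str, precision: str = "FP16") -> list[str]:
    """Argv list for the Open Model Zoo downloader, fetching one model at one precision."""
    return ["python3", "downloader.py", "--name", name,
            "--output_dir", output_dir, "--precisions", precision]


def expected_ir_path(name: str, output_dir: str, precision: str = "FP16") -> str:
    # Assumed layout for Intel pre-trained models: <output_dir>/intel/<name>/<precision>/<name>.xml
    return os.path.join(output_dir, "intel", name, precision, name + ".xml")
```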

15. Speed up development using the Open Model Zoo
Open-source resources with pre-trained models, demos, and tools. The Open Model Zoo demo applications are console applications that demonstrate how to use the pre-trained models in your applications to solve specific use cases.
- Smart Classroom: recognition and action detection demo for classroom settings
- Super Resolution: enhances the resolution of the input image
- Action Recognition: classifies actions being performed on input video
- Multi-Camera, Multi-Person Tracking: tracks multiple people on multiple cameras for public safety use cases
- Gaze Estimation: face detection followed by gaze estimation, head pose estimation and facial landmarks regression
- And more
- Demo applications: https://github.com/opencv/open_model_zoo
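Several of these demos start with a face detector. SSD-based Open Model Zoo detectors end in a `DetectionOutput` layer whose `[1, 1, N, 7]` output holds rows of `[image_id, label, confidence, x_min, y_min, x_max, y_max]` with normalized coordinates, and a row with `image_id == -1` marks the end of valid detections. A sketch of turning those rows into pixel boxes; the 0.5 threshold is an arbitrary default:

```python
from __future__ import annotations


def _px(value: float, size: int) -> int:
    """Scale a normalized coordinate to whole pixels."""
    return int(round(float(value) * size))


def parse_detections(rows, width: int, height: int, threshold: float = 0.5) -> list[tuple]:
    """Turn SSD-style rows into (label, confidence, x_min, y_min, x_max, y_max) pixel boxes."""
    boxes = []
    for image_id, label, conf, x0, y0, x1, y1 in rows:
        if image_id < 0:  # image_id == -1 marks the end of valid detections
            break
        if conf < threshold:  # drop low-confidence detections
            continue
        boxes.append((int(label), float(conf),
                      _px(x0, width), _px(y0, height),
                      _px(x1, width), _px(y1, height)))
    return boxes
```

With an Inference Engine result, `rows` is the N x 7 slice taken from the last two dimensions of the output blob.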

16. Traditional computer vision, powered by the Intel® Distribution of OpenVINO™ toolkit
Accelerate and optimize low-level image-processing capabilities using OpenCV:
- Open-source computer vision and machine learning library
- 2500+ algorithms providing a common infrastructure and accelerating time-to-market
- A large number of primitives for customizability
- https://opencv.org/

17. Performance benchmarks with the latest release: online documentation of performance benchmarks at docs.openvinotoolkit.org
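The Benchmark App reports throughput and latency for a model. The arithmetic behind those two numbers can be sketched for the simple synchronous case: time each request, report the median latency, and divide the request count by the total wall time. `fake_infer` is a hypothetical stand-in for a real inference call, and the real tool also drives asynchronous requests and multiple streams, which this sketch does not:

```python
from __future__ import annotations

import statistics
import time
from typing import Callable


def measure(run_once: Callable[[], object], iterations: int = 100) -> tuple[float, float]:
    """Return (median latency in ms, throughput in inferences per second) for synchronous calls."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    latencies_ms = []
    start = time.perf_counter()
    for _ in range(iterations):
        t0 = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    total_s = time.perf_counter() - start
    return statistics.median(latencies_ms), iterations / total_s


def fake_infer() -> None:
    """Hypothetical stand-in for a real inference call; sleeps for about 2 ms."""
    time.sleep(0.002)
```

In the synchronous case throughput is roughly the inverse of latency; asynchronous requests and multiple streams are what let throughput grow beyond that.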

18. Process of OpenVINO™ toolkit: recommendations to the customer or developer
Stages: qualify, installation, prepare, hands on, support
- Use a trained model and check if the framework is supported, or take advantage of a pre-trained model from the Open Model Zoo
- Download the Intel® OpenVINO™ toolkit package from the Intel® Developer Zone, or through the YUM or APT repositories
- Utilize the Getting Started Guide
- Understand the sample demos and tools included
- Utilize prebuilt Reference Implementations to become familiar with capabilities
- Choose a hardware option with the Performance Benchmarks
- Build, test and remotely run workloads on the Intel® DevCloud for the Edge before buying hardware
- Visualize metrics with the Deep Learning Workbench
- Understand performance
- Optimize workloads with these performance best practices
- Use the Deployment Manager to minimize the deployment package
- Ask questions and share information with others through the Community Forum
- Engage using #OpenVINO on Stack Overflow
- Visit the documentation site for guides, how-to's, and resources
- Attend training and get certified

19. Intel AI Practice Day: Thank you