- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 视频嵌入链接 文档嵌入链接

- <iframe src="https://www.slidestalk.com/Spark/ManagingtheCompleteMachineLearningLifecyclewithMLflow?embed&video" frame border="0" width="640" height="360" scrolling="no" allowfullscreen="true">复制

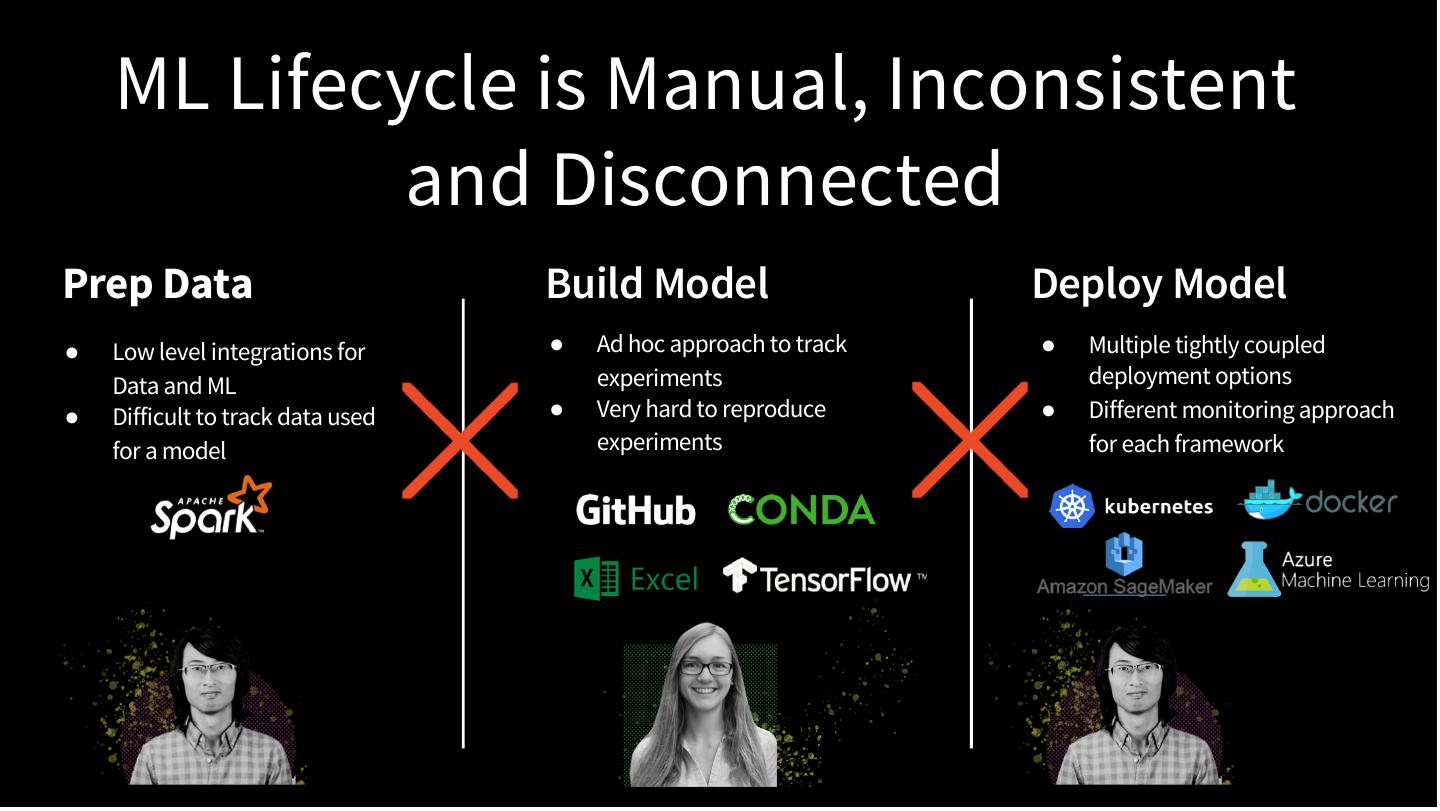

- 微信扫一扫分享

Managing the Complete Machine Learning Lifecycle with MLflow

ML development brings many new complexities beyond the traditional software development lifecycle. Unlike in traditional software development, ML developers want to try multiple algorithms, tools and parameters to get the best results, and they need to track this information to reproduce work. In addition, developers need to use many distinct systems to productionize models.

To address these challenges, last year Databricks unveiled MLflow, an open source project that aims to simplify the entire ML lifecycle. MLflow introduces simple abstractions to package reproducible projects, track results, and encapsulate models that can be used with many existing tools, accelerating the ML lifecycle for organizations of any size.

In the past year, the MLflow community has grown quickly: over 120 contributors from over 40 companies have contributed code to the project, and over 200 companies are using MLflow.

In this tutorial, we will show you how using MLflow can help you:

Keep track of experiment runs and results across frameworks.

Execute projects remotely on a Databricks cluster, and quickly reproduce your runs.

Quickly productionize models using Databricks production jobs, Docker containers, Azure ML, or Amazon SageMaker.

We will demo the building blocks of MLflow as well as the most recent additions since the 1.0 release.

What you will learn:

Understand the three main components of open source MLflow (MLflow Tracking, MLflow Projects, MLflow Models) and how each helps address challenges of the ML lifecycle.

How to use MLflow Tracking to record and query experiments: code, data, config, and results.

How to use the MLflow Projects packaging format to reproduce runs on any platform.

How to use the MLflow Models general format to send models to diverse deployment tools.

Prerequisites:

A fully charged laptop (8-16 GB memory) with Chrome or Firefox

Python 3 and pip pre-installed

Pre-register for a Databricks Standard Trial

Basic knowledge of the Python programming language

Basic understanding of machine learning concepts


1. Standardising the Machine Learning Lifecycle on MLflow. Thunder Shiviah & Michael Shtelma, SAIS Europe 2019

2. Hardest Part of ML Isn't ML, It's Data. From "Hidden Technical Debt in Machine Learning Systems," Google, NeurIPS 2015. [Figure: a small "ML Code" box surrounded by Configuration, Data Collection, Data Verification, Feature Extraction, Machine Resource Management, Analysis Tools, Process Management Tools, Serving Infrastructure, and Monitoring.] Figure 1: Only a small fraction of real-world ML systems is composed of the ML code, as shown by the small green box in the middle. The required surrounding infrastructure is vast and complex.

3. Data & ML Tech and People Are in Silos. [Figure: data engineers and data scientists, separated.]

4. ML Lifecycle is Manual, Inconsistent and Disconnected. Prep Data: low-level integrations for data and ML; difficult to track the data used for a model. Build Model: ad hoc approach to tracking experiments; very hard to reproduce experiments. Deploy Model: multiple tightly coupled deployment options; a different monitoring approach for each framework.

5. The Need for Standardization

6. What is MLflow? Unveiled in June 2018, MLflow is an open source framework to manage the complete machine learning lifecycle. Tracking: record and query experiments (code, data, config, results). Projects: packaging format for reproducible runs on any platform. Models: general model format that supports diverse deployment tools.

7. MLflow Momentum at a Glance

8. Rapid Community Adoption. Time to reach 74 contributors: Spark, 3 years; MLflow, 8 months.

9. Experiment Tracking

10. MLflow Tracking. [Diagram: notebooks, local apps, and cloud jobs log through the Python or REST API to a tracking server, which is browsed through the UI and queried through the API.]
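The slide lists a REST API alongside the Python client. As a hedged sketch, the snippet below builds (but does not send) a request to the tracking server's `runs/log-metric` endpoint using only the standard library. The endpoint path and the `run_id`/`key`/`value`/`timestamp`/`step` fields follow the MLflow REST API reference as I understand it; the server address `http://localhost:5000`, the run ID, and the helper name `build_log_metric_request` are placeholders, so check the reference for your MLflow version before relying on it.

```python
import json
import time
import urllib.request

# Assumption: a tracking server started locally with `mlflow server` on port 5000.
TRACKING_URI = "http://localhost:5000"


def build_log_metric_request(run_id: str, key: str, value: float, step: int = 0) -> urllib.request.Request:
    """Hypothetical helper: build a POST request that logs one metric value for an existing run."""
    body = {
        "run_id": run_id,
        "key": key,
        "value": value,
        "timestamp": int(time.time() * 1000),  # milliseconds since the epoch
        "step": step,
    }
    return urllib.request.Request(
        url=f"{TRACKING_URI}/api/2.0/mlflow/runs/log-metric",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder run ID; a real one would come from creating a run or from the UI.
req = build_log_metric_request("hypothetical-run-id", "rmse", 0.78, step=3)
# Sending it would be: urllib.request.urlopen(req)
```

Building the request separately from sending it keeps the example testable without a live server.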

11. Key Concepts in Tracking • Parameters: key-value inputs to your code • Metrics: numeric values (can update over time) • Artifacts: arbitrary files, including models • Source: what code ran?
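To make the four concepts concrete, here is a hypothetical, stdlib-only model of what a single run records. It is not MLflow's implementation; with MLflow itself you would call `mlflow.log_param`, `mlflow.log_metric` (which accepts an optional `step`) and `mlflow.log_artifact` inside `mlflow.start_run()`. The `Run` class and its method names are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Run:
    """Toy record of one tracked run (hypothetical; not MLflow's internal data model)."""

    source: str  # what code ran, e.g. a script path plus a git commit
    params: Dict[str, str] = field(default_factory=dict)
    metrics: Dict[str, List[Tuple[int, float]]] = field(default_factory=dict)
    artifacts: List[str] = field(default_factory=list)

    def log_param(self, key: str, value) -> None:
        # Parameters: key-value inputs stored as strings; in this toy they cannot change once logged.
        if key in self.params and self.params[key] != str(value):
            raise ValueError(f"parameter {key!r} already logged with a different value")
        self.params[key] = str(value)

    def log_metric(self, key: str, value: float, step: int = 0) -> None:
        # Metrics: numeric values that may be logged repeatedly, building a history per key.
        self.metrics.setdefault(key, []).append((step, float(value)))

    def log_artifact(self, path: str) -> None:
        # Artifacts: arbitrary output files (plots, data samples, serialized models).
        self.artifacts.append(path)

    def latest(self, key: str) -> float:
        # Most recently logged value of a metric.
        return self.metrics[key][-1][1]


run = Run(source="train.py@abc123")  # hypothetical script and commit
run.log_param("lambda", 0.1)
for epoch, loss in enumerate([0.9, 0.5, 0.3]):
    run.log_metric("loss", loss, step=epoch)
run.log_artifact("model.pkl")
print(run.params, run.latest("loss"))
```

Keeping metrics as a list of `(step, value)` pairs is what lets a metric "update over time" while parameters stay fixed inputs.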

12. Experiment Tracking with Managed MLflow. Record runs and keep track of model parameters, results, code, and data from each experiment in one place. Provides: ● Pre-configured MLflow tracking server ● Databricks Workspace & Notebooks UI integration ● S3, Azure Blob Storage, or Google Cloud for artifact storage ● Experiment management via role-based access control lists (ACLs)

13. Reproducible Projects

14. MLflow Projects. [Diagram: a project spec (code, config, data) that supports both local execution and remote execution.]

15. Example MLflow Project
my_project/
├── MLproject
│     conda_env: conda.yaml
│     entry_points:
│       main:
│         parameters:
│           training_data: path
│           lambda: {type: float, default: 0.1}
│         command: python main.py {training_data} {lambda}
├── conda.yaml
├── main.py
└── model.py

$ mlflow run git://<my_project> ...
mlflow.run("git://<my_project>", ...)
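The `command` template in the MLproject file is filled in from the entry point's `parameters`. As a simplified sketch of that substitution (not MLflow's actual code, which handles more parameter types and extra arguments), the hypothetical `render_command` helper below merges user-supplied values with declared defaults, converts `float` parameters, rejects missing required ones, and shell-quotes each value before formatting the template.

```python
import shlex
from typing import Dict, Optional

# Parameter spec mirroring the MLproject example: a bare type string, or a dict with type/default.
PARAMETERS = {
    "training_data": "path",
    "lambda": {"type": "float", "default": 0.1},
}
COMMAND = "python main.py {training_data} {lambda}"


def render_command(template: str, spec: Dict, user_params: Optional[Dict] = None) -> str:
    """Hypothetical helper: resolve parameters against the spec and fill in the template."""
    user_params = user_params or {}
    resolved = {}
    for name, decl in spec.items():
        decl = decl if isinstance(decl, dict) else {"type": decl}
        if name in user_params:
            value = user_params[name]
        elif "default" in decl:
            value = decl["default"]
        else:
            raise ValueError(f"missing required parameter: {name}")
        if decl["type"] == "float":
            value = float(value)  # validates numeric input such as "0.5"
        resolved[name] = shlex.quote(str(value))  # guard against shell injection
    # `lambda` is a Python keyword, so values are passed via ** rather than as literal kwargs.
    return template.format(**resolved)


print(render_command(COMMAND, PARAMETERS, {"training_data": "data/train.csv"}))
```

Declaring types and defaults in the project file, rather than in each caller's shell script, is what makes `mlflow run` invocations reproducible across machines.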

16. Reproducible Projects with Managed MLflow. Build composable projects, capture dependencies and code history for reproducible results, and share projects with peers. Provides: ● Support for Git, Conda, and other file storage systems ● Remote execution from the command line as a Databricks Job

17. Model Deployment

18. MLflow Models. [Diagram: run sources save a model in the MLflow model format with several flavors (Flavor 1, Flavor 2); simple model flavors are usable by many tools, such as inference code, batch & stream scoring, and cloud serving tools.]

19. Example MLflow Model
my_model/
├── MLmodel
│     run_id: 769915006efd4c4bbd662461
│     time_created: 2018-06-28T12:34
│     flavors:
│       tensorflow:
│         saved_model_dir: estimator
│         signature_def_key: predict
│       python_function:
│         loader_module: mlflow.tensorflow
└── estimator/
    ├── saved_model.pb
    └── variables/
        ...
The tensorflow flavor is usable by tools that understand the TensorFlow model format; the python_function flavor is usable by any tool that can run Python (Docker, Spark, etc.).
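Flavors are what let one saved model serve several tools: each consumer looks for a flavor it understands in the `MLmodel` file and ignores the rest. The hypothetical sketch below shows that selection logic on the example's metadata, represented as a Python dict so no YAML parser is needed; the `choose_flavor` function and the tool preference lists are illustrative, not part of MLflow.

```python
from typing import Dict, List

# The metadata from the example MLmodel file, as a plain dict.
MLMODEL = {
    "run_id": "769915006efd4c4bbd662461",
    "flavors": {
        "tensorflow": {"saved_model_dir": "estimator", "signature_def_key": "predict"},
        "python_function": {"loader_module": "mlflow.tensorflow"},
    },
}


def choose_flavor(mlmodel: Dict, supported: List[str]) -> str:
    """Return the first flavor, in the tool's order of preference, that the model provides."""
    available = mlmodel["flavors"]
    for flavor in supported:
        if flavor in available:
            return flavor
    raise LookupError(f"none of {supported} found in {sorted(available)}")


# A TensorFlow-native tool prefers its own format; a generic Python server only knows python_function.
print(choose_flavor(MLMODEL, ["tensorflow", "python_function"]))
print(choose_flavor(MLMODEL, ["python_function"]))
```

Because `python_function` is listed alongside the native flavor, any Python-capable deployment target can load the model even if it knows nothing about TensorFlow.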

20. Model Deployment with Managed MLflow. Quickly deploy models to any platform based on your needs, locally or in the cloud, from experimentation to production. Supports: ● Databricks Jobs and Clusters for production model operations ● Batch inference on Databricks (Apache Spark) ● REST endpoints via Docker containers, Azure ML, or SageMaker
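Models exposed as REST endpoints, for example from a Docker container built around an MLflow model, are queried over HTTP. As a hedged sketch, the snippet below builds (but does not send) a request for an MLflow 1.x-era scoring server, which accepted pandas "split"-oriented JSON at `/invocations` with the content type `application/json; format=pandas-split`; MLflow 2.x changed the payload to wrap this under a `dataframe_split` key, so check the docs for your version. The host, port, feature names, and the `build_scoring_request` helper are placeholders.

```python
import json
import urllib.request
from typing import List, Sequence

# Placeholder address of a running scoring server (assumption).
SCORING_URL = "http://localhost:8080/invocations"


def build_scoring_request(columns: Sequence[str], rows: List[list]) -> urllib.request.Request:
    """Hypothetical helper: build a pandas 'split'-oriented scoring request, MLflow 1.x style."""
    payload = {"columns": list(columns), "data": rows}
    return urllib.request.Request(
        SCORING_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; format=pandas-split"},
        method="POST",
    )


# Hypothetical feature names for a two-row batch.
req = build_scoring_request(["alcohol", "pH"], [[12.8, 3.2], [10.1, 3.5]])
# urllib.request.urlopen(req) would return a response whose body holds the predictions as JSON.
```

The "split" orientation sends column names once instead of repeating them per row, which keeps batch payloads compact.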

21. What's Next?

22. Multi-step Workflow GUI: https://databricks.com/sparkaisummit/north-america/2019-spark-summit-ai-keynotes-2#keynote-e

23. Model Registry & Deployment Tracking: https://databricks.com/sparkaisummit/north-america/2019-spark-summit-ai-keynotes-2#keynote-e

24. Demo and Exercise

25. Questions?

26. Thank You!