Unified Approach to Interpret Machine Learning Model - SHAP + LIME

For companies that solve real-world problems and generate revenue from data science products, understanding why a model makes a certain prediction can be as crucial as achieving high prediction accuracy. However, as data scientists pursue higher accuracy with complex algorithms such as ensemble or deep learning models, the algorithm itself becomes a black box, which creates a trade-off between the accuracy and the interpretability of a model’s output.

To address this problem, a unified framework, SHAP (SHapley Additive exPlanations), was developed to help users interpret the predictions of complex models. In this session, we will talk about how to apply SHAP to various modeling approaches (GLM, XGBoost, CNN) to explain how each feature contributes and to extract intuitive insights from a particular prediction. This talk is intended to introduce the concept of a general-purpose model explainer, as well as to help practitioners understand SHAP and its applications.

2. Unified approach to interpret machine learning model: SHAP + LIME
Layla Yang, Databricks
#UnifiedDataAnalytics #SparkAISummit

3. Overview
• What is a machine learning interpreter?
• Why is it important?
• Why is interpreting ML models difficult?
• Different methodologies of model explainers
• SHAP: SHapley Additive exPlanations
• LIME: Local Interpretable Model-Agnostic Explanations
• Application: real-world use case

4. ML interpreter: What is it?
Accuracy and interpretability trade-off
[Diagram labels: Input Data, Black box, Output]

5. ML interpreter: What is it?
“Interpretability is the degree to which a human can understand the cause of a decision.”
-- Miller, Tim. “Explanation in artificial intelligence: Insights from the social sciences.”

6. Why is it important?
Commercial drive:
● Many enterprises rely on machine learning models to make important decisions:
  ○ Loan and credit card applications
  ○ Buying or selling commodities, stocks, or advertisements
  ○ Diagnosing cells as cancerous or benign
● Trust
  ○ It is easier for humans to trust a business that explains its decisions
● Legal regulation
  ○ GDPR: the customer has the right to obtain an explanation

7. Why is it important?
Technical drive: Can you trust your model based on accuracy?
● Knowing the ‘why’ can help you learn more about the problem and the data
● Understand edge cases and circumstances of failure
● Improve the model

8. Why is it important?
Social and ethical aspect:
● Interpretability is a useful debugging tool for detecting bias
  ○ Sean Owen: What do Developer Salaries Tell us about the Gender Pay Gap?
  ○ Minority, gender
● Machine learning models pick up biases and may seriously harm vulnerable groups of people
  ○ Be cautious about marketing strategy
  ○ Fairness: e.g. automatic approval or rejection of loan applications

9. Why is it difficult?
ML creates functions that explicitly or implicitly combine variables (features) in sophisticated ways.
Disaggregating the final prediction into single-feature contributions and untangling the interactions between features is very difficult!

10. Why is it difficult?
“Data will often point with almost equal emphasis on several possible models. The question of which one most accurately reflects the data is difficult to resolve.”
-- Leo Breiman, “Statistical Modeling: The Two Cultures”
Explaining one model out of many good models: if they give different pictures of nature’s mechanism and lead to different conclusions, how do you justify one over another and recommend it to the business?

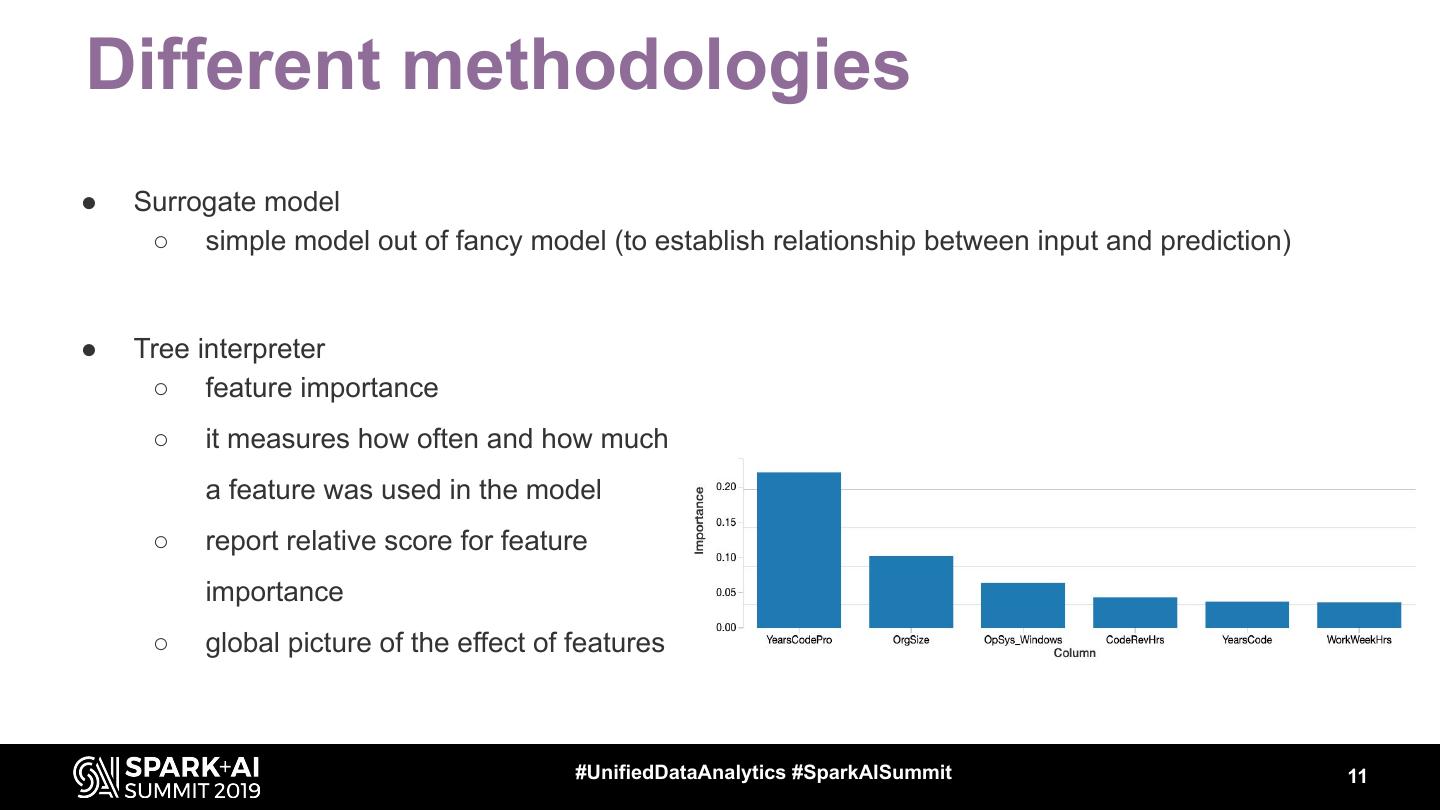

11. Different methodologies
● Surrogate model
  ○ A simple model derived from a complex one (to establish the relationship between input and prediction)
● Tree interpreter
  ○ Feature importance
  ○ Measures how often and how much a feature was used in the model
  ○ Reports a relative score for feature importance
  ○ Gives a global picture of the effect of features
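
As a rough illustration of "how often and how much a feature was used", the sketch below turns a list of tree splits into relative importance scores. The `splits` data, the feature names, and the `relative_importance` helper are all hypothetical; real libraries expose their own importance APIs and may define the scores differently.

```python
from collections import defaultdict

# Hypothetical splits gathered from a trained tree ensemble:
# (feature used at the split, loss reduction achieved by that split).
splits = [
    ("income", 12.0), ("age", 3.0), ("income", 5.0),
    ("tenure", 4.0), ("age", 1.0), ("income", 15.0),
]


def relative_importance(splits):
    """Return per-feature shares of split count ("how often") and gain ("how much")."""
    counts = defaultdict(int)
    gains = defaultdict(float)
    for feature, gain in splits:
        counts[feature] += 1
        gains[feature] += gain
    total_count = sum(counts.values())
    total_gain = sum(gains.values())
    return {
        feature: {
            "frequency": counts[feature] / total_count,
            "gain": gains[feature] / total_gain,
        }
        for feature in counts
    }


importance = relative_importance(splits)
for feature, scores in sorted(importance.items(), key=lambda kv: -kv[1]["gain"]):
    print(f"{feature}: frequency={scores['frequency']:.2f}, gain={scores['gain']:.2f}")
```

Both scores are global: they summarize the whole model and say nothing about why one particular prediction came out the way it did.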

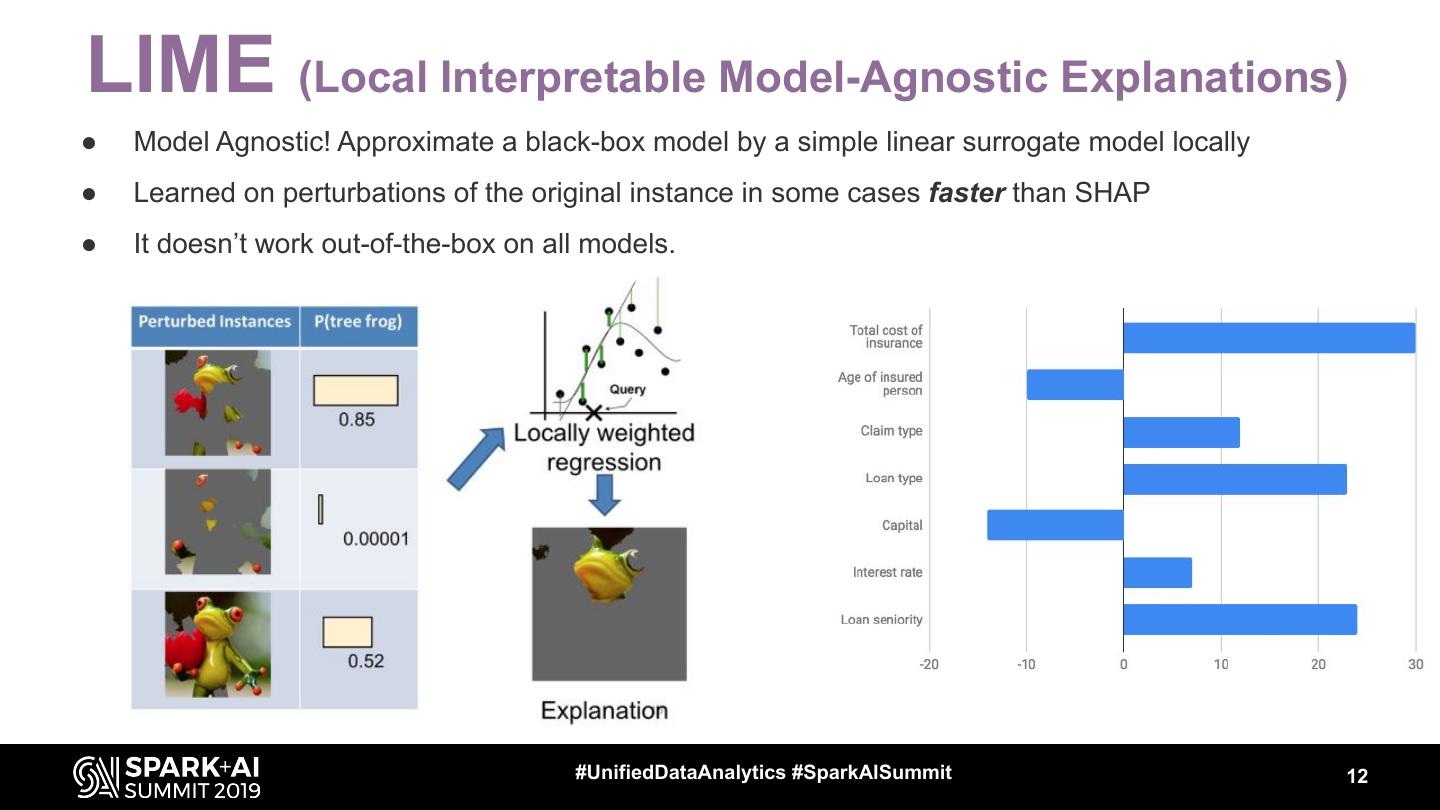

12. LIME (Local Interpretable Model-Agnostic Explanations)
● Model-agnostic! Approximates a black-box model locally with a simple linear surrogate model
● Learned on perturbations of the original instance; in some cases faster than SHAP
● It doesn’t work out of the box on all models.
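
The idea of a local linear surrogate learned on perturbations can be sketched in plain Python. This is a simplified LIME-style illustration, not the `lime` library: the `black_box` function, the Gaussian perturbation scheme, the kernel `width`, and all helper names are assumptions made for the example.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model: nonlinear in x[0], linear in x[1]."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(a, b):
    """Solve the linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_linear_surrogate(predict, x, n_samples=500, scale=0.1, width=0.25, seed=0):
    """Fit a proximity-weighted linear model to `predict` around the instance x."""
    rng = random.Random(seed)
    d = len(x)
    # Weighted normal equations (X^T W X) w = X^T W y, with an intercept column.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]       # perturbed copy of x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / width ** 2)             # closer samples count more
        row = [1.0] + [zi - xi for zi, xi in zip(z, x)]    # features centered at x
        y = predict(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[0], coef[1:]


intercept, slopes = local_linear_surrogate(black_box, [1.0, 2.0])
print("local slopes:", [round(s, 2) for s in slopes])
```

Around the instance `[1.0, 2.0]` the fitted slopes should land close to the true local gradient of the toy function, roughly 2 for the first feature and 3 for the second. The explanation is only valid near that instance; at a different point the first slope would change.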

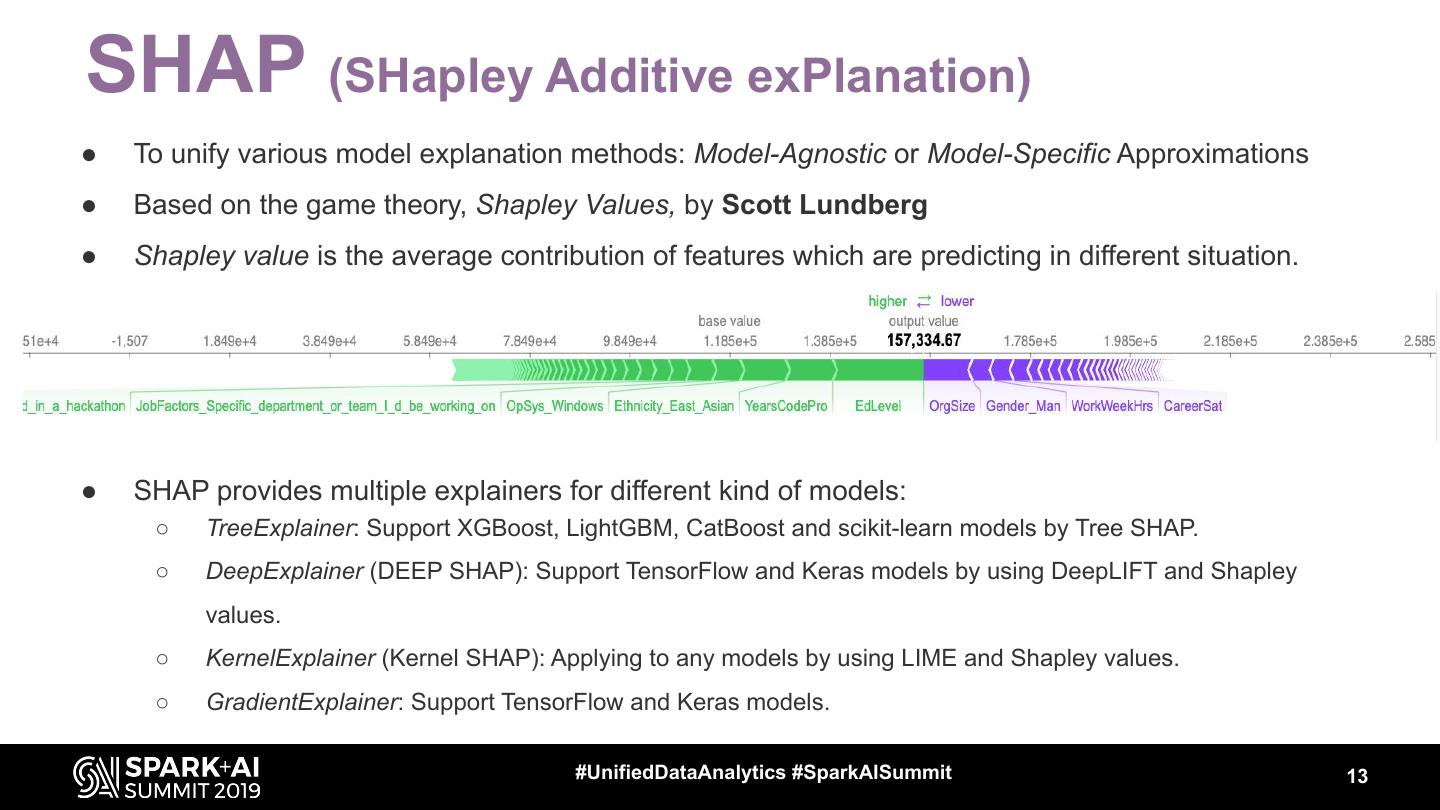

13. SHAP (SHapley Additive exPlanations)
● Unifies various model explanation methods: model-agnostic or model-specific approximations
● Based on Shapley values from game theory; SHAP was developed by Scott Lundberg
● A Shapley value is a feature’s average marginal contribution to the prediction across all possible combinations of the other features
● SHAP provides multiple explainers for different kinds of models:
  ○ TreeExplainer: supports XGBoost, LightGBM, CatBoost, and scikit-learn models via Tree SHAP.
  ○ DeepExplainer (Deep SHAP): supports TensorFlow and Keras models using DeepLIFT and Shapley values.
  ○ KernelExplainer (Kernel SHAP): applies to any model using LIME and Shapley values.
  ○ GradientExplainer: supports TensorFlow and Keras models.
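
To make the "average contribution" definition concrete, the sketch below computes exact Shapley values for a toy model by enumerating every coalition of features. It is not how the `shap` library works internally: the `model` function, the `shapley_values` helper, and the choice of a single `baseline` vector to represent "missing" features (instead of averaging over a background dataset) are simplifying assumptions.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Toy model with an interaction term; stands in for any black-box predictor."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict(x) relative to predict(baseline)."""
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their actual value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Probability that a coalition of this size precedes feature i in a random ordering.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # contributions of features 0, 1, 2
```

The interaction term `x[1] * x[2]` is split evenly between the two features involved, and the contributions sum to `model(x) - model(baseline)`. The enumeration visits 2^(n-1) coalitions per feature, which is why practical explainers rely on approximations or model-specific algorithms.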

14. SHAP: Important Benefits
Produces explanations at the level of individual inputs
● Traditional feature importance algorithms tell us which features are most important across the entire population
● With individual-level SHAP values, we can pinpoint which factors are most impactful for each customer

15. SHAP: Important Benefits
Can directly relate feature values to the output, which greatly improves interpretation of the results
● SHAP can quantify the impact of a feature in the units of the model target
● Avoids a decomp matrix, which involves complex transformation and calculation
Read the impact of a feature in dollars!
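
Reading contributions "in dollars" follows from the additivity of SHAP values: the base value plus the per-feature contributions reproduces the prediction, so every term carries the units of the target. The house-price figures and feature names below are invented purely to illustrate the arithmetic.

```latex
% Additivity of SHAP values for a model f and an input x with M features
\[
  f(x) = \phi_0 + \sum_{i=1}^{M} \phi_i ,
  \qquad \phi_0 = \mathbb{E}[f(X)]
\]

% Hypothetical house-price prediction (all numbers are illustrative)
\[
  \underbrace{\$250{,}000}_{\phi_0}
  + \underbrace{\$30{,}000}_{\phi_{\text{area}}}
  - \underbrace{\$12{,}000}_{\phi_{\text{age}}}
  = \$268{,}000 = f(x)
\]
```

Here the area of the house raises the predicted price by $30,000 relative to the average prediction, and its age lowers it by $12,000.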

16. Application
SHAP explainer: CNN
LIME: NLP fastText

17. Real-world implementation
Run on single node
Distributed implementation
