4. Kai Huang - Building and Scaling Large-Scale End-to-End AI Applications with BigDL


2 .BigDL: Seamlessly scale end-to-end AI pipelines
Kai Huang, AI Frameworks Engineer, Intel

3 .Outline • BigDL Overview • BigDL Nano • BigDL Orca • BigDL Use Cases

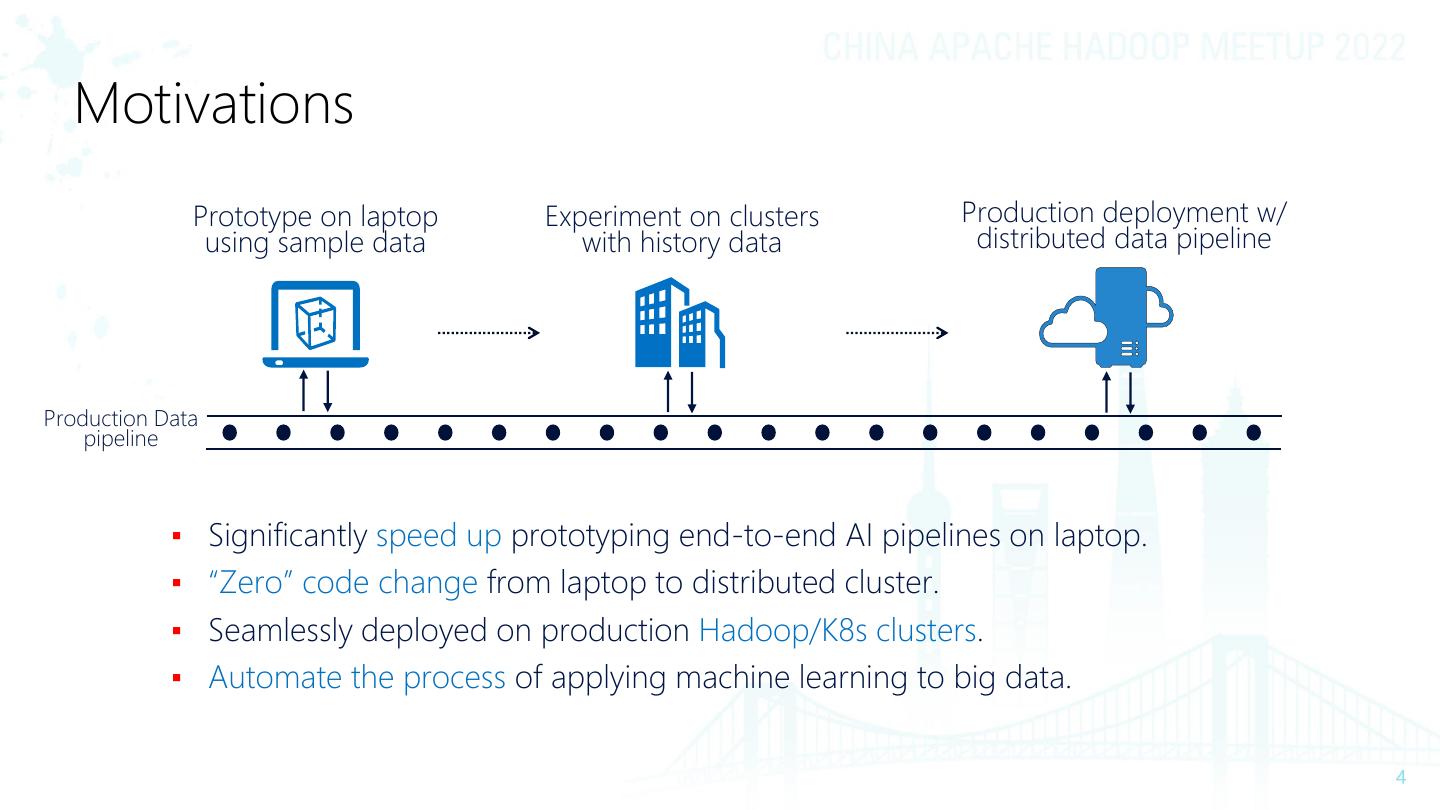

4 .Motivations
Prototype on laptop using sample data → Experiment on clusters with history data → Production deployment w/ distributed data pipeline (production data pipeline)
▪ Significantly speed up prototyping end-to-end AI pipelines on laptop.
▪ “Zero” code change from laptop to distributed cluster.
▪ Seamlessly deployed on production Hadoop/K8s clusters.
▪ Automate the process of applying machine learning to big data.

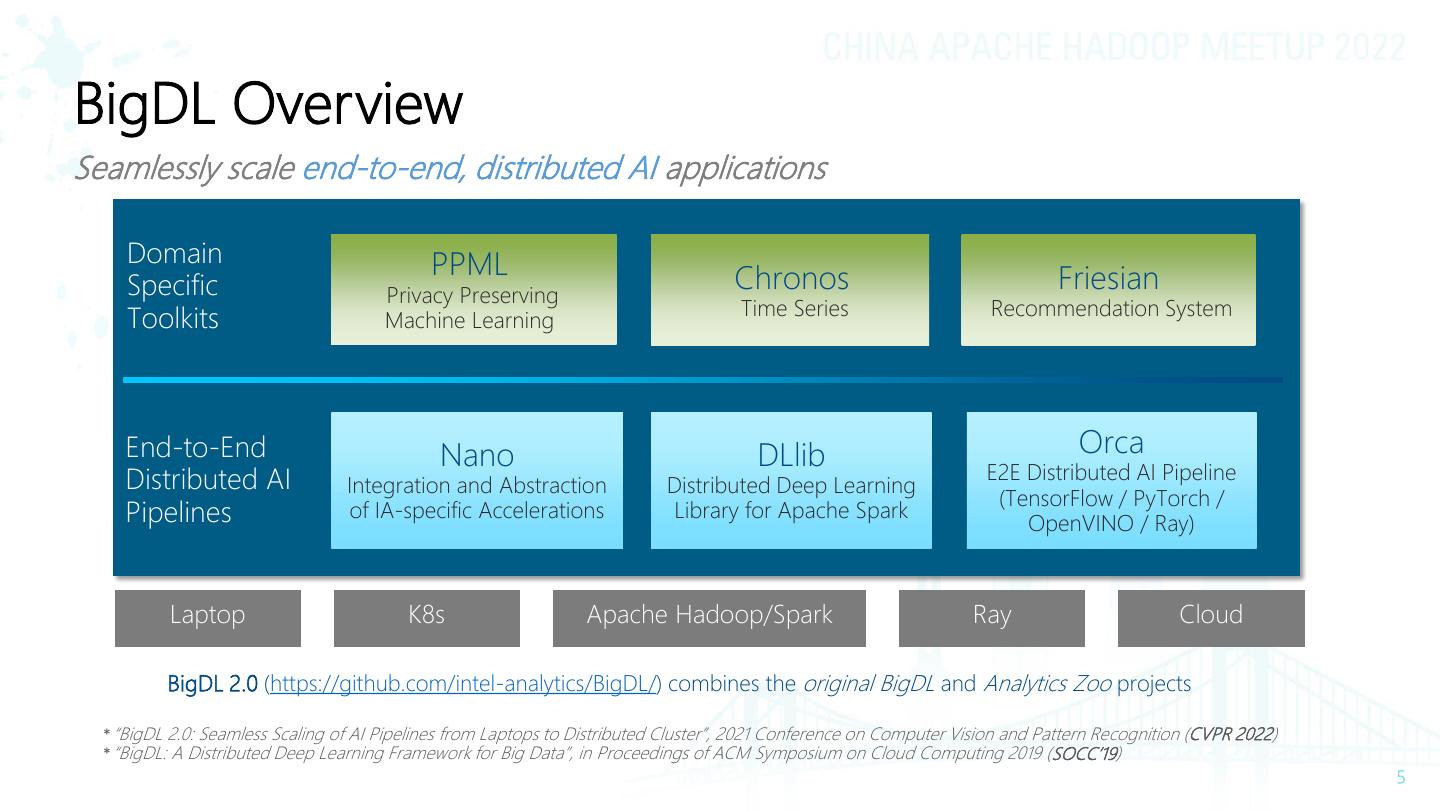

5 .BigDL Overview
Seamlessly scale end-to-end, distributed AI applications
• Domain Specific Toolkits: PPML (Privacy Preserving Machine Learning), Chronos (Time Series), Friesian (Recommendation System)
• End-to-End Distributed AI Pipelines: Nano (Integration and Abstraction of IA-specific Accelerations), DLlib (Distributed Deep Learning Library for Apache Spark), Orca (E2E Distributed AI Pipeline: TensorFlow / PyTorch / OpenVINO / Ray)
• Platforms: Laptop, K8s, Apache Hadoop/Spark, Ray, Cloud
BigDL 2.0 (https://github.com/intel-analytics/BigDL/) combines the original BigDL and Analytics Zoo projects
* “BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster”, 2022 Conference on Computer Vision and Pattern Recognition (CVPR 2022)
* “BigDL: A Distributed Deep Learning Framework for Big Data”, in Proceedings of ACM Symposium on Cloud Computing 2019 (SoCC’19)

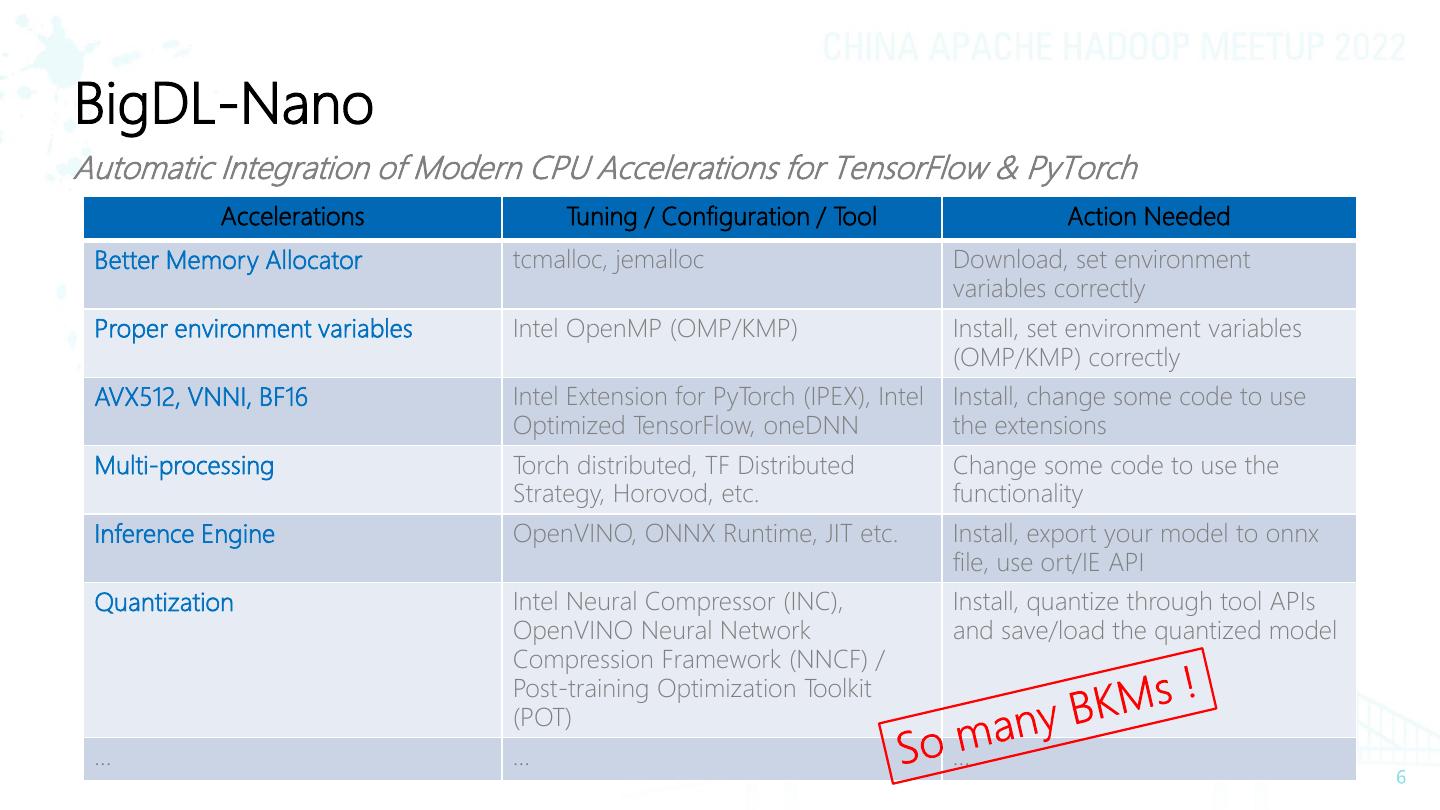

6 .BigDL-Nano
Automatic Integration of Modern CPU Accelerations for TensorFlow & PyTorch

| Accelerations | Tuning / Configuration / Tool | Action Needed |
| --- | --- | --- |
| Better Memory Allocator | tcmalloc, jemalloc | Download, set environment variables correctly |
| Proper environment variables | Intel OpenMP (OMP/KMP) | Install, set environment variables (OMP/KMP) correctly |
| AVX512, VNNI, BF16 | Intel Extension for PyTorch (IPEX), Intel Optimized TensorFlow, oneDNN | Install, change some code to use the extensions |
| Multi-processing | Torch distributed, TF Distributed Strategy, Horovod, etc. | Change some code to use the functionality |
| Inference Engine | OpenVINO, ONNX Runtime, JIT etc. | Install, export your model to onnx file, use ort/IE API |
| Quantization | Intel Neural Compressor (INC), OpenVINO Neural Network Compression Framework (NNCF) / Post-training Optimization Toolkit (POT) | Install, quantize through tool APIs and save/load the quantized model |
| … | … | … |
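To make the "set environment variables correctly" rows concrete, here is a minimal sketch of the manual setup that Nano is meant to automate. It is not BigDL code. The helper name `build_accel_env`, the chosen values, and the allocator path are assumptions for illustration; `OMP_NUM_THREADS`, `KMP_AFFINITY`, `KMP_BLOCKTIME` and `LD_PRELOAD` are the standard variable names.

```python
import os


def build_accel_env(num_threads, allocator_lib=None, base_env=None):
    """Return a copy of the environment with OpenMP and allocator settings applied."""
    env = dict(os.environ if base_env is None else base_env)
    # Standard OpenMP variable: cap the number of OpenMP threads.
    env["OMP_NUM_THREADS"] = str(num_threads)
    # Intel OpenMP runtime variables: pin threads to cores, shorten spin-wait time.
    # The values below are common choices, assumed here for illustration.
    env["KMP_AFFINITY"] = "granularity=fine,compact,1,0"
    env["KMP_BLOCKTIME"] = "1"
    if allocator_lib is not None:
        # Preload an alternative allocator (tcmalloc or jemalloc) ahead of the default malloc.
        existing = env.get("LD_PRELOAD", "")
        env["LD_PRELOAD"] = f"{allocator_lib}:{existing}" if existing else allocator_lib
    return env


# Hypothetical library path; the real location depends on the installation.
env = build_accel_env(8, allocator_lib="/usr/lib/libtcmalloc.so", base_env={})
print(env["OMP_NUM_THREADS"], env["LD_PRELOAD"])
```

The resulting dictionary could be passed as `env=` to `subprocess.run` when launching a training script.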

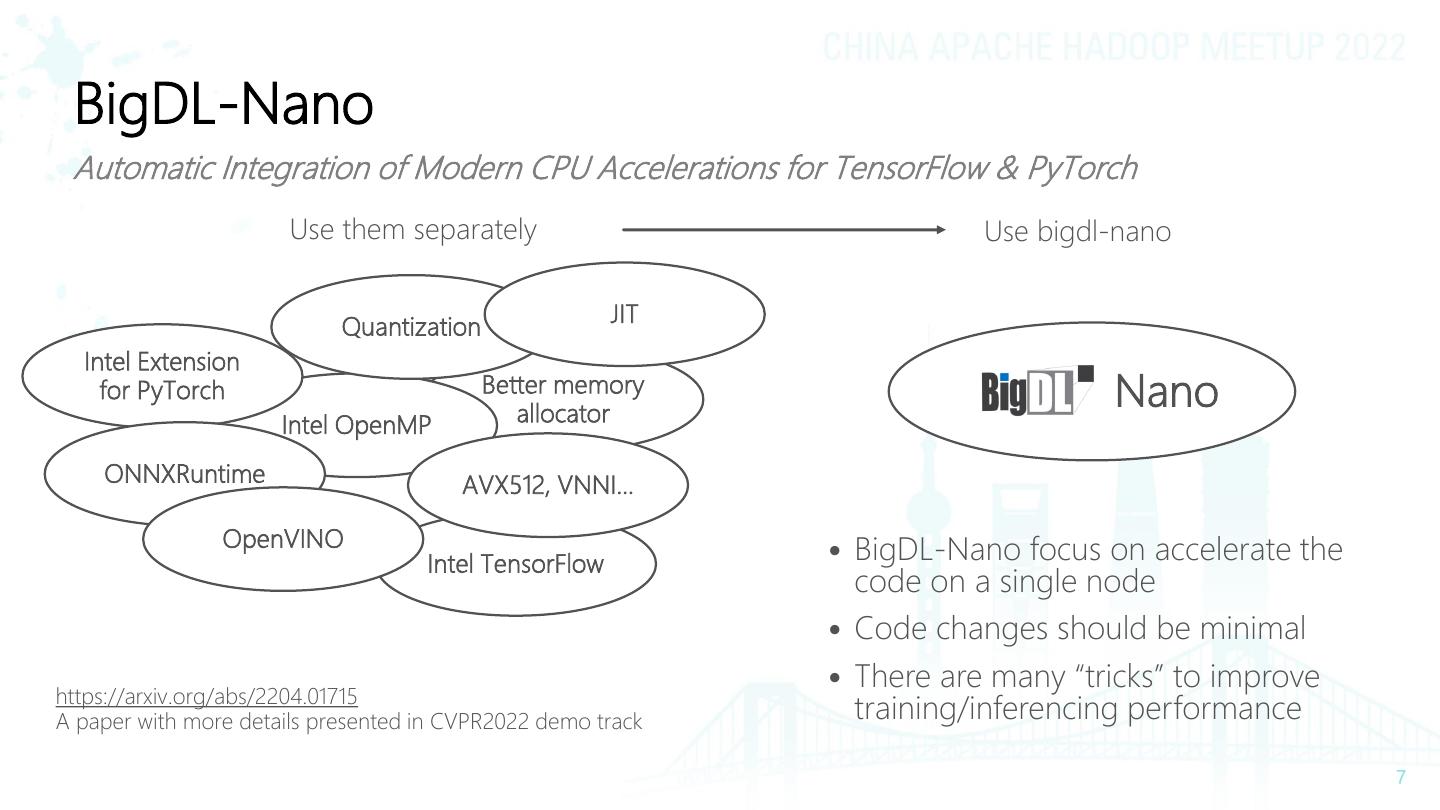

7 .BigDL-Nano
Automatic Integration of Modern CPU Accelerations for TensorFlow & PyTorch
• Use them separately: JIT, Quantization, Intel Extension for PyTorch, Better memory allocator, Intel OpenMP, ONNXRuntime, AVX512, VNNI…, OpenVINO, Intel TensorFlow
• Use bigdl-nano: Nano
• BigDL-Nano focuses on accelerating code on a single node
• Code changes should be minimal
• There are many “tricks” to improve training/inferencing performance
https://arxiv.org/abs/2204.01715 A paper with more details, presented in the CVPR 2022 demo track

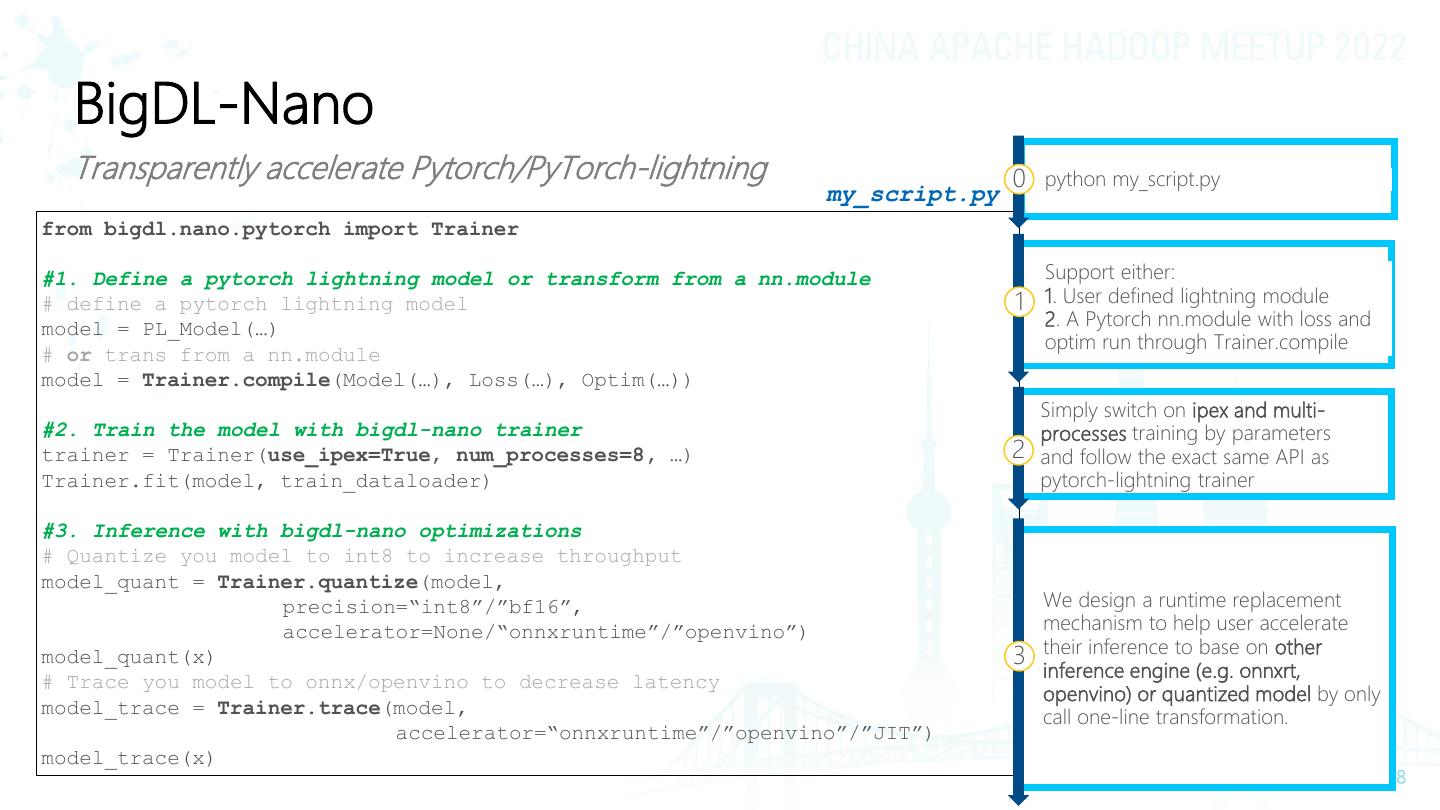

8 .BigDL-Nano
Transparently accelerate PyTorch/PyTorch Lightning

my_script.py:

    from bigdl.nano.pytorch import Trainer

    # 1. Define a pytorch lightning model or transform from a nn.module
    # define a pytorch lightning model
    model = PL_Model(…)
    # or transform from a nn.module
    model = Trainer.compile(Model(…), Loss(…), Optim(…))

    # 2. Train the model with bigdl-nano trainer
    trainer = Trainer(use_ipex=True, num_processes=8, …)
    trainer.fit(model, train_dataloader)

    # 3. Inference with bigdl-nano optimizations
    # Quantize your model to int8 to increase throughput
    model_quant = Trainer.quantize(model,
                                   precision="int8",   # or "bf16"
                                   accelerator=None)   # or "onnxruntime" / "openvino"
    model_quant(x)
    # Trace your model to onnx/openvino to decrease latency
    model_trace = Trainer.trace(model,
                                accelerator="onnxruntime")  # or "openvino" / "JIT"
    model_trace(x)

0. Run the script as usual: python my_script.py
1. Supports either a user-defined lightning module, or a PyTorch nn.module with loss and optimizer run through Trainer.compile.
2. Simply switch on ipex and multi-process training by parameters, and follow the exact same API as the pytorch-lightning trainer.
3. A runtime replacement mechanism lets users accelerate inference on another inference engine (e.g. onnxrt, openvino) or with a quantized model by calling a one-line transformation.
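The "runtime replacement" idea on this slide can be illustrated with a small pure-Python sketch: a transformation returns an object that is called exactly like the original model but runs a different execution path. This is only an analogy under stated assumptions, not BigDL's implementation. `AcceleratedModel`, `TinyModel`, the local `trace` function and the `"fused"` backend are all hypothetical names.

```python
class AcceleratedModel:
    """Wrap a model and route calls to a replacement forward function."""

    def __init__(self, model, fast_forward, backend):
        self._model = model
        self._fast_forward = fast_forward
        self.backend = backend

    def __call__(self, x):
        # Same call signature as the original model, different execution path.
        return self._fast_forward(x)

    def __getattr__(self, name):
        # Public attributes that are not overridden fall through to the wrapped model.
        if name.startswith("_"):
            raise AttributeError(name)
        return getattr(self._model, name)


def trace(model, accelerator):
    """Hypothetical one-line transformation; a real backend would export the model
    and run it inside an inference engine."""
    backends = {
        # Stand-in "engine": computes scale and bias in one fused expression.
        "fused": lambda x: x * model.scale + model.bias,
    }
    if accelerator not in backends:
        raise ValueError(f"unknown accelerator: {accelerator}")
    return AcceleratedModel(model, backends[accelerator], accelerator)


class TinyModel:
    """Toy model: y = x * scale + bias, computed in two steps."""

    def __init__(self, scale, bias):
        self.scale, self.bias = scale, bias

    def __call__(self, x):
        y = x * self.scale
        return y + self.bias


model = TinyModel(2.0, 1.0)
model_trace = trace(model, accelerator="fused")
print(model(3.0), model_trace(3.0), model_trace.backend)
```

Because the wrapper keeps the call interface, downstream inference code does not need to change when the backend is swapped.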

9 .BigDL-Nano
Transparently accelerate TensorFlow Keras

my_script.py:

    from bigdl.nano.tf.keras import Sequential
    from bigdl.nano.tf.keras import Model

    # 1. Define and train the model with your own code
    model = Sequential([…])
    model.compile(…)
    model.fit(…, num_processes=8)

    # 2. Inference with bigdl-nano optimizations
    model_quant = model.quantize(train_dataset)
    model_quant(x)

0. Run the script as usual: python my_script.py
1. Minor changes to the training code.
2. Quantize the model for TensorFlow's optimization.
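For readers unfamiliar with what quantizing a model to int8 involves, the arithmetic can be sketched in a few lines. This is a generic symmetric per-tensor scheme, assumed here for illustration. It is not what `model.quantize` does internally, and real tools such as Intel Neural Compressor also calibrate activations on a dataset.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, scale, max_error)
```

The rounding error per value is bounded by half the scale, which is why int8 inference can raise throughput with a small accuracy cost.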

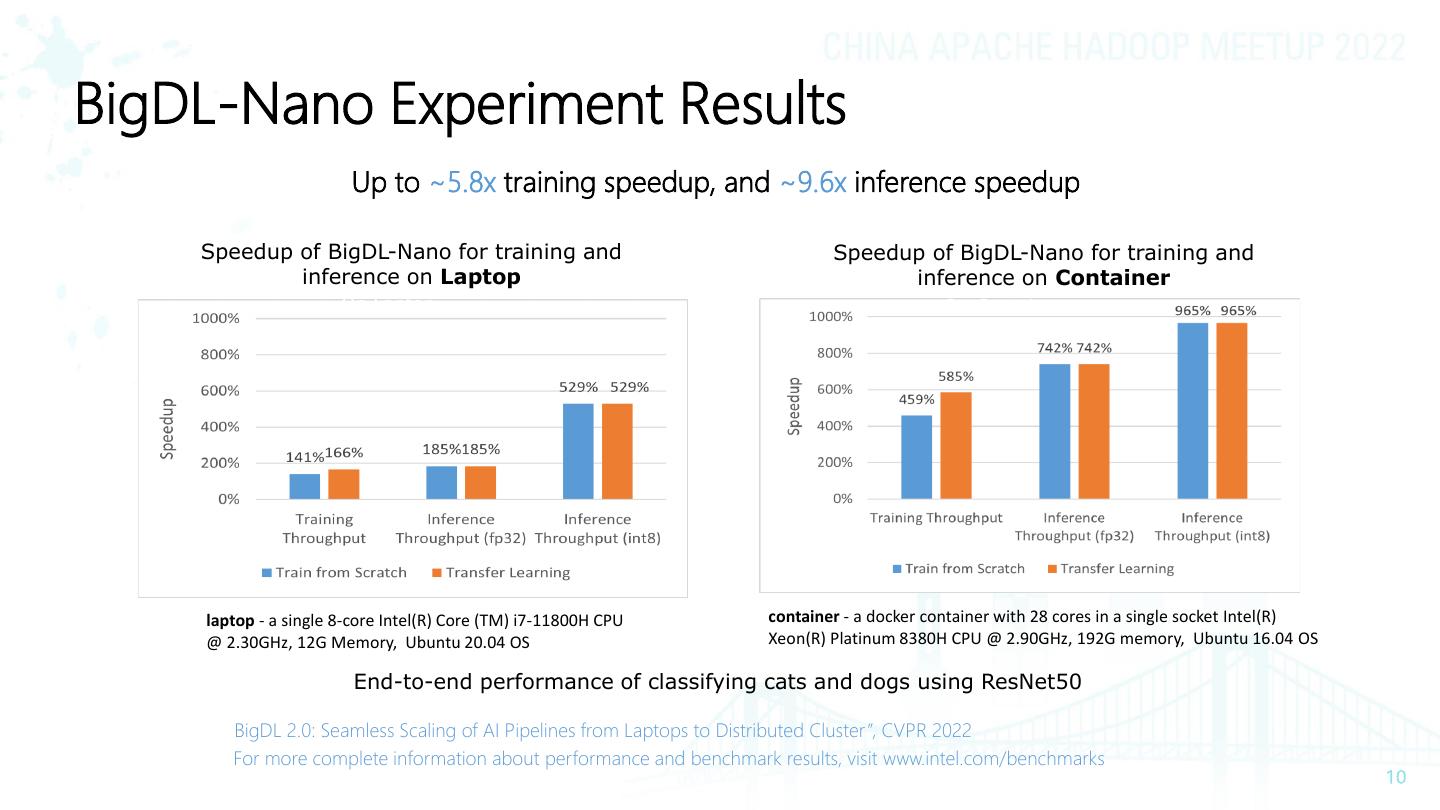

10 .BigDL-Nano Experiment Results
Up to ~5.8x training speedup, and ~9.6x inference speedup
• Figure: Speedup of BigDL-Nano for training and inference on Laptop
• Figure: Speedup of BigDL-Nano for training and inference on Container
• Laptop: a single 8-core Intel(R) Core(TM) i7-11800H CPU @ 2.30GHz, 12G memory, Ubuntu 20.04 OS
• Container: a docker container with 28 cores in a single socket Intel(R) Xeon(R) Platinum 8380H CPU @ 2.90GHz, 192G memory, Ubuntu 16.04 OS
End-to-end performance of classifying cats and dogs using ResNet50
* “BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster”, CVPR 2022
For more complete information about performance and benchmark results, visit www.intel.com/benchmarks

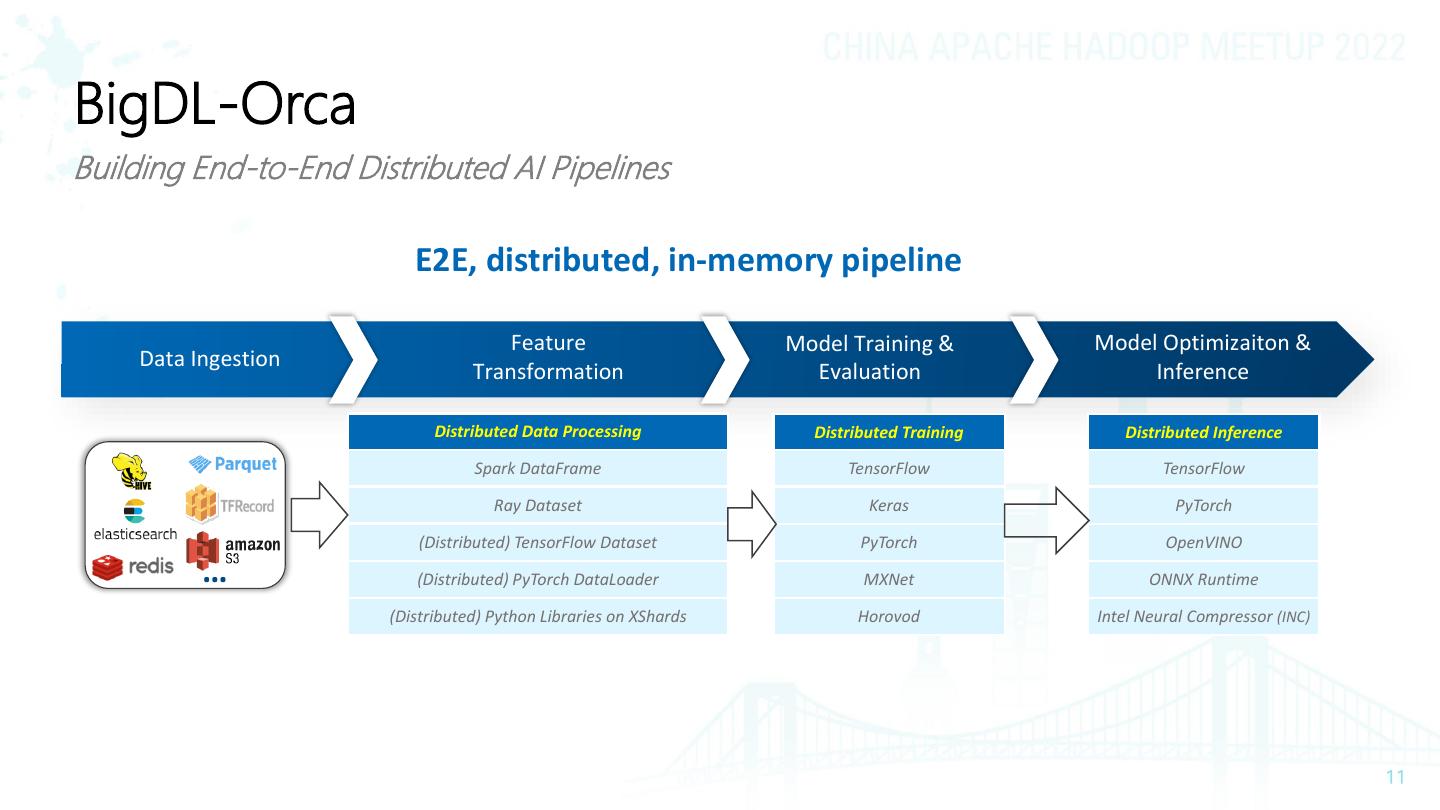

11 .BigDL-Orca
Building End-to-End Distributed AI Pipelines
E2E, distributed, in-memory pipeline: Data Ingestion → Feature Transformation → Model Training & Evaluation → Model Optimization & Inference
• Distributed Data Processing: Spark DataFrame, Ray Dataset, (Distributed) TensorFlow Dataset, (Distributed) PyTorch DataLoader, (Distributed) Python Libraries on XShards, …
• Distributed Training: TensorFlow, Keras, PyTorch, MXNet, Horovod
• Distributed Inference: TensorFlow, PyTorch, OpenVINO, ONNX Runtime, Intel Neural Compressor (INC)
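The data-parallel idea behind running Python libraries on shards can be sketched with the standard library alone: split the records into partitions and apply the same function to each partition independently. This is a single-machine stand-in under stated assumptions, not the Orca XShards API. The helper names `partition`, `transform_shards` and `normalize` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, num_shards):
    """Split records into num_shards roughly equal, contiguous shards."""
    size, extra = divmod(len(records), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        end = start + size + (1 if i < extra else 0)
        shards.append(records[start:end])
        start = end
    return shards


def transform_shards(shards, fn, max_workers=4):
    """Apply fn to every shard concurrently, preserving shard order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fn, shards))


def normalize(shard):
    # Per-shard feature transformation: express amounts in thousands.
    return [amount / 1000 for amount in shard]


amounts = [1200, 450, 9800, 30, 720, 15000, 60]
shards = partition(amounts, 3)
result = transform_shards(shards, normalize)
print(shards)
print(result)
```

In a distributed setting each shard would live on a different worker, and the per-shard function is the only code the user writes.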

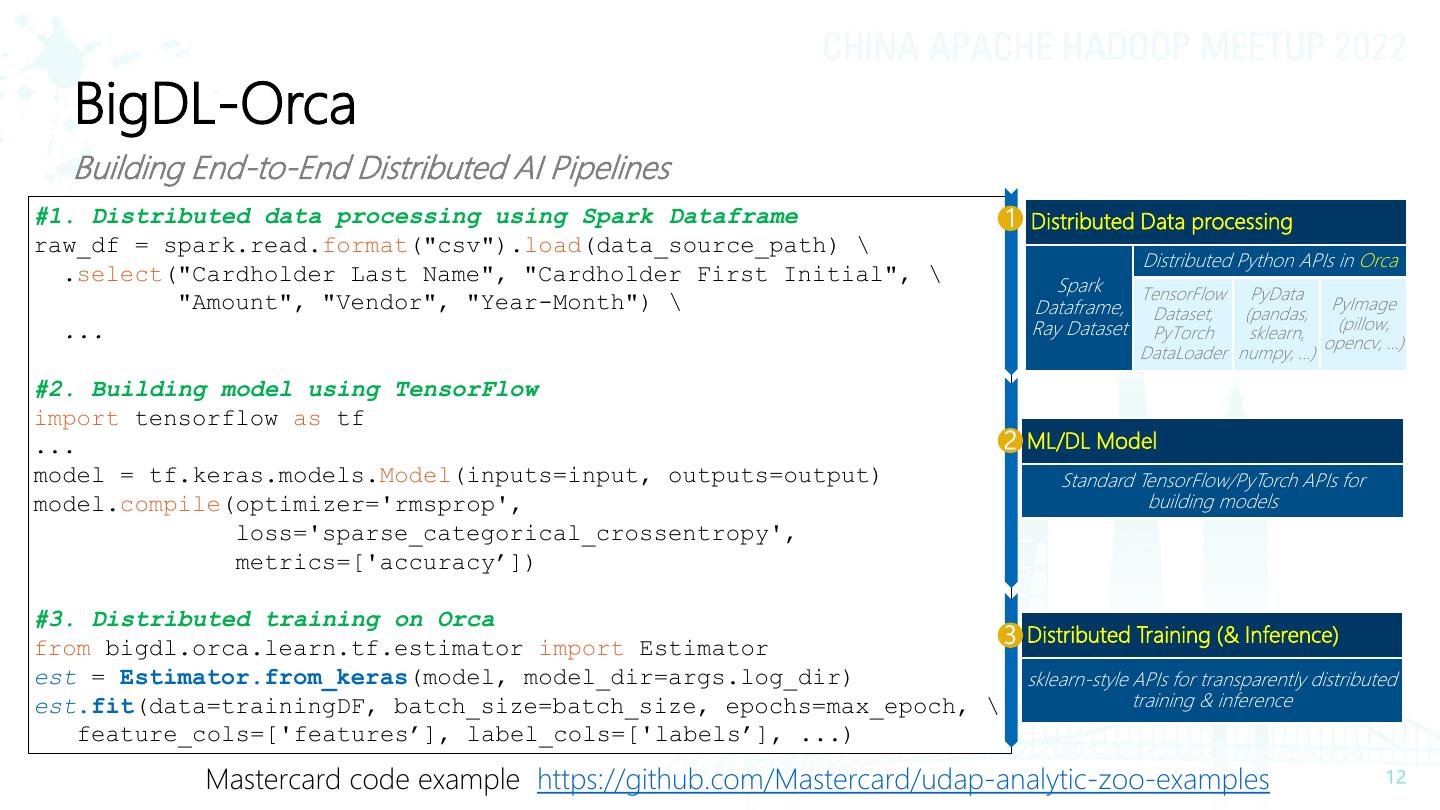

12 .BigDL-Orca
Building End-to-End Distributed AI Pipelines

    # 1. Distributed data processing using Spark Dataframe
    raw_df = spark.read.format("csv").load(data_source_path) \
        .select("Cardholder Last Name", "Cardholder First Initial", \
                "Amount", "Vendor", "Year-Month") \
        ...

    # 2. Building model using TensorFlow
    import tensorflow as tf
    ...
    model = tf.keras.models.Model(inputs=input, outputs=output)
    model.compile(optimizer='rmsprop',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # 3. Distributed training on Orca
    from bigdl.orca.learn.tf.estimator import Estimator
    est = Estimator.from_keras(model, model_dir=args.log_dir)
    est.fit(data=trainingDF, batch_size=batch_size, epochs=max_epoch, \
            feature_cols=['features'], label_cols=['labels'], ...)

1. Distributed data processing: distributed Python APIs in Orca for Spark Dataframe, Ray Dataset, TensorFlow Dataset, PyTorch DataLoader, PyData (pandas, sklearn, numpy, ...), PyImage (pillow, opencv, …)
2. ML/DL model: standard TensorFlow/PyTorch APIs for building models
3. Distributed training (& inference): sklearn-style APIs for transparently distributed training & inference
Mastercard code example (https://github.com/Mastercard/udap-analytic-zoo-examples)

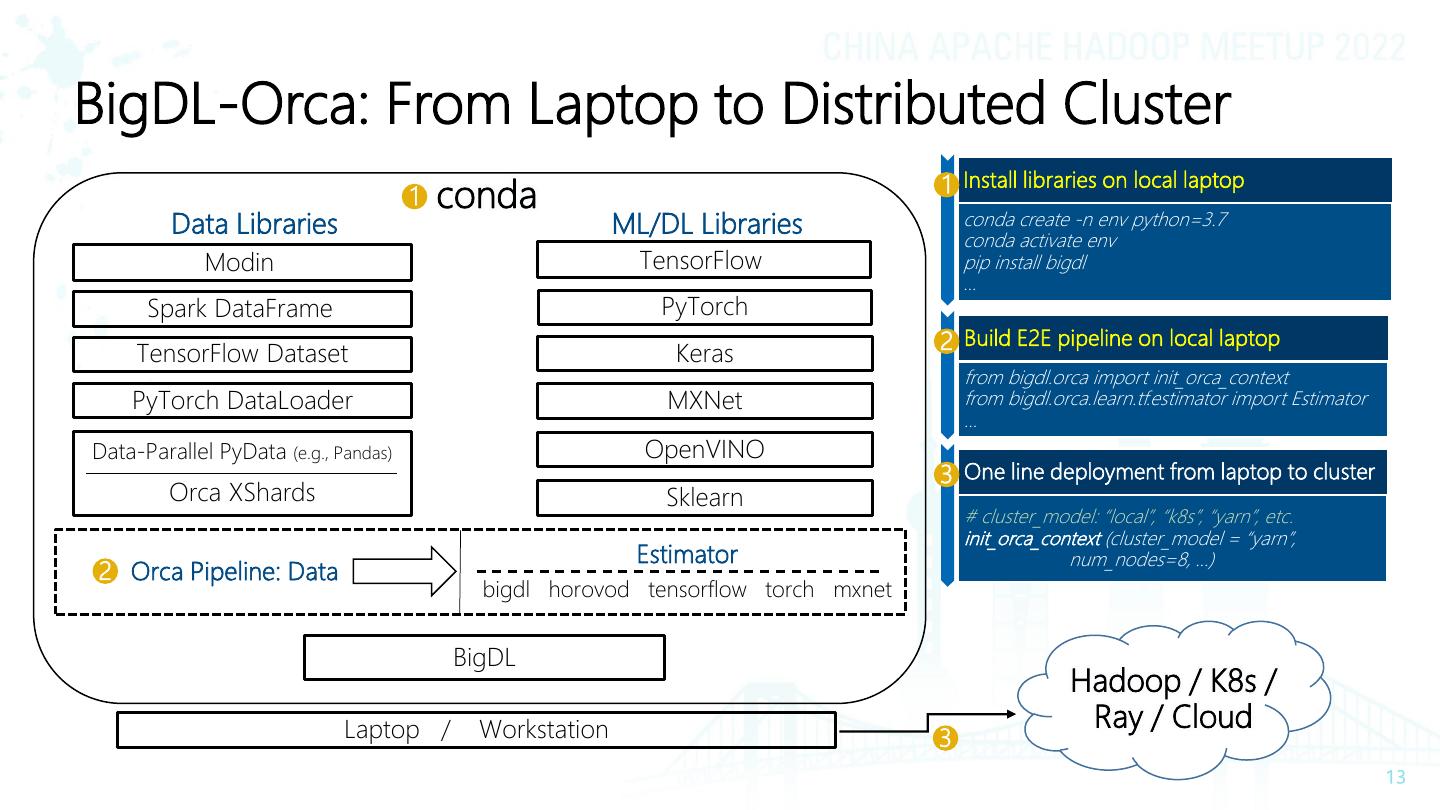

13 .BigDL-Orca: From Laptop to Distributed Cluster

1. Install libraries on local laptop

    conda create -n env python=3.7
    conda activate env
    pip install bigdl …

2. Build E2E pipeline on local laptop

    from bigdl.orca import init_orca_context
    from bigdl.orca.learn.tf.estimator import Estimator
    …

3. One line deployment from laptop to cluster

    # cluster_mode: "local", "k8s", "yarn", etc.
    init_orca_context(cluster_mode="yarn", num_nodes=8, ...)

Diagram:
• 1 conda: bigdl, horovod, tensorflow, torch, mxnet
• 2 Orca Pipeline, Data Libraries: Modin, Spark DataFrame, TensorFlow Dataset, PyTorch DataLoader, …, Data-Parallel PyData (e.g., Pandas) via Orca XShards
• 2 Orca Pipeline, ML/DL Libraries: TensorFlow, PyTorch, Keras, MXNet, OpenVINO, Sklearn via Orca Estimator
• 3 BigDL on Laptop / Workstation / Hadoop / K8s / Ray / Cloud
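One way to keep a single script for laptop and cluster runs is to take the cluster mode from the command line and build the context arguments from it. The sketch below assumes this pattern. `orca_context_kwargs` and `parse_args` are hypothetical helpers, the defaults are illustrative, and the BigDL calls are left as comments so the block runs without BigDL installed. Real cluster modes may need further arguments that are omitted here.

```python
import argparse


def orca_context_kwargs(cluster_mode, num_nodes=1, cores=2, memory="2g"):
    """Build keyword arguments for init_orca_context from command-line choices."""
    if cluster_mode == "local":
        # A laptop run is a single node; only cores and memory are relevant.
        return {"cluster_mode": "local", "cores": cores, "memory": memory}
    return {"cluster_mode": cluster_mode, "num_nodes": num_nodes,
            "cores": cores, "memory": memory}


def parse_args(argv=None):
    """Parse the deployment options; everything else in the script stays unchanged."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--cluster_mode", default="local",
                        choices=["local", "yarn", "k8s"])
    parser.add_argument("--num_nodes", type=int, default=1)
    return parser.parse_args(argv)


args = parse_args(["--cluster_mode", "yarn", "--num_nodes", "8"])
kwargs = orca_context_kwargs(args.cluster_mode, args.num_nodes)
print(kwargs)

# With BigDL installed, the rest of the script is the same in every mode:
# from bigdl.orca import init_orca_context, stop_orca_context
# init_orca_context(**kwargs)
# ...  # data processing and Estimator.fit, unchanged between laptop and cluster
# stop_orca_context()
```

Switching from the laptop to a cluster then becomes a change of command-line flags rather than a change to the pipeline code.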

14 .BigDL-Orca: RayOnSpark
Run Ray Programs Directly on Spark (YARN/K8s/Standalone/Hosted)

    from bigdl.orca import init_orca_context
    init_orca_context(cluster_mode="yarn", ..., init_ray_on_spark=True)

    import ray

    @ray.remote
    class Counter(object):
        def __init__(self):
            self.n = 0

        def inc(self):
            self.n += 1
            return self.n

    counters = [Counter.remote() for i in range(5)]
    print(ray.get([c.inc.remote() for c in counters]))
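The actor pattern used by the Counter program can be mimicked locally to show what it computes: each actor owns private state, and method calls return futures that are resolved later. The sketch below is a standard-library analogy only, not Ray and not RayOnSpark. `LocalActor` is a hypothetical helper.

```python
from concurrent.futures import ThreadPoolExecutor


class Counter:
    def __init__(self):
        self.n = 0

    def inc(self):
        self.n += 1
        return self.n


class LocalActor:
    """Toy actor: one private instance, calls run one at a time on its own worker."""

    def __init__(self, cls):
        self._instance = cls()
        self._worker = ThreadPoolExecutor(max_workers=1)

    def call(self, method, *args):
        # Returns a future immediately, similar in spirit to a remote method call.
        return self._worker.submit(getattr(self._instance, method), *args)

    def shutdown(self):
        self._worker.shutdown()


counters = [LocalActor(Counter) for _ in range(5)]
# Submit one inc() per actor, then wait for all results.
first = [f.result() for f in [c.call("inc") for c in counters]]
second = [f.result() for f in [c.call("inc") for c in counters]]
for c in counters:
    c.shutdown()
print(first, second)
```

Each counter keeps its own state, so the first round yields 1 from every actor and the second round yields 2.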

15 .BigDL Domain Specific Toolkits • BigDL PPML: Privacy Preserving ML & Data Analytics • Project Chronos: Scalable Time Series Toolkit • Project Friesian: E2E Recommender Toolkit

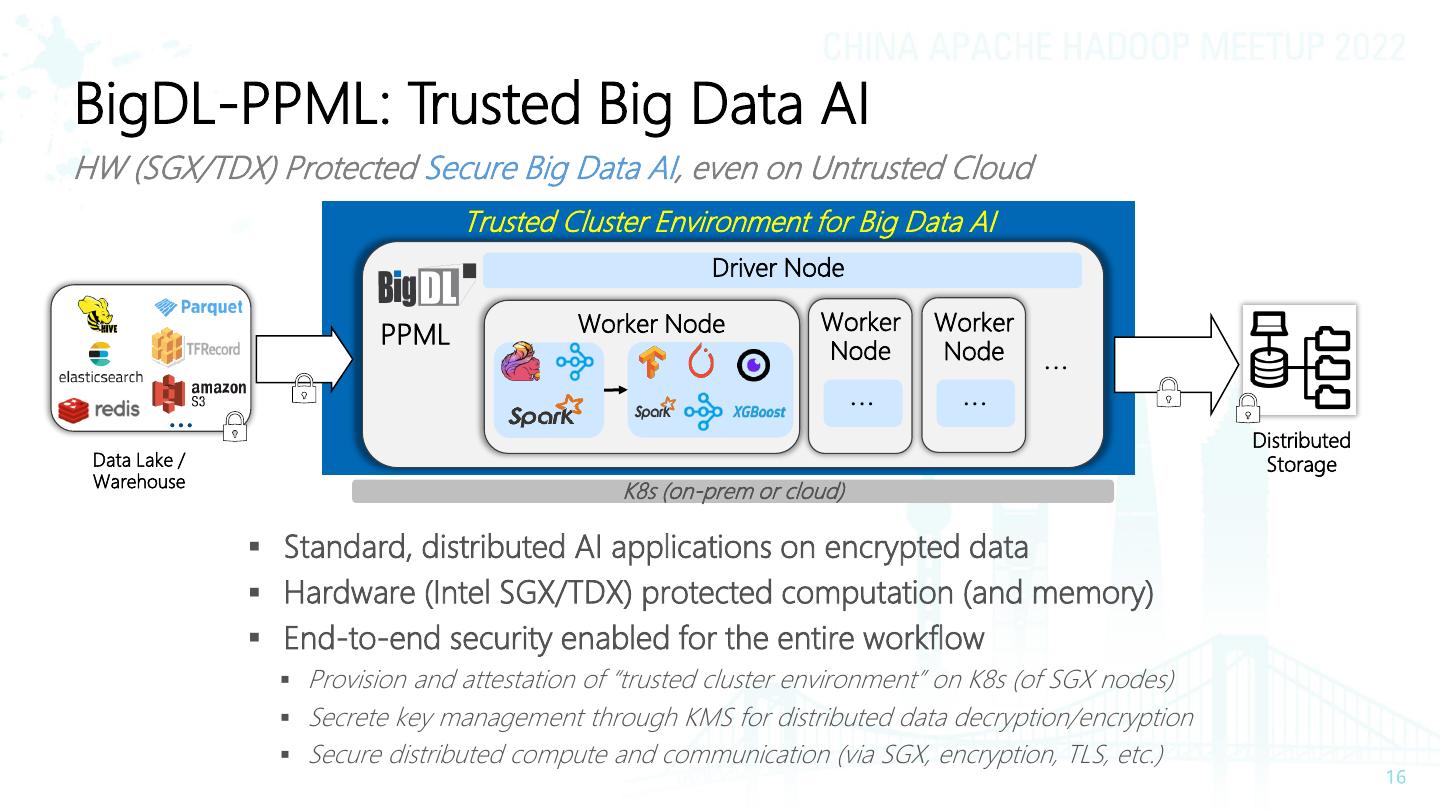

16 .BigDL-PPML: Trusted Big Data AI
HW (SGX/TDX) Protected
Secure Big Data AI, even on Untrusted Cloud
Diagram: Trusted Cluster Environment for Big Data AI (PPML): a Driver Node and Worker Nodes on K8s (on-prem or cloud), over a Distributed Data Lake / Storage Warehouse
▪ Standard, distributed AI applications on encrypted data
▪ Hardware (Intel SGX/TDX) protected computation (and memory)
▪ End-to-end security enabled for the entire workflow
▪ Provision and attestation of “trusted cluster environment” on K8s (of SGX nodes)
▪ Secret key management through KMS for distributed data decryption/encryption
▪ Secure distributed compute and communication (via SGX, encryption, TLS, etc.)

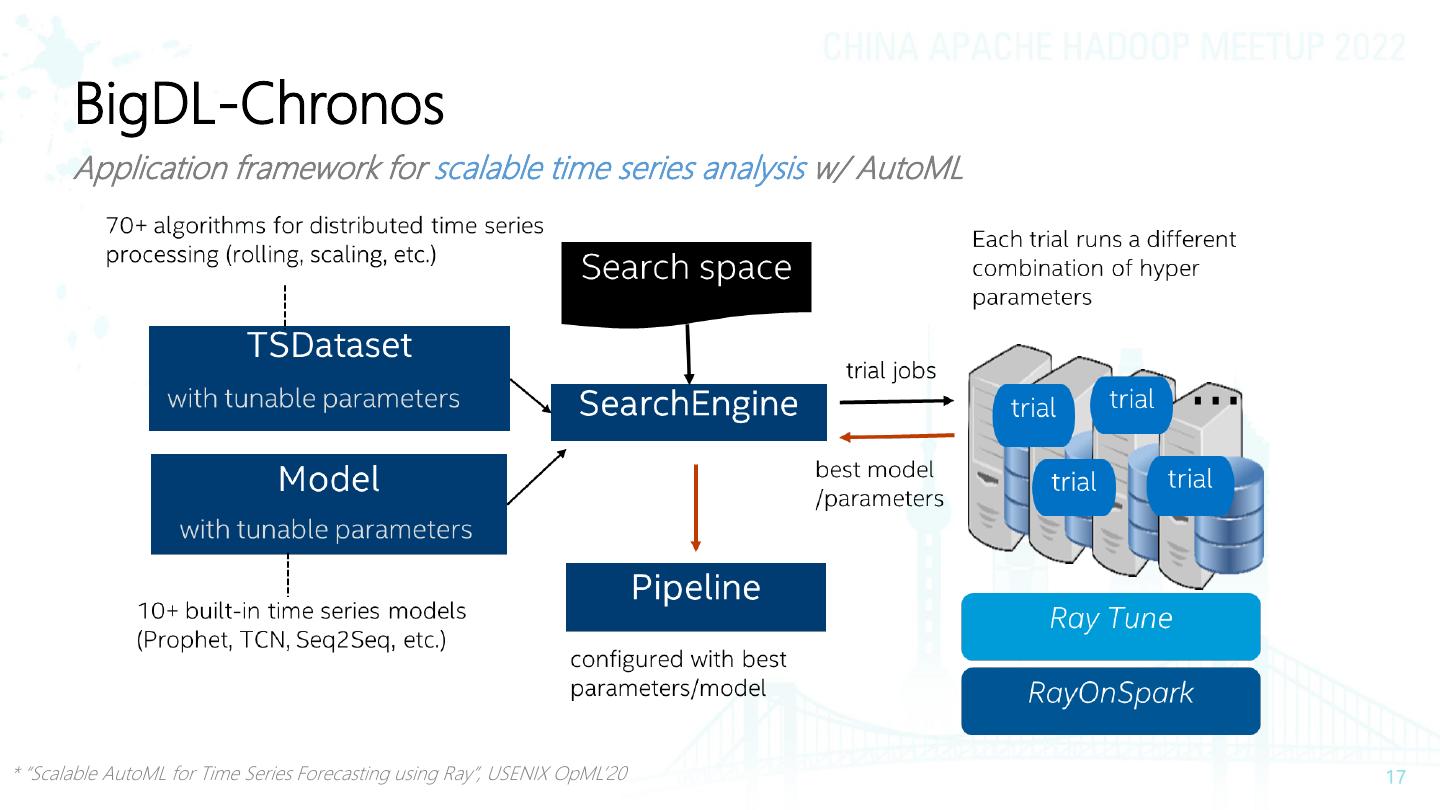

17 . BigDL-Chronos Application framework for scalable time series analysis w/ AutoML * “Scalable AutoML for Time Series Forecasting using Ray”, USENIX OpML’20

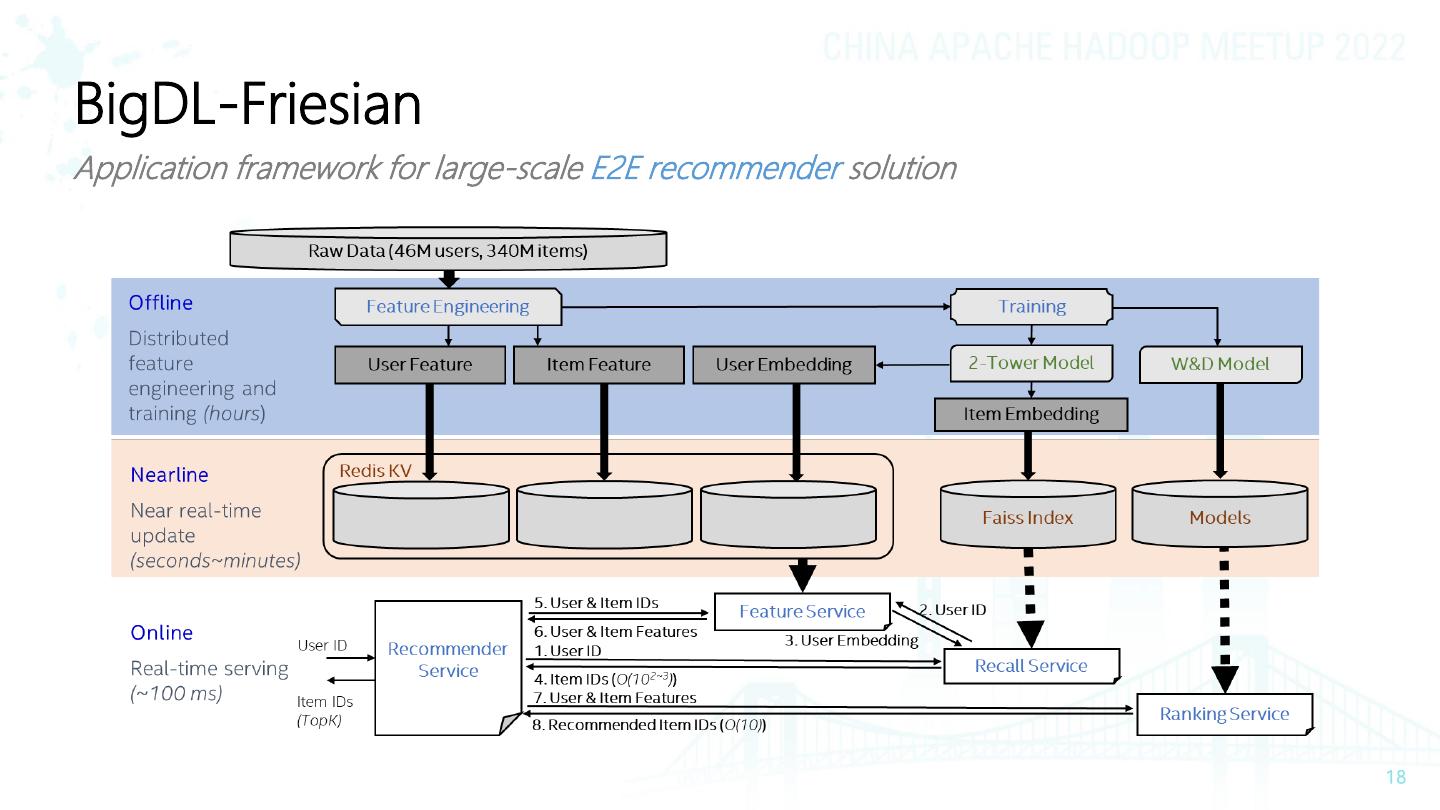

18 .BigDL-Friesian Application framework for large-scale E2E recommender solution

19 .“AI at Scale” in Mastercard with BigDL
Pipeline: Transactions Data → ETL → DL Models → Distributed Training / Tuning → Distributed Inference
• Building distributed AI applications directly on Enterprise Data Warehouse platform
• Supporting up to 2.2 billion users, 100s of billions of records, and distributed training on several hundred Intel Xeon servers
https://www.intel.com/content/www/us/en/developer/articles/technical/ai-at-scale-in-mastercard-with-bigdl0.html

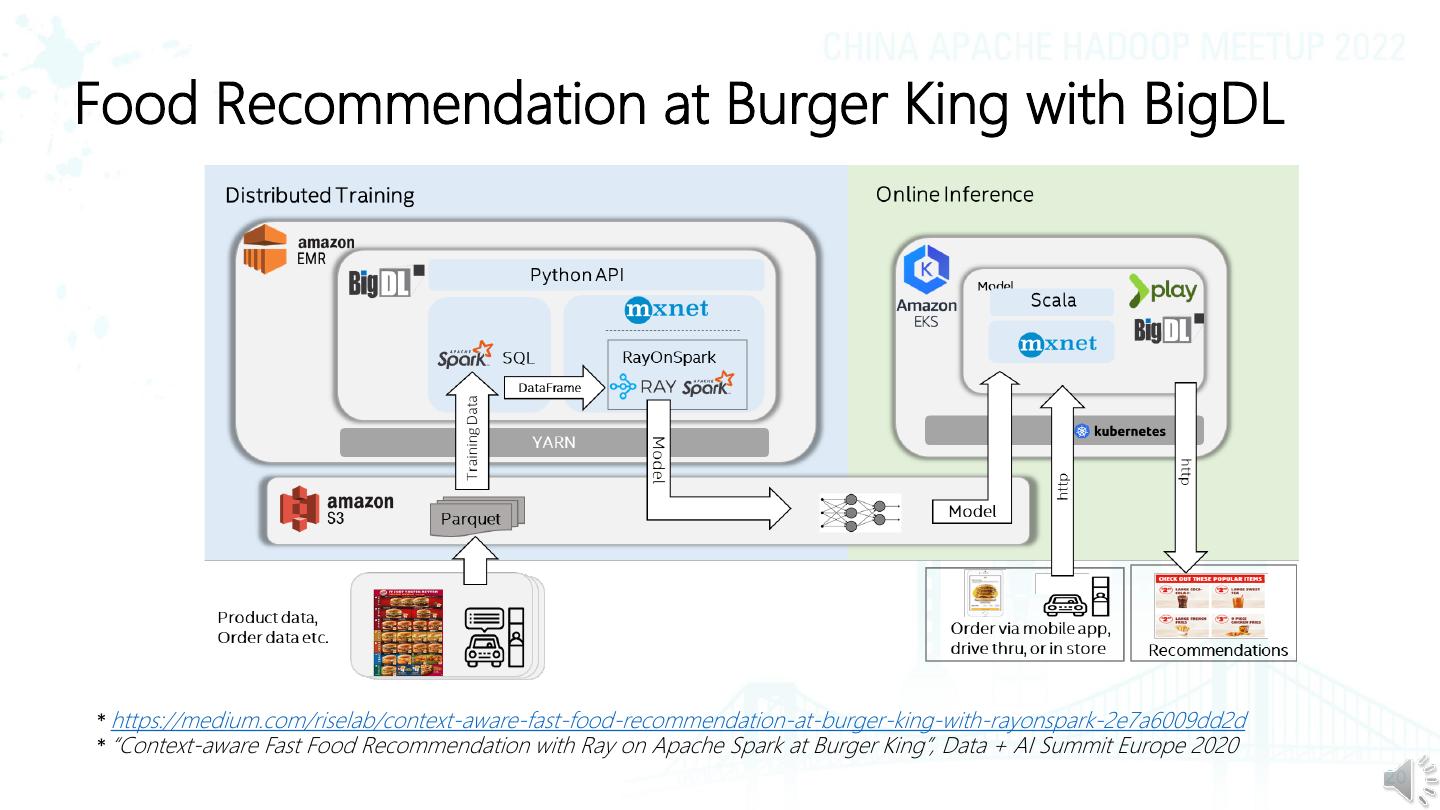

20 .Food Recommendation at Burger King with BigDL * https://medium.com/riselab/context-aware-fast-food-recommendation-at-burger-king-with-rayonspark-2e7a6009dd2d * “Context-aware Fast Food Recommendation with Ray on Apache Spark at Burger King”, Data + AI Summit Europe 2020

21 .Summary
BigDL: Building Large-Scale AI Applications for Distributed Big Data
o E2E distributed AI pipelines (seamless scaling from laptop to cluster)
o Domain-specific AI toolkits (PPML, Time Series, Recommender)
o 40+ real-world customer use cases
• Project repo: https://github.com/intel-analytics/BigDL
• Use case: https://bigdl.readthedocs.io/en/latest/doc/Application/powered-by.html
• Talks: https://bigdl.readthedocs.io/en/latest/doc/Application/presentations.html
• BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster: https://arxiv.org/abs/2204.01715
• ACM SoCC 2019 paper: https://arxiv.org/abs/1804.05839
