eBay Hadoop Cluster Optimization and Hadoop 3.3 Upgrade in Practice - Yiqun Lin (林意群)

Yiqun Lin (林意群), eBay big data engineer, Apache Hadoop PMC member

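Big data engineer at eBay, Apache Hadoop PMC member and Apache Ozone PMC member, with many years of experience in open-source communities. He currently focuses on performance optimization of eBay's HDFS clusters and is the author of the book 《深度剖析Hadoop HDFS》 (In-Depth Analysis of Hadoop HDFS).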

About the talk:

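At eBay, we mainly run Hadoop 2.7 as our stable internal version. As the business grew, we made many optimizations and improvements to Hadoop 2.7. However, the community iterated quickly, and our version fell far behind the latest community release, 3.3, so we could not use many of the new features in Hadoop 3.x. In addition, eBay has strict security and compliance requirements, and Hadoop 2.7 is affected by many CVEs; upgrading to 3.3 helps us resolve them. This talk covers some of the optimizations we made on Hadoop 2.7, and our experience and solutions for smoothly upgrading eBay's Hadoop clusters from 2.7 to 3.3.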


1. eBay Hadoop optimization and 3.3 upgrade
Yiqun Lin, eBay Software Engineer

2. Agenda
- 01 Background and Challenge
- 02 Data migration with HDFS RBF
- 03 Hadoop 3.3 Rolling Upgrade

3. Part 01: Background and Challenge

4. Background and Challenge
- Data scale growth
- Security issues
- Outdated Hadoop version

5. Background and Challenge
- Data migration with HDFS RBF
  - Migrate and balance data across clusters
  - Transparent to customers, with no impact on them
  - Automated, with few manual operations
- Hadoop 3.3 Rolling Upgrade
  - Catch up with the latest community Hadoop version
  - No downtime during the version upgrade
  - API and protocol compatibility

6. Part 02: Data migration with HDFS RBF

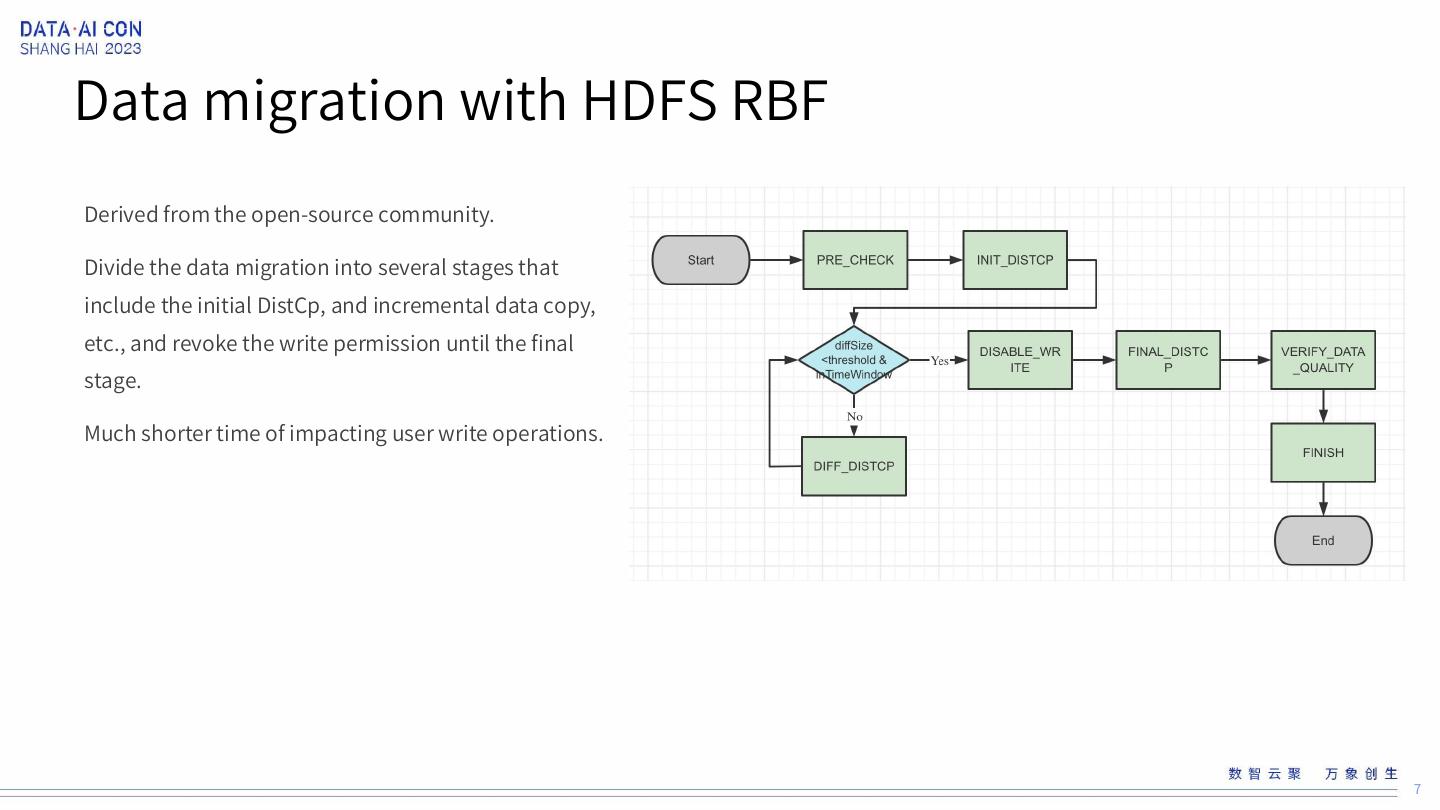

7. Data migration with HDFS RBF
- Derived from the open-source community.
- Divides the data migration into several stages, including the initial DistCp and incremental data copies, and does not revoke write permission until the final stage.
- As a result, user write operations are affected for a much shorter time.

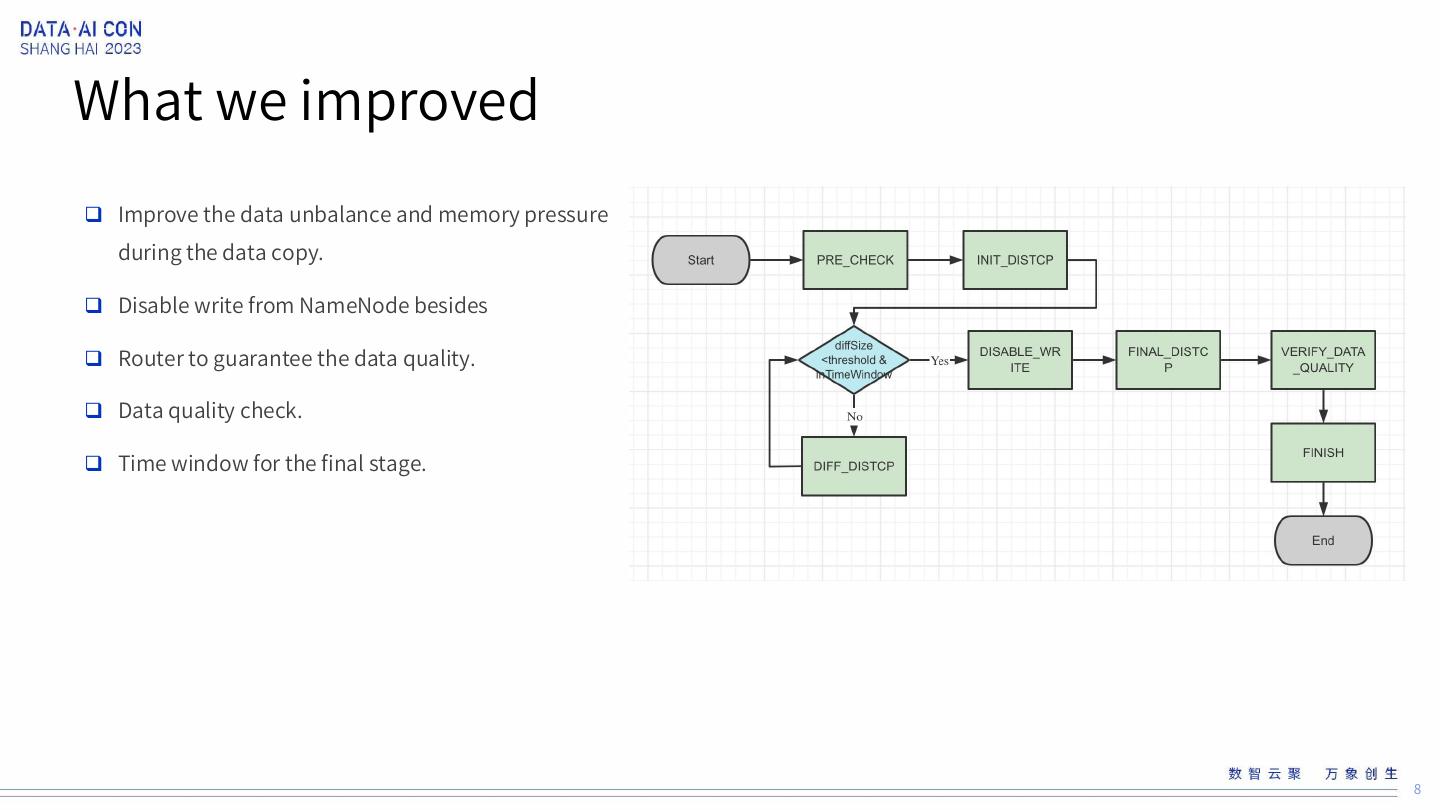
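The staged approach can be pictured as a small state machine. The following is a minimal sketch, not the community or eBay tool. The stage names, the "stop incremental rounds once the diff is small" rule, and the idea that writes resume after the Router mount entry is repointed are all assumptions made for illustration. It shows why the write impact is short: writes stay open through the bulk copy and every incremental round, and are blocked only for the final copy.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stage names; they illustrate the staged approach, not a real tool's API.
enum Stage { INITIAL_DISTCP, INCREMENTAL_COPY, FINAL_COPY, DONE }

class StagedMigration {
    // Writes stay open during the bulk and incremental copies and are revoked
    // only for the final copy. After DONE, writes resume against the new
    // location (assumed here to happen by repointing the Router mount entry).
    static boolean writesAllowed(Stage stage) {
        return stage != Stage.FINAL_COPY;
    }

    // Builds the stage sequence from the size (bytes) of the diff copied in each
    // incremental round. Rounds stop once a diff falls below the threshold or the
    // observed rounds run out (an assumed stopping rule).
    static List<Stage> plan(long[] diffSizes, long threshold) {
        List<Stage> stages = new ArrayList<>();
        stages.add(Stage.INITIAL_DISTCP);
        for (long diff : diffSizes) {
            stages.add(Stage.INCREMENTAL_COPY);
            if (diff < threshold) {
                break;
            }
        }
        stages.add(Stage.FINAL_COPY);
        stages.add(Stage.DONE);
        return stages;
    }

    public static void main(String[] args) {
        List<Stage> stages = plan(new long[] {500_000, 40_000, 2_000}, 10_000);
        for (Stage s : stages) {
            System.out.println(s + " writesAllowed=" + writesAllowed(s));
        }
    }
}
```

The smaller the last incremental diff, the shorter the final locked copy. That is the reason for looping on incremental rounds rather than locking right after the initial DistCp.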

8. What we improved
- Reduced data imbalance and memory pressure during the data copy.
- Disabled writes that go directly to the NameNode (allowing them only through the Router) to guarantee data quality.
- Data quality check.
- A time window for the final stage.

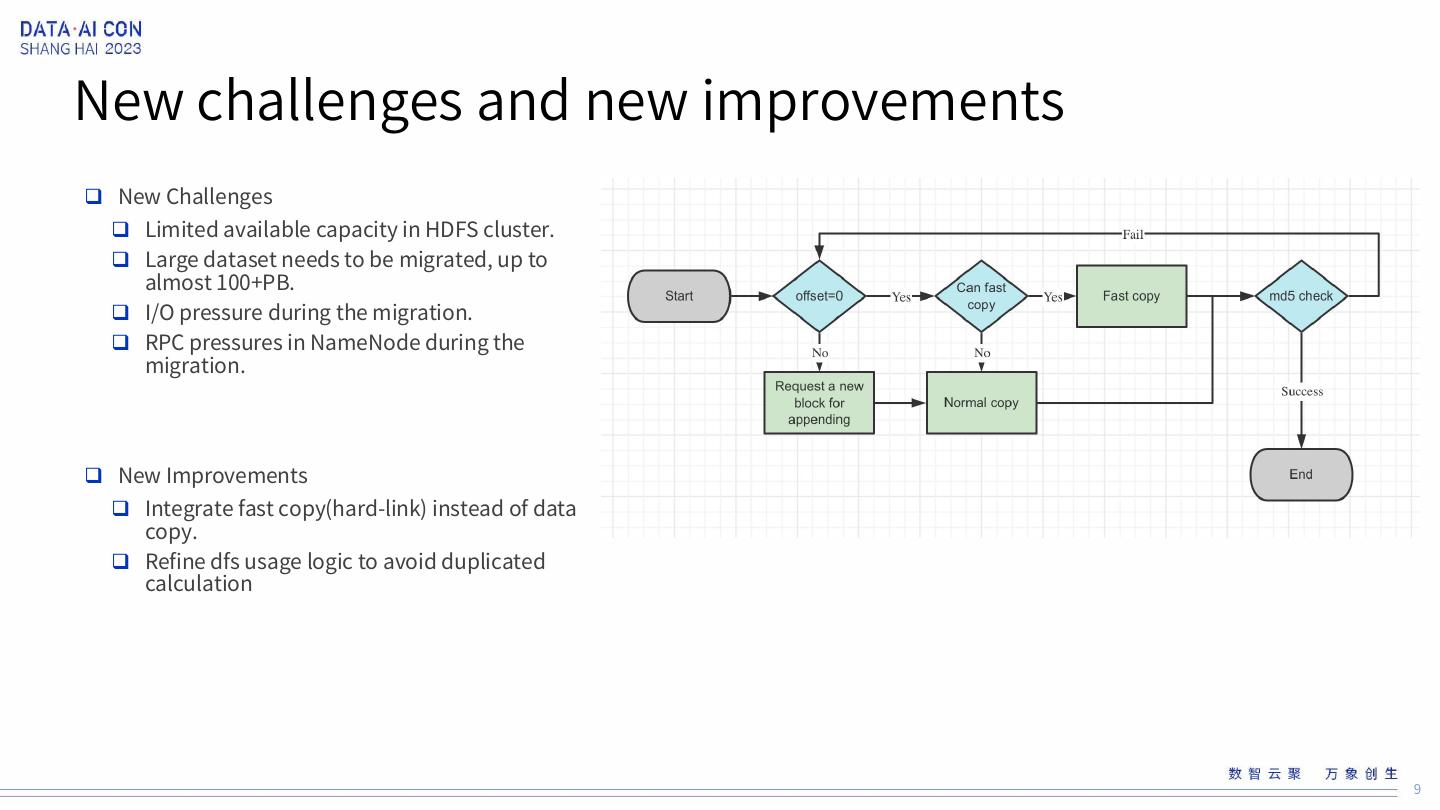
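A data quality check can be as simple as comparing per-file fingerprints on both sides after the final copy. Below is a minimal sketch, assuming each side has already been listed into a map from relative path to a "length:checksum" string (a hypothetical format). In HDFS such a fingerprint might be built from a listing plus `FileSystem#getFileChecksum`, but how eBay's check gathers it is not stated. Any path that is missing on either side or differs is reported.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

class QualityCheck {
    // Returns every path whose fingerprint differs between source and destination,
    // including paths present on only one side. TreeSet keeps the output sorted.
    static List<String> mismatches(Map<String, String> src, Map<String, String> dst) {
        TreeSet<String> allPaths = new TreeSet<>(src.keySet());
        allPaths.addAll(dst.keySet());
        List<String> bad = new ArrayList<>();
        for (String path : allPaths) {
            String a = src.get(path);
            String b = dst.get(path);
            if (a == null || !a.equals(b)) {
                bad.add(path);
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        Map<String, String> src = Map.of("/a", "10:x", "/b", "20:y");
        Map<String, String> dst = Map.of("/a", "10:x", "/b", "20:z", "/c", "1:w");
        System.out.println(mismatches(src, dst));
    }
}
```

Allowing writes only through the Router is what makes such a comparison meaningful: the source cannot change behind the migration tool's back between the final copy and the check.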

9. New challenges and new improvements
New challenges:
- Limited available capacity in the HDFS cluster.
- Large datasets to migrate, up to 100+ PB.
- I/O pressure during the migration.
- RPC pressure on the NameNode during the migration.
New improvements:
- Integrate fast copy (hard links) instead of copying data.
- Refine the DFS usage logic to avoid duplicated calculation.

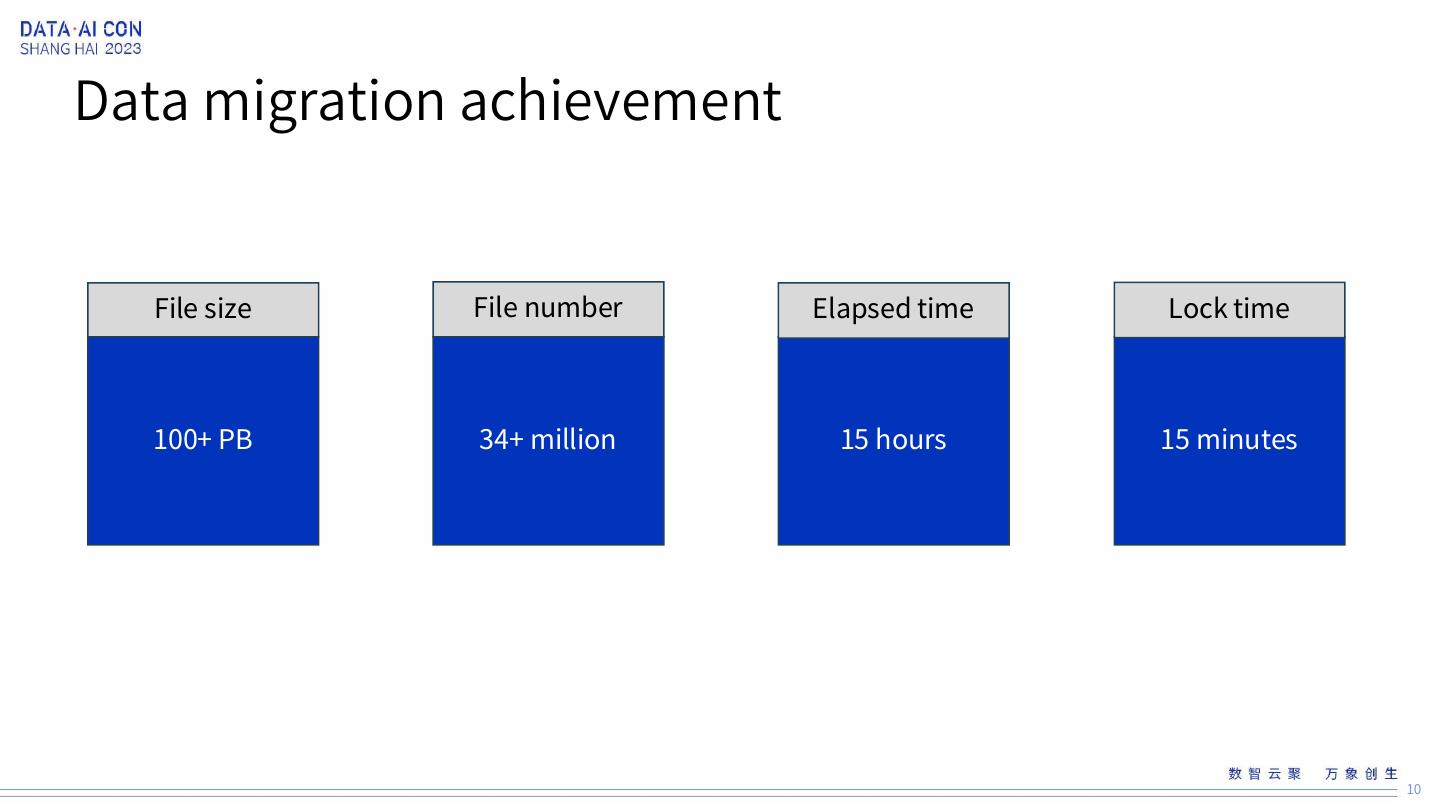
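The two improvements are linked. A hard link shares the existing data instead of copying it, so usage accounting that sums the size of every path counts shared data twice. The sketch below uses a local POSIX filesystem (`java.nio.file`) purely as an analogy; HDFS fast copy is eBay-internal and its API is not shown in the slides. Usage is deduplicated by `BasicFileAttributes#fileKey()`, which on Unix-like systems identifies the underlying inode and may be `null` elsewhere.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class FastCopyDemo {
    // Naive usage: sums the size of every path, so hard links are counted twice.
    static long naiveUsage(List<Path> paths) throws IOException {
        long total = 0;
        for (Path p : paths) {
            total += Files.size(p);
        }
        return total;
    }

    // Deduplicated usage: counts each underlying file once, keyed by fileKey().
    // Files without a file key (platform-dependent) are counted as-is.
    static long dedupedUsage(List<Path> paths) throws IOException {
        Set<Object> seen = new HashSet<>();
        long total = 0;
        for (Path p : paths) {
            BasicFileAttributes attrs = Files.readAttributes(p, BasicFileAttributes.class);
            Object key = attrs.fileKey();
            if (key == null || seen.add(key)) {
                total += attrs.size();
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("fastcopy");
        Path src = Files.write(dir.resolve("src.dat"), new byte[1024]);
        // "Fast copy": the link shares the data, so no bytes are read or written.
        Path link = Files.createLink(dir.resolve("dst.dat"), src);
        List<Path> both = List.of(src, link);
        System.out.println("naive=" + naiveUsage(both) + " deduped=" + dedupedUsage(both));
    }
}
```

On Linux, the naive sum reports twice the real usage, while the deduplicated sum reports the 1 KB actually stored.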

10. Data migration achievement

| Data size | Number of files | Elapsed time | Lock time |
|---|---|---|---|
| 100+ PB | 34+ million | 15 hours | 15 minutes |
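A rough reading of these figures helps put them in context. It treats the "+" values as lower bounds, takes 1 PB as 10^15 bytes, and assumes the 15-minute lock falls within the 15-hour elapsed time:

$$\frac{34 \times 10^{6}\ \text{files}}{15 \times 3600\ \text{s}} \approx 630\ \text{files/s}, \qquad \frac{15\ \text{min}}{15 \times 60\ \text{min}} = \frac{1}{60} \approx 1.7\%$$

$$\frac{100 \times 10^{15}\ \text{B}}{54\,000\ \text{s}} \approx 1.85 \times 10^{12}\ \text{B/s}$$

So writes were blocked for under 2% of the migration window. Copying 100 PB byte for byte within 15 hours would have needed roughly 1.85 TB/s of sustained throughput, which suggests why hard-link fast copy matters at this scale.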

11. Part 03: Hadoop 3.3 Rolling Upgrade

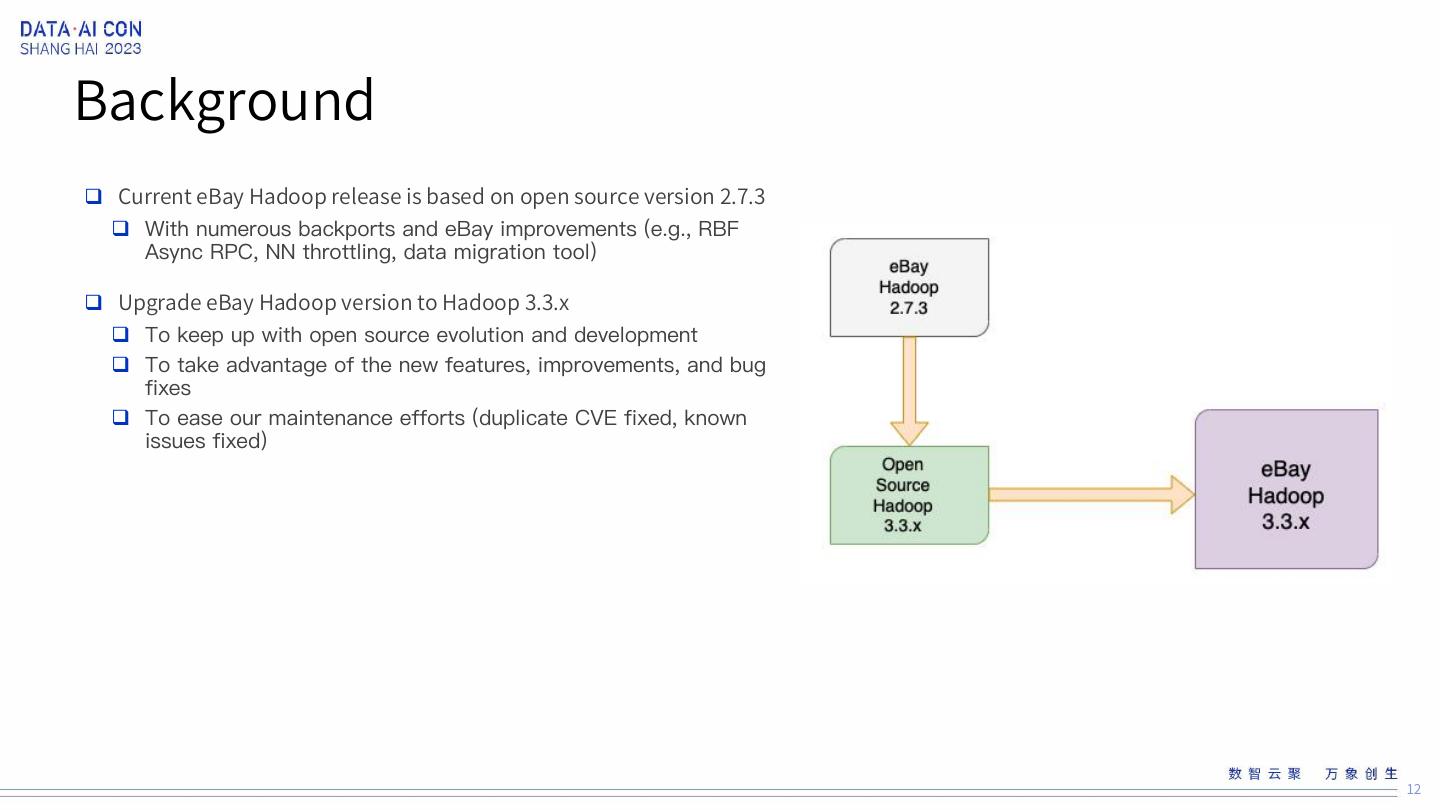

12. Background
- The current eBay Hadoop release is based on open-source version 2.7.3, with numerous backports and eBay improvements (e.g., RBF Async RPC, NN throttling, data migration tool).
- Upgrade the eBay Hadoop version to Hadoop 3.3.x:
  - To keep up with open-source evolution and development
  - To take advantage of new features, improvements, and bug fixes
  - To ease our maintenance effort (no duplicated CVE fixes; known issues already fixed upstream)

13. Upgrade strategy and plan
Our ultimate goal: no customer impact.
- The cluster is always available: rolling upgrade of the cluster.
- Separate the cluster upgrade from the customer application upgrade: dynamic Hadoop runtime on the cluster.
- Keep Hadoop3 and Hadoop2 API- and protocol-compatible: review and fix all incompatible changes.
- Hadoop ecosystem component services must work with Hadoop3, including Spark, MapReduce, Hive, and HBase applications and services.

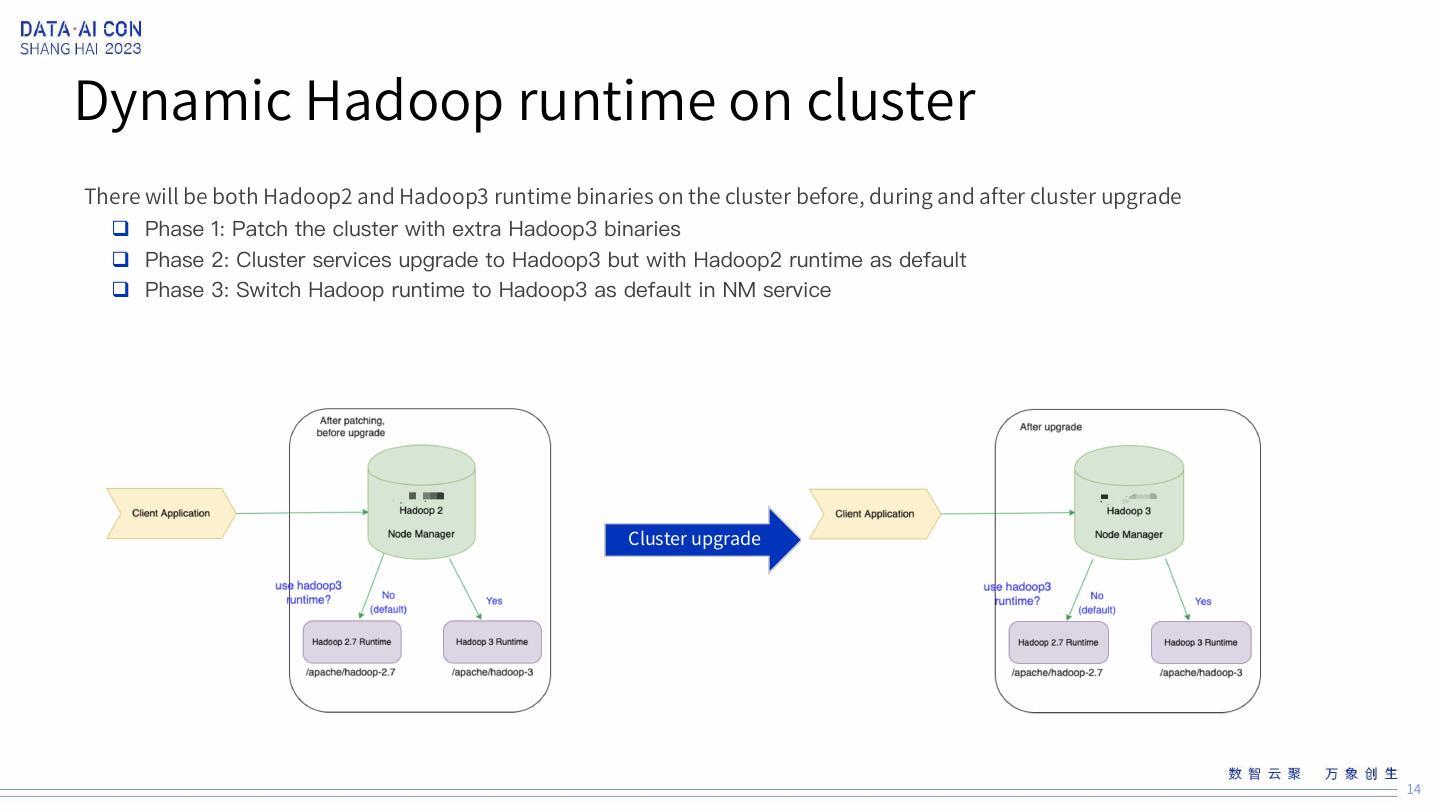

14. Dynamic Hadoop runtime on cluster
Both Hadoop2 and Hadoop3 runtime binaries are present on the cluster before, during, and after the cluster upgrade.
- Phase 1: Patch the cluster with additional Hadoop3 binaries.
- Phase 2: Upgrade cluster services to Hadoop3, but keep Hadoop2 as the default runtime.
- Phase 3: Switch the default Hadoop runtime in the NM service to Hadoop3.

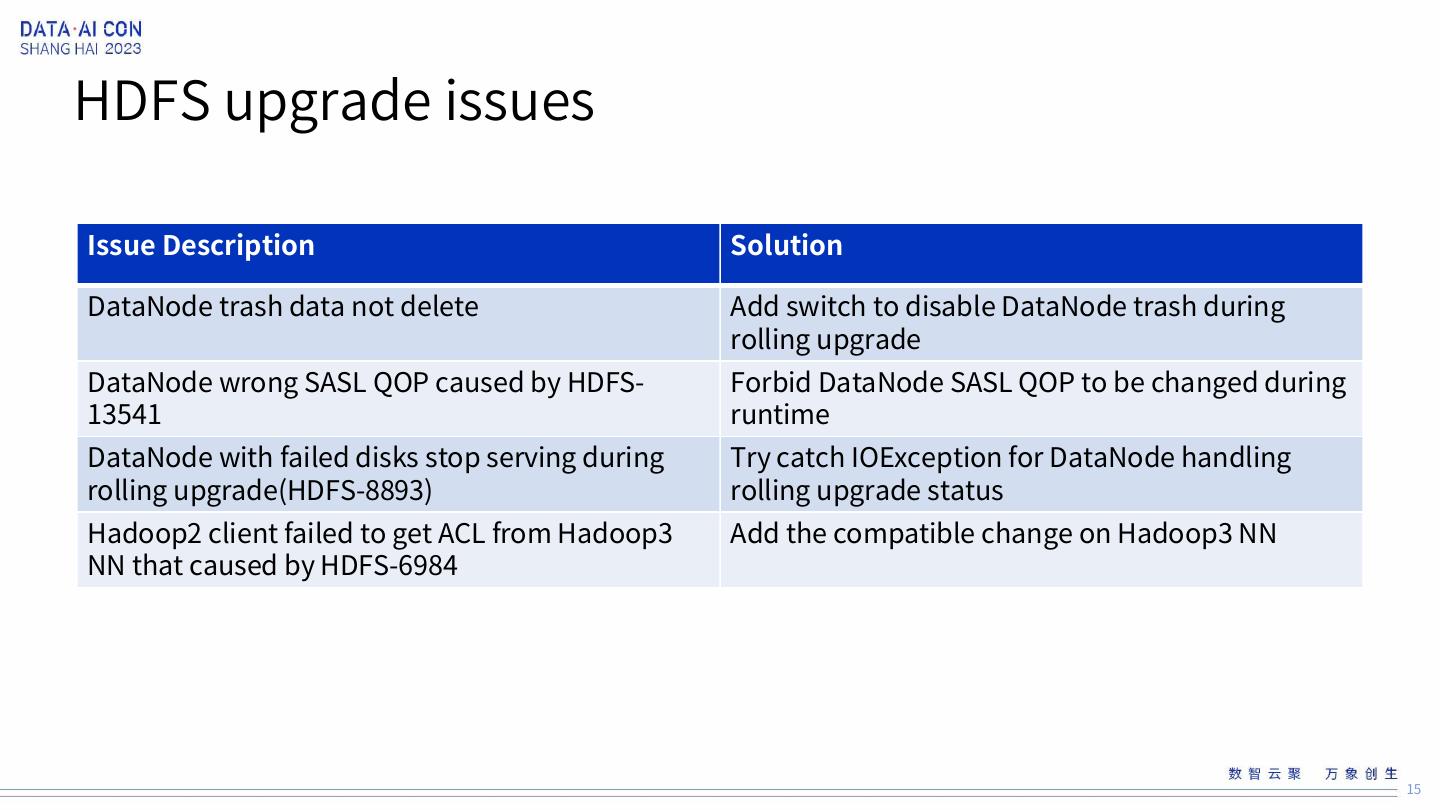
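The three phases can be modeled as "which runtime does a container get by default, and can an application override it". This is a hypothetical sketch: the slides do not say how an application selects a runtime, so the `requested` parameter stands in for whatever per-application setting eBay uses. The names `HadoopRuntime`, `RolloutPhase` and `RuntimeSelector` are illustrative.

```java
// Hypothetical model; the slides do not describe how an application selects a runtime.
enum HadoopRuntime { HADOOP2, HADOOP3 }

enum RolloutPhase {
    // Phase 1: Hadoop3 binaries are added next to Hadoop2; nothing else changes.
    PATCH_BINARIES(HadoopRuntime.HADOOP2),
    // Phase 2: cluster services run Hadoop3, but applications still default to Hadoop2.
    SERVICES_UPGRADED(HadoopRuntime.HADOOP2),
    // Phase 3: the NM default runtime is switched to Hadoop3.
    DEFAULT_SWITCHED(HadoopRuntime.HADOOP3);

    final HadoopRuntime defaultRuntime;

    RolloutPhase(HadoopRuntime defaultRuntime) {
        this.defaultRuntime = defaultRuntime;
    }
}

class RuntimeSelector {
    // Both runtimes are installed in every phase, so an explicit per-application
    // choice is always honored; otherwise the phase's NM default applies.
    static HadoopRuntime select(RolloutPhase phase, HadoopRuntime requested) {
        return requested != null ? requested : phase.defaultRuntime;
    }

    public static void main(String[] args) {
        for (RolloutPhase phase : RolloutPhase.values()) {
            System.out.println(phase + ": default=" + select(phase, null)
                + ", pinned to Hadoop2=" + select(phase, HadoopRuntime.HADOOP2));
        }
    }
}
```

Because both binaries stay installed throughout, the Phase 3 switch only changes a default. Applications that still need Hadoop2 keep working, and the switch can be reverted without reinstalling anything.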

15. HDFS upgrade issues

| Issue description | Solution |
|---|---|
| DataNode trash data is not deleted | Add a switch to disable DataNode trash during the rolling upgrade |
| Wrong DataNode SASL QOP caused by HDFS-13541 | Forbid the DataNode SASL QOP from being changed at runtime |
| DataNode with failed disks stops serving during rolling upgrade (HDFS-8893) | Catch the IOException in the DataNode's handling of the rolling upgrade status |
| Hadoop2 client fails to get ACLs from a Hadoop3 NN, caused by HDFS-6984 | Add a compatibility change on the Hadoop3 NN |

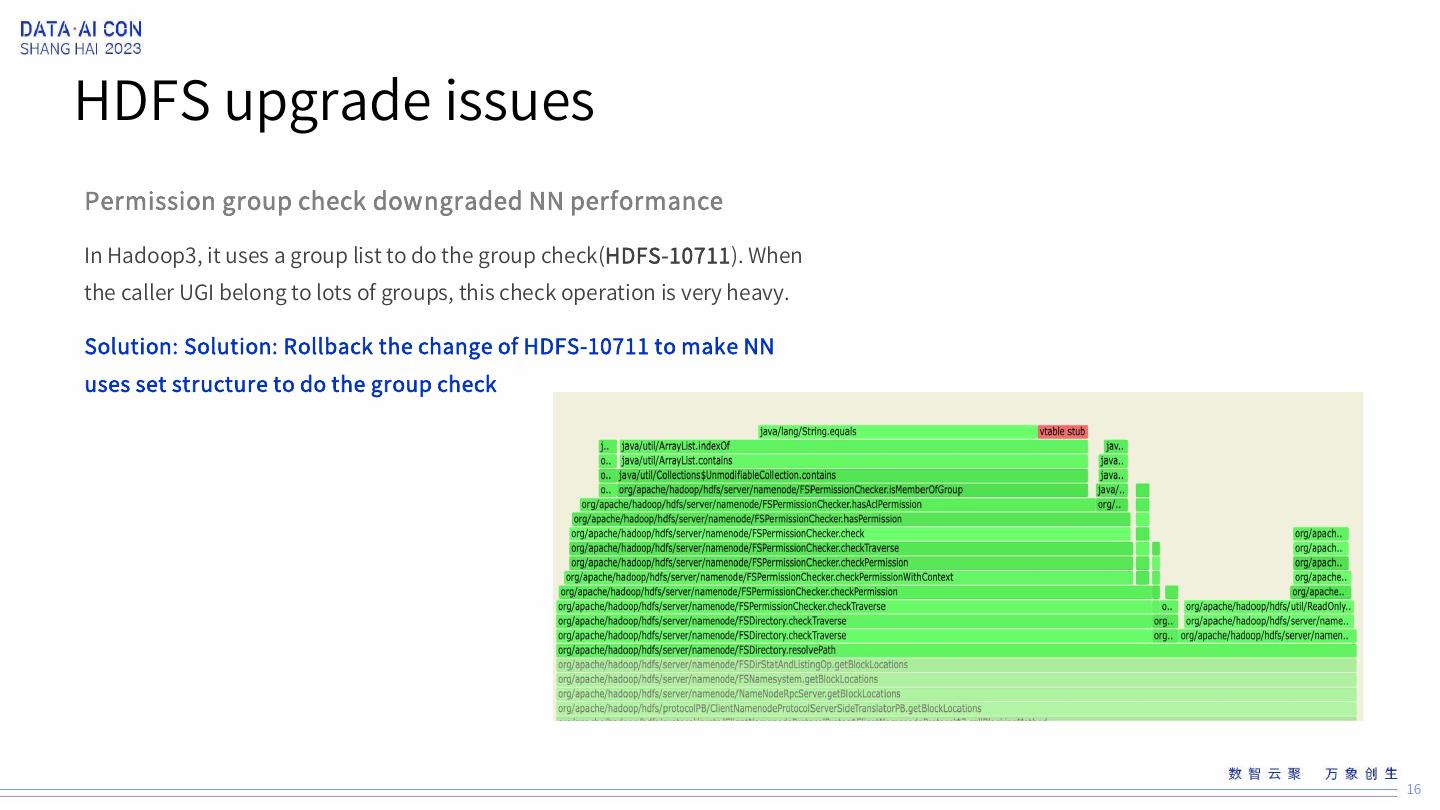
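The HDFS-8893 fix is described only as "try catch IOException". The sketch below shows the general shape of that pattern: per-volume work in which one failed disk is caught and recorded rather than aborting the whole operation. `Volume`, `SimpleVolume` and `applyRollingUpgradeStatus` are hypothetical stand-ins, not the DataNode's real interfaces, and we do not know how eBay's actual patch is structured.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical volume abstraction; this is not the DataNode's FsVolumeSpi API.
interface Volume {
    String name();
    void applyRollingUpgradeStatus() throws IOException;
}

// Test double: a failed disk throws on any I/O.
class SimpleVolume implements Volume {
    private final String name;
    private final boolean healthy;

    SimpleVolume(String name, boolean healthy) {
        this.name = name;
        this.healthy = healthy;
    }

    public String name() {
        return name;
    }

    public void applyRollingUpgradeStatus() throws IOException {
        if (!healthy) {
            throw new IOException("I/O error on failed disk " + name);
        }
    }
}

class RollingUpgradeStatusHandler {
    // Applies the status to every volume. An IOException from one failed disk is
    // caught and recorded instead of propagating, so the remaining volumes are
    // still processed and the caller keeps running.
    static List<String> applyToAll(List<? extends Volume> volumes) {
        List<String> failed = new ArrayList<>();
        for (Volume v : volumes) {
            try {
                v.applyRollingUpgradeStatus();
            } catch (IOException e) {
                failed.add(v.name());
            }
        }
        return failed;
    }

    public static void main(String[] args) {
        List<Volume> volumes = List.of(
            new SimpleVolume("/data1", true),
            new SimpleVolume("/data2", false),
            new SimpleVolume("/data3", true));
        System.out.println("failed volumes: " + applyToAll(volumes));
    }
}
```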

16. HDFS upgrade issues
Permission group check degraded NN performance
In Hadoop3, the group check uses a group list (HDFS-10711). When the caller UGI belongs to many groups, this check is very expensive.
Solution: Roll back the HDFS-10711 change so that the NN uses a set structure for the group check.

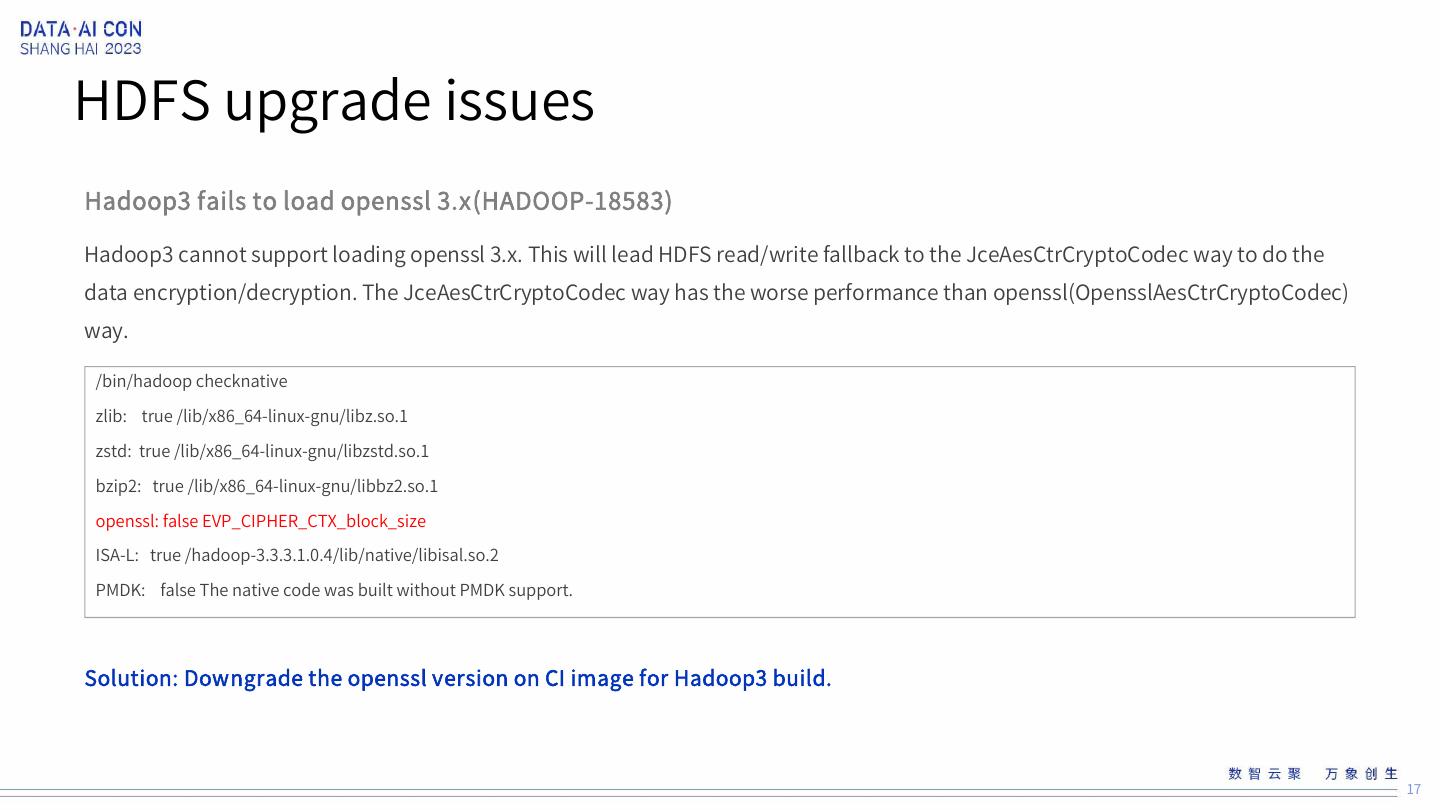
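The cost difference comes from the data structure's lookup. `List.contains` compares against each entry in turn, so a miss costs one comparison per group the caller belongs to. A `HashSet` lookup is O(1) on average. Below is a minimal illustration using plain Java collections; it is not the NameNode's `FSPermissionChecker` code, and the timing in `main` is a rough single-run measurement, not a benchmark.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class GroupMembershipCheck {
    // List-based check: O(n) in the number of caller groups.
    static boolean isMemberList(List<String> callerGroups, String fileGroup) {
        return callerGroups.contains(fileGroup);
    }

    // Set-based check: O(1) on average, independent of the number of groups.
    static boolean isMemberSet(Set<String> callerGroups, String fileGroup) {
        return callerGroups.contains(fileGroup);
    }

    public static void main(String[] args) {
        List<String> groupList = new ArrayList<>();
        for (int i = 0; i < 5_000; i++) {
            groupList.add("group-" + i);
        }
        // Build the set once per caller and reuse it for every permission check.
        Set<String> groupSet = new HashSet<>(groupList);

        int checks = 20_000;
        int hits = 0; // consumed below so the loops are not optimized away
        long t0 = System.nanoTime();
        for (int i = 0; i < checks; i++) {
            if (isMemberList(groupList, "hdfs-admins")) hits++;
        }
        long t1 = System.nanoTime();
        for (int i = 0; i < checks; i++) {
            if (isMemberSet(groupSet, "hdfs-admins")) hits++;
        }
        long t2 = System.nanoTime();
        System.out.printf("list: %d ms, set: %d ms, hits=%d%n",
            (t1 - t0) / 1_000_000, (t2 - t1) / 1_000_000, hits);
    }
}
```

Because the NN runs this check on many RPCs, the per-call O(n) scan adds up quickly for users in thousands of groups. That is the case the rollback targets.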

17. HDFS upgrade issues
Hadoop3 fails to load OpenSSL 3.x (HADOOP-18583)
Hadoop3 cannot load OpenSSL 3.x. As a result, HDFS reads and writes fall back to JceAesCtrCryptoCodec for data encryption and decryption, which performs worse than the OpenSSL-based OpensslAesCtrCryptoCodec.

```
$ /bin/hadoop checknative
zlib: true /lib/x86_64-linux-gnu/libz.so.1
zstd: true /lib/x86_64-linux-gnu/libzstd.so.1
bzip2: true /lib/x86_64-linux-gnu/libbz2.so.1
openssl: false EVP_CIPHER_CTX_block_size
ISA-L: true /hadoop-3.3.3.1.0.4/lib/native/libisal.so.2
PMDK: false The native code was built without PMDK support.
```

Solution: Downgrade the OpenSSL version in the CI image used for the Hadoop3 build.

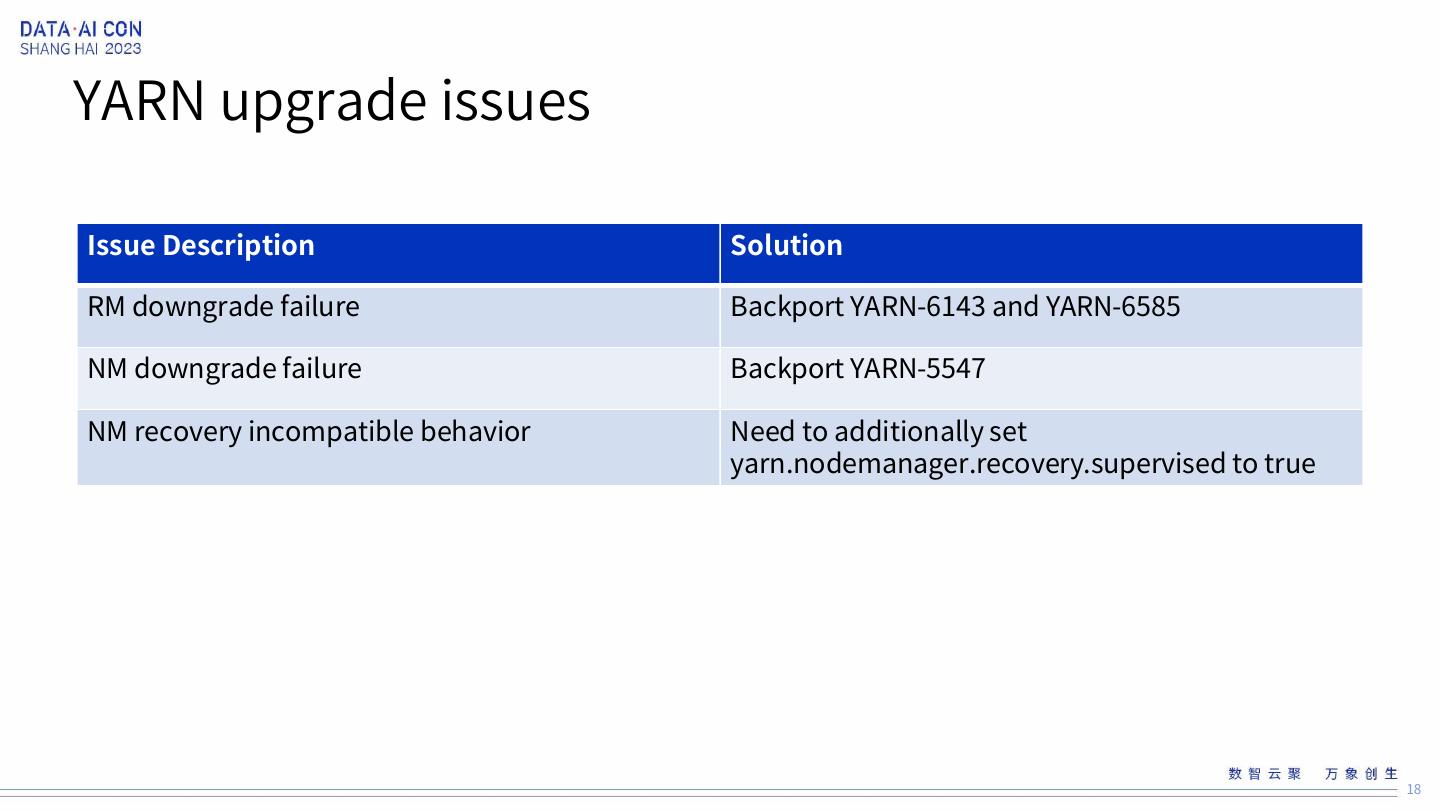
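Because the fallback to JceAesCtrCryptoCodec is silent, a build or deploy pipeline could guard against it by checking `hadoop checknative` output. The sketch below parses lines in the "name: true|false ..." shape shown on the slide into a map. Wiring it into CI, and treating `openssl: false` as a failure, are our assumptions rather than part of the described solution.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class CheckNativeParser {
    // Parses lines like "openssl: false EVP_CIPHER_CTX_block_size" into name -> loaded.
    // Lines without a true/false value after the first colon (headers, commands) are skipped.
    static Map<String, Boolean> parse(String output) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String line : output.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon <= 0) {
                continue;
            }
            String name = line.substring(0, colon).trim();
            String rest = line.substring(colon + 1).trim();
            if (rest.startsWith("true")) {
                result.put(name, true);
            } else if (rest.startsWith("false")) {
                result.put(name, false);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
            "zlib: true /lib/x86_64-linux-gnu/libz.so.1",
            "openssl: false EVP_CIPHER_CTX_block_size",
            "ISA-L: true /hadoop-3.3.3.1.0.4/lib/native/libisal.so.2");
        Map<String, Boolean> libs = parse(sample);
        if (Boolean.FALSE.equals(libs.get("openssl"))) {
            System.out.println("openssl not loaded: encryption would fall back to JceAesCtrCryptoCodec");
        }
    }
}
```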

18. YARN upgrade issues

| Issue description | Solution |
|---|---|
| RM downgrade failure | Backport YARN-6143 and YARN-6585 |
| NM downgrade failure | Backport YARN-5547 |
| Incompatible NM recovery behavior | Additionally set yarn.nodemanager.recovery.supervised to true |

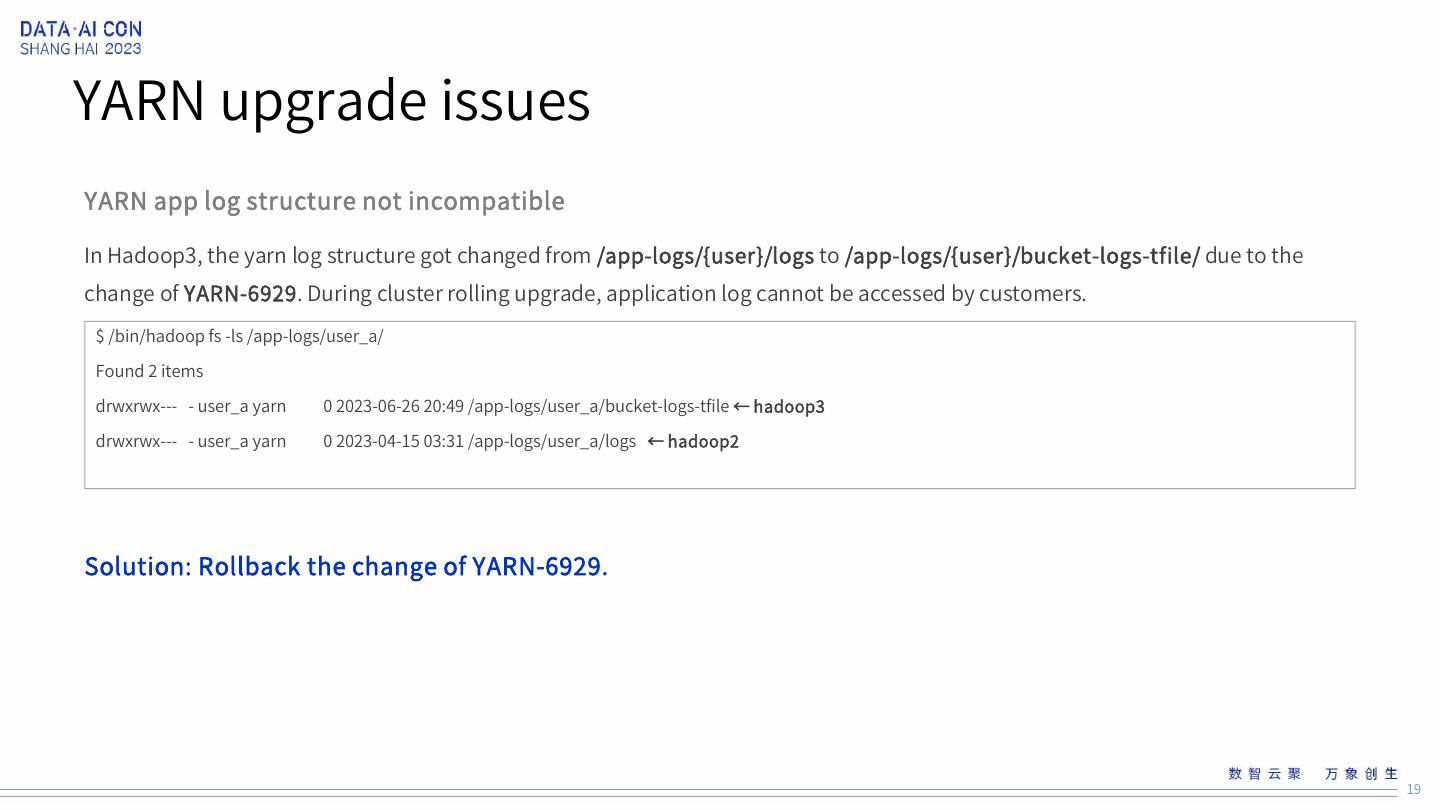
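A minimal yarn-site.xml fragment for the NM recovery item follows. Only the supervised property and its value come from the slide; the note that NM recovery is already enabled is an assumption about the surrounding configuration. With supervision declared, a recovery-enabled NM that exits does not clean up its running containers, because it expects a supervisor to restart it immediately so it can recover them. A rolling restart relies on exactly that behavior.

```xml
<!-- yarn-site.xml (sketch). Assumes yarn.nodemanager.recovery.enabled is already true. -->
<property>
  <name>yarn.nodemanager.recovery.supervised</name>
  <value>true</value>
</property>
```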

19. YARN upgrade issues
Incompatible YARN app log structure
In Hadoop3, the YARN log structure changed from /app-logs/{user}/logs to /app-logs/{user}/bucket-logs-tfile/ because of YARN-6929. During the cluster rolling upgrade, customers could not access application logs.

```
$ /bin/hadoop fs -ls /app-logs/user_a/
Found 2 items
drwxrwx--- - user_a yarn 0 2023-06-26 20:49 /app-logs/user_a/bucket-logs-tfile ← hadoop3
drwxrwx--- - user_a yarn 0 2023-04-15 03:31 /app-logs/user_a/logs ← hadoop2
```

Solution: Roll back the YARN-6929 change.

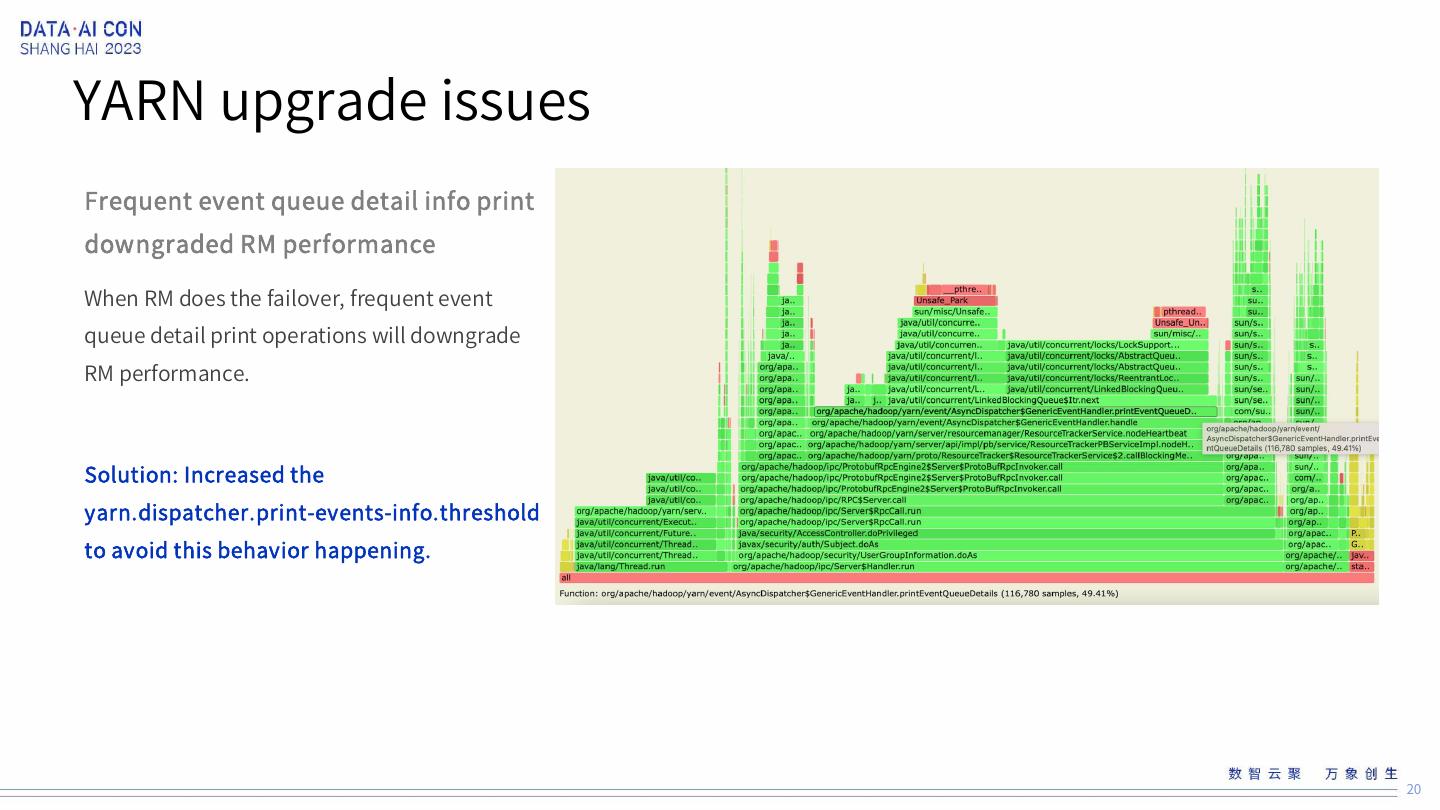

20. YARN upgrade issues
Frequent printing of event queue details degraded RM performance
During RM failover, frequently printing detailed event queue information degrades RM performance.
Solution: Increase yarn.dispatcher.print-events-info.threshold to avoid this behavior.
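Why does raising the threshold help? The sketch below is a simplified model, assuming the dispatcher prints queue details whenever the queue size reaches a non-zero multiple of the threshold. That is our reading of the behavior, not something the slide confirms. The values 5,000 and 50,000 are illustrative and are not eBay's setting.

```java
class EventQueueLogModel {
    // Assumed model: details are printed when the queue size is a non-zero multiple
    // of the threshold and differs from the size that was last printed.
    static int countPrints(int[] queueSizes, int threshold) {
        int prints = 0;
        int lastLogged = -1;
        for (int q : queueSizes) {
            if (q != 0 && q % threshold == 0 && q != lastLogged) {
                prints++;
                lastLogged = q;
            }
        }
        return prints;
    }

    public static void main(String[] args) {
        // A failover-like burst: the queue grows to 100,000 pending events.
        int[] sizes = new int[100_000];
        for (int i = 0; i < sizes.length; i++) {
            sizes[i] = i + 1;
        }
        System.out.println("threshold 5000: " + countPrints(sizes, 5_000) + " prints");
        System.out.println("threshold 50000: " + countPrints(sizes, 50_000) + " prints");
    }
}
```

Under this model, a tenfold larger threshold means a tenfold smaller number of expensive detail dumps during the same burst (20 versus 2 here).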

21. MapReduce job upgrade issues
TaskUmbilicalProtocol version mismatch error
During a partial cluster upgrade, MR tasks on Hadoop3 nodes throw the error below because they are not compatible with the Hadoop2 protocol.

```
2022-10-27 00:44:39,721 WARN [main] org.apache.hadoop.mapred.YarnChild: Exception running child : org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.RPC$VersionMismatch): Protocol org.apache.hadoop.mapred.TaskUmbilicalProtocol version mismatch. (client = 21, server = 19)
    at org.apache.hadoop.ipc.WritableRpcEngine$Server$WritableRpcInvoker.call(WritableRpcEngine.java:521)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:993)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:981)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:918)
    at java.security.AccessController.doPrivileged(Native Method)
```

Solution: The NM dynamic runtime feature lets the application run in a consistent runtime.
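The mismatch arises because the task (the RPC client) and the ApplicationMaster (the server) come from different runtimes. The sketch below models the fix under stated assumptions. The version numbers are read off the slide's error (a Hadoop3 task reporting client = 21 against a Hadoop2 server at 19). `TaskRuntime`, `containerRuntime` and the exact-match check are simplifications, not the real `RPC` implementation.

```java
// Versions taken from the slide's error message (client = 21, server = 19).
enum TaskRuntime {
    HADOOP2(19), HADOOP3(21);

    final long umbilicalVersion;

    TaskRuntime(long umbilicalVersion) {
        this.umbilicalVersion = umbilicalVersion;
    }
}

class UmbilicalCompat {
    // Simplified handshake: the call is rejected when the versions differ.
    static void checkVersion(TaskRuntime task, TaskRuntime appMaster) {
        if (task.umbilicalVersion != appMaster.umbilicalVersion) {
            throw new IllegalStateException("TaskUmbilicalProtocol version mismatch. (client = "
                + task.umbilicalVersion + ", server = " + appMaster.umbilicalVersion + ")");
        }
    }

    // Without a dynamic runtime, a container uses its node's installed version;
    // with it, every container of the application uses the application's runtime.
    static TaskRuntime containerRuntime(TaskRuntime appRuntime, TaskRuntime nodeRuntime, boolean dynamic) {
        return dynamic ? appRuntime : nodeRuntime;
    }

    public static void main(String[] args) {
        // The AM runs on a Hadoop2 node; a task lands on an already upgraded Hadoop3 node.
        for (boolean dynamic : new boolean[] {false, true}) {
            TaskRuntime am = containerRuntime(TaskRuntime.HADOOP2, TaskRuntime.HADOOP2, dynamic);
            TaskRuntime task = containerRuntime(TaskRuntime.HADOOP2, TaskRuntime.HADOOP3, dynamic);
            try {
                checkVersion(task, am);
                System.out.println("dynamic=" + dynamic + ": OK");
            } catch (IllegalStateException e) {
                System.out.println("dynamic=" + dynamic + ": " + e.getMessage());
            }
        }
    }
}
```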

22. MapReduce job upgrade issues
DistCp job fails due to an incompatibility
Because the listing sequence file generated by a Hadoop3 client is incompatible (HADOOP-11794), a DistCp job may fail on the Hadoop2 runtime. We need to make sure DistCp runs on a consistent runtime.
MR job fails with "FileNotFoundException: File does not exist: hdfs:/xxxx/.staging/job_xxxx/libjars/*"
After the incompatible change in MAPREDUCE-6719, the MR libjars path becomes libjars/* rather than libjars/xx.jar, which Hadoop2 cannot recognize.
Solution: Set mapreduce.client.libjars.wildcard=false to disable this incompatible behavior.
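Below is a mapred-site.xml fragment for the libjars fix. The property name and value come from the slide. Setting it cluster-wide in the client configuration is our assumption; for jobs launched through `ToolRunner`, the same setting can also be passed per job as a generic option (`-D mapreduce.client.libjars.wildcard=false`).

```xml
<!-- mapred-site.xml (client side, sketch): stage libjars as individual jar paths
     instead of libjars/*, which Hadoop2 cannot resolve. -->
<property>
  <name>mapreduce.client.libjars.wildcard</name>
  <value>false</value>
</property>
```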

23. Hive upgrade issue
Hive shims cannot recognize the Hadoop3 version

```
Caused by: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.3.3.1.0.3
    at org.apache.hadoop.hive.shims.ShimLoader.getMajorVersion(ShimLoader.java:178)
    at org.apache.hadoop.hive.shims.ShimLoader.loadShims(ShimLoader.java:143)
    at org.apache.hadoop.hive.shims.ShimLoader.getHadoopShims(ShimLoader.java:102)
```

Solution: Backport the HIVE-16081 fix so that older Hive clients can work with Hadoop3.
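The failure comes from version-string dispatch: the loader extracts the major version and only knows the values it was written for. The sketch below is a simplified model of that dispatch, not Hive's `ShimLoader`. The shim labels are placeholders, and it assumes the backported fix maps major version 3 onto the shims already used for Hadoop 2.

```java
class ShimVersion {
    // Simplified dispatch on the Hadoop major version. An old loader (hadoop3Aware = false)
    // rejects "3.x" strings such as the slide's "3.3.3.1.0.3".
    static String shimFor(String hadoopVersion, boolean hadoop3Aware) {
        int major = Integer.parseInt(hadoopVersion.split("\\.")[0]);
        if (major == 1) {
            return "hadoop1-shims";
        }
        if (major == 2 || (major == 3 && hadoop3Aware)) {
            return "hadoop2-shims";
        }
        throw new IllegalArgumentException("Unrecognized Hadoop major version number: " + hadoopVersion);
    }

    public static void main(String[] args) {
        System.out.println(shimFor("3.3.3.1.0.3", true));
        try {
            shimFor("3.3.3.1.0.3", false);
        } catch (IllegalArgumentException e) {
            System.out.println("old loader: " + e.getMessage());
        }
    }
}
```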

24. Hadoop3 client upgrade tracking
Track the Hadoop3 client upgrade based on the Hadoop version info in the HDFS audit log:

```
2023-11-07 23:00:00,000 INFO FSNamesystem.audit: allowed=true ugi=xxxx ip=xxxx cmd=open src=xxxx dst=null perm=null proto=rpc version=3.3.3.1.0.18
```
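Tracking then reduces to extracting the `version=` field from each audit line and aggregating. The sketch below assumes the key=value layout of the sample line. Lines without a version field are counted as "unknown", since the slide does not say what older clients log. It counts calls; grouping by `ugi` or `ip` instead would count distinct clients.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class AuditVersionTracker {
    // Matches "version=<value>" as a standalone key in the audit line.
    private static final Pattern VERSION = Pattern.compile("\\bversion=(\\S+)");

    // Counts audit entries per client major version ("hadoop2", "hadoop3", "unknown").
    static Map<String, Integer> countByMajor(List<String> lines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            Matcher m = VERSION.matcher(line);
            String key = m.find() ? "hadoop" + m.group(1).split("\\.")[0] : "unknown";
            counts.merge(key, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
            "2023-11-07 23:00:00,000 INFO FSNamesystem.audit: allowed=true ugi=xxxx ip=xxxx "
                + "cmd=open src=xxxx dst=null perm=null proto=rpc version=3.3.3.1.0.18",
            "allowed=true cmd=open proto=rpc");
        System.out.println(countByMajor(lines));
    }
}
```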
