
Leveraging Kernel Tables with XDP

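XDP is a framework for running BPF programs in the NIC driver, allowing decisions about a received packet to be made at the earliest point in the Linux networking stack. For the most part, BPF programs rely on maps to drive packet decisions, with the maps managed, for example, by a userspace agent. This architecture has implications for how the system is configured, monitored and debugged. An alternative approach is to make the kernel networking tables accessible to BPF programs. This approach allows the standard Linux APIs and tools to manage networking configuration and state while still achieving the higher performance that XDP provides. One example of providing access to kernel tables is the recently added helper that allows IPv4 and IPv6 FIB (and nexthop) lookups in XDP programs. Routing suites such as FRR manage the FIB tables, and the XDP packet path benefits by automatically adapting to FIB updates in real time. While a huge first step, a FIB lookup alone is not sufficient for general networking deployments.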

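This talk discusses making kernel tables available to XDP programs to create a programmable packet pipeline, which features had been implemented as of October 2018, the key missing features, and current challenges.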


1. Leveraging Kernel Tables with XDP
Linux Plumbers Conference, November 2018
David Ahern | Cumulus Networks

2. Is XDP Really Different than DPDK?

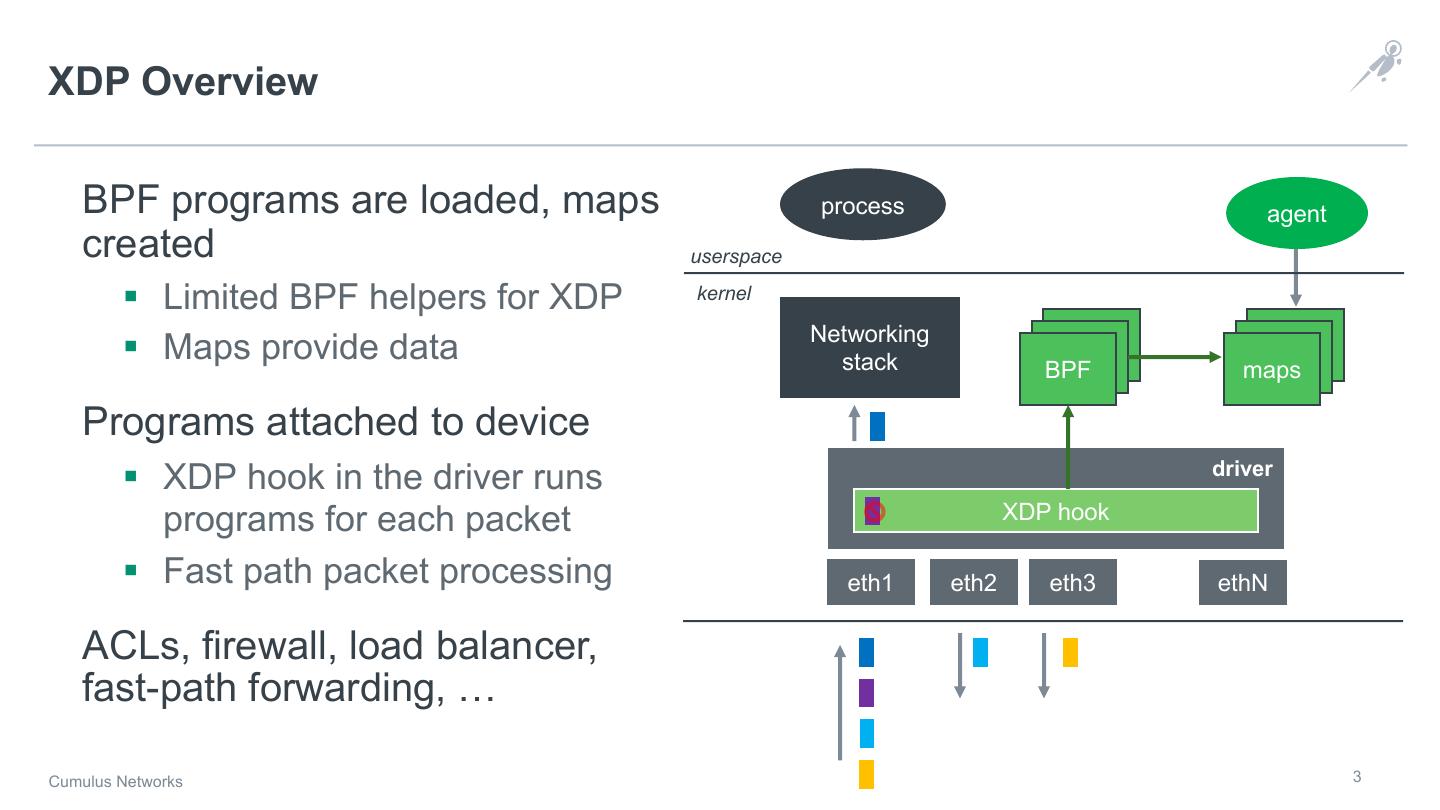

3. XDP Overview
- BPF programs are loaded and maps are created by a userspace process or agent
  - Limited BPF helpers for XDP
  - Maps provide data
- Programs are attached to the device driver
  - XDP hook in the driver runs programs for each packet
  - Fast path packet processing: ACLs, firewall, load balancer, fast-path forwarding, …

[Diagram: userspace process/agent; kernel networking stack and BPF maps; XDP hook in the driver; ports eth1, eth2, eth3 … ethN]

4. Focus Discussion on L2 / L3 Forwarding in XDP

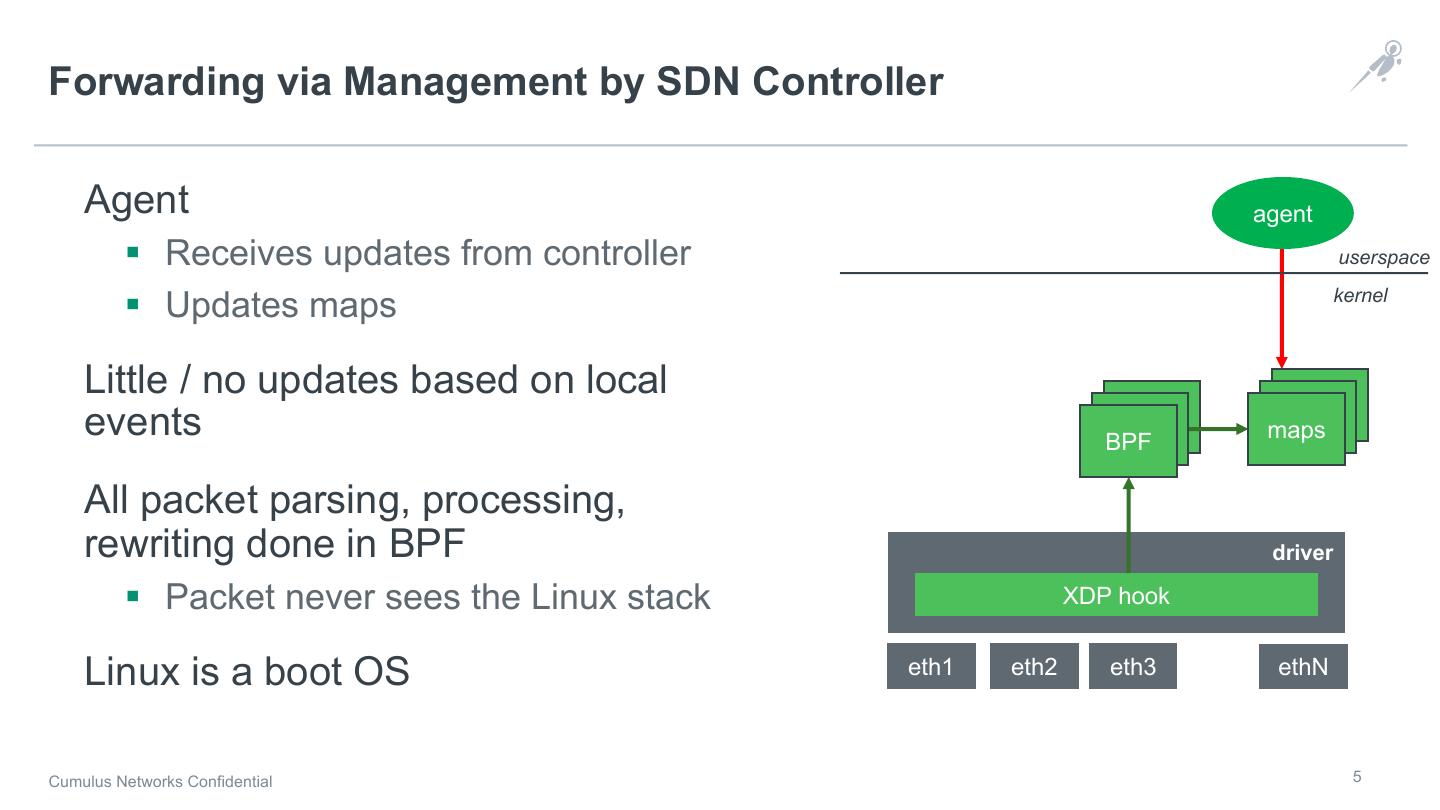

5. Forwarding via Management by SDN Controller
- Agent
  - Receives updates from controller
  - Updates maps
- Little / no updates based on local events
- All packet parsing, processing and rewriting done in BPF
  - Packet never sees the Linux stack
- Linux is a boot OS

[Diagram: agent in userspace; BPF maps in the kernel; XDP hook in the driver; ports eth1, eth2, eth3 … ethN]
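A minimal userspace model of this map-driven datapath, written in C but not as a BPF program, shows how every forwarding decision comes from state the agent writes. The verdict names mirror the kernel's `enum xdp_action`; the map layout and the `fwd_map`, `map_update` and `xdp_fwd_model` names are hypothetical, and a linear scan stands in for a BPF hash map.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Verdicts named after the kernel's enum xdp_action (same order/values). */
enum xdp_verdict { XDP_ABORTED = 0, XDP_DROP, XDP_PASS, XDP_TX, XDP_REDIRECT };

#define ETH_ALEN 6
#define ETH_HLEN 14
#define MAP_SIZE 16

/* Hypothetical map entry written by the agent: dest MAC -> egress ifindex. */
struct fwd_entry {
    uint8_t dmac[ETH_ALEN];
    int egress_ifindex;
    int valid;
};

/* Hypothetical stand-in for a BPF map; a linear array replaces a hash map. */
struct fwd_map {
    struct fwd_entry entries[MAP_SIZE];
};

/* Agent side: install or update an entry, as a map update would. */
static int map_update(struct fwd_map *m, const uint8_t dmac[ETH_ALEN], int ifindex)
{
    struct fwd_entry *free_slot = NULL;
    for (size_t i = 0; i < MAP_SIZE; i++) {
        struct fwd_entry *e = &m->entries[i];
        if (e->valid && memcmp(e->dmac, dmac, ETH_ALEN) == 0) {
            e->egress_ifindex = ifindex; /* existing key: overwrite */
            return 0;
        }
        if (!e->valid && free_slot == NULL)
            free_slot = e;
    }
    if (free_slot == NULL)
        return -1; /* map full */
    memcpy(free_slot->dmac, dmac, ETH_ALEN);
    free_slot->egress_ifindex = ifindex;
    free_slot->valid = 1;
    return 0;
}

/* Datapath side: parse the Ethernet header and decide using only map data. */
static enum xdp_verdict xdp_fwd_model(const uint8_t *pkt, size_t len,
                                      const struct fwd_map *m, int *out_ifindex)
{
    if (len < ETH_HLEN)
        return XDP_DROP; /* truncated frame */
    for (size_t i = 0; i < MAP_SIZE; i++) {
        const struct fwd_entry *e = &m->entries[i];
        /* The destination MAC is the first 6 bytes of the Ethernet header. */
        if (e->valid && memcmp(e->dmac, pkt, ETH_ALEN) == 0) {
            *out_ifindex = e->egress_ifindex;
            return XDP_REDIRECT;
        }
    }
    /* In the pure controller model the stack is not used: a miss is dropped. */
    return XDP_DROP;
}
```

A miss is dropped rather than passed up, matching the model in which the packet never sees the Linux stack: anything the controller has not programmed does not forward.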

6. Is XDP with Maps Really Different than DPDK?
- Both bypass the Linux networking stack and its networking APIs
- Custom implementations of standard protocols
  - e.g., bridging and aging FDB entries
- Custom tools to manage the solution
  - Configuration, monitoring and debugging …
- Need better integration of XDP with Linux
  - More complete and consistent Linux solution

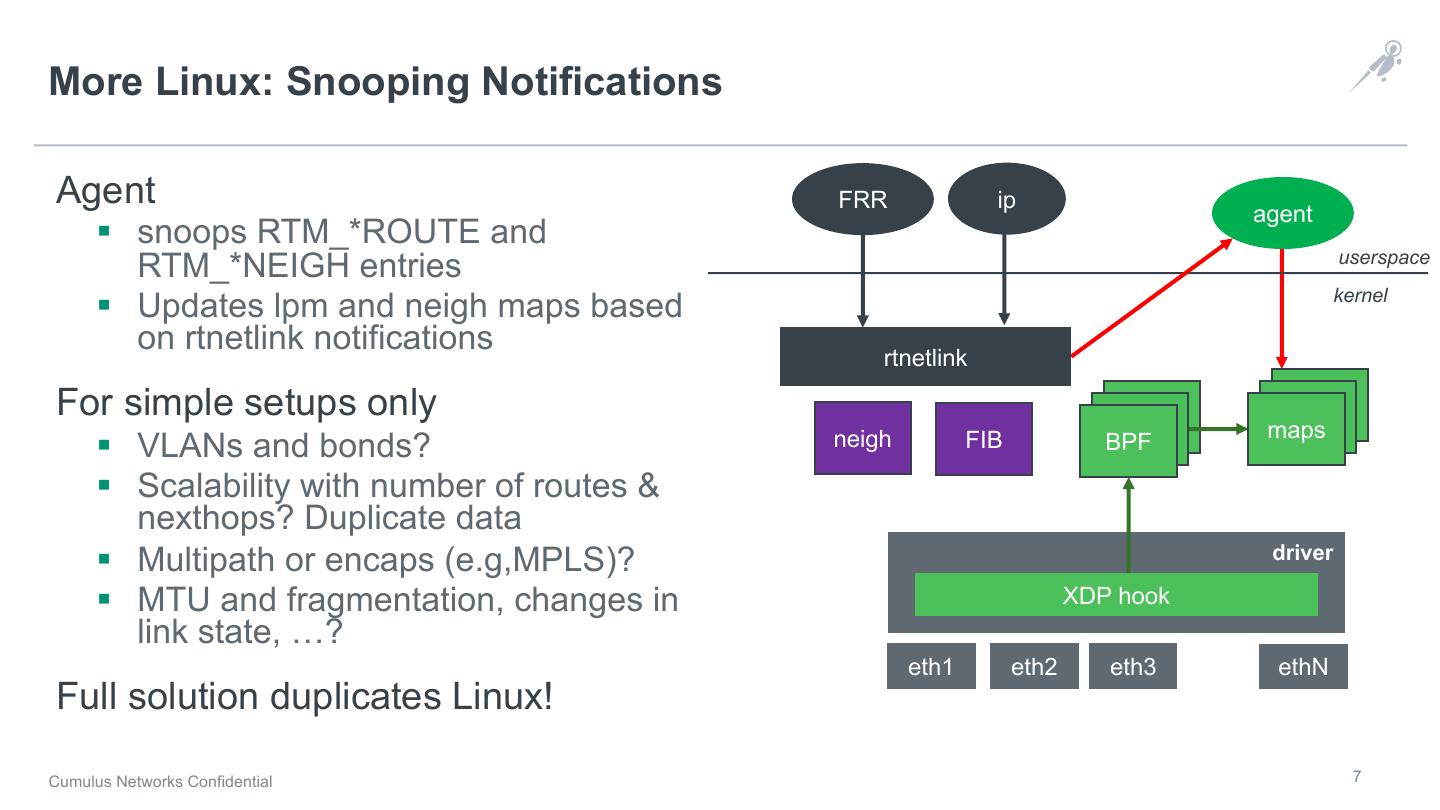

7. More Linux: Snooping Notifications
- Agent
  - Snoops RTM_*ROUTE and RTM_*NEIGH entries
  - Updates LPM and neigh maps based on rtnetlink notifications
- For simple setups only
  - VLANs and bonds?
  - Scalability with number of routes & nexthops?
  - Multipath or encaps (e.g., MPLS)?
  - MTU and fragmentation, changes in link state, …?
- Full solution duplicates Linux!

[Diagram: FRR, ip and the agent in userspace; rtnetlink; neigh and FIB maps (duplicate data) used by BPF at the XDP hook in the driver; ports eth1, eth2, eth3 … ethN]
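The snooping agent mirrors each route notification into an LPM map, so the same prefixes live in two places. The userspace C sketch below models that duplicated table and the longest-prefix-match lookup done on the BPF side. The key layout (a 32-bit prefix length followed by the address bytes in network order) follows the `BPF_MAP_TYPE_LPM_TRIE` key convention; `route_add`, `route_del` and `route_lookup` are hypothetical stand-ins for the agent's RTM_NEWROUTE and RTM_DELROUTE handling, and a linear scan replaces the kernel's trie.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Key in the style of BPF_MAP_TYPE_LPM_TRIE: prefix length, then address bytes. */
struct lpm_v4_key {
    uint32_t prefixlen;
    uint8_t addr[4]; /* network byte order */
};

/* Hypothetical value the agent stores for each route. */
struct route {
    struct lpm_v4_key key;
    uint32_t nexthop; /* host byte order, for readability */
    int oif;          /* egress ifindex */
    int valid;
};

#define MAX_ROUTES 32
static struct route table[MAX_ROUTES]; /* the duplicated copy of the FIB */

/* True if the first prefixlen bits of a and b are equal. */
static int prefix_match(const uint8_t *a, const uint8_t *b, uint32_t prefixlen)
{
    uint32_t full = prefixlen / 8, rem = prefixlen % 8;
    if (memcmp(a, b, full) != 0)
        return 0;
    if (rem == 0)
        return 1;
    uint8_t mask = (uint8_t)(0xFF << (8 - rem));
    return (a[full] & mask) == (b[full] & mask);
}

/* Agent side, on a hypothetical RTM_NEWROUTE: add or replace a route. */
static int route_add(const uint8_t addr[4], uint32_t prefixlen, uint32_t nexthop, int oif)
{
    struct route *slot = NULL;
    if (prefixlen > 32)
        return -1;
    for (int i = 0; i < MAX_ROUTES; i++) {
        struct route *r = &table[i];
        if (r->valid && r->key.prefixlen == prefixlen &&
            memcmp(r->key.addr, addr, 4) == 0) {
            slot = r; /* same prefix already present: replace it */
            break;
        }
        if (!r->valid && slot == NULL)
            slot = r; /* remember the first free slot */
    }
    if (slot == NULL)
        return -1; /* table full */
    slot->key.prefixlen = prefixlen;
    memcpy(slot->key.addr, addr, 4);
    slot->nexthop = nexthop;
    slot->oif = oif;
    slot->valid = 1;
    return 0;
}

/* Agent side, on a hypothetical RTM_DELROUTE: remove the matching prefix. */
static void route_del(const uint8_t addr[4], uint32_t prefixlen)
{
    for (int i = 0; i < MAX_ROUTES; i++)
        if (table[i].valid && table[i].key.prefixlen == prefixlen &&
            memcmp(table[i].key.addr, addr, 4) == 0)
            table[i].valid = 0;
}

/* BPF side: longest prefix match over the duplicated table. */
static const struct route *route_lookup(const uint8_t daddr[4])
{
    const struct route *best = NULL;
    for (int i = 0; i < MAX_ROUTES; i++) {
        const struct route *r = &table[i];
        if (r->valid && prefix_match(r->key.addr, daddr, r->key.prefixlen) &&
            (best == NULL || r->key.prefixlen > best->key.prefixlen))
            best = r;
    }
    return best;
}
```

Each open question on the slide (VLANs, bonds, multipath, encaps, MTU, link state) would need another table like this one, kept in sync by the same agent.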

8. Linux and Hardware Offload
- Standard Linux APIs configure networking
- Kernel modified so that the ASIC driver gets the necessary information
- ASIC driver handles notifications and programs hardware

[Diagram: FRR, ip, tc and ifupdown2 in userspace; rtnetlink into kernel tables (ACLs, FDB, neigh, FIB, upper dev); ASIC driver programming ports swp1, swp2, swp3 … swpN on the ASIC]

9. XDP as a Software “Offload”
- Consider XDP as an offload rather than a new framework
  - Do not want to reinvent implementations of networking features
- Allow BPF programs to access kernel tables
- Consistency all the way around
  - Existing protocols, APIs and processes to configure networking
  - Slow path and fast path driven by same data

[Diagram: FRR, ip, tc and ifupdown2 in userspace; rtnetlink into kernel tables (ACLs, FDB, neigh, FIB, upper dev) and maps, accessed by BPF at the XDP hook in the driver; ports eth1, eth2, eth3, eth4 … ethN]

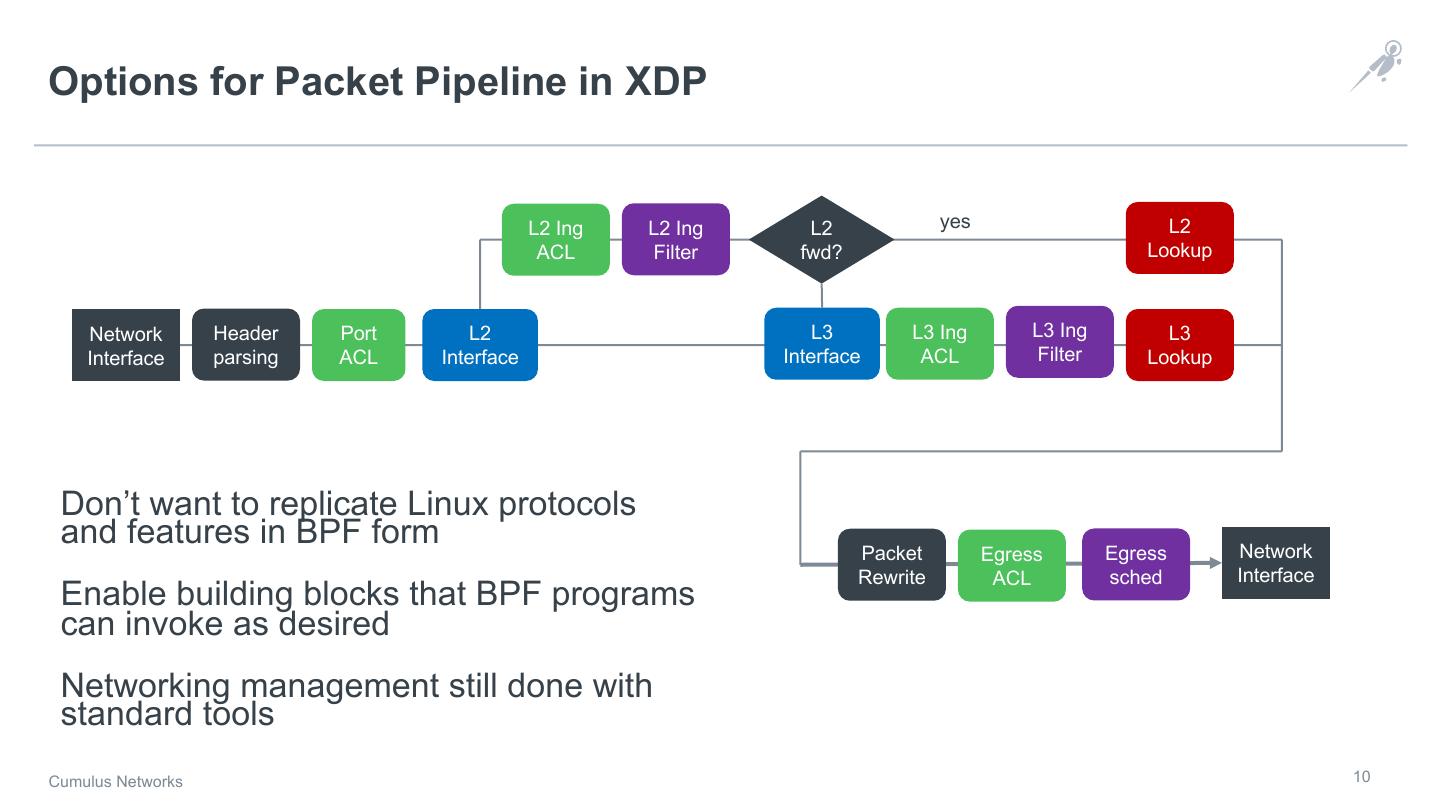

10. Options for Packet Pipeline in XDP
- Don’t want to replicate Linux protocols and features in BPF form
- Enable building blocks that BPF programs can invoke as desired
- Networking management still done with standard tools

[Diagram: candidate pipeline stages: port, header parsing, network interface, L2 interface, L2 ingress ACL and filter, L2 fwd? (yes: L2 lookup), L3 interface, L3 ingress ACL and filter, L3 lookup, packet rewrite, egress ACL, egress scheduling, network interface]
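The building-block idea can be sketched as a pipeline in which each stage is an independent function that either hands the packet on or returns a final verdict, so a program composes only the stages it needs. The userspace C model below illustrates that composition; `struct pkt_ctx`, the stage functions, their toy rules, and the verdict values are all hypothetical and do not correspond to existing BPF helpers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical verdicts: V_CONTINUE means "run the next stage". */
enum verdict { V_CONTINUE, V_DROP, V_PASS_TO_STACK, V_REDIRECT };

/* Hypothetical per-packet context shared by the stages. */
struct pkt_ctx {
    int in_ifindex;  /* port the frame arrived on */
    uint16_t vlan;   /* 0 if untagged */
    int l3_ifindex;  /* resolved L3 interface */
    int out_ifindex; /* egress port chosen by the lookup */
    uint8_t ttl;
};

typedef enum verdict (*stage_fn)(struct pkt_ctx *);

/* Stage: resolve the L3 interface from {port, vlan} (toy rule). */
static enum verdict stage_l3_interface(struct pkt_ctx *c)
{
    c->l3_ifindex = c->vlan ? 100 + c->vlan : c->in_ifindex;
    return V_CONTINUE;
}

/* Stage: ingress ACL that drops VLAN 666 (toy rule). */
static enum verdict stage_ingress_acl(struct pkt_ctx *c)
{
    return c->vlan == 666 ? V_DROP : V_CONTINUE;
}

/* Stage: L3 lookup; an expiring TTL is left to the full Linux stack. */
static enum verdict stage_l3_lookup(struct pkt_ctx *c)
{
    if (c->ttl <= 1)
        return V_PASS_TO_STACK;
    c->out_ifindex = 7; /* toy FIB result */
    return V_CONTINUE;
}

/* Stage: header rewrite, then redirect to the egress port. */
static enum verdict stage_rewrite(struct pkt_ctx *c)
{
    c->ttl--;
    return V_REDIRECT;
}

/* Run stages in order until one returns a final verdict. */
static enum verdict run_pipeline(const stage_fn *stages, size_t n, struct pkt_ctx *c)
{
    for (size_t i = 0; i < n; i++) {
        enum verdict v = stages[i](c);
        if (v != V_CONTINUE)
            return v;
    }
    return V_PASS_TO_STACK; /* no stage decided: let Linux handle it */
}
```

Falling back to the stack when no stage decides keeps Linux as the slow path, so the XDP program only needs the stages for the traffic it accelerates.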

11. Key Elements for Packet Forwarding in XDP
- Support for essential networking features
  - vlans, bonds, bridges, macvlan
- ACL and filters - ingress and egress
  - Several attach points
- Forwarding lookup
  - FDB Lookup - L2 forwarding
  - FIB Lookup - L3 forwarding
- Packet Scheduling
  - Crossing bandwidths, traffic shaping and priority

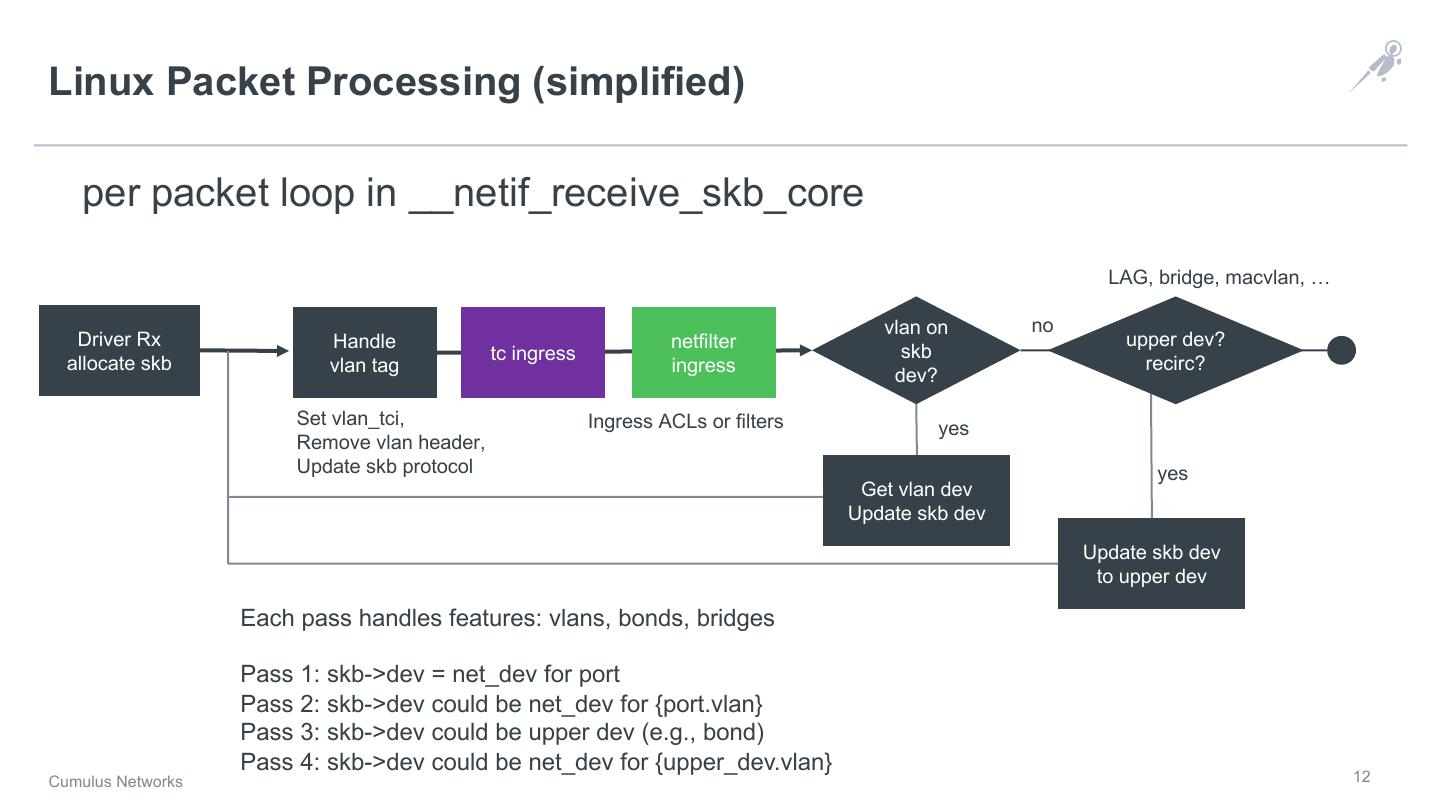

12. Linux Packet Processing (simplified)
- Each pass handles features: vlans, bonds, bridges
  - Pass 1: skb->dev = net_dev for port
  - Pass 2: skb->dev could be net_dev for {port.vlan}
  - Pass 3: skb->dev could be upper dev (e.g., bond)
  - Pass 4: skb->dev could be net_dev for {upper_dev.vlan}

[Diagram: driver Rx allocates the skb, then a per-packet loop in __netif_receive_skb_core: if the frame has a VLAN tag, set vlan_tci, remove the VLAN header and update skb protocol; if a VLAN device exists on the dev, get it and update skb dev; tc ingress and netfilter ingress apply ingress ACLs or filters; if the dev has an upper device (LAG, bridge, macvlan, …), update skb dev to the upper dev and recirculate]
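The per-pass device walk can be modeled in a few lines. The userspace C sketch below repeats the loop described above: if the packet still carries a VLAN tag and the current device has a matching VLAN subinterface, move to it and consume the tag; otherwise move to the upper device; stop when neither applies. The device table, `struct dev` and `resolve_rx_dev` are hypothetical; the real logic lives in `__netif_receive_skb_core` and the VLAN and upper-device handlers it invokes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_VLAN_CHILDREN 4
#define MAX_PASSES 16 /* guard against a misconfigured cycle */

/* Hypothetical device-table entry. */
struct dev {
    const char *name;
    struct dev *upper;                       /* bond/bridge/macvlan master, or NULL */
    struct dev *vlan_dev[MAX_VLAN_CHILDREN]; /* VLAN subinterfaces on this device */
    uint16_t vlan_id[MAX_VLAN_CHILDREN];
};

/* Find the VLAN subinterface for vid on d, or NULL. */
static struct dev *find_vlan_dev(const struct dev *d, uint16_t vid)
{
    for (size_t i = 0; i < MAX_VLAN_CHILDREN; i++)
        if (d->vlan_dev[i] && d->vlan_id[i] == vid)
            return d->vlan_dev[i];
    return NULL;
}

/* Walk from the ingress port to the device that finally owns the packet.
 * vlan_present/vid model a tag already moved into skb metadata (vlan_tci).
 * Returns the final device and stores the number of passes in *passes. */
static const struct dev *resolve_rx_dev(struct dev *port, int vlan_present,
                                        uint16_t vid, int *passes)
{
    struct dev *d = port; /* pass 1: skb->dev is the ingress port */
    int n = 1;
    while (n < MAX_PASSES) {
        struct dev *v = vlan_present ? find_vlan_dev(d, vid) : NULL;
        if (v) {
            d = v;            /* VLAN device on this dev: consume the tag */
            vlan_present = 0;
        } else if (d->upper) {
            d = d->upper;     /* enslaved: hop to the upper device */
        } else {
            break;            /* nothing left to unwrap */
        }
        n++;                  /* another round of the loop */
    }
    *passes = n;
    return d;
}
```

For a port enslaved to bond0 with a bond0.10 subinterface, a frame tagged with VLAN 10 takes three passes (port, bond0, bond0.10): exactly the kind of feature-by-feature walk an XDP-side device lookup would have to reproduce.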

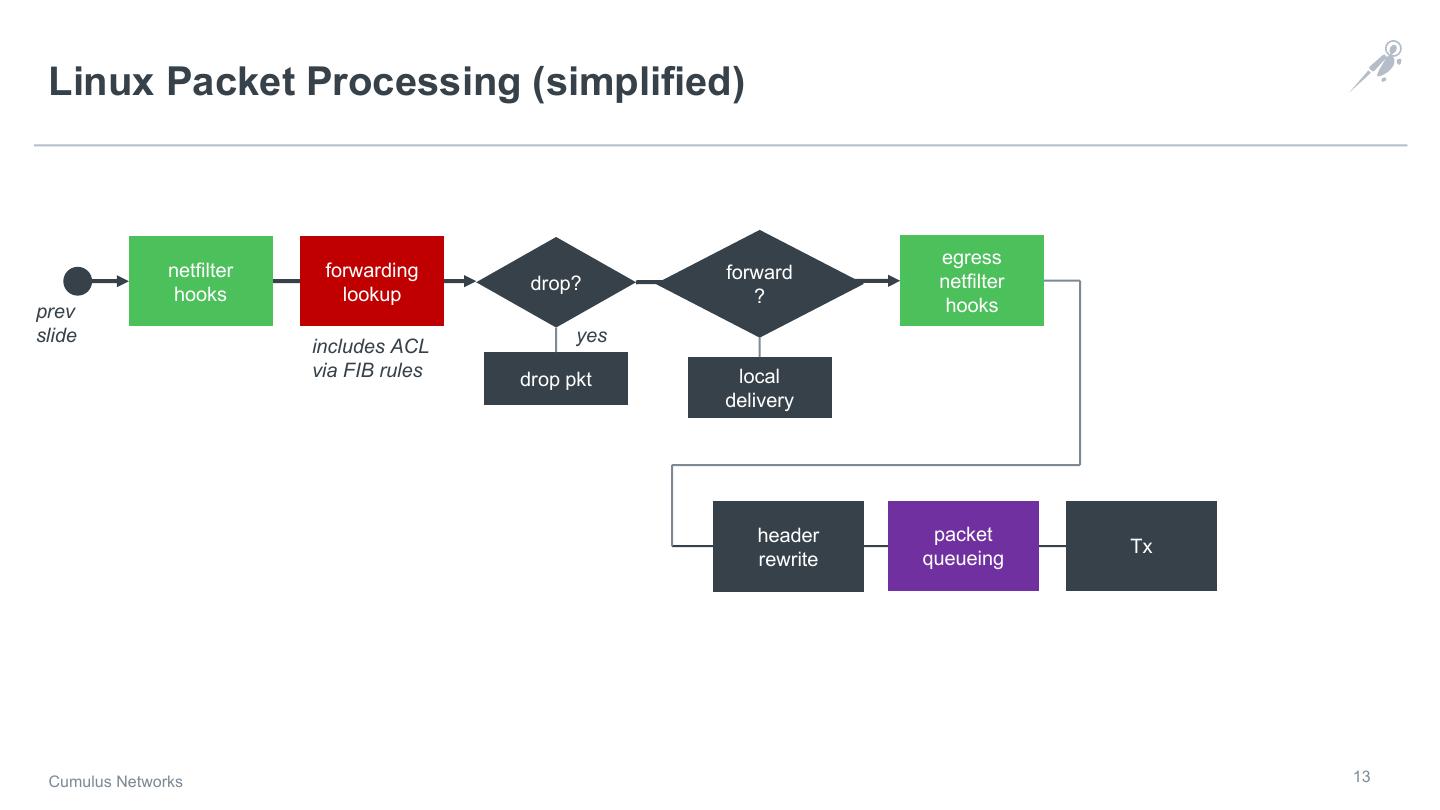

13. Linux Packet Processing (simplified)

[Diagram, continued from the previous slide: netfilter hooks (drop? yes: drop pkt); forwarding lookup (includes ACL via FIB rules); forward? no: local delivery; yes: header rewrite, egress netfilter hooks, packet queueing, Tx]

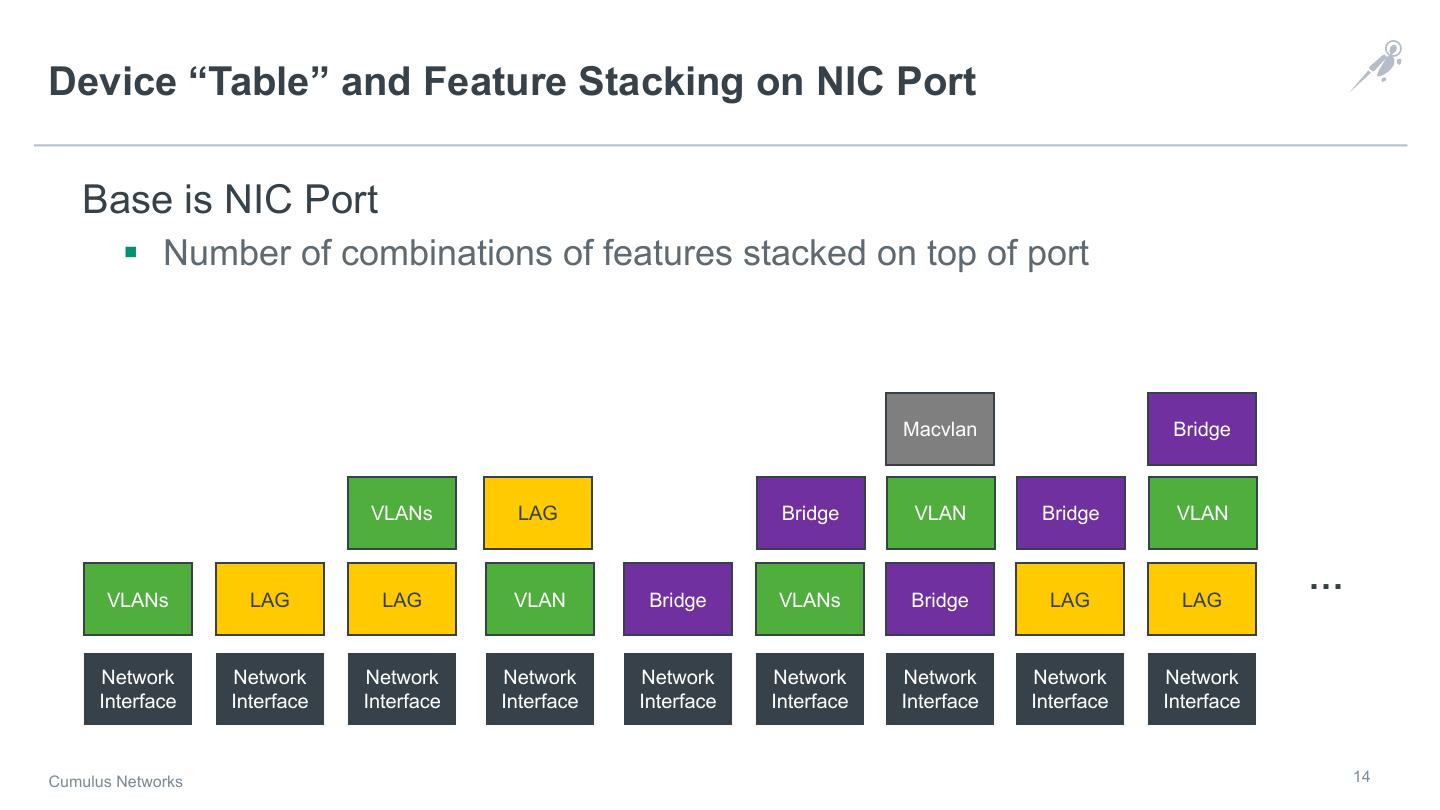

14. Device “Table” and Feature Stacking on NIC Port
- Base is NIC port
  - Number of combinations of features stacked on top of port

[Diagram: nine example stacks, each rooted on a network interface and combining macvlan, bridge, VLAN and LAG devices in different orders]

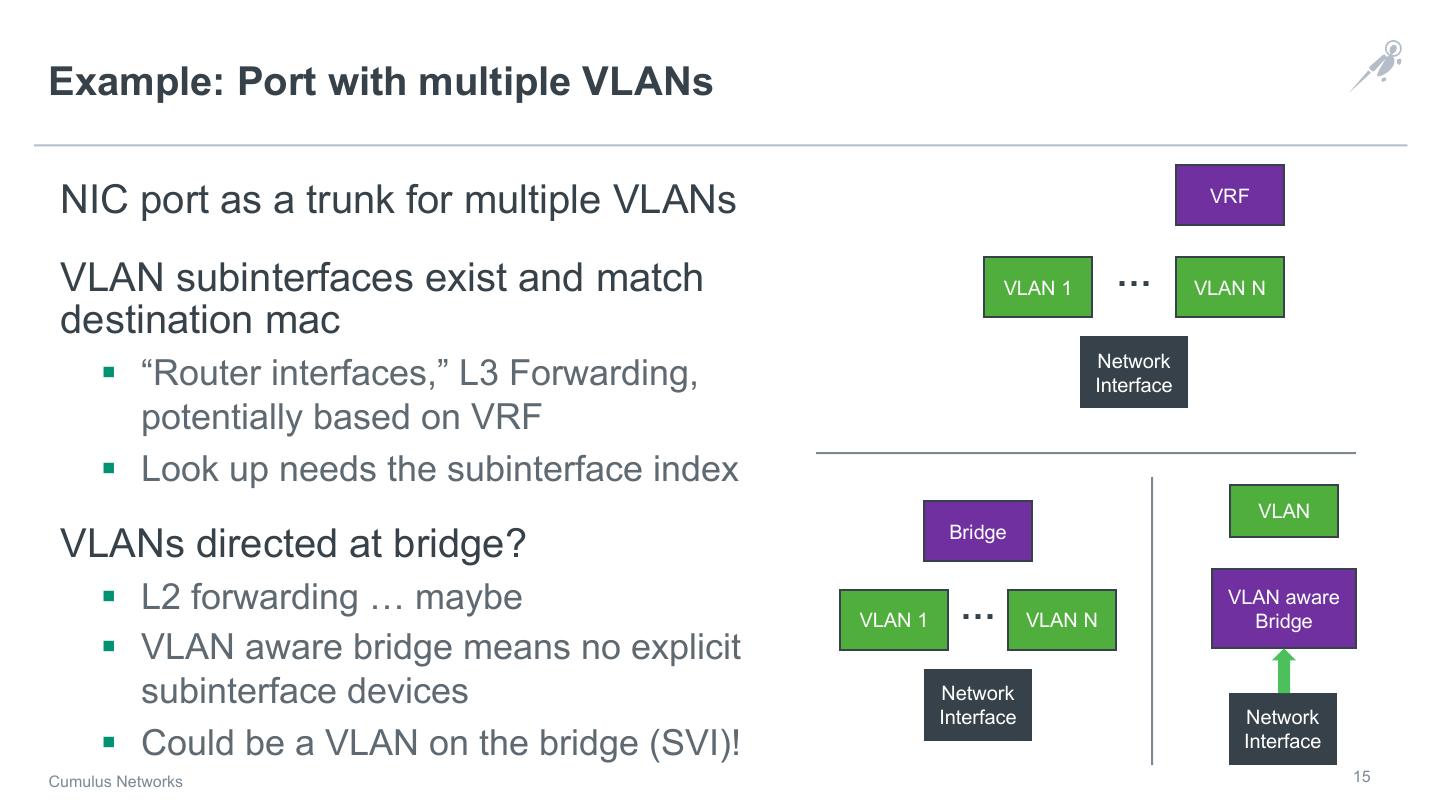

15. Example: Port with multiple VLANs
- NIC port as a trunk for multiple VLANs
- VLAN subinterfaces exist and match destination MAC
  - “Router interfaces,” L3 forwarding, potentially based on VRF
  - Lookup needs the subinterface index
- VLANs directed at bridge?
  - L2 forwarding … maybe …
  - VLAN aware bridge means no explicit subinterface devices
  - Could be a VLAN on the bridge (SVI)!

[Diagram: a network interface with VLAN 1 … VLAN N subinterfaces in a VRF; a network interface enslaved to a VLAN-aware bridge carrying VLAN 1 … VLAN N]

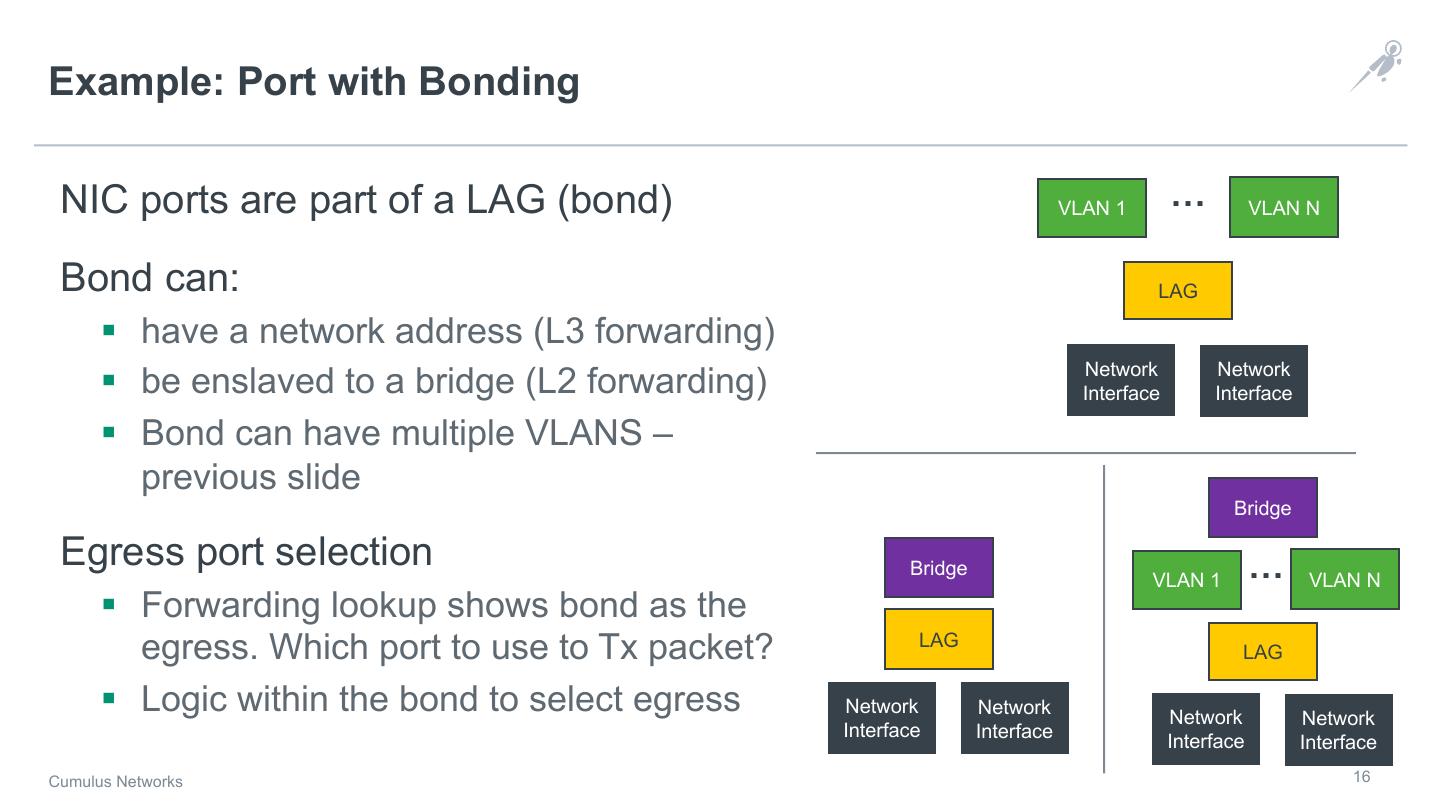

16. Example: Port with Bonding
- NIC ports are part of a LAG (bond)
- Bond can:
  - have a network address (L3 forwarding)
  - be enslaved to a bridge (L2 forwarding)
  - have multiple VLANs (previous slide)
- Egress port selection
  - Forwarding lookup shows bond as the egress. Which port to use to Tx packet?
  - Logic within the bond to select egress

[Diagram: network interfaces enslaved to LAGs; one LAG with VLAN 1 … VLAN N subinterfaces; one LAG enslaved to a bridge with VLAN 1 … VLAN N]
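When the forwarding lookup returns the bond, something must still choose a member port. The userspace C sketch below models one simple policy: hash a few header fields and take the result modulo the number of active slaves, so a given flow always leaves on the same port. It is loosely modeled on the bonding driver's layer2 transmit hash policy (XOR of MAC address bytes and the packet type), but `struct bond`, `l2_hash` and `bond_select_egress` are hypothetical and the exact fields hashed are an illustrative assumption.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_SLAVES 8

/* Hypothetical view of a bond: member ifindexes and their link state. */
struct bond {
    int slave_ifindex[MAX_SLAVES];
    int slave_up[MAX_SLAVES];
    int n_slaves;
};

/* Layer2-style hash (illustrative): last MAC bytes XORed with the EtherType. */
static uint32_t l2_hash(const uint8_t smac[6], const uint8_t dmac[6], uint16_t ethertype)
{
    return (uint32_t)(smac[5] ^ dmac[5]) ^ ethertype;
}

/* Pick the egress port for a frame whose lookup resolved to the bond.
 * Only active slaves are eligible; returns -1 if none is up. */
static int bond_select_egress(const struct bond *b, const uint8_t smac[6],
                              const uint8_t dmac[6], uint16_t ethertype)
{
    int active[MAX_SLAVES];
    int n_active = 0;
    for (int i = 0; i < b->n_slaves; i++)
        if (b->slave_up[i])
            active[n_active++] = b->slave_ifindex[i];
    if (n_active == 0)
        return -1;
    return active[l2_hash(smac, dmac, ethertype) % (uint32_t)n_active];
}
```

Because the choice depends on link state and on the bond's configured policy, the slide's point stands: this logic already exists inside the bond, and an XDP program should be able to ask for it rather than reimplement it.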

17. Device Stacking on NIC Port
- Networking features typically implemented in modules and instantiated as virtual net_devices
  - VLANs, LAG, bridging, VRF
  - Manages upper/lower relationships
  - Could be manipulating the packet (adding/removing vlan header) or dropping it (ingress on inactive slave)
  - Could be implementing a protocol (bond and 802.3ad; bridge and stp)
  - Selecting an egress device (bond, team)
  - Influencing a lookup (bridge, vrf) or learning (bridge)
- Do not want to have to reimplement networking features for XDP
- Allow standard Linux interface managers to configure/manage networking features
  - VLANs, bonds, macvlans, vlan-aware bridge, SVIs

18. Access to Device Table
- Need bpf helpers to convert {port index, vlan, dmac} to:
  - LAG id
  - L2 device index – device enslaved to a bridge; L2 forwarding lookup
  - L3 / RIF device index – input to L3 forwarding lookup
  - Other intermediary devices?
- Features as a device is a Linux’ism
- Device (or index) is key to other features
  - ACL, filtering, scheduling, forwarding
- Egress port index
  - L2/L3 forwarding returns upper device
  - Need egress port index for redirect
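The conversion asked of the proposed helpers can be pictured as a table lookup keyed by {port index, vlan, dmac}. The userspace C sketch below models such a table and its result (LAG id, L2 device index, L3/RIF device index). All names (`dev_key`, `dev_result`, `port_vlan_entry`, `dev_table_lookup`) and the rule that a frame not addressed to the router MAC stays at L2 are hypothetical simplifications, not an existing kernel interface.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical lookup key: what the XDP program knows from the frame. */
struct dev_key {
    int port_ifindex;
    uint16_t vlan; /* 0 = untagged */
    uint8_t dmac[6];
};

/* Hypothetical result: the devices other features are keyed on. */
struct dev_result {
    int lag_id;     /* 0 if the port is not in a LAG */
    int l2_ifindex; /* bridge-port device, 0 if not bridged */
    int l3_ifindex; /* router interface (RIF), 0 if the frame is not routed */
};

/* Hypothetical per-{port, vlan} state derived from the kernel's devices. */
struct port_vlan_entry {
    int port_ifindex;
    uint16_t vlan;
    int lag_id;
    int l2_ifindex;
    int l3_ifindex;
    uint8_t router_mac[6]; /* MAC address of the L3 interface */
};

/* Resolve {port, vlan, dmac}. Frames addressed to the router MAC get the
 * L3 device; others get no L3 device. Returns -1 if no entry matches. */
static int dev_table_lookup(const struct port_vlan_entry *tbl, size_t n,
                            const struct dev_key *k, struct dev_result *res)
{
    for (size_t i = 0; i < n; i++) {
        const struct port_vlan_entry *e = &tbl[i];
        if (e->port_ifindex != k->port_ifindex || e->vlan != k->vlan)
            continue;
        res->lag_id = e->lag_id;
        res->l2_ifindex = e->l2_ifindex;
        res->l3_ifindex = memcmp(e->router_mac, k->dmac, 6) == 0 ? e->l3_ifindex : 0;
        return 0;
    }
    return -1;
}
```

A precomputed table like this is fast but must be kept in sync with every device change; walking the real devices on each packet avoids that copy at the cost of the refactoring listed under Challenges.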

19. Prototype
- One option is to use existing upper/lower list_heads
  - Reduces code refactoring and APIs
  - Does not scale (e.g., number of VLANs)
- Better solution is to mimic logic of __netif_receive_skb_core
  - Heavy refactoring, exporting APIs or creating new ones to move from one device type (feature) to another
- Ingress port
  - If vlan tag, search for vlan device on port
  - Is net_device a LAG, bridge or macvlan slave?
- Tested with VLANs and bonds – ingress and egress

20. Challenges
- Core kernel code vs modules
  - BPF helpers in filter.c – compiled into kernel
  - Driver functionality often loaded as modules
  - Common problem: vlan, bond, bridge, mpls, …
- Code refactoring needed to not duplicate code
  - Functions need to be invoked from 2 different contexts – XDP and skb
- Number of use cases to cover to converge device lookup API

21. ACL, Filters and Packet Scheduling
- ACL
  - FIB rules supported via FIB lookup helper – L3 ACL
  - tc vs netfilter
- Packet Scheduling
  - tc and qdiscs
- Start point
  - Converting tc code to handle frames from XDP context is the bigger ROI
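Because the FIB lookup helper can evaluate FIB rules (unless the caller requests a direct table lookup with the `BPF_FIB_LOOKUP_DIRECT` flag), policy-routing rules already act as an L3 ACL for XDP. The userspace C sketch below models rule evaluation: rules are checked in priority order, and the first match either selects a table or rejects the packet. The rule fields and action set are a simplified, hypothetical subset of what `ip rule` supports, and unlike the kernel, the model stops at the first matching lookup rule instead of falling through when the selected table has no route.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum rule_action { RULE_LOOKUP_TABLE, RULE_PROHIBIT, RULE_BLACKHOLE };

/* Simplified, hypothetical FIB rule: match on source prefix and input interface. */
struct fib_rule {
    uint32_t priority; /* lower value is evaluated first */
    uint32_t src;      /* source prefix, host byte order */
    uint32_t src_len;  /* prefix length, 0 = any source */
    int iif;           /* input ifindex, 0 = any */
    enum rule_action action;
    uint32_t table;    /* table id for RULE_LOOKUP_TABLE */
};

/* rules must be sorted by ascending priority. Returns the table id to
 * look up, or 0 if a rule rejects the packet or nothing matches. */
static uint32_t fib_rules_eval(const struct fib_rule *rules, size_t n,
                               uint32_t saddr, int iif)
{
    for (size_t i = 0; i < n; i++) {
        const struct fib_rule *r = &rules[i];
        uint32_t mask = r->src_len ? ~0u << (32 - r->src_len) : 0;
        if ((saddr & mask) != (r->src & mask))
            continue; /* source not in this rule's prefix */
        if (r->iif && r->iif != iif)
            continue; /* rule bound to another input interface */
        if (r->action != RULE_LOOKUP_TABLE)
            return 0; /* prohibit/blackhole: the rule acts as a drop ACL */
        return r->table;
    }
    return 0;
}
```

In the usage below, priority 32766 with table 254 mirrors the default rule that points at the main table; the other two rules are illustrative.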

22. L2 / L3 Forwarding
- FIB lookup helper exists for L3 forwarding
  - Needs more work – e.g., handle lwtunnel encaps
  - MPLS support (started)
- Bridges and L2 forwarding
  - FDB access per bridge
  - MAC learning
  - Flooding
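Bridged L2 forwarding needs three behaviors the FIB helper does not provide: per-bridge FDB access, MAC learning, and flooding on a miss. The userspace C sketch below models them for a single bridge, including aging of stale entries. The table size, the `ageing_time` field, the `FLOOD` sentinel and all function names are illustrative assumptions; the Linux bridge additionally handles VLANs, static entries and STP state.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define FDB_SIZE 64
#define FLOOD (-1) /* sentinel: deliver to all ports except the ingress port */

struct fdb_entry {
    uint8_t mac[6];
    int port;           /* bridge port ifindex */
    uint64_t last_seen; /* time of last learn, in seconds */
    int valid;
};

/* Hypothetical per-bridge FDB. */
struct bridge_fdb {
    struct fdb_entry e[FDB_SIZE];
    uint64_t ageing_time; /* seconds an unrefreshed entry stays usable */
};

static struct fdb_entry *fdb_find(struct bridge_fdb *b, const uint8_t mac[6])
{
    for (int i = 0; i < FDB_SIZE; i++)
        if (b->e[i].valid && memcmp(b->e[i].mac, mac, 6) == 0)
            return &b->e[i];
    return NULL;
}

/* Learning: remember which port a unicast source MAC was seen on. */
static void fdb_learn(struct bridge_fdb *b, const uint8_t smac[6], int port, uint64_t now)
{
    if (smac[0] & 1)
        return; /* never learn group (multicast/broadcast) addresses */
    struct fdb_entry *e = fdb_find(b, smac);
    if (!e) {
        for (int i = 0; i < FDB_SIZE && !e; i++)
            if (!b->e[i].valid)
                e = &b->e[i];
        if (!e)
            return; /* table full: skip learning */
        memcpy(e->mac, smac, 6);
        e->valid = 1;
    }
    e->port = port; /* also covers a station moving to another port */
    e->last_seen = now;
}

/* Forwarding: egress port for dmac, or FLOOD on group address, miss or aged entry. */
static int fdb_forward(struct bridge_fdb *b, const uint8_t dmac[6], uint64_t now)
{
    if (dmac[0] & 1)
        return FLOOD;
    struct fdb_entry *e = fdb_find(b, dmac);
    if (!e)
        return FLOOD;
    if (now - e->last_seen > b->ageing_time) {
        e->valid = 0; /* aged out */
        return FLOOD;
    }
    return e->port;
}
```

Aging is the kind of cleanup the summary credits to established Linux implementations; reading the bridge's own FDB from XDP would inherit it instead of re-creating it in a map.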

23. Summary
- Need better integration of XDP with Linux
  - More complete and consistent Linux solution
- Do not need BPF programs reinventing / re-implementing Linux
  - Established APIs for configuring, monitoring, troubleshooting
  - Established implementations of protocols with notifications and cleanups (e.g., aging entries)
- Enables Linux to be a slow-path assist
  - Same data for full-stack and fast-path in XDP

24. Thank you!
Visit us at cumulusnetworks.com or follow us @cumulusnetworks

© 2018 Cumulus Networks. Cumulus Networks, the Cumulus Networks Logo, and Cumulus Linux are trademarks or registered trademarks of Cumulus Networks, Inc. or its affiliates in the U.S. and other countries. Other names may be trademarks of their respective owners. The registered trademark Linux® is used pursuant to a sublicense from LMI, the exclusive licensee of Linus Torvalds, owner of the mark on a world-wide basis.