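Scaling bridge FDB database

Linux bridge is deployed on hosts, hypervisors, and container operating systems, and in recent years also on data center switches. Its feature set includes forwarding, learning, proxy, and snooping functions. It can bridge layer-2 domains between VMs, containers, racks, and pods, and between data centers, as seen with Ethernet Virtual Private Networks [1,2]. As Linux bridge deployments move up the rack, the bridge now spans larger layer-2 domains, which brings scale challenges. With hardware acceleration support, the bridge forwarding database can scale to thousands of entries on a data center switch.

This paper discusses the performance and operational challenges of a large-scale bridge FDB database and solutions to address them. We discuss solutions such as FDB dst port failover for faster convergence, a faster API for FDB updates from the control plane, and reducing the number of FDB dst ports with lightweight tunnel endpoints when bridging over tunneling solutions such as VXLAN.

Most of the solutions are generic and apply to all bridge use cases, but we discuss them around the following deployment scenarios:

- Multi-chassis link aggregation scenarios, where the Linux bridge is part of an active-active switch redundancy solution

- Ethernet VPN solutions, where the Linux bridge forwarding database is extended to reach layer-2 domains over a network overlay such as VXLAN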


1. Scaling bridge forwarding database

Roopa Prabhu, Nikolay Aleksandrov

2. Agenda

- Linux bridge forwarding database (FDB): quick overview
- Linux bridge deployments at scale: focus on multihoming
- Scaling bridge database: challenges and solutions

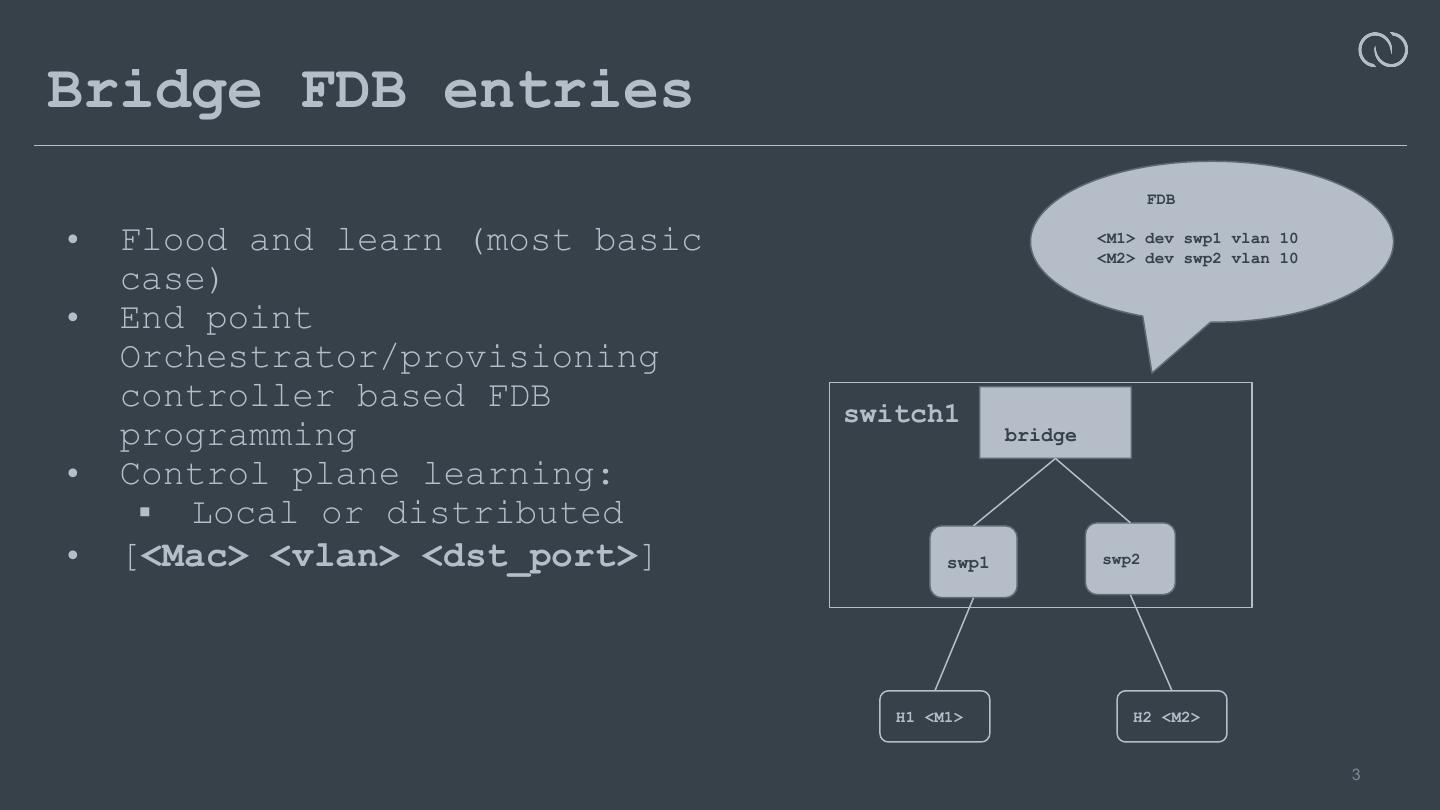

3. Bridge FDB entries

- Flood and learn (most basic case)
- End-point orchestrator/provisioning-controller based FDB programming
- Control plane learning:
  - Local or distributed
- Entry format: [<mac> <vlan> <dst_port>]

Diagram: a bridge on switch1 with ports swp1 and swp2; host H1 (<M1>) and host H2 (<M2>) are attached. FDB shown:

- <M1> dev swp1 vlan 10
- <M2> dev swp2 vlan 10
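The flood-and-learn case can be illustrated with a small model. This is a minimal sketch, not the kernel implementation: the `LearningBridge` class, its `receive` method, and the extra port `swp3` are hypothetical names introduced here, and the FDB is keyed the way the slide writes an entry, `[<mac> <vlan> <dst_port>]`.

```python
from dataclasses import dataclass, field


@dataclass
class LearningBridge:
    """Toy model of a VLAN-aware learning bridge (illustrative only)."""
    ports: set
    # FDB maps (mac, vlan) -> destination port, mirroring [<mac> <vlan> <dst_port>].
    fdb: dict = field(default_factory=dict)

    def receive(self, in_port, src_mac, dst_mac, vlan):
        """Learn the source mac, then return the list of egress ports."""
        # Learning: remember which port the source mac was seen on.
        self.fdb[(src_mac, vlan)] = in_port
        out_port = self.fdb.get((dst_mac, vlan))
        if out_port is None:
            # Unknown unicast: flood to every port except the ingress port.
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            # Destination sits behind the ingress port: nothing to forward.
            return []
        return [out_port]


# swp3 is a hypothetical third port, added so that flooding is visible.
bridge = LearningBridge(ports={"swp1", "swp2", "swp3"})
first = bridge.receive("swp1", "M1", "M2", vlan=10)   # M2 unknown: flooded
reply = bridge.receive("swp2", "M2", "M1", vlan=10)   # M1 already learned
second = bridge.receive("swp1", "M1", "M2", vlan=10)  # M2 now learned
print(first, reply, second)  # ['swp2', 'swp3'] ['swp1'] ['swp2']
```

After the two frames have crossed the bridge, the FDB holds the same two entries as the slide, and flooding stops for that pair of hosts.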

4. Bridge FDB entries: network virtualization (overlay, e.g. vxlan)

- Overlay macs point to overlay termination end-points
- E.g. vxlan tunnel termination endpoints (VTEPs)
  - Vxlan FDB extends bridge FDB
  - Vxlan FDB carries remote dst info
  - [<mac> <vni> <remote_dst list>]
    - Where remote_dst list = remote overlay endpoint IPs
    - Pkt is replicated to list of remote_dsts
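The `[<mac> <vni> <remote_dst list>]` entry can be sketched as a map from (mac, vni) to a list of remote endpoint IPs. This is a simplified model under stated assumptions: `add_remote` and `encap_targets` are hypothetical helper names, and `27.0.0.9` is a made-up second endpoint used only to show replication.

```python
from collections import defaultdict

# Vxlan FDB: (mac, vni) -> list of remote VTEP IPs.
vxlan_fdb = defaultdict(list)


def add_remote(mac, vni, remote_ip):
    """Append a remote dst to the entry, ignoring duplicates."""
    dsts = vxlan_fdb[(mac, vni)]
    if remote_ip not in dsts:
        dsts.append(remote_ip)


def encap_targets(mac, vni):
    """Return the VTEP IPs a frame for (mac, vni) is replicated to."""
    # .get() avoids creating an empty entry for an unknown mac.
    return list(vxlan_fdb.get((mac, vni), []))


add_remote("M3", 10, "27.0.0.8")
add_remote("M3", 10, "27.0.0.9")  # hypothetical second endpoint
add_remote("M3", 10, "27.0.0.8")  # duplicate, ignored

targets = encap_targets("M3", 10)
unknown = encap_targets("M9", 10)
print(targets, unknown)  # ['27.0.0.8', '27.0.0.9'] []
```

One encapsulated copy of the packet would be sent per IP in the returned list, which is why long remote dst lists matter at scale.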

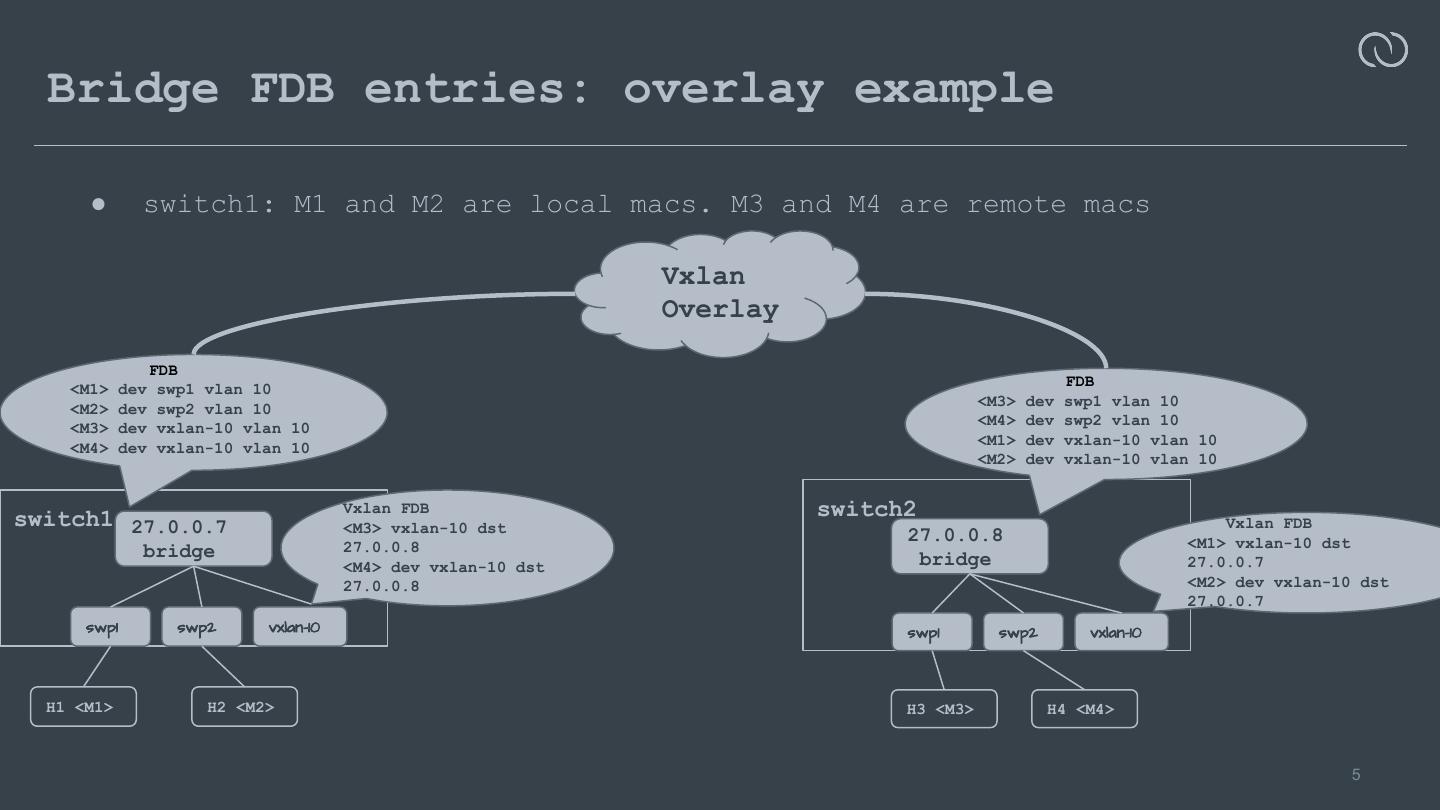

5. Bridge FDB entries: overlay example

- switch1: M1 and M2 are local macs. M3 and M4 are remote macs.

Diagram: switch1 (27.0.0.7) and switch2 (27.0.0.8) are connected through the vxlan overlay. Each switch has a bridge with ports swp1, swp2 and vxlan-10. Hosts H1 <M1> and H2 <M2> attach to switch1; hosts H3 <M3> and H4 <M4> attach to switch2.

switch1 FDB:

- <M1> dev swp1 vlan 10
- <M2> dev swp2 vlan 10
- <M3> dev vxlan-10 vlan 10
- <M4> dev vxlan-10 vlan 10

switch1 Vxlan FDB:

- <M3> dev vxlan-10 dst 27.0.0.8
- <M4> dev vxlan-10 dst 27.0.0.8

switch2 FDB:

- <M3> dev swp1 vlan 10
- <M4> dev swp2 vlan 10
- <M1> dev vxlan-10 vlan 10
- <M2> dev vxlan-10 vlan 10

switch2 Vxlan FDB:

- <M1> dev vxlan-10 dst 27.0.0.7
- <M2> dev vxlan-10 dst 27.0.0.7

6. Bridge FDB database scale

7. Bridging scale on a data center switch

- Layer-2 gateway
- Bridging accelerated by hardware
  - HW support for more than 100k entries
  - Learning in hardware at line rate
  - Flooding in hardware and software
- IGMP snooping + optimized multicast forwarding
- Bridging larger L2 domains with overlays (e.g. vxlan)
- Multihoming: bridging with distributed state

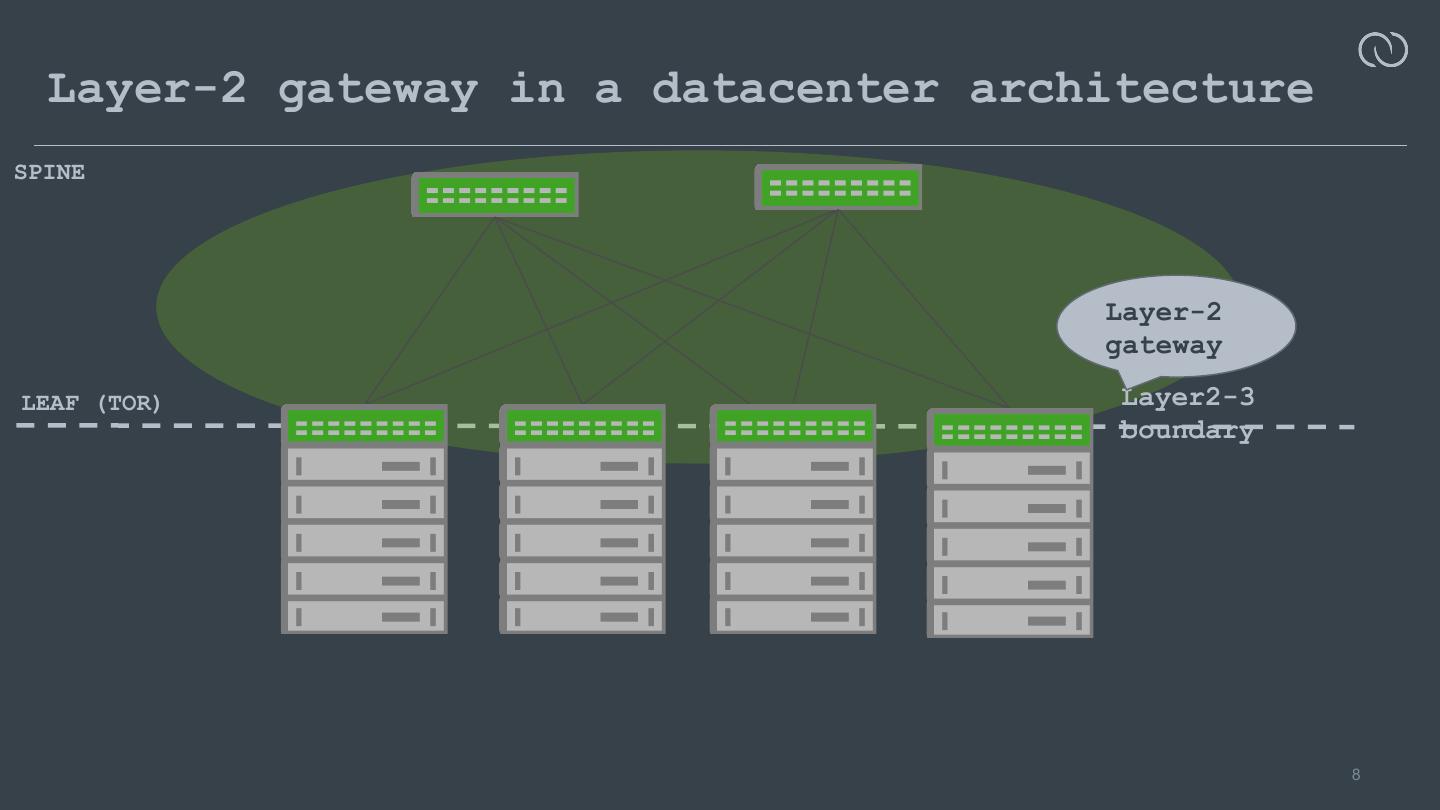

8. Layer-2 gateway in a datacenter architecture

Diagram labels: SPINE; Layer-2 gateway; LEAF (TOR); Layer2-3 boundary.

9. Bridge FDB performance parameters at scale

- Learning
- Adding, deleting and updating FDB entries
- Reduce flooding
- Optimized broadcast, multicast and unknown-unicast handling
- Network convergence on link failure events
- Mac moves

10. Multihoming

11. Multihoming

- Multihoming is the practice of connecting a host or a network to more than one network (device)
  - To increase reliability and performance
- For the purpose of this discussion, let's just say it's a "cluster of switches running Linux" providing redundancy to hosts

12. Common functions of a multihoming solution

- Provide redundant paths to multihomed end-points
- Faster network convergence in event of failures:
  - Establish alternate redundant paths and move to them faster
- Distributed state:
  - Reduce flooding of unknown unicast, broadcast and multicast traffic regardless of which switch is active:
    - By keeping forwarding database in sync between peers
    - By keeping multicast forwarding database in sync between peers

13. Multihoming: dedicated link

- Dedicated physical link (peerlink) between switches to sync multihoming state
- Hosts are connected to both switches
- Non-standard multihoming control plane

Diagram: switch1 and switch2 joined by the peerlink, with Host1 and Host2 each connected to both switches.

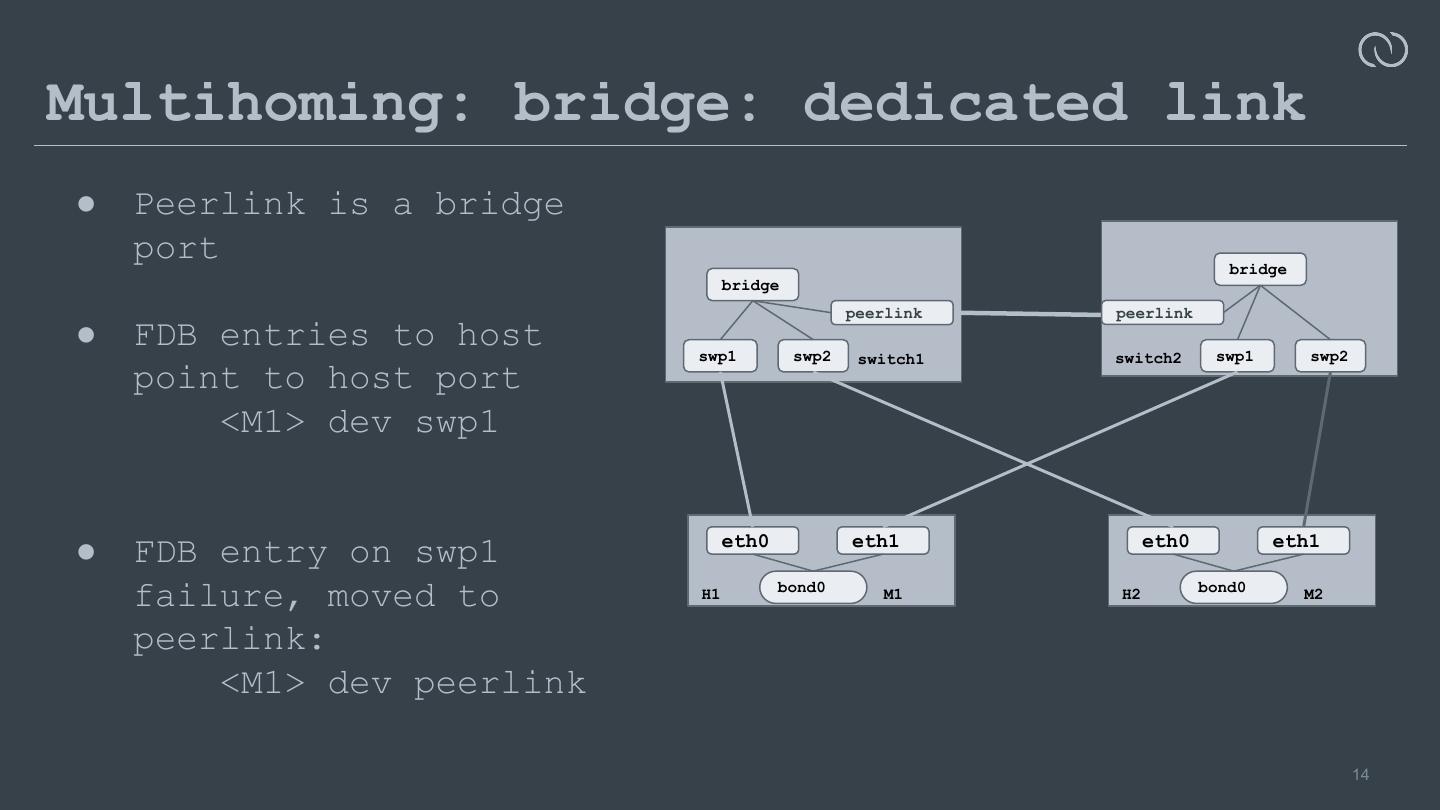

14. Multihoming: bridge: dedicated link

- Peerlink is a bridge port
- FDB entries to host point to host port: <M1> dev swp1
- FDB entry on swp1 failure, moved to peerlink: <M1> dev peerlink

Diagram: switch1 and switch2 each run a bridge with ports peerlink, swp1 and swp2. Hosts H1 (M1) and H2 (M2) each attach through bond0 over eth0 and eth1.

15. Network convergence during failures

- Multihoming control plane reprograms the FDB database:
  - Update FDB entries to point to peer switch link
  - Uses bridge FDB replace
  - Restore when network failure is fixed
- Problems:
  - Too many FDB updates and netlink notifications
  - Affects convergence

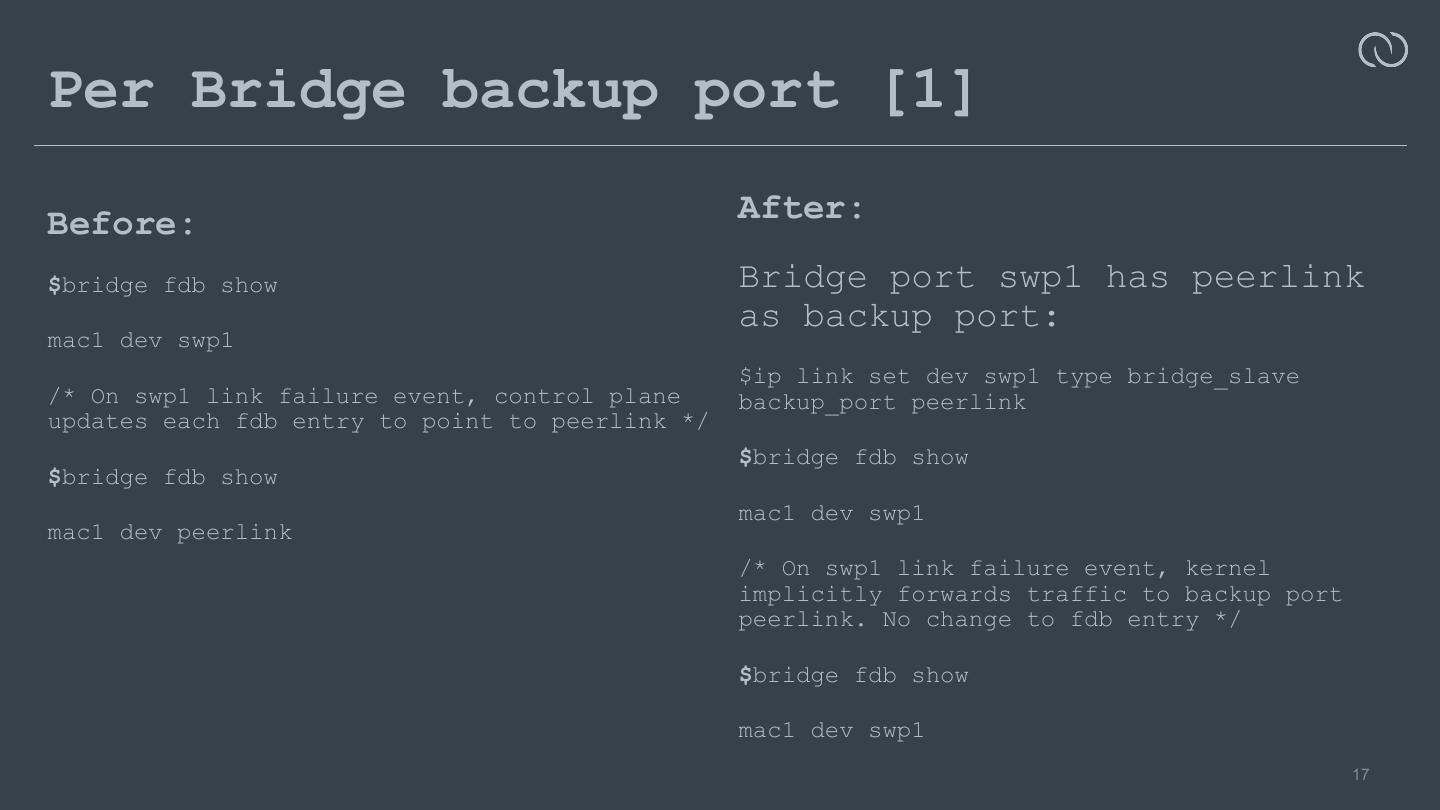

16. Bridge port backup port

- For faster network convergence:
  - Peer link is the static backup port for all host bridge ports
  - Make peer link the backup port at config time:
    - Bridge seamlessly redirects traffic to backup port
  - Patch [1] does just that

17. Per Bridge backup port [1]

Before:

- `$ bridge fdb show` prints `mac1 dev swp1`
- On swp1 link failure event, control plane updates each fdb entry to point to peerlink
- `$ bridge fdb show` then prints `mac1 dev peerlink`

After:

- Bridge port swp1 has peerlink as backup port: `$ ip link set dev swp1 type bridge_slave backup_port peerlink`
- `$ bridge fdb show` prints `mac1 dev swp1`
- On swp1 link failure event, kernel implicitly forwards traffic to backup port peerlink. No change to fdb entry
- `$ bridge fdb show` still prints `mac1 dev swp1`
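The difference between the two approaches can be made concrete by counting FDB writes on a link failure. The following is a toy model under stated assumptions, not the bridge code from patch [1]: the `Bridge` class, its methods, and the figure of 1000 macs behind swp1 are all hypothetical.

```python
class Bridge:
    """Toy bridge with per-port backup ports (illustrative only)."""

    def __init__(self):
        self.fdb = {}          # mac -> port
        self.link_up = {}      # port -> bool
        self.backup_port = {}  # port -> backup port
        self.fdb_updates = 0   # FDB writes made after initial programming

    def add_port(self, port, backup=None):
        self.link_up[port] = True
        if backup is not None:
            self.backup_port[port] = backup

    def egress(self, mac):
        """Port used for a mac; falls back to the backup port if the link is down."""
        port = self.fdb[mac]
        if not self.link_up[port] and port in self.backup_port:
            return self.backup_port[port]
        return port  # a real bridge would not forward out of a down port

    def replace_fdb(self, mac, port):
        """Stand-in for a control-plane 'bridge fdb replace'."""
        self.fdb[mac] = port
        self.fdb_updates += 1


def build(with_backup):
    br = Bridge()
    br.add_port("peerlink")
    br.add_port("swp1", backup="peerlink" if with_backup else None)
    for i in range(1000):
        br.fdb[f"mac{i}"] = "swp1"  # initial programming, not counted
    return br


# Before: the control plane rewrites every entry when swp1 fails.
before = build(with_backup=False)
before.link_up["swp1"] = False
for mac in list(before.fdb):
    before.replace_fdb(mac, "peerlink")

# After: the backup port redirects traffic and the FDB is untouched.
after = build(with_backup=True)
after.link_up["swp1"] = False

print(before.fdb_updates, before.egress("mac1"))                   # 1000 peerlink
print(after.fdb_updates, after.egress("mac1"), after.fdb["mac1"])  # 0 peerlink swp1
```

In this model the backup port turns a per-entry rewrite into a single port-state check at forwarding time, which is the convergence gain the slide describes.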

18. Future enhancements

Debuggability:

- FDB dumps to carry indication that backup port is active

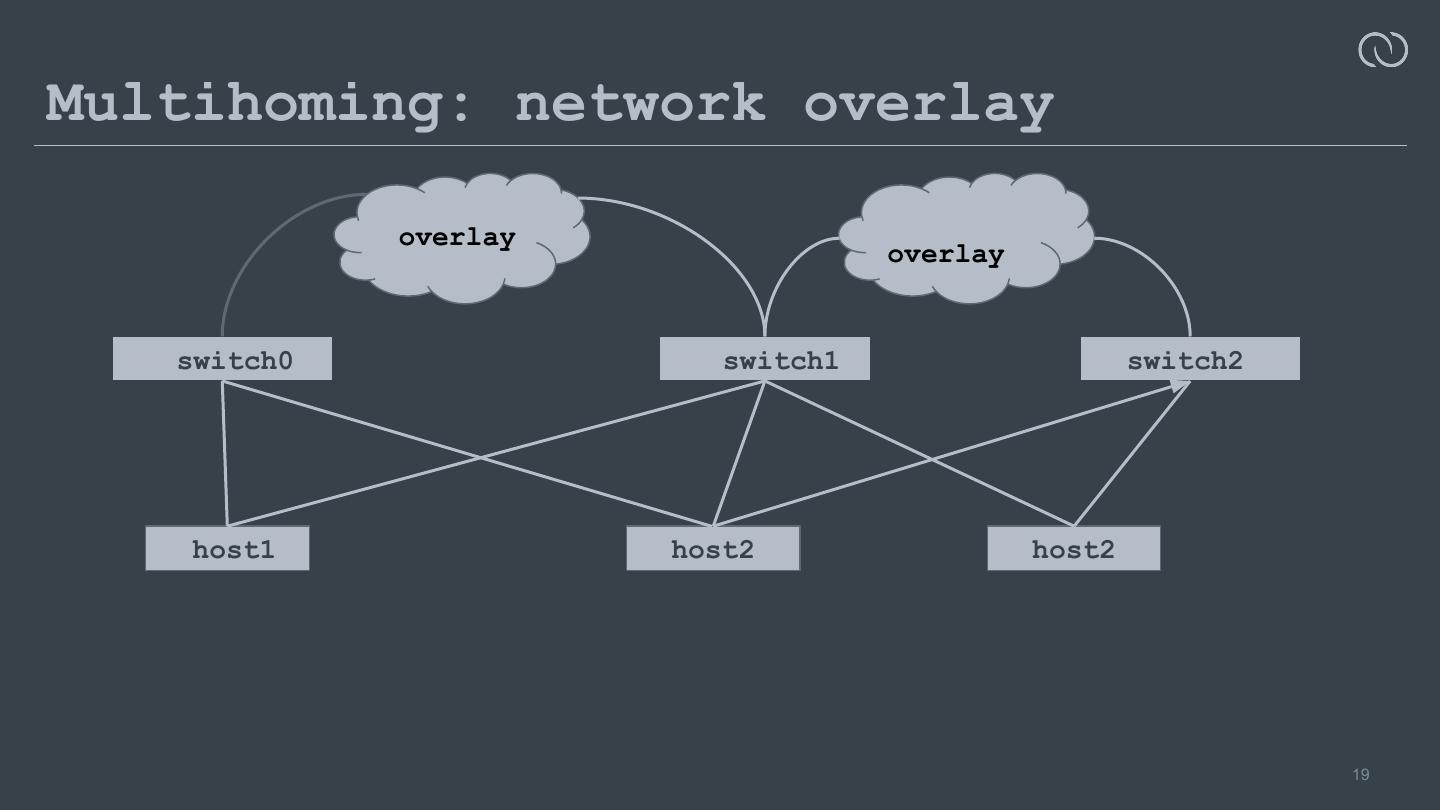

19. Multihoming: network overlay

Diagram labels: overlay; switch0, switch1, switch2; host1, host2, host2.

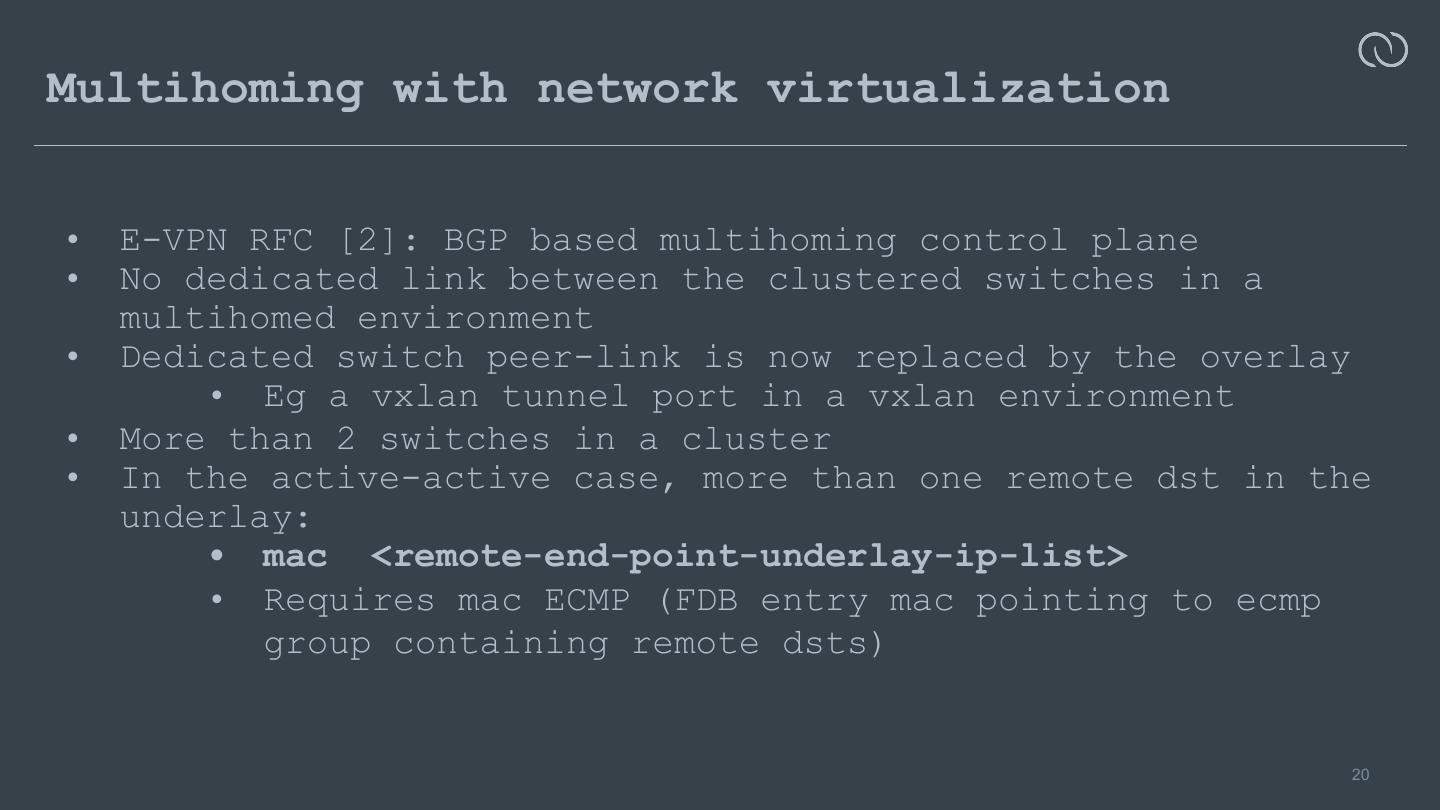

20. Multihoming with network virtualization

- E-VPN RFC [2]: BGP based multihoming control plane
- No dedicated link between the clustered switches in a multihomed environment
- Dedicated switch peer-link is now replaced by the overlay
  - E.g. a vxlan tunnel port in a vxlan environment
- More than 2 switches in a cluster
- In the active-active case, more than one remote dst in the underlay:
  - mac <remote-end-point-underlay-ip-list>
- Requires mac ECMP (FDB entry mac pointing to ecmp group containing remote dsts)

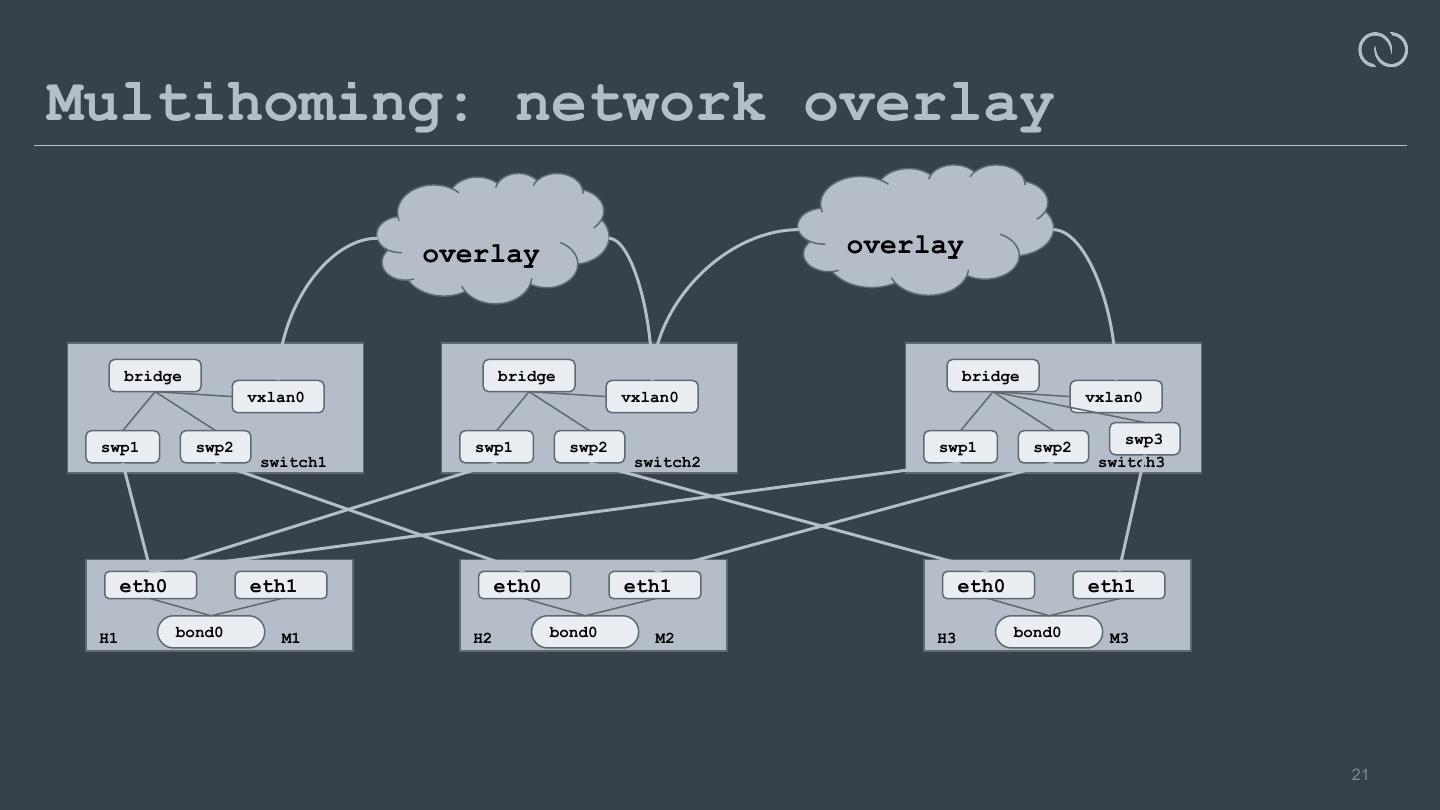

21. Multihoming: network overlay

Diagram: switch1, switch2 and switch3 are connected through the overlay. Each switch runs a bridge with a vxlan0 port and host-facing ports swp1 and swp2 (one switch also shows swp3). Hosts H1 (M1), H2 (M2) and H3 (M3) each attach through bond0 over eth0 and eth1.

22. Control plane strategies for faster convergence

- Designated forwarder: avoid duplicating pkts [2,3]
- Split horizon checks [4]
- Aliasing: instead of distributing all macs and withdrawing them during failures, infer reachability from membership advertisements [5]

23. Forwarding database changes for faster convergence

- Backup port: to redirect traffic to network overlay on failure [1]
- Mac dst groups (for faster updates to FDB entries):
  - FDB entry points to dst group (dst is an overlay end-point)
  - Dst group is a list of vteps with paths to the MAC
  - Think of FDB entries as routes:
    - Ability to update dst groups separately is a huge win
    - Similar to recent updates to the routing API [6]

24. New way to look at overlay FDB entry: dst groups

Current vxlan fwding database:

- Vxlan fdb entry: (mac, vni) points directly to a list of (remote vni, remote_ip) destinations

New proposed vxlan fwding database:

- Vxlan fdb entry: (mac, vni) points to a dst_grp_id
- Dst group db: dst_grp (id) points to a list of (remote vni, remote_ip) destinations
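The benefit of the extra indirection can be sketched by withdrawing one endpoint under both layouts. This is an illustrative model only: the dictionaries, the helper names, the 1000-mac count and the endpoint `27.0.0.9` are assumptions, not part of the proposed kernel API.

```python
DSTS = [(10, "27.0.0.8"), (10, "27.0.0.9")]  # (remote vni, remote_ip)

# Current layout: every entry carries its own remote dst list.
flat_fdb = {(f"mac{i}", 10): list(DSTS) for i in range(1000)}

# Proposed layout: entries hold a group id, the group holds the dsts.
dst_groups = {1: list(DSTS)}
grouped_fdb = {(f"mac{i}", 10): 1 for i in range(1000)}


def withdraw(table, remote_ip):
    """Drop remote_ip from every dst list in table; return lists rewritten."""
    touched = 0
    for key, dsts in table.items():
        kept = [d for d in dsts if d[1] != remote_ip]
        if len(kept) != len(dsts):
            table[key] = kept  # value update only, safe while iterating
            touched += 1
    return touched


def resolve(mac, vni):
    """Follow the fdb entry to its dst group."""
    return dst_groups[grouped_fdb[(mac, vni)]]


flat_touched = withdraw(flat_fdb, "27.0.0.9")
group_touched = withdraw(dst_groups, "27.0.0.9")
print(flat_touched, group_touched)  # 1000 1
print(resolve("mac0", 10))          # [(10, '27.0.0.8')]
```

With groups, losing a VTEP costs one update per group rather than one per mac, which matches the "think of FDB entries as routes" framing.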

25. Fdb database API update

New fdb netlink attribute to link an fdb entry to a dst group:

- NDA_DST_GRP

26. New dst group API

To create/delete/update a dst group: RTM_NEW_DSTGRP / RTM_DEL_DSTGRP / RTM_GET_DSTGRP

```c
/* Per-destination attributes */
enum {
	NDA_DST_UNSPEC,
	NDA_DST_IP,
	NDA_DST_IFINDEX,
	NDA_DST_VNI,
	NDA_DST_PORT,
	__NDA_DST_MAX,
};
#define NDA_DST_MAX (__NDA_DST_MAX - 1)

/* Dst group flags */
#define NTF_DST_GROUP_REPLICATION 0x01
#define NTF_DST_GROUP_ECMP 0x02

/* Dst group attributes */
enum {
	NDA_DST_GROUP_UNSPEC,
	NDA_DST_GROUP_ID,
	NDA_DST_GROUP_FLAGS,
	NDA_DST_GROUP_ENTRY,
	__NDA_DST_GROUP_MAX,
};
#define NDA_DST_GROUP_MAX (__NDA_DST_GROUP_MAX - 1)
```
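The two flag names suggest two ways a dst group might be used: replicate to every member, or pick one member per flow (mac ECMP). The sketch below is one possible reading, not the proposed kernel behaviour. The function `select_dsts`, the flow-key string, the CRC32 hash and the endpoint `27.0.0.9` are all assumptions made for illustration.

```python
import zlib

# Values mirror the NTF_DST_GROUP_* defines on the slide.
NTF_DST_GROUP_REPLICATION = 0x01
NTF_DST_GROUP_ECMP = 0x02


def select_dsts(dsts, flags, flow_key):
    """Return the destinations a packet of this flow is sent to."""
    if not dsts:
        return []
    if flags & NTF_DST_GROUP_ECMP:
        # Assumed policy: hash the flow so one flow always picks the same dst.
        idx = zlib.crc32(flow_key.encode()) % len(dsts)
        return [dsts[idx]]
    # Replication (also the fallback here): one copy per destination.
    return list(dsts)


group = ["27.0.0.8", "27.0.0.9"]  # 27.0.0.9 is a hypothetical second VTEP
replicated = select_dsts(group, NTF_DST_GROUP_REPLICATION, "M1->M3")
ecmp_a = select_dsts(group, NTF_DST_GROUP_ECMP, "M1->M3")
ecmp_b = select_dsts(group, NTF_DST_GROUP_ECMP, "M1->M3")
print(replicated, ecmp_a == ecmp_b)
```

Hashing on a flow key keeps packets of one flow on one path, the usual reason ECMP implementations hash rather than round-robin.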

27. Other considerations for the dst group API

- Investigating possible re-use of route nexthop API [6]

28. Acknowledgements

We would like to thank Wilson Kok, Anuradha Karuppiah, Vivek Venkataraman and Balki Ramakrishnan for discussion, knowledge and requirements for building better multihoming solutions on Linux.

29. References

- [1] net: bridge: add support for backup port: https://patchwork.ozlabs.org/cover/947461/
- [2] E-VPN Multihoming: https://tools.ietf.org/html/rfc7432#section-8
- [3] E-VPN Multihoming: Fast convergence: https://tools.ietf.org/html/rfc7432#section-8.2
- [4] E-VPN multihoming split horizon: https://tools.ietf.org/html/rfc7432#section-8.3
- [5] E-VPN Aliasing and Backup Path: https://tools.ietf.org/html/rfc7432#section-8.4
- [6] Nexthop groups: https://lwn.net/Articles/763950/